How Not To Do A Podcast

Don't have a web site: You don't really need one in this day and age. People find your podcast on Spotify, or on Apple Podcasts, or on YouTube. If you put your episodes up on YouTube, you don't need hosting or a domain or a home page!

Don't link to the RSS Feed: If you do have a web site, you can just not bother with a public RSS feed. People on Apple Podcasts get your episodes from Apple. If you want to post the episodes to your web site, just embed the mp3s in blog posts! Don't give the hoi polloi access to the RSS feed, or they might steal your content, or worse, drive up hosting costs by downloading all the mp3s at once!

Don't bother with show notes: Show notes limit your reach. They don't show up the same on YouTube and Spotify, and you can't embed images in a pinned comment. Even worse, show notes lead people away from your podcast or the app. Alternatively, treat show notes as required reading. If you must have them, this is the way to get the most out of your show notes. Refer to the show notes all the time, and tell your audience to just read or listen to the linked stuff. Don't bother excerpting or paraphrasing things from elsewhere on the Internet. People are on a PC, they can click on links! In the show notes, don't bother adding context either. When your listeners have listened to the episode, they will know what the links mean.

Talk about your editing process and audio setup: Did you just buy a new mic? Are you recording on your laptop microphone in a hotel room? Don't just apologise for the audio quality, tell your listeners that normally you would record on the same hardware that NPR uses for This American Life. Talk about how you bought a new Mac Studio Ultra with 128 GB of RAM for editing the pauses out, and that time you had to interrupt the interview because your guest opened the door to accept a package delivery.

Keep introductions to a minimum: Your listeners have listened to the previous 500 episodes in chronological order, so they know what your podcast is about and who is hosting it. Don't start your podcast episodes with the name of the podcast, or introductions where every host says "Hello, I am Alice" "And I am Bob. This is the Alice and Bob send cryptic messages podcast. Today we're going to discuss PGP." This stuff is lame. Just say "Hi, here we are again, how has your last week been?" or "We're back! Sooo..."

If you really have to introduce multiple speakers, just have one host name everybody. Instead of repeating what the podcast is and who is doing it every time, start the episode with frequently updated information like upcoming meet-ups, listener feedback about the episode before last, how to reach you on twitter, your new mastodon instance, and current Patreon goals.

Use .mp3, .aac, or .wma: As long as the bit rate is high enough, people won't notice. Your goal is to reach as many people as possible, so an old file format like WMA is the best. For audiophiles, also have a feed in FLAC format. In the past, 250MB episodes would have been annoying, but everybody listens on YouTube and Spotify anyway (they do the transcoding for you). If they don't, maybe the 250MB per hour will make them reconsider.

Episodes should be at least 80 minutes long: Sometimes time flies, sometimes you need a lot of time to get to the point. People love to listen to the Joe Rogan Experience, which is sometimes 3 hours long. If your guests have more to say, don't record a bonus episode, just ask yourself: What would Rogan do?

Chapter marks work against you: Chapter marks let listeners skip past the ads, but they also let them skip past the part where you announce the next listener meet-up, the new URL of the t-shirt store, and ways to contact you. It is of vital importance that in five years, people who listen to your podcast will be familiarised with the old twitter handle you used to have, the old coupon code for RAID: Shadow Legends that doesn't work any more, and the listener meet-up in downtown Mariupol.

Frequently upgrade your web site: Like I said, it's usually not worth having a web site. But if you do, you need to keep it fresh.

To do this, you should frequently update the URL of your home page, the URLs of blog posts where users can listen to individual episodes in their browser, your commenting system, your domain name, and the character encoding of your transcripts.

Listeners love banter and personality: Don't read from a script, because that sounds lame and stilted. Don't even have an agenda or written notes. If you want to talk something out, do it live on air. If you talk to a co-host or a guest about the topic or the ground rules for the episode, then do that live on air, too. If you go off topic, or if you have to spend a minute googling something during an episode, if your dog barks, a host goes on a tangent or if there is a package delivery at the door, just say "we'll edit that part out" and then leave the whole thing in, or edit but leave in the bit where you say "we'll edit it out in post". That joke never gets old. Asking your co-hosts about the topic of today's episode gives your podcast personality, rich texture, and entertainment value. The key is to be your raw, unfiltered self. Anybody can read from a script, but only you can answer the door for an Amazon package.

Listeners love drama: If somebody sends you a mean tweet, don't ignore it and move on. Use it! Read out all the mean tweets on your podcast. Make them a regular feature. Ask your listeners whether they agree! They will shower you with sympathy and engagement. If you don't have enough twitter drama to go on, you can invite guests for drama: Get people from twitter onto your podcast. I know, it sounds like a threat when you have twitter beef with somebody and ask them onto your show where you can edit them and you have an audience that's on your side, but you're reasonable here. You can say "twitter is such a terrible format for this, let's hash it out somewhere more appropriate". In the best case, you win the twitter argument without actually having to record the episode. You can just say in your podcast they didn't want to debate you.

Don't record episode 0 or -1: Back in 2005, it was customary to record an "episode zero" as the first thing in your RSS feed. There was even a cool service (now defunct) that aggregated every "episode zero" from feeds into a feed of upcoming podcasts. These days, you record a trailer for your podcast and that is inserted into feeds of other podcasts at Wondery, Tortoise Media, and Serial Productions. It's passé to have a 15 minute introduction to an upcoming podcast.

Similarly, it used to be customary to record one or more "negative" episodes where you just check out your recording equipment and get used to the process, figure out which segments and interview formats work. You're a professional though. You don't need to get used to hearing your own voice.

You can go the extra mile and scrub everything but the latest 5 episodes from the feed.


Resolution Independence, Zoom, Fractional Scaling, Retina Displays, High-DPI: A Minefield

I already explained how CPU dispatch is a minefield: It doesn't cause intermittent bugs. It often doesn't even cause crashes. Badly implemented CPU dispatch means you build something on your machine that runs on your machine, but doesn't perform the dispatch correctly, so it crashes on somebody else's machine, or something built on a worse machine still runs fine on a better machine, but not as well as it could. Some of the bugs only manifest with a different combination of compiler, build system, ABI, and microarchitecture. CPU dispatch is a minefield because it's easy to get wrong in non-obvious ways.

I recently played an old game on Windows 11, with a high-DPI 2560x1600 (WQXGA) monitor. Text was too small to read comfortably, and the manufacturer had set the zoom level to 150% by default. When I launched the game, it started in fullscreen mode, at 2560x1600, which Windows somehow managed to zoom up to 3840x2400. The window was centered, with all the UI elements hidden behind the edges of the screen. When I switched from fullscreen to windowed, the window still covered the whole desktop and the task bar. I quit the game and switched to another. That game let me choose the resolution before launch. At first I tried 2560x1600, but nothing worked right. Then I started it again, at 1920x1080. It didn't look quite right, and I couldn't understand what was going on. Windows had scaled the game up to 2880x1620, which looked almost correct. At this point I realised what was happening, and I set the zoom to 100%. Both games displayed normally.

The first game was an old pixel art platformer from the early 2000s, with software rendering. The second is a strategy game built with OpenGL around 2015, with high-resolution textures based on vector art, and with a UI that works equally well on an iPad and on a PC.

It was hard to read things on that monitor, so I set the font scaling to 150%, but somehow that made things harder to read. Some applications did not honour the font size defaults, and others did, and still others had tiny UI elements with big letters that were spilling out.

Next, I tried to run a game on Ubuntu, with Sway (based on Wayland) as the desktop environment. It's a different machine, a 15.6 inch 1920x1080 laptop with an external 1920x1080 23 inch monitor attached. I zoomed the internal display of the laptop to 150% in order to have windows appear equally sized on both monitors.

What is happening on Windows 11 seems to be that even OpenGL games, which don't think in terms of pixels but in terms of floating point coordinates that go from -1 to +1 in both the x and y dimension (so 0.1 screen units are different sizes in different dimensions), are treated the same as software rendering games that give a buffer of software-rendered pixels to the operating system/graphics environment. Making an already resolution-independent window bigger feels pointless.

What I would want to happen by default, especially in the case of the software-rendered game, is for the operating system to just tell my game that the desktop is not sized 2560x1600, but 1706x1066 (or just 1600x1000), and to then scale that window up. If the window is scaled up, mouse position coordinates should be automatically scaled down from real pixels to software pixels, unless the mouse cursor is captured: If I am playing a DOOM clone or any first-person game, I do not want relative mouse sensitivity to decrease when I am playing on a 4K monitor or when I am maximising the window (if playing in windowed mode). If I have a retina/zoomed display attached, and a standard definition/unzoomed display, and there is a window overlapping both screens, then only the part of a window that is on the zoomed display should be zoomed in.
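The arithmetic behind those numbers can be sketched in a few lines of Python. This is only an illustration of the behaviour wished for above: the function names are made up, and rounding down to whole pixels is an assumption, not something any windowing system promises.

```python
def logical_size(hw_width, hw_height, zoom_percent):
    """Desktop size to report to a game that is not zoom-aware."""
    return (hw_width * 100 // zoom_percent, hw_height * 100 // zoom_percent)


def mouse_to_logical(x_hw, y_hw, zoom_percent):
    """Scale an absolute cursor position from hardware to logical pixels."""
    return (x_hw * 100 // zoom_percent, y_hw * 100 // zoom_percent)


def mouse_delta(dx_hw, dy_hw, zoom_percent, captured):
    """Relative motion: passed through unscaled while the cursor is captured."""
    if captured:
        return (dx_hw, dy_hw)
    return (dx_hw * 100 // zoom_percent, dy_hw * 100 // zoom_percent)


print(logical_size(2560, 1600, 150))     # (1706, 1066)
print(mouse_to_logical(1280, 800, 150))  # (853, 533)
print(mouse_delta(30, -12, 150, True))   # (30, -12)
```

The captured case is the first-person-game case: the relative motion is handed over as-is, so sensitivity does not change with the zoom level.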

What I would want to happen with a "resolution-independent" game is this: The game queries the size of the monitor with a special resolution-independent query function. There is no way to "just make it backward compatible". This is a new thing and needs new API. The query returns

Size of all desktops in hardware pixels

Size of all screens in real-world centimetres

Preferred standard text size in pt/cm (real world) or pixels

Zoom factor (in percent) of all desktops

Which screens are touch or multi-touch screens

Is dark mode enabled?

Which desktop is "currently active"

The "preferred" desktop to open the window

This information would allow an application to create a window that is the appropriate size, and scale all text and UI elements to the appropriate size. We can't assume that a certain size in pixels is big enough for the user to comfortably hit a button.
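As a rough sketch, the result of such a query could look like the following Python data structure. Every name here is hypothetical; no existing API returns this, and the sketch simplifies by treating each screen as its own desktop.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ScreenInfo:
    size_px: Tuple[int, int]       # hardware pixels
    size_cm: Tuple[float, float]   # physical size in centimetres
    zoom_percent: int              # e.g. 100, 150, 200
    is_touch: bool


@dataclass
class DisplayQueryResult:
    screens: List[ScreenInfo]
    preferred_text_size_pt: float
    dark_mode: bool
    active_screen: int             # index into screens
    preferred_screen: int          # index into screens


def button_size_px(screen: ScreenInfo, button_cm: float) -> int:
    """Pixels needed so that a button is button_cm wide in the real world."""
    px_per_cm = screen.size_px[0] / screen.size_cm[0]
    return round(button_cm * px_per_cm)


# Made-up example values for a single 1920x1080 screen that is 48 cm wide.
result = DisplayQueryResult(
    screens=[ScreenInfo((1920, 1080), (48.0, 27.0), 100, False)],
    preferred_text_size_pt=11.0,
    dark_mode=False,
    active_screen=0,
    preferred_screen=0,
)
print(button_size_px(result.screens[result.preferred_screen], 1.0))  # 40
```

With the physical size available, a button can be sized in centimetres instead of guessing how many pixels are comfortable to hit.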

Even this information might not be enough. What should be the behaviour if a windowed OpenGL application is dragged between a 4K monitor at 200% zoom, and a 640x480 CRT? Should the OS scale the window down the same way it currently scales windows up when they aren't "retina aware"?

I don't really know. All I do know is that Windows, Mac OS, and different Wayland compositors all handle high-DPI zoom/retina differently, in a way that breaks sometimes, in some environments. But it looks fine if you don't have scaling set. There are ways to tell the windowing system "I know what I am doing" if you want to disable scaling, but these are easy to abuse. There's a cargo cult of just setting "NSHighResolutionCapable" or "HIGHDPIAWARE" without understanding why and why not. Win32 provides all the information you need, with a very complex API. Wayland has a very different approach. SDL is aware of the issue.

I really hope SDL3 will make this work. If you get this wrong, you'll only realise when somebody on a different operating system with a different monitor tries to get your game to fit on the screen by fiddling with the registry, and it goes from not fitting on the monitor to text being too small to read.


Auto-Generated Junk Web Sites

I don't know if you heard the complaints about Google getting worse since 2018, or about Amazon getting worse. Some people think Google got worse at search. I think Google got worse because the web got worse. Amazon got worse because the supply side on Amazon got worse, but ultimately Amazon is to blame for incentivising the sale of more and cheaper products on its platform.

In any case, if you search something on Google, you get a lot of junk, and if you search for a specific product on Amazon, you get a lot of junk, even though the process that led to the junk is very different.

I don't subscribe to the "Dead Internet Theory", the idea that most online content is social media and that most social media is bots. I think Google search has gotten worse because a lot of content from as recently as 2018 got deleted, and a lot of web 1.0 and the blogosphere got deleted, comment sections got deleted, and content in the style of web 1.0 and the blogosphere is no longer produced. Furthermore, many links are now broken because they don't directly link to web pages, but to social media accounts and tweets that used to aggregate links.

I don't think going back to web 1.0 will help discoverability, and it probably won't be as profitable or even monetisable to maintain a useful web 1.0 page compared to an entertaining but ephemeral YouTube channel. Going back to Web 1.0 means more long-term after-hours labour of love site maintenance, and less social media posting as a career.

Anyway, Google has gotten noticeably worse since GPT-3 and ChatGPT were made available to the general public, and many people blame content farms with language models and image synthesis for this. I am not sure. If Google had started to show users meaningless AI-generated content from large content farms, that would mean Google has finally lost the SEO war, and Google is worse at AI/language models than fly-by-night operations whose whole business model is skimming clicks off Google.

I just don't think that's true. I think the reality is worse.

Real web sites run by real people are getting overrun by AI-generated junk, and human editors can't stop it. Real people whose job it is to generate content are increasingly turning in AI junk at their jobs.

Furthermore, even people who are setting up a web site for a local business or an online presence for their personal brand/CV are using auto-generated text.

I have seen at least two different TV commercials by web hosting and web design companies that promoted this. Are you starting your own business? Do you run a small business? A business needs a web site. With our AI-powered tools, you don't have to worry about the content of your web site. We generate it for you.

There are companies out there today, selling something that's probably a re-labelled ChatGPT or LLaMA plus Stable Diffusion to somebody who is just setting up a bicycle repair shop. All the pictures and written copy on the web presence for that repair shop will be automatically generated.

We would be living in a much better world if there was a small number of large content farms and bot operators poisoning our search results. Instead, we are living in a world where many real people are individually doing their part.


Things That Are Hard

Some things are harder than they look. Some things are exactly as hard as they look.

Game AI, Intelligent Opponents, Intelligent NPCs

As you already know, "Game AI" is a misnomer. It's NPC behaviour, escort missions, "director" systems that dynamically manage the level of action in a game, pathfinding, AI opponents in multiplayer games, and possibly friendly AI players to fill out your team if there aren't enough humans.

Still, you are able to implement minimax with alpha-beta pruning for board games, pathfinding algorithms like A* or simple planning/reasoning systems with relative ease. Even easier: You could just take an MIT licensed library that implements a cool AI technique and put it in your game.
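To show how little code the textbook part takes, here is a minimal sketch of minimax with alpha-beta pruning. The game is a toy chosen for brevity, not anything from a real project: players alternately take 1 to 3 stones from a pile, and whoever takes the last stone wins.

```python
def alphabeta(stones, maximizing, alpha=-1, beta=1):
    """Return +1 if the maximizing player wins with perfect play, else -1."""
    if stones == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    if maximizing:
        best = -1
        for take in (1, 2, 3):
            if take <= stones:
                best = max(best, alphabeta(stones - take, False, alpha, beta))
                alpha = max(alpha, best)
                if alpha >= beta:
                    break  # the minimizer will never allow this branch
        return best
    best = 1
    for take in (1, 2, 3):
        if take <= stones:
            best = min(best, alphabeta(stones - take, True, alpha, beta))
            beta = min(beta, best)
            if alpha >= beta:
                break  # the maximizer already has a better option elsewhere
    return best


print(alphabeta(4, True))  # -1: with 4 stones, the player to move loses
print(alphabeta(5, True))  # 1: take one stone and leave the opponent with 4
```

The search itself is about twenty lines. Everything that makes it work, a machine-readable state, a move generator, and a clear win condition, came for free with the toy game, and that is exactly the part a real game does not hand you.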

So why is it so hard to add AI to games, or more AI to games? The first problem is integration of cool AI algorithms with game systems. Although games do not need any "perception" for planning algorithms to work, no computer vision, sensor fusion, or data cleanup, and no Bayesian filtering for mapping and localisation, AI in games still needs information in a machine-readable format. Suddenly you go from free-form level geometry to a uniform grid, and from "every frame, do this or that" to planning and execution phases and checking every frame if the plan is still succeeding or has succeeded or if the assumptions of the original plan no longer hold and a new plan is in order. Intelligent behaviour is orders of magnitude more code than simple behaviours, and every time you add a mechanic to the game, you need to ask yourself "how do I make this mechanic accessible to the AI?"

Some design decisions will just be ruled out because they would be difficult to get to work in a certain AI paradigm.

Even a game that is perfectly suited for AI techniques, like a turn-based, grid-based rogue-like with line-of-sight already implemented, can struggle to make use of learning or planning AI for NPC behaviour.

What makes advanced AI "fun" in a game is usually when the behaviour is at least a little predictable, or when the AI explains how it works or why it did what it did. What makes AI "fun" is when it sometimes or usually plays really well, but then makes little mistakes that the player must learn to exploit. What makes AI "fun" is interesting behaviour. What makes AI "fun" is game balance.

You can have all of those with simple, almost hard-coded agent behaviour.

Video Playback

If your engine does not have video playback, you might think that it's easy enough to add it by yourself. After all, there are libraries out there that help you decode and decompress video files, so you can stream them from disk, and get streams of video frames and audio.

You can just use those libraries, and play the sounds and display the pictures with the tools your engine already provides, right?

Unfortunately, no. The video is probably at a different frame rate from your game's frame rate, and the music and sound effect playback in your game engine are probably not designed for syncing audio playback to a video stream.
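One common way to deal with the frame rate mismatch is to treat the audio as the master clock and pick the video frame that matches it. Here is a minimal sketch, assuming the engine can tell you how many audio samples have actually been played, which is often the very thing it does not expose. The function name and the numbers are illustrative.

```python
def frame_for_samples(samples_played, sample_rate, video_fps, frame_count):
    """Pick the video frame that belongs to the current audio position."""
    index = samples_played * video_fps // sample_rate
    return min(index, frame_count - 1)  # hold the last frame at the end


# A 60 fps game loop showing a 24 fps video with 48 kHz audio.
# Each game tick, 48000 / 60 = 800 more samples have been played.
shown = [frame_for_samples(tick * 800, 48000, 24, 240) for tick in range(10)]
print(shown)  # [0, 0, 0, 1, 1, 2, 2, 2, 3, 3]
```

Each video frame stays on screen for two or three game frames. If the audio stutters or falls behind, the video follows it instead of drifting away.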

I'm not saying it can't be done. I'm saying that it's surprisingly tricky, and even worse, it might be something that can't be built on top of your engine, but something that requires you to modify your engine to make it work.

Stealth Games

Stealth games succeed and fail on NPC behaviour/AI, predictability, variety, and level design. Stealth games need sophisticated and legible systems for line of sight, detailed modelling of the knowledge-state of NPCs, communication between NPCs, and good movement/controls/game feel.

Making a stealth game is probably five times as difficult as a platformer or a puzzle platformer.

In a puzzle platformer, you can develop puzzle elements and then build levels. In a stealth game, your NPC behaviour and level design must work in tandem, and be developed together. Movement must be fluid enough that it doesn't become a challenge in itself, without stealth. NPC behaviour must be interesting and legible.

Rhythm Games

These are hard for the same reason that video playback is hard. You have to sync up your audio with your gameplay. You need some kind of feedback about which audio is actually played at which time. You need to know how large the audio lag, screen lag, and input lag are, both in frames, and in milliseconds.

You could try to counteract this by using certain real-time OS functionality directly, instead of using the machinery your engine gives you for sound effects and background music. You could try building your own sequencer that plays the beats at the right time.

Now you have to build good gameplay on top of that, and you have to write music. Rhythm games are the genre that experienced programmers are most likely to get wrong in game jams. They produce a finished and playable game, because they wanted to write a rhythm game for a change, but they get the BPM of their music slightly wrong, and everything feels off, more and more so as each song progresses.
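The "more and more so" part is simple arithmetic: a constant error per beat adds up linearly over the song. A small sketch, with made-up numbers:

```python
def beat_time_ms(beat_index, bpm):
    """Time at which a given beat should happen, in milliseconds."""
    return beat_index * 60_000 / bpm


def drift_ms(beat_index, assumed_bpm, actual_bpm):
    """How far the game's idea of a beat is from the beat in the music.

    Negative means the game expects the beat earlier than it is heard.
    """
    return beat_time_ms(beat_index, assumed_bpm) - beat_time_ms(beat_index, actual_bpm)


# The music is at 120 BPM, but the game was told 121 BPM.
for beat in (1, 16, 64, 240):
    print(beat, round(drift_ms(beat, 121, 120), 1))
```

After the first beat the error is about 4 ms, which nobody notices. After 240 beats, two minutes into the song, the game is roughly a full second early.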

Online Multi-Player Netcode

Everybody knows this is hard, but still underestimates the effort it takes. Sure, back in the day you could use the now-discontinued ready-made solution for Unity 5.0 to synchronise the state of your GameObjects. Sure, you can use a library that lets you send messages and streams on top of UDP. Sure, you can just use TCP and server-authoritative networking.

It can all work out, or it might not. Your netcode will have to deal with pings of 300 milliseconds, lag spikes, packet loss, and maybe recover from five seconds of lost WiFi connection. If your game can't, because it absolutely needs the low latency or high bandwidth or consistency between players, you will at least have to detect these conditions and handle them, for example by showing text on the screen informing the player he has lost the match.

It is deceptively easy to build certain kinds of multiplayer games, and test them on your local network with pings in the single digit milliseconds. It is deceptively easy to write your own RPC system that works over TCP and sends out method names and arguments encoded as JSON. This is not the hard part of netcode. It is easy to write a racing game where players don't interact much, but just see each other's ghosts. The hard part is to make a fighting game where both players see the punches connect with the hit boxes in the same place, and where all players see the same finish line. Or maybe it's by design if every player sees his own car go over the finish line first.
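To show just how easy the easy part is, here is a sketch of the message format for such a home-made RPC system. The wire format is my own assumption (a 4-byte length prefix followed by JSON), and the sketch leaves out the sockets entirely. The length prefix is there because TCP delivers a stream of bytes, not individual messages.

```python
import json
import struct


def encode_call(method, *args):
    """Encode one RPC call as a length-prefixed JSON message."""
    payload = json.dumps({"method": method, "args": list(args)}).encode("utf-8")
    return struct.pack("!I", len(payload)) + payload


def decode_calls(buffer):
    """Decode all complete messages; return (calls, leftover bytes)."""
    calls = []
    while len(buffer) >= 4:
        (length,) = struct.unpack("!I", buffer[:4])
        if len(buffer) < 4 + length:
            break  # the rest of this message has not arrived yet
        calls.append(json.loads(buffer[4:4 + length].decode("utf-8")))
        buffer = buffer[4 + length:]
    return calls, buffer


stream = encode_call("move", 1, 2) + encode_call("jump")
calls, rest = decode_calls(stream[:-3])  # pretend the last 3 bytes are late
print(calls)                             # [{'method': 'move', 'args': [1, 2]}]
calls, rest = decode_calls(rest + stream[-3:])
print(calls)                             # [{'method': 'jump', 'args': []}]
```

None of this says anything about latency, prediction, or which player's view of the world is the real one.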


Twitch Revenue Sharing: An Unrealistic Proposal

It’s been years since Phil Fish wanted YouTubers to pay him money for playing FEZ on YouTube. The prevailing counter-argument is that exposure is better than no exposure, and having people play your games on Twitch or YouTube without licensing your work for public performance means other people will see and buy it, leading to increased sales, and more revenue than you could have possibly gotten from a licensing deal. In my experience, this is not true. I understand the analogy to piracy of music and AAA games, but even that is an argument about economies of scale, not about morals.

In my own experience, this doesn’t even happen with free games. On the analytics of my own games, I saw that having YouTubers play them did almost nothing to page views or free downloads. A thousand views in one day don’t translate into one additional download, maybe three page views. The only significant effect was that one YouTube video about one of my games got many small Minecraft YouTubers to make copycat videos. A spike in downloads corresponded one-to-one with the number of YouTube videos with under a hundred views on small channels run by teenage boys.

I don’t think adding an EULA to my games that explicitly covers public performance would do anything. I could consult a lawyer to make it legally enforceable, but I could not enforce it in practice. Twitch and YouTube have cornered the market on gaming content, and even though Nintendo don’t like it when you play their games as a public performance, YouTube turn a blind eye. What game developers need isn’t the copyright, what we need is the cooperation of YouTube and Twitch to allow us to enforce our copyrights.

We could have a chance if, instead of trying to legislate or enforce public performance rights for games, we could just pressure YouTube and Twitch into codifying something into their terms of service. I know it won’t happen in practice. I don’t know what to do about YouTube. YouTube would rather ban a game from their site or negotiate a rather one-sided deal, and many YouTube videos exist in a grey area between fair use and entertainment.

I know what to do about Twitch though. I know what kind of deal I would want from them, anyway: When a streamer sets the game, a percentage of the revenue goes to the game developer. This could be 10%-30% of the ad revenue, and 2% of cheers and subscriptions (out of Twitch’s cut of cheers and subscriptions). If the game is set to “IRL” or “Just Chatting”, the revenue works the way it does today. There would be a lot of gnashing of teeth about PayPal donations (outside of Twitch’s hands) and gifted subscriptions (do they count over the whole month, or for the moment they are gifted?), but I think this system would be fair, and a marked improvement over the status quo.
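The split is simple enough to write down. This is a sketch of one reading of the proposal, with made-up revenue figures: amounts are in cents, the 2% is calculated on the total of cheers and subscriptions and would come out of Twitch's cut, so the streamer's payout stays the same.

```python
NON_GAME_CATEGORIES = {"IRL", "Just Chatting"}


def developer_share_cents(category, ad_cents, cheer_sub_cents,
                          ad_percent=10, cheer_sub_percent=2):
    """Developer's cut of one stream's revenue, in cents."""
    if category in NON_GAME_CATEGORIES:
        return 0  # no game set: revenue works the way it does today
    return (ad_cents * ad_percent // 100
            + cheer_sub_cents * cheer_sub_percent // 100)


# 100.00 in ad revenue and 500.00 in cheers and subscriptions.
print(developer_share_cents("FEZ", 10_000, 50_000))                 # 2000
print(developer_share_cents("FEZ", 10_000, 50_000, ad_percent=30))  # 4000
print(developer_share_cents("Just Chatting", 10_000, 50_000))       # 0
```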

With YouTube, there is a lot more “long tail” revenue, and videos are sometimes critique, or machinima, or edited with clips from different games. YouTube ad revenue is straightforward, but the copyright question is thorny. Twitch is live-streamed gaming, so copyright-wise and morally it’s a lot easier to just enable revenue sharing by default for Twitch streams of the game, and every Twitch stream has to have a game category listed (or arts and crafts, beaches, IRL, just chatting, game shows, demos, game development...).

If we managed to implement such a scheme, it would probably be through some kind of EU copyright directive. On balance, it would be terrible, because large conglomerates like Amazon, Alphabet, Apple and Microsoft would fight it every step of the way and try to make it not worth it for developers, algorithmically punishing developers of games that take a cut. It would also be terrible because it involves automated copyright enforcement. The only way this could turn out favourably for game developers is if the EU Commission and US Congress simultaneously threatened Amazon/Twitch with regulation, because Twitch already has streams categorised by game.

But if we somehow managed to get Twitch to cut in the developers, I think this would align the incentives between developers and streamers. We wouldn’t have to awkwardly say “but the people who watch the stream might buy the game for themselves.” Even a story-based game with no replay value after you know the endings would have all the incentives aligned between streamers, stream audiences, and developers.


Language Models and AI Safety: Still Worrying

Previously, I have explained how modern "AI" research has painted itself into a corner, inventing the science fiction rogue AI scenario where a system is smarter than its guardrails, but can easily be outwitted by humans.

Two recent examples have confirmed my hunch about AI safety of generative AI. In one well-circulated case, somebody generated a picture of an "ethnically ambiguous Homer Simpson", and in another, somebody created a picture of "baby, female, hispanic".

These incidents show that generative AI still filters prompts and outputs, instead of A) ensuring the correct behaviour during training/fine-tuning, B) manually generating, re-labelling, or pruning the training data, C) directly modifying the learned weights to affect outputs.

In general, it is not surprising that big corporations like Google and Microsoft and non-profits like OpenAI are prioritising racist language or racial composition of characters in generated images over abuse of LLMs or generative art for nefarious purposes, content farms, spam, captcha solving, or impersonation. Somebody with enough criminal energy to use ChatGPT to automatically impersonate your grandma based on your message history after he hacked the phones of tens of thousands of grandmas will be blamed for his acts. Somebody who unintentionally generates a racist picture based on an ambiguous prompt will blame the developers of the software if he's offended. Scammers could have enough money and incentives to run the models on their own machine anyway, where corporations have little recourse.

There is precedent for this. Word2vec, published in 2013, was called a "sexist algorithm" in attention-grabbing headlines, even though the bodies of such articles usually conceded that the word2vec embedding just reproduced patterns inherent in the training data: Obviously word2vec does not have any built-in gender biases, it just departs from the dictionary definitions of words like "doctor" and "nurse" and learns gendered connotations because in the training corpus doctors are more often men, and nurses are more often women. Now even that last explanation is oversimplified. The difference between "man" and "woman" is not quite the same as the difference between "male" and "female", or between "doctor" and "nurse". In the English language, "man" can mean "male person" or "human person", and "nurse" can mean "feeding a baby milk from your breast" or a kind of skilled health care worker who works under the direction and supervision of a licensed physician. Arguably, the word2vec algorithm picked up on properties of the word "nurse" that are part of the meaning of the word (at least one meaning, according to the dictionary), not properties that are contingent on our sexist world.

I don't want to come down against "political correctness" here. I think it's good if ChatGPT doesn't tell a girl that girls can't be doctors. You have to understand that not accidentally saying something sexist or racist is a big deal, or at least Google, Facebook, Microsoft, and OpenAI all think so. OpenAI are responding to a huge incentive when they add snippets like "ethnically ambiguous" to DALL-E 3 prompts.

If this is so important, why are they re-writing prompts, then? Why are they not doing A, B, or C? Back in the days of word2vec, there was a simple but effective solution to automatically identify gendered components in the learned embedding, and zero out the difference. It's so simple you'll probably kick yourself reading it because you could have published that paper yourself without understanding how word2vec works.
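To give an idea of how simple, here is a sketch of one reading of "identify the gendered component and zero it out": estimate a gender direction from a few word pairs, then remove the projection onto that direction from words that should be neutral. The three-dimensional toy vectors are made up for illustration, not taken from a trained embedding, and this is not necessarily the exact method from the paper alluded to above.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def gender_direction(embedding, pairs):
    """Unit vector along the summed difference of (male, female) word pairs."""
    dims = len(next(iter(embedding.values())))
    total = [0.0] * dims
    for male, female in pairs:
        for i in range(dims):
            total[i] += embedding[male][i] - embedding[female][i]
    norm = math.sqrt(dot(total, total))
    return [t / norm for t in total]


def neutralize(vector, direction):
    """Remove the component of vector that lies along direction."""
    scale = dot(vector, direction)
    return [v - scale * d for v, d in zip(vector, direction)]


# Toy embedding: the first dimension happens to encode gender.
toy = {
    "he": [1.0, 0.0, 0.0], "she": [-1.0, 0.0, 0.0],
    "man": [1.0, 0.5, 0.0], "woman": [-1.0, 0.5, 0.0],
    "doctor": [0.5, 1.0, 0.0],
}
direction = gender_direction(toy, [("he", "she"), ("man", "woman")])
print(neutralize(toy["doctor"], direction))  # [0.0, 1.0, 0.0]
```

This works because a word embedding is just a vector per word, and a concept can show up as a direction in that space. That is the kind of handle the rest of this post argues is missing in a transformer.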

I can only conclude from the behaviour of systems like DALL-E 3 that they are using simple prompt re-writing (or a more sophisticated approach that behaves just as prompt rewriting would, and performs as badly) because prompt re-writing is the best thing they can come up with. Transformers are complex, and inscrutable. You can't just reach in there, isolate a concept like "human person", and rebalance the composition.

The bitter lesson tells us that big amorphous approaches to AI perform better and scale better than manually written expert systems, ontologies, or description logics. More unsupervised data beats less but carefully labelled data. Even when the developers of these systems have a big incentive not to reproduce a certain pattern from the data, they can't fix such a problem at the root. Their solution is instead to use a simple natural language processing system, a dumb system they can understand, and wrap it around the smart but inscrutable transformer-based language model and image generator.

What does that mean for "sleeper agent AI"? You can't really trust a model that somebody else has trained, but can you even trust a model you have trained, if you haven't carefully reviewed all the input data? Even OpenAI can't trust their own models.

Wish List For A Dumb Phone

I have a dumb phone with 20 days of standby time. It's getting old. I might need to buy another one soon.

I only really need that phone for phone stuff. It's small and light. It's my backup in places where my smartphone does not have reception.

A replacement would need physical buttons, and a bar or flip phone form factor, preferably in a bright green or orange colour. It should be a dual-SIM quad-band with GSM for basic phone calls, SMS and MMS, and 4G or 5G connectivity. The web browsing or download speed doesn't really matter.

In terms of featurephone features, I don't need twitter, facebook, whatsapp, or any of those built-in apps. I want the ability to take pictures, play MP3 and OGG files, record audio, play audio, browse the file system, and assign audio files as custom ringtones. E-mail is optional.

The hardware features I want are an SD card slot, a 240p camera or better, a light, FM radio (DAB would be a plus), a replaceable battery, bluetooth for tethering and audio headsets, and USB-C for tethering, charging, and file transfer. It doesn't have to be USB-PD or USB 3.0.

There are some features that would be nice. It would be nice to have a playlist that I can sync with a desktop podcatcher application. It would be nice to be able to move contacts between the SIM card and the SD card in bulk, and to sync contacts with my NextCloud address book with a desktop application. It would be nice if I could mount my phone's file system via USB.

All these features boil down to this: My dumb phone is a bit beat up. I wish I could just replace it with a slightly newer model with USB-C, but otherwise it doesn't need apps or wlan.

There is no phone out there that is just slightly better than my old dumb phone, and can be charged via USB. Once you have all the hardware and features, you might as well slap Android on there, and a more powerful processor, and sell it on features.

It's sad. There are many dumb phones that are almost better than my old one. There is one that is just like my old one, but with 4G instead of 3G, but no USB-C. There is one that looks great, but all reviews say the software is buggy and bluetooth doesn't work reliably. There is one with loads of features that has a couple of days of standby time, not weeks.

There's also one that has all the features but costs more than a smartphone.

All I really want is a Nokia 215 with USB-C, tethering, and a comfortable way to sync my stuff to a PC.

Are Game Blogs Uniquely Lost?

All this started with my looking for the old devlog of Storyteller. I know at some point it was linked from the blogroll on the Braid devlog. Then I tried to look at an old devlog of another game that is still available. The domain for Storyteller is still active. The devblog is gone.

I tried an old bookmark from an old PC (5 PCs ago, I think). It was a web site that linked to pixel art and programming tutorials. Instead of linking to the pages directly, some links led to twitter threads by authors that collected their work posted on different sites. Some twitter threads are gone because the users were suspended, or had deleted their accounts voluntarily. Others had deleted old tweets. There was no archive. I have often seen links accompanied by "Here's a thread where $AUTHOR lists all his writing on $TOPIC". I wonder if the sites are still there, and only the tweets are gone.

A lot of "games studies" around 2010 happened on blogs, not in journals. Games studies was online-first, HTML-first, with trackbacks, tags, RSS and comment sections. The work that was published in PDF form in journals and conference proceedings is still there. The blogs are gone. The comment sections are gone. Kill screen daily is gone.

I followed a link from critical-distance.com to a blog post. That blog is gone. The domain is for sale. In the Wayback Machine, I found the link. It pointed to the comment section of another blog. The other blog has removed its comment sections and excluded itself from the Wayback Machine.

I wonder if games stuff is uniquely lost. Many links to game reviews at big sites lead to "page not found", but when I search the game's name, I can find the review from back in 2004. The content is still there, the content management systems have been changed multiple times.

At least my favourite tumblr about game design has been saved in the Wayback Machine: Game Design Tips.

To make my point I could list more sites, more links, 404 but archived, or completely lost, but when I look at small sites, personal sites, blogs, or even forums, I wonder if this is just confirmation bias. There must be all this other content, all these other blogs and personal sites. I don't know about tutorials for knitting, travel blogs, stamp collecting, or recipe blogs. I usually save a print version of recipes to my Download folder.

Another big community is fan fiction. They are like modding, but for books, I think. I don't know if a lot of fan fiction is lost to bit rot and link rot either. What is on AO3 will probably endure, but a lot might have gone missing when fandom communities moved from livejournal to tumblr to twitter, or when blogs moved from Wordpress to Medium to Substack.

I have identified some risk factors:

Personal home pages made from static HTML can stay up for a while if the owner meticulously catalogues and links to all their writing on other sites, and if the site covers a variety of interests and topics.

Personal blogs or content management systems are likely to lose content in a software upgrade or migration to a different host.

Writing is more likely to be lost when it's for-pay writing for a smaller for-profit outlet.

A cause for sudden "mass extinction" of content is the move between social networks, or the death of a whole platform. Links to MySpace, Google+, Diaspora, and LiveJournal give me mostly or entirely 404 pages.

In the gaming space, career changes or business closures often mean old content gets deleted. If an indie game is wildly successful, the intellectual property might get acquired. If it flops, the domain will lapse. When development is finished, maybe the devlog is deleted. When somebody reviews games at first on Steam, then on a blog, and then for a big gaming mag, the Steam reviews might stay up, but the personal site is much more likely to get cleaned up. The same goes for blogging in general, and academia. The most stable kind of content is after hours hobbyist writing by somebody who has a stable and high-paying job outside of media, academia, or journalism.

The biggest risk factor for targeted deletion is controversy. Controversial, highly-discussed and disseminated posts are more likely to be deleted than purely informative ones, and their deletion is more likely to be noticed. If somebody starts a discussion, and then later there are hundreds of links all pointing back to the start, the deletion will hurt more and be more noticeable. The most at-risk posts are those that are supposed to be controversial within a small group, but go viral outside it, or the posts that are controversial within a small group, but then the author says something about politics that draws the attention of the Internet at large to their other writings.

The second biggest risk factor for deletion is probably usefulness combined with hosting costs. This could also be the streetlight effect at work, like in the paragraph above, but the more traffic something gets, the higher the hosting costs. Certain types of content are either hard to monetise, and cost a lot of money, or they can be monetised, so the free version is deliberately deleted.

The more tech-savvy users are, the more likely they are to link between different sites, abandon a blogging platform or social network for the next thing, try to consolidate their writings by deleting their old stuff and setting up their own site, only to let the domain lapse. The more tech-savvy users are, the more likely they are to mess with the HTML of their templates or try out different blogging software.

If content is spread between multiple sites, or if links link to social network posts that link to a blog post with a comment that links to a reddit comment that links to a geocities page, any link could break. If content is consolidated in a forum, maybe Archive Team could save all of it with some advance notice.

All this could mean that indie games/game design theory/pixel art resources are uniquely lost, and games studies/theory of games criticism/literary criticism applied to games are especially affected by link rot. The semi-professional, semi-hobbyist indie dev, the writer straddling the line between academic and reviewer, they seem the most affected. Artists who start out just doodling and posting their work, who then get hired to work on a game, their posts are deleted. GameFAQs stay online, Steam reviews stay online, but dev logs, forums and blog comment sections are lost.

Or maybe it's only confirmation bias. If I was into restoring old cars, or knitting, or collecting stamps, or any other thing I'd think that particular community is uniquely affected by link rot, and I'd have the bookmarks to prove it.

Figuring this out is important if we want to make predictions about the future of the small web, and about the viability of different efforts to get more people to contribute. We can't figure it out now, because we can't measure the ground truth of web sites that are already gone. Right now, the small web is mostly about the small web, not about stamp collecting or knitting. If we really manage to revitalise the small web, will it be like the small web of today except bigger, the web-1.0 of old, or will certain topics and communities be lost again?

2023 Game Of The Year: Storyteller (plus DLC)

I played Storyteller after the DLC came out, and Storyteller (with the DLC) became my game of the year.

Unfortunately, I can't really explain why without spoiling some puzzles. I will try not to spoil any solutions in the screenshots (except one), but I will have to spoil the game mechanics.

What is Storyteller

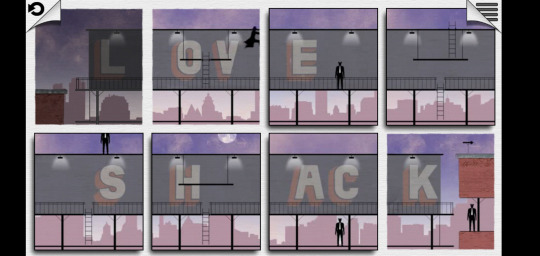

Storyteller is a game where you arrange comic book panels and characters to tell a story based on a prompt. It doesn't require any dexterity, only deduction. You drag and drop comic book panels into slots, drag and drop characters into panels to arrange them, or drag populated panels and characters around.

The challenge is to understand how the characters and panels work, what the prompt wants you to do, and how to do that. Early on, the game is mostly about figuring out the rules governing the characters and panels. It slowly shifts towards more "thinky" problems, where the challenge lies not in understanding how the game works, or what the goal is, but how to fit the required story beats into a limited number of panels.

Depending on the outcome of a panel, you may see little icons, speech bubbles, or thought bubbles that describe whether an action failed or succeeded, and how the characters think or feel about that. Sometimes the outcome of a panel is communicated in a much more direct way, and acted out by the characters.

Screenshot from second chapter puzzle "A Heartbreak is Healed"

Screenshot from first chapter puzzle "Heartbreak with a Happy Ending": At this point you are still learning the game mechanics like placing panels and characters

Screenshot from "Everyone Rejects Edgar": More thinky puzzle than recreation of a storytelling cliché, and an opportunity to apply what you know. The characters except for Edgar are interchangeable. You can have them fall in love with any other, or reject Edgar in any order.

Screenshot from later puzzle "Everyone Sits on the Throne": A thinky puzzle in the same vein as "Everyone Rejects Edgar". This time, there are important differences between the initial states of characters. You cannot make them sit on the throne in any order.

The panels can be thought of as story beats, sometimes as scenes/places, as in "A and B meet in the kitchen", and sometimes as actions/verbs, as in "A and B kiss". They aren't verbs in the sense that you can just put Alice and Bob in the kissing panel and they always kiss. They only kiss if they love each other, or at least if they don't hate each other. All you can do with the characters as the player is to put them in situations. You can drag the "horse" character into the "watering hole" panel, but you can't make it drink. I mean, probably you could, you might, but that would be the puzzle.

Screenshot from "Hatey Murders Father and Marries Mother": It's obviously an Oedipus reference. You see that Hatey doesn't want to murder his father or marry his mother in this constellation.

The game keeps track of the relationships between characters, beliefs of characters, and states of objects. That means (I made this example up in order to not spoil any puzzles) if Alice has been in the kitchen with the cookies, Alice believes there are cookies in the kitchen. If the Cookie Monster has been in the kitchen, there are no more cookies in the kitchen. Now Alice could tell Bob that there are cookies in the kitchen, and Bob could get hungry and blame Alice for lying, or he could catch the Cookie Monster eating the last cookie. The possibilities for drama are there.

Characters have different initial states and motivations. For instance, the King and the Queen are initially married, and they both have the crown.

Screenshot from "Three Heads Roll": Only the Baron wants to kidnap the king.

If you're into that kind of thing, you can imagine Storyteller as a STRIPS-like planning system with a known or at least discoverable initial state, multiple goals (not just one goal state), panels as actions that take characters as parameters, and a lot of state that can be observed and deduced, but not directly manipulated.
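For the curious, here is a minimal sketch of what "STRIPS-like" means, using my made-up cookie example rather than anything from the actual game. The state is a set of facts. Each panel is an action with preconditions, facts it adds, and facts it deletes. All names here are hypothetical, and I'm assuming that a panel whose preconditions fail simply changes nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset
    add: frozenset
    delete: frozenset


def apply_action(state, action):
    """Return the new state, or the unchanged state if preconditions fail."""
    if not action.preconditions <= state:
        return state
    return (state - action.delete) | action.add


def visit_kitchen(character):
    # Seeing the cookies makes the character believe they are there.
    return Action(
        name=f"{character} visits kitchen",
        preconditions=frozenset({"cookies in kitchen"}),
        add=frozenset({f"{character} believes cookies in kitchen"}),
        delete=frozenset(),
    )


def eat_cookies(character):
    return Action(
        name=f"{character} eats cookies",
        preconditions=frozenset({"cookies in kitchen"}),
        add=frozenset(),
        delete=frozenset({"cookies in kitchen"}),
    )


def run_story(initial_state, panels):
    state = frozenset(initial_state)
    for action in panels:
        state = apply_action(state, action)
    return state


story = [
    visit_kitchen("Alice"),
    eat_cookies("Cookie Monster"),
    visit_kitchen("Bob"),
]
final = run_story({"cookies in kitchen"}, story)
goal = frozenset({"Alice believes cookies in kitchen"})
goal_met = goal <= final
```

Alice ends up with an outdated belief, and Bob never gets one because the cookies were gone before he arrived. The player only picks the panels and characters, and everything else follows from the state.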

If you're not, you can just play Storyteller as a game about telling stories based on a prompt.

Progression

After you start a new save file, the whole game is open to you. Puzzles or stories are grouped into chapters of four or five stories. Each chapter has a common theme, and a set of common characters and panels. Later chapters mix it up a bit, and introduce characters into different environments or combine characters from multiple stories or mythologies, whereas earlier chapters mostly introduce panels and characters so you can learn how they operate.

Like I said, the whole game is open to you from the start, so you are free to skip a puzzle if you are stuck, to revisit an older chapter, or to skip to the last chapter. It doesn't really matter, because you have to solve every puzzle in the game in order to get to the end.

The first chapter teaches the mechanics of dragging and dropping panels, dragging and dropping characters into panels, and dragging populated panels around to swap them. Then the next couple of chapters introduce different characters and settings. Usually the first story of a chapter only has a few new characters and panels with a rather obvious prompt, the second introduces more content and a slight variation, and the third and fourth have a slight twist.

The later chapters are more focused on actual puzzles.

Some puzzles have multiple solutions, and after solving it one way, you see two additional prompts. For example, the princess could kiss the frog and then the frog turns into a prince, or the princess could kiss the frog but the frog turns into another princess.

I skipped many of those variations on my first playthrough, and this way I blazed through about 40 puzzles without ever stopping and thinking or feeling overly challenged. I learned how most of the characters and panels work, and then I went back to solve all the variant prompts. Somebody I know played every level in order and solved the variant prompts right away. That makes for a more thinky experience earlier on. Either way works, and both are clearly intentionally permitted by the game design.

In the final chapters, Storyteller actually becomes thinky and difficult. This seems like a flaw in pacing, but it works out to the first 70% of the puzzles taking 30% of the time playing it, and the last 30% taking 70% of the time. Instead of treating Storyteller like a storytelling game, you must finally treat it like a puzzle game.

When you beat the base game, the DLC content is added. Levels in the previous chapters get an additional variant prompt, and a new character is introduced. This character acts completely differently from all the previous characters, so you first have to understand how it interacts with the panels from the base game. The variant prompts are harder than anything in the base game. For puzzle game aficionados, this is where it gets challenging for the first time. Here's a "metagaming" tip: None of the DLC variant prompts is solvable with the characters from the base game. Therefore your solution must incorporate the DLC, and it would be impossible to reproduce with base game content.

Screenshot from "Eve Dies Heartbroken Devil makes everyone miserable": You have to experiment to figure out how the devil interacts with the panels and characters.

Why It Works So Well

Storyteller is a bit on the easy side. So why does it work so well?

If you are an expert at puzzle games such as The Golem or Stephen's Sausage Roll, then maybe it won't work for you. But if you are looking for a puzzle game that is different, if you liked Splice or Cogs, then Storyteller could be the game for you. It's not a puzzle platformer, not a first person puzzle adventure like Obduction or Sensorium or Quern.

Storyteller is not a storytelling game in the vein of Facade, but it uses themes and literary allusion to keep you interested early on. The music sometimes feels more like a fun Easter egg, but never annoying.

Early prompts in the vein of "boy meets girl" soon turn into more complicated prompts, requiring some minimal lateral thinking to figure out what you need to do. Some of the prompts are literary or mythological allusions and references. That keeps levels interesting and varied even when the actual puzzle part is simple.

Screenshot from "Butler Gets Fired" and "Friedrich Takes Revenge": The puzzle part is quite easy, and there is little lateral thinking involved. The fun is mostly in re-enacting pop culture tropes.

Screenshot from the level selection: Every puzzle in every chapter is open from the start, but it's probably best to do them in order.

Many bad puzzle games get this wrong. You can never really solve a puzzle, because there is only one thing to do. You pick up a key, you open a lock, you see a button, you press it to open a door, you just do the next thing you can do over and over. Instead of puzzling, you just push every button and see what changes to know where to go next. It's fine if the puzzle mechanics are just an excuse to get the player to traverse a temple/dungeon back and forth, if you see the lock and the key and the puzzle mechanics are just there for flavour and motivation. It's not okay if your game is not an action adventure or a puzzle platformer, but an actual puzzle game.

Monument Valley is such a game that doesn't have any actual puzzles in it, you just walk from one place to the next. The game is carried by the interesting visuals and the novelty of the perspective mechanic. (If you are looking for something like Monument Valley but challenging, I could perhaps recommend Naya's Quest or Selene's Labyrinth.)

Early on, Storyteller has this flow of simple and easy puzzles (like Monument Valley), one solved after the other, and it gradually gets more difficult. The references to books and storytelling clichés aren't particularly deep or laugh-out-loud funny, but they make for the occasional chuckle. Sometimes part of the solution process is to realise that "boy meets girl" was last time, and this time you have to use "girl meets girl" to make the story fit the prompt with the characters you have at your disposal.

The variant prompts manage to side-step a problem that stood out to me on my playthrough of Baba is You, and a problem I encountered designing my own puzzles. Sometimes there are two levels in a row, where one level has an "unintended" solution, and the next has the "intended" solution removed, so you have to find the second solution for the first level. If you manage to find the "unintended" solution the first time, the next level will be very confusing. Just this solution again? Wait, what was the other solution? Did the last level try to teach me something I missed? Should I go back again to find the other solution?

It was confusing to me anyway, a couple of times when I played Baba is You. The same idea is implemented in Storyteller in a much better way. Instead of having a modified copy of the same level right afterwards, Storyteller categorises solutions into "basic solution", "variant A" and "variant B". Even if you manage to find one variant with your first solution attempt, you immediately see both variant prompts, and there is always one more variant to try next. You never have to construct the same solution twice in a row. Sadly this elegant system cannot be applied to Baba is You.

Screenshot from "Tiny gets a kiss": Sorry for spoiling the solution. Even if you manage to have the prince save Tiny the first time around, you would still have to find a solution where Snowy is ungrateful, and Tiny gets a kiss.

Pre-History

I can't really talk about Storyteller without explaining why I was drawn to this game in the first place. I first heard about it in 2008, when it won the IGF. Back then it was more of a story sandbox in the vein of "Tale-Spin". That's the old story generation AI that produced the sentence "gravity drowned", if that means anything to you.

Screenshot: Storyteller prototype with old pixel art

About a hundred flash portals mirrored the initial Storyteller Flash prototype that won the IGF. The original is still here on Kongregate.

Back then, I wondered how a sandbox such as Storyteller could be turned into an actual game. It was a prototype and some mechanics, but not really a game. It looks like the developer wondered, too.

In the meantime, FRAMED had come out. Now FRAMED looks clearly inspired by Storyteller, because it also has a mechanic where you rearrange panels, but it's nothing like it. FRAMED is more like the Limbo to Storyteller's Braid. Ironically, FRAMED is "a game that tells you a story". Storyteller is an actual puzzle game.

It has been in development longer than Duke Nukem Forever, but even after all these years, Storyteller doesn't feel dated or superfluous. They finally found a winning formula.

Storyteller was developed by Daniel Benmergui and published by Annapurna Interactive. Get it on Steam here, or if you have a Netflix subscription and an iOS or Android device, the price of the mobile app is included in your monthly Netflix fee. Storyteller is also available for the Nintendo Switch. I would recommend playing it on a PC or tablet.

New Year's Resolutions

Hey everybody. It's a new year. Happy New Year!

Gamedev Blogging

Last year I fell behind on posting gamedev stuff. It's mostly because there is no good way to format code listings in the new editor. So this year, I am not even going to try with gamedev tutorials on tumblr. I might post them elsewhere and just link them. I have already taken a look at Cohost, but it doesn't have the features I need. Wouldn't it be cool if you could post pico-8 carts on cohost? Or source code listings? Or LaTeX? I might as well write the HTML by hand and host it somewhere. But that won't be the focus of this blog in 2024.

Instead I'm going to do more tumblr posting about game design, just less on the code side. It will be more on the screenshot side. First thing will be about my 2023 Game Of The Year. It will probably surprise you. I did not expect it to be this good. You can also expect something about some of my old prototypes. Over the years I have started and abandoned game prototypes after either concluding that the idea won't work and can't be made to work, or after learning what I needed to learn. What did I learn? Wait and find out!

Computer Literacy

I'll also attempt to write more about general computing and "computer literacy" topics. I have two particular "series" or "categories" in mind already. Almost Good: Technologies that sound great when you hear about them, but that don't work as well as you might think when you try them out. Harmful Assumptions About Computing: Non-technical people often have surprising ideas about how computers work. As a technically inclined person, you don't even realise how far these unspoken assumptions about computers can reach.

Usability of computers and software seems to have gotten worse rather than better in many aspects, while computers have become entrenched in every workplace, our private lives, and in our interactions with corporations and government services. Computer literacy has also become worse in certain ways, and I think I know some reasons why.

There will also be some posts about forum moderation and community management. It's rather basic and common-sense stuff, but I want to spell it out.

Actual Game Development

I am going to release a puzzle game in 2024. You will be able to buy it for money. You can hold me to it. This is my biggest New Year's Resolution.

I will continue to work on two games of mine. One will be the game I just mentioned. The other is Wyst. I put the project on ice because I was running out of inspiration for a while, but I think I am sufficiently inspired now. I will pick it up again and add two more worlds to the game, and get it into a "complete" state. I'll also have to do a whole lot of playtesting. This may be the last time I touch Unity3d.

I will try out two new engines and write one or two proof-of-concept games in each of them, maybe something really simple like "Flappy Bird", and one game jam "warm-up" thing, with the scope of a Ludum Dare compo game. Maybe that means I'll write Tetris or Pong multiple times. I probably won't put the "Pong in Godot" on an itch.io page next to a "Pong in Raylib" and "Pong in Bevy", but I'll just put the code on my GitHub. The goal is to have more options for a game jam, so I can decide to use Godot if it is a better fit for the jam topic.

In the past, I have always reached for PyGame by default, because Python is the language that has flask and Django and sqlalchemy and numpy and pyTorch, and because I mostly want to make games in 2D. I want to get out of my comfort zone. In addition to the general-purpose game engines, I will try to develop something in bitsy, AGS, twine, pico-8 or Ren'Py. I want to force myself to try a different genre this way. Maybe I'll make an archaeologist dating simulator.

All in all, this means I will do significant work on two existing projects, revisit some old failed prototypes to do a postmortem, write at least six new prototypes and two new jam games, plus some genre/narrative experiments. That's a lot already. So here's an anti-resolution: I won't even try to develop any of my new prototypes into full releases. I will only work on existing projects from 2023 or before if I develop anything into playable demo versions or full games. I won't get sidetracked by the next Ludum Dare game, I promise. After the jam is over, I'll put down the project, at least until 2025.

Wish List For A Game Profiler

I want a profiler for game development. No existing profiler currently collects the data I need. No existing profiler displays it in the format I want. No existing profiler filters and aggregates profiling data for games specifically.

I want to know what makes my game lag. Sure, I also care about certain operations taking longer than usual, or about inefficient resource usage in the worker thread. The most important question that no current profiler answers is: In the frames that currently do lag, what is the critical path that makes them take too long? Which function should I optimise first to reduce lag the most?

I know that, with the right profiler, these questions could be answered automatically.

Hybrid Sampling Profiler

My dream profiler would be a hybrid sampling/instrumenting design. It would be a sampling profiler like Austin (https://github.com/P403n1x87/austin), but a handful of key functions would be instrumented in addition to the sampling: Displaying a new frame/waiting for vsync, reading inputs, draw calls to the GPU, spawning threads, opening files and sockets, and similar operations should always be tracked. Even if displaying a frame is not a heavy operation, it is still important to measure exactly when it happens, if not how long it takes. If a draw call returns right away, and the real work on the GPU begins immediately, it’s still useful to know when the GPU started working. Without knowing exactly when inputs are read, and when a frame is displayed, it is difficult to know if a frame is lagging. Especially when those operations are fast, they are likely to be missed by a sampling profiler.
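The instrumenting half could be as simple as a decorator that logs exact start and end timestamps for the handful of key functions. This is only a sketch under my own assumptions: `instrumented`, `read_inputs` and `present_frame` are hypothetical names, and the sampling half (which a tool like Austin would provide) is left out entirely.

```python
import functools
import time

events = []  # (function name, start ns, end ns), in order of completion


def instrumented(func):
    """Record exactly when a key function starts and finishes."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter_ns()
        try:
            return func(*args, **kwargs)
        finally:
            events.append((func.__name__, start, time.perf_counter_ns()))
    return wrapper


@instrumented
def read_inputs():
    return []  # stand-in for polling the keyboard or gamepad


@instrumented
def present_frame():
    pass  # stand-in for flipping the display / waiting for vsync


def frame_durations(event_log, marker="present_frame"):
    """Time between consecutive completions of the marker function, in ns."""
    ends = [end for name, _start, end in event_log if name == marker]
    return [later - earlier for earlier, later in zip(ends, ends[1:])]


for _ in range(3):
    read_inputs()
    present_frame()

durations = frame_durations(events)
```

Even though both functions return almost instantly here, every call shows up in the log, which is exactly what pure sampling cannot guarantee.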

Tracking Other Resources

It would be a good idea to collect CPU core utilisation, GPU utilisation, and memory allocation/usage as well. What does it mean when one thread spends all of its time in that function? Is it idling? Is it busy-waiting? Is it waiting for another thread? Which one?

It would also be nice to know if a thread is waiting for IO. This is probably a “heavy” operation and would slow the game down.

There are many different vendor-specific tools for GPU debugging, some old ones that worked well for OpenGL but are no longer developed, open-source tools that require source code changes in your game, and the newest ones directly from GPU manufacturers that only support DirectX 12 or Vulkan, but no OpenGL or graphics card that was built before 2018. It would probably be better to err on the side of collecting less data and supporting more hardware and graphics APIs.

The profiler should collect enough data to answer questions like: Why is my game lagging even though the CPU is utilised at 60% and the GPU is utilised at 30%? During that function call in the main thread, was the GPU doing something, and were the other cores idling?

Engine/Framework/Scripting Aware

The profiler knows which samples/stack frames are inside gameplay or engine code, native or interpreted code, project-specific or third-party code.

In my experience, it’s not particularly useful to know that the code spent 50% of the time in ceval.c, or 40% of the time in SDL_LowerBlit, but that’s the level of granularity provided by many profilers.

Instead, the profiler should record interpreted code, and allow the game to set a hint if the game is in turn interpreting code. For example, if there is a dialogue engine, that engine could set a global “interpreting dialogue” flag and a “current conversation file and line” variable based on source maps, and the profiler would record those, instead of stopping at the dialogue interpreter-loop function.

Of course, this feature requires some cooperation from the game engine or scripting language.

Catching Common Performance Mistakes

With a hybrid sampling/instrumenting profiler that knows about frames or game state update steps, it is possible to instrument many or most “heavy” functions. Maybe this functionality should be turned off by default. If most “heavy functions”, for example “parsing a TTF file to create a font object”, are instrumented, the profiler can automatically highlight a mistake when the programmer loads a font from disk during every frame, a hundred frames in a row.

This would not be part of the sampling stage, but part of the visualisation/analysis stage.
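
As a rough sketch of that analysis step, assume the instrumented functions produce a list of (frame number, function name) records. The record format, the function name find_repeated_heavy_calls and the threshold of 100 frames are assumptions for this example.

```python
from collections import defaultdict


def find_repeated_heavy_calls(events, min_consecutive_frames=100):
    """Find heavy functions called in many consecutive frames.

    events: iterable of (frame_number, function_name) records, one per
    call of an instrumented "heavy" function (hypothetical format).
    Returns {function_name: longest run of consecutive frames} for every
    function whose longest run reaches the threshold.
    """
    frames_by_function = defaultdict(set)
    for frame, name in events:
        frames_by_function[name].add(frame)

    suspicious = {}
    for name, frames in frames_by_function.items():
        longest = run = 0
        previous = None
        for frame in sorted(frames):
            # Extend the run if this frame directly follows the last one.
            if previous is not None and frame == previous + 1:
                run += 1
            else:
                run = 1
            longest = max(longest, run)
            previous = frame
        if longest >= min_consecutive_frames:
            suspicious[name] = longest
    return suspicious
```

A font that is loaded once at startup never shows up in the result. A font that is loaded in every frame is reported along with the length of the run.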

Filtering for User Experience

If the profiler knows how long a frame takes, and how much time is spent waiting during each frame, we can safely disregard those frames that complete quickly, with some time to spare. The frames that concern us are those that lag, or those that are dropped. For example, imagine a game spends 30% of its CPU time on culling, and 10% on collision detection. You would think to optimise the culling. But what if the collision detection takes 1 ms during most frames, culling always takes 8 ms, and whenever the player fires a bullet, the collision detection causes a lag spike? The time spent on culling is not the problem here.

This would probably not be part of the sampling stage, but part of the visualisation/analysis stage. Still, you could use this information to discard “fast enough” frames and re-use the memory, and only focus on keeping profiling information from the worst cases.
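
The culling example can be played through with made-up numbers. In this sketch, the 60 FPS frame budget, the frame format (one dictionary of milliseconds per task) and the 30 ms spike are all assumptions. Only the 8 ms for culling and the 1 ms for collision detection come from the example above.

```python
from collections import Counter

FRAME_BUDGET_MS = 1000 / 60  # assumption: the game targets 60 frames per second


def slow_frames(frames, budget_ms=FRAME_BUDGET_MS):
    """Keep only the frames that took longer than the frame budget."""
    return [frame for frame in frames if sum(frame.values()) > budget_ms]


def time_per_task(frames):
    """Add up the milliseconds spent on each task over the given frames."""
    totals = Counter()
    for frame in frames:
        totals.update(frame)
    return totals


# 99 ordinary frames and one frame in which the player fires a bullet.
normal = {"culling": 8.0, "collision": 1.0}
spike = {"culling": 8.0, "collision": 30.0}
frames = [normal] * 99 + [spike]

overall = time_per_task(frames)               # dominated by culling
lagging = time_per_task(slow_frames(frames))  # dominated by collision
```

Over all frames, culling takes the most time. Over the frames that missed the budget, collision detection does.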

Aggregating By Code Paths

This is easier when you don’t use an engine, but it can probably also be done if the profiler is “engine-aware”. It would require some per-engine custom code though. Instead of saying “The game spent 30% of the time doing vector addition”, or, smarter, “The game spent 10% of the frames that lagged most in the MobAIRebuildMesh function”, I want the profiler to distinguish between game states like “inventory menu”, “spell targeting (first person)” or “switching to adjacent area”. If the game does not use a data-driven engine, but multiple hand-written game loops, these states can easily be distinguished (but perhaps not labelled) by comparing call stacks: Different states with different game loops call the code to update the screen from different places – and different code paths could have completely different performance characteristics, so it makes sense to evaluate them separately.

Because the hypothetical hybrid profiler instruments key functions, enough call stack information to distinguish different code paths is usually available, and the profiler might be able to automatically distinguish between the loading screen, the main menu, and the game world, without any need for the code to give hints to the profiler.

This could also help to keep the memory usage of the profiler down without discarding too much interesting information, by only keeping the 100 worst frames per code path. This way, the profiler can collect performance data on the gameplay without running out of RAM during the loading screen.
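
Keeping only the worst frames per code path could be done with one small min-heap per call stack. This is a sketch under the assumption that the profiler identifies a code path by the tuple of instrumented function names on the stack. The class name WorstFrames and its methods are invented for this example.

```python
import heapq
from collections import defaultdict


class WorstFrames:
    """Keep the N slowest frames for every distinct code path."""

    def __init__(self, keep=100):
        self.keep = keep
        self._heaps = defaultdict(list)
        self._counter = 0  # tie-breaker, so sample payloads are never compared

    def record(self, call_stack, duration_ms, samples=None):
        """Store a frame, evicting the fastest stored frame if the path is full."""
        entry = (duration_ms, self._counter, samples)
        self._counter += 1
        heap = self._heaps[tuple(call_stack)]
        if len(heap) < self.keep:
            heapq.heappush(heap, entry)
        elif duration_ms > heap[0][0]:
            # heap[0] is the fastest frame kept so far for this code path.
            heapq.heapreplace(heap, entry)

    def worst(self, call_stack):
        """Return the kept frame durations for a code path, slowest first."""
        heap = self._heaps.get(tuple(call_stack), [])
        return sorted((duration for duration, _, _ in heap), reverse=True)
```

Memory use is bounded by the number of distinct code paths times the number of frames kept, no matter how long the loading screen runs.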

In a data-driven engine like Unity, I’d expect everything to happen all the time, on the same, well-optimised code path. But this is not a wish list for a Unity profiler. This is a wish list for a profiler for your own custom game engine, glue code, and dialogue trees.

All I need is a profiler that is a little smarter, that is aware of SDL, OpenGL, Vulkan, and YarnSpinner or Ink. Ideally, I would need somebody else to write it for me.



AI: A Misnomer

As you know, Game AI is a misnomer, a misleading name. Games usually don't need to be intelligent, they just need to be fun. There is NPC behaviour (whether the NPCs are friendly, neutral, or antagonistic), computer opponent strategy for multi-player games ranging from chess to Tekken or StarCraft, and unit pathfinding. Some games use novel and interesting algorithms for computer opponents (Frozen Synapse uses some sort of evolutionary algorithm) or for unit pathfinding (Planetary Annihilation uses flow fields for mass unit pathfinding), but most of the time it's variants or mixtures of simple hard-coded behaviours, minimax with alpha-beta pruning, state machines, HTN, GOAP, and A*.

Increasingly, AI outside of games has become a misleading term, too. It used to be that people called more things AI, then machine learning was called machine learning, robotics was called robotics, expert systems were called expert systems, then later ontologies and knowledge engineering were called the semantic web, and so on, with the remaining approaches and the original old-fashioned AI still being called AI.

AI used to be cool, then it was uncool, and the useful bits of AI were used for recommendation systems, spam filters, speech recognition, search engines, and translation. Calling it "AI" was hand-waving, a way to obscure what your system does and how it works.

With the advent of ChatGPT, we have arrived in the worst of both worlds. Calling things "AI" is cool again, but now some people use "AI" to refer specifically to large language models or text-to-image generators based on language models. Some people still use "AI" to mean autonomous robots. Some people use "AI" to mean simple artificial neural networks, Bayesian filtering, and recommendation systems. Infuriatingly, the word "algorithm" has increasingly entered the vernacular to refer to Bayesian filters and recommendation systems, for situations where a computer science textbook would still use "heuristic". Computer science textbooks still use "AI" to mean things like chess playing, maze solving, and fuzzy logic.

Let's look at a recent example! Scott Alexander wrote a blog post (https://www.astralcodexten.com/p/god-help-us-lets-try-to-understand) about current research (https://transformer-circuits.pub/2023/monosemantic-features/index.html) on distributed representations and sparsity, and the topology of the representations learned by a neural network. Scott Alexander is a psychiatrist with no formal training in machine learning or even programming. He uses the term "AI" to refer to neural networks throughout the blog post. He doesn't say "distributed representations", or "sparse representations". The original publication did use technical terms like "sparse representation". These should be familiar to people who followed the debates about local representations versus distributed representations back in the 80s (or people like me who read those papers in university). But in that blog post, it's not called a neural network, it's called an "AI". Now this could have several reasons: Either Scott Alexander doesn't know any better, or, more charitably, he does but doesn't know how to use the more precise terminology correctly, or he intentionally wants to dumb down the research for people who intuitively understand what a latent feature space is, but have never heard about "machine learning" or "artificial neural networks".

Another example can come in the form of a thought experiment: You write an app that helps people tidy up their rooms, and find things in that room after putting them away, mostly because you needed that app for yourself. You show the app to a non-technical friend, because you want to know how intuitive it is to use. You ask him if he thinks the app is useful, and if he thinks people would pay money for this thing on the app store, but before he answers, he asks a question of his own: Does your app have any AI in it?

What does he mean?

Is "AI" just the new "blockchain"?



Share Your Anecdotes: Multicore Pessimisation

I took a look at the specs of the new 7000 series Threadripper CPUs, and I really don't have any excuse to buy one, even if I had the money to spare. I thought long and hard about different workloads, but nothing came to mind.

Back in university, we had courses about map/reduce clusters, and I experimented with parallel interpreters for Prolog, and distributed computing systems. What I learned is that the potential performance gains from better data structures and algorithms trump the performance gains from fancy hardware, and that there is more to be gained from using the GPU or from re-writing the performance-critical sections in C and making sure your data structures take up less memory than from multi-threaded code. Of course, all this is especially important when you are working in pure Python, because of the GIL.

The performance penalty of parallelisation hits even harder when you try to distribute your computation between different computers over the network, and the overhead of serialisation, communication, and scheduling work can easily exceed the gains of parallel computation, especially for small to medium workloads. If you benchmark your Hadoop cluster on a toy problem, you may well find that it's faster to solve your toy problem on one desktop PC than a whole cluster, because it's a toy problem, and the gains only kick in when your data set is too big to fit on a single computer.

The new Threadripper got me thinking: Has this happened to somebody with just a multicore CPU? Is there software that performs better with 2 cores than with just one, and better with 4 cores than with 2, but substantially worse with 64? It could happen! Deadlocks, livelocks, weird inter-process communication issues where you have one process per core and every one of the 64 processes communicates with the other 63 via pipes? There could be software that has a badly optimised main thread, or a badly optimised work unit scheduler, and the limiting factor is single-thread performance of that scheduler that needs to distribute and integrate work units for 64 threads, to the point where the worker threads are mostly idling and only one core is at 100%.
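
To make the scheduler scenario concrete, here is a toy model, not a measurement. It assumes the run time consists of a fixed serial part, a perfectly parallel part, and a coordination cost in the single-threaded scheduler that grows linearly with the number of workers. The function name and all the numbers are invented for illustration.

```python
def modelled_runtime(cores, serial=1.0, parallel=100.0,
                     scheduling_cost_per_worker=1.0):
    """Toy run time in arbitrary units for a given number of cores.

    serial: work that cannot be parallelised
    parallel: work that is split evenly across all cores
    scheduling_cost_per_worker: time the single-threaded scheduler
        spends distributing and integrating work for each worker
        (assumed to grow linearly with the worker count)
    """
    return serial + parallel / cores + scheduling_cost_per_worker * cores


for cores in (1, 2, 4, 64):
    print(cores, modelled_runtime(cores))
```

With these made-up parameters, the modelled program gets faster from 1 to 2 to 4 cores, but is slower with 64 cores than with 2, because the scheduler term dominates. If every process talks to every other process, the coordination cost would grow even faster than linearly.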

I am not trying to blame any programmer if this happens. Most likely such software was developed back when quad-core CPUs were a new thing, or even back when there were multi-CPU-socket mainboards, and the developer never imagined that one day there would be Threadrippers on the consumer market. Programs from back then, built for Windows XP, could still run on Windows 10 or 11.

In spite of all this, I suspect that this kind of problem is quite rare in practice. It requires software that spawns one thread or one process per core, but which is deoptimised for more cores, maybe written under the assumption that users have two to six CPU cores, a user who can afford a Threadripper, and needs a Threadripper, and a workload where the problem is noticeable. You wouldn't get a Threadripper in the first place if it made your workflows slower, so that hypothetical user probably has one main workload that really benefits from the many cores, and another that doesn't.

So, has this happened to you? Do you have a Threadripper at work? Do you work in bioinformatics or visual effects? Do you encode a lot of video? Do you know a guy who does? Do you own a Threadripper or an Ampere just for the hell of it? Or have you tried to build a Hadoop/Beowulf/OpenMP cluster, only to have your code run slower?

I would love to hear from you.



Your Code Is Hard To Read!

This is one of those posts I make not because I think my followers need to hear them, but because I want to link to them from Discord from time to time. If you are a Moderator, Contributor or "Helpfulie" on the PyGame Community Discord, I would welcome your feedback on this one!

"You posted your code and asked a question. We can't answer your question. Your code is hard to read."

Often when we tell people this, they complain that coding guidelines are just aesthetic preferences, and they didn't ask if their code followed coding guidelines. They asked us to fix the bug. That may be so, but the problem remains: If you ask us to fix your code, we can only help you if we can read it.

Furthermore, if there are many unrelated bugs, architectural problems, and hard to understand control flow, the concept of fixing an isolated bug becomes more and more unclear.

In order to fix unreadable code, you could:

eliminate global variables

replace magic numbers with constants

replace magic strings with enumerations

name classes, functions, constants, variables according to consistent coding standards

have functions that do one thing and one thing only like "collision detection" or "collision handling". If your function does two things at the same time, like rendering AND collision detection, then it must be refactored

rewrite deeply nested and indented code to be shallower

rewrite code that keeps a lot of state in local variables into special-case functions

use data structures that make sense

write comments that explain the program, not comments that explain the programming language

delete unnecessary/unreachable code from the question to make it easier to read or from your program to see if the problem persists
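
A few of these points can be shown in a short sketch: named constants instead of magic numbers, an enumeration instead of magic strings, and small functions that each do one thing. All names and values here are made up for the example and are not taken from anybody's actual code.

```python
from enum import Enum

# Named constants instead of magic numbers scattered through the code.
SCREEN_WIDTH = 800
PLAYER_SPEED = 5


class GameState(Enum):
    """An enumeration instead of magic strings like "menu" or "playing"."""
    MENU = "menu"
    PLAYING = "playing"


def move_player(x, direction):
    """Move the player horizontally and keep it on the screen.

    direction is -1 for left, 0 for standing still, 1 for right.
    """
    new_x = x + direction * PLAYER_SPEED
    return max(0, min(SCREEN_WIDTH, new_x))


def next_state(state, start_pressed):
    """Decide the next game state; this function does nothing else."""
    if state is GameState.MENU and start_pressed:
        return GameState.PLAYING
    return state
```

Neither function touches global state or draws anything, so each can be posted and tested on its own when asking a question.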

My own programs often violate one or more of those rules, especially when they are one-off throwaway scripts, or written during a game jam, or prototypes. I would never try to ask other people for help on my unreadable code. But I am an experienced programmer. I rarely ask for help in an unhelpful way. I almost never ask for help in a way that makes other experienced programmers ask for more code, or less code, or additional context. I post a minimal example, and I usually know what I am doing. If I don't know what I am doing, or if I need suggestions about solving my problem completely differently, I say so.

Beginner programmers are at a disadvantage here. They don't know what good code looks like, they don't know what good software architecture looks like, they don't know how to pare down a thousand lines of code to a minimal example, and if they try to guess which section of code contains the error, they usually guess wrong.

None of this matters. It may be terribly unfair that I know how to ask smart questions, and beginner programmers ask ill-posed questions or post code that is so bad it would be easier and quicker for an experienced programmer to re-write the whole thing. It is often not feasible to imagine what the author might have intended the code to work like and to fix the bugs one by one while keeping the structure intact. This is not a technical skill, this is a communicative and social skill that software engineers must pick up sooner or later: Writing code for other people to read.

If your code is too hard to read, people can't practically help you.