#Apache hadoop


Grow Your Business with Ksolves Apache Hadoop Development Services

Explore the partnership between Ksolves and Apache Hadoop in revolutionizing big data strategies. Learn how this collaboration enhances data processing and decision-making. Explore more: https://www.ksolves.com/apache-hadoop-development-company


How to execute Hadoop commands in the Hive shell or command-line interface?


You can execute Hadoop commands from the Hive CLI. It is very simple.

Just put an exclamation mark (!) before your Hadoop command in the Hive CLI and end the command with a semicolon (;).

Example:

```
hive> !hadoop fs -ls / ;
drwxr-xr-x - hdfs supergroup 0 2013-03-20 12:44 /app
drwxrwxrwx - hdfs supergroup 0 2013-05-23 11:54 /tmp
drwxr-xr-x - hdfs supergroup 0 2013-05-08…
```
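The listing that `!hadoop fs -ls /` prints is plain text, so a script that captures it has to parse it itself. Here is a minimal Python sketch, assuming the eight whitespace-separated columns shown in the session (permissions, replication, owner, group, size, date, time, path). `Entry` and `parse_ls` are hypothetical helpers, not part of Hadoop or Hive.

```python
from typing import List, NamedTuple


class Entry(NamedTuple):
    """One line of `hadoop fs -ls` output (hypothetical helper type)."""
    permissions: str
    replication: str  # "-" for directories, a number for files
    owner: str
    group: str
    size: int
    date: str
    time: str
    path: str


def parse_ls(output: str) -> List[Entry]:
    """Parse `hadoop fs -ls` text into Entry records.

    Lines without eight columns (blank lines, the "Found N items" header) are skipped.
    """
    entries = []
    for line in output.splitlines():
        # maxsplit=7 keeps any spaces inside the path as part of the last field.
        fields = line.split(None, 7)
        if len(fields) != 8:
            continue
        perms, repl, owner, group, size, date, time, path = fields
        entries.append(Entry(perms, repl, owner, group, int(size), date, time, path))
    return entries


sample = """drwxr-xr-x - hdfs supergroup 0 2013-03-20 12:44 /app
drwxrwxrwx - hdfs supergroup 0 2013-05-23 11:54 /tmp"""

for e in parse_ls(sample):
    kind = "dir" if e.permissions.startswith("d") else "file"
    print(kind, e.path, e.owner)
```

To parse real output from a script instead of the Hive CLI, you could capture `hadoop fs -ls /` with `subprocess.run([...], capture_output=True, text=True).stdout`. This assumes the `hadoop` client is on your `PATH`.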


#Apache Hadoop#BigData#exclamation mark#hadoop#hadoop command#hadoop command in hive#hdfs#hive#hive cli#hive permissions#mapreduce#semicolon


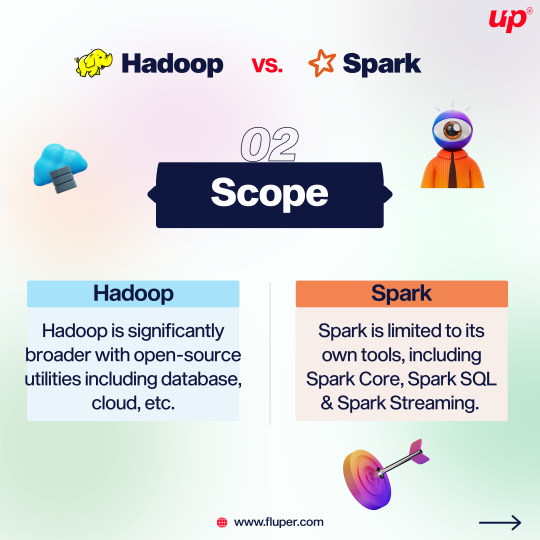

Hadoop vs Spark

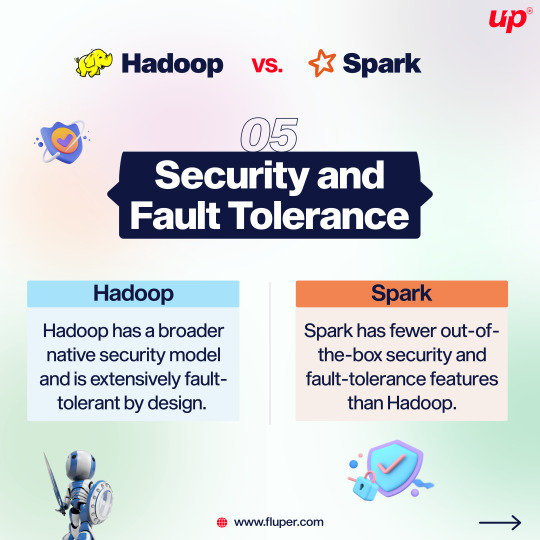

Apache Hadoop is an open-source software framework that lets users manage big datasets (from gigabytes to petabytes) by enabling a network of computers (or “nodes”) to solve vast and intricate data problems. It is highly stable and cost-effective, and it stores and processes structured, semi-structured, and unstructured data (e.g., Internet clickstream records, web server logs, IoT sensor data).

Advantages: data protection in the event of hardware failure, vast scalability (thousands of machines), and real-time analytics.
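The "data protection in the event of hardware failure" point comes from HDFS. HDFS splits each file into blocks and stores several replicas of every block on different machines. The toy Python sketch below assumes HDFS's defaults of 128 MB blocks (`dfs.blocksize`) and three replicas (`dfs.replication`). The round-robin placement and the helper names are hypothetical simplifications; real HDFS uses a rack-aware placement policy.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # HDFS default block size (dfs.blocksize) since Hadoop 2.x
REPLICATION = 3                 # HDFS default replication factor (dfs.replication)


def plan_blocks(file_size, nodes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Split a file into fixed-size blocks and give each block replicas on distinct nodes.

    Round-robin placement is a simplification; real HDFS is rack-aware.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = -(-file_size // block_size)  # ceiling division
    return [[nodes[(b + r) % len(nodes)] for r in range(replication)]
            for b in range(num_blocks)]


def survives(plan, failed_nodes):
    """True if every block still has at least one replica on a healthy node."""
    return all(any(node not in failed_nodes for node in replicas) for replicas in plan)


plan = plan_blocks(300 * 1024 * 1024, ["node1", "node2", "node3", "node4"])
print(len(plan), "blocks")                          # a 300 MB file needs 3 blocks
print(survives(plan, {"node1", "node2"}))           # two machines down
print(survives(plan, {"node1", "node2", "node3"}))  # three machines down
```

With three replicas, losing any two machines still leaves a copy of every block. Losing three can make some blocks unavailable.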

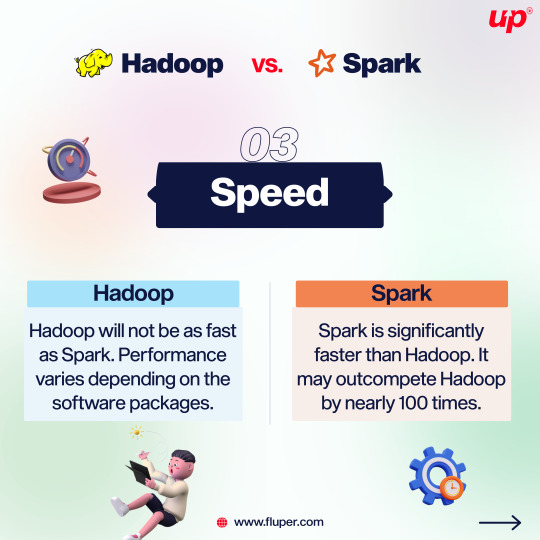

Apache Spark is also an open-source data processing engine for big datasets. Like Hadoop, it splits large tasks across many nodes, but it uses RAM to cache and process data instead of a file system on disk. This increases speed and enables Spark to handle use cases that Hadoop cannot.

Advantages: it can be up to 100x faster than Hadoop for smaller workloads, and it has APIs designed for ease of use when working with semi-structured data and transforming data.

Spark runs faster than Hadoop on smaller batches, but because it relies on RAM rather than disk storage, it incurs a higher cost. Hadoop is better suited for large-batch data analysis (i.e., extreme scaling up, say 10,000x) and linear data processing (i.e., not scaling up at all from one node). Spark is better suited for real-time analytics of live, unstructured data streams (i.e., scaling up to a small extent only, say 100x).

Spark also includes a machine learning library, MLlib, which performs ML computations in memory.
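Both frameworks split a job across many nodes, and the classic illustration of that model is a word count. The single-process Python toy below mimics the map, shuffle, and reduce phases of Hadoop MapReduce. It is not Hadoop's or Spark's API, and the function names are hypothetical. A real framework distributes each phase across the cluster. Hadoop MapReduce also writes intermediate map output to disk, which is one place where Spark's in-memory approach saves time.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group every emitted value under its key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: combine the values collected for each key (here, a sum)."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines):
    # On a cluster, each input split would be mapped on a different node;
    # here everything runs sequentially in one process.
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))


print(word_count(["Spark caches data in RAM", "Hadoop writes to disk", "spark and hadoop"]))
```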

(These are just my notes. This could be wrong info. Don’t take it as law.)


Apache Spark and Apache Hadoop are both popular, open-source data science tools offered by the Apache Software Foundation.


Both projects continue to grow in popularity and features through the development and support of their communities. Join that community with Fluper.


instagram

#hadoop#alarm#Apache spark#coding#code#machinelearning#programming#datascience#python#programmer#artificialintelligence#deeplearning#ai#Instagram


Apache: Hadoop and Spark

As the Hadoop ecosystem has evolved rapidly, Spark has joined it as a more recent addition. Both help with the traditional challenges of storing and processing large data sets. However, whereas Hadoop exists for data management, the primary purpose of Spark is data processing. So, even though both are open-source big data tools, they have some key distinctions. Therefore,…


#apache#big data#big data analytics#big-data#big-data-analytics#bigdata#bigdataanalytics#data infrastructure#data-infrastructure#hadoop#HDFS#spark


Is Apache Spark going to replace Hadoop?

Explore Apache Spark, a high-speed data processing framework, and its relationship with Hadoop. Discover its key features, use cases, and why it's not a Hadoop replacement.


WEEK 2: SparkML

1) Select the best definition of a machine learning system.

-> A machine learning system applies a specific machine learning algorithm to train data models. After training the model, the system infers or “predicts” results on previously unseen data.

2) Which of the following options are true about Spark ML inbuilt utilities?

-> Spark ML inbuilt utilities include a linear algebra package.

->…
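The train-then-infer definition in answer 1 can be made concrete with the smallest possible model. This plain-Python sketch fits a straight line by ordinary least squares on training data and then predicts for an input it never saw. It is not Spark ML code; Spark ML wraps the same pattern in estimators whose `fit` returns a model with a `transform` method. The function names here are hypothetical.

```python
def fit_line(xs, ys):
    """Train: ordinary least squares for y = slope * x + intercept.

    Needs at least two distinct x values.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Infer: apply the trained model to a previously unseen input."""
    slope, intercept = model
    return slope * x + intercept


model = fit_line([0, 1, 2, 3], [1, 3, 5, 7])  # training data lies on y = 2x + 1
print(predict(model, 10))  # → 21.0 (10 was not in the training data)
```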


From Curious Novice to Data Enthusiast: My Data Science Adventure

I've always been fascinated by data science, a field that seamlessly blends technology, mathematics, and curiosity. In this article, I want to take you on a journey—my journey—from being a curious novice to becoming a passionate data enthusiast. Together, let's explore the thrilling world of data science, and I'll share the steps I took to immerse myself in this captivating realm of knowledge.

The Spark: Discovering the Potential of Data Science

The moment I stumbled upon data science, I felt a spark of inspiration. Witnessing its impact across various industries, from healthcare and finance to marketing and entertainment, I couldn't help but be drawn to this innovative field. The ability to extract critical insights from vast amounts of data and uncover meaningful patterns fascinated me, prompting me to dive deeper into the world of data science.

Laying the Foundation: The Importance of Learning the Basics

To embark on this data science adventure, I quickly realized the importance of building a strong foundation. Learning the basics of statistics, programming, and mathematics became my priority. Understanding statistical concepts and techniques enabled me to make sense of data distributions, correlations, and significance levels. Programming languages like Python and R became essential tools for data manipulation, analysis, and visualization, while a solid grasp of mathematical principles empowered me to create and evaluate predictive models.

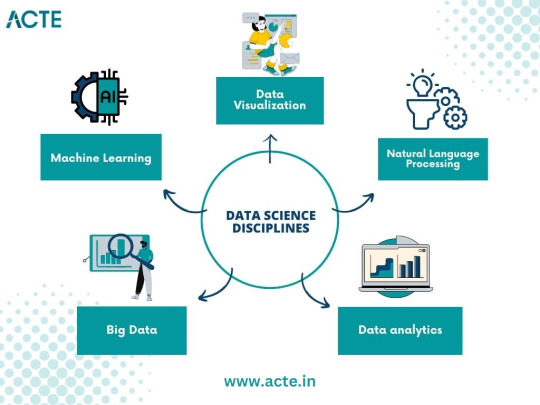

The Quest for Knowledge: Exploring Various Data Science Disciplines

A. Machine Learning: Unraveling the Power of Predictive Models

Machine learning, a prominent discipline within data science, captivated me with its ability to unlock the potential of predictive models. I delved into the fundamentals, understanding the underlying algorithms that power these models. Supervised learning, where data with labels is used to train prediction models, and unsupervised learning, which uncovers hidden patterns within unlabeled data, intrigued me. Exploring concepts like regression, classification, clustering, and dimensionality reduction deepened my understanding of this powerful field.
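Unsupervised learning finds structure in data that has no labels, and clustering is the usual first example. The toy one-dimensional k-means below is written in plain Python, and its function name is hypothetical. It uses a naive, evenly spaced initialization rather than the k-means++ seeding that libraries such as scikit-learn use by default.

```python
def kmeans_1d(points, k, iterations=20):
    """Toy 1-D k-means: group unlabeled numbers into k clusters; returns the centroids."""
    pts = sorted(points)
    if not 1 <= k <= len(pts):
        raise ValueError("need 1 <= k <= number of points")
    # Naive initialization: spread the starting centroids evenly over the sorted data.
    if k == 1:
        centroids = [pts[0]]
    else:
        centroids = [pts[i * (len(pts) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in pts:
            nearest = min(range(k), key=lambda c: abs(p - centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to its cluster mean (keep it if the cluster is empty).
        new_centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    return centroids


print(kmeans_1d([1, 2, 3, 10, 11, 12], k=2))  # → [2.0, 11.0]
```

No labels are given. The algorithm discovers the two groups purely from how close the numbers are to each other.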

B. Data Visualization: Telling Stories with Data

In my data science journey, I discovered the importance of effectively visualizing data to convey meaningful stories. Navigating through various visualization tools and techniques, such as creating dynamic charts, interactive dashboards, and compelling infographics, allowed me to unlock the hidden narratives within datasets. Visualizations became a medium to communicate complex ideas succinctly, enabling stakeholders to understand insights effortlessly.

C. Big Data: Mastering the Analysis of Vast Amounts of Information

The advent of big data challenged traditional data analysis approaches. To conquer this challenge, I dived into the world of big data, understanding its nuances and exploring techniques for efficient analysis. Uncovering the intricacies of distributed systems, parallel processing, and data storage frameworks empowered me to handle massive volumes of information effectively. With tools like Apache Hadoop and Spark, I was able to mine valuable insights from colossal datasets.

D. Natural Language Processing: Extracting Insights from Textual Data

Textual data surrounds us in the digital age, and the realm of natural language processing fascinated me. I delved into techniques for processing and analyzing unstructured text data, uncovering insights from tweets, customer reviews, news articles, and more. Understanding concepts like sentiment analysis, topic modeling, and named entity recognition allowed me to extract valuable information from written text. This kind of analysis is transforming areas such as customer service and content recommendation systems.

Building the Arsenal: Acquiring Data Science Skills and Tools

Acquiring essential skills and familiarizing myself with relevant tools played a crucial role in my data science journey. Programming languages like Python and R became my companions, enabling me to manipulate, analyze, and model data efficiently. Additionally, I explored popular data science libraries and frameworks such as TensorFlow, scikit-learn, Pandas, and NumPy, which expedited the development and deployment of machine learning models. The arsenal of skills and tools I accumulated became my assets in the quest for data-driven insights.

The Real-World Challenge: Applying Data Science in Practice

Data science is not just an academic pursuit but rather a practical discipline aimed at solving real-world problems. Throughout my journey, I sought to identify such problems and apply data science methodologies to provide practical solutions. From predicting customer churn to optimizing supply chain logistics, the application of data science proved transformative in various domains. Sharing success stories of leveraging data science in practice inspires others to realize the power of this field.

Cultivating Curiosity: Continuous Learning and Skill Enhancement

Embracing a growth mindset is paramount in the world of data science. The field is rapidly evolving, with new algorithms, techniques, and tools emerging frequently. To stay ahead, it is essential to cultivate curiosity and foster a continuous learning mindset. Keeping abreast of the latest research papers, attending data science conferences, and engaging in data science courses nurtures personal and professional growth. The journey to becoming a data enthusiast is a lifelong pursuit.

Joining the Community: Networking and Collaboration

Being part of the data science community is a catalyst for growth and inspiration. Engaging with like-minded individuals, sharing knowledge, and collaborating on projects enhances the learning experience. Joining online forums, participating in Kaggle competitions, and attending meetups provides opportunities to exchange ideas, solve challenges collectively, and foster invaluable connections within the data science community.

Overcoming Obstacles: Dealing with Common Data Science Challenges

Data science, like any discipline, presents its own set of challenges. From data cleaning and preprocessing to model selection and evaluation, obstacles arise at each stage of the data science pipeline. Strategies and tips to overcome these challenges, such as building reliable pipelines, conducting robust experiments, and leveraging cross-validation techniques, are indispensable in maintaining motivation and achieving success in the data science journey.
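Cross-validation evaluates a model on several held-out slices of the data instead of a single split. Here is a minimal k-fold sketch in plain Python; the function names are hypothetical. The folds are contiguous and unshuffled, whereas library versions such as scikit-learn's `KFold` offer shuffling as an option. The "model" is just the training-set mean, to keep the focus on the splitting.

```python
def k_fold_indices(n, k):
    """Yield (train, test) index lists for k-fold cross-validation.

    Each of the n samples lands in exactly one test fold; when n is not
    divisible by k, the first n % k folds get one extra sample.
    """
    if not 1 < k <= n:
        raise ValueError("need 1 < k <= n")
    start = 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def cross_validate_mean_baseline(ys, k):
    """Average absolute error of a 'predict the training mean' model across k folds."""
    errors = []
    for train, test in k_fold_indices(len(ys), k):
        mean = sum(ys[i] for i in train) / len(train)
        errors.append(sum(abs(ys[i] - mean) for i in test) / len(test))
    return sum(errors) / len(errors)


print(cross_validate_mean_baseline([3, 5, 4, 6, 5, 7], k=3))
```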

Balancing Act: Building a Career in Data Science alongside Other Commitments

For many aspiring data scientists, the pursuit of knowledge and skills must coexist with other commitments, such as full-time jobs and personal responsibilities. Effectively managing time and developing a structured learning plan is crucial in striking a balance. Tips such as identifying pockets of dedicated learning time, breaking down complex concepts into manageable chunks, and seeking mentorships or online communities can empower individuals to navigate the data science journey while juggling other responsibilities.

Ethical Considerations: Navigating the World of Data Responsibly

As data scientists, we must navigate the world of data responsibly, being mindful of the ethical considerations inherent in this field. Safeguarding privacy, addressing bias in algorithms, and ensuring transparency in data-driven decision-making are critical principles. Exploring topics such as algorithmic fairness, data anonymization techniques, and the societal impact of data science encourages responsible and ethical practices in a rapidly evolving digital landscape.

Embarking on a data science adventure from a curious novice to a passionate data enthusiast is an exhilarating and rewarding journey. By laying a foundation of knowledge, exploring various data science disciplines, acquiring essential skills and tools, and engaging in continuous learning, one can conquer challenges, build a successful career, and have a good influence on the data science community. It's a journey that never truly ends, as data continues to evolve and offer exciting opportunities for discovery and innovation. So, start your own data science adventure, and let the exploration begin!

#data science#data analytics#data visualization#big data#machine learning#artificial intelligence#education#information


Java's Lasting Impact: A Deep Dive into Its Wide Range of Applications

Java programming stands as a towering pillar in the world of software development, known for its versatility, robustness, and extensive range of applications. Since its inception, Java has played a pivotal role in shaping the technology landscape. In this comprehensive guide, we will delve into the multifaceted world of Java programming, examining its wide-ranging applications, discussing its significance, and highlighting how ACTE Technologies can be your guiding light in mastering this dynamic language.

The Versatility of Java Programming:

Java programming is synonymous with adaptability. It's a language that transcends boundaries and finds applications across diverse domains. Here are some of the key areas where Java's versatility shines:

1. Web Development: Java has long been a favorite choice for web developers. Robust and scalable, it powers dynamic web applications, allowing developers to create interactive and feature-rich websites. Java-based web frameworks like Spring and JavaServer Faces (JSF) simplify the development of complex web applications.

2. Mobile App Development: Android, the most widely used mobile operating system in the world, has long relied on Java for app development, although Google now recommends Kotlin for new Android apps. Java's "write once, run anywhere" capability makes it a practical choice for creating Android applications that run on a wide range of devices.

3. Desktop Applications: Java's Swing and JavaFX libraries enable developers to craft cross-platform desktop applications with sophisticated graphical user interfaces (GUIs). This cross-platform compatibility ensures that your applications work on Windows, macOS, and Linux.

4. Enterprise Software: Java's strengths in scalability, security, and performance make it a preferred choice for developing enterprise-level applications. Customer Relationship Management (CRM) systems, Enterprise Resource Planning (ERP) software, and supply chain management solutions often rely on Java to deliver reliability and efficiency.

5. Game Development: Java isn't limited to business applications; it's also a contender in the world of gaming. Game developers use Java, along with libraries like LibGDX, to create both 2D and 3D games. The language's versatility allows game developers to target various platforms.

6. Big Data and Analytics: Java plays a significant role in the big data ecosystem. Apache Hadoop is written in Java. Apache Spark, written mainly in Scala, runs on the Java Virtual Machine and offers a Java API for processing and analyzing massive datasets. Java's performance makes it a natural fit for data-intensive tasks.

7. Internet of Things (IoT): Java's ability to run on embedded devices positions it well for IoT development. It is used to build applications for smart homes, wearable devices, and industrial automation systems, connecting the physical world to the digital realm.

8. Scientific and Research Applications: In scientific computing and research projects, Java's performance and libraries for data analysis make it a valuable tool. Researchers leverage Java to process and analyze data, simulate complex systems, and conduct experiments.

9. Cloud Computing: Java is a popular choice for building cloud-native applications and microservices. It is compatible with cloud platforms such as AWS, Azure, and Google Cloud, making it integral to cloud computing's growth.

Why Java Programming Matters:

Java programming's enduring significance in the tech industry can be attributed to several compelling reasons:

Platform Independence: Java's "write once, run anywhere" philosophy allows code to be executed on different platforms without modification. This portability enhances its versatility and cost-effectiveness.

Strong Ecosystem: Java boasts a rich ecosystem of libraries, frameworks, and tools that expedite development and provide solutions to a wide range of challenges. Developers can leverage these resources to streamline their projects.

Security: Java places a strong emphasis on security. Features like sandboxing and automatic memory management enhance the language's security profile, making it a reliable choice for building secure applications.

Community Support: Java enjoys the support of a vibrant and dedicated community of developers. This community actively contributes to its growth, ensuring that Java remains relevant, up-to-date, and in line with industry trends.

Job Opportunities: Proficiency in Java programming opens doors to a myriad of job opportunities in software development. It's a skill that is in high demand, making it a valuable asset in the tech job market.

Java programming is a dynamic and versatile language that finds applications in web and mobile development, enterprise software, IoT, big data, cloud computing, and much more. Its enduring relevance and the multitude of opportunities it offers in the tech industry make it a valuable asset in a developer's toolkit.

As you embark on your journey to master Java programming, consider ACTE Technologies as your trusted partner. Their comprehensive training programs, expert guidance, and hands-on experiences will equip you with the skills and knowledge needed to excel in the world of Java development.

Unlock the full potential of Java programming and propel your career to new heights with ACTE Technologies. Whether you're a novice or an experienced developer, there's always more to discover in the world of Java. Start your training journey today and be at the forefront of innovation and technology with Java programming.


Unlocking the Potential of Big Data with Apache Hadoop

Explore the world of Apache Hadoop and discover how this groundbreaking technology revolutionizes big data processing and analytics for businesses.

Explore more: https://www.ksolves.com/apache-hadoop-development-company


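Hackers are targeting misconfigured servers running Apache Hadoop YARN, Docker, Confluence, or Redis with new Golang-based malware that automates the discovery and compromise of hosts.

The malicious tools used in this campaign exploit configuration weaknesses and abuse an old Atlassian Confluence vulnerability to execute code on the machines.

Researchers at Cado Security, a cloud forensics and incident response company, discovered the campaign and analyzed the payloads, bash scripts, and Golang ELF binaries used in the attacks.

The researchers note that this intrusion set resembles previously reported cloud attacks, some of which have been attributed to threat actors such as TeamTNT, WatchDog, and Kiss-a-Dog.

They began investigating the attack after receiving an initial access alert on a Docker Engine API honeypot, on which a new container based on Alpine Linux had been spawned.

In the next stage, the attackers use multiple shell scripts and common Linux attack techniques to install a cryptocurrency miner, establish persistence, and set up a reverse shell.

New Golang malware for target discovery

According to the researchers, the hackers deploy a set of four new Golang payloads responsible for identifying and exploiting hosts running Hadoop YARN (h.sh), Docker (d.sh), Confluence (w.sh), and Redis (c.sh).

The payload names may be a clumsy attempt to disguise them as bash scripts; however, they are 64-bit Golang ELF binaries.

"Interestingly, the malware developer neglected to strip the binaries, leaving the DWARF debug information intact. No effort was made to obfuscate strings or other sensitive data within the binaries either, making them trivial to reverse engineer." - Cado Security

The hackers use the Golang tools to scan network segments for open ports 2375, 8088, 8090, or 6379, the default ports for the services targeted in this campaign.

In the case of "w.sh", after discovering the IP address of a Confluence server, the tool fetches an exploit for CVE-2022-26134, a critical vulnerability that allows remote attackers to execute code without authentication.

Hackers target Docker, Hadoop, Redis, Confluence with new Golang malware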
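For defenders, the practical takeaway is to make sure these services are not reachable from untrusted networks. Below is a minimal Python sketch for auditing hosts you administer. It checks whether the default ports named in the report accept TCP connections. The port-to-service labels are the services' standard defaults, and the helper names are hypothetical. An open port only means something is listening, not that it is misconfigured. Run the check from a machine outside your trusted network to see roughly what a scanner would see.

```python
import socket

# Default ports probed in the campaign described above.
CAMPAIGN_PORTS = {
    2375: "Docker Engine API (unencrypted)",
    8088: "Hadoop YARN ResourceManager web UI / REST API",
    8090: "Atlassian Confluence",
    6379: "Redis",
}


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def audit(host="127.0.0.1"):
    """Map each campaign port to whether it accepts connections on the given host."""
    return {port: port_is_open(host, port) for port in CAMPAIGN_PORTS}


for port, is_open in audit("127.0.0.1").items():
    status = "OPEN" if is_open else "closed"
    print(f"{port:>5} {status:<6} {CAMPAIGN_PORTS[port]}")
```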


Hackers Exploit Misconfigured YARN, Docker, Confluence, Redis Servers for Crypto Mining

Source: https://thehackernews.com/2024/03/hackers-exploit-misconfigured-yarn.html

More info: https://www.cadosecurity.com/spinning-yarn-a-new-linux-malware-campaign-targets-docker-apache-hadoop-redis-and-confluence/


From Math to Machine Learning: A Comprehensive Blueprint for Aspiring Data Scientists

The realm of data science is vast and dynamic, offering a plethora of opportunities for those willing to dive into the world of numbers, algorithms, and insights. If you're new to data science and unsure where to start, fear not! This step-by-step guide will navigate you through the foundational concepts and essential skills to kickstart your journey in this exciting field. Choosing the best data science institute can further accelerate your journey into this thriving industry.

1. Establish a Strong Foundation in Mathematics and Statistics

Before delving into the specifics of data science, ensure you have a robust foundation in mathematics and statistics. Brush up on concepts like algebra, calculus, probability, and statistical inference. Online platforms such as Khan Academy and Coursera offer excellent resources for reinforcing these fundamental skills.

2. Learn Programming Languages

Data science is synonymous with coding. Choose a programming language – Python and R are popular choices – and become proficient in it. Platforms like Codecademy, DataCamp, and W3Schools provide interactive courses to help you get started on your coding journey.

3. Grasp the Basics of Data Manipulation and Analysis

Understanding how to work with data is at the core of data science. Familiarize yourself with libraries like Pandas in Python or data frames in R. Learn about data structures, and explore techniques for cleaning and preprocessing data. Utilize real-world datasets from platforms like Kaggle for hands-on practice.
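The cleaning steps described above are trimming stray whitespace, dropping incomplete records, and fixing column types. The dependency-free Python sketch below does them with only the standard `csv` module. With Pandas you would typically reach for methods like `DataFrame.dropna()` and `astype()` instead. The sample data and `clean_rows` are hypothetical.

```python
import csv
import io

RAW = """name, age ,city
Alice, 34 ,Chennai
Bob,,Pune
 Carol ,29, Delhi
"""


def clean_rows(text):
    """Parse CSV text, trim whitespace, drop rows with missing fields, cast age to int."""
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames is None:
        return []  # empty input
    # Normalize header names (strip stray spaces around column names).
    reader.fieldnames = [f.strip() for f in reader.fieldnames]
    cleaned = []
    for row in reader:
        row = {k: (v or "").strip() for k, v in row.items()}
        if any(v == "" for v in row.values()):
            continue  # drop incomplete records, like dropna()
        row["age"] = int(row["age"])  # fix the column type, like astype(int)
        cleaned.append(row)
    return cleaned


for record in clean_rows(RAW):
    print(record)
```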

4. Dive into Data Visualization

Data visualization is a powerful tool for conveying insights. Learn how to create compelling visualizations using tools like Matplotlib and Seaborn in Python, or ggplot2 in R. Effectively communicating data findings is a crucial aspect of a data scientist's role.

5. Explore Machine Learning Fundamentals

Begin your journey into machine learning by understanding the basics. Grasp concepts like supervised and unsupervised learning, classification, regression, and key algorithms such as linear regression and decision trees. Libraries like scikit-learn in Python offer practical, hands-on experience.
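Decision trees work by repeatedly splitting the data on a feature threshold. A tree with a single split, called a "decision stump", shows the core idea in plain Python. This is a hypothetical teaching helper, not scikit-learn's `DecisionTreeClassifier`. It handles one numeric feature that has at least two distinct values.

```python
def fit_stump(xs, ys):
    """Fit a depth-1 decision tree on one numeric feature.

    Tries the midpoint between each pair of adjacent distinct x values as the
    split threshold, predicts the majority label on each side, and keeps the
    threshold with the fewest training errors. Returns (threshold, predict).
    """
    values = sorted(set(xs))
    if len(values) < 2:
        raise ValueError("need at least two distinct feature values")
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        # Each side of the split predicts its most common label.
        left_label = max(set(left), key=left.count)
        right_label = max(set(right), key=right.count)
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, threshold, left_label, right_label = best

    def predict(x):
        return left_label if x <= threshold else right_label

    return threshold, predict


threshold, predict = fit_stump([1, 2, 3, 7, 8, 9], [0, 0, 0, 1, 1, 1])
print(threshold, predict(4), predict(8))  # → 5.0 0 1
```

A full decision tree applies the same search recursively to each side of the split, usually over several features and with a purity measure such as Gini impurity instead of a raw error count.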

6. Delve into Big Data Technologies

As data scales, so does the need for technologies that can handle large datasets. Familiarize yourself with big data technologies, particularly Apache Hadoop and Apache Spark. Platforms like Cloudera and Databricks provide tutorials suitable for beginners.

7. Enroll in Online Courses and Specializations

Structured learning paths are invaluable for beginners. Enroll in online courses and specializations tailored for data science novices. Platforms like Coursera ("Data Science and Machine Learning Bootcamp with R/Python") and edX ("Introduction to Data Science") offer comprehensive learning opportunities.

8. Build Practical Projects

Apply your newfound knowledge by working on practical projects. Analyze datasets, implement machine learning models, and solve real-world problems. Platforms like Kaggle provide a collaborative space for participating in data science competitions and showcasing your skills to the community.

9. Join Data Science Communities

Engaging with the data science community is a key aspect of your learning journey. Participate in discussions on platforms like Stack Overflow, explore communities on Reddit (r/datascience), and connect with professionals on LinkedIn. Networking can provide valuable insights and support.

10. Continuous Learning and Specialization

Data science is a field that evolves rapidly. Embrace continuous learning and explore specialized areas based on your interests. Dive into natural language processing, computer vision, or reinforcement learning as you progress and discover your passion within the broader data science landscape.

Remember, your journey in data science is a continuous process of learning, application, and growth. Seek guidance from online forums, contribute to discussions, and build a portfolio that showcases your projects. Choosing the best data science courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science. With dedication and a systematic approach, you'll find yourself progressing steadily in the fascinating world of data science. Good luck on your journey!


Data Science

📌Data scientists use a variety of tools and technologies to help them collect, process, analyze, and visualize data. Here are some of the most common tools that data scientists use:

👩🏻‍💻Programming languages: Data scientists typically use programming languages such as Python, R, and SQL for data analysis and machine learning.

📊Data visualization tools: Tools such as Tableau, Power BI, and matplotlib allow data scientists to create visualizations that help them better understand and communicate their findings.

🛢Big data technologies: Data scientists often work with large datasets, so they use technologies like Hadoop, Spark, and Apache Cassandra to manage and process big data.

🧮Machine learning frameworks: Machine learning frameworks like TensorFlow, PyTorch, and scikit-learn provide data scientists with tools to build and train machine learning models.

☁️Cloud platforms: Cloud platforms like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure provide data scientists with access to powerful computing resources and tools for data processing and analysis.

📌Data management tools: Tools like Apache Kafka and Apache NiFi allow data scientists to manage data pipelines and automate data ingestion and processing.

🧹Data cleaning tools: Data scientists use tools like OpenRefine and Trifacta to clean and preprocess data before analysis.

☎️Collaboration tools: Data scientists often work in teams, so they use tools like GitHub and Jupyter Notebook to collaborate and share code and analysis.

For more, follow @woman.engineer

#google#programmers#coding#coding is fun#python#programminglanguage#programming#woman engineer#zeynep küçük#yazılım#coder#tech


Unleashing the Power of Big Data Analytics: Mastering the Course of Success

In today's digital age, data has become the lifeblood of successful organizations. The ability to collect, analyze, and interpret vast amounts of data has revolutionized business operations and decision-making processes. This is where big data analytics truly excels. By harnessing the potential of data analytics, businesses can gain valuable insights that can guide them on a path to success. However, to truly unleash this power, it is essential to have a solid understanding of data analytics and the various types of courses that teach it. In this article, we will explore the different types of data analytics courses available and how they can help individuals and businesses navigate the complex world of big data.

Education: The Gateway to Becoming a Data Analytics Expert

Before delving into the different types of data analytics courses, it is crucial to highlight the significance of education in this field. Data analytics is an intricate discipline that requires a solid foundation of knowledge and skills. While practical experience is valuable, formal education in data analytics serves as the gateway to becoming an expert in the field. By enrolling in relevant courses, individuals can gain a comprehensive understanding of the theories, methodologies, and tools used in data analytics.

Data Analytics Courses Types: Navigating the Expansive Landscape

When it comes to data analytics courses, there is a wide range of options available, catering to individuals with varying levels of expertise and interests. Let's explore some of the most popular types of data analytics courses:

1. Introduction to Data Analytics

This course serves as a perfect starting point for beginners who want to dip their toes into the world of data analytics. The course covers the fundamental concepts, techniques, and tools used in data analytics. It provides a comprehensive overview of data collection, cleansing, and visualization techniques, along with an introduction to statistical analysis. By mastering the basics, individuals can lay a solid foundation for further exploration in the field of data analytics.

2. Advanced Data Analytics Techniques

For those looking to deepen their knowledge and skills in data analytics, advanced courses offer a treasure trove of insights. These courses delve into complex data analysis techniques, such as predictive modeling, machine learning algorithms, and data mining. Individuals will learn how to discover hidden patterns, make accurate predictions, and extract valuable insights from large datasets. Advanced data analytics courses equip individuals with the tools and techniques necessary to tackle real-world data analysis challenges.

3. Specialized Data Analytics Courses

As the field of data analytics continues to thrive, specialized courses have emerged to cater to specific industry needs and interests. Whether it's healthcare analytics, financial analytics, or social media analytics, individuals can choose courses tailored to their desired area of expertise. These specialized courses delve into industry-specific data analytics techniques and explore case studies to provide practical insights into real-world applications. By honing their skills in specialized areas, individuals can unlock new opportunities and make a significant impact in their chosen field.

4. Big Data Analytics Certification Programs

In the era of big data, the ability to navigate and derive meaningful insights from massive datasets is in high demand. Big data analytics certification programs offer individuals the chance to gain comprehensive knowledge and hands-on experience in handling big data. These programs cover topics such as Hadoop, Apache Spark, and other big data frameworks. By earning a certification, individuals can demonstrate their proficiency in handling big data and position themselves as experts in this rapidly growing field.

Education, and in particular mastering data analytics courses at ACTE Institute, is essential to unleashing the power of big data analytics. With the right educational foundation, such as the ACTE Institute, individuals can navigate the complex landscape of data analytics with confidence and efficiency. Whether starting with an introduction course or diving into advanced techniques, the world of data analytics offers endless opportunities for personal and professional growth. By staying ahead of the curve and continuously expanding their knowledge, individuals can master the field and lead businesses towards success in the era of big data.
