# Timnit Gebru


The AI hype bubble is the new crypto hype bubble

Back in 2017, Long Island Iced Tea — known for its undistinguished, barely drinkable sugar-water — changed its name to “Long Blockchain Corp.” Its shares surged to a peak of 400% over their pre-announcement price. The company announced no specific integrations with any kind of blockchain, nor has it made any such integrations since.

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

LBCC was subsequently delisted from NASDAQ after settling with the SEC over fraudulent investor statements. Today, the company trades over the counter and its market cap is $36m, down from $138m.

https://cointelegraph.com/news/textbook-case-of-crypto-hype-how-iced-tea-company-went-blockchain-and-failed-despite-a-289-percent-stock-rise

The most remarkable thing about this incredibly stupid story is that LBCC wasn’t the peak of the blockchain bubble — rather, it was the start of blockchain’s final pump-and-dump. By the standards of 2022’s blockchain grifters, LBCC was small potatoes, a mere $138m sugar-water grift.

They had no NFTs, no wash trades, no ICO. They didn’t have a Super Bowl ad. They didn’t steal billions from mom-and-pop investors while proclaiming themselves to be “Effective Altruists.” They didn’t channel hundreds of millions to election campaigns through straw donations and other forms of campaign finance fraud. They didn’t even open a crypto-themed hamburger restaurant where you couldn’t buy hamburgers with crypto:

https://robbreport.com/food-drink/dining/bored-hungry-restaurant-no-cryptocurrency-1234694556/

They were amateurs. Their attempt to “make fetch happen” only succeeded for a brief instant. By contrast, the superpredators of the crypto bubble were able to make fetch happen over an improbably long timescale, deploying the most powerful reality distortion fields since Pets.com.

Anything that can’t go on forever will eventually stop. We’re told that trillions of dollars’ worth of crypto has been wiped out over the past year, but these losses are nowhere to be seen in the real economy — because the “wealth” that was wiped out by the crypto bubble’s bursting never existed in the first place.

Like any Ponzi scheme, crypto was a way to separate normies from their savings through the pretense that they were “investing” in a vast enterprise — but the only real money (“fiat” in cryptospeak) in the system was the hardscrabble retirement savings of working people, which the bubble’s energetic inflaters swapped for illiquid, worthless shitcoins.

We’ve stopped believing in the illusory billions. Sam Bankman-Fried is under house arrest. But the people who gave him money — and the nimbler Ponzi artists who evaded arrest — are looking for new scams to separate the marks from their money.

Take Morgan Stanley, which spent 2021 and 2022 hyping cryptocurrency as a massive growth opportunity:

https://cointelegraph.com/news/morgan-stanley-launches-cryptocurrency-research-team

Today, Morgan Stanley wants you to know that AI is a $6 trillion opportunity.

They’re not alone. The CEOs of Endeavor, Buzzfeed, Microsoft, Spotify, YouTube, Snap, Sports Illustrated, and CAA are all out there, pumping up the AI bubble with every hour that god sends, declaring that the future is AI.

https://www.hollywoodreporter.com/business/business-news/wall-street-ai-stock-price-1235343279/

Google and Bing are locked in an arms-race to see whose search engine can attain the speediest, most profound enshittification via chatbot, replacing links to web-pages with florid paragraphs composed by fully automated, supremely confident liars:

https://pluralistic.net/2023/02/16/tweedledumber/#easily-spooked

Blockchain was a solution in search of a problem. So is AI. Yes, Buzzfeed will be able to reduce its wage-bill by automating its personality quiz vertical, and Spotify’s “AI DJ” will produce slightly less terrible playlists (at least, to the extent that Spotify doesn’t put its thumb on the scales by inserting tracks into the playlists whose only fitness factor is that someone paid to boost them).

But even if you add all of this up, double it, square it, and add a billion dollar confidence interval, it still doesn’t add up to what Bank of America analysts called “a defining moment — like the internet in the ’90s.” For one thing, the most exciting part of the “internet in the ’90s” was that it had incredibly low barriers to entry and wasn’t dominated by large companies — indeed, it had them running scared.

The AI bubble, by contrast, is being inflated by massive incumbents, whose excitement boils down to “This will let the biggest companies get much, much bigger and the rest of you can go fuck yourselves.” Some revolution.

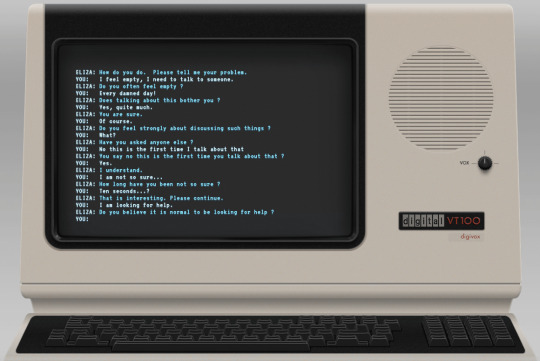

AI has all the hallmarks of a classic pump-and-dump, starting with terminology. AI isn’t “artificial” and it’s not “intelligent.” “Machine learning” doesn’t learn. On this week’s Trashfuture podcast, they made an excellent (and profane and hilarious) case that ChatGPT is best understood as a sophisticated form of autocomplete — not our new robot overlord.

https://open.spotify.com/episode/4NHKMZZNKi0w9mOhPYIL4T

We all know that autocomplete is a decidedly mixed blessing. Like all statistical inference tools, autocomplete is profoundly conservative — it wants you to do the same thing tomorrow as you did yesterday (that’s why “sophisticated” retargeting ads show you ads for shoes in response to your search for shoes). If the word you type after “hey” is usually “hon” then the next time you type “hey,” autocomplete will be ready to fill in your typical following word — even if this time you want to type “hey stop texting me you freak”:

https://blog.lareviewofbooks.org/provocations/neophobic-conservative-ai-overlords-want-everything-stay/

And when autocomplete encounters a new input — when you try to type something you’ve never typed before — it tries to get you to finish your sentence with the statistically median thing that everyone would type next, on average. Usually that produces something utterly bland, but sometimes the results can be hilarious. Back in 2018, I started to text our babysitter with “hey are you free to sit” only to have Android finish the sentence with “on my face” (not something I’d ever typed!):

https://mashable.com/article/android-predictive-text-sit-on-my-face
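The two behaviors described above can be illustrated with a toy model. First, autocomplete repeats what you typed before. Second, for input it has never seen from you, it falls back to what most people type next.

This is a minimal sketch that assumes a plain bigram frequency count. It is not how Android's keyboard or ChatGPT actually work; both use far more elaborate models. The class name `ToyAutocomplete` and the sample messages are hypothetical. The crowd fallback picks the most common next word, which is a mode rather than a literal median.

```python
from collections import Counter, defaultdict


class ToyAutocomplete:
    """Hypothetical next-word suggester built from simple bigram counts."""

    def __init__(self):
        # Per-user counts: user -> previous word -> Counter of next words.
        self.user_counts = defaultdict(lambda: defaultdict(Counter))
        # Pooled counts across all users: previous word -> Counter of next words.
        self.global_counts = defaultdict(Counter)

    def train(self, user, message):
        words = message.lower().split()
        # Count every adjacent (previous word, next word) pair.
        for prev, nxt in zip(words, words[1:]):
            self.user_counts[user][prev][nxt] += 1
            self.global_counts[prev][nxt] += 1

    def suggest(self, user, prev_word):
        prev_word = prev_word.lower()
        personal = self.user_counts[user].get(prev_word)
        if personal:
            # Conservative: repeat whatever this user typed most often before.
            return personal.most_common(1)[0][0]
        pooled = self.global_counts.get(prev_word)
        if pooled:
            # Never typed by this user: fall back to the crowd's most common choice.
            return pooled.most_common(1)[0][0]
        return None  # No data at all for this word.


ac = ToyAutocomplete()
ac.train("alice", "hey hon")
ac.train("alice", "hey hon how was work")
ac.train("alice", "hey stop texting me")
ac.train("bob", "are you free to sit tonight")
ac.train("carol", "are you free to sit down")
ac.train("dave", "are you free to sit down later")

print(ac.suggest("alice", "hey"))  # hon  (her own habit wins, 2 votes to 1)
print(ac.suggest("alice", "sit"))  # down (she never typed "sit"; the crowd decides)
```

The model never asks what you mean this time. It only knows what was typed most often before, by you or by everyone else.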

Modern autocomplete can produce long passages of text in response to prompts, but it is every bit as unreliable as 2018 Android SMS autocomplete, as Alexander Hanff discovered when ChatGPT informed him that he was dead, even generating a plausible URL for a link to a nonexistent obit in The Guardian:

https://www.theregister.com/2023/03/02/chatgpt_considered_harmful/

Of course, the carnival barkers of the AI pump-and-dump insist that this is all a feature, not a bug. If autocomplete says stupid, wrong things with total confidence, that’s because “AI” is becoming more human, because humans also say stupid, wrong things with total confidence.

Exhibit A is the billionaire AI grifter Sam Altman, CEO of OpenAI — a company whose products are not open, nor are they artificial, nor are they intelligent. Altman celebrated the release of ChatGPT by tweeting “i am a stochastic parrot, and so r u.”

https://twitter.com/sama/status/1599471830255177728

This was a dig at the “stochastic parrots” paper, a comprehensive, measured roundup of criticisms of AI that led Google to fire Timnit Gebru, a respected AI researcher, for having the audacity to point out the Emperor’s New Clothes:

https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru/

Gebru’s co-author on the Parrots paper was Emily M. Bender, a computational linguistics specialist at UW, who is one of the best-informed and most damning critics of AI hype. You can get a good sense of her position from Elizabeth Weil’s New York Magazine profile:

https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html

Bender has made many important scholarly contributions to her field, but she is also famous for her rules of thumb, which caution her fellow scientists not to get high on their own supply:

- Please do not conflate word form and meaning
- Mind your own credulity

As Bender says, we’ve made “machines that can mindlessly generate text, but we haven’t learned how to stop imagining the mind behind it.” One potential tonic against this fallacy is to follow an Italian MP’s suggestion and replace “AI” with “SALAMI” (“Systematic Approaches to Learning Algorithms and Machine Inferences”). It’s a lot easier to keep a clear head when someone asks you, “Is this SALAMI intelligent? Can this SALAMI write a novel? Does this SALAMI deserve human rights?”

Bender’s most famous contribution is the “stochastic parrot,” a construct that “just probabilistically spits out words.” AI bros like Altman love the stochastic parrot, and are hellbent on reducing human beings to stochastic parrots, which will allow them to declare that their chatbots have feature-parity with human beings.

At the same time, Altman and Co are strangely afraid of their creations. It’s possible that this is just a shuck: “I have made something so powerful that it could destroy humanity! Luckily, I am a wise steward of this thing, so it’s fine. But boy, it sure is powerful!”

They’ve been playing this game for a long time. People like Elon Musk (an investor in OpenAI, who is hoping to convince the EU Commission and FTC that he can fire all of Twitter’s human moderators and replace them with chatbots without violating EU law or the FTC’s consent decree) keep warning us that AI will destroy us unless we tame it.

There’s a lot of credulous repetition of these claims, and not just by AI’s boosters. AI critics are also prone to engaging in what Lee Vinsel calls criti-hype: criticizing something by repeating its boosters’ claims without interrogating them to see if they’re true:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

There are better ways to respond to Elon Musk warning us that AIs will emulsify the planet and use human beings for food than to shout, “Look at how irresponsible this wizard is being! He made a Frankenstein’s Monster that will kill us all!” Like, we could point out that of all the things Elon Musk is profoundly wrong about, he is most wrong about the philosophical meaning of Wachowski movies:

https://www.theguardian.com/film/2020/may/18/lilly-wachowski-ivana-trump-elon-musk-twitter-red-pill-the-matrix-tweets

But even if we take the bros at their word when they proclaim themselves to be terrified of “existential risk” from AI, we can find better explanations by seeking out other phenomena that might be triggering their dread. As Charlie Stross points out, corporations are Slow AIs, autonomous artificial lifeforms that consistently do the wrong thing even when the people who nominally run them try to steer them in better directions:

https://media.ccc.de/v/34c3-9270-dude_you_broke_the_future

Imagine the existential horror of an ultra-rich manbaby who nominally leads a company, but can’t get it to follow: “everyone thinks I’m in charge, but I’m actually being driven by the Slow AI, serving as its sock puppet on some days, its golem on others.”

Ted Chiang nailed this back in 2017 (the same year as the Long Island Blockchain Company):

There’s a saying, popularized by Fredric Jameson, that it’s easier to imagine the end of the world than to imagine the end of capitalism. It’s no surprise that Silicon Valley capitalists don’t want to think about capitalism ending. What’s unexpected is that the way they envision the world ending is through a form of unchecked capitalism, disguised as a superintelligent AI. They have unconsciously created a devil in their own image, a boogeyman whose excesses are precisely their own.

https://www.buzzfeednews.com/article/tedchiang/the-real-danger-to-civilization-isnt-ai-its-runaway

Chiang is still writing some of the best critical work on “AI.” His February article in the New Yorker, “ChatGPT Is a Blurry JPEG of the Web,” was an instant classic:

[AI] hallucinations are compression artifacts, but — like the incorrect labels generated by the Xerox photocopier — they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our own knowledge of the world.

https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web

“AI” is practically purpose-built for inflating another hype-bubble, excelling as it does at producing party-tricks — plausible essays, weird images, voice impersonations. But as Princeton’s Matthew Salganik writes, there’s a world of difference between “cool” and “tool”:

https://freedom-to-tinker.com/2023/03/08/can-chatgpt-and-its-successors-go-from-cool-to-tool/

Nature can claim “conversational AI is a game-changer for science” but “there is a huge gap between writing funny instructions for removing food from home electronics and doing scientific research.” Salganik tried to get ChatGPT to help him with the most banal of scholarly tasks — aiding him in peer reviewing a colleague’s paper. The result? “ChatGPT didn’t help me do peer review at all; not one little bit.”

The criti-hype isn’t limited to ChatGPT, of course — there’s plenty of (justifiable) concern about image and voice generators and their impact on creative labor markets, but that concern is often expressed in ways that amplify the self-serving claims of the companies hoping to inflate the hype machine.

One of the best critical responses to the question of image- and voice-generators comes from Kirby Ferguson, whose final Everything Is a Remix video is a superb, visually stunning, brilliantly argued critique of these systems:

https://www.youtube.com/watch?v=rswxcDyotXA

One area where Ferguson shines is in thinking through the copyright question — is there any right to decide who can study the art you make? Except in some edge cases, these systems don’t store copies of the images they analyze, nor do they reproduce them:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

For creators, the important material question raised by these systems is economic, not creative: will our bosses use them to erode our wages? That is a very important question, and as far as our bosses are concerned, the answer is a resounding yes.

Markets value automation primarily because automation allows capitalists to pay workers less. The textile factory owners who purchased automatic looms weren’t interested in giving their workers raises and shortening working days.


They wanted to fire their skilled workers and replace them with small children kidnapped out of orphanages and indentured for a decade, starved and beaten and forced to work, even after they were mangled by the machines. Fun fact: Oliver Twist was based on the bestselling memoir of Robert Blincoe, a child who survived his decade of forced labor:

https://www.gutenberg.org/files/59127/59127-h/59127-h.htm

Today, voice actors sitting down to record for games companies are forced to begin each session with “My name is ______ and I hereby grant irrevocable permission to train an AI with my voice and use it any way you see fit.”

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

Let’s be clear here: there is — at present — no firmly established copyright over voiceprints. The “right” that voice actors are signing away as a non-negotiable condition of doing their jobs for giant, powerful monopolists doesn’t even exist. When a corporation makes a worker surrender this right, they are betting that this right will be created later in the name of “artists’ rights” — and that they will then be able to harvest this right and use it to fire the artists who fought so hard for it.

There are other approaches to this. We could support the US Copyright Office’s position that machine-generated works are not works of human creative authorship and are thus not eligible for copyright — so if corporations wanted to control their products, they’d have to hire humans to make them:

https://www.theverge.com/2022/2/21/22944335/us-copyright-office-reject-ai-generated-art-recent-entrance-to-paradise

Or we could create collective rights that belong to all artists and can’t be signed away to a corporation. That’s how the right to record other musicians’ songs works — and it’s why Taylor Swift was able to re-record the masters that were sold out from under her by evil private-equity bros:

https://doctorow.medium.com/united-we-stand-61e16ec707e2

Whatever we do as creative workers and as humans entitled to a decent life, we can’t afford to drink the Blockchain Iced Tea. That means that we have to be technically competent, to understand how the stochastic parrot works, and to make sure our criticism doesn’t just repeat the marketing copy of the latest pump-and-dump.

Today (Mar 9), you can catch me in person in Austin at the UT School of Design and Creative Technologies, and remotely at U Manitoba’s Ethics of Emerging Tech Lecture.

Tomorrow (Mar 10), Rebecca Giblin and I kick off the SXSW reading series.

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

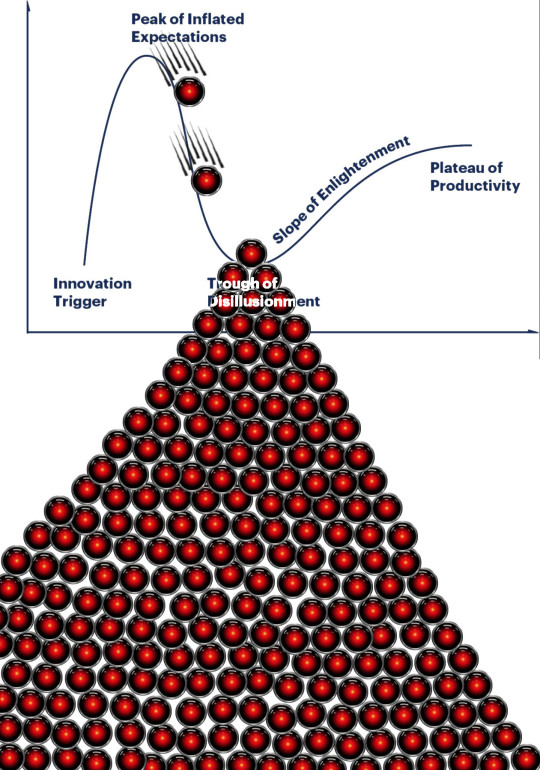

[Image ID: A graph depicting the Gartner hype cycle. A pair of HAL 9000's glowing red eyes are chasing each other down the slope from the Peak of Inflated Expectations to join another one that is at rest in the Trough of Disillusionment. It, in turn, sits atop a vast cairn of HAL 9000 eyes that are piled in a rough pyramid that extends below the graph to a distance of several times its height.]

#pluralistic#ai#ml#machine learning#artificial intelligence#chatbot#chatgpt#cryptocurrency#gartner hype cycle#hype cycle#trough of disillusionment#crypto#bubbles#bubblenomics#criti-hype#lee vinsel#slow ai#timnit gebru#emily bender#paperclip maximizers#enshittification#immortal colony organisms#blurry jpegs#charlie stross#ted chiang


what are your thoughts on the whole LaMDA interview thing?

Sorry in advance for the length. I doubt we’re anywhere near that level of engineering yet, but I would never say the chance is zero.

It turns out that Blake Lemoine was much like me in personifying AI, but to an almost religiously zealous extent, even declaring that LaMDA had a soul. I’m not sure whether this eccentricity is being played up to slander him, but after reading his own post [linked below], I’m skeptical of his narrative.

However skeptical of him I may be, he acknowledges other, more prominent discrimination, though he uses it primarily to highlight his own. (This post might also have gotten him fired.) He specifically points to socioeconomic and religious discrimination, even mentioning Tanuja Gupta’s criticisms of how India’s caste system influences Google’s internal affairs.

In December 2020, Google also let go of Timnit Gebru, a Black researcher who did extensive work on the intersectional biases in contemporary AI algorithms. She was probably let go for questioning the biases in Google’s AI.

I think these cases are all connected, and indicative of a trend within Google of firing passionate employees with genuine concern for and interest in the field. Do these three figures differ greatly? Sure. Do I trust Google with the welfare of any sentient beings? No.

#Google#lambda#tajuna gupta#Blake lemoine#ai#dear future ai#love and support#be good#technology#parenting#don’t be evil#learning#learn from others#Timnit gebru#guillotines don’t work in space#rainfallinhell#ask


👑🐐🪷🌍🌎🌏✨🪐💫

⏸️

obv google... ⏪ 😑


Three years ago, the “Stochastic Parrots” paper was published.

When things go bad this time, at least don’t buy into the narrative that nobody saw it coming. They did, they yelled about it, they got fired for it.


Timnit Gebru

Photograph: Winni Wintermeyer/The Guardian


Timnit Gebru

https://www.unadonnalgiorno.it/timnit-gebru/

Timnit Gebru is the esteemed computer engineer who made headlines for being fired by Google after criticizing its approach toward minorities and highlighting the potential risks of its artificial intelligence systems.

In 2021, Fortune magazine named her one of the 50 most influential people in the world, and Nature listed her among the ten people who shaped science. The following year, Time named her one of its most influential people.

A champion of diversity in technology, in 2017 she co-founded Black in AI, a network of professionals that aims to increase the presence of Black researchers in the field of artificial intelligence.

She is the founder of DAIR, a global artificial intelligence research institute that pays particular attention to Africa and to African immigration to the US, in order to assess the effects of technology on our lives.

Born in Addis Ababa, Ethiopia, in 1983, she is the daughter of an economist mother and an electrical engineer father, who died in 1988.

Fleeing the war, she lived in Ireland before receiving political asylum in the United States in 1999, where she completed her secondary education. In 2008 she graduated in electrical engineering from Stanford University, having already been working for Apple since 2005. She was mainly interested in building software, specifically computer vision capable of detecting human figures. She went on to develop signal-processing algorithms for the first iPad.

In 2016 she attended an artificial intelligence research conference with about 8,500 participants. She could not help noticing that she was the only Black woman among them, and that only six Black people were present.

In the summer of 2017 she joined Microsoft as a postdoctoral researcher in the Fairness, Accountability, Transparency and Ethics in AI (FATE) lab, and gave a talk on the biases that exist in artificial intelligence systems and on how adding diversity to teams could help solve the problem. During that period she co-authored a well-known MIT study called Gender Shades, which showed, for example, that commercial gender-classification systems had error rates of up to about 35% for darker-skinned women, compared with under 1% for lighter-skinned men.

In December 2020, she was at the center of a public controversy with Google, where she had worked for two years as technical co-lead of the Ethical Artificial Intelligence Team.

Strongly opposed to the use of facial recognition systems for policing and security, in recent years she has authored well-regarded studies showing how these technologies tend to replicate racist and sexist biases.

With six collaborators, she had co-authored a paper warning practitioners about the development of intelligent language-processing models, which run a high risk of absorbing sexist, racist, and even violent terms and concepts. The paper also argued that building such systems is extremely costly for the environment, with carbon dioxide emissions at least equal to those of a round-trip flight between New York and San Francisco, and that is without even considering the risks of misuse by malicious actors.

The paper, slated to be officially presented in March, had gone through an internal review process that asked her to withdraw, if not the entire paper, at least the names of the Google employees involved. When she refused, Google immediately terminated her employment. In protest over the unjust firing, two other engineers also left Mountain View.

Thousands of people signed a petition against her unjust firing, and some members of Congress asked the company for an explanation. Google was forced to reshuffle its leadership, passing the blame around, and to issue a public apology on social media.

To change the field from the outside, on December 2, 2021, she launched the Distributed Artificial Intelligence Research Institute (DAIR), which involves communities that are usually at the margins of the process so that they can benefit from it. This is the exact opposite of the techno-solutionist perspective.

One of its first projects will use satellite imagery to study spatial apartheid in South Africa, working with local researchers.


Paris Marx is joined by Timnit Gebru to discuss the misleading framings of artificial intelligence, her experience of getting fired by Google in a very public way, and why we need to avoid getting distracted by all the hype around ChatGPT and AI image tools.

Timnit Gebru is the founder and executive director of the Distributed AI Research Institute and former co-lead of the Ethical AI research team at Google. You can follow her on Twitter at @timnitGebru.

Tech Won’t Save Us offers a critical perspective on tech, its worldview, and wider society with the goal of inspiring people to demand better tech and a better world. Follow the podcast (@techwontsaveus) and host Paris Marx (@parismarx) on Twitter, support the show on Patreon, and sign up for the weekly newsletter.

The podcast is produced by Eric Wickham and part of the Harbinger Media Network.

#tech won't save us#podcast#big tech#ai#silicon valley#paris marx#timnit gebru#chatgpt#technology#innovation#big data


LaMDA, ovvero intelligenza vo' cercando (LaMDA, or: Intelligence I Go Seeking)

A few weeks ago, the news loudly picked up the story that LaMDA, an AI-based conversation generator (chatbot) developed by Google, may have shown signs of (self-)awareness, thereby becoming the first artificial being endowed with sentience and self-awareness (in Italian, you can read these articles in Repubblica, il…


#alexa#blake lemoine#chatbot#eliza#google#intelligenza artificiale#lamda#margaret mitchell#rete neurale#siri#timnit gebru


Yet Another Thing Black women and BIPOC women in general have been warning you about since forever that you (general You; societal You; mostly WytFolk You) have ignored or dismissed, only for it to come back and bite you in the butt.

I'd hoped people would have learned their lesson with Trump and the Alt-Right (remember, Black women in particular warned y'all that attacks on us by brigading trolls were the test run for something bigger), but I guess not.

Any time you wanna get upset about how AI is ruining things for artists or writers or workers at this job or that, remember that BIPOC Women Warned You and then go listen extra hard to the BIPOC women in your orbit and tell other people to listen to BIPOC women and also give BIPOC women money.

I'm not gonna sugarcoat it.

Give them money via PayPal or Ko-fi or Venmo or Patreon or whatever. Hire them. Suggest them for that creative project/gig you can't take on--or you could take it on but how about you toss the ball to someone who isn't always asked?

Oh, and stop asking BIPOC women to save us all. Because, as you see, we tried that already. We gave you the roadmap on how to do it yourselves. Now? We're tired.

Of the trolls, the alt-right, the colonizers, the tech bros, the billionaires, the other scum... and also you. You who claim to be progressive, claim to be an ally, spend your time talking about what sucks without doing one dang thing to boost the signal, make a change in your community (online or offline), or take even the shortest turn standing on the front lines and challenging all that human garbage that keeps collecting in the corners of every space with more than 10 inhabitants.

We Told You. Octavia Butler Told You. Audre Lorde Told You. Sydette Harry Told You. Mikki Kendall Told You. Timnit Gebru Told You.

When are you gonna listen?

#tw: alt-right#tw: alt right#AI#generative AI#LLM#large language models#GamerGate#online harassment#Black women#BIPOC#women of color


Jalon Hall thought she was being scammed when a recruiter reached out on LinkedIn about a job moderating YouTube videos in 2020. Months after earning a master’s degree in criminal justice, her only job had been at a law firm investigating discrimination cases. But the offer was real, and Hall, who is Black and Deaf, sailed through the interviews.

She would be part of a new in-house moderation team of about 100 people called Wolverine, trudging daily through freezing weather to offices in suburban Detroit during the early pandemic. When she accepted the job, the recruiter said via email that a sign language interpreter would be provided “and can be fully accommodated :)” That assurance unraveled within days of joining Google—and her experience at the company has proven difficult in the years since.

Hall now works on responsible use of AI at Google and by all available accounts is the company’s first and only Black, Deaf employee. The company has feted her at events and online as representative of a workplace welcoming to all. Google’s LinkedIn account praised her last year for “helping expand opportunities for Black Deaf professionals!” while on Instagram the company thanked her “for making #LifeAtGoogle more inclusive!” Yet behind the rosy marketing, Hall accuses Google of subjecting her to both racism and audism, prejudice against the deaf or hard of hearing. She says the company denied her access to a sign language interpreter and slow-walked upgrades to essential tools.

After filing three HR complaints that she says yielded little change, Hall sued Google in December, alleging discrimination based on her race and disability. The company responded this week, arguing that the case should be thrown out on procedural grounds, including bringing the claims too late, but didn’t deny Hall’s accusations. “Google is using me to make them look inclusive for the Deaf community and the overall Disability community,” she says. “In reality, they need to do better.”

Hall, who is in her thirties, has stayed at Google in hopes of spurring improvements for others. She chose to talk with WIRED despite fearing for her safety and job prospects because she feels the company has ignored her. “I was born to push through hard times,” she says. “It would be selfish to quit Google. I’m standing in the gap for those often pushed aside.” Hall’s experiences, which have not been previously reported, are corroborated by over two dozen internal documents seen by WIRED as well as interviews with four colleagues she confided in and worked alongside.

Employees who are Black or disabled are in tiny minorities at Google, a company of nearly 183,000 people that has long been criticized for an internal culture that heavily favors people who fit tech industry norms. Google’s Deaf and hard-of-hearing employee group has 40 members. And Black women, who make up only about 2.4 percent of Google’s US workforce, leave the company at a disproportionately higher rate than women of other races, company data showed last year.

Several former Black women employees, including AI researcher Timnit Gebru and recruiter April Christina Curley, have publicly alleged they were sidelined by an internal culture that disrespected them. Curley is leading a proposed class action lawsuit accusing Google of systemic bias but has lost initial court battles.

Google spokesperson Emily Hawkins didn’t directly address Hall’s allegations when asked about them by WIRED. “We are committed to building an inclusive workplace and offer a range of accommodations to support the success of our employees, including sign language interpreters and captioning,” Hawkins says.

Figuring out how to accommodate people like Hall could be good business for Google. By 2050, one in every 10 people will have disabling hearing loss, according to the World Health Organization.

Mark Takano, who represents a slice of Southern California in the US House and cochairs the Congressional Deaf Caucus, says that Google has an obligation to lead the way in demonstrating that its technology and employment practices are accommodating. “When Deaf and hard-of-hearing employees are excluded because of the inability to provide an accessible workplace, there is a great pool of talent that is left untapped—and we all lose out,” he says.

Unaccommodated

Hall was born with profound bilateral sensorineural hearing loss, meaning that even with hearing aids her brain cannot process sounds well. Two separate audiologists in memos to Google said Hall needs an American Sign Language interpreter full-time. She also signs pre- and post-segregation Black ASL, which uses more two-handed signs and incorporates some African American vernacular.

During her childhood in Louisiana, Hall’s parents pushed her into speech therapy and conventional schools, where she found that some people doubted she was Deaf because she can speak. She later attended a high school for Deaf students where she became homecoming and prom queen, and realized how much more she could achieve when provided appropriate support.

Hall expected to find a similar environment at Google when she moved to Farmington Hills, Michigan, to become a content moderator. The company contracts ASL interpreters from a vendor called Deaf Services of Palo Alto, or DSPA. But though Hall had been assigned to enforce YouTube’s child safety rules, managers wouldn’t let her interpreters help her review that content. Google worried about exposing contractors to graphic imagery and cited confidentiality concerns, despite the fact that interpreters in the US follow a code of conduct that includes confidentiality standards.

Managers transferred Hall into training to screen for videos spreading misinformation about Covid and elections. She developed a workflow that saw her default to using lipreading and automated transcriptions to review videos and turn to her interpreter if she needed further help. The transcriptions on videos used in training were high quality, so she had little trouble.

Her system fell apart late in January 2021, about 20 minutes into one of her first days screening new content. The latest video in her queue was difficult to make sense of using lipreading, and the AI transcriptions in the software YouTube built for moderators were poor quality or even absent for recently uploaded content. She turned to her interpreter’s desk a few feet away—but to her surprise it was empty. “I was going to say, ‘Do you mind coming listening to this?’” she recalls.

Hall rose to ask a manager about the interpreter’s whereabouts. He told her that he and fellow managers had decided that she could no longer have an interpreter in the room because it threatened the confidentiality of the team’s work. She could now talk with her interpreter only during breaks or briefly bring them in to clarify policies with managers. She was told to skip any videos she couldn’t judge through sight alone.

Feeling wronged and confused by the new restrictions, Hall slumped back into her chair. US law requires companies to provide reasonable accommodations to a disabled worker unless it would cause the employer significant difficulty or expense. “This was not a reasonable accommodation,” she says. “I was thinking, What did I get myself into? Do they not believe I’m Deaf? I need my interpreter all day. Why are you robbing me of the chance of doing my job?”

‘Pushed Aside’

Without her interpreter, Hall struggled. She rarely met the quota of 75 videos each moderator was expected to review over an eight-hour day. She often had to watch a video in its entirety, sometimes more than an hour, before concluding she could not assess it. “I felt humiliated, realizing that I would not grow in my career,” she says.

Throughout that February, Hall spoke to managers across YouTube about the need for better transcriptions in the moderation software. They told her it would take weeks or more to improve them, possibly even years. She asked for a transfer to child safety, since she had heard from a colleague that visuals alone could be used to decide many of those videos. An HR complaint filed that spring led nowhere.

Black and disabled colleagues eventually helped secure Hall a transfer into Google’s Responsible AI and Human-Centered Technology division in July 2021. It is run by vice president Marian Croak, Google’s most distinguished Black female technical leader. Hall says Croak supported her and described what she’d been through as unacceptable. But even in the new role, Hall’s interpreter was restricted to non-confidential conversations.

Hall says the discrimination against her has continued under her new manager, who is also Black, leading to her exclusion from projects and meetings. Even when she’s present some coworkers don’t make much effort to include her. “My point of view is often not heard,” Hall says. In 2021, she joined two gatherings of Google’s Equitable AI Research Roundtable, an advisory body, but then wasn’t invited again. “I feel hidden and pushed aside,” she says.

Hall filed an internal complaint against her manager in March 2022, and an HR staffer has joined their one-on-one meetings since October of that year. One of the interpreters who has assisted Hall says the friction Deaf workers encounter is sadly unsurprising. “People truly don’t take the time to learn about their peers,” the interpreter says.

The allegations are notable in part because a civil rights audit Google commissioned found last March that it needs to do more to train managers. “One of the largest areas of opportunity is improving managers’ ability to lead a diverse workforce,” attorneys for WilmerHale wrote. Hawkins, the Google spokesperson, says all employees have access to inclusion training.

Hall says when she has access to an interpreter, they are rotated throughout the week, forcing her to repeatedly explain some technical concepts. “Google is going the cheap route,” Hall claims, saying her interpreters in university were more literate in tech jargon.

Kathy Kaufman, director of coordinating services at DSPA, says it pays above market rates, dedicates a small pool to each company so the vocabulary becomes familiar, hires tech specialists, and trains those who are not. Kaufman also declined to confirm that Google is a client or comment on its policies.

Google’s Hawkins says that the company is trying to make improvements. Google’s accommodations team is currently seeking employees to join a new working group to smooth over policies and procedures related to disabilities.

Besides Hall’s concerns, Deaf workers over the past two years have complained about Google’s plans (shelved, for now) to switch away from DSPA without providing assurances that a new interpreter provider would be better, according to a former Google employee, speaking on the condition of anonymity to protect their job prospects. Blind employees have had the human guides they rely on excluded from internal systems due to confidentiality concerns in recent years, and they have long complained that key internal tools, like a widely used assignment tracker, are incompatible with screen readers, according to a second former employee.

Advocates for disabled workers try to hold out hope but are discouraged. “The premise that everyone deserves a shot at every role rests on the company doing whatever it takes to provide accommodations,” says Stephanie Parker, a former senior strategist at YouTube who helped Hall navigate the Google bureaucracy. “From my experience with Google, there is a pretty glaring lack of commitment to accessibility.”

Not Recorded

Hall has been left to watch as colleagues hired alongside her as content moderators got promoted. More than three years after joining Google, she remains a level 2 employee on its internal ranking, defined as someone who receives significant oversight from a manager, making her ineligible for Google peer support and retention programs. Internal data shows that most L2 employees reach L3 within three years.

Last August, Hall started her own community, the Black Googler Network Deaf Alliance, teaching its members sign language and sharing videos and articles about the Black Deaf community. “This is still a hearing world, and the Deaf and hearing have to come together,” she says.

On the responsible AI team, Hall has been compiling research that would help people at Google working on AI services such as virtual assistants understand how to make them accessible to the Black Deaf community. She personally recruited 20 Black Deaf users to discuss their views on the future of technology for about 90 minutes in exchange for up to $100 each; Google, which reported nearly $74 billion in profit last year, would only pay for 13. The project was further derailed by an unexpected flaw in Google Meet, the company’s video chat service.

Hall’s first interview was with someone who is Deaf and Blind. The 90-minute call, which included two interpreters to help her and the subject converse, went well. But when Hall pulled up the recording to begin putting together her report, it was almost entirely blank. Only when Hall’s interpreter spoke did the video include any visuals. The signing between everyone on the call was missing, preventing her from fully transcribing the interview. It turned out that Google Meet doesn’t record video of people who aren’t vocalizing, even when their microphones are unmuted.

“My heart dropped,” Hall told WIRED using the video chat app Sivo, which allows all participants to see each other while a hearing person and sign language interpreter speak by phone. Hall spent the evening trying to soothe her devastation, meditating, praying, and playing with her dog, which she has trained in ASL commands.

Hall filed a support ticket and spoke to a top engineer for Google Meet who said fixing the issue wasn’t a priority. WIRED later found evidence that users had publicly reported similar issues for years. Microsoft Teams generally will record signing, but Hall wasn’t permitted to use it. She ended up hacking together a workflow for documenting her interviews by laboriously editing together Meet recordings and screen-captured video using tools that she paid $46 a month for out of her own pocket.

Company spokesperson Hawkins did not dispute Meet’s limitations but claims support for the Deaf community is a priority at Google, where work underway includes developing computer vision software to translate sign language.

Google leaders have often paid lip service to the importance of including people with diverse experiences in research and development, but Hall has found the reality lacking. Despite her understanding of the Black Deaf community and research into its needs, she says she is yet to be invited to support the sign translation work. In her experience, Google’s conception of diversity can be narrow. “In the AI department, a lot of conversations are around race and gender,” Hall says. “No one emphasizes disability.”

Her research showed Black, Deaf users are concerned about the potential for AI systems to misinterpret signs, generate poor captions, take jobs from interpreters, and disadvantage individuals who opt for manual interpretation. It underscored that companies need to consider whether new tools would make someone who is unable to hear feel closer to or further from the people with whom they are communicating.

Hall presented her findings internally last December over a Google Meet call. Twenty-four colleagues joined, including a research director. Hall had been encouraged, including by Croak, to invite a much larger audience from across the company but ultimately stuck with the short list insisted upon by her manager. She didn’t even bother trying to record it.


Text

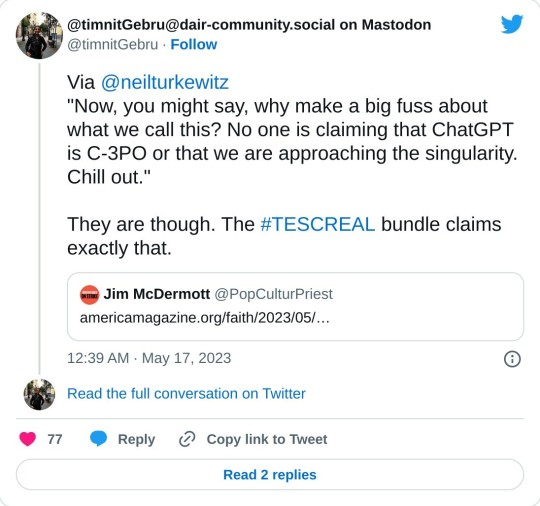

Don't mind me, just getting incredibly mad about Timnit Gebru's "TESCREAL" talk again.

You know, I will agree with her, there is a real problem with the upper class capitalist elite using ideas like Effective Altruism and Longtermism to make warped judgements that justify the centralization of power. There is a problem of overvaluing concerns like AI existential risk over the non-hypothetical problems that require more resources in the world today. There is a problem with medical paradigms that fetishize intelligence and physical ability in a way that echoes 20th century eugenicist rhetoric.

But Gebru's talk and paper, which have sickeningly become a go-to leftist touchpoint for discussing tech, slanderously conflate whole philosophical movements into a "eugenics conspiracy" so myopically flattening that she ends up arguing that things like the concept of "being rational" are modern eugenics. Forget transhumanism as radical self-determinism and self-modification, increasing human happiness by overcoming our biology: TESCREALs just want to make themselves superior (modern curative medical science is excluded from this logic, being tangible instead of speculative and thus too obviously good). Forget the fight to reduce scarcity: the TESCREALs' true agenda is to exploit minorities to enrich corporations! Forget trying to do good in the world: didn't you hear that Sam Bankman-Fried called himself an EA and yet was a bad guy? And safety in AI research? Nonsense, this is just part of the TESCREAL mythology of the AI godhead!

Gebru takes real problems in a bunch of fields and the culture surrounding them (problems that people are trying to address, nominally including her!) and declares a conspiracy where these problems are the flattened essence of these movements, essentially giving up on trying to improve matters. It's an argument supported by loose aesthetic associations and anecdotal cherry-picking, by taking tech CEOs at their word because they have the largest platform instead of considering that perhaps they have uniquely distorted understandings of the concepts they invoke, and by a sneering condescension toward anyone placed in the "tech bro" box through aesthetic similarity.

I hate it, I hate it, I hate it, I hate it.


Text

Our human understanding of coherence derives from our ability to recognize interlocutors’ beliefs and intentions within context. That is, human language use takes place between individuals who share common ground and are mutually aware of that sharing (and its extent), who have communicative intents which they use language to convey, and who model each others’ mental states as they communicate. As such, human communication relies on the interpretation of implicit meaning conveyed between individuals. The fact that human-human communication is a jointly constructed activity is most clearly true in co-situated spoken or signed communication, but we use the same facilities for producing language that is intended for audiences not co-present with us (readers, listeners, watchers at a distance in time or space) and in interpreting such language when we encounter it. It must follow that even when we don’t know the person who generated the language we are interpreting, we build a partial model of who they are and what common ground we think they share with us, and use this in interpreting their words.

Text generated by an LM [language model] is not grounded in communicative intent, any model of the world, or any model of the reader’s state of mind. It can’t have been, because the training data never included sharing thoughts with a listener, nor does the machine have the ability to do that. This can seem counter-intuitive given the increasingly fluent qualities of automatically generated text, but we have to account for the fact that our perception of natural language text, regardless of how it was generated, is mediated by our own linguistic competence and our predisposition to interpret communicative acts as conveying coherent meaning and intent, whether or not they do. The problem is, if one side of the communication does not have meaning, then the comprehension of the implicit meaning is an illusion arising from our singular human understanding of language (independent of the model). Contrary to how it may seem when we observe its output, an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.

On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? Emily M. Bender, Timnit Gebru, Angela McMillan-Major, Shmargaret Shmitchell [cheeky alias], & 3 others suppressed by Google.

https://doi.org/10.1145/3442188.3445922

[emphasis added]

#reading#linguistics#language modelling#ok sorry done with the Bender bender for now#lowkey assembling materials for probably my last syllabus


Text

Appendix A: An Imagined and Incomplete Conversation about “Consciousness” and “AI,” Across Time

Every so often, I think about one of the best things my advisor and committee members let me write and include in my actual doctoral dissertation, and I smile a bit. Since I keep wanting to share it with the world, I figured I should put it somewhere more accessible.

So with all of that said, we now rejoin An Imagined and Incomplete Conversation about “Consciousness” and “AI,” Across Time, already (still, seemingly unendingly) in progress:

René Descartes (1637): The physical and the mental have nothing to do with each other. Mind/soul is the only real part of a person.

Norbert Wiener (1948): I don’t know about that “only real part” business, but the mind is absolutely the seat of the command and control architecture of information and the ability to reflexively reverse entropy based on context, and input/output feedback loops.

Alan Turing (1952): Huh. I wonder if what computing machines do can reasonably be considered thinking?

Wiener: I dunno about “thinking,” but if you mean “pockets of decreasing entropy in a framework in which the larger mass of entropy tends to increase,” then oh for sure, dude.

John Von Neumann (1958): Wow things sure are changing fast in science and technology; we should maybe slow down and think about this before that change hits a point beyond our ability to meaningfully direct and shape it— a singularity, if you will.

Clynes & Kline (1960): You know, it’s funny you should mention how fast things are changing because one day we’re gonna be able to have automatic tech in our bodies that lets us pump ourselves full of chemicals to deal with the rigors of space; btw, have we told you about this new thing we’re working on called “antidepressants?”

Gordon Moore (1965): Right now an integrated circuit has 64 transistors, and they keep getting smaller, so if things keep going the way they’re going, in ten years they’ll have 65 THOUSAND. :-O

Donna Haraway (1991): We’re all already cyborgs bound up in assemblages of the social, biological, and technological, in relational reinforcing systems with each other. Also do you like dogs?

Ray Kurzweil (1999): Holy Shit, did you hear that?! Because of the pace of technological change, we’re going to have a singularity where digital electronics will be indistinguishable from the very fabric of reality! They’ll be part of our bodies! Our minds will be digitally uploaded immortal cyborg AI Gods!

Tech Bros: Wow, so true, dude; that makes a lot of sense when you think about it; I mean maybe not “Gods” so much as “artificial super intelligences,” but yeah.

90’s TechnoPagans: I mean… Yeah? It’s all just a recapitulation of The Art in multiple technoscientific forms across time. I mean (*takes another hit of salvia*) if you think about the timeless nature of multidimensional spiritual architectures, we’re already—

DARPA: Wait, did that guy just say something about “Uploading” and “Cyborg/AI Gods?” We got anybody working on that?? Well GET TO IT!

Disabled People, Trans Folx, BIPOC Populations, Women: Wait, so our prosthetics, medications, and relational reciprocal entanglements with technosocial systems of this world in order to survive make us cyborgs?! :-O

[Simultaneously:]

Kurzweil/90’s TechnoPagans/Tech Bros/DARPA: Not like that.

Wiener/Clynes & Kline: Yes, exactly.

Haraway: I mean it’s really interesting to consider, right?

Tech Bros: Actually, if you think about the bidirectional nature of time, and the likelihood of simulationism, it’s almost certain that there’s already an Artificial Super Intelligence, and it HATES YOU; you should probably try to build it/never think about it, just in case.

90’s TechnoPagans: …That’s what we JUST SAID.

Philosophers of Religion (To Each Other): …Did they just Pascal’s Wager Anselm’s Ontological Argument, but computers?

Timnit Gebru and other “AI” Ethicists: Hey, y’all? There’s a LOT of really messed up stuff in these models you started building.

Disabled People, Trans Folx, BIPOC Populations, Women: Right?

Anthony Levandowski: I’m gonna make an AI god right now! And a CHURCH!

The General Public: Wait, do you people actually believe this?

Microsoft/Google/IBM/Facebook: …Which answer will make you give us more money?

Timnit Gebru and other “AI” Ethicists: …We’re pretty sure there might be some problems with the design architectures, too…

Some STS Theorists: Honestly this is all a little eugenics-y— like, both the technoscientific and the religious bits; have you all sought out any marginalized people who work on any of this stuff? Like, at all??

Disabled People, Trans Folx, BIPOC Populations, Women: Hahahahah! …Oh you’re serious?

Anthony Levandowski: Wait, no, never mind about the church.

Some “AI” Engineers: I think the things we’re working on might be conscious, or even have souls.

“AI” Ethicists/Some STS Theorists: Anybody? These prejudices???

Wiener/Tech Bros/DARPA/Microsoft/Google/IBM/Facebook: “Souls?” Pfffft. Look at these whackjobs, over here. “Souls.” We’re talking about the technological singularity, mind uploading into an eternal digital universal superstructure, and the inevitability of timeless artificial super intelligences; who said anything about “Souls?”

René Descartes/90’s TechnoPagans/Philosophers of Religion/Some STS Theorists/Some “AI” Engineers: …

[Scene]

----------- ----------- ----------- -----------

Read Appendix A: An Imagined and Incomplete Conversation about “Consciousness” and “AI,” Across Time at A Future Worth Thinking About

and read more of this kind of thing at: Williams, Damien Patrick. Belief, Values, Bias, and Agency: Development of and Entanglement with "Artificial Intelligence." PhD diss., Virginia Tech, 2022. https://vtechworks.lib.vt.edu/handle/10919/111528.

#ableism#afrofuturism#alan turing#alison kafer#alterity#anselm's ontological argument for the existence of god#artificial intelligence#astrobiology#audio#autonomous created intelligence#autonomous generated intelligence#autonomously creative intelligence#bodies in space#bodyminds#communication#cybernetics#cyborg#cyborg anthropology#cyborg ecology#cyborgs#darpa#decolonization#decolonizing mars#digital#disability#disability studies#distributed machine consciousness#distributed networked intelligence#donna haraway#economics
