#computer vision

Text

Flexible circuit board manufacturing (JLCPCB, 2023)

An inkjet print head uses a fiducial camera to register an FPC panel; after alignment, it prints graphics with UV-curable epoxy in two passes.
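A minimal sketch of what registering a panel from fiducials can involve, assuming the camera reports the measured positions of two fiducial marks whose nominal (design) positions are known. The function names and coordinates are hypothetical, and this is not JLCPCB's actual software; it only illustrates recovering the rotation and offset that map design coordinates onto the panel as it sits in the machine.

```python
import math

def estimate_rigid_transform(nominal, measured):
    """Estimate the rotation (radians) and translation mapping nominal -> measured.

    Both arguments are lists of two (x, y) fiducial positions.
    Assumption: the panel is only rotated and shifted, not scaled or skewed.
    """
    (nx0, ny0), (nx1, ny1) = nominal
    (mx0, my0), (mx1, my1) = measured
    # Rotation is the change in direction of the line joining the two fiducials.
    angle = math.atan2(my1 - my0, mx1 - mx0) - math.atan2(ny1 - ny0, nx1 - nx0)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Translation moves the rotated nominal centroid onto the measured centroid.
    ncx, ncy = (nx0 + nx1) / 2, (ny0 + ny1) / 2
    mcx, mcy = (mx0 + mx1) / 2, (my0 + my1) / 2
    tx = mcx - (cos_a * ncx - sin_a * ncy)
    ty = mcy - (sin_a * ncx + cos_a * ncy)
    return angle, (tx, ty)

def apply_transform(point, angle, translation):
    """Map a design-space point into machine coordinates."""
    x, y = point
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    return (cos_a * x - sin_a * y + translation[0],
            sin_a * x + cos_a * y + translation[1])

# Hypothetical example: the panel is rotated 90 degrees and shifted by (10, 20).
angle, shift = estimate_rigid_transform([(0, 0), (100, 0)], [(10, 20), (10, 120)])
print(math.degrees(angle), shift)
```

With more than two fiducials, a least-squares fit would be the more usual choice, since it averages out measurement noise.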

#jlcpcb#pcb#fpc#manufacturing#manufacture#factory#electronics#fiducial camera#camera#cv#opencv#computer vision

114 notes


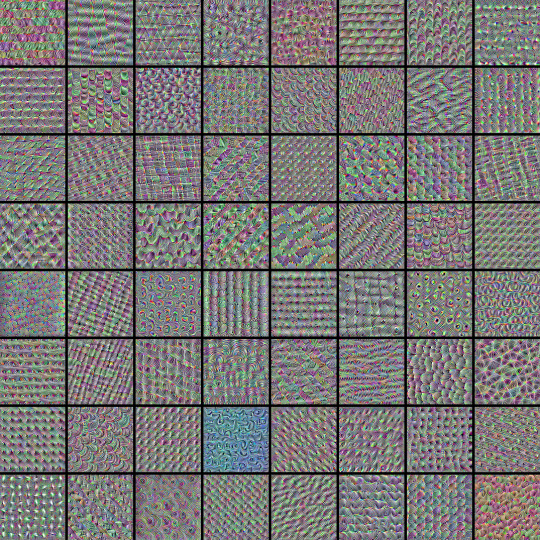

How do you read a scroll you can’t open?

With lasers!

In 79 AD, Mount Vesuvius erupts and buries the library of the Villa of the Papyri in hot mud and ash.

The scrolls are carbonized by the heat of the volcanic debris. But they are also preserved. For centuries, as virtually every ancient text exposed to the air decays and disappears, the library of the Villa of the Papyri waits underground, intact.

Then, in 1750, our story continues:

While digging a well, an Italian farm worker encounters a marble pavement. Excavations unearth beautiful statues and frescoes – and hundreds of scrolls. Carbonized and ashen, they are extremely fragile. But the temptation to open them is great; if read, they would more than double the corpus of literature we have from antiquity.

Early attempts to open the scrolls unfortunately destroy many of them. A few are painstakingly unrolled by an Italian monk over several decades, and they are found to contain philosophical texts written in Greek. More than six hundred remain unopened and unreadable.

Using X-ray tomography and computer vision, a team led by Dr. Brent Seales at the University of Kentucky reads the En-Gedi scroll without opening it. Discovered in the Dead Sea region of Israel, the scroll is found to contain text from the book of Leviticus.

This achievement shows that a carbonized scroll can be digitally unrolled and read without physically opening it.

But the Herculaneum Papyri prove more challenging: unlike the denser inks used in the En-Gedi scroll, the Herculaneum ink is carbon-based, affording no X-ray contrast against the underlying carbon-based papyrus.

To get X-rays at the highest possible resolution, the team uses a particle accelerator to scan two full scrolls and several fragments. At 4-8 µm resolution, with 16 bits of density data per voxel, they believe machine learning models can pick up subtle surface patterns in the papyrus that indicate the presence of carbon-based ink.

In early 2023, Dr. Seales’s lab achieves a breakthrough: their machine learning model successfully recognizes ink from the X-ray scans, demonstrating that it is possible to apply virtual unwrapping to the Herculaneum scrolls using the scans obtained in 2019, and even uncovering some characters in hidden layers of papyrus.

On October 12th, the first words are officially discovered in an unopened Herculaneum scroll! The computer scientists win $40,000 for their work and give hope that the $700,000 grand prize is within reach: reading an entire unopened scroll!

The grand prize: first team to read a scroll by December 31, 2023

#vesuvius#Vesuvius challenge#papyrus#ancient rome#ancient greece#x ray tomography#machine learning#computer vision

60 notes


By Mobotato

Music on

#nestedneons#cyberpunk#cyberpunk art#cyberpunk aesthetic#cyberpunk artist#art#cyberwave#scifi#control#concept#3dart#3d illustration#concept art#cctv#cctv camera#computer vision#the dark side of ai#surveillance#cryptoart#crypto art

42 notes


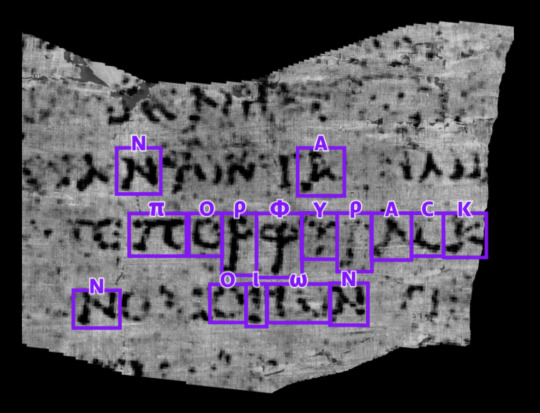

2023.08.31

i have no idea what i'm doing!

learning computer vision concepts on your own is overwhelming, and it's even more overwhelming to figure out how to apply those concepts to train a model and prepare your own data from scratch.

context: the public university i go to expects the students to self-study topics like AI, machine learning, and data science, without the professors teaching anything TT

i am losing my mind

based on what i've watched on youtube and understood from articles i've read, i think i have to do the following:

data collection (in my case, images)

data annotation (to label the features)

image augmentation (to increase the diversity of my dataset)

image manipulation (to normalize the images in my dataset)

split the training, validation, and test sets

choose a model for object detection (YOLOv4?)

train the model using my custom dataset

evaluate the trained model's performance
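The splitting step in the list above can be sketched in a few lines. This is only an illustration under assumptions: the file names are made up, the 70/15/15 ratio is one common choice rather than a rule, and `split_dataset` is a hypothetical helper, not part of any library.

```python
import random

def split_dataset(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle items and split them into train, validation, and test lists."""
    shuffled = list(items)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_frac)
    n_test = round(len(shuffled) * test_frac)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, val, test

# Hypothetical image file names standing in for a collected dataset.
image_paths = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(image_paths)
print(len(train), len(val), len(test))
```

One thing that may be worth considering: if the split happens before augmentation and only the training images are augmented, near-duplicates of the same photo are less likely to leak into the validation and test sets.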

so far, i've collected enough images to start annotation. i might use labelbox for that. i'm still not sure if i'm doing things right 🥹

if anyone has any tips for me or if you can suggest references (textbooks or articles) that i can use, that would be very helpful!

54 notes


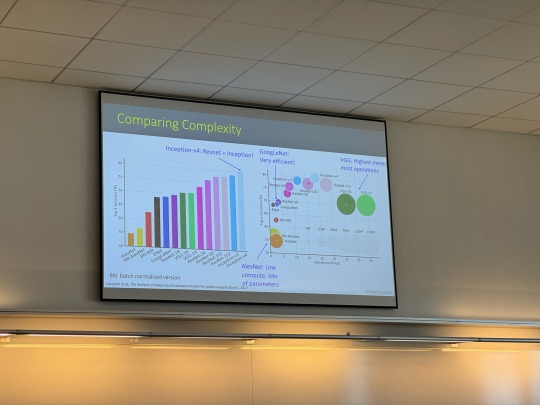

Day 19/100 days of productivity | Fri 8 Mar, 2024

Visited University of Connecticut, very pretty campus

Attended a class on Computer Vision, learned about ResNet, which is a type of residual neural network for image processing

Learned more about the grad program and networked

Journaled about my experience
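For anyone curious what "residual" means here, this is a toy sketch of the core idea, not the actual ResNet architecture: a block adds its input back onto the output of its layers (a skip connection). The `toy_layer` function is a hypothetical stand-in for the real convolutional layers.

```python
def residual_block(x, layer_fn):
    """Return layer_fn(x) + x, the skip connection at the heart of a residual block."""
    fx = layer_fn(x)
    if len(fx) != len(x):
        raise ValueError("layer output must match the input shape to be added")
    return [a + b for a, b in zip(fx, x)]

def toy_layer(x):
    # Hypothetical stand-in for convolution + ReLU: scale, then clip negatives to zero.
    return [max(0.0, 0.5 * v) for v in x]

print(residual_block([1.0, -2.0, 3.0], toy_layer))  # [1.5, -2.0, 4.5]
```

Because the input is carried through unchanged, the layers only have to learn the difference from the identity, which is the usual explanation for why very deep networks of this kind are easier to train.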

Y’all, UConn is so cool! I was blown away by the gigantic stadium they have in the middle of campus (forgot to take a picture) for their basketball games, because apparently they have the best female collegiate basketball team in the US?!? I did not know this, but they call themselves Huskies, and the branding everywhere on campus is on point.

#100 days of productivity#grad school#computer vision#google resnet#neural network#deep learning#UConn#uconn huskies#uconn women’s basketball#university of Connecticut

9 notes


Heart Cell Line-up

Combination of microscopy and computer vision produces the first 3D reconstruction of mouse heart muscle cell orientation at micrometre scale, furthering understanding of heart wall mechanics and electrical conduction

Read the published research paper here

Image from work by Drisya Dileep and Tabish A Syed, and colleagues

Centre for Cardiovascular Biology & Disease, Institute for Stem Cell Science & Regenerative Medicine, Bengaluru, India; School of Computer Science & Centre for Intelligent Machines, McGill University, & MILA– Québec AI Institute, Montréal, QC, Canada

Video originally published under a Creative Commons Attribution 4.0 International (CC BY 4.0) licence

Published in The EMBO Journal, September 2023

You can also follow BPoD on Instagram, Twitter and Facebook

20 notes


Denmark: A significant healthtech hub

- By InnoNurse Staff -

According to data platform Dealroom, Danish healthtech firms raised a stunning $835 million in 2023, an 11% rise over the previous record set in 2021.

Read more at Tech.eu

///

Other recent news and insights

A 'Smart glove' could improve the hand movement of stroke sufferers (The University of British Columbia)

Oxford Medical Simulation raises $12.6 million in Series A funding to address the significant healthcare training gap through virtual reality (Oxford Medical Simulation/PRNewswire)

PathKeeper's innovative camera and AI software for spinal surgery (PathKeeper/PRNewswire)

Ezdehar invests $10 million in Yodawy to acquire a minority stake in the Egyptian healthtech (Bendada.com)

#denmark#startups#innovation#smart glove#stroke#neuroscience#iot#Oxford Medical Simulation#health tech#digital health#medtech#education#pathkeeper#ai#computer vision#surgery#ezdehar#yodawy#egypt#mena

13 notes


By Jorge Heraud, Vice President of Automation & Autonomy, John Deere

"Computer vision is extending the human senses. Whether it’s allowing cars to drive themselves, automating production lines for safety and efficiency, or allowing us to unlock our phones with our faces, computer vision can help make human work more productive and accurate."

"Computer vision helps farmers “see” crops in ways the human eye can’t. It allows farmers to manage their limited time and resources — like fertilizer, herbicides, and seeds — and gives them data and insights to make timely, accurate decisions for a healthier, more successful crop. This helps farmers be more productive, profitable, and sustainable."

"For example, the human eye can’t distinguish a weed from a crop while driving a tractor 15 miles per hour over a field. But a sprayer equipped with computer vision can. Using cameras, onboard processors, and millions of training images, a technologically advanced sprayer can determine if a plant it sees in the field is a weed or crop and, in milliseconds, spray only on the weed with herbicide, generating significant cost savings for farmers and increasing the sustainability of their operations."

12 notes


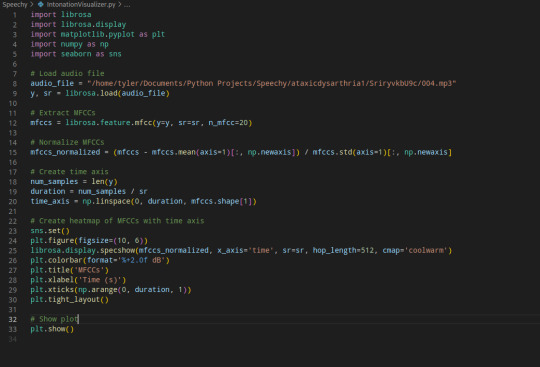

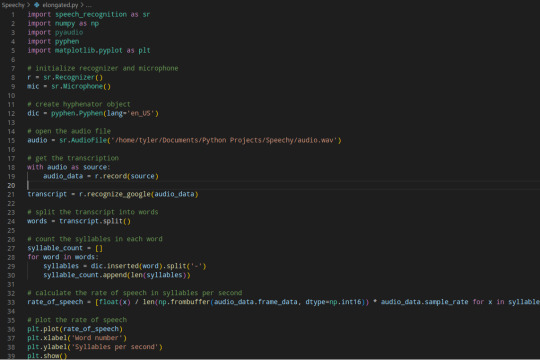

Analyzing An Ataxic Dysarthria Patient's Speech with Computer Vision and Audio Processing

Hey everyone, so as you know I have been doing research on patients like myself who have Ataxic Dysarthria and other neurological speech disorders related to diseases and conditions that affect the brain. I was analyzing this file with a few programs that I have written.

The findings are very informative and I am excited that I am able to explain this to my Tumblr following as I feel it not only promotes awareness but provides an understanding of what we go through with Ataxic Dysarthria.

Analysis of the audio file with an Intonation Visualizer I built

As you can tell, this uses a heatmap to visualize the loudness and softness of a speaker's voice. I used it to analyze the file and I found some really interesting and telling signs of Ataxic Dysarthria.

At 0-1 seconds it is mostly pretty quiet (which is normal, because it is harder for patients with AD to start speaking). You can notice that around 1-3 seconds it gets louder, and then when she speaks it's clearer and louder than the patient's voice. However, the AD makes the patient's speech constantly rise and fall in loudness, from around -3 to 0 decibels, for most of the audio when the patient is speaking. The loudness also moves quickly between 0 and -3, which is a common characteristic in AD.

The combination of the constant rising and falling in loudness and intonation as well as problems getting sentences started is one of the things that makes it so hard for people to understand those with Ataxic Dysarthria.
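To make the decibel readings above a bit more concrete, here is a minimal sketch of how loudness per time slice could be computed. It is not the Intonation Visualizer itself; it assumes the audio is already a list of samples scaled to the range -1.0 to 1.0, and `frame_levels_db` is a hypothetical helper name.

```python
import math

def frame_levels_db(samples, frame_size, floor_db=-60.0):
    """Return the RMS level of each frame in dB relative to full scale (1.0)."""
    levels = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(s * s for s in frame) / frame_size)
        # Silence has no finite dB value, so clamp it to a floor instead.
        level = 20 * math.log10(rms) if rms > 0 else floor_db
        levels.append(max(level, floor_db))
    return levels

# Hypothetical signal: a half-amplitude frame, a full-amplitude frame, then silence.
samples = [0.5] * 4 + [1.0] * 4 + [0.0] * 4
print(frame_levels_db(samples, frame_size=4))
```

On this scale 0 dB is the loudest the recording can go, and a drop of about 3 dB corresponds to roughly half the signal power, so swings between -3 and 0 are fairly large changes in a short time.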

The second method I used is a plotted line graph that shows the rate of speech and the elongated syllables of the patient.

As you can see, I primarily used the Google Speech Recognition library to transcribe the speech of the patient, and Pyphen to count the syllables via "hyphenated" (elongated) words. This isn't the most effective method but it worked well for this example, and here are the results plotted out using Matplotlib:

As you can see, when they first started talking there was a rise from the softer speech: as the voice of the patient got louder, they were speaking faster (common for those with AD and HD). My hypothesis (and personal experience) is that this is how we try to get our words out where we can be understood, by "forcing" out words, resulting in the rise and fall of syllables / rate of speech that we see in the first part. The other spikes typically happen when she speaks, but there is another spike at the end, which you can see as well, when the patient tries to force more words out.
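A rough sketch of how a rate-of-speech curve like this could be computed, under some loud assumptions: the syllable counter below is a crude vowel-group estimate standing in for Pyphen, and the per-word start times are hypothetical input (the transcription step described above may not provide them). It is not the script used for the plot.

```python
import re

def count_syllables(word):
    """Crude syllable estimate: count runs of consecutive vowels (not Pyphen)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))

def syllables_per_second(timed_words, window=1.0):
    """timed_words is a list of (word, start_time_in_seconds) pairs.

    Returns the syllable rate for each consecutive window of the recording.
    """
    if not timed_words:
        return []
    n_windows = int(max(t for _, t in timed_words) // window) + 1
    counts = [0] * n_windows
    for word, t in timed_words:
        counts[int(t // window)] += count_syllables(word)
    return [c / window for c in counts]

# Hypothetical transcript with made-up start times.
timed = [("hello", 0.2), ("world", 0.8), ("banana", 1.5), ("speech", 3.1)]
print(syllables_per_second(timed))  # [3.0, 3.0, 0.0, 1.0]
```

Spikes in a list like this would correspond to the bursts of faster speech described above, and zeros to pauses.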

This research already indicates a pretty clear pattern in what is going on in the patient's speech. As they try to force out words, their speech gets faster and thus gets louder as they try to communicate.

I hope this has been informative for those who don't know much about speech pathology or neurological diseases. I know it's already showing a lot of exciting progress and I am continuing to develop scripts to further research on this subject so maybe we can all understand neurological speech disorders better.

As I said, I will be posting my research and findings as I go. Thank you for following me and keeping up with my posts!

#research#medical research#medical technology#speech pathology#speech disorder#neurology#ataxic dysarthria#ataxia#machine learning#artificial intelligence#ai#computer vision#audio processing#audio engineering#data analysis#data analyst#data science#python 3#python programming#python programmer#python code

38 notes


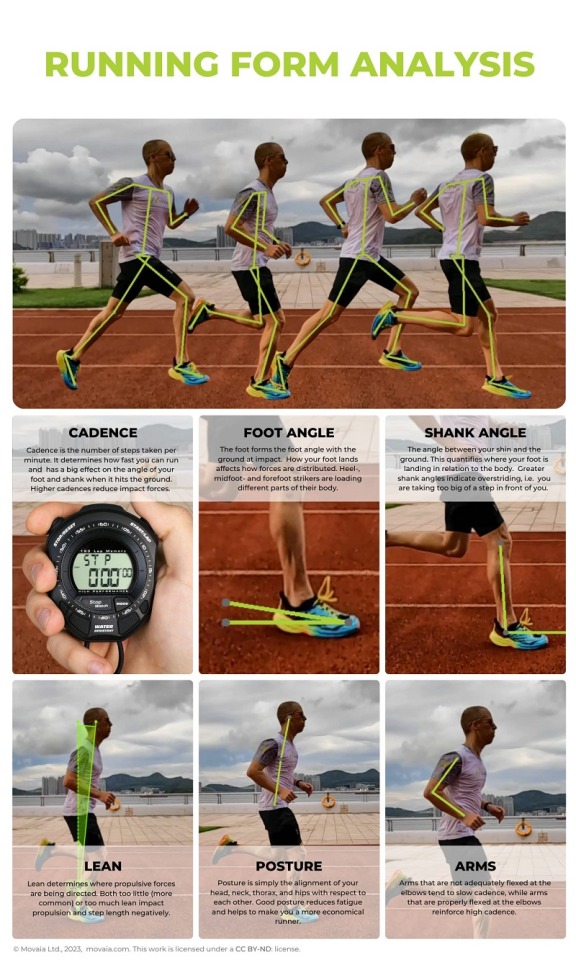

Running Form Analysis

This visualization shows key metrics that are considered during a running form analysis, such as cadence, lean, posture, and foot angle. Each element is presented as a visual example with a short description. Visit: https://www.movaia.com

#Running Form Analysis#Movaia#Running Form#Gait Assessment#Running Technique#Running Gait Analysis#Online Running Form Analysis#Improve running form#AI#Computer Vision

13 notes


[1/100 of productivity]

Starting very slow: woke up late and was tired most of the day, which didn't help me achieve the goals I've set.

I have an assignment deadline for 12/03 which I'm stuck on, hope to manage to solve it in time.

In the meantime I am enjoying my last days of being home before going back to hustling in Paris.

It has been a long time since I baked something, so it felt refreshing trying recipes out again.

🎧Watched a podcast

🎵Music : nebni - lotfi bouchnak ft wmd and emp1re

🍰 Baked a cake which turned out delicious

Goals for the week :

Finish computer vision assignment

Write brain dump file about the last project I've done

Take notes from my nlp lesson

Do my 2 nlp labs

Prepare the lessons I am going to teach for next week

#100 days of productivity#Need to beat procrastination#Nlp#Computer vision#AI#engineering student#tunisia#france

2 notes


Termovision HUD from The Terminator (1984)

A head-up display (HUD) is a transparent display that presents data over a visual screen. Termovision refers to the HUD used by Terminators to display analyses and decision options.

#terminator#computer vision#pattern recognition#red#sci fi#hud#OCR#termovision#natural language processing

2 notes


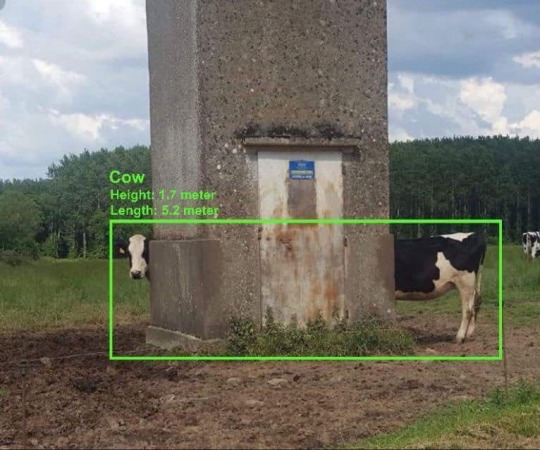

Day 37/100 days of productivity | Wed Mar 27, 2024

Worked on integrating feedback on my conference poster draft

Sent my conference poster draft to some colleagues for more feedback

Created slides for tomorrow’s Data Science Reading Group that I lead at work; the paper is about YOLO (an object detection algorithm in computer vision), and there was this hilarious figure in the paper (see pic)

Brainstormed for an upcoming project briefing with my colleague, made more slides (are you sensing a theme yet?)

Read more of the paper so I can present it well

Brainstormed about my potential research

#100 days of productivity#conferences#poster#journal club#yolo#computer vision#yolo computer vision#slides#research#brainstorming#unhinged papers

2 notes
