#datacenters

Photo

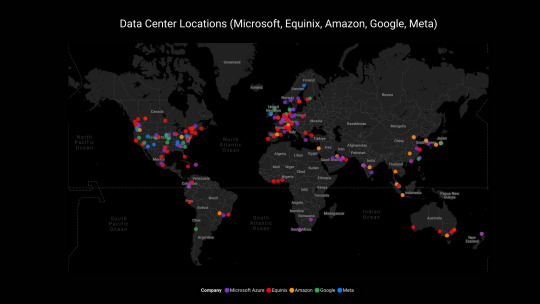

Data Centers Around the World

by u/sports_metaphors

Photo

Council Bluffs Iowa data center 1 (2022), 76 cm x 83 cm

Council Bluffs Iowa data center 2 (2023), 76 cm x 83 cm

watching the watcher: 64CP+9C Council Bluffs, Iowa, USA

#watchingthewatcher #google #datacenters #dataart #gps #inkonpaper #millionsoftinylines #iseeyou (at Council Bluffs, Iowa) https://www.instagram.com/p/CqLbffYIhIc/?igshid=NGJjMDIxMWI=

Text

Municipal council sets conditions for granting data center permits

HOLLANDS KROON – The siting conditions for data centers were on the agenda of the council meeting of 21 March 2024. In November 2023, the executive board of the province of North Holland, the Provincial Executive (Gedeputeerde Staten), adopted the Guideline on Sustainable Siting Conditions for Data Centers in North Holland (Richtlijn duurzame vestigingsvoorwaarden datacenters Noord-Holland). These conditions were drawn up together with the municipalities of Amsterdam,…

Text

Nvidia Ups Ante in AI Chip Game With New Blackwell Architecture

Nvidia is pumping up the power in its line of artificial intelligence chips with the announcement Monday of its Blackwell GPU architecture at its first in-person GPU Technology Conference (GTC) in five years.

https://jpmellojr.blogspot.com/2024/03/nvidia-ups-ante-in-ai-chip-game-with.html

Text

Public Sector Cloud Computing Challenges and Opportunities

The challenges and opportunities of using cloud computing in the public sector

The pandemic has driven government use of cloud computing. As cloud computing becomes more widespread, government, educational, and healthcare institutions will undergo a major transformation. This recent, revolutionary transition to cloud computing has both pros and cons for public sector efficiency.

How is the public sector using cloud computing?

Cloud computing reduces costs, improves service delivery, and boosts efficiency in the public sector. Public sector institutions manage and preserve large amounts of research data as well as personal information, and cloud storage solutions provide scalable, efficient management of that data.

Infrastructure as a Service (IaaS)

Governments offer online citizen services such as tax filing, benefit applications, and permit issuance, which citizens can use anytime, anywhere. Running these services on cloud infrastructure removes the need for expensive on-site data centers and hardware. Because cloud computing is agile, scalable, and affordable, the public sector can better meet the demands of citizens and government.

Clearly, cloud computing has a major influence on the public sector. This article examines its promise and its challenges to help readers understand how it is transforming the public sector.

Cloud Computing’s Challenges for the Public Sector

Cloud Security

This is the main barrier to public sector adoption of cloud computing. Because government agencies handle sensitive data, a security breach can be severe. To protect that data, public sector organizations must ensure that cloud service providers use data encryption, access controls, and periodic security audits.

Public Cloud Services

Cloud computing platforms can be difficult to integrate with the legacy systems the public sector often uses, and that integration work can be challenging and time-consuming. Data transfer, system integration, and migration need careful planning to prevent interruptions and ensure smooth operations.

Cost Management

Staff training and the switch to cloud-based solutions may bring short-term costs, but cloud-based solutions can yield long-term savings.

Platform as a Service (PaaS)

Relying on the infrastructure and services of a single cloud provider carries a risk of vendor lock-in. To avoid over-reliance, public sector organizations must choose suppliers carefully.

Using cloud computing in the public sector can raise a variety of legal and regulatory issues. Compliance with laws on data residency, privacy, and industry-specific requirements is essential, so public sector organizations need to assess cloud providers thoroughly to confirm they meet the necessary compliance criteria.

Prospects for Cloud Computing in the Public Sector

1. Scalability

Cloud services help government organizations adapt quickly to changing workloads, particularly in times of crisis or increased demand.

2. Reduced Outlays

There are many ways that cloud computing may be used by the public sector to save IT expenses. For example, cloud companies may provide economies of scale by dividing the cost of infrastructure across several clients. Cloud service providers often provide more economical and efficient means of delivering IT services.

3. Enhanced Security

Public sector organizations use cloud computing to strengthen their security protocols, since it offers several features that help safeguard data and systems. Cloud service providers may offer services such as data encryption, access control, and disaster recovery.

4. Government Cloud Initiatives

Cloud services were crucial during the COVID-19 pandemic because they let users access vital applications and data remotely. This makes it easier to deliver government services to staff and citizens from anywhere.

5. Disaster Recovery

The reliable disaster recovery solutions offered by cloud-based services enable the fast restoration of government data and services in the event of an emergency or outage.
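The economies-of-scale point in item 2 comes down to simple arithmetic: a shared fixed cost is divided among more clients. The sketch below is a simplified model under stated assumptions, not a pricing method from this post: the figures are hypothetical, the fixed cost is assumed to be split evenly, and provider margin is ignored.

```python
def cost_per_client(shared_fixed_cost: float, marginal_cost: float, clients: int) -> float:
    """Cost per client when a shared infrastructure cost is split evenly.

    Simplified model (an assumption for illustration): every client pays an equal
    share of the fixed cost plus its own marginal cost; provider margin is ignored.
    """
    if clients <= 0:
        raise ValueError("clients must be a positive integer")
    return shared_fixed_cost / clients + marginal_cost


if __name__ == "__main__":
    # Hypothetical figures: 1,000,000 in shared infrastructure, 5,000 marginal cost per client.
    for n in (1, 10, 100):
        print(n, cost_per_client(1_000_000, 5_000, n))
```

With these made-up numbers, the cost per client falls from 1,005,000 with one client to 15,000 with 100 clients, approaching the marginal cost as the shared portion is spread thinner.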

Cloud computing has helped modernize the public sector, changing how government organizations operate and communicate with the public. Its potential can help public sector organizations make data-driven decisions, optimize operations, and improve service delivery.

Data Privacy

Cloud computing brings many opportunities but also distinct obstacles. Because of vendor lock-in, cost constraints, data security and privacy, and regulatory compliance, strategic planning is essential. Public sector organizations must weigh these complex issues against the benefits of cloud computing.

FAQs

What are the advantages and disadvantages of the public cloud?

Less expensive: The primary benefit of choosing third-party public cloud providers is that you can avoid managing services internally or having to buy your own servers. This lowers overhead and expenses related to IT. The cloud provider you are utilizing will update and manage your servers, so you won’t need to do that anymore.

What are the benefits and challenges of cloud computing?

1. Scalability and Flexibility

2. Cost Efficiency

3. Enhanced Collaboration and Availability

4. Stronger Security and Disaster Resilience

5. Service Dependability, Vendor Lock-In, and Data Security and Privacy

6. Data Transfer and Bandwidth Limitations

7. Regulatory Compliance

What are the cost benefits of the public cloud?

39% of businesses report that using the cloud reduced their costs. Access to pre-built infrastructure reduces redundancy and saves time, which lowers labor and operational expenses.

Read more on Govindhtech.com

#PublicSector#CloudComputing#cloudstorage#IaaS#datacenters#Cost Management#PaaS#technews#technology#govindhtech

Text

Data Center Industry | Rugged Monitoring

Rugged Monitoring offers state-of-the-art, comprehensive monitoring solutions for the electrical assets operating across a data center. With advanced monitoring capabilities and analysis of all sensitive equipment, the solutions increase stability and detect critical situations.

Text

Maximizing ROI from HR Data Centers: Driving Value for the C-Suite

In the dynamic landscape of modern business, the role of HR data centers has evolved beyond conventional administrative functions. Today, these data hubs play a pivotal role in shaping strategic decisions, driving efficiency, and maximizing return on investment (ROI) for organizations. This article explores the critical intersection of HR and data centers, delving into the nuances of people analytics, HR analytics tools, and innovative data center solutions. The insights presented here aim to showcase the immense potential of HR data centers in delivering tangible value to organizations.

Data-Center Evolution: Shaping the Future of HR

According to a recent study, organizations leveraging cloud data centers witness a 25% increase in the speed and accuracy of HR analytics, leading to more informed decision-making.

The evolution of data centers, including cloud data centers and AWS data centers, has significantly impacted how HR functions operate. Modern HR data centers go beyond conventional storage; they serve as the backbone for people analytics, allowing organizations to derive actionable insights from vast pools of HR data. For instance, cloud data centers provide scalable and flexible infrastructure, enabling HR analytics tools to process and analyze massive datasets efficiently.

HR Analytics: A Strategic Imperative

The integration of HR analytics into data center architecture is pivotal for organizations seeking a competitive edge. HR analytics encompasses a range of tools and methodologies that enable the C-suite to make data-driven decisions concerning talent management, workforce planning, and employee engagement. Leading companies leverage HR analytics to develop comprehensive HR dashboards that offer real-time insights into key performance indicators and workforce trends.

Netflix strategically utilizes HR analytics to personalize content recommendations for employees, fostering a culture of individualized professional development and growth.

Data Center Networking: Facilitating Seamless HR Operations

Efficient data center networking is crucial for seamless HR operations. It ensures that HR analytics tools can access and process data in real time, providing accurate insights. Organizations with streamlined data center networking report a 30% reduction in the time required to complete HR processes, enhancing overall operational efficiency.

The integration of talent analytics into data center services enables organizations to optimize HR processes, from recruitment to employee retention.

Data Center Management and Solutions: Navigating the HR Landscape

Effective data center management is central to harnessing the full potential of HR data. Data center solutions offer comprehensive services, from storage to automation, supporting HR analytics initiatives. Leading data center solution companies, like IBM and Cisco, have pioneered HR-centric solutions, emphasizing the role of data centers in transforming HR operations. This showcases how data center solutions drive innovation, such as predictive analytics for workforce planning and automated reporting for compliance.

Explore HRtech News for the latest Tech Trends in Human Resources Technology.

Text

Microsoft certifies the services provided from its cloud region in Spain as compliant with the High Level of the National Security Framework (Esquema Nacional de Seguridad)

Microsoft

This certification gives its customers the highest security standards when adopting Microsoft 365, Dynamics 365, or any Azure service, including those that support Artificial Intelligence…

Text

List of the Top 10 Data Center Providers in India

India's digital economy has grown at an unprecedented rate, driven by a boom in internet users, rising smartphone usage, and the rapid adoption of cloud services. Internet access has become widespread, and the resulting influx of data requires a robust data infrastructure.

Text

HostGator Data Centers: Ensuring Reliable Hosting

I. Introduction

A. Brief Overview of the Importance of Data Centers in Web Hosting

In the ever-evolving landscape of online presence, the backbone of website performance lies within the intricacies of data centers. These technological hubs serve as the nerve centers, orchestrating the seamless functioning of websites by managing and storing vast amounts of data. The significance of data centers cannot be overstated, as they play a pivotal role in shaping the digital experience for users worldwide.

Data centers serve as the physical repositories for the virtual realms we navigate daily. These facilities house an intricate network of servers, storage systems, and networking equipment, all meticulously organized to ensure optimal efficiency. The strategic placement of data centers across the globe contributes to reduced latency and faster access to data, ultimately enhancing user experience.

B. Significance of Reliable Hosting for Website Performance

Reliable hosting stands as the cornerstone of a website's performance. The choice of a hosting provider can make or break the user experience, influencing factors such as site speed, uptime, and overall functionality. In a digital era where attention spans are fleeting, a website's ability to load swiftly and consistently is paramount.

The reliability of hosting is not merely about ensuring a website is accessible at all times; it extends to providing a secure and stable environment for data storage and transmission. Downtime, often the bane of online existence, can have severe repercussions, ranging from lost revenue for e-commerce platforms to a dent in reputation for content-driven websites.

In this intricate dance of digital dynamics, the interplay between data centers and hosting providers becomes the linchpin. The effectiveness of this symbiotic relationship determines whether a website thrives or falters in the competitive online ecosystem. As we delve deeper into the nuances of web hosting, we unravel the complexities that underscore the seamless functioning of the digital landscape.

II. HostGator's Commitment to Quality Data Centers

In the intricate landscape of web hosting, HostGator stands out as a frontrunner, and a pivotal element contributing to their prowess is their unwavering commitment to top-tier data centers.

A. Overview of HostGator as a Leading Hosting Provider

HostGator, a stalwart in the hosting industry, has earned its reputation through a combination of robust features, reliable services, and a customer-centric approach. At the core of their operational excellence lies a network of state-of-the-art data centers strategically positioned for optimal performance and reliability.

HostGator's commitment to quality is reflected in the meticulous planning and execution of their data center strategy. These centers serve as the backbone of their hosting services, ensuring websites hosted with them experience minimal downtime, swift loading times, and fortified security measures.

B. Emphasis on the Role of Data Centers in Their Infrastructure

The role of data centers in HostGator's infrastructure is paramount. These facilities are not merely physical locations for hosting servers; they are precision-engineered hubs designed to deliver a seamless and efficient hosting experience.

Redundancy and Reliability: HostGator's data centers are equipped with redundant systems, from power sources to network connections. This redundancy minimizes the risk of service disruptions, providing a robust and reliable hosting environment.

Advanced Security Protocols: Security is a non-negotiable aspect of HostGator's data centers. They employ cutting-edge security protocols, including surveillance systems, biometric access controls, and fire suppression systems, to safeguard servers and, by extension, the hosted websites.

Scalability and Flexibility: HostGator's data centers are designed with scalability in mind. This means they can seamlessly adapt to the evolving needs of websites, ensuring optimal performance even as businesses grow and experience increased traffic.

Environmental Considerations: In a nod to environmental responsibility, HostGator integrates eco-friendly practices within their data centers. This includes energy-efficient hardware, cooling systems, and a commitment to reducing their overall carbon footprint.

In essence, HostGator's data centers epitomize the brand's dedication to providing hosting solutions that go beyond mere functionality. They represent a commitment to excellence, reliability, and innovation, making HostGator a hosting provider of choice for businesses and individuals seeking a premium web hosting experience.

III. Key Features of HostGator Data Centers

A. Redundancy and Reliability

Multiple Data Center Locations:

HostGator ensures optimal performance and reliability by strategically placing data centers in multiple locations. This geographical distribution minimizes the risk of service interruptions due to regional issues such as natural disasters or network outages.

Backup Systems and Failover Mechanisms:

The data centers employ robust backup systems and failover mechanisms to maintain service continuity. If hardware fails or an unexpected incident occurs, these mechanisms redirect traffic and operations to alternative systems, minimizing downtime.

B. State-of-the-Art Security

Physical Security Measures:

HostGator prioritizes the physical security of its data centers. Access to these facilities is strictly controlled and monitored. Security measures include biometric authentication, surveillance cameras, and 24/7 on-site security personnel. These precautions safeguard against unauthorized access and potential physical threats.

Cybersecurity Protocols:

The data centers implement cutting-edge cybersecurity protocols to protect against digital threats. This includes firewalls, intrusion detection and prevention systems, and regular security audits. HostGator stays proactive in addressing emerging cyber threats, ensuring the integrity and confidentiality of the hosted data.

This robust combination of redundancy, reliability, and state-of-the-art security measures establishes HostGator's data centers as a foundation for a secure and stable hosting environment.
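The post does not say how HostGator's failover is implemented. As a rough illustration only, priority-based failover can be sketched as "send traffic to the first backend that passes a health check". The `Backend` class, the backend names, and the boolean health flag below are hypothetical stand-ins for real health probes such as HTTP checks or heartbeats.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Backend:
    """Hypothetical backend: a name plus the result of its latest health check."""
    name: str
    healthy: bool = True


def pick_backend(
    in_priority_order: Sequence[Backend],
    is_healthy: Callable[[Backend], bool] = lambda b: b.healthy,
) -> Optional[Backend]:
    """Return the first backend that passes its health check, in priority order."""
    for backend in in_priority_order:
        if is_healthy(backend):
            return backend
    return None  # every backend is down: there is nothing left to fail over to


if __name__ == "__main__":
    primary, standby = Backend("primary-dc"), Backend("standby-dc")
    print(pick_backend([primary, standby]).name)  # primary is healthy, so it is chosen
    primary.healthy = False                        # simulate a hardware failure
    print(pick_backend([primary, standby]).name)  # traffic fails over to the standby
```

In practice this decision usually lives in a load balancer, DNS, or routing layer rather than in application code, and health checks are repeated on a schedule.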

IV. Infrastructure and Technology: Unveiling the Core of Web Hosting

In the intricate world of web hosting, the backbone lies in its infrastructure and technology. This section delves into the hardware specifications and network architecture, shedding light on the intricacies that empower hosting providers to deliver optimal performance.

A. Hardware Specifications

1. Server Types and Configurations

Web hosting is a sophisticated ballet of servers, each playing a unique role. From shared hosting on a single server to dedicated servers catering to a sole entity, understanding the spectrum of server types is crucial.

Shared Hosting Servers: Ideal for entry-level websites, where multiple sites share a single server.

Virtual Private Servers (VPS): Mimics a dedicated server within a shared environment, offering enhanced control and resources.

Dedicated Servers: Exclusively dedicated to one user, ensuring maximum control and resources.

2. Scalability Options for Growing Websites

As your website blossoms, scalability becomes paramount. Hosting providers must offer seamless transitions between hosting plans or scalable resources to accommodate increased traffic and data.

Vertical Scaling: Upgrading resources within the same server type.

Horizontal Scaling: Distributing the workload across multiple servers for enhanced performance.
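How many servers horizontal scaling needs can be estimated with a back-of-the-envelope calculation. This sketch assumes load is spread evenly across servers (the goal of load balancing) and keeps a fraction of each server's capacity in reserve; the request rates and the 25% headroom default are hypothetical.

```python
import math


def servers_needed(peak_requests_per_sec: float, capacity_per_server: float, headroom: float = 0.25) -> int:
    """Servers required to absorb peak load while keeping `headroom` of each server spare.

    Assumes load is spread evenly across servers (an idealization).
    """
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_per_server = capacity_per_server * (1 - headroom)
    return max(1, math.ceil(peak_requests_per_sec / usable_per_server))


if __name__ == "__main__":
    print(servers_needed(1800, 400))  # horizontal: more of the same servers
    print(servers_needed(1800, 800))  # vertical: fewer, bigger servers
```

With these numbers, `servers_needed(1800, 400)` returns 6. Scaling vertically to servers that each handle 800 requests per second cuts that to 3 with the same headroom.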

B. Network Architecture

1. High-Speed Connectivity

The lifeline of web hosting is the network that interconnects servers and users. High-speed connectivity is pivotal for ensuring swift data transfer and a seamless user experience.

Bandwidth: The amount of data that can be transferred in a specific time period.

Latency: The time it takes for data to travel between the server and the user.
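Bandwidth and latency combine when a user fetches a page. A rough model is one round trip of latency plus the time needed to push the bytes through the link. The sketch below assumes light in optical fiber travels about 200 km per millisecond (roughly two-thirds of its speed in a vacuum) and ignores routing detours, queuing, TCP handshakes, and slow start, so real times are higher; the distance and file size are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber, km per millisecond


def propagation_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from distance alone (no routing, queuing, or processing delay)."""
    return 2 * distance_km / FIBER_KM_PER_MS


def fetch_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Rough time to fetch one object: one round trip plus serialization at the link bandwidth."""
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    bits = size_bytes * 8
    serialization_ms = bits / (bandwidth_mbps * 1_000)  # 1 Mbps = 1,000 bits per millisecond
    return rtt_ms + serialization_ms


if __name__ == "__main__":
    rtt = propagation_rtt_ms(4_000)                  # hypothetical server 4,000 km away
    print(rtt, fetch_time_ms(1_000_000, 100, rtt))   # 1 MB page over a 100 Mbps link
```

This prints 40.0 and 120.0. Moving the server closer to users shrinks the first term (latency), while more bandwidth shrinks the second.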

2. Load Balancing for Optimal Performance

Load balancing acts as the maestro, ensuring that no single server bears excessive traffic and thereby preventing downtime and slowdowns.

Round Robin Load Balancing: Sending requests to servers in a fixed rotating order, so each server receives an equal share of requests regardless of its current load.

Least Connections Load Balancing: Routing traffic to the server with the fewest active connections.
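The two strategies above can be sketched in a few lines of Python. This is an illustrative model, not HostGator's load balancer; the server names are hypothetical, and a production balancer would also track server health and weights.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class RoundRobin:
    """Hands out servers in a fixed rotating order, ignoring their current load."""

    def __init__(self, servers: List[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self._rotation: Iterator[str] = cycle(servers)

    def pick(self) -> str:
        return next(self._rotation)


class LeastConnections:
    """Routes each new request to the server with the fewest active connections."""

    def __init__(self, servers: List[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        # min() returns the first server in insertion order when counts tie
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        if self.active[server] <= 0:
            raise ValueError(f"{server} has no active connections")
        self.active[server] -= 1
```

With three servers, `RoundRobin` hands out a, b, c, a, and so on regardless of load. `LeastConnections` sends each new request to whichever server currently has the fewest open connections, so a server tied up with slow requests receives fewer new ones.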

In unraveling the intricacies of hardware specifications and network architecture, we demystify the technological marvels that underpin a robust web hosting service. This knowledge empowers users to make informed choices aligned with their website's present needs and future aspirations.

Below is a set of frequently asked questions and answers related to the topic of "HostGator Data Centers: Ensuring Reliable Hosting."

Q1: What are HostGator data centers, and why are they important?

A1: HostGator data centers are facilities equipped to house computer systems and components. They are crucial for hosting services, ensuring websites are accessible and responsive. These centers play a pivotal role in delivering reliable hosting solutions.

Q2: How many data centers does HostGator have?

A2: HostGator operates multiple data centers strategically located around the globe. The exact number may change, but they typically have data centers in different regions to enhance performance and reliability.

Q3: What measures are in place to ensure data center security?

A3: HostGator employs advanced security measures, including restricted access, surveillance systems, and advanced fire detection and suppression systems, to safeguard data center infrastructure and the hosted websites.

Q4: How does HostGator ensure the reliability of its hosting services through data centers?

A4: HostGator invests in redundant hardware, power sources, and network connections within its data centers. This redundancy minimizes the risk of downtime and ensures a consistent and reliable hosting experience for users.

Q5: Can users choose the location of the data center for their hosting services?

A5: HostGator offers different hosting plans with servers in various locations. While shared hosting may not provide location choices, some plans, like VPS and dedicated hosting, often allow users to select their preferred data center location.

Q6: How does the location of a data center impact website performance?

A6: Generally, the closer a data center is to the target audience, the lower the network latency and the faster the website loads. HostGator's global network of data centers aims to provide optimal performance by reducing latency and ensuring a seamless user experience.

Q7: Are HostGator data centers environmentally friendly?

A7: HostGator is committed to environmental sustainability. While specific practices may vary, many data centers utilize energy-efficient technologies and renewable energy sources to minimize their environmental impact.

Q8: What backup measures are in place in HostGator data centers?

A8: HostGator regularly backs up data to prevent loss in case of unforeseen events. Users are also encouraged to perform their own backups for added data security.

Q9: How does HostGator handle power outages in its data centers?

A9: HostGator employs backup power systems, such as generators and uninterruptible power supply (UPS) units, to ensure continuous operation during power outages and to prevent disruptions to hosted websites.

Q10: Can users visit HostGator data centers?

A10: Generally, access to data centers is restricted for security reasons. HostGator prioritizes the safety and security of its infrastructure, and physical access is limited to authorized personnel only.
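The redundant hardware and power described in A4 and A9 can be quantified with a simple probability model. The sketch below assumes identical units that fail independently, each available a hypothetical 99% of the time. Real failures are often correlated (a shared power feed, for example), so treat the result as an optimistic upper bound.

```python
from math import comb


def k_of_n_availability(unit_availability: float, needed: int, installed: int) -> float:
    """Probability that at least `needed` of `installed` identical, independent units are up.

    Independence between unit failures is an assumption of this model.
    """
    if not 0 <= unit_availability <= 1:
        raise ValueError("unit_availability must be between 0 and 1")
    if not 0 < needed <= installed:
        raise ValueError("need 0 < needed <= installed")
    p, q = unit_availability, 1 - unit_availability
    # Sum the binomial probabilities of exactly k units being up, for k = needed..installed.
    return sum(comb(installed, k) * p**k * q ** (installed - k) for k in range(needed, installed + 1))


if __name__ == "__main__":
    print(k_of_n_availability(0.99, needed=2, installed=2))  # no spare
    print(k_of_n_availability(0.99, needed=2, installed=3))  # N+1: one spare
```

With two units required, installing exactly two gives 0.99 squared, about 0.9801. Adding one spare (N+1 = 3) raises availability to about 0.999702, because the system now fails only if two or more units are down at once.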

Here is a glossary of thirty lesser-known terms related to HostGator and data centers:

Redundancy: Duplication of critical components or functions to increase reliability.

Latency: The time it takes for data to travel from the source to the destination.

Load Balancing: Distributing incoming network traffic across multiple servers to ensure no single server is overwhelmed.

UPS (Uninterruptible Power Supply): A device that provides emergency power in case of a power outage.

HVAC: Heating, Ventilation, and Air Conditioning systems used to regulate temperature and humidity in data centers.

Dark Fiber: Unused or underutilized optical fibers in fiber optic communication.

DDoS (Distributed Denial of Service): A cyberattack where multiple compromised computers are used to flood a target system.

RAID (Redundant Array of Independent Disks): Storage technology that combines multiple disk drives into a logical unit for data redundancy and performance improvement.

N+1 Redundancy: A setup with one more component than the N needed to carry the load, so the system keeps running if any single component fails.

Fibre Channel: A high-speed network technology commonly used for storage area networks (SANs).

Colocation: Hosting servers and networking equipment in a third-party data center.

Latency Optimization: Strategies to minimize delays in data transmission.

UPS Load Factor: The ratio of the average load to the maximum load a UPS can handle.

Packet Loss: The percentage of data packets that fail to reach their destination.

Cross-Connect: A physical connection between two network devices.

Cabinet: A secure enclosure used to house servers and networking equipment.

Edge Computing: Processing data closer to the source of data generation rather than relying on a centralized cloud-based system.

Peering: The arrangement between Internet Service Providers (ISPs) to directly connect their networks.

Grey Space: The back-of-house area of a data center that houses supporting infrastructure such as power and cooling equipment.

InfiniBand: A high-speed interconnect used for data-intensive computing tasks.

Network Topology: The arrangement of different elements in a computer network.

PoE (Power over Ethernet): Technology that allows electrical power to be transmitted over Ethernet cables.

Thermal Imaging: Using infrared radiation to visualize temperature variations in equipment.

Zero-Day Vulnerability: A software vulnerability unknown to the vendor.

Elastic Load Balancing: Automatically distributing incoming application traffic across multiple targets.

Managed Hosting: A service where the hosting provider manages hardware, operating systems, and system software.

Bare Metal Server: A physical server dedicated to a single tenant without virtualization.

White Space: The floor area of a data center where IT equipment such as servers and racks is installed.

Bandwidth Throttling: Limiting the speed or total amount of data that can be transmitted.

Caching: Storing copies of files or data to serve future requests more quickly.
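The RAID entry above mentions redundancy. One common way RAID provides it (RAID 5, for example) is an XOR parity block computed across data blocks, so any single missing block can be rebuilt from the others. The sketch below shows only that parity idea on in-memory byte strings; real RAID 5 works on stripes across disks and rotates the parity position, and the sample data is hypothetical.

```python
from typing import List


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR of one or more equal-length blocks."""
    if not blocks or len({len(b) for b in blocks}) != 1:
        raise ValueError("need one or more blocks of equal length")
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def make_parity(data_blocks: List[bytes]) -> bytes:
    """Parity block: XOR of all data blocks."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks: List[bytes], parity: bytes) -> bytes:
    """XOR of the surviving data blocks and the parity equals the one lost block."""
    return xor_blocks(surviving_blocks + [parity])


if __name__ == "__main__":
    data = [b"HOST", b"GATR", b"DATA"]  # hypothetical contents of three data disks
    parity = make_parity(data)
    print(rebuild_missing([data[0], data[2]], parity))  # disk 2 lost -> b'GATR'
```

This works because XOR-ing a value with itself cancels it out, which is also why single parity can recover from only one failed disk at a time.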

#DataCenters#WebHosting#ReliableHosting#Infrastructure#Security#GreenHosting#WebsitePerformance#Technology#CustomerTestimonials

Photo

Amazon, Google, and Microsoft operate a number of public cloud data center regions across the US and the EU: the US has 28 cloud regions and the EU has 24. Public cloud is a vast set of shared online computing services, essential for modernizing businesses and driving economic growth.

by u/maps_us_eu

Text

Efficient Cooling Solutions: Exploring the Advantages of Industrial Chillers

In industrial processes, maintaining optimal temperatures is paramount for productivity and equipment longevity. This is where industrial chillers step in as game-changers. In our latest blog post, we explore efficient cooling solutions and the many advantages industrial chillers offer.

Industrial chillers are the unsung heroes behind the scenes, ensuring that various manufacturing processes, data centers, medical facilities, and more operate seamlessly. Their primary function is to remove excess heat from processes and equipment, maintaining a stable environment. This not only prevents overheating and potential damage but also enhances overall operational efficiency.

One of the standout benefits of industrial chillers is their adaptability to diverse industries. From plastics and pharmaceuticals to food and beverage, these systems can be tailored to meet specific cooling requirements. Their precise temperature control guarantees consistency in product quality, which is often a non-negotiable factor in many sectors.

Energy efficiency takes center stage in the current global scenario, and industrial chillers are stepping up to the challenge. Modern units are designed with eco-friendly refrigerants and advanced technologies that minimize energy consumption, subsequently reducing operating costs and environmental impact.

Moreover, the longevity of equipment is markedly increased when industrial chillers are in play. By curbing excess heat and maintaining steady operating conditions, wear and tear are significantly reduced, leading to extended equipment lifetimes and reduced downtime for maintenance.

In conclusion, industrial chillers offer a comprehensive package of benefits – from temperature control and energy efficiency to improved equipment durability. As industries continue to evolve, these efficient cooling solutions remain indispensable for seamless operations and heightened productivity.

#IndustrialChiller#Chiller#CoolingSolutions#EfficientCooling#TemperatureControl#IndustrialProcesses#EquipmentLongevity#EnergyEfficiency#ProductivityBoost#Manufacturing#DataCenters#OperationalEfficiency#EnvironmentalImpact#EquipmentMaintenance

Link

The American company Iron Mountain Datacenters has joined the Haarlem Prinsjesdag event. Iron Mountain has become a main partner, alongside co-initiator and main partner Industriekring Haarlem. The company operates data centers on several continents worldwide; within Europe it has data centers in Haarlem/Amsterdam, Frankfurt, London, and Madrid. For many years it has run a modern and growing data center in the Waarderpolder, at the heart of the world's largest network of digital ecosystems. With this partnership, the number of partners of "the Haarlem business event with an orange touch" has reached 20. The tenth edition takes place on Tuesday 19 September at the Koepel Haarlem. More information is available on the website https://www.haarlemseprinsjesdaglunch.nl.

Text

Data backup and recovery solutions play a critical role in safeguarding your information and ensuring business continuity. Here are seven key advantages of implementing a robust data backup and recovery strategy.

Text

What Faults Can Be Found Using a Visual Fault Locator Pen?

#fiber#cable#ftth#VFL#networking#Locator#optical#fibreoptique#datacenters#5Gnetwork#networkingcables#pen#holight#fiberoptic#fiberoptics#communication#datacenter

Text

In Dual-Socket Systems, Ampere’s 192-Core CPUs Stress the ARM64 Linux Kernel

In the realm of ARM-based server CPUs, the abundance of cores can present unforeseen challenges for Linux operating systems. Ampere, a prominent player in this space, has recently launched its AmpereOne data center CPUs, boasting an impressive 192 cores. However, this surplus of computing power has led to complications in Linux support, especially in systems employing two of Ampere’s 192-core chips (totaling a whopping 384 cores) within a single server.

The Core Conundrum

According to reports from Phoronix, the ARM64 Linux kernel currently struggles to support configurations exceeding 256 cores. In response, Ampere has proposed a patch to raise the Linux kernel’s core limit to 512. The proposed solution relies on the “CPUMASK_OFFSTACK” method, a mechanism that lets Linux go beyond the default 256-core limit. It allocates the bitmaps used for CPU masks dynamically from memory rather than on the stack, so the core limit can be raised without inflating the kernel image’s memory footprint.

Tackling Technicalities

Implementing the CPUMASK_OFFSTACK method is crucial, given that each core adds 8KB to the kernel image size. Ampere’s CPUs have the highest core count in the industry, surpassing even AMD’s latest Zen 4c EPYC CPUs, which cap at 128 cores. This unprecedented core count puts Ampere in uncharted territory, making it the first CPU manufacturer to grapple with the ARM64 Linux kernel’s 256-core threshold.

The Impact on Data Centers

While the core limit predicament does not affect systems equipped with a single 192-core AmpereOne chip, it poses a significant challenge for data center servers housing two of these powerhouse chips in a dual-socket configuration. Notably, SMT logical cores, or threads, also exceed the 256 figure on various systems, further compounding the complexity of the issue.
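The arithmetic behind the limit is simple: a dual-socket server with two 192-core chips exposes 2 × 192 = 384 CPUs, more than a mask sized for 256 can describe. The toy Python model below illustrates a fixed-width CPU bitmap, where bit i marks CPU i. It is not kernel code; the `CpuMask` class is hypothetical, and its size counts only the raw bits, not any allocation overhead.

```python
class CpuMask:
    """Toy fixed-width CPU bitmap (hypothetical model): bit i set means CPU i is in the mask."""

    def __init__(self, nr_cpus: int) -> None:
        self.nr_cpus = nr_cpus  # maximum number of CPUs the mask can describe
        self.bits = 0

    def set_cpu(self, cpu: int) -> None:
        if not 0 <= cpu < self.nr_cpus:
            raise ValueError(f"CPU {cpu} does not fit in a {self.nr_cpus}-CPU mask")
        self.bits |= 1 << cpu

    def weight(self) -> int:
        """Number of CPUs present in the mask (population count)."""
        return bin(self.bits).count("1")

    def size_bytes(self) -> int:
        """Bytes needed for the raw bitmap: one bit per possible CPU."""
        return (self.nr_cpus + 7) // 8


if __name__ == "__main__":
    total_cpus = 2 * 192  # dual-socket AmpereOne system described in the post
    for limit in (256, 512):
        mask = CpuMask(limit)
        try:
            for cpu in range(total_cpus):
                mask.set_cpu(cpu)
            print(limit, "ok:", mask.weight(), "CPUs,", mask.size_bytes(), "bytes per mask")
        except ValueError as err:
            print(limit, "fails:", err)
```

The 256-wide mask rejects CPU 256, while the 512-wide mask holds all 384 CPUs at 64 bytes per mask instead of 32. Every mask doubles in size when the limit doubles, which is why allocating masks from memory, as the CPUMASK_OFFSTACK approach in the post does, matters once the limit is raised.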

AmpereOne: A Revolutionary CPU Lineup

AmpereOne represents a paradigm shift in CPU design, featuring models with core counts ranging from 136 to an astounding 192 cores. Built on the ARMv8.6+ instruction set and leveraging TSMC’s cutting-edge 5nm node, these CPUs boast dual 128b Vector Units, 2MB of L2 cache per core, a 3 GHz clock speed, an eight-channel DDR5 memory controller, 128 PCIe Gen 5 lanes, and a TDP ranging from 200 to 350W. Tailored for high-performance data center workloads that can leverage substantial core counts, AmpereOne is at the forefront of innovation in the CPU landscape.

The Road Ahead

Despite Ampere’s proactive approach in submitting the patch, 512-core support might take some time. In 2021, a similar proposal sought to raise the ARM64 Linux CPU core limit to 512, but Linux maintainers rejected it because no CPU hardware with more than 256 cores was available at the time. Optimistically, 512-core support could arrive with the release of Linux kernel 6.8 in 2024.

A Glimmer of Hope

Note that the current Linux kernel already supports the CPUMASK_OFFSTACK method for raising CPU core count limits. It is now up to the Linux maintainers to decide whether to enable this feature by default, which could speed up the arrival of 512-core support.

In conclusion, Ampere’s 192-core CPUs have thrust the industry into uncharted territory, necessitating innovative solutions to overcome the limitations of current ARM64 Linux kernel support. As technology continues to advance, collaborations between hardware manufacturers and software developers become increasingly pivotal in ensuring seamless compatibility and optimal performance for the next generation of data center systems.

Read more on Govindhtech.com

#DualSocket#ARM64#Ampere’s192Core#CPUs#linuxkernel#AMD’s#Zen4c#EPYCCPUs#DataCenters#DDR5memory#TSMC’s#technews#technology#govindhtech
