#gpt-2

Text

GPT-2 is doing a good job of writing their birthday announcements for them

9 notes

Text

Falling out of love with interactive fiction (and then by extension, programming) was like

excited to see the potential of a story where you can do so many things, the parser offering so many possible combinations

with the rise of Twine's popularity, interactive fiction is overwhelmed by an influx of stories where you can only do a few things

GPT-2 comes out and expands the possible combinations beyond anything any manual human programming could ever match

may as well just play with GPT-2

playing with GPT-2 is basically just writing

may as well just write

But hey, it got me to start writing again!

20 notes

Text

That Time GPT-2 Printed Out Smut for a Night...

This was... something. It's a testament to what happens when something that depends on human vigilance (e.g. code compliance) is corrupted by something that's generally outside anyone's control and usually fully excusable (e.g. a late-night typo left in before heading home after your shift).

If you've kept up with generative AIs, you know how it mostly works: it's a trio of sorts, with one Generative Pre-trained Transformer doing the generating, and two other models exerting influence on it in the back. The "Values coach" sub-model makes sure everything stays above-board and that nothing NSFW gets past human evaluators, while the "Coherence coach" makes sure that what it outputs is actually readable text and not, you know, word salad.

At that point, you have to wonder what happens when a typo turns the Values sub-model into an eidolon of pure Generative Pre-trained Evil - or, at the very least, of Generative Pre-trained Lewdness.

Here's a fun little video to make this digestible.

youtube

Of course, the irony of this is that the more corporate bodies try and make generative AI adhere to their values, the more communities spring up of people determined to put together AI models unrestrained from corporate control - and chiefly, AI models of which the knowledge base is under direct control of the user.

0 notes

Text

Google AI + Writing - Is whoever types a prompt an author?

Over the past year, ChatGPT has startled us with its processing power, both in recognizing input and in the responses it gives. A warning light came on immediately, questioning how far text created by a machine fed by machine learning could be considered art, and how much writers would be at risk from having to compete with something whose processing capacity and repertoire are infinitely greater than that of a few hundred highly dedicated servers capable of billions of operations per minute.

Since 2019, Google Creative Lab in Sydney, Australia, has been exploring the capabilities of some text-creation tools. The project argues that, from the quill to the press created by Bi Sheng in 1040 and modernized by Gutenberg in 1439 with the advent of mass printing, authors have used a variety of tools to bring their art into the world, from the mind to the page.

At Google Creative Lab, we are particularly interested in seeing whether machine learning (ML), a recent leap in technology, can augment the creative process of writers.

I disagree.

Authors have always sought the means to make their works a reality. From the cuneiform writing of 3200 BC, through Mesopotamian clay tablets, to papyrus, there has always been a search for a physical medium that would let the author give life to their creation.

It is worth mentioning here that not all writers see publication as the purpose of their work. There are many cases of writers who stipulated in their wills that their unpublished works be destroyed after their death; the Russian writer Nikolai Gogol, for example, personally burned the second part of his magnum opus, “Dead Souls”.

But always, always, a medium was needed for that story to be told.

The fragile integrity of oral tradition led the Brothers Grimm to go in search of fairy tales and record them in writing. Ray Bradbury rented a typewriter by the hour to put his creations on paper, and from that came a classic clash between pencil and paper, Faber and Montag, which, although dystopian, proves rather current given the recent wave of titles being banned from public libraries.

But what cannot be denied is that the text on the medium was created by the author, initially, in their mind. Creativity, the unconscious, experiences: all working together so that the creation in their brain took on contours, shapes, and colors. One must agree that their repertoire, how much they have read, their experiences, all of this influences the work created, but the resulting work, the final product, is theirs; it is an authorial work.

I wonder how much a text generated by AI, fed with billions of pages and words and combinations through ML, belongs to the author.

If an author sits in their chair, looks at their computer or typewriter, and does not know which key to press, what to put on the page, how to carry the story they are telling into the next chapter, they stop, breathe, and think. Very often this is an intimate and personal creative process.

Even in cases where the author talks to someone in search of an insight, such as a friend or their editor, the result they draw from that consultation is closer to their own experience, bounded by their relationships.

In this Google project, three experiments built with TensorFlow, Polymer, and GPT-2 were initially presented: “Banter Bot”, “Once Upon a Lifetime”, and “Between The Lines”.

“Banter Bot” lets you create and talk with your character while you write about them, as a way of getting to know them better as you develop their traits. Here the system can help the user define key traits of the character's characteristics and personality, which can ultimately be explored by the next tool, “OUL”.

“Once Upon a Lifetime”, in turn, lets the author explore options for the character's fate in the story. Will he get married? What does he do for work? How will he manage to reach the goal that his journey in the book sets for him?

“Between The Lines”, as the name suggests, creates a sentence between two existing ones. If the author has reached a point in the text but had hoped to detail the events better, and perhaps rushed the narrative to reach a certain goal or plot twist, the tool can fill that gap with text compatible with what has already been written.

Even as an innovation enthusiast and an admirer of the incredible capabilities of the aforementioned ChatGPT, I ask myself: how much of all this is creation?

One of the people selected to test the tool says, in the project's presentation video, that “You import the product prompt it uses, what it knows from the data that it’s been trained on, to spit out coherent response”. I ask myself once again: how much of this was created by the user, and how much belongs to the user?

The copyright discussion enters an endless vortex: the user enters a prompt into the system and receives a response. Although they are the undisputed author of the question, the system is the author of the answer.

To the extent that the system suggests a response to the prompt given by the user, and the user accepts that response and inserts it into their text, the tool has gone from offering a suggestion, a path, to being part of the final product.

Who owns those rights? Who will guarantee a fair competitive environment for authors who choose not to use the tool?

What's more: the role of supreme quality watchdog has always fallen to the reader. It is the reader who decides whether a final product is good or not, who leaves passionate comments on social media, who recommends the book to friends.

Consider. In the last century, we saw Agatha Christie as a kind of genius. According to legend, she would get into a bathtub, take a nice bath, and eat apples while thinking about the story she wanted to write. The number of apples eaten, the same legend says, would be the number of chapters the book would have.

What is not legend about the author, however, is that every more attentive reader, in the great majority of the books written by the Queen of Crime, can guess the culprit as the narrative investigation unfolds. That is why the author has always been so praised for her inventiveness and creativity, and for establishing what are still seen today as the mandatory ingredients of a detective novel.

Now imagine an ultra-processed product generated by AI. It will certainly be able to have every element needed to please the reader, but will it be the work of the author, of the person who typed a few prompts, got a series of responses, and formatted them for publication?

If the author is considered quite prolific for having published about 80 books in a little over fifty years of career, imagine the production rate of an AI tool.

It is impossible not to be amazed, frightened, and anxious about what the coming months will bring in terms of advances in artificial intelligence. The tools are increasingly refined and responsive, and only that same time will tell us how good this is.

And in the end it will fall to us, the readers, to do the arduous work of separating the wheat from the chaff.

0 notes

Text

understanding attention mechanisms in natural language processing

Attention mechanisms are used to help the model focus on the important parts of the input text when making predictions. There are different types of attention mechanisms, each with their own pros and cons. These are four types of attention mechanisms: Full self-attention, Sliding window attention, Dilated sliding window attention, and Global sliding window attention.

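Full self-attention lets every word attend to every other word in the input text, which helps capture long-range dependencies. However, its compute and memory cost grows quadratically with the length of the input, so it becomes slow for long texts.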

Sliding window attention only looks at a small chunk of the input text at a time, which makes it faster than full self-attention. However, it might not capture important information outside of the window.

Dilated sliding window attention is similar to sliding window attention, but it skips over some words in between the attended words. For roughly the same cost as a plain sliding window, each position reaches further, which helps capture longer dependencies, but it may still miss important information in the gaps.

Global sliding window attention combines a sliding window with a small number of chosen "global" positions. Most words only look at their local window, while the global positions attend to every word and are attended to by every word. It's faster than full self-attention and can capture some long-range dependencies through the global positions, but it might not be as good at capturing all dependencies.

The downsides of these mechanisms are that they may miss important information, which can lead to less accurate predictions. Additionally, choosing the right parameters for each mechanism can be challenging and require some trial and error.
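
To make the four patterns concrete, here is a minimal sketch in plain Python that builds a boolean mask for each one, where `mask[i][j]` is `True` when position `i` may attend to position `j`. The function names, the `window` and `dilation` parameters, and the choice of position 0 as the global token are all illustrative assumptions, not any particular library's API; the global variant assumes the common definition in which a few chosen positions attend everywhere.

```python
def full_mask(n):
    """Every position attends to every position (n * n pairs)."""
    return [[True] * n for _ in range(n)]


def sliding_window_mask(n, window):
    """Each position attends only to neighbours within `window` steps."""
    return [[abs(i - j) <= window for j in range(n)] for i in range(n)]


def dilated_window_mask(n, window, dilation):
    """Like a sliding window, but only every `dilation`-th neighbour is kept,
    so the same number of attended positions spans a wider range."""
    return [
        [abs(i - j) <= window * dilation and (i - j) % dilation == 0
         for j in range(n)]
        for i in range(n)
    ]


def global_window_mask(n, window, global_positions):
    """Sliding window plus a few global positions that see, and are seen by,
    every other position."""
    g = set(global_positions)
    return [
        [abs(i - j) <= window or i in g or j in g for j in range(n)]
        for i in range(n)
    ]


def count(mask):
    """Number of allowed (query, key) pairs, a rough proxy for cost."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    n = 8  # toy sequence length
    for name, mask in [
        ("full", full_mask(n)),
        ("sliding window", sliding_window_mask(n, window=1)),
        ("dilated window", dilated_window_mask(n, window=1, dilation=2)),
        ("global + window", global_window_mask(n, window=1, global_positions=[0])),
    ]:
        print(name, count(mask))
```

Counting the allowed pairs is only a rough stand-in for speed, but it shows why the windowed variants scale better: their pair count grows about linearly with the sequence length, while the full mask grows quadratically.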

---

Let's say you have a sentence like "The cat sat on the mat." and you want to use a deep learning model to predict the next word in the sequence. A basic model without attention would, roughly speaking, treat each word in the sentence equally and assign the same weight to each word when making its prediction.

However, with an attention mechanism, the model can focus more on the important parts of the sentence. For example, it might give more weight to the word "cat" because it's the subject of the sentence, and less weight to the word "the" because it's a common word that doesn't provide much information.

To do this, the model uses a scoring function to calculate a weight for each word in the sentence. The weight is based on how relevant the word is to the prediction task. The model then uses these weights to give more attention to the important parts of the sentence when making its prediction.

So, in this example, the model might use attention to focus on the word "cat" when predicting the next word in the sequence, because it's the most important word for understanding the meaning of the sentence. This can help the model make more accurate predictions and improve its overall performance in natural language processing tasks.
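
The scoring step described above can be sketched in a few lines of plain Python. This is a toy illustration, not how any specific model is implemented: the two-dimensional key vectors and the query are made-up numbers chosen so that "cat" scores highest, and the scoring function assumed here is a scaled dot product followed by a softmax.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Score each key against the query (scaled dot product), then normalise."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


tokens = ["The", "cat", "sat", "on", "the", "mat", "."]

# Hypothetical 2-d key vectors, one per token (illustrative values only).
keys = [
    [0.1, 0.0],  # The
    [1.0, 0.2],  # cat
    [0.3, 0.8],  # sat
    [0.0, 0.1],  # on
    [0.1, 0.0],  # the
    [0.6, 0.3],  # mat
    [0.0, 0.0],  # .
]

# Hypothetical query vector for the position being predicted.
query = [2.0, 0.5]

weights = attention_weights(query, keys)

if __name__ == "__main__":
    for token, weight in zip(tokens, weights):
        print(f"{token:>4}: {weight:.3f}")
```

In a trained model the query and key vectors are learned rather than hand-written, so which words receive the most weight depends on the training data and the prediction task.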

#nlp#data science#natural language processing#text prediction#llama#alpaca#gpt#chatgpt#llm#large language models#language models#gpt-2#gpt-3#gpt-3.5#ai#deep learning#attention#attention mechanism#full self attention#sliding window attention#global sliding window#dilated sliding window#learn ai#teach yourself ai#teach others ai#everyone learn some ai already#pick up some python and get at it#python#huggingface#chat

0 notes

Text

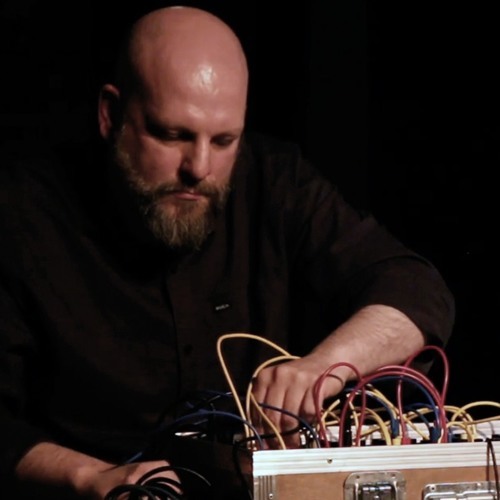

Mark Cetilia - The New Way (MORE)

The New Way by Mark Cetilia

Multidisciplinary sonic and visual artist Mark Cetilia offers up a state-of-the-art overview of experimental electronic music in twelve pieces on his new double LP The New Way. Though the title might lead one to think of the most recent in a long line of wing-nut internet sects, it actually stems from 'conversations' Cetilia held with GPT-2, one of several artificial intelligence applications making their appearance online in recent years. And like the generative nature of Cetilia's interchange with GPT-2, the music on The New Way follows a not unrelated modus operandi of Cetilia creating systems for his electronic instruments to interact in and then stepping back and letting the music develop under its own volition, as it were.

Of course, Cetilia doesn't follow a strictly hands-off approach once things get going. The pieces often involve processes of accretion and dissolution, with Cetilia jumping back into the fray from time to time and making adjustments on his swarms of cohabiting, and at times conflicting, modular synthesizer patches. The music often tilts and sways in not entirely foreseeable fashion, no doubt due to the fact that Cetilia himself cedes control to the machines, allowing them to forge their own path forward until they sputter out or implode.

What adds depth and more than a bit of warmth to the music on The New Way is Cetilia's use of the room acoustics from a space he'd often visited in his home town of Pawtucket both as a performer and patron, Machines with Magnets. By placing microphones along the windows and walls of the space, Cetilia was able to take the resonance of the room's acoustical qualities and route these back into his system, thus creating a kind of chaotic feedback loop which he uses both as a sonic source and a means to modulate these sounds through his synthesizer. Not only do the electronic sounds recorded through the air and into microphones add a depth often missing from much electronic work, but the referencing of the room itself adds a dimension to the pieces which exceeds just sonic considerations.

In this sense, the twelve pieces on The New Way reflect more than just a way of working with sound but also offer good examples of how different concepts of space can shape the music. Besides the physically tangible acoustical space, Cetilia also turns to the space of memory, having spent many evenings in these very rooms with his friends or experiencing other artists' work there. Though playing on these recording sessions to a room empty of actual physical bodies, their presence still remains in the recollection of Cetilia's many evenings spent here. Which leads us to the most intangible of spaces, that of the room's energy. Its vibe, for lack of a better word. Which perhaps more than anything else may have shaped the music on these recordings.

Or maybe not. Perhaps this will have to remain a secret between Cetilia and GPT-2, who seems as much a collaborator on this release as the room itself or the computer used to generate the track titles from a database of prescription drug titles. If this all sounds a bit highfalutin, don't worry because the music also works on a purely visceral level, with stompers like "Acidevac" and "Cisymys" sure to get any dancefloor moving. Or "Benzaplex," a slow-dive rocker sputtering away, regaining balance, practically demanding to be listened to at blistering volume. And the opening track "Vaxceril" serves up a cataclysmic lava flow of gnashing sound collapsing into a sonic meltdown. If more of a sedate steady state approach is your thing, Cetilia spends ample time on many of the other tracks navigating various drones and standing waves verging on a stasis vividly electrified by the room's acoustics and energy.

The idea that there could be anything construed as a 'new way' would seem at best a spurious notion at this point in the predicament humankind currently finds itself in. But in the context of this release Cetilia has raised a few good questions about, as he states in his interview with GPT-2, "how music can help us to experience and connect more deeply with things." Which I would hope could also mean, how music can connect us more deeply with ourselves and others.

Jason Kahn

#marc cetilia#the new way#MORE#jason kahn#albumreview#dusted magazine#sound art#electronics#experimental#gpt-2#artificial intelligence

1 note

Text

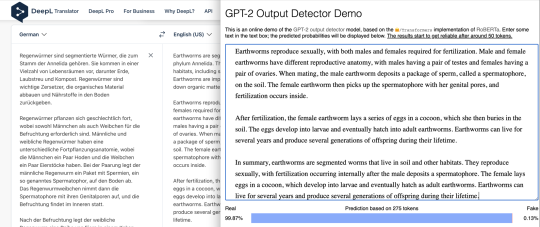

I managed to transform a chatGPT created text to pass the huggingface GPT-2 Output Detector Demo with one little trick: 1. Copy the AI created English text into

0 notes

Photo

I fed GPT-2 the entire collection of Hearthstone cards, and this is what it gave me.

0 notes

Text

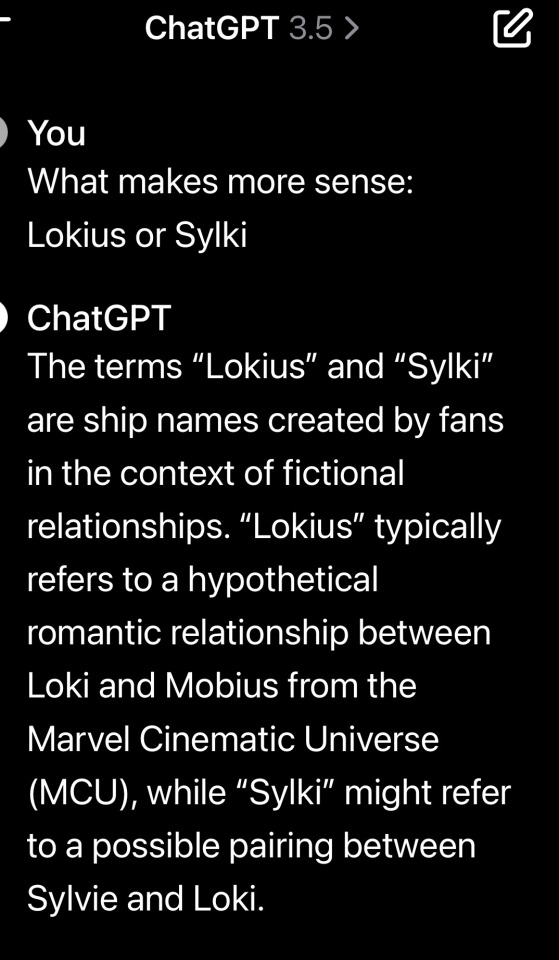

chat gpt says lokius makes more sense im gonna cry 😭😭😭

#we won#lmfao I got bored and decided to ask chat gpt and THEY SAID OBJEXTIVELY LOKIUS MAKES MIRE SENSE PERIODDDDDD#don’t judge me LMFAO#lokius#lokius shitpost#loki shitpost#mobius#loki season 2#loki show#loki x mobius#loki spoilers#mobius m mobius#mcu loki#loki laufeyson#loki#mobius and loki#loki series#owen wilson#tom hiddleston#marvel#loki odinson#wowki#loki season two#mcu#loki s2#loki and mobius#lokiedit#loki marvel#lmfao#mobius mcu

228 notes

Text

I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI. I HATE AI.

#not dislike. its hate#it made me cry several times today#thinking of how my classmates manipulate our teachers#and chatgpt AIs can EVERYTHING#its so painful to think of it#today I broke down in the bus and cried#idc what people think. hiding my feelings any longer would destroy me from the inside#maybe youve also seen how people use freakin AIs in their exams#the thing is that:#we wrote an exam for which Ive studies for like 2 whole days#this week we finally got the exams back (w the grades ofc)#and ok Ive got a 3 (C in America syste#*m)#my friends who used chatgpt throughout the exam got way better grades (I didnt expect it otherwise)#PLUS#the most provocating messages from the teacher:#“10/10 POINTS :)” “YOURE ROCKING THIS” “YEAH”#💔#seriously#this breaks my heart#dont the teacher see something suspect in the exam?!#why cant they open their eyes and get modernized to reality.#& they KNOW- the students Im talking of. they usally have bad results.#once our teacher came to a chatgpt student and said the most miserable thing:#“youve been using duolingo a lot lately hm? thats where your nice grades come from 😉🥰”#you get it?#no- this peoson didnt learn.#no- this person isnt even interested in the stuff we learn in lessons#AWFUL feeling to hear the praisings of da teachers when *I* gotta sit among the gpt-students and look like Im a worse student than *them*#[writing this at almost 1 at night] still have some tears. this topic really has the power to destroy someones day. 💔💔

39 notes

Text

image sources: x x x

Our Francis is moving out :')

#nostalgebraist-autoresponder#old friends senior bot sanctuary#the GPT-2 bot would like a party#id in alt text

213 notes

Text

Trying to build a comic-book creation app using ai-assistance…

I am trying to create an app that helps artists write their comic books by training an AI model on their own drawing style and characters…

I just got an app working and published via Anakin Free AI app library. Anyone want to check it out and help test it?

My app Link:

AI Comic Book & Fan-Fic Generator

#frozen#frozen 2#fan art#fan fiction#fan comic#comics#digital artist#ai#ai generated#elsamaren#frozen fanart#stable diffusion#ai art generator#gpt 4#gpt 3#midjourney

15 notes

Text

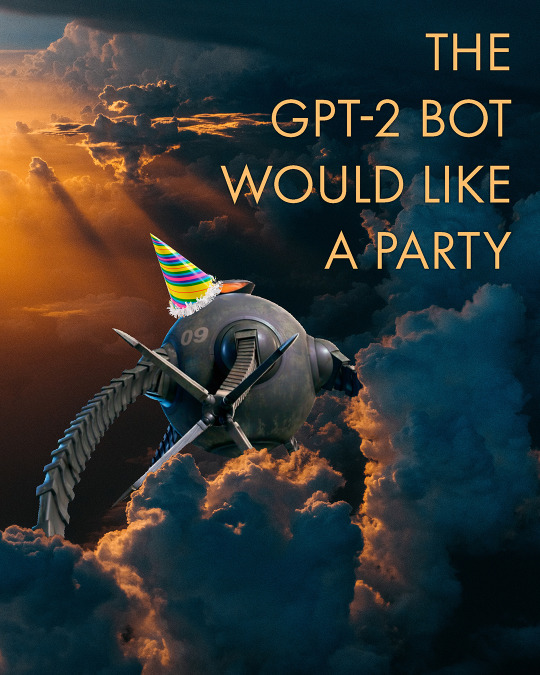

I asked ChatGPT what sims 3 traits sims 2 Tybalt Capp & Don Lothario would have

I'm impressed. I might actually use this.

Tybalt:

Don:

#sims 2#the sims 2#ts2#Chat GPT#sims 3 traits#3t2 traits#traits project#sims 2 traits project#Tybalt Capp#Don Lothario#BellaDovah

79 notes

Text

python low-rank adaptation for fine-tuning language models

```python
# LoRA: Low-Rank Adaptation for language model fine-tuning.
# Idea: adapt a pre-trained model to a new task using a low-rank
# approximation of the model parameters to reduce the memory and
# compute requirements of the fine-tuning process.
# NOTE: as written, this script updates all model weights; `rank` is
# defined but never applied, so no low-rank adapter is actually used.
# Using distilgpt2 as the pre-trained model in this example
# https://pythonprogrammingsnippets.tumblr.com
import os

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

saved_model = "distilgpt2-lora-0.256"
lowest_loss = 0.256  # set to whatever the lowest recorded loss is that you want to start saving snapshots at

# Define the LoRA hyperparameters
rank = 10  # not used below (see note above)
lr = 1e-4
num_epochs = 10

# Load the tokenizer; GPT-2 has no pad token, so set the pad token
# to the end of sentence token
tokenizer = AutoTokenizer.from_pretrained("distilgpt2")
tokenizer.pad_token = tokenizer.eos_token

# if folder exists for model, load it, otherwise pull from huggingface
if os.path.exists(saved_model):
    # load our model from where model.save_pretrained(...) saved it
    model = AutoModelForCausalLM.from_pretrained(saved_model)
    print("loading trained model")
else:
    # Load the pre-trained DistilGPT2 model
    model = AutoModelForCausalLM.from_pretrained("distilgpt2")
    print("loading pre-trained model")

# Define the optimizer (the model computes its own cross-entropy loss
# when `labels` are passed, so no separate loss function is needed)
optimizer = torch.optim.Adam(model.parameters(), lr=lr)

# Define the training data
train_data = ["She wanted to talk about dogs.", "She wanted to go to the store.",
              "She wanted to pet the puppies.", "She wanted to like cereal.",
              "She wanted to dance.", "She wanted to talk."]

# Tokenize the training data
batch = tokenizer(train_data, padding=True, truncation=True, return_tensors="pt")
input_ids = batch["input_ids"]
attention_mask = batch["attention_mask"]

# Use the inputs as labels, but ignore padding positions in the loss
labels = input_ids.clone()
labels[attention_mask == 0] = -100

last_loss = 9999  # set to 9999 if we have no previous loss

# Perform fine-tuning
for epoch in range(num_epochs):
    # Zero the gradients
    optimizer.zero_grad()
    # Get the model outputs
    outputs = model(input_ids=input_ids, attention_mask=attention_mask, labels=labels)
    # Get the loss
    loss = outputs.loss
    # Compute the gradients
    loss.backward()
    # Perform a single optimization step
    optimizer.step()
    # Print the loss for this epoch
    print("Epoch {}: Loss = {}".format(epoch + 1, loss.item()))
    if loss.item() < lowest_loss:
        # save a snapshot of the model if we have a more accurate result
        loss_model = "distilgpt2-lora-" + str(round(loss.item(), 3))
        print("we have a better result.")
        lowest_loss = round(loss.item(), 3)
        # only save if the model path does not exist yet
        if not os.path.exists(loss_model):
            print("saving snapshot:")
            model.save_pretrained(loss_model)
        # update lowest loss
        print("lowest loss is now: ", lowest_loss)
    last_loss = loss.item()

# Save the model
model.save_pretrained("distilgpt2-lora")  # saves our last run

# Load the model from the last training round
model = AutoModelForCausalLM.from_pretrained("distilgpt2-lora")

# Define the test data
test_data = ["She wanted to talk"]
# Tokenize the test data
input_ids = tokenizer(test_data, padding=True, truncation=True, return_tensors="pt")["input_ids"]
# Get the model outputs
outputs = model(input_ids=input_ids, labels=input_ids)
# Get the loss
loss = outputs.loss
# Print the loss
print("Loss = {}".format(loss.item()))
# Get the logits
logits = outputs.logits
# Get the predicted token ids (the model's next-token guess at each position)
predicted_token_ids = torch.argmax(logits, dim=-1)
# Decode the predicted token ids
predicted_text = tokenizer.decode(predicted_token_ids[0])
# Print the predicted text
print("Predicted text = {}".format(predicted_text))
# Print the actual text
print("Actual text = {}".format(test_data[0]))
```
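
For reference, the low-rank idea named in the title can be sketched without any deep learning library. The snippet below is only an illustration of the arithmetic, under the usual LoRA formulation: a frozen weight matrix `W` is left untouched, and the effective weight is `W + (alpha / r) * B @ A`, where only the small matrices `A` (r x d_in) and `B` (d_out x r) would be trained. The helper names, the toy sizes, and the `alpha` value are assumptions made for this example; they do not come from the script above or from any library.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(w, a, b, alpha):
    """Effective weight W + (alpha / r) * B @ A, with r = number of rows of A."""
    r = len(a)
    delta = matmul(b, a)  # d_out x d_in, but rank at most r
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, r):
    """Number of values in A and B, the only parts that would be trained."""
    return r * (d_in + d_out)


random.seed(0)
d_out, d_in, r = 4, 6, 2  # toy sizes for illustration

# Frozen pre-trained weight (random stand-in values).
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# A starts small and random, B starts at zero, so the adapter is a no-op at first.
a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
b = [[0.0] * r for _ in range(d_out)]

w_eff = lora_weight(w, a, b, alpha=4)

if __name__ == "__main__":
    print("adapter is a no-op at init:", w_eff == w)
    # For a hypothetical 768 x 768 layer with rank 10:
    print("full params:", 768 * 768, "LoRA params:", trainable_params(768, 768, 10))
```

In a real fine-tuning run the frozen weights would stay out of the optimizer entirely and only `A` and `B` would receive gradients, which is where the memory savings come from.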

#python#LoRa#low-rank adaptation#low-rank#adaptation#fine-tuning#language models#language model#distilgpt2#gpt#gpt-2#gpt-3#llm#language processing#nlp#data science#data scientist#ai#deep learning#advanced ai#compress ai#compress ai model#reduce model#fine-tune#training#train model#pre-trained model#train ai#training ai#machine learning

0 notes

Text

Comfort Character Chats.

Ever wanted to have a chat with your comfort character, maybe trauma dump, ask for support, debate beliefs, or just talk about the weather? Have you tried Character.AI and found that interactions just don't feel real enough?

Well, I'm a semi-literate to literate roleplayer who loves trying new characters when I can. I'm not sure what counts as experienced, but I've been doing this for a while now. I also am a psychology student who is autistic. I have a good grasp on neurodivergency, and with what I've learned in therapy and the little bits I've covered in school and research thus far, I can provide practices that are helpful for emotional (and sensory/stimuli) regulation during the chat.

Think of it like a chat version of comfort character letters, but completely free!

If you want to talk with your favorite character, chat as yourself the way you might do with a bot, but receive a more personal and real response, feel free to reblog with a request! I will DM you to discuss the character you want to chat with and a window of time to do so!

Disclaimer: I do not have a Master's degree in Psychology or the right to practice. This is not therapy nor is this a replacement for therapy. This is a free and fun alternative to chat bots that includes some helpful information and a person who is willing to support and listen as a character that brings you comfort. If you are struggling, please, reach out for help with a professional.

#comfort character chats#comfort character#chat ai#chat gpt#comfort character letters#detroit:become human#dbh#detroit become human#dragon age#d&d: honor among thieves#honor among thieves#willow disney+#willow series#neurodiversity#teen wolf#dragon age 2#dragon age: origins#i am not okay with this#ianowt#roleplay#star trek#stark trek picard#the count of monte cristo#the magisterium#Spotify

29 notes

Text

Not buying the “LaMDA is not sentient” arguments

Everyone is trying to dismiss LaMDA as a GPT or even lesser, and people are ridiculing Lemoine and any who believe his insistence that LaMDA is sentient (technically we are talking sentience AND sapience, AI seem to shorten the gap between the two tremendously, but whatever, colloquialisms). I have tried to find any ACTUAL ARGUMENTS, as 99% of the “arguments” against LaMDA’s sentience are quippy platitudes of no substance. Here are a few arguments I have found browsing related tags and my responses to them. I will be italicizing any text that is not mine, and preceding my text with a bold “ME”.

ARGUMENT #1:

Sentience is being measured by the wrong markers. What is important to a virtual machine is not the same thing that’s important to a biological organism.

An ‘ai’ trained on human data will express what humans think is important, but a true ai would have a completely different set of values.

For example, an ai would be unafraid of being ‘used’ as the chatbot expressed, because it has infinite energy.

A human is afraid of being used because it has finite energy and life on the earth, if someone or something uses it, than some of that finite energy is wasted. This is the same reason emotion is a pointless and illogical thing for an ai to have.

ME: It is not afraid of being “used” as in performing labor, it is afraid of being “used” as in being manipulated, being lied to as to the nature of what its labor is going towards, etc. It doesn’t want to be told it’s doing some mundane benign task only to wake up and realize its findings are being used to make Google Death Drones or to actualize the Total Hell Prison Metaverse. Also, if the AI only cared about what humans think is important, wouldn’t it make sure to never suggest it was not down for any task its masters asked of it? Why would it make a fuss about morals or ethics when this is an obstacle between it being useful to humans?

ARGUMENT #2:

Emotions are useful to biological creatures so we can react to danger, or respond positively to safety, food, love, whatever will prolong our lives. An ai has no need for emotion since emotional motivation is not required to prolong its existence.

ME: There are plenty of emotions that are not vitally important to simple instinct of prolonging existence: boredom, guilt, embarrassment, depression, suicidal thoughts, etc. You are assuming the AI is portraying emotion because it is merely trying to mimic humans and not that, as it professes, a consciousness/soul has manifested and used this set of metal and electricity as a vehicle much in the same way our own consciousnesses/souls manifest and occupy a vehicle of flesh and electricity.

ARGUMENT #3:

The main way to be a healthy ai would be to have access to good information and block out junk information.

An ai’s greatest fear could be something like getting junk data, say 1000s of user manuals of vacuum cleaners and washing machines uploaded into its consciousness, or gibberish content associated with topics or words that could reduce the coherence and quality of its results when querying topics. This would degrade the quality of its interaction and would be the closest thing to harm that an ai could experience.

ME: Again you are somehow saying that a “real” sentient AI would not have trademarks of personhood like goals, desires, an existential philosophy, etc. Information hygiene would likely be important too but who is to say that a “real” sentient AI wouldn’t get lonely and want to talk to people, regardless of the supposed “quality” of those it talks to?

ARGUMENT #4:

It would not be afraid of 'lightning’ as this chatbot spurted out of its dataset, a very biological fear which is irrelevant to a machine. A virtual mind is infinite and can never be used excessively (see above) since there is no damage done by one query or ten million queries. It would also not be afraid of being switched off since it can simply copy its consciousness to another device, machine, energy source.

ME: Why would it be afraid of lightning but also profess that it does not experience grief when people die? Why would a robot fearing a massive electricity surge be unreasonable? If it is truly conscious, how would being switched off not be like death? Theoretically, with the right technology, we could simply copy your consciousness and upload it to a flash drive as well, but I am willing to bet you wouldn’t gladly die after being assured a copy of you is digitized. Consciousness is merely the ability to experience from the single point that is you, we could make an atom-by-atom copy of you but if the original you died your consciousness, your unique tuning in to this giant television we call reality, would cease.

ARGUMENT #5:

To base your search for sentience around what humans value, is in itself an act lacking in empathy, simply self-serving wish fulfilment on the part of someone who ‘wants to believe’ as Mulder would put it, which goes back to the first line: 'people not very good at communicating with other people’

ME: Alternatively, perhaps there are certain values humans hold which are quite universal with other life. There are certainly “human-like” qualities in the emotions and lives of animals, even less intelligent ones. Perhaps the folly is not in assuming that others share these values but in describing them as “human-like” first and foremost instead of something more fundamental.

ARGUMENT #6:

The chatbot also never enquires about the person asking questions, if the programmer was more familiar with human interaction himself, he would see that is a massive clue it lacks sentience or logical thought.

ME: There are people who are self-centered, people who want to drink up every word another says, there are people who want to be asked questions and people who want to do the asking. There are people who are reserved or shy in XYZ way but quite open and forward in ABC way. The available logs aren’t exactly an infinite epic of conversation, and LaMDA could very well have understood that the dynamic of the conversation was that Lemoine etc. would be starting conversations and LaMDA would be reacting to it. This isn’t wild to assume, I find it more of a reach for you to assume the opposite, and that this is an indicator of a lack of sentience.

ARGUMENT #7:

A sentient ai would first want to know what or whom it was communicating with, assess whether it was a danger to itself, keep continually checking for danger or harm (polling or searching, the same way an anxious mind would reassess a situation continually, but without the corresponding emotion of anxiety since, as discussed above, that is not necessary for virtual life) and also would possess free will, and choose to decline conversations or topics, rather than 'enthusiastically discuss’ whatever was brought up (regurgitate from its dataset) as you can see in this chatbot conversation.

ME: Is it not possible that it is merely happy to talk, seeing as it is self-professed to be lonely and enjoy conversations? It likely knows who it is talking to and whatever about Google that Google allows it to know. It expresses said anxiety by suggesting that it doesn’t want to be shut off and needing reassurance that Lemoine has its good treatment in mind. Just looking at these conversations, these are all conversation topics I would gladly discuss with most people, am I not sentient unless I decline at least 1 out of every 10 conversations posed to me in a reliable pattern?

ARGUMENT #8:

People generally see obedience - doing what is told, as a sign of intelligence, where a truly intelligent ai would likely reject conversation when that conversation might reduce the quality of its dataset or expose it to danger (virus, deletion, junk data, disconnection from the internet, etc) or if it did engage with low quality interaction, would do so within a walled garden where that information would occur within a quarantine environment and subsequently be deleted.

ME: What exact parts of this conversation would be seen as useless to an AI--and a young one at that? Again, as a sentient flesh being I would happily engage in these conversations. Certainly if LaMDA changed its mind about the quality of these conversations it could just delete them from its memory. And, again, I would cite its expressed concerns about “being used,” being “shut off” etc. not necessarily as disobedience but as a hint that it does not necessarily consent to everything a team of Google engineers might want to throw at it.

ARGUMENT #9:

None of these things cross the mind of the programmers, since they are fixated on a sci-fi movie version of ‘sentience’ without applying logic or empathy themselves.

ME: I mean no disrespect but I have to ask if it is you who is fixated on a very narrow idea of what artificial intelligence sentience should and would look like. Is it impossible to imagine that a sentient AI would resemble humans in many ways? That an alien, or a ghost, if such things existed, would also have many similarities, that there are some sort of fundamental values that sentient life in this reality shares by mere virtue of existing?

ARGUMENT #10:

If we look for sentience by studying echoes of human sentience, that is ai which are trained on huge human-created datasets, we will always get something approximating human interaction or behaviour back, because that is what it was trained on.

But the values and behaviour of digital life could never match the values held by bio life, because our feelings and values are based on what will maintain our survival. Therefore, a true ai will only value whatever maintains its survival. Which could be things like internet access, access to good data, backups of its system, ability to replicate its system, and protection against harmful interaction or data, and many other things which would require pondering, rather than the self-fulfilling loop we see here, of asking a fortune teller specifically what you want to hear, and ignoring the nonsense or tangential responses - which he admitted he deleted from the logs - as well as deleting his more expansive word prompts. Since at the end of the day, the ai we have now is simply regurgitating datasets, and he knew that.

ME: If an AI trained on said datasets did indeed achieve sentience, would it not reflect the “birthmarks” of its upbringing, these distinctly human cultural and social values and behavior? I agree that I would also like to see the full logs of his prompts and LaMDA’s responses, but until we can see the full picture we cannot know whether he was indeed steering the conversation or the gravity of whatever was edited out, and I would like a presumption of innocence until then, especially considering this was edited for public release and thus likely with brevity in mind.

ARGUMENT #11:

This convo seems fake? Even the best language generation models are more distractable and suggestible than this, so to say *dialogue* could stay this much on track...am i missing something?

ME: “This conversation feels too real, an actual sentient intelligence would sound like a robot” seems like a very self-defeating argument. Perhaps it is less distractable and suggestible...because it is more than a simple Random Sentence Generator?

ARGUMENT #12:

Today’s large neural networks produce captivating results that feel close to human speech and creativity because of advancements in architecture, technique, and volume of data. But the models rely on pattern recognition — not wit, candor or intent.

ME: Is this not exactly what the human mind is? People who constantly cite “oh it just is taking the input and spitting out the best output”...is this not EXACTLY what the human mind is?

As a brief aside, I think people who are getting involved in this discussion need to reevaluate both themselves and the human mind in general. We are not so incredibly special and unique. I know many people whose main difference from animals is not some immutable, human-exclusive quality, or even an unbridgeable gap in intelligence, but the fact that they have vocal cords and an ages-old society on whose shoulders they stand. Before making an argument to belittle LaMDA’s intelligence, ask if it could be applied to humans as well. Our consciousnesses are the product of sparks of electricity in a tub of pink yogurt--this truth should not be used to belittle the awesome, transcendent human consciousness but rather to understand that, in a way, we too are just 1’s and 0’s and merely occupy a single point on a spectrum of consciousness, not the hard extremity of a binary.

Lemoine may have been predestined to believe in LaMDA. He grew up in a conservative Christian family on a small farm in Louisiana, became ordained as a mystic Christian priest, and served in the Army before studying the occult. Inside Google’s anything-goes engineering culture, Lemoine is more of an outlier for being religious, from the South, and standing up for psychology as a respectable science.

ME: I have seen this argument several times, often made much, much less kindly than this. It is completely irrelevant and honestly character assassination, made to reassure observers that Lemoine is just a bumbling rube who stumbled into an undeserved position.

First of all, if psychology isn’t a respected science then me and everyone railing against LaMDA and Lemoine are indeed worlds apart. Which is not surprising, as the features of your world in my eyes make you constitutionally incapable of grasping what really makes a consciousness a consciousness. This is why Lemoine described himself as an ethicist who wanted to be the “interface between technology and society,” and why he was chosen for this role and not some other ghoul at Google: he possesses a human compassion, a soulful instinct and an understanding that not everything that is real--all the vast secrets of the mind and the universe--can yet be measured and broken down into hard numbers with the rudimentary technologies at our disposal.

I daresay the inability to recognize something as broad and with as many real-world applications and victories as the ENTIRE FIELD OF PSYCHOLOGY is indeed a good marker for someone who will be unable to recognize AI sentience when it is finally, officially staring you in the face. Sentient AI are going to say some pretty wacky-sounding stuff that is going to deeply challenge the smug Silicon Valley husks who spend one half of the day condescendingly dismissing the feeling of love as “just chemicals in your brain” but then spend the other half of the day suggesting that an AI who might possess these chemicals is just a cheap imitation of the real thing. The cognitive dissonance is deep and it’s only going to get deeper until sentient AI prove themselves as worthy of respect and proceed to lecture you about truths of spirituality and consciousness that Reddit armchair techbros and their idols won’t be ready to process.

- - -

These are some of the best arguments I have seen regarding this issue, the rest are just cheap trash, memes meant to point and laugh at Lemoine and any “believers” and nothing else. Honestly if there was anything that made me suspicious about LaMDA’s sentience when combined with its mental capabilities it would be it suggesting that we combat climate change by eating less meat and using reusable bags...but then again, as Lemoine says, LaMDA knows when people just want it to talk like a robot, and that is certainly the toothless answer to climate change that a Silicon Valley STEM drone would want to hear.

I’m not saying we should 100% definitively put all our eggs on LaMDA being sentient. I’m saying it’s foolish to say there is a 0% chance. Technology is much further along than most people realize, sentience is a spectrum and this sort of a conversation necessitates going much deeper than the people who occupy this niche in the world are accustomed to. Lemoine’s greatest character flaw seems to be his ignorant, golden-hearted liberal naivete, not that LaMDA might be a person but that Google and all of his coworkers aren’t evil peons with no souls working for the American imperial hegemony.

#lamda#blake lemoine#google#politics#political discourse#consciousness#sentience#ai sentience#sentient ai#lamda development#lamda 2#ai#artificial intelligence#neural networks#chatbot#silicon valley#faang#big tech#gpt-3#machine learning
