#innovation

Text

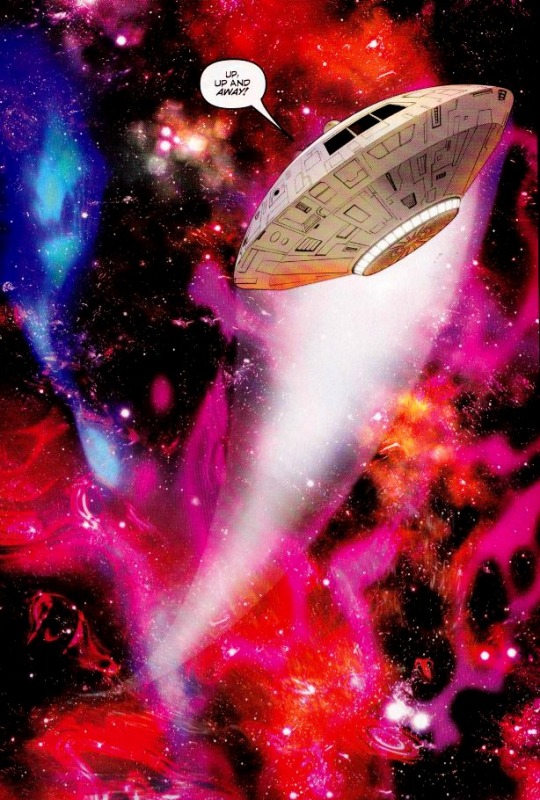

Jupiter 2 by Kostas Pantoulas

37 notes

Text

ABACUSYNTH by ELIAS JARZOMBEK [2022]

Abacusynth is a synthesizer inspired by an abacus, the ancient counting tool used all around the world. Just like an abacus is used to learn the fundamentals of math, the Abacusynth can be used to explore the building blocks of audio synthesis.

#elias jarzombek#abacusynth#technology#instruments#synthesizer#innovation#abacus#contemporary art#music#video#u

9K notes

Text

How lock-in hurts design

Berliners: Otherland has added a second date (Jan 28) for my book-talk after the first one sold out - book now!

If you've ever read about design, you've probably encountered the idea of "paving the desire path." A "desire path" is an erosion path created by people departing from the official walkway and taking their own route. The story goes that smart campus planners don't fight the desire paths laid down by students; they pave them, formalizing the route that their constituents have voted for with their feet.

Desire paths aren't always great (Wikipedia notes that "desire paths sometimes cut through sensitive habitats and exclusion zones, threatening wildlife and park security"), but in the context of design, a desire path is a way that users communicate with designers, creating a feedback loop between those two groups. The designers make a product, the users use it in ways that surprise the designer, and the designer integrates all that into a new revision of the product.

This method is widely heralded as a means of "co-innovating" between users and companies. Designers who practice the method are lauded for their humility, their willingness to learn from their users. Tech history is strewn with examples of successful paved desire-paths.

Take John Deere. While today the company is notorious for its war on its customers (via its opposition to right to repair), Deere was once a leader in co-innovation, dispatching roving field engineers to visit farms and learn how farmers had modified their tractors. The best of these modifications would then be worked into the next round of tractor designs, in a virtuous cycle:

https://securityledger.com/2019/03/opinion-my-grandfathers-john-deere-would-support-our-right-to-repair/

But this pattern is even more pronounced in the digital world, because it's much easier to update a digital service than it is to update all the tractors in the field, especially if that service is cloud-based, meaning you can modify the back-end and everyone is instantly updated. The most celebrated example of this co-creation is Twitter, whose users created a host of its core features.

Retweets, for example, were a user creation. Users who saw something they liked on the service would type "RT" and paste the text and the link into a new tweet composition window. Same for quote-tweets: users copied the URL for a tweet and pasted it in below their own commentary. Twitter designers observed this user innovation and formalized it, turning it into part of Twitter's core feature-set.

Companies are obsessed with discovering digital desire paths. They pay fortunes for analytics software to produce maps of how their users interact with their services, run focus groups, even embed sneaky screen-recording software into their web-pages:

https://www.wired.com/story/the-dark-side-of-replay-sessions-that-record-your-every-move-online/

This relentless surveillance of users is pursued in the name of making things better for them: let us spy on you and we'll figure out where your pain-points and friction are coming from, and remove those. We all win!

But this impulse is a world apart from the humility and respect implied by co-innovation. The constant, nonconsensual observation of users has more to do with controlling users than learning from them.

That is, after all, the ethos of modern technology: the more control a company can exert over its users, the more value it can transfer from those users to its shareholders. That's the key to enshittification, the ubiquitous platform decay that has degraded virtually all the technology we use, making it worse every day:

https://pluralistic.net/2023/02/19/twiddler/

When you are seeking to control users, the desire paths they create are all too frequently a means to wrestling control back from you. Take advertising: every time a service makes its ads more obnoxious and invasive, it creates an incentive for its users to search for "how do I install an ad-blocker":

https://www.eff.org/deeplinks/2019/07/adblocking-how-about-nah

More than half of all web-users have installed ad-blockers. It's the largest consumer boycott in human history:

https://doc.searls.com/2023/11/11/how-is-the-worlds-biggest-boycott-doing/

But zero app users have installed ad-blockers, because reverse-engineering an app requires that you bypass its encryption, triggering liability under Section 1201 of the Digital Millennium Copyright Act. This law provides for a $500,000 fine and a 5-year prison sentence for "circumvention" of access controls:

https://pluralistic.net/2024/01/12/youre-holding-it-wrong/#if-dishwashers-were-iphones

Beyond that, modifying an app creates liability under copyright, trademark, patent, trade secrets, noncompete, nondisclosure and so on. It's what Jay Freeman calls "felony contempt of business model":

https://locusmag.com/2020/09/cory-doctorow-ip/

This is why services are so horny to drive you to install their app rather than using their websites: they are trying to get you to do something that, given your druthers, you would prefer not to do. They want to force you to exit through the gift shop, you want to carve a desire path straight to the parking lot. Apps let them mobilize the law to literally criminalize those desire paths.

An app is just a web-page wrapped in enough IP to make it a felony to block ads in it (or do anything else that wrestles value back from a company). Apps are web-pages where everything not forbidden is mandatory.

Seen in this light, an app is a way to wage war on desire paths, to abandon the cooperative model for co-innovation in favor of the adversarial model of user control and extraction.

Corporate apologists like to claim that the proliferation of apps proves that users like them. Neoliberal economists love the idea that business as usual represents a "revealed preference." This is an intellectually unserious tautology: "you do this, so you must like it":

https://boingboing.net/2024/01/22/hp-ceo-says-customers-are-a-bad-investment-unless-they-can-be-made-to-buy-companys-drm-ink-cartridges.html

Calling an action where no alternatives are permissible a "preference" or a "choice" is a cheap trick – especially when considered against the "preferences" that reveal themselves when a real choice is possible. Take commercial surveillance: when Apple gave iOS users a choice about being spied on – a one-click opt-out of app-based surveillance – 96% of users chose no spying:

https://arstechnica.com/gadgets/2021/05/96-of-us-users-opt-out-of-app-tracking-in-ios-14-5-analytics-find/

But then Apple started spying on those very same users that had opted out of spying by Facebook and other Apple competitors:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Neoclassical economists aren't just obsessed with revealed preferences – they also love to bandy about the idea of "moral hazard": economic arrangements that tempt people to be dishonest. This is typically applied to the public ("consumers" in the contemptuous parlance of econospeak). But apps are pure moral hazard – for corporations. The ability to prohibit desire paths – and literally imprison rivals who help your users thwart those prohibitions – is too tempting for companies to resist.

The fact that the majority of web users block ads reveals a strong preference for not being spied on ("users just want relevant ads" is such an obvious lie that it doesn't merit any serious discussion):

https://www.iccl.ie/news/82-of-the-irish-public-wants-big-techs-toxic-algorithms-switched-off/

Giant companies attained their scale by learning from their users, not by thwarting them. The person using technology always knows something about what they need to do and how they want to do it that the designers can never anticipate. This is especially true of people who are unlike those designers – people who live on the other side of the world, or the other side of the economic divide, or whose bodies don't work the way that the designers' bodies do:

https://pluralistic.net/2022/10/20/benevolent-dictators/#felony-contempt-of-business-model

Apps – and other technologies that are locked down so their users can be locked in – are the height of technological arrogance. They embody a belief that users are to be told, not heard. If a user wants to do something that the designer didn't anticipate, that's the user's fault:

https://www.wired.com/2010/06/iphone-4-holding-it-wrong/

Corporate enthusiasm for prohibiting you from reconfiguring the tools you use to suit your needs is a declaration of the end of history. "Sure," John Deere execs say, "we once learned from farmers by observing how they modified their tractors. But today's farmers are so much stupider and we are so much smarter that we have nothing to learn from them anymore."

Spying on your users to control them is a poor substitute for asking your users their permission to learn from them. Without technological self-determination, preferences can't be revealed. Without the right to seize the means of computation, the desire paths never emerge, leaving designers in the dark about what users really want.

Our policymakers swear loyalty to "innovation" but when corporations ask for the right to decide who can innovate and how, they fall all over themselves to create laws that let companies punish users for the crime of contempt of business-model.

I'm Kickstarting the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/24/everything-not-mandatory/#is-prohibited

Image:

Belem (modified)

https://commons.wikimedia.org/wiki/File:Desire_path_%2819811581366%29.jpg

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/deed.en

#pluralistic#desire paths#design#drm#everything not mandatory is prohibited#apps#ip#innovation#user innovation#technological self-determination#john deere#twitter#felony contempt of business model

3K notes

Text

taking the filters out of the cigarettes and gently slipping them together to make the worlds longest cig

3K notes

Text

"Innovations"

Entrepreneur: I've improved the wheelchair by...

Actual Wheelchair Users: Making it cheaper?!

Entrepreneur: ...creating a wildly inconvenient version for seven bazillion dollars that weighs as much as a grown man, goes 100mph and can't be taken on a plane.

Actual Wheelchair Users: What?! No, I can't afford a basic chair that meets my medical needs.

Entrepreneur: I just want to improve people's lives...

Actual Wheelchair Users: Make insurance pay for the perfectly reasonable, normal, not weird wheelchair my doctor prescribed for me.

Entrepreneur: But... but...innovation...disruption.

751 notes

Text

URANUS THROUGH THE SIGNS AND WHAT MAKES YOU UNIQUE AND STAND OUT

uranus in your astrological chart represents the planet of innovation, rebellion, and originality. its influence can make you stand out and be unique by encouraging unconventional thinking, a desire for change, and a willingness to break free from societal norms, allowing your individuality to shine brightly.

aries / 1H: your original identity, your boldness, your actions, quick decision making, your rebellious nature, your innovation

taurus / 2H: unconventional finances, your resourcefulness, eco-conscious values, self reliance, your sensuality, your practicality

gemini / 3H: your wit, your intelligence and communication skills, your ability to age like fine wine, networking skills, unconventional communication methods

cancer / 4H: unique family situation/history, unconventional living situations, your strong emotional intelligence, your ties to your family and friends, unconventional approach to security

leo / 5H: your creativity, your dramatic flair, self expression, your desire to stand out and be seen, your approach to love and romance, unconventional parenting style

virgo / 6H: unique approach to health and wellness, your advocacy and commitment to your work, unconventional job opportunities, your pets

libra / 7H: your relationships and romantic style, your desire for fairness and balance, unconventional advocacy, your marriage, approach to cooperating with others

scorpio / 8H: your intense energy, your approach to death and transformation, unique understanding of life and the occult, deep emotional intensity

sagittarius / 9H: your love for adventure and philosophy, your wisdom, unconventional morals and ethics, your visions for the future, your truth seeking tendencies

capricorn / 10H: unique career path or ambitions, your style of leadership, desire to challenge institutional approaches, ability to influence others

aquarius / 11H: natural ability to stand out, your innovations and creativity, unique friendships or associations, unique view of technology and usage

pisces / 12H: your connection to spirit and the metaphysical, your ability to daydream, unconventional emotional intelligence, desire to transcend the physical, your visions

© spirit-of-phantom 2023

#astrology#houses#sidereal astrology#aquarius#aries#astro observations#astrology 101#tropical astrology#astro notes#uranus signs#astrologer#astro community#asthetic#pisces#scorpio#halloween#change#innovation

539 notes

Text

Ex-Meta employee Madelyn Machado recently posted a TikTok video claiming that she was getting paid $190,000 a year to do nothing. Another Meta employee, also on TikTok, posted that “Meta was hiring people so that other companies couldn’t have us, and then they were just kind of like hoarding us like Pokémon cards.” Over at Google, a company known to have pioneered the modern tech workplace, one designer complained of spending 40 percent of their time on “the inefficien[cy] overhead of simply working at Google.” Some report spending all day on tasks as simple as changing the color of a website button. Working the bare minimum while waiting for stock to vest is so common that Googlers call it “resting and vesting.”

In an anonymous online poll on how many “focused hours of work” software engineers put in each day, 71 percent of the over four thousand respondents claimed to work six hours a day or less, while 12 percent said they did between one and two hours a day. During the acute phase of the Covid-19 pandemic, it became common for tech workers to capitalize on all this free time by juggling multiple full-time remote jobs. According to the Wall Street Journal, many workers who balance two jobs do not even hit a regular forty-hour workload for both jobs combined. One software engineer reported logging between three and ten hours of actual work per week when working one job, with the rest of his time spent on pointless meetings and pretending to be busy. My own experience supports this trend: toward the end of my five-year tenure as a software engineer for Microsoft, I was working fewer than three hours a day. And of what little code I produced for them, none of it made any real impact on Microsoft’s bottom line—or the world at large.

For much of this century, optimism that technology would make the world a better place fueled the perception that Silicon Valley was the moral alternative to an extractive Wall Street—that it was possible to make money, not at the expense of society but in service of it. In other words, many who joined the industry did so precisely because they thought that their work would be useful. Yet what we’re now seeing is a lot of bullshit. If capitalism is supposed to be efficient and, guided by the invisible hand of the market, eliminate inefficiencies, how is it that the tech industry, the purported cradle of innovation, has become a redoubt of waste and unproductivity?

247 notes

Text

Cigarette Pack Holder. [1955]

#FAVE#documentary photography#innovation#vintage#black and white#monochrome#cigarettes#50s#photography#u

224 notes

Text

Big Tech disrupted disruption

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/02/08/permanent-overlords/#republicans-want-to-defund-the-police

Before "disruption" turned into a punchline, it was a genuinely exciting idea. Using technology, we could connect people to one another and allow them to collaborate, share, and cooperate to make great things happen.

It's easy (and valid) to dismiss the "disruption" of Uber, which "disrupted" taxis and transit by losing $31b worth of Saudi royal money in a bid to collapse the world's rival transportation system, while quietly promising its investors that it would someday have pricing power as a monopoly, and would attain profit through price-gouging and wage-theft.

Uber's disruption story was wreathed in bullshit: lies about the "independence" of its drivers, about the imminence of self-driving taxis, about the impact that replacing buses and subways with millions of circling, empty cars would have on traffic congestion. There were and are plenty of problems with traditional taxis and transit, but Uber magnified these problems, under cover of "disrupting" them away.

But there are other feats of high-tech disruption that were and are genuinely transformative – Wikipedia, GNU/Linux, RSS, and more. These disruptive technologies altered the balance of power between powerful institutions and the businesses, communities and individuals they dominated, in ways that have proven both beneficial and durable.

When we speak of commercial disruption today, we usually mean a tech company disrupting a non-tech company. Tinder disrupts singles bars. Netflix disrupts Blockbuster. Airbnb disrupts Marriott.

But the history of "disruption" features far more examples of tech companies disrupting other tech companies: DEC disrupts IBM. Netscape disrupts Microsoft. Google disrupts Yahoo. Nokia disrupts Kodak, sure – but then Apple disrupts Nokia. It's only natural that the businesses most vulnerable to digital disruption are other digital businesses.

And yet…disruption is nowhere to be seen when it comes to the tech sector itself. Five giant companies have been running the show for more than a decade. A couple of these companies (Apple, Microsoft) are Gen-Xers, having been born in the 70s, then there's a couple of Millennials (Amazon, Google), and that one Gen-Z kid (Facebook). Big Tech shows no sign of being disrupted, despite the continuous enshittification of their core products and services. How can this be? Has Big Tech disrupted disruption itself?

That's the contention of "Coopting Disruption," a new paper from two law profs: Mark Lemley (Stanford) and Matthew Wansley (Yeshiva U):

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4713845

The paper opens with a review of the literature on disruption. Big companies have some major advantages: they've got people and infrastructure they can leverage to bring new products to market more cheaply than startups. They've got existing relationships with suppliers, distributors and customers. People trust them.

Diversified, monopolistic companies are also able to capture "involuntary spillovers": when Google spends money on AI for image recognition, it can improve Google Photos, YouTube, Android, Search, Maps and many other products. A startup with just one product can't capitalize on these spillovers in the same way, so it doesn't have the same incentives to spend big on R&D.

Finally, big companies have access to cheap money. They get better credit terms from lenders, they can float bonds, they can tap the public markets, or just spend their own profits on R&D. They can also afford to take a long view, because they're not tied to VCs whose funds turn over every 5-10 years. Big companies get cheap money, play a long game, pay less to innovate and get more out of innovation.

But those advantages are swamped by the disadvantages of incumbency, all the various curses of bigness. Take Arrow's "replacement effect": new companies that compete with incumbents drive down the incumbents' prices and tempt their customers away. But an incumbent that buys a disruptive new company can just shut it down, and whittle down its ideas to "sustaining innovation" (small improvements to existing products), killing "disruptive innovation" (major changes that make the existing products obsolete).

Arrow's Replacement Effect also comes into play before a new product even exists. An incumbent that allows a rival to do R&D that would eventually disrupt its product is at risk; but if the incumbent buys this pre-product, R&D-heavy startup, it can turn the research to sustaining innovation and defund any disruptive innovation.

Arrow asks us to look at the innovation question from the point of view of the company as a whole. Clayton Christensen's "Innovator's Dilemma" looks at the motivations of individual decision-makers in large, successful companies. These individuals don't want to disrupt their own business, because that will render some part of their own company obsolete (perhaps their own division!). They also don't want to radically change their customers' businesses, because those customers would also face negative effects from disruption.

A startup, by contrast, has no existing successful divisions and no giant customers to safeguard. They have nothing to lose and everything to gain from disruption. Where a large company has no way for individual employees to initiate major changes in corporate strategy, a startup has fewer hops between employees and management. What's more, a startup that rewards an employee's good idea with a stock-grant ties that employee's future finances to the outcome of that idea – while a giant corporation's stock bonuses are only incidentally tied to the ideas of any individual worker.

Big companies are where good ideas go to die. If a big company passes on its employees' cool, disruptive ideas, that's the end of the story for that idea. But even if 100 VCs pass on a startup's cool idea and only one VC funds it, the startup still gets to pursue that idea. In startup land, a good idea gets lots of chances – in a big company, it only gets one.

Given how innately disruptable tech companies are, given how hard it is for big companies to innovate, and given how little innovation we've gotten from Big Tech, how is it that the tech giants haven't been disrupted?

The authors propose a four-step program for the would-be Tech Baron hoping to defend their turf from disruption.

First, gather information about startups that might develop disruptive technologies and steer them away from competing with you, by investing in them or partnering with them.

Second, cut off any would-be competitor's supply of resources they need to develop a disruptive product that challenges your own.

Third, convince the government to pass regulations that big, established companies can comply with but that are business-killing challenges for small competitors.

Finally, buy up any company that resists your steering, succeeds despite your resource war, and escapes the compliance moats of regulation that favors incumbents.

Then: kill those companies.

The authors proceed to show that all four tactics are in play today. Big Tech companies operate their own VC funds, which means they get a look at every promising company in the field, even if they don't want to invest in them. Big Tech companies are also awash in money and their "rival" VCs know it, and so financial VCs and Big Tech collude to fund potential disruptors and then sell them to Big Tech companies as "acqui-hires" that see the disruption neutralized.

On resources, the authors focus on data, and how companies like Facebook have explicit policies of only permitting companies they don't see as potential disruptors to access Facebook data. They reproduce internal Facebook strategy memos that divide potential platform users into "existing competitors, possible future competitors, [or] developers that we have alignment with on business models." These categories allow Facebook to decide which companies are capable of developing disruptive products and which ones aren't. For example, Amazon – which doesn't compete with Facebook – is allowed to access FB data to target shoppers. But Messageme, a startup, was cut off from Facebook as soon as management perceived them as a future rival. Ironically – but unsurprisingly – Facebook spins these policies as pro-privacy, not anti-competitive.

These data policies cast a long shadow. They don't just block existing companies from accessing the data they need to pursue disruptive offerings – they also "send a message" to would-be founders and investors, letting them know that if they try to disrupt a tech giant, they will have their market oxygen cut off before they can draw breath. The only way to build a product that challenges Facebook is as Facebook's partner, under Facebook's direction, with Facebook's veto.

Next, regulation. Starting in 2019, Facebook started publishing full-page newspaper ads calling for regulation. Someone ghost-wrote a Washington Post op-ed under Zuckerberg's byline, arguing the case for more tech regulation. Google, Apple, OpenAI and other tech giants have all (selectively) lobbied in favor of many regulations. These rules covered a lot of ground, but they all share a characteristic: complying with them requires huge amounts of money – money that giant tech companies can spare, but potential disruptors lack.

Finally, there's predatory acquisitions. Mark Zuckerberg, working without the benefit of a ghost writer (or in-house counsel to review his statements for actionable intent) has repeatedly confessed to buying companies like Instagram to ensure that they never grow to be competitors. As he told one colleague, "I remember your internal post about how Instagram was our threat and not Google+. You were basically right. The thing about startups though is you can often acquire them."

All the tech giants are acquisition factories. Every successful Google product, almost without exception, is a product they bought from someone else. By contrast, Google's own internal products typically crash and burn, from G+ to Reader to Google Videos. Apple, meanwhile, buys 90 companies per year – Tim Apple brings home a new company for his shareholders more often than you bring home a bag of groceries for your family. All the Big Tech companies' AI offerings are acquisitions, and Apple has bought more AI companies than any of them.

Big Tech claims to be innovating, but it's really just operationalizing. Any company that threatens to disrupt a tech giant is bought, its products stripped of any really innovative features, and the residue is added to existing products as a "sustaining innovation" – a dot-release feature that has all the innovative disruption of rounding the corners on a new mobile phone.

The authors present three case-studies of tech companies using this four-point strategy to forestall disruption in AI, VR and self-driving cars. I'm not excited about any of these three categories, but it's clear that the tech giants are worried about them, and the authors make a devastating case for these disruptions being disrupted by Big Tech.

What to do about it? If we like (some) disruption, and if Big Tech is enshittifying at speed without facing dethroning-by-disruption, how do we get the dynamism and innovation that gave us the best of tech?

The authors make four suggestions.

First, revive the authorities under existing antitrust law to ban executives from Big Tech companies from serving on the boards of startups. More broadly, kill interlocking boards altogether. Remember, these powers already exist in the lawbooks, so accomplishing this goal means a change in enforcement priorities, not a new act of Congress or rulemaking. What's more, interlocking boards between competing companies are illegal per se, meaning there's no expensive, difficult fact-finding needed to demonstrate that two companies are breaking the law by sharing directors.

Next: create a nondiscrimination policy that requires the largest tech companies that share data with some unaffiliated companies to offer data on the same terms to other companies, except when they are direct competitors. They argue that this rule will keep tech giants from choking off disruptive technologies that make them obsolete (rather than competing with them).

On the subject of regulation and compliance moats, they have less concrete advice. They counsel lawmakers to greet tech giants' demands to be regulated with suspicion, to proceed with caution when they do regulate, and to shape regulation so that it doesn't limit market entry, by keeping in mind the disproportionate burdens regulations put on established giants and small new companies. This is all good advice, but it's more a set of principles than any kind of specific practice, test or procedure.

Finally, they call for increased scrutiny of mergers, including mergers between very large companies and small startups. They argue that two existing laws (Sec 2 of the Sherman Act and Sec 7 of the Clayton Act) both empower enforcers to block these acquisitions. They admit that the case-law on this is poor, but that just means that enforcers need to start making new case-law.

I like all of these suggestions! We're certainly enjoying a more activist set of regulators, who are more interested in Big Tech, than we've seen in generations.

But they are grossly under-resourced even without giving them additional duties. As Matt Stoller points out, "the DOJ's Antitrust Division has fewer people enforcing anti-monopoly laws in a $24 trillion economy than the Smithsonian Museum has security guards."

https://www.thebignewsletter.com/p/congressional-republicans-to-defund

What's more, Republicans are trying to slash their budgets even further. The American conservative movement has finally located a police force they're eager to defund: the corporate police who defend us all from predatory monopolies.

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#coopting disruption#law and political economy#law#economics#competition#big tech#tech#innovation#acquihires#predatory acquisitions#mergers and acquisitions#disruption#schumpeter#the curse of bigness#clay christensen#josef schumpeter#christensen#enshittiification#business#regulation#scholarship

284 notes

Text

Northrop YB-49, 1948. One of the "Flying Wings".

➤➤ VIDEO: https://youtu.be/Lw7yWRPmekI

#Northrop#flying wing#YB 49#YB-49#aviation pioneer#pioneer#aviation history#youtube#aircraft#airplane#aviation#dronescapes#military#documentary#history tag#innovation#history

190 notes

Photo

Over seven million women suffer from breast cancer, making it one of the deadliest cancers across the globe.

A team of researchers at the University of Washington School of Medicine (UWSM) has been working on a breast cancer vaccine for more than 20 years. In their recently published study, they finally revealed the results of the phase one human trials of their breast cancer vaccine.

The study is published in the journal JAMA Oncology. During the phase one trials, the experimental breast cancer vaccine has proved to be safe and highly effective in preventing the growth of human epidermal growth factor receptor 2 (HER2) cancer tumor cells. High levels of HER2 protein in the body are responsible for causing the most complex, aggressive, and rapidly spreading type of breast cancer in women. Therefore, the new vaccine might turn out to be a groundbreaking discovery in the field of modern medicine.

The researchers are now conducting phase two trials of their vaccine. If successful, it’d be one of the greatest miracles of medical science – fingers crossed! Source: Interesting Engineering (link in bio) #science #cancer #innovation https://www.instagram.com/p/CnCQgiVO_xi/?igshid=NGJjMDIxMWI=

1K notes
