#nvidiaai

Text

🚀 Nvidia: Leading the AI Revolution 🚀

In the rapidly evolving landscape of technology, Nvidia stands as a titan, driving the future of artificial intelligence (AI) with pioneering chip innovations and computational systems. Their breakthroughs, from the Blackwell and Hopper chips to their unmatched collaborations with tech giants like AWS and Google, are not just technological feats; they are milestones marking the path to a future where AI reshapes our world.

🚀 From Concept to Reality: Nvidia's AI Innovations

Nvidia's journey from enhancing chip performance through crystal fusion to developing AI-driven digital twins and chatbots showcases their commitment to pushing the boundaries of what AI can achieve. The collaboration with industry leaders has led to the integration of AI technologies that are transforming business practices and daily life.

💡 AI for a Better Tomorrow

The practical applications of Nvidia's AI technologies, such as optimizing manufacturing processes and advancing machine learning through projects like General Robotics 003, are testaments to the transformative power of AI. These innovations offer a glimpse into a future where AI not only enhances efficiency but also pioneers new realms of creativity and exploration.

🌍 A Call to Action

As we stand on the brink of this new era, the importance of community and collaboration in AI development has never been clearer. Nvidia's journey underscores the potential of AI to revolutionize industries and improve lives, inviting us all to engage, contribute, and shape the future of technology.

Explore how Nvidia is leading the charge into the AI-driven future and join the conversation on how we can collectively navigate the promises and challenges of this technological revolution. Check out the full story here: Revolutionizing the Future: Nvidia's AI Breakthroughs and Collaborative Innovation.

1 note


Text

NVIDIA Launches Generative AI Microservices for developers

In order to enable companies to develop and implement unique applications on their own platforms while maintaining complete ownership and control over their intellectual property, NVIDIA released hundreds of enterprise-grade generative AI microservices.

The portfolio of cloud-native microservices, which is built on top of the NVIDIA CUDA platform, includes NVIDIA NIM microservices for optimized inference on more than two dozen popular AI models from NVIDIA and its partner ecosystem. In addition, NVIDIA accelerated software development kits, libraries, and tools can now be accessed as NVIDIA CUDA-X microservices for retrieval-augmented generation (RAG), guardrails, data processing, HPC, and other applications. NVIDIA also separately announced approximately two dozen healthcare NIM and CUDA-X microservices.

The curated selection of microservices adds a new layer to NVIDIA’s full-stack computing platform. This layer connects the AI ecosystem of model developers, platform providers, and enterprises with a standardized path to run custom AI models optimized for NVIDIA’s CUDA installed base of hundreds of millions of GPUs across clouds, data centers, workstations, and PCs.

Prominent suppliers of application, data, and cybersecurity platforms, such as Adobe, Cadence, CrowdStrike, Getty Images, SAP, ServiceNow, and Shutterstock, were among the first to use the new NVIDIA generative AI microservices offered in NVIDIA AI Enterprise 5.0.

Jensen Huang, NVIDIA founder and CEO, said enterprise systems hold a treasure trove of data that can be turned into generative AI copilots. These containerized AI microservices, created with NVIDIA's partner ecosystem, enable companies in any sector to become AI companies.

NIM Inference Microservices Speed Deployments From Weeks to Minutes

NIM microservices allow developers to cut down on deployment timeframes from weeks to minutes by offering pre-built containers that are driven by NVIDIA inference tools, such as TensorRT-LLM and Triton Inference Server.

For domains such as language, speech, and drug discovery, they provide industry-standard APIs that let developers quickly build AI applications using their proprietary data, which stays securely hosted in their own infrastructure. These applications can scale on demand, with the flexibility and performance to run generative AI in production on NVIDIA-accelerated computing platforms.

NIM microservices provide the fastest and most efficient production AI containers for deploying models from NVIDIA, AI21 Labs, Adept, Cohere, Getty Images, and Shutterstock, as well as open models from Google, Hugging Face, Meta, Microsoft, Mistral AI, and Stability AI.

Today, ServiceNow announced that it is using NIM to develop and deploy new generative AI applications, such as domain-specific copilots, more quickly and cost-effectively.

Customers will be able to access NIM microservices from Amazon SageMaker, Google Kubernetes Engine, and Microsoft Azure AI, and integrate them with popular AI frameworks such as Deepset, LangChain, and LlamaIndex.

CUDA-X Microservices for RAG, Data Processing, Guardrails, and HPC

To accelerate production AI development across sectors, CUDA-X microservices provide end-to-end building blocks for data preparation, customization, and training.

Businesses may utilize CUDA-X microservices, such as NVIDIA Earth-2 for high resolution weather and climate simulations, NVIDIA cuOpt for route optimization, and NVIDIA Riva for configurable speech and translation AI, to speed up the adoption of AI.

NeMo Retriever microservices enable developers to create highly accurate, contextually relevant replies by connecting their AI apps to their business data, which includes text, photos, and visualizations like pie charts, bar graphs, and line plots. Businesses may improve accuracy and insight by providing copilots, chatbots, and generative AI productivity tools with more data thanks to these RAG capabilities.
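To make the RAG idea above concrete, here is a minimal sketch of the retrieve-then-prompt pattern in plain Python. It does not use NeMo Retriever or any NVIDIA API; the bag-of-words scoring, the function names (`tokenize`, `cosine`, `retrieve`, `build_prompt`), and the sample documents are all assumptions chosen for illustration. A production system would typically use learned embeddings and a vector database instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    """Augment the user question with the retrieved context."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical business documents, for illustration only.
docs = [
    "Refunds are processed within 14 days of the return request.",
    "The office is closed on public holidays.",
]
prompt = build_prompt("How long do refunds take?", docs)
```

The resulting `prompt` string is what would be sent to a language model, so the model answers from the retrieved business data rather than from its training data alone.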

NVIDIA NeMo

More NVIDIA NeMo microservices for custom model development are coming soon. These include NVIDIA NeMo Curator, which builds clean datasets for training and retrieval; NVIDIA NeMo Customizer, which fine-tunes LLMs with domain-specific data; NVIDIA NeMo Evaluator, which analyzes AI model performance; and NVIDIA NeMo Guardrails for LLMs.

Ecosystem Uses Generative AI Microservices To Boost Enterprise Platforms

Leading application providers are working with NVIDIA microservices to bring generative AI to businesses, as are data, compute, and infrastructure platform vendors from across the NVIDIA ecosystem.

Leading data platform providers including Box, Cloudera, Cohesity, Datastax, Dropbox, and NetApp are working with NVIDIA microservices to help customers streamline their RAG pipelines and bring their proprietary data into generative AI applications. Snowflake uses NeMo Retriever to harness enterprise data for building AI applications.

Businesses may use the NVIDIA microservices that come with NVIDIA AI Enterprise 5.0 on any kind of infrastructure, including popular clouds like Google Cloud, Amazon Web Services (AWS), Azure, and Oracle Cloud Infrastructure.

More than 400 NVIDIA-Certified Systems, including workstations and servers from Cisco, Dell Technologies, HP, Lenovo, and Supermicro, also support NVIDIA microservices. HPE also announced today that its enterprise computing solution for generative AI is now available. NIM and NVIDIA AI Foundation models will be integrated into HPE’s AI software.

VMware Private AI Foundation and other infrastructure software platforms will soon support NVIDIA AI Enterprise microservices. In order to make it easier for businesses to incorporate generative AI capabilities into their applications while maintaining optimal security, compliance, and control capabilities, Red Hat OpenShift supports NVIDIA NIM microservices. With NVIDIA AI Enterprise, Canonical is extending Charmed Kubernetes support for NVIDIA microservices.

Through NVIDIA AI Enterprise, the hundreds of AI and MLOps partners that make up NVIDIA’s ecosystem such as Abridge, Anyscale, Dataiku, DataRobot, Glean, H2O.ai, Securiti AI, Scale.ai, OctoAI, and Weights & Biases are extending support for NVIDIA microservices.

Vector search providers such as Apache Lucene, Datastax, Faiss, Kinetica, Milvus, Redis, and Weaviate are working with NVIDIA NeMo Retriever microservices to provide responsive RAG capabilities for businesses.

Availability

Developers can experiment with NVIDIA microservices for free at ai.nvidia.com. Businesses can deploy production-grade NIM microservices with NVIDIA AI Enterprise 5.0 on NVIDIA-Certified Systems and leading cloud platforms.

Read more on govindhtech.com

#nvidiaai#ai#aimodel#nim#rag#nvidianemo#amazon#aws#dell#nvidianim#technology#technews#news#govindhtech

1 note


Video

youtube

GPU AS A SERVICE: PROJECTS THAT WILL GROW STRONGLY

#GPU AS A SERVICE: PROJECTS THAT WILL GROW STRONGLY within the narratives of #DePIN #ai #IA #ArtificialIntelligence #Cloud https://youtu.be/i64TOWP6k7M?si=kfROwOCJsuxvBb3F via @rendernetwork @nvidia @NVIDIAGeForce @NVIDIAGeForceES @NVIDIAGeForceFR @NVIDIAAI @InferixGPU @AethirCloud @aethirCSD

1 note


Text

Nvidia unveils faster chip aimed at cementing AI dominance

AI chips from @NVIDIAAI can save money for data center operators focused on large language models and other compute-intensive workloads.

Nvidia unveiled a new artificial intelligence (AI) chip called the Grace Hopper Superchip. The chip, which is based on the company's Hopper architecture, is the fastest in its class and is designed to power the latest developments in AI.

The Grace Hopper Superchip has a processing speed of up to 280 teraflops, making it the fastest AI chip in the world. The chip…

View On WordPress

0 notes

Photo

Nvidia can't catch a break. Late Wednesday, the chip maker said in a filing that the U.S. government has imposed a new licensing requirement, effective immediately, covering any exports of Nvidia's A100 and upcoming H100 products to China (including Hong Kong) and Russia. (@nvidia) Nvidia's A100 chips are used in data centers for artificial intelligence, data analytics, and high-performance computing applications, according to the company's website. (@nvidiaai) The government "indicated that the new license requirement will address the risk that the covered products may be used in, or diverted to, a 'military end use' or 'military end user' in China and Russia," the filing said. Nvidia (ticker: NVDA) shares fell 3.9% to $145 in after-hours trading. Nvidia said it doesn't sell any products to Russia, but noted that its current outlook for the third fiscal quarter had included about $400 million in potential sales to China that could be affected by the new license requirement. The company also said the new restrictions may affect its ability to develop its H100 product on time and could potentially force it to move some operations out of China. Follow @stoccoin for daily posts about cryptocurrencies and stocks. NOTE: This post is not financial advice to buy the crypto(s) or stock(s) mentioned. Do your own research and invest at your own discretion. This also applies to the stock(s) or crypto(s) you see in our stories. Thanks for reading, folks! IGNORE THE HASHTAGS: #stoccoin #nvidia #china #russia #hongkong #crypto #stocks #stockmarket #bitcoin #cryptocurrency #btc #metaverse #nft #sensex #nifty50 #bse #nse #banknifty #usd #investments #finance https://www.instagram.com/p/Ch9V32QP2uk/?igshid=NGJjMDIxMWI=

#stoccoin#nvidia#china#russia#hongkong#crypto#stocks#stockmarket#bitcoin#cryptocurrency#btc#metaverse#nft#sensex#nifty50#bse#nse#banknifty#usd#investments#finance

0 notes

Photo

I just published Create 3D Models from Images! AI and Game Development, Design… Read more (link in story): https://ift.tt/3glseny

posted on Instagram - https://instagr.am/p/CNzxIARgPqh/

#ai#machinelearning#deeplearning#artificialintelligence#datascience#ml#innovation#nvidia#nvidiaai#gtc

11 notes


Photo

The #EU proposal to ban facial recognition for five years, one of the most developed areas of #ML and #AI, is an idea straight from medieval times. Even #AI neophytes will agree that the tech offers enormous potential for solving complex security issues facing humanity today. It’s also true that the tech poses some risk, but the solution is not a blanket ban. The risk can be mitigated by carefully legislating on the areas of potential abuse. A decision like that would greatly stifle AI innovation within the #europeanunion and wouldn’t stop bad actors from accessing the technology in a highly globalized landscape. #EU bureaucrats in Brussels have demonstrated over and over again their overzealousness with regulations, a sure way of discouraging innovation and wider support by member states. #brexit is a perfect example of a country that got fed up with #EU overreach, and more will follow if the status quo is maintained. #ai #artificialintelligence #computerscience #machinelearning #machinelearningalgorithms #machinelearningtools #facialrecognition #biometrics #neuralnetworks #deeplearning #amazon #googleai #googleml #nvidia #nvidiaai #nvidiageforce #computing #it #informationtechnology (at Nairobi) https://www.instagram.com/p/B7lq_pchWZ0/?igshid=dehndrhjezwp

#eu#ml#ai#europeanunion#brexit#artificialintelligence#computerscience#machinelearning#machinelearningalgorithms#machinelearningtools#facialrecognition#biometrics#neuralnetworks#deeplearning#amazon#googleai#googleml#nvidia#nvidiaai#nvidiageforce#computing#it#informationtechnology

0 notes

Photo

Made this with @nvidia ‘s GauGAN, @adobe Capture, Adobe Draw, and @photoshop . GauGAN, named after post-Impressionist painter Paul Gauguin, creates photorealistic images from segmentation maps, which are labeled sketches that depict the layout of a scene, and it’s on the @nvidiaai playground. Try it out and make something or something 👨🎨 #abstractart #art #graphicdesign #artificialintelligence #adobe #nvidia https://www.instagram.com/p/B3pNJmBpdH3/?igshid=77i92mnrrqgy

1 note


Photo

🖥️ 🖥️ #CyberMonday ready: Best Workstations for Deep Learning, Data Science, and Machine Learning (ML) → http://news.towardsai.net/workstations 🖥️ 🖥️⠀ #NVIDIA #GEFORCE #deeplearning #neuralnetwork #machinelearning #ml #ai #ia #programming #python #100daysofcode #datascience #NVIDIAAI #coding

0 notes

Photo

@random_forests @zaidalyafeai Yes, @NvidiaAI needs to release a LSTM/GRU mode in cuDNN that is compatible with the original layers. Then we wouldn't need separate layers.

1 note


Text

NVIDIA NIM: Scalable AI Inference Improved Microservices

Nvidia Nim Deployment

The use of generative AI has grown dramatically. The 2022 debut of OpenAI’s ChatGPT drew over 100M users within months and set off a boom in development across practically every sector.

By 2023, developers had started building proofs of concept (POCs) using APIs and open-source community models from Meta, Mistral, Stability, and other sources.

In 2024, companies are turning their attention to full-scale production deployments, which involve integrating AI models with existing corporate infrastructure along with, among other things, logging, monitoring, and security. This path to production is difficult and time-consuming; it calls for specialized knowledge, tools, and procedures, particularly at large scale.

What is NVIDIA NIM?

NVIDIA NIM is a containerized inference microservice that includes industry-standard APIs, domain-specific code, optimized inference engines, and an enterprise runtime.

NVIDIA NIM, a component of NVIDIA AI Enterprise, offers a simplified approach to building AI-powered enterprise applications and deploying AI models in production.

NIM is a collection of cloud-native microservices designed to reduce time-to-market and streamline the deployment of generative AI models on GPU-accelerated workstations, cloud environments, and data centers. By abstracting away the complexity of developing AI models and packaging them for production behind industry-standard APIs, it expands the pool of developers who can work with them.

NVIDIA NIM for AI inference optimization

By bridging the gap between the intricate realm of AI development and the operational requirements of corporate settings, NVIDIA NIM will enable 10-100X more business application developers to contribute to their organizations’ AI transformations.

Image credit: NVIDIA

Figure: NVIDIA NIM is a containerized inference microservice that includes industry-standard APIs, domain-specific code, optimized inference engines, and an enterprise runtime.

The following are a few of the main advantages of NIM.

Deploy anywhere

NIM’s controllable and portable architecture enables model deployment across a range of infrastructures, including local workstations, cloud, and on-premises data centers. This covers workstations and PCs with NVIDIA RTX, NVIDIA-Certified Systems, NVIDIA DGX, and NVIDIA DGX Cloud.

Prebuilt containers and Helm charts packaged with optimized models are rigorously validated and benchmarked across NVIDIA hardware platforms, cloud service providers, and Kubernetes distributions. This provides support across all NVIDIA-powered environments and ensures that enterprises can deploy their generative AI applications anywhere while retaining complete control over their apps and the data they handle.

Develop with industry-standard APIs

Building AI applications is easier when developers can access AI models through APIs that follow the industry standards for each domain. Because these APIs are compatible with the ecosystem’s standard deployment processes, developers can update their AI apps quickly, often with as few as three lines of code. This seamless integration and ease of use enable rapid deployment and scaling of AI technologies within corporate systems.
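As a rough illustration of what calling a model through an industry-standard API can look like, here is a minimal sketch that builds an HTTP request in the widely used OpenAI-style chat completions format, using only the Python standard library. The base URL `http://localhost:8000`, the model name `example-llm`, and the helper names `build_chat_request` and `send` are assumptions made for this example, not values taken from the article; check the documentation of the deployed microservice for the actual endpoint and model identifiers.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply (needs a running endpoint)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


# Hypothetical local deployment and model name, for illustration only.
req = build_chat_request("http://localhost:8000", "example-llm", "Say hello.")
```

Because the request format stays the same, pointing an existing application at a different compatible endpoint usually means changing only the base URL and the model name, which is the kind of small update the paragraph above describes.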

Use domain-specific models

Through a number of important features, NVIDIA NIM also meets the demand for domain-specific solutions and optimized performance. It bundles specialized code and NVIDIA CUDA libraries for a number of domains, including language, speech, video processing, healthcare, and more. This approach keeps applications accurate and relevant to their particular use case.

Run on optimized inference engines

NIM delivers the best possible latency and throughput on accelerated infrastructure by using inference engines that are tuned for each model and hardware configuration. This improves the end-user experience while lowering the cost of running inference workloads as they scale. In addition to supporting optimized community models, developers can achieve even greater accuracy and performance by aligning and fine-tuning models with proprietary data sources that never leave their data center.

Enterprise-grade AI support

NIM, a component of NVIDIA AI Enterprise, is built on an enterprise-grade base container that provides a solid foundation for corporate AI applications through feature branches, stringent validation, service-level agreements for enterprise support, and regular security updates for CVEs. The extensive support network and optimization tools highlight NIM’s importance as a key component in deploying scalable, efficient, and customized AI systems in production.

Accelerated AI models ready to deploy

NIM supports AI use cases across several domains with a large number of AI models, including community models, NVIDIA AI Foundation models, and custom models provided by NVIDIA partners. These include large language models (LLMs), vision language models (VLMs), and models for speech, images, video, 3D, drug discovery, medical imaging, and more.

Using cloud APIs provided by NVIDIA and available via the NVIDIA API catalog, developers may test the most recent generative AI models. Alternatively, they may download NIM and use it to self-host the models. In this case, development time, complexity, and expense can be reduced by quickly deploying the models on-premises or on major cloud providers using Kubernetes.

By providing industry-standard APIs and bundling algorithmic, system, and runtime optimizations, NIM microservices streamline the AI model deployment process. This lets developers integrate NIM into their existing infrastructure and apps without complex customization or specialist knowledge.

Businesses can use NIM to tune their AI infrastructure for performance and cost-effectiveness without having to worry about containerization or the intricacies of developing AI models. On top of accelerated AI infrastructure, NIM lowers hardware and operating costs while improving performance and scalability.

For companies that want to tailor models to their corporate apps, NVIDIA offers microservices for model customization across domains. NVIDIA NeMo provides fine-tuning capabilities using proprietary data for LLMs, speech AI, and multimodal models. NVIDIA BioNeMo accelerates drug discovery with a growing library of models for generative biology, chemistry, and molecular prediction. NVIDIA Picasso speeds up creative workflows with Edify models. These models are trained on licensed libraries from visual content providers, enabling the deployment of customized generative AI models for visual content creation.

Read more on govindhtech.com

#nvidianim#scalableai#microservices#openai#chatgpt#nvidiaai#generativeai#aimodel#technology#technews#news#govindhtech

1 note


Video

youtube

We (@codebasicshub + @AtliQTech) are doing a giveaway in association with @NVIDIAAI for #GTC20. We'll be giving away two passes to people with the best ideas for our #SkillsBasics Initiative. For details:

0 notes

Photo

We are proud to be part of the #NVIDIAInception Program! 🤖

We're really excited to have been admitted to the #AI major league. We are in good company, among a closed group of highly specialized and promising companies. It's awesome to be part of the @NVIDIAAI family!

https://www.nvidia.com/en-us/deep-learning-ai/startups/

#NVIDIA #ML #MachineLearning #MadeinItaly @nvidia

0 notes

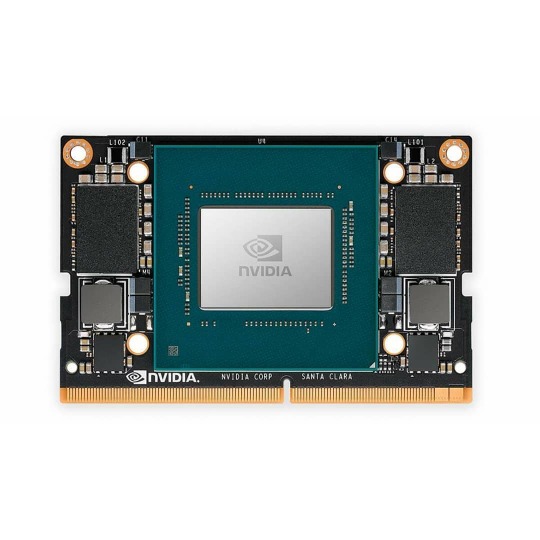

Photo

Meet the world's smallest #AI #Supercomputer - #NVIDIA #JetsonNX - Jetson Xavier™ NX brings supercomputer performance to the edge in a small form factor system-on-module (SOM). Up to 21 TOPS of accelerated computing delivers the horsepower to run modern neural networks in parallel and process data from multiple high-resolution sensors, a requirement for full AI systems. The developer kit is supported by the entire NVIDIA software stack, including accelerated SDKs and the latest NVIDIA tools for application development and optimization. A Jetson Xavier NX module and reference carrier board are included, along with an AC power supply. You get the performance of 384 NVIDIA CUDA® Cores, 48 Tensor Cores, 6 Carmel ARM CPUs, and two NVIDIA Deep Learning Accelerator (NVDLA) engines, combined with over 51GB/s of memory bandwidth. It has the performance to run all modern AI networks and frameworks with accelerated libraries for deep learning, computer vision, computer graphics, multimedia, and more. It's IDEAL FOR CRITICAL EMBEDDED APPLICATIONS! @nvidia @nvidiaai @nvidiageforceindia https://lnkd.in/g_DKchm #yashchauhan #machinelearning #MSP #NVIDIA #AItools #student #power #deeplearning #computervision #neuralnetworks #ml #onelifetoomanydreams (at Supercomputer) https://www.instagram.com/p/CAcWqh9FUDu/?igshid=1959lnbu76v8r

#ai#supercomputer#nvidia#jetsonnx#yashchauhan#machinelearning#msp#aitools#student#power#deeplearning#computervision#neuralnetworks#ml#onelifetoomanydreams

0 notes

Text

Top 10 Instagram Tech Influencers to Follow in 2020

A hundred years ago, no one could have predicted that you could post a picture here in the United States and someone in a remote area of Bangladesh would see it. Possibilities like these show what we can achieve with technology.

If you’re a tech enthusiast, you should keep tabs on the people at the forefront of technology. Here are some Instagram accounts to follow if you want first-hand updates on what goes on in the tech world. There are lots of things to do after you buy Instagram followers, and these guys are doing them all correctly!

The Top 10 Instagram Tech Influencers You Should Follow Now!

@nvidiaai

Artificial intelligence has come to stay as a part of our daily lives. Why wouldn’t it? It is increasing productivity in the workplace and making our jobs easier, solving problems faster than humans can, and bringing fun to technology. If you want to understand and further appreciate the role of artificial intelligence in our lives, then you should follow Nvidia AI (@nvidiaai).

The account features stories and quotes about AI from scientists across the globe. If you aspire to be an artificial intelligence scientist, you should follow this account, as it will inspire you along the way.

Other information you will get if you follow this account includes news on AI, devices that support AI, AI software, and more.

@madewithcode

Men dominate the tech industry. Although more women are coming into the industry, the inclusion rate for women is still not impressive. Some of the largest tech companies in the world have a female CEO, but the fact remains that women make up only 20% of those who work in tech.

Made With Code is the perfect account to follow if you’re a woman in a technological field or you’re passionate about women’s inclusion in the tech industry. @madewithcode encourages women to get involved in technology and raises awareness of the importance of including women in tech.

Made With Code is well-tailored for young women. It recently partnered with Teen Vogue to show teenagers and young women why they should get involved in tech.

@talkwidtech

Talk Wid Tech is an account whose primary goal is to make tech easy for the public to understand. Their website covers topics that help you understand what goes on inside your tech devices; you will also learn tips and tricks for your gadgets.

Their Instagram account is fascinating to follow, as they feature scientific discoveries, successful and failed experiments, advances in technology, and more. Talk Wid Tech breaks down its tech topics so that an average English reader can understand its posts.

@techbyguff

Guff is one of the most popular tech accounts on Instagram. In their bio, you will see a link to their website. The site has numerous articles that are mostly easy to read and understandable to a non-native English speaker.

If you follow their Instagram account, you will get numerous technology tips and tricks that you can try yourself.

Tech By Guff shares highly captivating pictures with a detailed explanation of what’s happening in each image. The Instagram account has quality content that is exciting to read.

@ronald_vanloon

Although this Instagram account has a meager 7,000 followers, it is going to be one of the most followed Instagram accounts on tech in a few years because of the quality of the posts coming from it.

Ronald van Loon’s primary goal is to help organizations and governments understand how to use data science, artificial intelligence, and big data to achieve their goals.

When you go through his Instagram account, you will see a lot of videos of interviews he has had with tech experts and heads of tech organizations. He also hosts Instagram Live videos regularly, so you can easily connect with him and ask him questions. He’s quite friendly; I’m sure you’ll have a fun experience watching his videos.

@sirajraval

Siraj Raval founded the School of Artificial Intelligence in California. The school aims to spread knowledge about the importance and benefits of artificial intelligence. The organization has the largest community of artificial intelligence advocates in the world, part of which includes over thirteen thousand followers.

You will get quality information on artificial intelligence if you follow this Instagram account. He mostly shares content as Instagram image posts, although he sometimes posts videos and stories and hosts live videos to spice things up for his followers.

You can find the link to his website on his Instagram profile. His website has a lot of articles on practically anything related to artificial intelligence.

@gadgetflow

Gadget Flow is an Instagram account that has made a name for itself by posting content on tech. The Instagram handle has over 230,000 followers at the time of writing this article.

Gadget Flow’s official website receives over ten million visitors a month, making it one of the most visited tech sites in the world. You should follow this account if you’re tech-savvy. Gadget Flow searches the internet for anything tech and writes an article on each product.

If you follow their Instagram account, you will get snippets of their articles. You will also learn about tech products before their official release.

@futurism

Futurism has over 1 million Instagram followers, and the majority of them are tech-savvy users who log in daily to check for new posts.

They mostly post on discoveries in science, technology, and computer intelligence. Topics discussed in the past include a 3D-printed human heart and electromagnetic stimuli for the brain.

@elonmusk

He’s a well-known personality when it comes to tech; I will be surprised if you’re into anything tech and you don’t know Elon Musk. He is at the forefront of many innovations, such as Hyperloop and SpaceX. Elon is a billionaire with a heart for extreme ideas. Some of the ideas he’s pioneering are highly revolutionary.

@techcrunch

TechCrunch is a company whose focus is primarily on profiling startups, reviewing new software, devices, and gizmos, and making bold predictions on tech.

https://growinsta.xyz/top-10-instagram-tech-influencers-to-follow-in-2020/

#free instagram followers#free followers#free instagram followers instantly#get free instagram followers#free instagram followers trial#1000 free instagram followers trial#free instagram likes trial#100 free instagram followers#famoid free likes#followers gratis#famoid free followers#instagram followers generator#100 free instagram followers trial#free ig followers#free ig likes#instagram auto liker free#20 free instagram followers trial#free instagram followers no#verification#20 free instagram likes trial#1000 free instagram likes trial#followers instagram gratis#50 free instagram followers instantly#free instagram followers app#followers generator#free instagram followers instantly trial#free instagram followers no survey#insta 4liker#free followers me#free instagram followers bot

0 notes