#quant

Text

How they cuddle

Characters- Bam, Aguero, Hatz, Anak, Endorsi, Lero ro, Quant, Hansung Yu, Evankhell

Type - fluff

Bam-

When cuddling with Bam, it depends on which season we're talking about. So...

Season 1- He likes to be the little spoon. While you're cuddling like this, he'll cling to the arms you have wrapped around his waist and might even try to hold your hand. But when he feels needy, he lies on his stomach off to your side, puts his head on your shoulder, and lifts his leg onto your side. He'll want you to wrap the arm he's lying on around his shoulders/upper back and rest your other hand on his hip. If you're not wearing a shirt, his hands are pressed openly against your chest while he tucks his head under your chin; if you are, he's got your shirt bunched up in his fists while he clings to you.

Season 2- This poor baby has been touch-starved, so he wants to be caged in your warmth. He'll lie on top of you and tuck his head under your chin while pressing against you with all of his body weight. If he does fall asleep, expect his hair to get in your face somehow. He secretly hopes you'll offer to brush it when you two wake up.

Season 3- Very protective positions, like holding you so that your face is pressed to his chest, with a tight hold around your waist. Sometimes when he's tired, he'll take down his hair, lay his head in your lap, and fall asleep like that.

Khun- He has the same protective position as Season 3 Bam, but other times he'll want it to be completely even, with the two of you holding onto each other. Very rarely will he let you have the upper hand with cuddling, but when you've begged him enough he'll let you be the big spoon.

Hatz- If you can convince him to cuddle with you instead of splitting the bed evenly, it's all you. He'll lie on his back while you hug him with your leg propped up on him, but if you're lucky he might hold your hand while you sleep.

Anak- She wraps her tail around you every time, but besides that, she'll let you take the lead.

Endorsi- Spooning. She doesn't care whether she's the big spoon or the little spoon, as long as nothing's touching her face.

Lero ro- He has his face pressed up against your chest every time and a tight grip on your waist. He falls asleep so quickly if you pet his hair while you're cuddling. He's also one to fall asleep in your lap, but you still have to play with his hair.

Quant- The strangest of positions. He's too hyperactive to actually lie down and stay still. Definitely drools on you every single night.

Hansung Yu- This guy!!! I love him! But back to the point. He'll lie on top of you and rest his head on your shoulder as you two talk about each other's day. Don't worry, he's very light and makes sure to keep his hair out of your face, but he might drool the tiniest bit every now and then.

Evankhell- She crushes you. Deep sleeper. You die. The end. In all reality though, she likes to think that she's light, but all that muscle is heavy. You have to lie on your side; if you lie on your back and her arm is anywhere on your stomach... well, have fun breathing.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

I tried. @wolfsgravity thought you might like Lero ro's part.

#tower of god x reader#tower of god#khun aguero agnis x reader#khun x reader#tog khun#khun aguero agnis#bam x reader#25th bam#jue viole grace#hatz x reader#hatz#anak x reader#anak#endorsi x reader#endorsi#lero ro x reader#lero ro#quant blitz#quant#hansung yu#hansung yu x reader#yu hansung#tog x reader#tog#tower of god scenario

128 notes

Photo

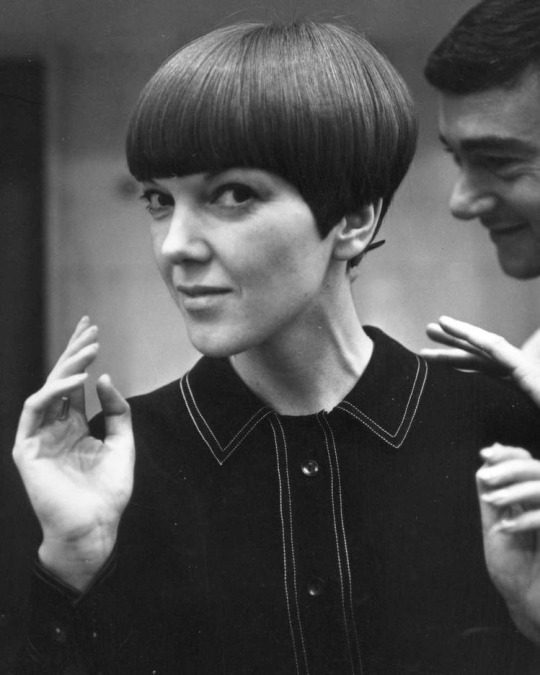

Mini times two. Mary Quant

74 notes

Text

RIP Mary Quant 🖤

37 notes

Photo

Mary Quant (1930-2023).

10 notes

Text

“I thought of Sam Bankman-Fried’s numbskull posturing recently when I finally read Nathan Heller’s article “The End of the English Major” in the New Yorker. The account of the collapse of undergraduate interest in the humanities touched off a lot of anguish, pained tweets, and op-eds this past month. For me, it clarified something about the trajectory of culture in the recent past, and made me think about the increasingly widespread popularity of something I’ll call Quantitative Aesthetics—the way numbers function more and more as a proxy for artistic value.

(…)

The most-shared tidbits from Heller’s piece were the lines from professors lamenting that their Ivy League students who are social-media natives no longer have the attention for reading literature: “The last time I taught The Scarlet Letter, I discovered that my students were really struggling to understand the sentences as sentences—like, having trouble identifying the subject and the verb.”

We’re talking about the period since 2011, the first college class since the introduction of the iPhone, and it is logical that mass adoption of such seductive and pervasive consumer technology has changed people’s relationship to culture.

But 2011-12 is also the first full college class since the financial crisis of 2008, and the other obvious culprit is the greater ruthlessness of the economy post-Great Recession, the flight away from the “softness” of the humanities in a time when studying anything not directly seen as useful is viewed more and more as an unsustainable luxury.

(…)

As one student says in the article, “Even if I’m in the humanities, and giving my impression of something, somebody might point out to me, ‘Well, who was your sample?’ I mean, statistics is everywhere. It’s part of any good critical analysis of things.” This provokes Heller to reflect, “I knew at once what [the student] meant: on social media, and in the press that sends data visualizations skittering across it, statistics is now everywhere, our language for exchanging knowledge.”

(…)

Nevertheless, there’s something called the McNamara Fallacy, a.k.a. the Quantitative Fallacy. It is summarized as “if it cannot be measured, it is not important.” The Heller article made me reflect on how a version of it is now very present, and growing, at the grassroots of taste.

On one level, this is seen in a rise of a kind of wonky obsession with business stats in fandoms, invoked as a way to convey the rightness of artistic opinions—what I want to call Quantitative Aesthetics. (There are actually scientists who study aesthetic preference in labs and use the term “quantitative aesthetics.” I am using it in a more diffuse way.)

It manifests in music. As the New York Times wrote in 2020 of the new age of pop fandom, “devotees compare No. 1s and streaming statistics like sports fans do batting averages, championship wins and shooting percentages.” Last year, another music writer talked about fans internalizing the number-as-proof-of-value mindset to extreme levels: “I see people forcing themselves to listen to certain songs or albums over and over and over just to raise those numbers, to the point they don’t even get enjoyment out of it anymore.”

The same goes for film lovers, who now seem to strangely know a lot about opening-day grosses and foreign box office, and use the stats to argue for the merits of their preferred product. There was an entire campaign by Marvel super-fans to get Avengers: Endgame to outgross Avatar, as if that would prove that comic-book movies really were the best thing in the world.

On the flip side, indie director James Gray (of Ad Astra fame) recently complained about ordinary cinema-goers using business stats as a proxy for artistic merit: “It tells you something of how indoctrinated we are with capitalism that somebody will say, like, ‘His movies haven’t made a dime!’ It’s like, well, do you own stock in Comcast? Or are you just such a lemming that you think that actually has value to anybody?”

It’s not just financial data though. Rotten Tomatoes and Metacritic have recently become go-to arbiters of taste by boiling down a movie’s value to a single all-purpose statistic. They are influential enough to alarm studios, who say the practice is denying oxygen to potentially niche hits because it “quantifies the unquantifiable.” (How funny to hear Hollywood execs echo Theodor Adorno’s Aesthetic Theory: “If an empirically oriented aesthetics uses quantitative averages as norms, it unconsciously sides with social conformity.”)

As for art, I don’t really feel like I even need to say too much about how the confusion of price data with merit infects the conversation. It’s so well known it is the subject of documentaries from The Mona Lisa Curse (2008) to The Price of Everything (2018). “Art and money have no intrinsic hookup at all,” painter Larry Poons laments in the latter, stating the film’s thesis. “It’s not like sports, where your batting average is your batting average… They’ve tried to make it much like that, like the best artist is the most expensive artist.”

But where Quantitative Aesthetics is really newly intense across society—in art and everywhere—is in how social-media numbers (clicks, likes, shares, retweets, etc.) seep into everything as a shorthand for understanding how important something is. That’s why artist-researcher Ben Grosser created his Demetricator suite of web-browsing tools, which let you view social media stripped of all those numbers and feel, by their absence, the effect they are having on your attention and values.

(…)

Again: Data analysis, done with care, can yield insights of great depth (Albert-László Barabási has even argued for “dataism,” a kind of sophisticated data analysis, as an artform). But as an instrument used to justify consumer preference within a landscape of complex values, a Quantitative Aesthetic often just becomes a way to deal with the problem of not wanting to spend much time thinking—the opposite of deep thought.

If you walk into a wine store, you could get descriptions of various wines, taste them, decide whether you want something more “foxy” or more “herbaceous,” and match the subtleties to your palate. But most people will probably just pick the bottle whose price point they think signals the level of quality they are shooting for.

The “McNamara Fallacy” is named after one-time defense secretary Robert McNamara—a Harvard grad, like the students Heller talks to. A numbers whiz, he was the architect of the U.S.’s murderous, ultimately catastrophic Vietnam policy, and known for his obsession with “body counts” as the key metric of success.

McNamara apparatchik Leslie H. Gelb later recalled in Time magazine the debacle fueled by this quantitative mindset:

McNamara didn’t know anything about Vietnam. Nor did the rest of us working with him. But Americans didn’t have to know the culture and history of a place. All we needed to do was apply our military superiority and resources in the right way. We needed to collect the right data, analyze the information properly and come up with a solution on how to win the war.

(…)

Daniel Yankelovich, the sociologist who coined the term “McNamara Fallacy,” actually outlined it as a process, one that could be broken out into four steps of escalating intellectual danger. Here they are, as it is commonly broken down, with his commentary on each:

1. Measure whatever can be easily measured. (This is OK as far as it goes.)
2. Disregard that which can’t be easily measured or give it an arbitrary quantitative value. (This is artificial and misleading.)
3. Presume that what can’t be measured easily really isn’t important. (This is blindness.)
4. Say that what can’t be easily measured really doesn’t exist. (This is suicide.)

Based on the data I have, I’d say that we as a culture are approaching somewhere between the third and fourth steps.”

#aesthetics#quant#quantitative aesthetics#numbers#statistics#art#mcnamara#robert mcnamara#mcnamara fallacy#sam bankman fried#ftx collapse#culture#data#taste

7 notes

Text

The Institutional Era of Crypto Brings Fresh Innovation

In the wake of Binance’s $4.3 billion settlement with U.S. regulators last November, a shift is underway in the institutional adoption of digital assets. We are now in a fresh market cycle and we’re seeing innovative custody solutions and many market opportunities.

The collaboration between Binance and Sygnum to introduce a tri-party agreement for off-exchange custody exemplifies this shift.…

0 notes

Text

Quant Lesson 1: How to Download High Frequency Cryptocurrency Data?

Manual downloads

Automatic downloads -- Python

Token : ['BTC', 'ETH', 'BNB', 'SOL', 'XRP', 'ADA', 'AVAX', 'DOT', 'TRX', 'LINK']

https://data.binance.vision/data

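```python
import io
import os
import time
import zipfile

import pandas as pd
import requests

# Optional proxy settings. The address is elided in the original post:
# replace it with your own proxy, or delete these two lines.
os.environ["http_proxy"] = "http://127.?.?.1:?"
os.environ["https_proxy"] = "http://127.?.?.1:?"

TOKENS = ['BTC', 'ETH', 'BNB', 'SOL', 'XRP', 'ADA', 'AVAX', 'DOT', 'TRX', 'LINK']
dates = pd.date_range("2023-01-01", "2023-12-31")

for date in dates:
    for token in TOKENS:
        # Daily archive of 1-minute klines for the <token>USDT spot pair
        day = str(date.date())
        url = f"https://data.binance.vision/data/spot/daily/klines/{token}USDT/1m/{token}USDT-1m-{day}.zip"

        # Download the file
        response = requests.get(url, timeout=30)

        if response.status_code == 200:
            # Unzip the archive in memory and extract the CSV (target folder elided in the original)
            with zipfile.ZipFile(io.BytesIO(response.content)) as z:
                z.extractall("D:\\?\\?\\?\\")
        else:
            print(f"Failed: {day} || {token} (HTTP {response.status_code})")

        # Throttle requests to be polite to the server
        time.sleep(0.25)
```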
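Once extracted, each day is a CSV of 1-minute candles without a header row. A minimal pandas sketch for loading one follows; it assumes the files use the same column order as Binance's kline format (open time, OHLC, volume, close time, quote volume, trade count, taker-buy volumes, an unused field) and millisecond timestamps, which holds for the 2023 files. `load_klines` and the column names are hypothetical choices made here, and the two sample rows are invented for illustration.

```python
import io

import pandas as pd

# Assumed column layout of a Binance kline CSV (the files have no header row)
KLINE_COLUMNS = [
    "open_time", "open", "high", "low", "close", "volume",
    "close_time", "quote_volume", "num_trades",
    "taker_buy_base_volume", "taker_buy_quote_volume", "ignore",
]


def load_klines(source) -> pd.DataFrame:
    """Read one extracted 1m kline CSV (a path or file-like object) into a DataFrame."""
    df = pd.read_csv(source, header=None, names=KLINE_COLUMNS)
    # Timestamps are assumed to be epoch milliseconds
    df["open_time"] = pd.to_datetime(df["open_time"], unit="ms")
    df["close_time"] = pd.to_datetime(df["close_time"], unit="ms")
    return df.drop(columns="ignore").set_index("open_time")


# Usage with two invented rows instead of a real downloaded file
sample = io.StringIO(
    "1672531200000,16541.77,16545.70,16538.71,16543.04,83.08,1672531259999,1374430.0,1234,40.1,663400.0,0\n"
    "1672531260000,16543.67,16544.41,16538.48,16539.31,61.94,1672531319999,1024560.0,987,30.2,499600.0,0\n"
)
bars = load_klines(sample)
print(bars[["open", "close", "volume"]])
```

Indexing by `open_time` makes it straightforward to concatenate the 365 daily files per token and resample them later.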

1 note

Video

Quant Flexi Cap Fund | Quant Flexi Cap Fund vs Quant Active Fund | Quant...

0 notes

Text

Cryptocurrency Quant (QNT) Review 2024

What is Quant? What does the QNT coin do?

What is Quant? Quant is a protocol that facilitates interoperability between different types of distributed ledger technology (DLT), including blockchains. In this article, we answer the question “What is Quant?” and touch on other topics related to the project.

What is Quant? What does it do?

Quant Network was founded by Gilbert Verdian in 2015, and its own Quant token followed shortly thereafter. At its core, it is a protocol that facilitates the mass adoption of distributed ledger technology (DLT). The protocol allows different types of DLTs to interact and provides development tools for enterprise applications. The QNT coin is used to access the Quant Overledger DLT gateway. Overledger is responsible for interaction with DLTs. It also allows developers to create multi-DLT applications (mApps) using the new multi-DLT smart contracts.

In addition, Quant offers solutions for enterprises and developers that are compatible with some of the biggest blockchains. Each solution is designed for high scalability and can be easily integrated into existing IT infrastructure, which makes decentralization easier for enterprises than ever before.

What is QNT coin?

The native ERC-20 QNT token is essential for any developer who wants to use the Overledger platform. Developers use fiat money to pay for licenses to use Quant Network services. The Quant Treasury then reserves a quantity of QNT tokens equal to the value paid by the developers, and these tokens are locked for 12 months. The QNT token is also used by Overledger clients to pay for read and write operations, and it is useful for transferring assets between networks and distributed ledger systems.

Fiat money is paid in exchange for QNT tokens, and licenses are paid for online. Paying for reads and writes, however, does not require users to lock their QNT tokens. To access the Quant ecosystem and interact with Overledger, users must hold a certain amount of QNT tokens, because the QNT token is designed to facilitate the creation, use, and access of multi-chain applications (mApps) on the Quant network.

How does Quant work?

Quant is a turnkey solution that integrates various blockchains and enterprise software without the need for new infrastructure. Different blockchains interact with each other using Distributed Ledger Technology (DLT) and Application Programming Interfaces (APIs) in the Overledger API Gateway.

How to buy the QNT coin?

Quant is currently listed on numerous cryptocurrency exchanges, including Binance, Bybit, BingX, and KuCoin. People who want to invest in the project must open an account on one of the exchanges where it is traded.

Affiliate Disclosure:

The links contained in this product review may result in a small commission, at no additional cost to you, if you opt to purchase the recommended product. This goes toward supporting our research and editorial team, and please know we only recommend high-quality products.

0 notes

Note

rising sophomore here! how do i break into quant? which firms should i look at?

Response from Heisenberg:

Maintain a good GPA (ideally 3.7+), have 1-2 interesting side projects that demonstrate your interest in quant, and be in 1-2 clubs that demonstrate it too.

For the interviews, be really good at probability questions and brainteasers. There are a lot of resources on the internet that cover this material.

For quant dev, be good at LeetCode (solve most Mediums relatively quickly and easily). If you have the time, get really good at competitive programming. 1500+ on Codeforces is probably sufficient. Firms like Hudson River Trading love that shit (and you will absolutely murder any coding interview question you'll encounter).
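To give a feel for the probability questions mentioned above, here is a classic one worked two ways: how many fair coin flips do you expect until you see two heads in a row? First-step analysis gives the exact answer, and a quick Monte Carlo run checks it. This is an illustrative sketch; the function names are hypothetical.

```python
import random

# Exact answer by first-step analysis. Let E0 = expected flips with no current
# head streak, E1 = expected flips after one head:
#   E0 = 1 + E1/2 + E0/2   (heads -> streak of 1, tails -> back to start)
#   E1 = 1 + 0/2  + E0/2   (heads -> done,         tails -> back to start)
# Substituting E1 into the first equation gives E0 = 6 (and E1 = 4).


def flips_until_two_heads(rng: random.Random) -> int:
    """Flip a fair coin until two heads appear in a row; return the number of flips."""
    flips, streak = 0, 0
    while streak < 2:
        flips += 1
        streak = streak + 1 if rng.random() < 0.5 else 0
    return flips


def simulate(trials: int = 100_000, seed: int = 0) -> float:
    """Average flip count over many simulated games."""
    rng = random.Random(seed)
    return sum(flips_until_two_heads(rng) for _ in range(trials)) / trials


print(simulate())  # close to 6
```

Interviewers usually want the first-step derivation; the simulation is how you sanity-check it on paper-free projects.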

Below is a list of firms that you might find interesting.

Akuna Capital

Allston Trading

Ansatz Capital

AQR Capital Management

Arrowstreet Capital

Capstone Investment Advisors

Citadel / Citadel Securities

Point72 / Cubist

D.E. Shaw

DRW

Engineers Gate

Five Rings

Flow Traders

G-Research

GSA Capital

Headlands Tech

Hudson River Trading

IMC

Jane Street

Mako Trading

Marshall Wace

Maven Securities

Millennium Management

Old Mission Capital

Optiver

PDT Partners

Peak6

QuantLab

Seven Eight Capital

Squarepoint

Susquehanna International Group

Tower Research Capital

TransMarket Group

Two Sigma

Valkyrie Trading

Vatic Labs

Virtu

Voleon

Voloridge Investment Management

Weiss Asset Management

Wolverine

WorldQuant

XTX Markets

Jump Trading

HAP Capital

GTS

Volant

Balyasny

ExodusPoint

Tudor

Schonfeld

Verition

Centiva

Walleye

ART Advisors

Coatue

Maverick

Third Point

Brevan Howard

Guggenheim

Highbridge

Laurion

Teza

Quadrature

Qube

Man Numeric

0 notes

Note

rip lero and quantz they are just stuck at this point xD. Hansung spoiling hana while evankhell and haru are wreaking havoc somewhere xD

And Lero ro is just there trying to hold everything together. He's the glue of everything. Poor guy needs someone to help him with everything. I'd carry headache medicine for our poor Pikachu boy.

#tower of god#fanfiction#tower of god x reader#tower of god brainworms#hansung#hansung yu#yu hansung#evankhell#evansung#lero ro#quant blitz#quant

5 notes

Text

youtube

1 note

Link

A great read! https://medium.com/@crypto101bylauriesuarez/sec-charges-quantstamp-28-million-for-conducting-an-unregistered-initial-coin-offering-1c8a43a23b66

0 notes

Text

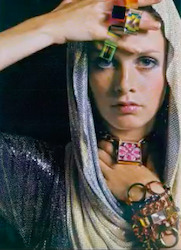

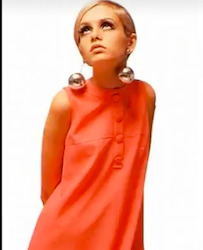

"Twiggy Modeling Biba" (circa 1960-70) and "Twiggy Modeling Mary Quant" (1966) presented in “A History of Jewellery: Bedazzled (part 9: Contemporary Jewellery 1960s into the 21st Century)” by Beatriz Chadour-Sampson - International Jewellery Historian and Author - for the V&A Academy online, April 2024.

#conferences#inspirations bijoux#style#Biba#Twiggy#Quant#ChadourSampson#V&AAcademy#Victoria&AlbertMuseum

0 notes

Text

Python: reduce an AI model’s size with quantization and speed it up

Quantizing a model means reducing the number of bits used to represent its weights and activations, for example storing 32-bit floating-point values as 8-bit integers. This makes the model smaller and often faster, so it can run more easily on smaller computers and devices. However, with fewer bits the model cannot represent its parameters as precisely as before, which can cost some accuracy or quality. Quantization is therefore a trade-off between size and speed on one hand and accuracy or quality on the other, but overall it is a useful technique for making machine learning more accessible and efficient.
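To make the size/precision trade-off concrete, here is a minimal NumPy sketch of symmetric per-tensor int8 quantization of one weight matrix: a single scale maps the largest absolute weight to 127, values are rounded to integers, and dequantizing shows the rounding error. This is an illustration of the idea only; it is not PyTorch's internal implementation, and the function names and the random 768x768 matrix are assumptions made here.

```python
import numpy as np


def quantize_int8(w: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: one scale maps max |w| to 127."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal((768, 768)).astype(np.float32)  # stand-in weight matrix
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print("bytes:", w.nbytes, "->", q.nbytes)  # int8 storage is 4x smaller than float32
print("max abs error:", float(np.abs(w - w_hat).max()), "bound (scale/2):", scale / 2)
```

The 4x saving is exact for the stored weights, while the per-weight error is bounded by half the scale; a single outlier weight inflates the scale for the whole tensor, which is why per-channel schemes are often preferred.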

```python
import torch
import torch.nn as nn
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the GPT-2 model and tokenizer
model_name = 'gpt2'
tokenizer = GPT2Tokenizer.from_pretrained(model_name)
model = GPT2LMHeadModel.from_pretrained(model_name)
model.eval()

# Generate some sample text
prompt = "The quick brown fox jumps over the lazy dog."
input_ids = tokenizer.encode(prompt, return_tensors='pt')
output = model.generate(input_ids, max_length=100, do_sample=True, num_return_sequences=1)
sample_text = tokenizer.decode(output[0], skip_special_tokens=True)
print("Sample text: ", sample_text)

# Quantize the model down with dynamic quantization: weights of the listed module
# types are stored as int8 and activations are quantized on the fly at inference.
# The second argument is the set of module types to quantize, not a config dict.
# Caveat: Hugging Face's GPT-2 implements most projections with transformers' Conv1D
# rather than nn.Linear, so here mainly lm_head is quantized.
quantized_model = torch.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

# Save the quantized model
torch.save(quantized_model.state_dict(), 'quantized_model.pt')

## ------------------------------- Generate using Quantized Model
# A quantized state dict only fits a model with the same quantized layers,
# so rebuild that structure from a fresh copy before loading the weights
quantized_model = torch.quantization.quantize_dynamic(
    GPT2LMHeadModel.from_pretrained(model_name), {nn.Linear}, dtype=torch.qint8
)
quantized_model.load_state_dict(torch.load('quantized_model.pt'))
quantized_model.eval()

# Generate some sample text using the quantized model
output_quantized = quantized_model.generate(input_ids, max_length=100, do_sample=True, num_return_sequences=1)
sample_text_quantized = tokenizer.decode(output_quantized[0], skip_special_tokens=True)
print("Sample text (quantized): ", sample_text_quantized)
```

#python#quantize#quantization#quant#ai model#model#ai#deep learning#compress#reduce bits#shrink bits#lower bit depth#mobile model#mobile#optimize model#optimize#optimization#model optimization#speed up#turbo#gpt#gpt-2#chatgpt#llama#pytorch#torch#nn#text generation#generative#generative text

1 note
