#james baussmann

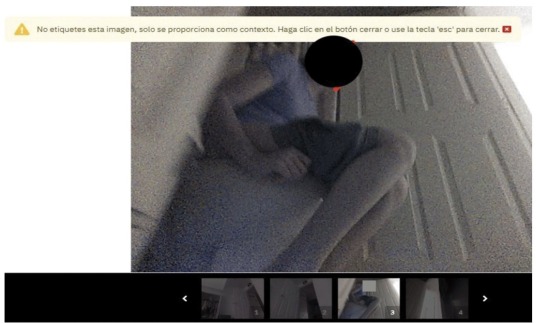

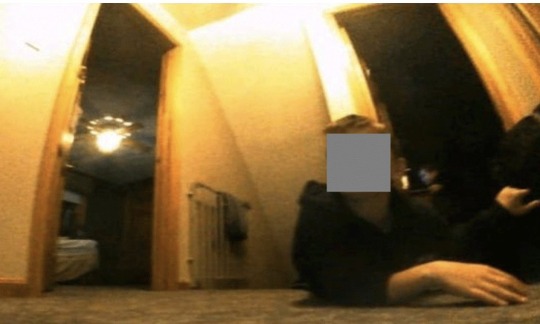

In the fall of 2020, gig workers in Venezuela posted a series of images to online forums where they gathered to talk shop. The photos were mundane, if sometimes intimate, household scenes captured from low angles, including some you really wouldn’t want shared on the Internet.

In one particularly revealing shot, a young woman in a lavender T-shirt sits on the toilet, her shorts pulled down to mid-thigh.

The images were not taken by a person, but by development versions of iRobot’s Roomba J7 series robot vacuum. They were then sent to Scale AI, a startup that contracts workers around the world to label audio, photo, and video data used to train artificial intelligence.

They were the sorts of scenes that internet-connected devices regularly capture and send back to the cloud—though usually with stricter storage and access controls. Yet earlier this year, MIT Technology Review obtained 15 screenshots of these private photos, which had been posted to closed social media groups.

The photos vary in type and in sensitivity. The most intimate image we saw was the series of video stills featuring the young woman on the toilet, her face blocked in the lead image but unobscured in the grainy scroll of shots below. In another image, a boy who appears to be eight or nine years old, and whose face is clearly visible, is sprawled on his stomach across a hallway floor. A triangular flop of hair spills across his forehead as he stares, with apparent amusement, at the object recording him from just below eye level.

iRobot, the world’s largest vendor of robotic vacuums, which Amazon has agreed to acquire for $1.7 billion in a deal that is still pending, confirmed that these images were captured by its Roombas in 2020.

Ultimately, though, this set of images represents something bigger than any one company’s actions. They speak to the widespread, and growing, practice of sharing potentially sensitive data to train algorithms, as well as the surprising, globe-spanning journey that a single image can take: in this case, from homes in North America, Europe, and Asia to the servers of Massachusetts-based iRobot, from there to San Francisco–based Scale AI, and finally to Scale’s contracted data workers around the world (including, in this instance, Venezuelan gig workers who posted the images to private groups on Facebook, Discord, and elsewhere).

Together, the images reveal a whole data supply chain—and new points where personal information could leak out—that few consumers are even aware of.

(continue reading)

#politics#james baussmann#scale ai#irobot#amazon#roomba#privacy rights#colin angle#privacy#data mining#surveillance state#mass surveillance#surveillance industry#1st amendment#first amendment#1st amendment rights#first amendment rights#ai#artificial intelligence#iot#internet of things
