#data de identification techniques

Text

The Rafale's debut mission under the F4.1 standard occurred on Friday, February 16, 2024, at Air Base 118 in Mont-de-Marsan. (Picture source: AAE)

@aircraftrecognition via X

French Rafale F4.1 fighter jet executes first operational flight

Wednesday, 28 February 2024 09:30

On February 23, 2024, the French Air and Space Force (AAE) revealed that the Rafale F4.1 fighter jet had completed its inaugural operational sortie in support of the 30th Fighter Wing's training exercises, affirming its readiness under the new standard. The aircraft's debut mission under the F4.1 standard occurred on Friday, February 16, 2024, at Air Base 118 in Mont-de-Marsan.

Follow Air Recognition on Google News at this link

Ezoic

French Rafale F4.1 fighter jet 925 001 The Rafale's debut mission under the F4.1 standard occurred on Friday, February 16, 2024, at Air Base 118 in Mont-de-Marsan. (Picture source: AAE)

Ezoic

After being delivered to the 01.030 "Côte d'Argent" fighter and experimentation squadron stationed at Air Base 118 a year prior, the Rafale F4.1 has been utilized for training missions by the armed forces and has now achieved its Initial Operational Capability (IOC), enabling its deployment for operational tasks, including air policing duties.

EzoicThe final phase will encompass achieving Full Operational Capability (FOC), anticipated upon the full integration of all capacity enhancements and new equipment associated with the standard. These enhancements encompass a helmet-mounted sight and a new 1,000 kg armament, improvements to air-to-air and air-to-ground targeting systems, enhancements to the self-protection system, and integration of the Talios targeting pod. In the future, all Rafale aircraft within the French Air and Space Force and the Navy will undergo a gradual retrofit to meet the new F4.1 standard.

Initial testing of the Rafale F4.1, supervised by the DGA Flight Tests Center, occurred in April 2021 at Istres. Further tests in electronic warfare, aircraft survivability, and weaponry were conducted between 2021 and 2023 at various DGA facilities, including the Information Mastery, Aeronautical Techniques, and Missile Testing centers.

The French Defense Procurement Agency (DGA), Direction générale de l'Armement, declared the qualification of the Rafale combat aircraft's F4.1 standard on March 13, 2023, indicating its readiness for collaborative aerial combat. The F4.1 standard incorporates capabilities such as collaborative air combat, integration of the 1000 kg AASM weapon, and enhanced cyber threat resilience. This standard brings significant enhancements in aerial combat capabilities, including integration of the Scorpion helmet sight, improvements in firing control for the Meteor missile, advancements in passive threat detection algorithms, and increased data exchange capabilities among Rafale aircraft.

Additionally, the F4.1 standard introduces the integration of the 1,000 kg AASM (Air-to-Ground Modular Weapon) with GPS/laser guidance, improved cyber threat protection, new features for sensors like Talios, OSF, and RBE2, and initial developments in connectivity. Alongside these advancements, various equipment and weapons are introduced onto the Rafale, including a new IRST for the Front Sector Optronics to enhance passive target detection and identification against low signature aircraft, upgrades to the RBE2 AESA radar with new SAR and GMTI/T modes for improved radar imagery and ground target detection, collaborative modes to enhance detection, tracking, and firing capabilities, the introduction of the Thales Scorpion helmet-mounted display and larger cockpit displays for target designation, and expansion of the Hammer family of munitions to include 1,000 kg variants, offering stand-off capabilities against larger or hardened targets.

Furthermore, the introduction of the F4.1 Standard will continue over the decade, incorporating new functionalities and equipment such as the MICA NG air-to-air missile, enhancements in connectivity with communication server and Satcom satellite link, and a digital jammer for the SPECTRA self-defense/electronic warfare suite.

4 notes

·

View notes

Text

WHOO!

I get to teach Forensic Sciences again next semester! With a prof I really like and have previously TA’ed for! It’s a super fun course - Intro to Criminalistics - so it’s a little bit of everything. Prints, bones, blood, DNA, drugs, mapping, and more. She’s also researching Forensic Anth, so we can dork out about bones.

AND I’m teaching Crim Theory, too, which is a designated writing-intensive course. I have not worked with the prof for this course, but I hear she’s awesome. Not only do I get to dive into the history of Criminology again, but go absolutely ham on essay-writing technique and tips. (YOU WILL LEARN TO STACK AN ARGUMENT. I can’t guarantee you will learn to love APA, but you will come to grips with it and develop a personalized checklist with samples of in-text citations and title page contents, in order.)

Lesson 1: Who Are You? (Who who? Who who?)

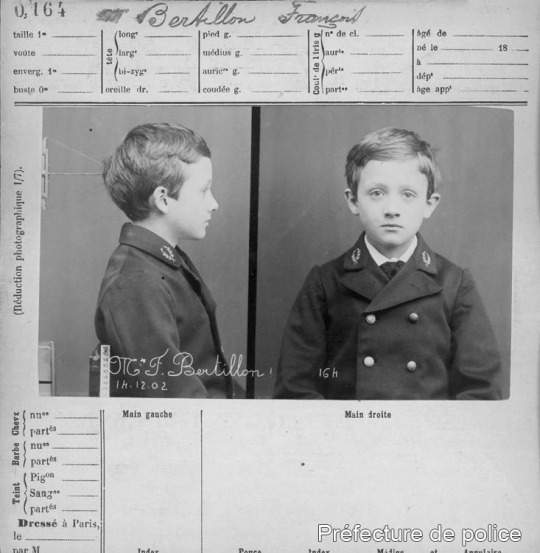

[Text ID: An antique parody of a double mug shot, in sepia tones. A 23-month-old infant with curly light hair sits in a plain wooden high chair, a winsome expression on his face. He is dressed in a typical child’s white frock of the period with a frilled collar, sleeves and skirts. The first panel is in profile, with the child facing right. The second panel is face-on. The inscription reads: François Bertillon, âgé de 23 mois. 17 - oct - 93.]

This infantile mug shot, now in the MOMA collection, is commonly known as: “François Bertillon, 23 months (Baby, Gluttony, Nibbling All the Pears from a Basket)”

Alphonse Bertillon, a French police officer from the late 1800s, sought to revolutionize criminal identification by statistical means. He developed a system, which he called Bertillonage, of body measurements - anthropometry - that could be tabulated and compared with others, under the assumption that no two people would share the exact same measurements.

Now, this idea was an offshoot of biological determinism, a theory that the body itself predicted behaviour and the state of the mind. Biological determinism was actually a revolution in its day: it represented a split from the previous belief that aberrant behaviour and physical infirmity were proof of demonic influence and a directly-involved God. However, biological determinism, itself an offshoot of Platonic essentialism, led to such notions as Lombroso’s “atavistic”-bodied criminal with a hulking body, a lowered brow and a “stupid stare”, as well as pseudo-science parlour fun like phrenology. Not to mention the blatant eugenicism and superior-more-developed-race blather that still persists in many branches of social sciences.

But two hundred and some years into the European Enlightenment, empirical science was moving slowly towards the acceptance of provable, testable hypotheses based in reason and repetition. So Bertillon reasoned that, if you went about the task scientifically, with enough detail, you ought to be able to prove that no two people had the same bodies, and could therefore be told apart. (And just maybe prove that you could tell a criminal from looking at them.)

But no. The collection of Bertillonage data was incredibly painstaking. Subjects had to have a long series of measurements taken, in the exact same postures, using the same equipment. Then, the subjects were required to have photographs taken, from specific angles: the first mug shots. Bertillion spent years perfecting his photographic system. The above photos of his little nephew François are just one example of Bertillon bringing his whole family into the process - an excuse to combine his work with his hobby of photography and his love of his close-knit family.

(Note the implication here: “My family is the control group, the ideal specimens. Normal people look and behave like us.” When thinking about data, always ask yourself: who’s taking the photographs? Who’s collecting the samples, and from where, and how, and why those samples in particular?)

Bertillonage didn’t take off. People have too many similarities as well as differences, and the human error involved in the measurements and photography was too great. But he did create a stunning longitudinal study of his family and friends over a couple of decades, as well as of local criminals. Here’s François a few years later. Can you see details that persist through out his aging? How would you describe them?

You can see here some of the prescribed measurements in the Bertillonage system - and they didn’t have spreadsheets to look up and compare cases!

Bertillon’s underlying idea had merit. No two people are exactly alike. Even identical twins develop epigenetic differences over time. Fingerprints form in the womb, with randomized development due to the uterine environment. We can only measure these things with technical tools - low tech like magnifying glasses, high tech like digitized pattern recognition and molecular amplification. But we’ll get to that later.

Before you leave! Your homework this week is to write a description of yourself that is detailed enough that it would help investigators identify your remains. Under 500 words please. Point form is fine. Post to Canvas by midnight Sunday.

23 notes

·

View notes

Text

Geocoding: An Overview

Geocoding is a process used in geographic information systems (GIS) and mapping applications to convert addresses or location descriptions into geographic coordinates, such as latitude and longitude. This conversion enables the precise identification and representation of locations on maps, allowing users to visualize and analyze spatial data effectively. Geocoding plays a critical role in various industries and applications, ranging from navigation and logistics to urban planning and market analysis. Let's delve into an overview of geocoding:

Basic Principles of Geocoding: Geocoding involves translating human-readable addresses or location descriptions, such as street addresses, postal codes, landmarks, or place names, into machine-readable geographic coordinates. This process relies on reference data sources, algorithms, and geospatial technologies to accurately determine the spatial coordinates corresponding to a given address or location.

Components of Geocoding: Geocoding typically consists of two main components: address parsing and address matching. Address parsing involves breaking down an address into its individual components, such as street name, city, state, and postal code. Address matching involves comparing parsed address components against a reference database or spatial index to identify the corresponding geographic coordinates.

Reference Data Sources: Geocoding relies on reference data sources, such as street databases, postal directories, and geographic information systems (GIS), to map addresses to geographic coordinates. These reference datasets contain comprehensive information about streets, addresses, landmarks, and administrative boundaries, enabling accurate and reliable geocoding results.

Algorithms and Geospatial Technologies: Geocoding algorithms and geospatial technologies play a crucial role in the geocoding process. These include techniques such as address standardization, fuzzy matching, spatial indexing, and reverse geocoding. Address standardization ensures that addresses are formatted consistently and adhere to postal conventions, while fuzzy matching accounts for variations and discrepancies in address input.

Forward and Reverse Geocoding: Geocoding can be categorized into two main types: forward geocoding and reverse geocoding. Forward geocoding involves converting addresses or location descriptions into geographic coordinates, while reverse geocoding involves determining the nearest address or location description corresponding to a set of geographic coordinates. Both types are essential for various mapping and spatial analysis applications.

Applications of Geocoding: Geocoding has diverse applications across multiple industries and domains. In navigation and mapping applications, geocoding enables accurate route planning, location-based services, and real-time vehicle tracking. In logistics and transportation, geocoding facilitates efficient delivery routing, fleet management, and supply chain optimization. In urban planning and development, geocoding supports land use planning, site selection, and infrastructure management.

Market Analysis and Business Intelligence: Geocoding is widely used in market analysis, business intelligence, and location-based marketing. By geocoding customer addresses or demographic data, businesses can identify target markets, analyze market trends, and optimize sales territories. Geocoding also enables businesses to visualize customer distribution, assess market penetration, and identify areas for expansion.

Emergency Response and Public Safety: Geocoding plays a critical role in emergency response and public safety applications. Emergency dispatch systems use geocoding to identify the location of emergency incidents, dispatch appropriate response units, and route emergency vehicles efficiently. Geocoding also helps in disaster management, evacuation planning, and resource allocation during emergencies.

Challenges and Considerations: Despite its many benefits, geocoding poses several challenges and considerations. Accuracy and completeness of reference data, variations in address formats and conventions across regions, and data privacy and security concerns are some of the key challenges associated with geocoding. Additionally, geocoding may be subject to errors and discrepancies, particularly in densely populated or rapidly changing urban areas.

Future Trends and Innovations: The field of geocoding continues to evolve with advancements in technology and data analytics. Emerging trends include the integration of artificial intelligence (AI) and machine learning (ML) techniques for enhanced address matching and geocoding accuracy. Additionally, the proliferation of location-based services and Internet of Things (IoT) devices is expected to drive further innovation in geocoding applications and solutions.

In summary, geocoding is a fundamental process in GIS and mapping applications, enabling the conversion of addresses or location descriptions into geographic coordinates. By leveraging reference data sources, algorithms, and geospatial technologies, geocoding facilitates accurate location identification, spatial analysis, and decision-making across various industries and domains. Despite its challenges, geocoding continues to play a vital role in enabling navigation, logistics, market analysis, emergency response, and other critical applications in our increasingly interconnected world.

youtube

SITES WE SUPPORT

Medicare Marketing Leads & Address Verification API – Wordpress

SOCIAL LINKS

Facebook

Twitter

LinkedIn

Instagram

Pinterest

0 notes

Text

Unveiling the Shield: Understanding Data De-identification

In an era where data fuels innovation and shapes industries, the protection of personal information is paramount. With increasing concerns about privacy breaches and data misuse, organizations are under immense pressure to safeguard sensitive information while still leveraging its potential for insights. This is where the concept of data de-identification emerges as a critical tool in the arsenal of data protection strategies.

What is Data De-identification?

Data de-identification, also known as anonymization or pseudonymization, is the process of removing or altering personally identifiable information (PII) from datasets to make it impossible or at least more difficult to identify individuals. This involves either stripping data of direct identifiers (such as names and social security numbers) or modifying them in a way that renders them meaningless, while still maintaining the utility of the data for analysis and research purposes.

The Importance of Data De-identification

The significance of data de-identification cannot be overstated, especially in light of stringent data protection regulations like the General Data Protection Regulation (GDPR) and the Health Insurance Portability and Accountability Act (HIPAA). Compliance with these regulations requires organizations to adopt robust data privacy measures, and de-identification is often a crucial component of such measures.

By de-identifying data, organizations can:

Mitigate Privacy Risks: De-identifying data reduces the risk of unauthorized access and misuse, thus safeguarding individuals' privacy rights.

Facilitate Data Sharing: De-identified data can be shared more freely for research, collaboration, and secondary usage without infringing on individuals' privacy.

Promote Innovation: With access to de-identified datasets, researchers and data scientists can innovate and develop new insights without compromising individuals' privacy.

Enhance Trust: Demonstrating a commitment to data privacy through de-identification practices enhances trust among consumers, partners, and regulatory bodies.

Methods of Data De-identification

There are several techniques employed in the process of data de-identification, including:

Removing Identifiers: This involves straightforward removal of direct identifiers such as names, addresses, social security numbers, etc., from the dataset.

Masking: Masking involves replacing or obscuring certain identifiable elements within the data with non-sensitive placeholders or pseudonyms.

Generalization: Generalization involves replacing specific values with a broader category. For example, replacing exact ages with age ranges.

Data Perturbation: This technique involves introducing random noise or alterations to the data to prevent re-identification while still maintaining its analytical value.

Data Swapping: Data swapping involves exchanging certain attributes between records, making it difficult to trace specific information back to an individual.

Challenges and Considerations

While data de-identification is a powerful tool for privacy protection, it is not without its challenges and considerations:

Risk of Re-identification: Even de-identified data can sometimes be re-identified through various means, such as data linkage or inference attacks.

Maintaining Data Utility: Striking the right balance between preserving data utility for analysis and protecting privacy can be challenging. Over-de-identification can render data useless for its intended purposes.

Evolution of Data: Data evolves over time, and what may be considered de-identified today may become re-identifiable in the future as new data sources and analytical techniques emerge.

Regulatory Compliance: Meeting the requirements of data protection regulations while still deriving value from de-identified data requires careful navigation of legal and ethical considerations.

Conclusion

In an age where data is both a valuable asset and a potential liability, the practice of data de-identification emerges as a crucial safeguard for protecting individuals' privacy rights while still enabling data-driven innovation. By adopting robust de-identification techniques and adhering to privacy best practices, organizations can navigate the complex landscape of data privacy regulations while harnessing the full potential of data for the greater good.

In essence, data de-identification serves as a shield, preserving the anonymity of individuals within datasets, and unlocking new possibilities for research, analysis, and collaboration in the data-driven world of today and tomorrow.

0 notes

Text

Streamlining Success: Understanding the Data Cleansing Process

In today's digital age, data has become the lifeblood of businesses, driving decisions, innovations, and strategies. However, the sheer volume of data generated daily often leads to inconsistencies, errors, and redundancies. This necessitates a crucial procedure known as data cleansing, which ensures that information remains accurate, reliable, and actionable. Let's delve into the intricacies of the data cleansing process and its significance.

Defining Data Cleansing: Data cleansing, also referred to as data scrubbing or data cleaning, is the methodical process of identifying and rectifying errors, inconsistencies, and inaccuracies within a dataset. These discrepancies can include misspellings, duplicates, incomplete records, outdated information, and formatting errors.

Importance of Data Cleansing: Clean and accurate data is vital for informed decision-making. It enhances the credibility of analytics, improves operational efficiency, and aids in generating reliable insights. Additionally, it ensures compliance with regulatory standards like GDPR, CCPA, and HIPAA, reducing risks associated with incorrect or outdated information.

Steps in the Data Cleansing Process:a. Data Assessment: Begin by assessing the existing dataset to identify issues and understand its structure and quality.b. Data Standardization: Normalize formats, remove special characters, and ensure uniformity in naming conventions to streamline data for consistency.c. De-duplication: Identify and eliminate duplicate entries to maintain data integrity and accuracy.d. Error Correction: Address misspellings, inconsistencies, and inaccuracies by leveraging algorithms or manual review to rectify errors.e. Data Validation: Validate data against predefined rules, ensuring its adherence to specified criteria and enhancing its reliability.f. Data Enrichment: Supplement existing data with additional information from reliable sources to enhance its value and completeness.

Tools and Technologies: Various software tools and technologies facilitate the data cleansing process. These tools utilize algorithms, machine learning, and artificial intelligence to automate tasks such as duplicate detection, error identification, and standardization.

Continuous Improvement: Data cleansing is an iterative process. Regularly revisiting and refining the process ensures that data remains accurate and up-to-date. Establishing protocols for ongoing data maintenance is essential for sustained data integrity.

In conclusion, data cleansing is a fundamental aspect of data management, ensuring that organizations work with accurate, reliable, and actionable information. By implementing a structured data cleansing process and utilizing appropriate tools and methodologies, businesses can unlock the full potential of their data, leading to more informed decision-making and improved operational efficiency.

For more info visit here:-

data cleansing techniques

tools for data cleaning

crm data cleaning

0 notes

Text

Process of the scan to BIM

INTRODUCTION

The technique known as “Scan to BIM,” or “Scan to Building Information Modeling,” entails creating a Building Information Model (BIM) from point cloud data gathered through 3D laser scanning. The architecture, engineering, and construction (AEC) sector uses this methodology extensively to improve the precision and effectiveness of project design, construction, and management.

An overview of the Scan to BIM procedure is provided below:

Understanding Scan to BIM:

1. Laser Scanning:

Gather Information: Accurate measurements of real-world settings and structures are obtained through the use of 3D laser scanners.

Point Cloud: An extensive dataset of three-dimensional (3D) points that represent the surfaces of scanned objects is produced by the scanner.

Process of the scan to BIM | Virtuconscantobim

2. Data Registration:

Alignment: It is possible to obtain several scans from various angles. These scans are aligned and combined into a single, coherent point cloud by data registration.

3. Point Cloud Processing:

De-noise and eliminate superfluous data points.

Decimation: To make handling easier, decrease the density of the point cloud.

Colorization: Based on scanned surfaces, assign colors to points.

4. Conversion to BIM:

Import Point Cloud Data into BIM Programs: Revit, AutoCAD, or ArchiCAD are three examples of programs that may import Point Cloud data.

Modeling: BIM experts build a 3D virtual model of the scanned area by referring to the point cloud.

5. Advantages of BIM to Scan:

Precision: Laser scanning reduces errors in the modeling process by providing extremely exact measurements.

Efficiency: Provides a thorough reference for design and analysis, which speeds up the modeling process.

As-Built Accuracy: Assures that the virtual model closely resembles the physical structure’s as-built circumstances.

Clash Detection: Assists in finding conflicts between design components and pre-existing architecture.

6. Uses:

Refitting and Renovation: Perfect for projects involving pre-existing buildings where precise as-built data is essential.

Facility administration: Facilitates the development of intricate models for continuing upkeep and administration of facilities.

7. Challenges:

Data Size: Managing sizable point cloud datasets might present difficulties.

Expertise: Accurate data processing and modeling call for qualified specialists.

8. Future Trends:

Automated: Artificial intelligence developments could result in more automated scan-to-BIM workflows.

Integration with AR/VR: For improved visualization and collaboration, BIM models created from scanning data can be incorporated into augmented reality (AR) and virtual reality (VR) apps.

Benefits of the scan to bim

For many stakeholders in the architectural, engineering, and construction (AEC) sector, the Scan to BIM process has many advantages. Here are a few main benefits:

1. Precision:

Precision: By using laser scanning technology, measurements can be made with extreme precision and detail, guaranteeing that the final BIM model accurately captures the scanned environment’s as-built circumstances.

2. Effectiveness:

Time Savings: By offering a thorough and precise reference for design and analysis, Scan to BIM speeds up the modeling process. The amount of time needed for conventional manual measurements and modeling can be greatly decreased by doing this.

Process of the scan to BIM | Virtuconscantobim

3. As-Built Documentation:

Current circumstances: By precisely capturing a building or structure’s current state, the procedure makes it possible to document and comprehend existing circumstances more effectively. For projects involving expansion, retrofitting, or renovation, this is essential.

4. Clash Detection and Coordination:

Finding Conflicts: Early in the project lifecycle, clash detection is made easier by BIM models created from scanning data, which aids in the identification of any conflicts between new designs and pre-existing structures.

5. Improved Decision-Making:

Data-Driven Insights: Precise as-built data minimizes errors and rework by facilitating improved decision-making during the design and construction phases.

6. Visualization and Communication:

Improved Communication: Project stakeholders may more easily grasp the needs and scope of the work thanks to the 3D representation of scanned data in BIM models.

7. Cost Savings:

Minimized Rework: Scan to BIM helps cut down on rework by identifying conflicts and inconsistencies early on, which lowers project expenses overall.

8. Facility Management:

Lifecycle Management: Better maintenance planning and operations are made possible by the use of BIM models created from scanning data in continuing facility management.

9. Safety Improvements:

Remote Scanning: Laser scanning can be done remotely in hazardous or difficult-to-reach regions, saving staff from having to go into potentially dangerous situations.

10. Historical Documentation:

Archival Uses: Scan to BIM offers an extensive digital archive of current constructions, acting as important historical records for later use.

11. Adaptability to Changes:

Iterations in Design: BIM models provide simple revisions and adjustments, supporting modifications in design and guaranteeing that the virtual model stays in sync with any changes made to the real structure.

12. Integration with Other Technologies:

Integration of AR/VR: BIM models created from scanned data can be included in applications for virtual reality (VR) and augmented reality (AR), providing immersive experiences for teamwork and project visualization.

Process of the scan to BIM | Virtuconscantobim

Drawbacks of scan to bim

Although there are many advantages to using Scan to BIM, there are also possible disadvantages and difficulties. To make wise judgments throughout project execution, it is imperative to be aware of these limits. The following are some Scan to BIM drawbacks:

1. Complexity and Learning Curve:

Expertise: Scan-to-BIM implementation that works well calls for expertise in point cloud processing, laser scanning, and BIM modeling. To achieve correct outcomes, one must possess training and knowledge.

2. Costs:

Software and Equipment: Purchasing BIM software and laser scanning equipment can need a substantial upfront investment. The cost of employing qualified experts also raises the total cost.

3. Data Management:

Big Data Sets: The point cloud data produced by laser scanning can be enormous, necessitating a significant amount of computing power and storage for efficient handling and processing.

4. Data Processing Time:

Time-consuming: Project schedules may be impacted by the time-consuming nature of processing big point cloud datasets. The entire processing time is increased by the requirement for extensive data cleaning and decimation.

5. Integration Challenges:

Compatibility Problems: Compatibility problems might arise when integrating point cloud data into BIM software. It can be difficult to ensure smooth data movement between several software platforms.

6. Limited Automation:

Manual Intervention: Despite improvements, there is still a chance that some steps in the Scan to BIM process may need to be done by hand. This limits the process’s ability to be fully automated and efficient.

7. Environmental Factors:

Scanning Conditions: Unfavorable environmental factors, like dim lighting or bad weather, might affect the quality of the results of laser scanning and, consequently, the precision of the point cloud data that is produced.

8. Resolution and Detail:

Limited Detail: The point cloud’s level of detail might not always be able to capture minute details or complex elements, which could cause errors in the BIM model.

9. Lack of Standards:

Industry Standards: Variations in the quality and accuracy of deliverables may arise from the lack of set norms for Scan to BIM processes. Although there are ongoing efforts to standardize, they might not be adopted by all.

10. Project Size and Complexity:

Suitability: For smaller or less complex projects where standard surveying and modeling techniques are adequate, scanning to BIM might not be essential or cost-effective.

11. Privacy and Legal Concerns:

Data privacy: Sensitive information may be captured by scanning existing buildings, raising privacy concerns. It is important to keep ethical and legal issues in mind, particularly while scanning populated locations.

12. Maintenance Challenges:

Updates and Calibration: To provide precise and dependable results, laser scanning equipment needs routine calibration and maintenance. Data acquisition problems might result from maintenance neglect.

Conclusion:

In conclusion, Scan to BIM represents a transformative approach in the architecture, engineering, and construction (AEC) industry, leveraging advanced technology to bridge the gap between physical structures and virtual models. While the process offers numerous benefits, including unparalleled accuracy, improved efficiency, and enhanced decision-making, it is essential to consider potential drawbacks and challenges.

The complex nature of Scan to BIM, requiring specialized skills and substantial upfront investments, may pose barriers for some projects. Challenges related to data management, processing time, and integration with existing workflows must be carefully navigated. Additionally, issues such as environmental factors, limited automation, and the absence of universal standards can impact the overall effectiveness of the process.

Despite these challenges, ongoing advancements in technology, increased industry expertise, and the development of standards are addressing many of the drawbacks associated with Scan to BIM. The potential for improved collaboration, reduced rework, and enhanced project outcomes make it a compelling choice for projects where accurate as-built information and 3D modeling are critical.

As Scan to BIM continues to evolve, it is essential for AEC professionals to stay abreast of technological developments, invest in training and expertise, and carefully evaluate the suitability of the approach for specific projects. By doing so, industry stakeholders can maximize the benefits of Scan to BIM, contributing to more efficient, accurate, and collaborative project workflows in the ever-evolving landscape of construction and design.

#Virtucon#VirtuconEngineeringLLC#VirtuconScantoBIM#CADservicesprovider#AECindustry#CAD&BIM#Architecrute#2dAsbuiltdrawings

0 notes

Text

Ethical Considerations in Data Science: Balancing Innovation and Privacy

In the rapidly evolving landscape of technology, data science has emerged as a powerful tool driving innovation across various industries. From personalized recommendations to predictive analytics, the capabilities of data science are seemingly boundless. However, as organizations harness the power of data to fuel their advancements, ethical considerations become paramount. Striking a delicate balance between innovation and privacy is crucial to ensure the responsible and sustainable development of data-driven technologies.

The Ubiquity of Data Collection

In the digital age, data is ubiquitous, generated at an unprecedented pace from various sources such as social media, smart devices, and online transactions. While this influx of data fuels the insights that drive innovation, it raises ethical questions regarding the extent and purpose of data collection. Organizations must carefully evaluate the necessity and proportionality of collecting personal information, considering the potential impact on individual privacy.

Informed Consent and Transparency

Ethical data science practices demand transparency and informed consent. Individuals should be aware of what data is being collected, how it will be used, and have the option to opt out. Clear and concise communication about data practices is essential to empower individuals to make informed decisions about sharing their personal information. Ensuring transparency builds trust, a cornerstone of ethical data handling.

Fairness and Bias in Algorithms

Algorithms are the backbone of data science, but they are not immune to biases. Biased algorithms can perpetuate and even exacerbate existing social inequalities. Ethical data scientists must strive for fairness in algorithmic decision-making, identifying and mitigating biases to prevent discriminatory outcomes. Implementing fairness-aware models and continuous monitoring can help address these concerns.

Anonymization and De-Identification

Protecting privacy often involves anonymizing or de-identifying data to prevent the identification of individuals. However, with advances in re-identification techniques, the effectiveness of these methods is in question. Striking the right balance between preserving anonymity and maintaining data utility is a delicate task. Ethical data scientists must constantly reassess and enhance anonymization strategies to stay ahead of potential privacy breaches.

Security Measures and Data Breach Prevention

Ensuring the security of collected data is a non-negotiable aspect of ethical data science. Robust cybersecurity measures are crucial to prevent unauthorized access and data breaches. Organizations must invest in encryption, secure storage, and regular security audits to protect sensitive information. The ethical responsibility extends beyond data collection to include safeguarding the data throughout its lifecycle.

Social Responsibility and Impact Assessment

The consequences of data science reach far beyond individual privacy, influencing societal structures and norms. Ethical data scientists should conduct impact assessments to anticipate and address potential social implications of their work. Considering the broader ethical landscape allows for the identification of unintended consequences, enabling proactive measures to minimize harm and maximize positive societal impact.

Collaboration and Ethical Guidelines

To foster ethical practices in data science, collaboration among stakeholders is imperative. Establishing industry-wide ethical guidelines promotes a collective commitment to responsible data handling. Collaboration between data scientists, policymakers, ethicists, and the public creates a comprehensive framework that considers diverse perspectives, ensuring a holistic approach to ethical decision-making.

Continuous Education and Adaptation

The field of data science is dynamic, with new technologies and methodologies emerging regularly. Ethical considerations must evolve alongside these advancements. Continuous education for data scientists and professionals in related fields is essential to keep them informed about the latest ethical standards and practices. This commitment to ongoing learning ensures that ethical considerations remain at the forefront of data science endeavors.

Ethical considerations in data science are not mere theoretical constructs; they are the foundation upon which responsible innovation stands. Balancing the pursuit of groundbreaking advancements with a commitment to privacy and ethical practices is a challenging but necessary endeavor. By prioritizing transparency, fairness, and social responsibility, data scientists can contribute to a future where innovation and privacy coexist harmoniously, fostering a technologically advanced yet ethically conscious society

0 notes

Text

Évaluation Compétitive - Définition, Avantages, Identification des Joueurs Clés Et Leurs Forces/Faiblesses, Analyse des Tendances du Marché Et des Préférences du Client Par l'Évaluation Compétitive, Outils Et Techniques, Comment Effectuer une Évaluation Compétitive dans Votre Entreprise ?

Qu'est-ce que l'évaluation concurrentielle et pourquoi est-elle nécessaire ?L'évaluation concurrentielle, également connue sous le nom d'analyse concurrentielle ou d'intelligence concurrentielle, est le processus de collecte et d'évaluation des informations sur vos concurrents afin de mieux comp [...] https://academypedia.info/fr/index-du-glossaire/evaluation-competitive-definition-avantages-identification-des-joueurs-cles-et-leurs-forces-faiblesses-analyse-des-tendances-du-marche-et-des-preferences-du-client-par-levaluation-competitive/

#business #communication #data #education #ict #information #intelligence #technology - Created by David Donisa from Academypedia.info

0 notes

Text

Fwd: Workshop: Online.MetagenomicsMetabarcoding.Oct23-26

Begin forwarded message:

> From: [email protected]

> Subject: Workshop: Online.MetagenomicsMetabarcoding.Oct23-26

> Date: 4 October 2023 at 08:07:06 BST

> To: [email protected]

>

>

> Dear all

>

> We'd like to invite applicants to our online course: Introduction to

> Metabarcoding and Metagenomics Analysis.

>

> The ability to identify organisms from traces of genetic material

> in environmental samples has reshaped the way we see life on

> earth. Especially for microorganisms, where traditional identification

> is hard or near impossible, metagenomic techniques have granted us

> unprecedented insight into the microbiome of animals and the environment

> more broadly. This live online course gives an introduction to the

> pipelines and best practices for metagenomic data analysis, lead by

> expert bioinformaticians from Edinburgh Genomics.

>

> Date: 23rd - 26th October 2023 (10 am - 3:30 pm each day)

>

> Registration fee: £380; £405; £430 (University of Edinburgh

> staff/students; Other University staff/students; Industry staff)

>

> Instructors: Urmi Trivedi, Head of Bioinformatics, Edinburgh Genomics &

> Heleen De Weerd, Bioinformatician, Edinburgh Genomics

>

> Requirements: A general understanding of molecular biology and a working

> knowledge of Linux at the level of the Edinburgh Genomics' 'Linux for

> Genomics' workshop.

>

> Please see our website for details and sign-up:

> https://ift.tt/5aHBNLg

>

> Kind Regards

>

> Nathan Medd (Training and Outreach Manager - Edinburgh Genomics)

>

> The University of Edinburgh is a charitable body, registered in Scotland,

> with registration number SC005336. Is e buidheann carthannais a th’

> ann an Oilthigh Dhùn Èideann, clàraichte an Alba, àireamh clàraidh

> SC005336.

>

> Nathan Medd

0 notes

Text

Empowering Organizations: Unraveling the Potential of Cloud Identity Management Solutions

Image Source:Pexels

In the cutting-edge digital panorama, coping with personal identities and getting entry to privileges has turned out to be a vital aspect of organizational protection and performance. Cloud Identity Management Solutions have emerged as an effective device, revolutionizing the way organizations deal with authentication, authorization, and identity provisioning. This article delves into the transformative capacity of those solutions, inspecting their key benefits, implementation techniques, and their position in shaping the destiny of organizational protection.

Understanding Cloud Identity Management Solutions

Cloud Identity Management, regularly abbreviated as CIM, is a comprehensive approach to dealing with consumer identities, their get entry to rights, and authentication protocols in a cloud-based environment. Unlike traditional on-premises answers, CIM leverages the cloud's scalability and flexibility to offer a continuing and stable identity management experience.

Key components of cloud identity management

Single Sign-On (SSO): Allows users to log in as soon as possible and gives them the right of entry to multiple programs, improving consumer comfort and productivity.

Multi-Factor Authentication (MFA): Adds an additional layer of protection by requiring users to authenticate their identity through more than one manner, such as passwords, biometrics, or clever cards.

Identity Provisioning and De-Provisioning: Streamlines the manner of onboarding and offboarding employees, ensuring timely admission to and revocation of privileges.

Access Control Policies: Enables corporations to outline and implement granular get right of entry to policies, making sure that users best have get right of entry to the resources they want.

Benefits Of Cloud Identity Management Solutions

Enhanced Security: CIM answers provide strong safety features, inclusive of encryption, MFA, and continuous monitoring, safeguarding against unauthorized admission to and statistics breaches.

Improved Productivity: SSO abilities reduce the need for a couple of logins, saving time and lowering user frustration. This ends in increased basic productivity.

Scalability and Flexibility: Cloud-based answers can effortlessly adapt to organizational increases or modifications, allowing for seamless integration of recent packages and customers.

Cost Efficiency: With no need for on-premises hardware or renovation, cloud-primarily based CIM solutions can bring about sizable cost savings for organizations.

Image Source:Picfinder.ai

Implementing Cloud Identity Management Solutions

1. Assessment and Planning: Before implementation, organizations have to behavior a radical assessment of their contemporary identification control methods. This consists of figuring out present vulnerabilities, evaluating compliance requirements, and defining the scope of the CIM deployment.

2. Vendor Selection: Selecting the right CIM supplier is important. Factors to take into account consist of the seller's popularity, compliance with industry standards, scalability of the answer, and the level of help provided.

3. Data Migration and Integration: Migrating existing person records and integrating CIM with different programs and systems is an essential step. This procedure must be meticulously deliberate to ensure a continuing transition.

4. User Training and Adoption: Proper schooling and schooling are important to make sure that personnel understand how to use the brand-new CIM machine efficiently. This consists of educating them on quality practices for password control and using MFA.

The Future of Organizational Security: Cloud Identity Management Solutions

As the digital panorama continues to conform, the function of Cloud Identity Management Solutions will become even extra pivotal. Here are a few emerging developments so as to form the destiny of CIM:

1. AI-Driven Authentication

Artificial intelligence and gadget studying will play a considerable function in improving authentication procedures, enabling groups to detect and respond to anomalous behavior in actual time.

2. Zero Trust Security Model

This model operates at the principle of "in no way trust, always verify." It requires continuous authentication and authorization, even for users within the company's community perimeter.

3. Blockchain for Identity Verification

Blockchain technology holds the potential to revolutionize identity verification by providing a decentralized, immutable ledger of identities.

Image Source: Freepik

In addition to the fundamental components, Cloud Identity Management (CIM) solutions offer various advanced capabilities that further beautify their abilities and fees to companies.

1. Role-Based Access Control (RBAC)

RBAC permits groups to outline entry to permissions based totally on an individual's role in the company. This guarantees that customers have get right of entry to the resources and statistics vital for his or her particular task features. By assigning roles and related permissions, agencies can maintain an established and secure admission to the environment.

2. Identity Federation

Identity Federation allows for seamless integration between an enterprise's inner identification control machine and outside cloud-primarily based applications or services. This permits customers to get admission to a couple of systems with a single set of credentials, improving consumer enjoyment and decreasing the administrative burden of handling a couple of accounts.

3. Self-Service Password Reset

This characteristic empowers customers to reset their passwords independently, lowering the reliance on IT assistance for habitual password-related problems. It no longer handiest improves personal delight but also increases usual productivity by means of minimizing downtime associated with forgotten passwords.

Conclusion

Cloud Identity Management Solutions have emerged as a powerful force in current organizational safety and efficiency. By leveraging the cloud's competencies, those answers offer stronger security, progressed productivity, and price performance. Implementing CIM requires careful planning and consideration, but the benefits some distance outweigh the attempt. As we look beforehand, the evolution of CIM guarantees to convey even extra state-of-the-art and steady identification management answers to the forefront, solidifying its position as a cornerstone of organizational security within the virtual age.

0 notes

Text

What are the Challenges in Door-to-Door Fundraising?

In the realm of fundraising, one traditional approach that has stood the test of time is door-to-door fundraising. Rooted in personal connections and grassroots engagement, this method involves fundraisers going directly to potential donors' homes to seek contributions for a specific cause or organisation.

While door-to-door fundraising offers a unique opportunity for face-to-face interactions and personalised appeals, it has its share of challenges. This blog delves into the intricacies of door-to-door fundraising and sheds light on the hurdles that fundraisers must overcome to achieve success.

Building Trust in a Digital Age

In an era dominated by online transactions and digital communication, building trust in a brief encounter becomes a significant hurdle for door-to-door fundraisers. Overcoming this challenge requires fundraisers to not only embody authenticity and transparency but also adapt their approach to the concerns of modern-day residents. Sharing tangible evidence of the organisation's impact, such as success stories or data-driven results, can go a long way in establishing credibility and assuaging potential donors' doubts.

Overcoming Rejection and Resilience

Rejection is an inevitable part of any fundraising endeavour, but door-to-door fundraisers face it on a more personal level. Enduring repeated refusals and navigating through varied responses can be emotionally taxing. Fundraisers must develop a thick skin and the resilience to carry on despite setbacks. Training that focuses on effective communication, objection handling, and maintaining a positive attitude is essential to help fundraisers bounce back and maintain their motivation.

2. Navigating Diverse Demographics

Communities are often diverse in terms of socioeconomic backgrounds, cultures, languages, and beliefs. Door-to-door fundraisers must possess cultural sensitivity and adaptability to connect with people from all walks of life. Tailoring the fundraising pitch to resonate with different demographics requires a deep understanding of the community's values and needs. This challenge underscores the importance of investing in comprehensive training that equips fundraisers with the tools to engage effectively with various individuals.

3. Time and Territory Management

Door-to-door fundraising is a time-intensive process that demands meticulous territory management. Fundraisers must strategise their routes, plan their schedules, and optimise their interactions to maximise efficiency. Balancing the quantity and quality of interactions becomes crucial – spending too much time at one doorstep may hinder progress, while rushing through interactions can result in missed opportunities. Employing technology, such as mapping and route optimisation apps, can aid fundraisers in effectively managing their time and territories.

4. Adapting to Changing Regulations

Door-to-door fundraising is subject to varying local regulations and ordinances that fundraisers must adhere to. These regulations range from obtaining permits to specific timeframes for door-to-door interactions. Staying updated on the legalities and adapting fundraising strategies accordingly is essential to avoid legal complications. This challenge is about the importance of ongoing training and support to ensure that fundraisers are well-versed in the legal landscape.

5. Safety Concerns

For both fundraisers and residents, safety is a paramount concern. Fundraisers may encounter unpredictable situations or face hostility from residents. Likewise, residents might be wary of allowing strangers into their homes. Mitigating safety concerns requires thorough background checks for fundraisers, clear identification, and comprehensive safety protocols. Moreover, training in conflict resolution and de-escalation techniques equip fundraisers to handle potentially tense situations with professionalism and poise.

6. Impact of Technology and Digital Marketing

Digital marketing and online fundraising platforms have reshaped the landscape of fundraising. Many potential donors prefer the convenience of making contributions through digital channels rather than engaging in face-to-face interactions. Fundraisers must adapt by incorporating technology into their approach, leveraging social media and online campaigns to supplement their door-to-door efforts. Balancing the integration of technology while preserving the personal touch of in-person interactions presents an ongoing challenge.

Conclusion

Whilst rooted in tradition and personal connections, door-to-door fundraising comes with its fair share of challenges. Overcoming these challenges requires a combination of empathy, adaptability, resilience, and strategic thinking. By acknowledging and addressing the challenges it presents, fundraisers can hone their skills, enhance their effectiveness, and continue to make a meaningful difference in communities worldwide.

Wesser is the place to go if you're looking to work for door-to-door fundraising jobs. The company specialises in recruiting passionate individuals for rewarding fundraising positions. Whether you're an experienced fundraiser or new to the field, the organisation offers comprehensive training and a supportive environment to help you thrive. Join them and turn your passion for change into a fulfilling profession.

#door to door fundraising#face to face fundraising#fundraising recruitment agencies#fundraising agency#fundraising jobs

0 notes

Text

[ad_1]

Secure Data Masking in Data Warehouses: Pro Coding Tips & Tricks for Data Privacy

Introduction:

In today's data-driven world, protecting sensitive information has become more critical than ever. Data masking, a technique used to safeguard data privacy, plays a crucial role in securing data warehouses. This article delves deep into the concepts of secure data masking, providing pro coding tips and tricks to ensure maximum data privacy. Let's explore the intricacies of data masking and its significance in maintaining a secure environment.

Table of Contents:

1. What is Data Masking?

2. Why is Data Masking Essential for Data Warehouses?

3. Types of Data Masking Techniques

3.1 Full Masking

3.2 Partial Masking

3.3 Format Preserving Masking

3.4 Dynamic Masking

4. Implementing Secure Data Masking

4.1 Identifying Sensitive Data

4.2 Choosing the Right Data Masking Technique

4.3 Designing an Effective Data Masking Strategy

4.4 Evaluating and Testing the Masked Data

5. Advanced Data Masking Techniques

5.1 Tokenization

5.2 Encryption

5.3 Shuffling

5.4 Salting

6. Challenges in Data Masking

6.1 Consistency and Referential Integrity

6.2 Performance Impact

6.3 Compliance with Data Privacy Regulations

7. Best Practices for Secure Data Masking

7.1 De-identification and Re-identification

7.2 Data Masking in Development and Testing Environments

7.3 Role-Based Access Control

7.4 Secure Key Management

7.5 Data Masking Auditing and Monitoring

8. FAQs (Frequently Asked Questions)

8.1 What is the purpose of data masking?

8.2 Is data masking reversible?

8.3 How can data masking help in achieving compliance?

8.4 Does data masking impact performance?

8.5 Can data masking fully eliminate the risk of data breaches?

8.6 What are the legal implications associated with data masking?

8.7 Are there any industry-specific data masking requirements?

1. What is Data Masking?

Data masking is a technique that involves replacing sensitive data with realistic but fictitious information. It ensures that the critical information remains obscured and cannot be accessed by unauthorized individuals. By preserving the format and structure of data while rendering it useless for malicious purposes, data masking helps maintain the integrity of data without compromising its functionality.

2. Why is Data Masking Essential for Data Warehouses?

Data warehouses store vast amounts of valuable information, ranging from customer details to financial records. Such repositories become prime targets for cybercriminals looking to exploit data for various malicious purposes. Data masking acts as a vital defense mechanism that adds an additional layer of security to prevent unauthorized access. By rendering sensitive data useless for anyone other than authorized users, data masking reduces the risk of data breaches and subsequent legal ramifications.

3. Types of Data Masking Techniques

3.1 Full Masking:

- Full masking replaces the original sensitive data with completely fictitious information.

- It ensures that no traces of the original data are left, guaranteeing maximum privacy.

3.2 Partial Masking:

- Partial masking obscures certain portions of the original data while retaining some essential information.

- This technique allows for limited access to data when required while still protecting sensitive elements.

3.3 Format Preserving Masking:

- Format preserving masking retains the format and structure of the original data while replacing specific sensitive components.

- It ensures that the masked data appears realistic, minimizing disruption to applications that utilize the data.

3.4 Dynamic Masking:

- Dynamic masking applies masking techniques in real-time, based on predefined rules and user access privileges.

- It provides an additional layer of security by dynamically masking data according to individual user requirements.

4. Implementing Secure Data Masking

4.1 Identifying Sensitive Data:

- The first step towards implementing secure data masking involves identifying and classifying sensitive data.

- Understand the regulatory and privacy requirements applicable to the data to determine the level of masking required.

4.2 Choosing the Right Data Masking Technique:

- Assess the sensitivity of the data, the needs of the business, and the impact on the applications that handle the data.

- Choose the appropriate data masking technique that balances privacy and usability effectively.

4.3 Designing an Effective Data Masking Strategy:

- Create a comprehensive data masking strategy that includes masking rules, data flow diagrams, and access controls.

- Incorporate data masking within the overall data governance framework to ensure consistency and compliance.

4.4 Evaluating and Testing the Masked Data:

- Perform thorough testing to validate the effectiveness of the data masking implementation.

- Evaluate the quality of the masked data, considering factors such as format preservation, referential integrity, and performance.

5. Advanced Data Masking Techniques

5.1 Tokenization:

- Tokenization replaces sensitive data with randomly generated tokens.

- The original data is stored separately in a secure vault, while tokens are used for processing and application functionality.

5.2 Encryption:

- Encryption transforms sensitive data using cryptographic algorithms, rendering it unreadable without the decryption key.

- By encrypting data at rest and in transit, data masking ensures secure storage and transmission.

5.3 Shuffling:

- Shuffling involves rearranging the order of sensitive data elements.

- This technique helps protect data while maintaining referential integrity.

5.4 Salting:

- Salting involves adding random data to sensitive information before applying data masking techniques.

- It adds an extra layer of complexity to prevent reverse engineering of data from masked values.

6. Challenges in Data Masking

6.1 Consistency and Referential Integrity:

- Ensuring consistency and referential integrity among masked data elements presents a significant challenge.

- Masked data should still be usable and relevant within the context of the application or analytical processes.

6.2 Performance Impact:

- Data masking operations can introduce performance overhead due to additional computations and transformations.

- Balancing the need for data privacy with performance requirements is crucial.

6.3 Compliance with Data Privacy Regulations:

- Adhering to data privacy regulations and industry-specific compliance standards is vital while implementing data masking techniques.

- Ensure that the chosen data masking approach meets regulatory requirements to avoid legal ramifications.

7. Best Practices for Secure Data Masking

7.1 De-identification and Re-identification:

- Implement robust de-identification and re-identification processes to ensure data privacy and usability.

- De-identification involves masking sensitive data, while re-identification allows authorized users to access the original information when necessary.

7.2 Data Masking in Development and Testing Environments:

- Extend data masking practices to development and testing environments to maintain data privacy and security across the entire data lifecycle.

- Test data should be masked to prevent unintended exposure to sensitive information during software development and testing processes.

7.3 Role-Based Access Control:

- Implement role-based access controls (RBAC) to restrict access to masked and unmasked data based on user roles and privileges.

- RBAC ensures that authorized individuals can access relevant data while preventing unauthorized access to sensitive information.

7.4 Secure Key Management:

- Adopt secure key management practices to ensure the confidentiality and integrity of encryption keys used in data masking techniques.

- Ensure proper key rotation, storage, and access controls to mitigate the risk of key compromise.

7.5 Data Masking Auditing and Monitoring:

- Establish robust auditing and monitoring systems to track and record data masking activities.

- Regularly analyze and audit masked data to ensure ongoing effectiveness and compliance with privacy regulations.

8. FAQs (Frequently Asked Questions)

8.1 What is the purpose of data masking?

- The purpose of data masking is to safeguard sensitive data by ensuring it remains hidden to unauthorized individuals while preserving its functionality.

8.2 Is data masking reversible?

- Data masking is generally reversible, allowing authorized individuals to access the original data using re-identification techniques when necessary.

8.3 How can data masking help in achieving compliance?

- Data masking aids compliance by protecting sensitive information, reducing the risk of data breaches, and ensuring adherence to data privacy regulations.

8.4 Does data masking impact performance?

- Data masking can introduce performance overhead due to additional operations and transformations. Careful planning and optimization can minimize any impact.

8.5 Can data masking fully eliminate the risk of data breaches?

- While data masking significantly reduces the risk of data breaches, it does not entirely eliminate the possibility. It should be combined with other security measures.

8.6 What are the legal implications associated with data masking?

- Implementing data masking techniques should adhere to legal and regulatory requirements governing data privacy and protection.

8.7 Are there any industry-specific data masking requirements?

- Different industries may have specific data masking requirements based on regulations, standards, and business practices in their respective domains.

In conclusion, secure data masking is an essential technique for protecting sensitive information within data warehouses. By implementing the discussed tips and tricks, organizations can ensure maximum privacy while preserving the integrity and usability of their data. Remember to adapt the data masking techniques and strategies to meet individual business requirements and comply with relevant data privacy regulations.

[ad_2]

#Secure #Data #Masking #Data #Warehouses #Pro #Coding #Tips #Tricks #Data #Privacy

0 notes

Text

Data De-identification or Pseudonymity Software market Unidentified Segments – The Biggest Opportunity Of 2023

Latest business intelligence report released on Global Data De-identification or Pseudonymity Software Market, covers different industry elements and growth inclinations that helps in predicting market forecast. The report allows complete assessment of current and future scenario scaling top to bottom investigation about the market size, % share of key and emerging segment, major development, and technological advancements. Also, the statistical survey elaborates detailed commentary on changing market dynamics that includes market growth drivers, roadblocks and challenges, future opportunities, and influencing trends to better understand Data De-identification or Pseudonymity Software market outlook.

List of Key Players Profiled in the study includes market overview, business strategies, financials, Development activities, Market Share and SWOT analysis are:

IBM (United States)

Thales Group (France)

TokenEx (United States)

KI DESIGN (United States)

Anonos (United States)

Aircloak European Union

AvePoint (United States)

Very Good Security (United States)

Dataguise (United States)

SecuPi (United States)

The most common technique to de-identify data in a dataset is through pseudonymization. Data De-identification and Pseudonymity software replace personal identifying data in datasets with artificial identifiers or pseudonyms. Companies choose to de-identify or pseudonymize their data to reduce their risk of holding personally identifiable information and comply with privacy and data protection laws such as the CCPA and GDPR. Data De-identification and Pseudonymity software allows companies to use realistic, but not personally identifiable datasets. This protects the anonymity of data subjects whose personal identifying data, such as names, dates of birth, and other identifiers, are in the dataset. Data De-identification and Pseudonymity solutions help companies derive value from datasets without compromising the privacy of the data subjects in a given dataset. Data masking is often used as a way companies maintain sensitive data, but prevent misuse of data by employees or insider threats.

Key Market Trends: Increasing Demand from various Enterprise

Growing Demand from Government Sector

Opportunities: Technological Advancement associated with Data De-identification or Pseudonymity Software

Rising Demand from the various Developing Countries

Market Growth Drivers: Increasing Adoption from Smart City Projects

High Adoption due to increasing security Threats

The Global Data De-identification or Pseudonymity Software Market segments and Market Data Break Down by Application (Individual, Enterprise (SME's, Large Enterprise), Others), Deployment Mode (Cloud, On Premise), Technology (Encryption, Tokenisation, Anonymization, Pseudonymisation), Price (Subscription-Based (Annual, Monthly), One Time License), End User (BFSI, IT and telecom, Government and defense, Healthcare, Other)

Presented By

AMA Research & Media LLP

0 notes

Text

A Comprehensive Guide To Anti-Phishing Solutions

In today's digital landscape, it is essential for businesses to stay ahead of the curve when it comes to cyber threats. One of the most pressing cyber threats businesses face is phishing, which is when someone tries to gain access to personal information or company data by masquerading as a trusted entity.

To ensure your business is well-protected against phishing attempts, it is important to implement a comprehensive anti-phishing solution.

In this blog post, we will provide a comprehensive guide to anti-phishing solutions, so that your business can stay ahead of the curve and be secure against potential cyber threats.

We will explore the different types of anti-phishing solutions available, their features and benefits, and the steps you can take to ensure your business is taking advantage of the best anti-phishing solutions. For more information on anti phishing solutions, visit phishprotection.com.

What Is Anti Phishing?

Anti-phishing is a set of techniques, tools, and strategies designed to protect individuals and organizations from phishing attacks. Phishing is a type of online attack where attackers use social engineering to trick victims into divulging sensitive information, such as passwords, usernames, credit card details, and other personal data.

Anti-phishing techniques aim to prevent such attacks by detecting and blocking phishing attempts, educating users on how to recognize and avoid phishing attempts, and creating a culture of security within organizations.

Anti-phishing tools include email filtering, web filtering, two-factor authentication, browser protection, and endpoint protection.

By using these tools and strategies, individuals and organizations can significantly reduce the risk of falling prey to phishing attacks and protect sensitive data from theft or misuse.

Guide To Anti-Phishing Solutions

Phishing is a type of online attack where attackers try to trick users into divulging sensitive information, such as usernames, passwords, and credit card details, by posing as a trustworthy entity. Anti-phishing solutions are tools designed to detect and prevent phishing attacks.

Here is a comprehensive guide to anti-phishing solutions:

1. Email Filtering

Email filtering is one of the most popular anti-phishing solutions. It involves the use of software that filters incoming email messages and identifies any emails that contain phishing attempts.

The filtering software can block emails from known phishing sources and quarantine suspicious emails, allowing the user to inspect the message before opening it.

2. Web Filtering

Web filtering involves using software that blocks access to websites known to be phishing sites. The software can also analyze website content to identify potential phishing attempts and prevent access to malicious links.

3. Two-Factor Authentication

Two-factor authentication (2FA) is a security process that requires users to provide two forms of identification to access their accounts. This could include something the user knows (such as a password) and something the user has (such as a mobile phone).

4. Anti-Phishing Training

Anti-phishing training involves educating users on how to recognize phishing emails and websites. The training can teach users to scrutinize email messages, hover over links to inspect them before clicking, and avoid providing sensitive information to unsolicited requests.

5. Security Awareness

Security awareness involves creating a culture of security within an organization. It involves educating employees on the importance of security and encouraging them to be vigilant when accessing emails and websites.

6. Browser Protection

Browser protection involves using software that detects and blocks malicious websites. The software can also analyze website content and block access to any websites that are known to be phishing sites.

7. Endpoint Protection

Endpoint protection involves using software that protects individual devices, such as laptops and smartphones, from phishing attacks. The software can detect and block phishing attempts, and it can also prevent users from accessing malicious websites.

8. Machine Learning and Artificial Intelligence

Machine learning and artificial intelligence (AI) can be used to analyze vast amounts of data and identify patterns that may indicate a phishing attack. The software can then block access to phishing sites and prevent users from divulging sensitive information.

Considering all this, staying ahead of the curve when it comes to combating phishing attacks is essential for businesses of all sizes. By investing in a comprehensive anti-phishing solution, organizations can protect themselves from malicious actors and create a secure environment for their customers and employees. With the right tools, organizations can monitor and detect phishing attempts before they become a problem, ensuring that their data remains safe and secure.

1 note

·

View note

Text

Benefits of Using Golang to Develop Fintech Applications

The primary requirements of a Fintech solution are security, speed, and scalability, and Golang perfectly meets these requirements. As a result, go for Fintech with Golang!

Go is a statically typed programming language. It allows compilers to go through the source code early on, preventing and correcting all potential errors before they occur. Despite its similarities to other languages, particularly Python and C++, many developers have recently switched to Golang. A wide range of fintech companies are also considering switching to Golang for application development. Let's take a closer look at the main benefits of using Golang for app development in the FinTech industry.

Handling Heavy-Load Services

FinTech applications must be available 24 hours a day, seven days a week. Furthermore, this industry necessitates the rapid identification and resolution of errors, as well as the provision of high-load services such as online messaging, chats, and requests that must be addressed and processed efficiently. Golang is well-built to meet high load requirements because it has the ability to run multiple apps concurrently, addressing each responsibility individually.

Outstanding performance

The performance of Golang is the second most notable advantage of using it in the FinTech industry. All because of its effective garbage collection feature. Because Go is built into the binary, it can communicate directly with the hardware, eliminating the need for an additional layer such as the Java Virtual Machine. In terms of performance in complex and challenging situations, Go outperforms all other languages.

The simplicity

One excellent example of this feature is the payment behemoth American Express. According to the company, Golang is a straightforward and easy-to-learn language due to its simplicity. Developers with little experience can master the programming language in a month, and beginners can quickly grasp its fundamentals.

Unrivaled memory management

Another critical aspect of the FinTech industry is memory management. Golang, which has this feature, assigns blocks to multiple running programmes, handling, monitoring, and coordinating each one on a system level. By performing these activities, Golang ensures that the system memory is optimised to the highest level possible.

Typing that is static

Languages that use static typing rather than dynamic typing have a short learning curve. In Golang, unlike a programming language that uses dynamic typing, developers can easily detect and correct code errors. Ultimately, throughout their fintech app development journey, developers benefit from the speed and accuracy of the language in which the code can be written.

High Level of Security

This is one of the most important advantages of using Go in the FinTech industry. The finance industry is one such industry that cybercriminals frequently target. According to statistics, financial institutions and banks account for more than a quarter of all malware attacks. Access to customer data has become very simple thanks to techniques such as reverse engineering, clickjacking, and so on. Golang, on the other hand, has the ideal set of tools for making codes and applications extremely secure. The Go Module is one such excellent example. Typically, Golang provides three symmetric encryption algorithm packages: base64, AES, and DES. To ensure the security of your application, you can request that your developer follow the best security practises, such as:

Encoding web content with a template/HTML package, protecting users from cross-site scripting attacks.

Using Go's static binding feature in conjunction with minimal base images in containers to reduce the container attack surface. Concurrency

One of the key premises on which Go was built is its ability to run programmes independently of one another. Given that Golang allows multiple apps to run concurrently, it makes use of its simple built-in goroutines, which provide incredible flexibility and speed in comparison to Java. To summarise, the concurrency feature makes Golang for FinTech a preferred partner by improving performance.

Compatibility with multiple platforms

Without cross-platform compatibility, fintech applications are incomplete. Golang provides excellent cross-platform compatibility by allowing software or devices to run on one or more operating systems or hardware platforms. As a result, when a company uses this language, it gains access to a larger audience by providing them with access to apps across multiple devices/platforms such as smartphones, tablets, and laptops.

Exceptional Scalability

Creating a fintech application is not enough. When a company decides to launch a fintech app, it must ensure that the system is robust enough to handle a significant increase in the number of users. After all, leveraging market share is the primary need for any startup/company. Here, Golang provides excellent tools to its developers, such as concurrent Goroutines, to help them build a performant and scalable solution. Unlike traditional architecture, Goroutines allow a single process to serve a large number of requests at the same time. As a result, it is currently the preferred language for many Fintech developers.

Also read : Star by face App

0 notes

Text

Why do play schools need to emphasize the mental health of children?