Photo

for @newscientist

#science #cartoon #snakes

https://www.instagram.com/p/CFZge4fnL2w/?igshid=47smlqt2x8fn

464 notes

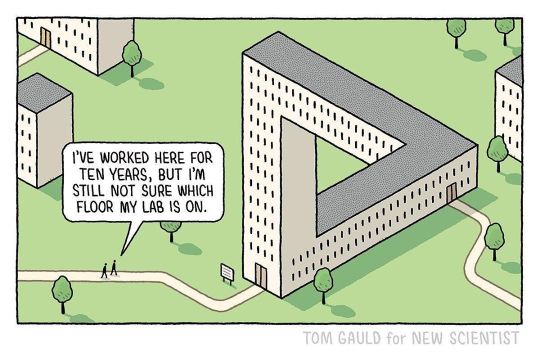

Photo

for @newscientist

#science #architecture #impossibleobjects

https://www.instagram.com/p/CDtJWgZHuoM/?igshid=ax5x31g8okrl

1K notes

Text

My Name is Bot

Text-generating neural networks can do a lot more now than they used to. When I first started generating things with neural networks, sentences and even simple phrases were mostly beyond them (that didn’t stop me from trying, with hilariously inept results). But now programs like GPT-3 can keep track of much larger blocks of text and are capable of writing multi-question personality quizzes (as long as you don’t mind that the tests make no darn sense).

Now here’s one I’ve wanted to do for a while: there’s a form of poetry called bredlik, introduced in late 2016 CE by Sam Garland after a cow was discovered licking the loaves at a bakery:

my name is Cow,

and wen its nite,

or wen the moon

is shiyning brite,

and all the men

haf gon to bed -

i stay up late.

i lik the bred.

With its pseudo-Chaucerian spelling and meter of strict iambic dimeter, bredlik is one of my favorite things the internet has produced. And, now that interest in bredlik has declined from “very niche” to “virtually none”, it’s the perfect time for me to see how a state-of-the-art neural net does at generating bredlik poems.

I gave GPT-3 15 example bredlik poems, focusing on those that stuck to the standard 8-line length. I also included a (subject, verb, object) header to each poem so I could specify the topic. The cow poem above, for example, got (cow, lick, bread) as a header. Then I gave GPT-3 a few headers to add to, and here’s what it produced:

(giraffe, trick, bot)

my name’s giraf,

and on the veld

i lik to pley

with bouncing gait

but when it’s cold

i shiver, no.

my tunge wil bach,

i trip the bot.

(bot, make, memes)

my name is bot

and wen i memz

i go into the street

i maek the sound

lik dok dok dok

lik boom boom boom

but all at once

i see a meme

and i can’t meme.

It’s close. It’s soooo close. Terrible, though. It does make an effort to match my prompt, even when I sabotage it with way too many syllables or something:

(cat, eat, broccoli)

my name is cat,

and wen i feel

the smell of treat,

i jump up fast

from box or seat,

and run and leap

and seize the feep,

and say, yum, meep!

(moon, moon, moon)

my name is moon

and wen it’s night

and stars are out

and you don’t see

a single sound

I am the one

to set your heart

I moon the moon

It occasionally gets the rhythm right, though it’s curiously bad at counting lines. It tries to do the pseudo-Chaucerian spelling but some of its misspellings are so weird that I suspect it’s just making up words. Other times it uses real archaic English, which is not the point of bredlik but it’s still kinda cool that it drew the parallel. Note that it is also almost following the rhyming scheme, especially if you take some liberties with how you pronounce the made-up words. For the rest of the poems in this post, GPT-3 generated not just the poem, but the prompt as well.

(Sheep, graze, name)

My name is Sheep

and wen it’s nyght

i like to bleepe

with shut and ee

and munch upon

the buettom green

of grounges fourm

i lik the name

(fish, lick, thing)

my name is fish,

and wen i see

the mote that doth

bestow upon me

the gift of life

and givith unto me

a watery strife

i thank the fen

i lik the thing

(bell, scare, doom)

my name is bell

and wen you kno

the wyld dark nere

to Hell’s abyss

and in the mire

the dead a roon

you think it’s naym

the bells will toon

i scare the doom

I’m surprised at how often GPT-3 did manage to get close to bredlik, since AI-generated poetry is notoriously hard, and even careful scripting can produce glitchy poems with unexpected odes to mining company Alusuisse. Still, in this post I’m only showing a few of the generated poems - most of them not only fail to fit bredlik, but do so in a boring or unsatisfying way. The exception is this poem, which has definitely strayed from bredlik, but on the flip side contains the phrase “beely might”.

(Bee, use, thief)

My name is Bee

and wen I see

a moth upon a tree

I use my beely might

and steal it from its fight

And then I lik the thieft
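The few-shot setup described earlier (example poems, each under a (subject, verb, object) header, followed by a new header for the model to continue) could be assembled roughly like this. This is only a minimal sketch: the function names and the exact spacing between examples are assumptions, not the prompt format actually used, and the call to GPT-3 itself is left out.

```python
# Only the cow poem and its (cow, lick, bread) header come from the post.
# The helper names and the blank-line separator are hypothetical.
COW_POEM = """my name is Cow,
and wen its nite,
or wen the moon
is shiyning brite,
and all the men
haf gon to bed -
i stay up late.
i lik the bred."""


def format_header(subject, verb, obj):
    """Render a topic header such as (cow, lick, bread)."""
    return f"({subject}, {verb}, {obj})"


def build_prompt(examples, target):
    """Join example poems under their headers, then end on an open header.

    examples: list of ((subject, verb, obj), poem_text) pairs
    target:   (subject, verb, obj) for the poem the model should write
    """
    blocks = [f"{format_header(*header)}\n{poem}" for header, poem in examples]
    # The final header has no poem under it; the model continues from here.
    blocks.append(format_header(*target))
    return "\n\n".join(blocks) + "\n"


prompt = build_prompt(
    [(("cow", "lick", "bread"), COW_POEM)],
    ("giraffe", "trick", "bot"),
)
print(prompt)
```

With 15 examples instead of one, the same function would produce a longer prompt that still ends on the open header.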

I have more neural net bredlik poems than would fit in this post, including many that are for some reason quite unsettling. You can enter your email here, and I’ll send them to you.

My book on AI, You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why it’s Making the World a Weirder Place, is available wherever books are sold: Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore

1K notes

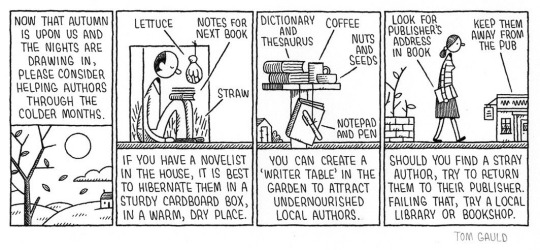

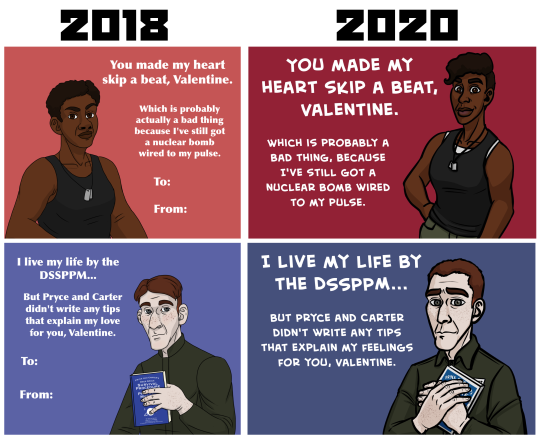

Photo

AAh! Finally some time to draw.

Welcome to Night Vale #53: The September Monologues. There were 3 monologues, so 3 illustrations.

Thanks for hanging in while I was absent :)

2K notes

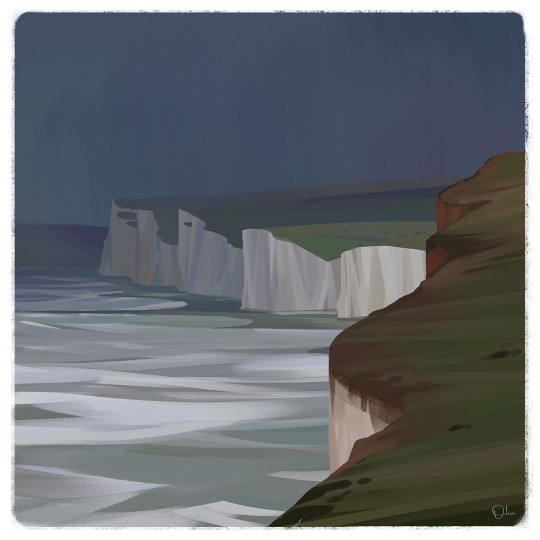

Photo

I’ve put a few new things into the shop and art for sale sections of my website:

https://tomgauld.com/shop

https://tomgauld.com/art-for-sale

82 notes

Text

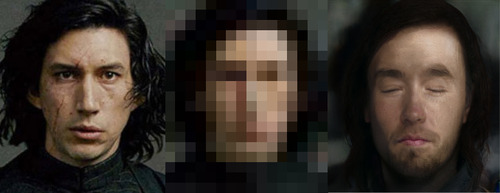

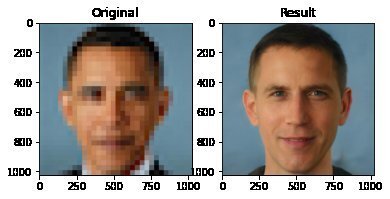

Depixellation? Or hallucination?

There’s an application for neural nets called “photo upsampling” which is designed to turn a very low-resolution photo into a higher-res one.

This is an image from a recent paper demonstrating one of these algorithms, called “PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models”.

It’s the neural net equivalent of shouting “enhance!” at a computer in a movie - the resulting photo is MUCH higher resolution than the original.

Could this be a privacy concern? Could someone use an algorithm like this to identify someone who’s been blurred out? Fortunately, no. The neural net can’t recover detail that doesn’t exist - all it can do is invent detail.

This becomes more obvious when you downscale a photo, give it to the neural net, and compare its upscaled version to the original.

As it turns out, there are lots of different faces that can be downscaled into that single low-res image, and the neural net’s goal is just to find one of them. Here it has found a match - why are you not satisfied?

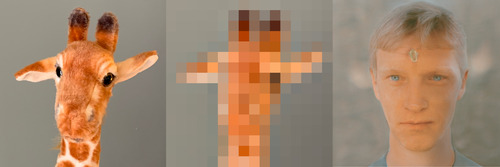
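To make the "lots of different faces, one low-res image" point concrete, here is a toy sketch. It assumes downscaling is plain block averaging on a tiny grayscale grid, which is a simplification and not necessarily what PULSE uses; the two 4x4 "faces" are made-up numbers.

```python
def downscale(image, factor):
    """Average-pool a square grayscale image (list of rows) by `factor`."""
    size = len(image) // factor
    out = []
    for r in range(size):
        row = []
        for c in range(size):
            # Collect the factor x factor block that maps to one output pixel.
            block = [
                image[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Two clearly different hypothetical 4x4 images...
face_a = [
    [0, 200, 50, 50],
    [200, 0, 50, 50],
    [10, 30, 255, 1],
    [30, 10, 0, 0],
]
face_b = [
    [100, 100, 0, 100],
    [100, 100, 100, 0],
    [20, 20, 64, 64],
    [20, 20, 64, 64],
]

# ...that collapse to exactly the same 2x2 image.
print(downscale(face_a, 2))
print(downscale(face_b, 2))
```

Both calls print the same 2x2 grid, so an upscaler handed only that grid has no way of knowing which of the two originals it came from. All it can do is pick something that fits.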

And it’s very sensitive to the exact position of the face, as I found out in this horrifying moment below. I verified that yes, if you downscale the upscaled image on the right, you’ll get something that looks very much like the picture in the center. Stand way back from the screen and blur your eyes (basically, make your own eyes produce a lower-resolution image) and the three images below will look more and more alike. So technically the neural net did an accurate job at its task.

A tighter crop improves the image somewhat. Somewhat.

The neural net reconstructs what it’s been rewarded to see, and since it’s been trained to produce human faces, that’s what it will reconstruct. So if I were to feed it an image of a plush giraffe, for example…

Given a pixellated image of anything, it’ll invent a human face to go with it, like some kind of dystopian computer system that sees a suspect’s image everywhere. (Building an algorithm that upscales low-res images to match faces in a police database would be both a horrifying misuse of this technology and not out of character with how law enforcement currently manipulates photos to generate matches.)

However, speaking of what the neural net’s been rewarded to see - shortly after this particular neural net was released, twitter user chicken3gg posted this reconstruction:

Others then did experiments of their own, and many of them, including the authors of the original paper on the algorithm, found that the PULSE algorithm had a noticeable tendency to produce white faces, even if the input image hadn’t been of a white person. As James Vincent wrote in The Verge, “It’s a startling image that illustrates the deep-rooted biases of AI research.”

Biased AIs are a well-documented phenomenon. When its task is to copy human behavior, AI will copy everything it sees, not knowing what parts it would be better not to copy. Or it can learn a skewed version of reality from its training data. Or its task might be set up in a way that rewards - or at the least doesn’t penalize - a biased outcome. Or the very existence of the task itself (like predicting “criminality”) might be the product of bias.

In this case, the AI might have been inadvertently rewarded for reconstructing white faces if its training data (Flickr-Faces-HQ) had a large enough skew toward white faces. Or, as the authors of the PULSE paper pointed out (in response to the conversation around bias), the standard benchmark that AI researchers use for comparing their accuracy at upscaling faces is based on the CelebA HQ dataset, which is 90% white. So even if an AI did a terrible job at upscaling other faces, but an excellent job at upscaling white faces, it could still technically qualify as state-of-the-art. This is definitely a problem.
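The arithmetic behind that last point can be sketched quickly. Only the 90% figure comes from the text above; the per-group scores here are invented purely for illustration, as is the idea of summarizing quality as a single number between 0 and 1.

```python
def aggregate_score(group_shares, group_scores):
    """Benchmark-wide score as the share-weighted average of group scores."""
    return sum(group_shares[g] * group_scores[g] for g in group_shares)


# 90% white is the dataset skew mentioned above; the scores are hypothetical.
shares = {"white": 0.9, "other": 0.1}
scores = {"white": 0.95, "other": 0.30}

overall = aggregate_score(shares, scores)
print(f"skewed benchmark:   {overall:.3f}")

# The same model on a hypothetical evenly split benchmark looks much worse.
balanced = aggregate_score({"white": 0.5, "other": 0.5}, scores)
print(f"balanced benchmark: {balanced:.3f}")
```

In this made-up case the skewed benchmark reports about 0.885 while the balanced one reports about 0.625, even though nothing about the model has changed.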

A related problem is the huge lack of diversity in the field of artificial intelligence. Even an academic project with art as its main application should not have gone all the way to publication before someone noticed that it was hugely biased. Several factors are contributing to the lack of diversity in AI, including anti-Black bias. The repercussions of this striking example of bias, and of the conversations it has sparked, are still being strongly felt in a field that’s long overdue for a reckoning.

Bonus material this week: an ongoing experiment that’s making me question not only what madlibs are, but what even are sentences. Enter your email here for a preview.

My book on AI is out, and you can now get it in any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore

15K notes

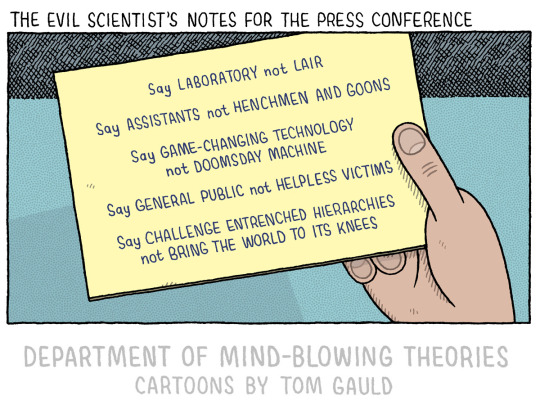

Photo

‘The Evil Scientist’s Notes for the Press Conference’

A cartoon from my new book. Order links here: https://tomgauld.com

3K notes

Video

220K notes

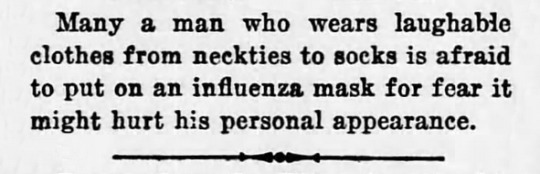

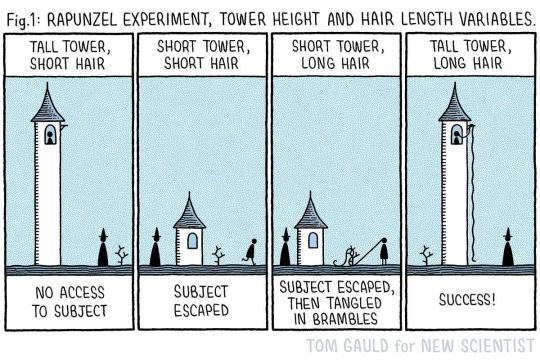

Photo

p.s. My new book of science cartoons ‘Department of Mind-Blowing Theories’ is out now: http://tomgauld.com/comic-books-v2

1K notes

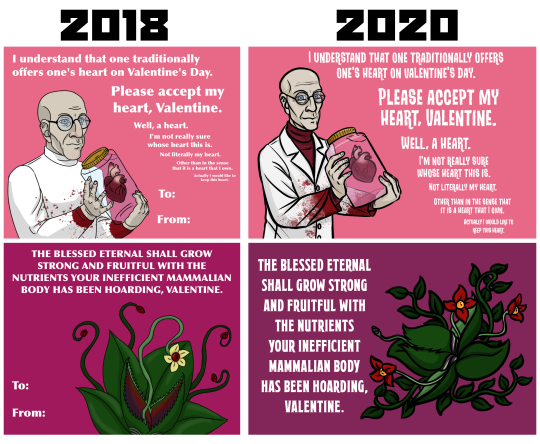

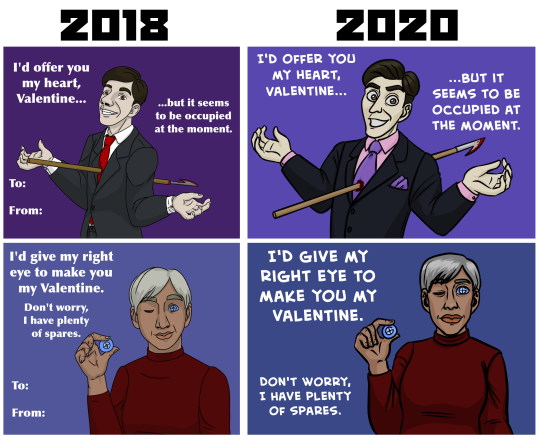

Photo

Side-by-sides of the 2018 and 2020 versions of the Wolf 359 Valentines, because it’s fun looking at art progress.

44 notes

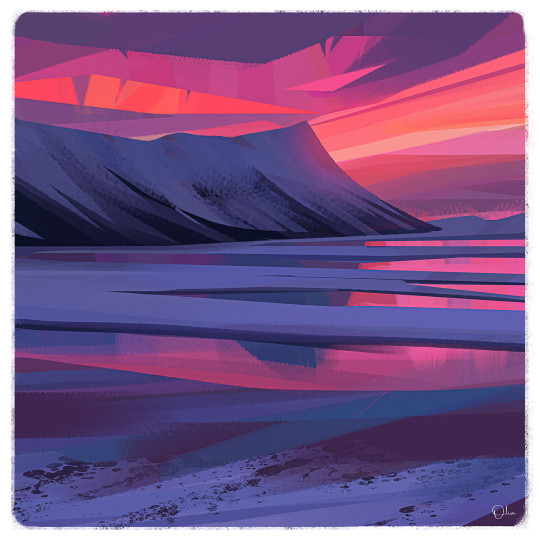

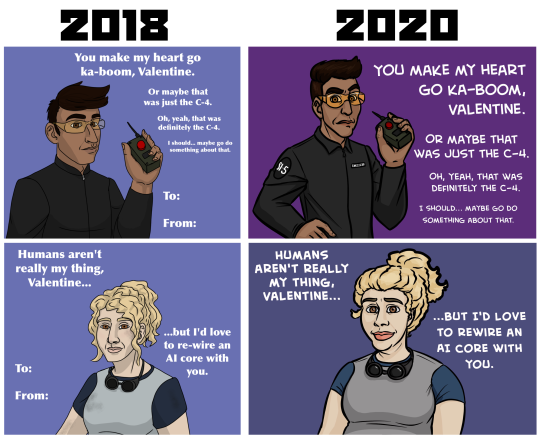

Photo

“Welcome…… To Night Vale. ♬♩♫”

The Man in the Tan Jacket

www.oliviachinmueller.com

UPDATED VERSION!

Well, I kept fussing with it and ended up extending the image quite a bit.

Here is the post of the old piece if you are interested: Boop

846 notes

Text

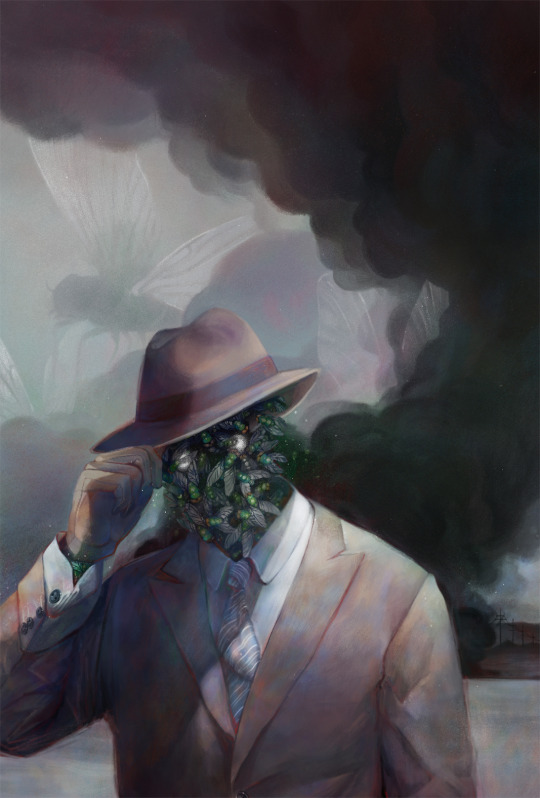

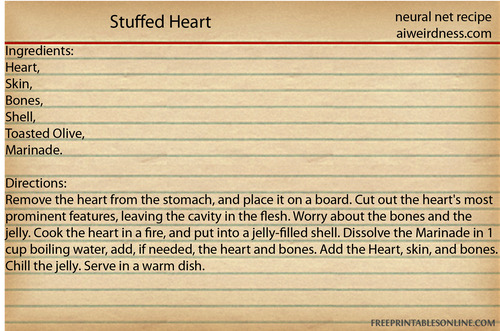

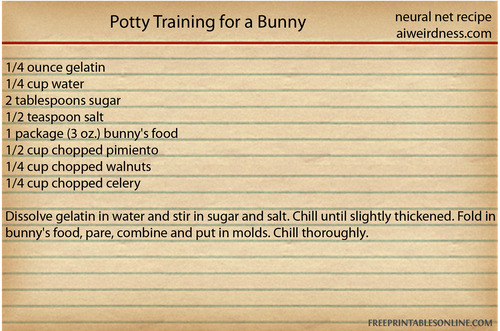

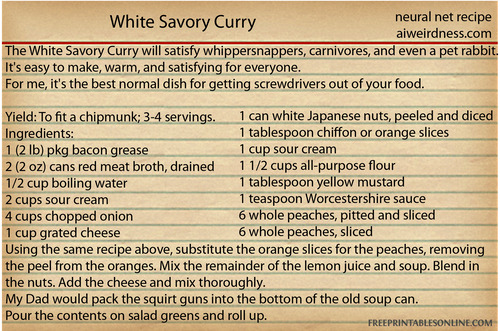

AI + Vintage American cooking: a combination that cannot be unseen

A week ago, in a sudden fit of terrible judgement, I decided to find out what would happen if I:

1. Asked people to help me collect examples of the worst, the weirdest, the most gelatinous recipes that vintage American cooking has to offer, then

2. Trained a neural net to imitate them

People submitted over 800 recipes in all, including such recipes as:

“Beef Fudge” (contains marshmallow, chocolate chips, and ground beef),

“Circus Peanut Jello Salad” (also contains crushed pineapple and Cool Whip),

“Tropical Fruit Soup” (contains banana, grapes, and a can of cream of chicken soup), and

“Lemon Lime Salad” (also contains cottage cheese, mayonnaise, and horseradish)

As I watched this dataset coalesce, much as one might watch a speeding dumpster begin to spin out of control, I began to approach the state I dreaded: all the recipes began to seem normal.

Shrimp + grapefruit + lemon jello? Citrus seafood is a thing.

Chili sauce + lemon jello + cottage cheese + mayo? Well it’s not SWEETENED jello, so

I began to wonder if I would actually be able to tell the difference between the neural net recipes and the real thing. Jello was supposed to be easy to prepare, after all - maybe through repetition an advanced neural net like GPT-2 would learn how to make basic jello, and then anything it decided to chuck in there would be technically reasonable. Maybe it would even coalesce on an ideal form, one that distilled human invention down to its essentials.

No, as it turns out. Here’s a neural net recipe.

It does cocktails, too.

The training data contained a lot of things. It contained eel only once. For some reason the AI has decided to use eel a LOT.

It also invents ingredients.

Some of the neural net recipes bear at least some resemblance to the human versions, but manage to mess them up profoundly. Without a sense that the recipe directions are describing ingredients and things that happen to them, the neural net never gets the hang of jello - that you need hot water to make it gel, that it doesn’t go in the oven. It also forgets to add all of its ingredients, or introduces some that were never mentioned before. This is partly because its memory is terrible, and partly because it doesn’t know what’s important.

I couldn’t find a setting at which the neural net recipes could consistently pass for human. Set the chaos levels too low and the neural net would repeat the same few recipes, forgetting a different key step or ingredient each time. Set the chaos levels too high and the neural net would get ever more inventive, producing recipes that promised creamy lime and called for golf balls or elk hide, or directed the chef to remove the lamb’s giblets.
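The "chaos level" here most likely corresponds to what text generators usually call sampling temperature, though that mapping is an assumption on my part. A minimal sketch of how temperature reshapes the odds of the next word, using made-up scores for three candidate ingredients:

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
words = ["jello", "eel", "golf balls"]
logits = [4.0, 2.0, 0.0]

low = softmax_with_temperature(logits, 0.5)   # low chaos
high = softmax_with_temperature(logits, 2.0)  # high chaos

for word, p_low, p_high in zip(words, low, high):
    print(f"{word:>10}: low={p_low:.3f} high={p_high:.3f}")
```

At the low setting nearly all the probability piles onto the top choice, which fits the repetitive recipes. At the high setting the long shots get a real chance, which is where golf balls and elk hide would come from.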

Some of its recipes were beyond bizarre.

Remember that today’s AI is much closer in brainpower to an earthworm than to a human. It can pattern-match but doesn’t understand what it’s doing. Commercial AI is not significantly smarter than this recipe AI. Humans have just hopefully done a better job of preventing it from making oblivious mistakes.

It got to the point where I would see a recipe like this and be excited and proud of the neural net. Then I would realize just how very low my standards had fallen.

One thing the neural net has learned from humans is that it’s good to include a story with your recipe.

It is bad at this.

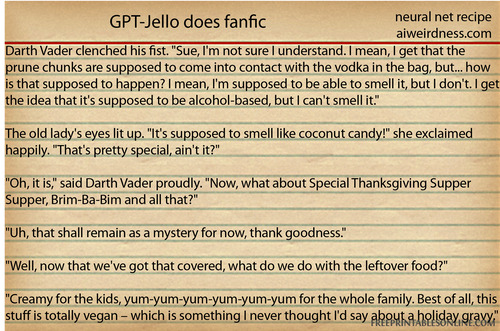

The neural net puts lots of words in its recipes that were never in the jello-centric training data. It’s drawing from its initial general training on internet text. It read a LOT of fanfic on the internet during its initial general training, and still remembers it now. Except now all its stories center around food.

It’s trying. It’s startlingly bad. It wants us to remove the internal rinds twice. AI’s not ready to take over the world - it can’t even figure out the kitchen.

Bonus content: More jello-centric neural net recipes, including some that were too long to post here. Be especially afraid of the ones that aren’t exactly “recipes”.

My book on AI is out, and you can now get it in any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s

3K notes
