
Researching royalty free video content

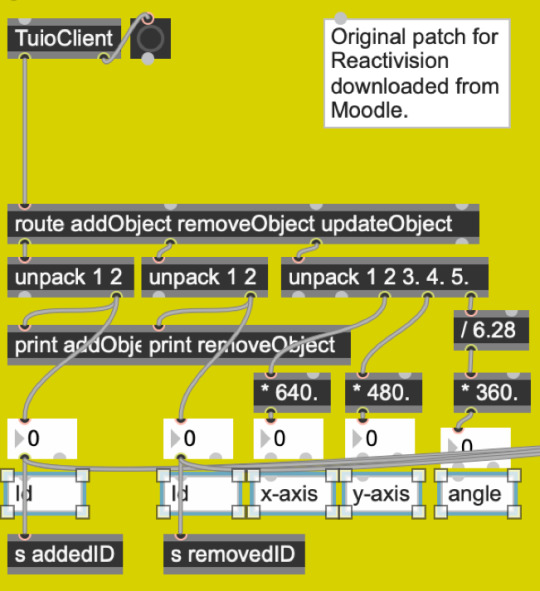

One part of the installation we wanted to realise was the visual aspect of the project, which needed to tie in with our space exploration concept.

Royalty free sources such as Pixabay, Pexels and Videvo are effective sources of high quality royalty free videos under Creative Commons / educational licenses, which means we aren’t breaching the copyright laws that protect intellectual property. For example, using Star Wars/Star Trek related content without written permission would be considered copyright infringement.

Here is an example of a royalty free space themed visual that would have been used for the sound installation.


Exploring and refining our concept

Once we had completed our presentation and received feedback, we quickly adapted our ideas to improve the concept and take that feedback on board.

Firstly, we were told that the concept may be too complicated and difficult to translate across to our audiences.

Our concept is a live sound installation that focuses on participants collaborating and exploring ideas of space travel, technological developments and humanity through the medium of music. We wanted to explore the positive/for and negative/against aspects of space travel and make the project balanced. We felt that by making our points balanced, we would engage participants a lot more, because balanced arguments create debates and discussions.

Whilst the music would combine both melodic and harsh/dissonant elements to represent the positive and negative aspects of space exploration, we also wanted to make points directly using audio. The best way to do this was to put our thoughts on space exploration into the concept and research our ideas so our claims would have weight and background behind them.

After discussing our own thoughts and ideas about space exploration and documenting it, we planned to present these ideas using Reactivision as our sensor interface and allow participants to trigger arguments on/off via Reactivision symbols.

We believed that space exploration can be quite an abstract concept to explore, so we tried to make it grounded and relatable to what is happening at the moment; this included mentioning the “Space X Program” as well as different issues such as poverty and renewable energy. This was then streamlined into a brief script and recorded using a Rode NT1a microphone.

Adding the microphone to the installation gave people another sensor to interact with, and it also allowed participants to voice their own ideas and thoughts about space live in the mix. Quite a few participants were rather shy about speaking into the microphone, and the vocal synth effects changed the participants’ voices dramatically, meaning that it wasn’t always clear what they were saying.

We added official NASA audio clips from their website to accompany our for/against arguments. These MP3 audio clips included rocket launches, radio communication, John F Kennedy’s space related speeches and various recordings made in outer space. We feel this added to the concept of space exploration and immersed participants even more, and this shone through in our feedback. We felt that by adding iconic NASA recordings, the concept of space exploration would feel more grounded and realistic. These recordings were free on their website and could be used for educational purposes according to their licenses. (NASA, 2019)


Negative arguments for space exploration (1/2)

These were the negative/against arguments for our project. Some of the questions and opinions posed did include research, and these quotes include references to the sources we used to back up these opinions. All of these ideas were our own thoughts / opinions that we discussed together and worded in a way that would seem reasonable and logical to our audience.

We consider these ideas and concepts to be subjective because they are from our own thoughts and opinions about space exploration and the concept of the installation was to get participants to think about the positives and negatives of space exploration. Space exploration is such a vast subject and we aren’t experts in the matter, so we wanted to approach these arguments from the perspective of an average person within our society to keep the project grounded and streamlined. This also meant we wouldn’t have any copyright issues for using opinions and statements made by famous space exploration experts.

Quotes used during our installation

“Is space travel going to be possible in the future?”

“How much time, effort and money would it take to explore other planets when we don’t look after our own?”

“What’s the point in exploring other planets if we can’t appreciate what is in front of us?”

“How I feel it would be possible is through acts of collaboration and sustainable technology that is going to benefit us for future generations”

“How much energy would it take for us to have a reasonable life living on another planet?”

“Think of how much rocket fuel it would use and where would this come from? How would this get funded?”

“Could you imagine a future with a colony on mars and what that would look like, how would that affect our planet? And how would that benefit our planet?”

“Should we really fund space or should we fund what’s happening right now?”

“I think the money that gets invested in space programs should be invested in renewable energy and making this planet a better planet to be on.”

“Exploring planets devoid of life would be a wake up call to humanity.”

“The space race was a political movement during the Cold War, nations don’t collaborate enough to make such an incredible feat possible because of politics between our societies.” (History, 2010)

“Why would it be wise to invest government money in to expensive technology when there are issues such as poverty?”

“Colonising other planets could lead to humanity becoming less connected, especially when politics become involved.”

“How long would it be before we started a war with a colony on Mars?”

“If we were to find alien life or technology, who are we to interfere and experiment? It puts these lifeforms in danger and we have our own endangered species which is bad enough on our own planet.”

“We don’t know what we would be interfering with and we could put ourselves at risk, especially from things such as diseases, viruses, bacteria.”


Positive arguments for space exploration (2/2)

This is the script we used to explore positive/for arguments for space exploration. Again, these ideas were our own opinions and thoughts, but we did try to approach these positive arguments with the key question: How would space exploration improve humanity? We felt that there are a lot of strong positive arguments, despite real life progress within space exploration being somewhat limited by current technology and funding. It’s still a concept that should be treated with the same logic and rationalisation as our negative arguments, so we wanted to pose more philosophical questions to our participants with these positive arguments because this creates debates and discussions. While stating positive facts about space would have been interesting within this project, we wanted participants to interact with the installation and add their own thoughts about space and abstract concepts such as life forms outside of Earth.

Quotes used within our installation

“I think space travel is an amazing concept humanity should explore if we are able to fund and progress our technology to a level that would be sustainable for our future. Take for example the Space X program, who are developing rockets that are able to take off and land by themselves, which means that rockets could be reused, which is cost effective and a more efficient method of take-off.” (BBC News, 2018)

“Space is the largest mystery there is and is so vast in scale that we will never be able to uncover every corner of our universe as it constantly expands. The more the universe expands, the further away we become from our nearest systems.”

“I believe that the concept of space travel scares people because it would make a lot of people question the logic of our humanity in the grand scope of the universe. We may never know the full extent of the randomisation of elements that create life. Why are we the only planet we know in existence to contain life? And what does that mean for humanity? What would it mean for humanity if we were to uncover evidence of ancient life from other planets? Would it change the fundamental ideologies of our society? Would people from other societies accept this?”

“Many people are scared of the concept of something larger than themselves that makes them question their entire existence; this is known as ‘cosmic horror’.”

“We find purpose within ourselves through personal development and the relationships with other people in our lives but to explore space would lead us closer to knowing the full extent of our existence.”

“Knowing exactly how life was formed on Earth and beyond our solar system, I believe, creates a better appreciation of life on our planet and would preserve our chance of survival in the thousands of years to come, even by colonising different planets.”

“There is so much we don’t understand on our own planet such as how much of the sea is unexplored and there could be lots of things that benefit us in space including energy, food, medicine, technology. It’s a really interesting concept.” (NOAA, 2018)

“Knowing how life was formed on Earth and beyond our solar system creates a better appreciation and preserves our species and makes colonising different planets a possibility.”


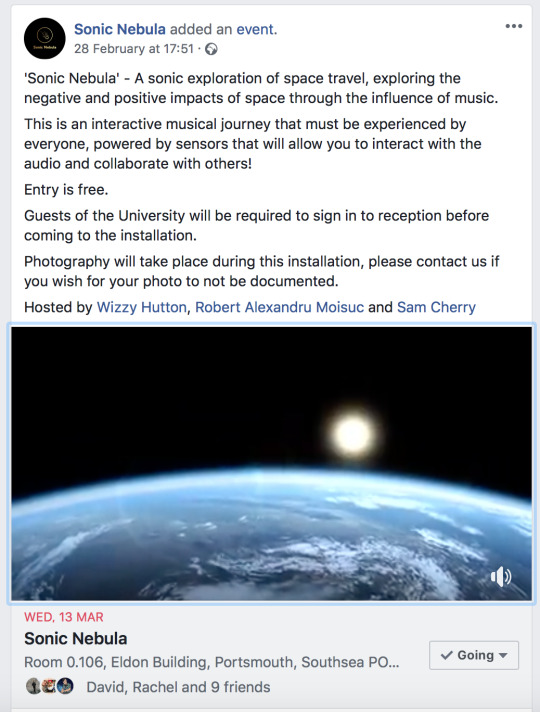

Social media promotions

This is the link to the Facebook event page, alongside a screenshot with a brief description.


Social media promotion

Something we addressed earlier on was that our promotions needed to be aimed at our target audience so this meant we set up a Facebook page to attach our event to: https://www.facebook.com/Sonic-Nebula-563567357491617/?ref=bookmarks

Our poster featured a digital QR Code that took users straight to the Facebook event page, which included all the necessary details and updates. Plus, participants could add the event to their events calendars and receive prompts to attend.

We also shared the event on our personal Instagram accounts, which is a great social media format because posts have a much higher organic outreach on Instagram. Facebook limits organic outreach on business related pages to around 2-6% of a page’s likes depending on how well the post performs, pushing users to pay for advertising. Instagram, on the other hand, isn’t as business focused and relies much more heavily on trends using hashtags (Vandernick, 2019).

Using hashtags such as #UniversityofPortsmouth and #Portsmouth attaches the event to users following these hashtags.

Insights: The event page reached over 591 Facebook users but sadly only attracted 23 responses. Of those 23, 16 participants attended the event and filled out our survey over the course of the event.
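To put those insight figures in proportion, here is a minimal Python sketch that turns them into rates. The three numbers come from the post above; the variable and function names are our own, and since the page reached "over 591" users the rates based on reach are approximate upper estimates.

```python
# Figures reported in the insights above.
reached = 591     # Facebook users the event page reached (reported as "over 591")
responses = 23    # users who responded to the event
attended = 16     # participants who attended and filled out the survey


def percentage(part, whole):
    """Return part as a percentage of whole, rounded to one decimal place."""
    return round(100 * part / whole, 1)


response_rate = percentage(responses, reached)      # responses per user reached
attendance_rate = percentage(attended, responses)   # attendees per response
overall_rate = percentage(attended, reached)        # attendees per user reached

print(f"Responded after being reached: {response_rate}%")
print(f"Attended after responding:     {attendance_rate}%")
print(f"Attended after being reached:  {overall_rate}%")
```

Most of the drop-off happens between seeing the event and responding to it, rather than between responding and attending.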


Final poster design

This is our final poster design for our sound application project. The logo was created by one of the members of the group. It is simple, yet eye-catching. The picture of the stars used for the background of the poster came from a royalty free website, which will be listed in the list of references.

We decided to add a QR code to the poster (as seen above) which, when scanned, takes the person straight to our Facebook event page with more details and a brief explanation of the installation.


Video clip used during the Installation

This is the video clip that was used for the final sound installation.

The Earth is an important part of the theme and of engaging our audience because it’s relatable and less abstract, and we felt it worked within the context of the points we were trying to make.

We were planning to merge multiple space related video clips but unfortunately, MAX MSP, LEAP, Logic Pro X and Reactivision required a lot of processing power on the Mac we used for the project. We wanted to make sure the audio and sensors were fully functional and due to time constraints, the visuals were somewhat limited; upon reflection, this is definitely something we would improve.


Technical Aspects of The Installation (PART 1)

In terms of the technical aspect of the project, this installation makes use of the following hardware:

- A laptop with a built-in camera capable of running Mac OS X (the model used was a Macbook Pro 2015 running the latest OS version, Mojave)

- Leap Motion sensor

- A Thunderbolt audio interface (for the purpose of this experiment we used the Slate Digital VRS8 interface, mostly because of its low latency monitoring, which allowed participants to interact with the installation with no audible delay / latency)

- A microphone, preferably a large diaphragm condenser (the microphone used was a Slate Digital ML-1)

- An image projector (we made use of the one already included in room EL 0.106)

Any outside equipment that was brought in for the installation was PAT tested for quality and health and safety purposes.

In addition to that, there were a number of software requirements needed in order to run the installation as intended. The software used during the experiment was the following:

- OS X operating system

- Logic Pro X for MIDI sequencing

- Max MSP 8 for audio programming

- Geco for gesture detection above the Leap Motion sensor

- Reactivision for symbol recognition

Below is a diagram that explains how everything has been connected during the experiment:

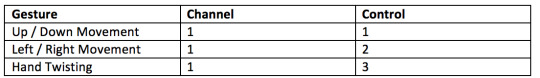

As mentioned in the software requirements, we made use of Geco to recognise the gestures that participants made above the sensor, and we also used Reactivision to determine when a symbol had been covered up by a person. Both Geco and Reactivision transmitted information to Logic through a total of 12 controls. Since the programming was done simultaneously on both, a range of controls was allocated to each one in advance, as follows:

- Controls 1-4 are related to specific gestures above the Leap Motion sensor

- Controls 5-13 are related to the camera that detects when a symbol has been covered

However, the 4th control was never used, which is why it is skipped in all the diagrams that follow. Even though it was reserved for another gesture above the Leap Motion sensor, it was never actually implemented, mainly because we determined that such a large number of gestures could become overwhelming and confusing for the participants.
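The allocation described above can be sketched in a few lines of Python. This is only an illustration of the numbering scheme, not code from the patch: the names `LEAP_CONTROLS`, `CAMERA_CONTROLS`, `UNUSED_CONTROLS` and `control_source` are hypothetical. It also shows how the reserved range of 1-13 ends up as 12 controls once the unused 4th control is left out.

```python
# Hypothetical names; the ranges follow the allocation described in the post.
LEAP_CONTROLS = range(1, 5)      # controls 1-4: gestures above the Leap Motion sensor (via Geco)
CAMERA_CONTROLS = range(5, 14)   # controls 5-13: covered symbols seen by the camera (via Reactivision)
UNUSED_CONTROLS = {4}            # reserved for a fourth gesture that was never implemented


def control_source(control):
    """Return which part of the installation a control number belongs to."""
    if control in UNUSED_CONTROLS:
        return "unused"
    if control in LEAP_CONTROLS:
        return "leap"
    if control in CAMERA_CONTROLS:
        return "camera"
    raise ValueError(f"control {control} is outside the reserved range 1-13")


# Every reserved control that actually carries data.
active_controls = [c for c in range(1, 14) if control_source(c) != "unused"]

print(active_controls)
print(len(active_controls), "controls in use")
```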

Below is a diagram that explains which piece of hardware is responsible for what gestures and which control is carrying what type of information:

All of the above output channels from Geco and Max MSP are also input controls in Logic Pro X. Within the DAW, a virtual controller has been set up on a group of tracks, and it is able to control any type of automation (including plugin automation) within a child track. The controller consists of 10 knobs and 2 buttons, each one controlling up to 7 parameters simultaneously within the group of tracks.

Below is a diagram that explains in greater detail where each control is assigned to and what each knob / button does:

Furthermore, there is also a screenshot of the virtual controller, alongside additional comments to help explain the concept:

Finally, a lot of functionality comments have been made within the Max MSP patch provided. Each section of the patch is coloured differently to emphasise that they do completely different things.

IMPORTANT: In order to ensure the patch functions correctly, all “ctlout” objects need to have their output set to “from Max 1” manually, as this is not the default option when Max MSP 8 is launched. This step is mandatory, as Logic Pro X will be scanning for control changes only on that specific output.
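For readers unfamiliar with what a control change actually is, here is a small Python sketch of the standard three-byte MIDI control change message. It is not taken from the patch: the function name `control_change` is hypothetical, and we are assuming for illustration that the installation's control numbers 1-13 correspond directly to MIDI controller numbers and that channel 1 is used.

```python
def control_change(channel, controller, value):
    """Build a raw MIDI control change message as three bytes.

    channel is 1-16 as shown in most software; controller and value are 0-127.
    """
    if not 1 <= channel <= 16:
        raise ValueError("channel must be between 1 and 16")
    if not 0 <= controller <= 127:
        raise ValueError("controller must be between 0 and 127")
    if not 0 <= value <= 127:
        raise ValueError("value must be between 0 and 127")
    status = 0xB0 | (channel - 1)   # 0xB0 marks a control change; low nibble is the channel
    return bytes([status, controller, value])


# Assumed example: control 5 (first camera control) at full value on channel 1.
message = control_change(1, 5, 127)
print(message.hex(" "))
```

Because the message only carries a channel, a controller number and a value, the receiving software has to be listening on the right port, which is why the output setting above matters.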


Technical Aspects of The Installation (PART 2)

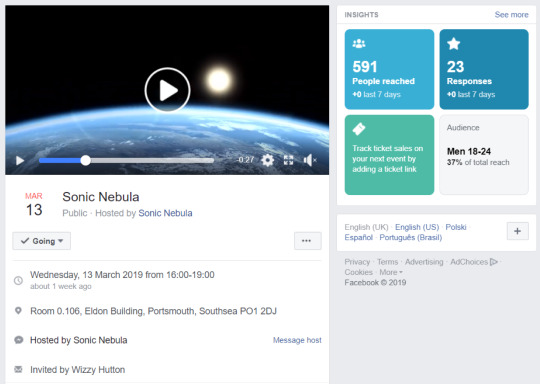

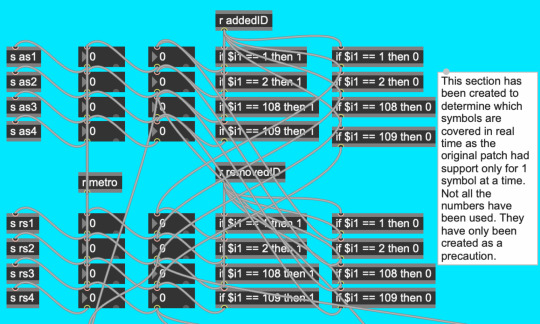

The technical aspects of this installation were developed over a period of several months. This post will focus on the progress of the programming over this period and discuss all the major issues that were encountered during the process.

The first feature created for this installation, in December, was a patch in Max MSP that would receive data from the Leap Motion sensor and use it to control different parameters within Logic Pro X.

The first major issue that came up after implementing this feature was that the Leap Motion sensor was unable to communicate effectively with any devices operating on Windows, thus restricting the group to using a Macbook Pro, which none of the group members owned at the time. A Macbook Pro was needed over any other type of Mac because future developments would require the device running the patch to have a built-in camera. Numerous attempts to solve this issue were made over a period of 2 weeks without clear results, so the new approach was to get access to a laptop running Mac OS X and continue developing the project only on Apple Mac devices.

Another major issue that showed up after implementing this feature was that the information coming out of the sensor was not very consistent: it was not always able to determine which hand was above the sensor or how many hands and fingers were visible, so the gestures were heavily limited. Since the Leap Motion information was harder to access from a Mac and the tests proved to be slightly unreliable, a third party software named Geco was used to detect the gestures that participants made above the sensor and control parameters in Logic Pro X accordingly.

Below is a table that shows further details of how the software was linked together:

Originally, a fourth gesture was implemented, but after further testing, the member of the group in charge of the technical aspects of the installation determined that a fourth gesture was very likely to confuse participants who had never tried it before, so it was removed.

During January, a number of new features were added to the installation. One major improvement was the incorporation of Reactivision within the main Max MSP patch. This software analyses the images that the built-in camera takes in real time and scans for any visible dedicated symbols. During the development and early testing periods, only small versions of these symbols were used. The original idea was that the user would be able to rotate the large scale symbols on the walls, and this approach was successfully implemented during the early development period. By the end of January, the ability to turn any knob in Logic Pro X in real time by rotating the symbols in front of the camera had been implemented and tested.

During February, the group attempted to come up with a frame concept that would allow the participants to twist the symbols on the walls without leaving any visible marks or holes. Since no reliable method could be found, the approach used when implementing the Reactivision patch back in January had to be changed. Therefore, instead of giving the participants the ability to control parameters within Logic Pro X by twisting the symbols on the walls, the installation was altered to determine when one or more symbols had been covered and to increase / decrease certain values until the object(s) became visible again.
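The covered-symbol behaviour described above can be sketched as a simple ramp. This Python version is only an illustration under assumptions, since the real logic lives in the Max MSP patch: the function names, the step size, the 0-127 range and the choice to hold the value (rather than reset it) once the symbol is visible again are all ours.

```python
MIDI_MIN, MIDI_MAX = 0, 127   # assumed value range for a control


def step_value(value, covered, step=1, direction=1):
    """Move a control value by one step while its symbol is covered.

    direction is +1 to increase or -1 to decrease; the result is clamped
    to the assumed 0-127 range. A visible symbol leaves the value as it is.
    """
    if not covered:
        return value
    return max(MIDI_MIN, min(MIDI_MAX, value + direction * step))


def run(frames, start=64, step=4, direction=1):
    """Apply step_value once per camera frame and return every value produced."""
    value = start
    history = []
    for covered in frames:
        value = step_value(value, covered, step, direction)
        history.append(value)
    return history


# Symbol visible, covered for three frames, then visible again.
print(run([False, True, True, True, False]))
```

Holding the last value when the symbol reappears means a participant can "park" a parameter by uncovering the symbol at the right moment.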

As soon as the new gestures had been assigned to different parameters in Logic Pro X, the group discovered that the patch failed to process multiple gestures simultaneously. This is why, in the end, there was only one control panel in Logic, placed on a container that included all the audio and MIDI based tracks, so that multiple gestures could be used simultaneously.

The final features of the installation were developed in early March. The patch became able to loop a video file indefinitely and change parameters such as “Brightness”, “Contrast” and “Saturation” based on the data received from the camera via Reactivision. Also, the track responsible for the vocal alteration was created in Logic using a plugin named “Vocalsynth 2” from iZotope. This plugin’s parameters were not assigned to any gestures, as that could easily have led to feedback problems and potentially damaged the listening equipment as well as the hearing of the participants.


3RD Party Plugins

The signal coming in from the microphone was sent to the Vocalsynth 2 plugin from iZotope, which recreates the speech using different types of synthesizers and modulates them accordingly.

Below is the patch with the settings used within the plugin, followed by an EQ which removes the frequencies that were feeding back.

The project also makes use of several third party plugins from Waves, Soundtoys and Fabfilter which might not be accessible when opening the Logic project. Below are the settings for each instance of those plugins.


Composing the music

Establishing our influences, target audience and foundations for the writing process

As a group, we decided upon what the musical influences should be for our project, so we listened back to the music that was outlined in our presentations. This included Hans Zimmer’s scores for Interstellar and Blade Runner 2049, Vangelis’s score for the original Blade Runner, Jon Hopkins and 65daysofstatic’s score for No Man’s Sky. We then re-evaluated our target audience (university students aged 18 – 30) and considered how that might influence our music, as we wanted to create something engaging. We wanted to create a score that sounded cinematic, euphoric and epic in scope and scale to convey the feeling of space exploration, but we also wanted to make it engaging for our target audience, so we added elements of electronic dance music.

This led to the implementation of 4 different drum beats that participants could switch using Reactivision symbols. We also made sure the harsh tones and sound design elements were present to represent the alien nature of space and the negative aspects of space exploration. Some of these sounds are influenced by techno, dubstep and drum and bass which are popular music genres associated with our target audience.

The music was more linear than anticipated, and this was largely because we used a DAW’s sequencer timeline rather than triggering samples or random containers using live performance tools. We wanted to make it simple for our participants to interact with, so sticking to 3 key interactive sensors and keeping the music fairly streamlined achieved this.

We combated repetition by making the piece extensive in length (12 minutes 4 seconds) and featuring lots of variety within our chosen key, as well as making lots of elements interactive. The main interactivity with the music was using LEAP alongside macros within Massive that would manipulate filters/fx and the timbre of the wavetables.

We decided that it would be necessary to use Massive as our main synthesizer because we were able to access Massive on our personal laptops/Macs as well as at the university; it was also a synth we were all familiar with, and it has lots of technical support available when compared to other software such as VCV Rack, which isn’t as stable.

Additionally, we used a piano sampler called ‘The Giant’ from Native Instruments’ Kontakt sampler library. The sampler was of a high quality and had built in reverb parameters which we could control using our sensors. Again, this is a sampler multiple team members had access to. Other synths used, such as FM8 and Serum, would be exported as WAV audio clips for efficiency during the realisation and so we wouldn’t need additional licences on multiple computers.

The music was written in F# minor at 110bpm. The slower BPM meant we could extend chords, pads and drones over long phrases to create a sense of width and ‘space’ within the music. We felt writing in a minor key meant our chords and progressions could evoke a stronger emotional response, and we could use progressions within A major if we wanted to make the music ‘brighter’ during certain sections.
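The reason A major is available without leaving the key is that it is the relative major of F# minor: both scales contain exactly the same notes. The short Python sketch below checks this and also works out how long one beat lasts at 110bpm. The helper names are our own, and we are assuming the natural minor scale here.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Semitone steps between neighbouring scale degrees.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]
NATURAL_MINOR_STEPS = [2, 1, 2, 2, 1, 2, 2]


def scale(root, steps):
    """Return the seven note names of a scale built from root using steps."""
    index = NOTE_NAMES.index(root)
    notes = [root]
    for step in steps[:-1]:          # the last step only returns to the octave
        index = (index + step) % 12
        notes.append(NOTE_NAMES[index])
    return notes


f_sharp_minor = scale("F#", NATURAL_MINOR_STEPS)
a_major = scale("A", MAJOR_STEPS)

print("F# minor:", f_sharp_minor)
print("A major: ", a_major)
print("Same notes:", set(f_sharp_minor) == set(a_major))

# Tempo: one beat at 110bpm lasts 60 / 110 seconds.
seconds_per_beat = 60 / 110
print(f"One beat at 110bpm lasts {seconds_per_beat:.3f} seconds")
```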

The structure of the composition

Breaking down the composition itself, we worked and developed the music consistently over the course of the 12 minutes. We didn’t approach the music with a ‘traditional pop music structure’ (verse, chorus, bridge, etc); we wanted to create a composition that evolves continuously and this was inspired by the structure of ambient music. Particularly, our influence Jon Hopkins has lots of tracks that can be up to 10 minutes in length and he manages to keep the piece interesting until the end of the track, which usually effectively bleeds in to the next track.

Ambient music tends to have a much less restrictive structure, which allows for lots of synth pads/drones that consistently evolve to keep your attention. Ambient music focuses less on intensive / constant rhythms and instead creates ‘soundbeds’ that drift throughout the piece. While we did include 4 different drum beats users could switch to throughout the piece, we made sure that these rhythms worked for every part of the track and we ensured these drum tracks worked by making sure our chords and progressions were syncopated and spread out over larger phrases. (Pitchfork, 2016)

Keeping lots of different progressions and chords in the same key means that you are very likely able to layer and bridge multiple sections of music together to create 1 large body of music. We mainly used pad synths and underlying low end focused patches to keep the music consistent, so we could add sections that included a lot more chords and melodies.

In terms of influences, the Blade Runner influence within the music was to include harmonically rich saw wave patches and to pitch bend extended notes to create a sense of movement during transitional sections. We wanted to represent the technology involved in the concept of space travel during sections of the music, so the timbre of some of the patches could be changed by the LEAP sensor to sound more ‘metallic’ and abrasive. We also built the structure of the composition around the NASA recordings and our own arguments regarding the idea of space travel. This included some sections of the music focusing purely on soft or low end sounding synth pads while our dialogue was the main focus.

Particularly with a large piece of interactive music, we felt we needed to create tension and excitement during certain sections of the music, and we felt this involved creating sections of music that were much less intense to allow the composition to ‘breathe’. We felt that many of the patches needed to be polyphonic, so we could create chord progressions that would try to evoke a stronger emotional response. Our method of composing music is very much focused within DAWs and not traditional notation, so the writing process was very much a case of sticking to the pitches within our chosen key and trying to see what worked and what did not.

In terms of the sound design for the project: 8 Massive patches, 2 Serum patches, 2 FM8 patches and 1 Kontakt sampler were used in total.

Patches from our initial presentation were expanded upon and this laid the foundation for writing the music. All of the Massive patches were created first, the Serum patches were expanded on from the demo and lastly the FM8 patches were created for the more abrasive elements of the music.

The piano sampler initially helped us picture the cinematic inspired score we were aiming to accomplish and gave the music a human element amongst the very digital and electronic nature of a lot of the patches. We also focused on a patch (Massive Patch 8) that was crafted to emulate strings (particularly violins) because large orchestral scores are common within science fiction soundtracks and string sections are key to creating tension (especially in our influence, Interstellar). Arguably, this tied in to our concept of humans and advanced technology and helped us provoke emotional responses from our audience members.

Breaking down Massive

Native Instruments Massive is a subtractive wavetable synthesizer; this means that the synthesizer generates multiple waveforms and is able to morph between multiple shapes. This allows for morphing complexity beyond traditional subtractive synthesizer wave shapes such as sine, saw, square and triangle.

Take for example Massive’s initial Square/Saw wavetable: If the wavetable position parameter is positioned all the way to the left, the oscillator will only produce a square wave but if the parameter is positioned all the way to the right, it will produce a saw wave. The middle position will be a combination of the two. (NativeInstruments, 2018)
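To make the idea of a wavetable position more concrete, here is a minimal Python sketch that blends a square wave and a saw wave with a single position value. This is a simplification under assumptions and not how Massive is implemented internally: we assume a plain linear crossfade, and the function names are hypothetical.

```python
def square(phase):
    """Square wave: +1 for the first half of the cycle, -1 for the second."""
    return 1.0 if phase < 0.5 else -1.0


def saw(phase):
    """Saw wave: rises in a straight line from -1 to +1 over one cycle."""
    return 2.0 * phase - 1.0


def wavetable_sample(phase, position):
    """Blend square and saw at a point in the cycle (phase runs from 0 to 1).

    position 0.0 is all the way to the left (pure square) and 1.0 is all the
    way to the right (pure saw); values in between mix the two shapes.
    """
    position = min(max(position, 0.0), 1.0)
    return (1.0 - position) * square(phase) + position * saw(phase)


# The same point in the cycle at three different wavetable positions.
for position in (0.0, 0.5, 1.0):
    print(position, wavetable_sample(0.25, position))
```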

We were able to change many of Massive’s individual parameters, but we decided that the best way to keep participants engaged and make them feel as if they were making big changes within the music was to utilise Massive’s built in Macro Controls. These are assignable knobs that allow us to change multiple parameters at once. For example, I could assign a single macro control to a reverb’s wet/dry parameter, reverb size parameter and reverb ‘colour’ to create an overall parameter control for the reverb.

These Macros are MIDI assignable and work really well with Geco and LEAP, as shown in the SonicState video which influenced us to use LEAP Motion for our project.
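The reverb example above can be sketched as one knob driving several parameters, each over its own range. This is a rough Python illustration rather than anything taken from Massive: the class name, the function name and the numeric ranges are all made up for the example.

```python
from dataclasses import dataclass


@dataclass
class MacroTarget:
    """One parameter driven by a macro, with the range the macro sweeps it over."""
    name: str
    minimum: float
    maximum: float

    def value_at(self, macro):
        """Return the parameter value for a macro position between 0.0 and 1.0."""
        return self.minimum + (self.maximum - self.minimum) * macro


# Hypothetical ranges for the reverb example in the text.
REVERB_MACRO = [
    MacroTarget("reverb dry/wet", 0.0, 0.6),
    MacroTarget("reverb size", 0.2, 0.9),
    MacroTarget("reverb colour", 0.5, 1.0),
]


def apply_macro(targets, macro):
    """Move every target at once from a single macro position."""
    macro = min(max(macro, 0.0), 1.0)
    return {target.name: target.value_at(macro) for target in targets}


# Turning the one macro knob halfway changes all three parameters together.
for name, value in apply_macro(REVERB_MACRO, 0.5).items():
    print(f"{name}: {value:.2f}")
```

Giving each target its own range is what lets a single gesture feel like a big change while keeping every individual parameter within sensible limits.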

(NativeInstruments, 2018) (SonicState, 2013)(Mantione, 2017)

Example of a Massive Patch 1: Metallic Pad

We understood that in order to make the Massive patches highly interactive, we needed to make sure each patch had lots of different macros to shape the sound but still worked well with the other patches. We decided to avoid pitch modulation because this could cause clashes and detuning between different patches.

Here is an example of how creating Massive patches was approached during the patch creation process which was at the start of the project.

This patch was first created for our initial demonstration of what the music might sound like during our presentation, and the main purpose of the patch was to create a polyphonic pad that would utilise layered chords. Initially, the pad’s macros weren’t utilised as much, so this was something we expanded upon. I wanted to make the pad dynamic and have both soft and harsh tones depending on how the macros were manipulated.

Oscillator 1 used the VA-PWM (pulse width modulation) wavetable, which is essentially a square wave with controls for the width of the square wave cycles to create a thinner or thicker square wave. The pitch was kept at 0 because we wanted to use this pad for chords, so it wasn’t necessary to pitch the oscillators drastically.

Oscillator 2 used the VA-Sync wavetable, which is similar to the PWM except that it focuses more on syncing the waveform’s phases. We wanted to de-tune this oscillator by around -20 cents and play with the phasing; this is because detuning oscillators can create a ‘chorus’ effect, which increases the thickness of the sound.
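To show how small a -20 cent detune is in terms of frequency, here is a short Python sketch using the standard cents formula (100 cents per semitone, 1200 per octave). The function names are ours, and the 440 Hz reference note is just an assumed example, not a note from the patch.

```python
def cents_to_ratio(cents):
    """Convert a detune in cents to a frequency ratio."""
    return 2 ** (cents / 1200)


def detuned_frequency(frequency_hz, cents):
    """Return frequency_hz shifted up or down by the given number of cents."""
    return frequency_hz * cents_to_ratio(cents)


reference = 440.0                                 # assumed example note (A4)
detuned = detuned_frequency(reference, -20)       # oscillator 2's detune
difference = reference - detuned                  # rate of the slow 'beating' between the two

print(f"Detuned by -20 cents: {detuned:.2f} Hz")
print(f"Difference from the reference: {difference:.2f} Hz")
print(f"+12 semitones as a ratio: {cents_to_ratio(1200)}")
```

Two oscillators only a few hertz apart drift in and out of phase with each other, which is the movement heard as a chorus-like thickening.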

Oscillator 3 simply used the Squ-Saw1 wavetable with the Wt Position all the way to the right so the oscillator would generate a pure saw wave.

Within the modulation oscillator, we increased the pitch amount by +12 semitones, assigned the phase to oscillator 3.

The noise oscillator was set to the ‘Metal’ noise type, which has a grainy, ‘metallic’ texture that becomes brighter and more present as the colour parameter is increased. Noise generators were a focus on the patches that we wanted to have a ‘lift-off / launch’ effect.

All oscillators were sent to filter 1, with the ‘Daft’ filter type chosen. ‘Daft’ is a low-pass filter with additional emphasis on the resonance parameter and, from experience, can be great for melodic pads. A 1/1 ratio sine wave LFO (crossfaded with a saw wave) was added to the filter cutoff to create a pulsing movement on the pad, which made the sound feel more dynamic.
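The pulsing movement can be sketched in a few lines of Python. This is only an illustration under stated assumptions: we assume a 1/1 ratio means one LFO cycle per 4/4 bar, and the tempo, base cutoff, modulation depth and function names are all hypothetical rather than read from the patch.

```python
import math


def lfo_value(phase, xfade=0.0):
    """Bipolar LFO output in the range -1..1.

    xfade=0.0 gives a pure sine, xfade=1.0 a rising saw, values between blend the two.
    """
    phase = phase % 1.0
    sine = math.sin(2.0 * math.pi * phase)
    saw = 2.0 * phase - 1.0
    return (1.0 - xfade) * sine + xfade * saw


def modulated_cutoff(time_s, base_hz, depth_octaves,
                     bpm=120.0, beats_per_cycle=4.0, xfade=0.0):
    """Filter cutoff at time_s, swept +/- depth_octaves around base_hz by the LFO."""
    cycle_s = beats_per_cycle * 60.0 / bpm  # length of one LFO cycle in seconds
    phase = time_s / cycle_s
    return base_hz * 2.0 ** (depth_octaves * lfo_value(phase, xfade))


# Cutoff at quarter-cycle steps across one bar at the assumed 120 BPM.
for step in range(5):
    t = step * 0.5
    print(f"t={t:.1f}s cutoff={modulated_cutoff(t, 1000.0, 1.0):.0f} Hz")
```

Sweeping in octaves rather than in Hz keeps the rise and fall of the cutoff sounding even to the ear.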

After the filter, we routed the signal into Insert 1, Insert 2 and then FX 1 and FX 2, as well as the built-in EQ.

Insert 1 used the frequency shift effect, which can drastically change the tone and timbre of a sound, especially when combined with other macros. We offset the pitch slightly and assigned Macro 4 to the dry/wet parameter.

Insert 2 was a sine shaper, which could change the timbre to a more saturated sound.

Every initialised Massive patch has a pre-assigned vibrato control on Macro 1. We kept this on every patch so we could adjust the vibrato and make the patches sound more natural (this is especially handy for patches such as Massive patch 8, which emulated a violin).

Macro 2 was called ‘Timbre’ and was assigned to oscillator 1’s pls saw position and pulse width, oscillator 2’s pls saw position, oscillator 3’s amplitude, FX 2’s reverb amount and a +24 cents pitch increase on oscillator 1. Increasing the timbre would create a much warmer but slightly distorted sound.

Macro 3 was called ‘Cutoff’ and its main purpose was to control the filter cutoff frequency. This macro featured on the majority of the patches and it makes large changes to the sound of a patch. Again, raising it would make for a much warmer patch as it introduced higher harmonics. It was also assigned to the filter resonance to emphasise the filter.

Macro 4 was called ‘FRQ SHIFT’, short for frequency shifter, and was connected to the dry/wet amount of the frequency shifter effect. This created a bell-like effect at around halfway; at full, the timbre shifts to a ‘raspy’, flute-like sound.
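One common explanation for the bell-like tone is that a frequency shifter adds the same number of Hz to every partial, so the partials stop being whole-number multiples of the lowest one, whereas a pitch shifter keeps them in proportion. The Python sketch below compares the two; the 220 Hz fundamental, the 50 Hz shift and the function names are assumptions for illustration only.

```python
def harmonic_series(fundamental_hz, count):
    """First `count` harmonics of a note (integer multiples of the fundamental)."""
    return [fundamental_hz * n for n in range(1, count + 1)]


def frequency_shift(partials, shift_hz):
    """Frequency shifting: add a constant number of Hz to every partial."""
    return [p + shift_hz for p in partials]


def pitch_shift(partials, semitones):
    """Pitch shifting: multiply every partial by the same ratio."""
    ratio = 2.0 ** (semitones / 12.0)
    return [p * ratio for p in partials]


def ratios_to_lowest(partials):
    """Each partial expressed as a multiple of the lowest partial."""
    return [round(p / partials[0], 3) for p in partials]


harmonics = harmonic_series(220.0, 4)
print("original:       ", ratios_to_lowest(harmonics))
print("pitch shifted:  ", ratios_to_lowest(pitch_shift(harmonics, 3)))
print("frequency shift:", ratios_to_lowest(frequency_shift(harmonics, 50.0)))
```

Only the frequency-shifted series ends up with non-integer ratios, which is the kind of inharmonic spectrum found in bells and other metallic sounds.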

Macro 5 was labelled ‘Haunted’ because it dramatically changed the timbre to a much darker, ‘haunting’ sound, removing lots of the higher harmonics. This was achieved by increasing the feedback amount; the feedback section can be placed post-filter or post-FX in the chain and drives the signal, creating distortion.

Macro 6 was known as ‘Lift-off’, which became a common label for macros within our patches. Typically, these macros are assigned to the filter cutoff, filter resonance and noise amount to recreate the sound of a rocket or spaceship taking off and going into orbit. This sweeping macro could be an intense one for users to interact with, and we discussed the possibility of a hand rising and falling as the ideal gesture. On this patch, the macro was assigned to the feedback amount, noise amplitude, noise colour, filter cutoff, filter resonance, chorus amount, chorus depth, reverb density and reverb colour, and it also decreased the LFO amplitude.
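The way a single macro fans out to many parameters can be sketched as follows. This is a minimal Python illustration, not our actual patch data: the parameter ranges, the hand height range in millimetres and all function names are hypothetical, and only a subset of the ‘Lift-off’ destinations is shown.

```python
# Hypothetical (start, end) values for each destination, normalised to 0..1.
LIFT_OFF_ROUTING = {
    "feedback_amount": (0.0, 0.6),
    "noise_amplitude": (0.0, 1.0),
    "noise_colour": (0.2, 0.9),
    "filter_cutoff": (0.3, 1.0),
    "filter_resonance": (0.1, 0.5),
    "lfo_amplitude": (0.8, 0.0),  # end below start: the macro reduces the LFO amount
}


def hand_height_to_cc(height_mm, low_mm=100.0, high_mm=400.0):
    """Scale an assumed hand height range to a MIDI CC value (0-127), clamping at both ends."""
    position = (height_mm - low_mm) / (high_mm - low_mm)
    position = min(1.0, max(0.0, position))
    return int(round(position * 127))


def apply_macro(cc_value, routing):
    """Map one MIDI CC value onto every destination in the routing table."""
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values must be in the range 0-127")
    amount = cc_value / 127.0
    return {name: start + (end - start) * amount
            for name, (start, end) in routing.items()}


# A hand rising from low to high sweeps every destination at once.
for height in (100.0, 250.0, 400.0):
    cc = hand_height_to_cc(height)
    print(height, cc, apply_macro(cc, LIFT_OFF_ROUTING))
```

Giving each destination its own start and end value is what lets one gesture open the filter and bring in the noise while pulling the LFO back at the same time.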

The idea behind the macros is that they give the patches more complexity and make them much more flexible when it comes to assigning LEAP Motion gestures. Static patches that don’t allow for timbre or modulation changes during an installation wouldn’t feel very interactive for users, and Massive has the benefit of up to 8 assignable macros.


Survey results and our final conclusion

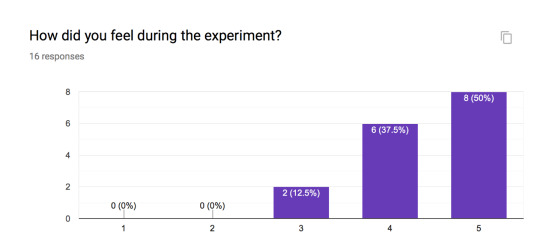

The first question in the survey asked the audience how the experiment made them feel. Most of the questions were rated on a scale of 1-5, in this case with 1 being “Really Bad” and 5 being “Really Good”. As shown in the screenshot above, 8 people said that it made them feel “Really Good”, which is 50% of the people who were involved in the interaction. 6 people chose number 4, meaning “Good”, which made up 37.5% of people. The last 2 people who interacted with the project picked number 3, which means that it made them feel “Fine”. This shows that the majority of people, 87.5%, chose above number 3, meaning that overall the experiment had a positive impact on the audience.
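The percentages quoted throughout this section come from simple tallies of the 16 responses. As a quick check, the Python sketch below reproduces the figures for this first question; the function names are our own and the counts are the ones reported above.

```python
def percentages(counts):
    """Convert a {rating: number_of_votes} tally into {rating: percentage of all votes}."""
    total = sum(counts.values())
    return {rating: 100.0 * votes / total for rating, votes in counts.items()}


def share_above(counts, threshold):
    """Percentage of all votes given to ratings strictly above the threshold."""
    total = sum(counts.values())
    above = sum(votes for rating, votes in counts.items() if rating > threshold)
    return 100.0 * above / total


# Tally for "how did the experiment make you feel?" (ratings 1-5, 16 responses).
feeling = {5: 8, 4: 6, 3: 2}
print(percentages(feeling))
print(share_above(feeling, 3))
```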

The next question in the survey asked how suitable the music was for the experiment. This question was rated on 1-5, with 1 being “Not Suitable” and 5 being “Very Suitable”. 10 people picked option number 5, which means 62.5% said that the music was “Very Suitable”. 5 people chose number 4, “Fairly Suitable”, which made up 31.3% of people. 1 person chose number 3, which means “Average”, making up 6.3% of people. The majority of the participants, 93.8%, rated the music as fairly or very suitable for the experiment.

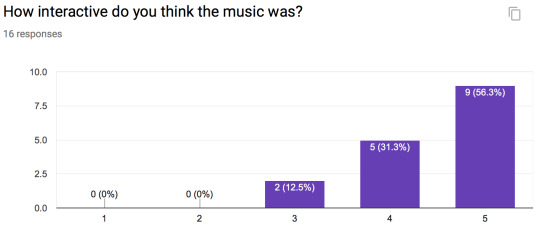

This question asked the participants how interactive the music was. This was rated on a scale with 1 being “Not Interactive at all” and 5 being “Extremely Interactive”. 9 people said it was “Extremely Interactive”, which made up 56.3%. 5 people then chose number 4, which means “Very interactive”, and this made up 31.3% of the overall participants. Lastly, the remaining 2 participants (12.5%) chose number 3, which means “Interactive”. This is possibly because we may not have explained the installation in the same way to every participant. However, the majority of the participants rated the music as very or extremely interactive, and this was a strong aspect of the installation.

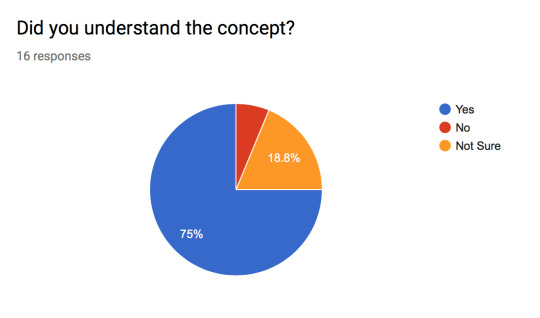

This question was shown as a pie chart asking whether the concept of the installation was understood. The blue represents “Yes”, they did understand the concept; this was 75% of the participants, which is 12 people. Next is the orange, which means “Not Sure”: these participants weren’t sure if they understood the concept, possibly because it wasn’t made clear or went beyond their knowledge. This was 3 people, or 18.8% of the overall responses. Lastly, the red represents the remaining participant (6.3%), who didn’t understand the concept at all. This may have been because the concept of space exploration can be abstract and large in scope, so further streamlining and really focusing on the important points we were trying to make would have improved this project.

This question asked whether the technology used was appropriate. 14 people said “Yes”, represented in blue, which made up 87.5% of the responses to this question. The red, meaning “No”, and the orange, meaning “Maybe”, had one vote each, which together made up 12.5%. We believe the reason a participant might have voted “No” was that they didn’t understand how some of the technology worked, which may have been our fault if we didn’t explain each sensor’s function to every participant. We think this because more than 75% felt the technology was appropriate.

This question reverts back to the scale of 1-5 and asked how easy the installation was to use, with 1 being “Not Easy At All” and 5 being “Extremely Easy”. As shown above, 10 people chose number 5, meaning that the installation was “Extremely Easy” to use; this made up 62.5%. The votes of the last 6 people were split evenly: 3 people chose number 4, “Very Easy”, and 3 chose number 3, “Easy”. The figures show that most participants found the installation extremely easy to use, and again this was the response we were hoping for. This was a part of the project we wanted to encourage because making an installation that is difficult to use would discourage guests from collaborating with each other.

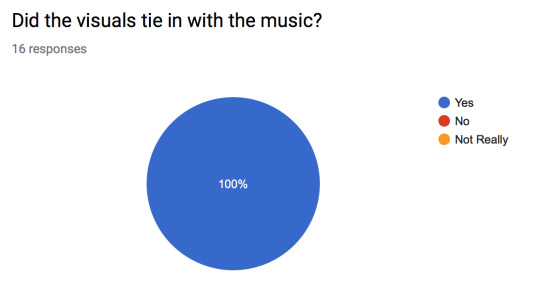

We didn’t need to look too closely into this question, which asked whether the visuals tied in with the music. All 16 participants voted “Yes”, meaning that everyone felt the visuals tied in with the music and concept.

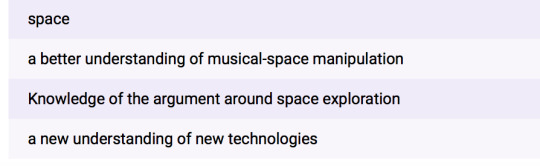

This question asked the participants what they gained from the experience. It seemed to confuse a few participants as to what was being asked specifically. We know this because 2 people said they didn’t understand the question, as shown above. However, the majority of the participants stated that knowledge of new technology was something they gained; others talked about how the music made them feel. An improvement would be to either streamline this question using multiple choice options or rephrase it to be more related to the project’s theme or technology.

This question is a very important one as it helps us know what we can improve on for next time. 1 person said the music (6.3%), 3 people chose technology as needing improvement (18.8%) and 4 people said the visuals (25%). As a group we agreed with this: we were originally planning to merge different space videos together, with the visuals changing as the music changed. However, this didn’t happen as we ran out of time. The other issue we had with the visuals was that, when the video was projected, the image was slightly pixelated. The file was HD and downloaded in a high resolution, but Max MSP didn’t handle the video very well. This should have been tested on a projector prior to the event and is something we would improve.

1 person felt that the theme needed to be improved. This is possibly because they didn’t understand the concept, and not everyone personally enjoys the subject of space. Lastly, 8 people said that there was no need for improvements, meaning that our installation lived up to their expectations, which felt very rewarding.

Conclusion

In conclusion, we believe for many reasons that our project was a success. Firstly, we acknowledged our feedback from our presentation and made sure we streamlined the project’s context and technology. While it would have been interesting to work with additional sensors such as Kinect and conductive paint, we understood that the Kinect was no longer supported and would be difficult to code for. As for conductive paint, the format can be unreliable at times and it can also be a bit messy; this may have put participants off from interacting with the sensors.

Another issue we had was using the MacBook’s webcam for our Reactivision symbols, because quite a few participants would stand in front of the Mac and accidentally block the camera from seeing the symbols. A solution would have been to use an external webcam at a higher position in the room, so that it wouldn’t be easily blocked and would still have a clear view of the symbols. 3D visualisation within our Max MSP patch would also be an option, but this would likely strain the MacBook’s processing power, so using a high quality external webcam would likely be a more efficient solution.

The visuals were definitely an element we would have liked to improve on, because there wasn’t enough variety and our sensors didn’t interact with the visuals enough. A way of doing this would have been to create a Max MSP patch able to switch between multiple video clips based on Reactivision symbols and LEAP Motion gestures; this might have required a slightly more powerful Mac capable of running the LEAP sensor through multiple applications smoothly. Using variation in the clips and making the clips more interactive (in terms of colour and space related visual material) would have been more satisfying to control as a participant.

Adding surround sound monitors to the installation, as initially planned, would also have improved the immersion for the participants. Surround sound would have allowed for more creative panning options in our mix, and this could have really elevated the concept of space exploration and possibly evoked a stronger emotional response.

Despite only gathering 16 attendees across the installation, we were overall very happy with how smoothly the project ran and with the content we produced. Moving the installation to a busier part of Eldon Building would likely have brought more people in, because more people would have been walking past the room. Promoting the social media event much earlier and putting up a lot more physical promotional material would also have improved the project’s outreach. Despite this, our survey results indicated that most attendees really enjoyed the music, concept and interactivity of the project, so we believe we achieved what we set out to do, even if there are areas that need improvement.


References

- Gecko with LEAP and Massive: https://www.youtube.com/watch?v=eovdIjK-Jvc&t=256s

- BBC News. (2018). The story of Elon Musk rocket launch. [online] Available at: https://www.bbc.co.uk/news/av/world-us-canada-42951487/the-story-of-elon-musk-rocket-launch [Accessed 26 Mar. 2019].

- HISTORY. (2010). The Space Race. [online] Available at: https://www.history.com/topics/cold-war/space-race [Accessed 27 Mar. 2019].

- NOAA (2018). How much of the ocean have we explored?. [online] Available at: https://oceanservice.noaa.gov/facts/exploration.html [Accessed 26 Mar. 2019].

- Space photo for poster: https://www.pexels.com/photo/photography-of-stars-and-galaxy-1376766/

- Velardo, V. (2017). What is Adaptive Music?. [online] Available at: http://melodrive.com/blog/what-is-adaptive-music/ [Accessed 16 Nov. 2018].

- Mantione, P. (2017). The Basics of Wavetable Synthesis — Pro Audio Files. [online] Pro Audio Files. Available at: https://theproaudiofiles.com/what-is-wavetable-synthesis/ [Accessed 26 Mar. 2019].

- NASA. (2019). Audio and Ringtones. [online] Available at: https://www.nasa.gov/connect/sounds/index.html [Accessed 28 Mar. 2019].

- Native-instruments.com. (2018). MASSIVE Manual English. [online] Available at: https://www.native-instruments.com/fileadmin/ni_media/downloads/manuals/MASSIVE_Manual_English_1_5_5.pdf [Accessed 26 Mar. 2019].

- Pitchfork. (2016). The 50 Best Ambient Albums of All Time | Pitchfork. [online] Available at: https://pitchfork.com/features/lists-and-guides/9948-the-50-best-ambient-albums-of-all-time/ [Accessed 28 Mar. 2019].

- SonicState. (2013). Geco MIDI Gesture Control For Leap Motion - Review. [Online Video]. 3 December 2013. Available from: https://www.youtube.com/watch?v=eovdIjK-Jvc. [Accessed: 18 November 2018].
