#Personalized Videos for Voters with AI

Text

Voter Communication with AI for Political Campaigns

Connect directly with voters and make an impact by sending AI-enriched personalized voice and video messages. Start today with go2market.

#Personalized Video Messaging for Political Campaigns#AI-enriched voice messages for political campaigne#AI-powered personalized video for political campaign#Send Personalized video messages with AI#Personalized Videos for Voters with AI#Voter Communication with AI#AI Voice & Video Messages#Personalized Video Messages#Personalized Voice Messages#go2market#voice broadcasting#best election survey agencies in india


Text

Hello whumpers!

This is “Whump or Pass”, a blog where you can submit your favorite characters by ask, and see if the whump community would rather whump them, or pass on whumping them!

Run by @angst-is-love-angst-is-life

In case you don’t know what “whump” is, here is the fanlore definition:

The term whump (or whumping) generally refers to a form of Hurt/Comfort that is heavy on the hurt and is often found in gen stories. The exact definition varies and has evolved over time. Essentially, whump involves taking a canon character, and placing them in physically painful or psychologically-damaging scenarios. - Fanlore

Rules/Guidelines

Propaganda in visual and/or written form is allowed AND encouraged

Voter fraud is also allowed because I believe if you're dedicated enough to do it, you should. But remember: With great power, there must also come great responsibility.

Characters:

Submit the name of any fictional (see below) character and the name of the media they are from.

All submissions must be fictional; possible exceptions for AU versions of a real person will be decided by me on a case-by-case basis. A character played by an actor in live action does qualify as fictional. (Ex. “Jensen Ackles” would not be allowed, but “Dean Winchester,” who is played by Jensen Ackles, would be allowed.)

OCs are allowed.

If submitting a specific version of a character, specify exactly which one it is.

Pictures (Optional):

You may include a picture of your character or OC.

If no picture is provided, I will add one.

No NSFW material in the pictures.

Do not submit an AI generated image. If an image of the character does not exist, please use a faceclaim, picrew, art, or something similar. (Faceclaims are allowed for the same reason actors playing a character are).

For initial submission, picture should be a still image and not a gif or video.

Background (Optional):

You may include a short blurb/background of the character if you like.

Propaganda (Optional):

If you reblog a poll with propaganda for why this character should be whumped, I will reblog it here under the tag “whump or pass propaganda”

Gifs or videos can be included in reblog propaganda, as well as longer written information about the character’s history and why they should be whumped.

I am not in every fandom, so if I get something like a picture or name wrong please don’t hesitate to let me know so I can correct it :]

If you want to submit a picture but would like to remain anonymous, I can copy and paste everything in your ask and exclude your account name in the post (or tag a sideblog if you still want to be included in the post).

Additional guidelines to be added as needed. My apologies if these are a little strict, just making sure to cover as many bases as possible.


Text

On a stifling April afternoon in Ajmer, in the Indian state of Rajasthan, local politician Shakti Singh Rathore sat down in front of a greenscreen to shoot a short video. He looked nervous. It was his first time being cloned.

Wearing a crisp white shirt and a ceremonial saffron scarf bearing a lotus flower—the logo of the BJP, the country’s ruling party—Rathore pressed his palms together and greeted his audience in Hindi. “Namashkar,” he began. “To all my brothers—”

Before he could continue, the director of the shoot walked into the frame. Divyendra Singh Jadoun, a 31-year-old with a bald head and a thick black beard, told Rathore he was moving around too much on camera. Jadoun was trying to capture enough audio and video data to build an AI deepfake of Rathore that would convince 300,000 potential voters around Ajmer that they’d had a personalized conversation with him—but excess movement would break the algorithm. Jadoun told his subject to look straight into the camera and move only his lips. “Start again,” he said.

Right now, the world’s largest democracy is going to the polls. Close to a billion Indians are eligible to vote as part of the country’s general election, and deepfakes could play a decisive, and potentially divisive, role. India’s political parties have exploited AI to warp reality through cheap audio fakes, propaganda images, and AI parodies. But while the global discourse on deepfakes often focuses on misinformation, disinformation, and other societal harms, many Indian politicians are using the technology for a different purpose: voter outreach.

Across the ideological spectrum, they’re relying on AI to help them navigate the nation’s 22 official languages and thousands of regional dialects, and to deliver personalized messages in farther-flung communities. While the US recently made it illegal to use AI-generated voices for unsolicited calls, in India sanctioned deepfakes have become a $60 million business opportunity. More than 50 million AI-generated voice clone calls were made in the two months leading up to the start of the elections in April—and millions more will be made during voting, one of the country’s largest business messaging operators told WIRED.

Jadoun is the poster boy of this burgeoning industry. His firm, Polymath Synthetic Media Solutions, is one of many deepfake service providers from across India that have emerged to cater to the political class. This election season, Jadoun has delivered five AI campaigns so far, for which his company has been paid a total of $55,000. (He charges significantly less than the big political consultants—125,000 rupees [$1,500] to make a digital avatar, and 60,000 rupees [$720] for an audio clone.) He’s made deepfakes for Prem Singh Tamang, the chief minister of the Himalayan state of Sikkim, and resurrected Y. S. Rajasekhara Reddy, an iconic politician who died in a helicopter crash in 2009, to endorse his son Y. S. Jagan Mohan Reddy, currently chief minister of the state of Andhra Pradesh. Jadoun has also created AI-generated propaganda songs for several politicians, including Tamang, a local candidate for parliament, and the chief minister of the western state of Maharashtra. “He is our pride,” ran one song in Hindi about a local politician in Ajmer, with male and female voices set to a peppy tune. “He’s always been impartial.”

While Rathore isn’t up for election this year, he’s one of more than 18 million BJP volunteers tasked with ensuring that the government of Prime Minister Narendra Modi maintains its hold on power. In the past, that would have meant spending months crisscrossing Rajasthan, a desert state roughly the size of Italy, to speak with voters individually, reminding them of how they have benefited from various BJP social programs—pensions, free tanks for cooking gas, cash payments for pregnant women. But with the help of Jadoun’s deepfakes, Rathore’s job has gotten a lot easier.

He’ll spend 15 minutes here talking to the camera about some of the key election issues, while Jadoun prompts him with questions. But it doesn’t really matter what he says. All Jadoun needs is Rathore’s voice. Once that’s done, Jadoun will use the data to generate videos and calls that will go directly to voters’ phones. In lieu of a knock at their door or a quick handshake at a rally, they’ll see or hear Rathore address them by name and talk with eerie specificity about the issues that matter most to them and ask them to vote for the BJP. If they ask questions, the AI should respond—in a clear and calm voice that’s almost better than the real Rathore’s rapid drawl. Less tech-savvy voters may not even realize they’ve been talking to a machine. Even Rathore admits he doesn’t know much about AI. But he understands psychology. “Such calls can help with swing voters.”


Text

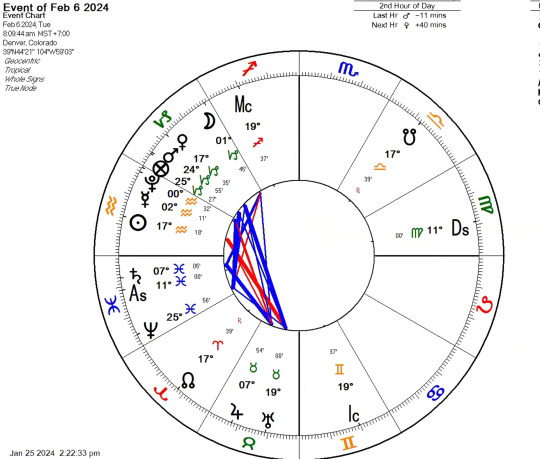

February 2024 Important Dates

AKA my notes on The Astrology Podcast's February forecast.

January recap: With Pluto now in Aquarius we've got new technological developments: "flying taxis" going to market, Google's new AI set to release around Jupiter in Gemini, and the first commercially available see-through LED screens. In Saturn-Neptune news, Apple's new VR operating system is being advertised as "spatial computing." Aquarius loves to experiment for the joy of discovery--even if society ends up rejecting some of these new projects. Austin points out many prophetic "near future" fiction stories came out under Jupiter-Saturn in Libra in the 1980s, which was a preview of those conjunctions occurring in air signs for the next 200 years (we had the last earth ones a few years ago). Saturn-Neptune also connotes widespread political misinformation (as it did in 2016)--we've got AI deepfake videos of political figures being used not just for humor, but also to try and sway voters in the New Hampshire Primary. Another notable Saturn in Pisces story is the restriction of the Red Sea trade route and subsequent rerouting of cargo ships along global trade routes. The Mars-Saturn conjunction in Pisces in March will likely show major developments in maritime combat or disasters.

We enter the month fresh off Pluto's ingress into Aquarius. There's a lot of energy in the sign this month, some good and some difficult. The faster-moving planets will be joining Pluto in Aquarius specifically for the first time since the 18th century, giving us the first tastes of what the next 20 years will bring for society at large. For personal charts, what house does Aquarius fall in?

February 5th - Mercury enters Aquarius, conjoins Pluto

This is the first planet to join the Sun and Pluto in Aquarius. Venus and Mars will follow this ingress-conjunction pattern later this month--and the new Moon is in Aquarius. Mercury-Pluto brings up information and power such as classified document leaks, as well as hidden pathways or getting lost in the labyrinth. Determined efforts to delve into deeper truths can pay off, but we can also become obsessed with something no one else can see. Other significations include taboo subjects, with science and social rules being especially important in Aquarius, and negating ideas we once thought were true. Paranoia and manipulations also abound with Pluto-Mercury. In Aquarius we'll see these issues take light especially through new technologies. In general Pluto magnifies small things to their biggest extremes--something microscopic may change society irrevocably, etc. The PRC has a timed chart with Aquarius rising with the Moon in Aquarius, and indeed has been leading the world in uses & development of certain new technologies, which in turn has brought power struggles with other major players in those fields.

February 6th - Fortunate date (not pictured)

Set at about 8:00AM local time, this chart should give you 11° Pisces rising. The Ascendant ruler Jupiter is in the 3rd house in a day chart, emphasizing communication and other 3rd house topics. The Moon in early Capricorn applies to a trine with Jupiter, bonifying her. The Jupiter-Saturn sextile is emphasized here; while Saturn is very close to the Ascendant, he's more cooperative in a day chart, and is being received by Jupiter (is in Jupiter's sign), who he's sextiling. Thus Chris predicts it'll be a good balance of consolidation and growth. This does have Mars in the 11th house in a day chart, so it's not great for matters involving friends, groups, and alliances. But it is good for communicating, neighborhood, education/learning, weekly schedule, siblings, and starting a daily practice (Saturn will help us establish routines).

February 9th - Aquarius New Moon

At 20° of Aquarius, the closest configuration is a square from Uranus (19♉), connoting a disruptive or unexpected component. This is also the first Aquarius New Moon with Pluto present--Pluto will be weighing in on all monthly meetings in Aquarius for now. We can expect concerns around independence and freedom, but also emotional volatility. "How do I break from this pattern?" asks the rebel, knowing it'll be better than keeping things as they are. Mercury also applies to a square with Jupiter during this time, expanding communications & bringing (possibly excessive) optimism. The Jupiter-Saturn sextile that hung over much of January weighs in as well, balancing our drives for both growth and consolidation.

February 12th/13th - Mars enters Aquarius

Soon after he conjoins Pluto.

February 14th - Mars conjunct Pluto

This is the first hard aspect since the square circa October 7th. There are going to be about 10 of these conjunctions during Pluto's trip through Aquarius. Mars connotes military operations, while Pluto brings underground groups and struggles. We've been having power dynamics stirred up in the Capricorn parts of our charts the past 20 years, and now this moves to our Aquarius house/area of life. Possessiveness and excessive force are also connoted by this planetary combination; exercise caution and take special care not to go too far. Austin describes this feeling as a "struggle against annihilation," even when we're not actually fighting for our lives. It'll take self-control and grace to navigate this Valentine's Day well.

February 16th - Venus enters Aquarius

Soon after she will conjoin Pluto, and later Mars.

February 17th - Venus conjunct Pluto

The intensity, obsessions, and making mountains of molehills brought up by other planets' Pluto contacts will now show themselves in the realm of relationships and social interactions. We get the extremes of affection and its negation with Venus-Pluto. This can be a good time for experimenting in the arts: we're getting a new movie based off Frankenstein, which was published under Pluto in Aquarius. Much of the novel is from the perspective of not the doctor but his experiment--when are we our own lab rats?

February 18th - Sun enters Pisces

February 22nd - Venus conjunct Mars

Unfortunately Mars, Aquarius, and Pluto do not bode well for Valentine's-adjacent activities. Some positive connotations include magnetic allure and deep, passionate connections with others, extreme vulnerability and devotion. However, keep watchful for power struggles and manipulation in relationships and attraction. Ask yourself, "is this healthy or is this too far?" and try to set healthy boundaries. (Though the answers may be clearer in hindsight.) Look out for hastiness in relationships, jealousy and aggression in love, or attraction to drama or conflict. Try to balance passion with reason, communicate clearly, and express that energy through creative outlets.

February 23rd - Mercury enters Pisces

February 24th - Virgo Full Moon

Immediately after its exact opposition to the Sun, the Moon applies to one with Saturn, and the Sun as a matter of course is also applying to Saturn by conjunction (pictured above is the Moon between these oppositions). Austin describes this as a magnified check-in with Saturn, as we're approaching the 1-year mark for Saturn in Pisces (early March). How have you been bearing having to swim with weights on? Or maybe you've hooked something powerful on your fishing line--how will you reel it in? Chris says this lunation highlights tension in the Virgo part of our charts as well, shedding light that may help us identify solutions. We have a Sun-Mercury-Saturn conjunction a few days later that will bring our attention back to Pisces.

February 26th/27th - Mars square Jupiter

Jupiter wants to help, but something's getting in his way. We can also think about taking risks, leaving our comfort zone, and increased energy/enthusiasm. However, as Mars is overcoming Jupiter (earlier in the zodiac), traditional sources would indicate that the warlike side of things will gain the upper hand over peaceful Jupiter. Mars in Aquarius connotes rogue or outsider forces, while Jupiter is privileged by stability in Taurus. Keep a lookout for global trade points and major conflicts, which will likely see developments around this time. Some positives of Mars-Jupiter include increased motivation, taking bold actions, and meeting ambitious goals. On the flipside is impulsiveness, overconfidence, and recklessness. Mars-Jupiter is willing to take a risk. Sometimes, it's better not to know exactly what you're getting into so you don't get overwhelmed by a monumental task; other times, it doesn't work out. This is the placement of the intrepid explorer.

February 28th - Sun, Mercury, and Saturn conjunction in Pisces

This occurs at 9 degrees of Pisces, and each planet applies to a sextile with Jupiter in the following days. Jupiter brings us another management check-in after Saturn's: how have we been balancing growth with consolidation? Jupiter's going to pick up speed and zoom out of Taurus later this year, moving away from this balanced position to a difficult square with Saturn. We've been balancing weight with buoyancy, but once in Gemini Jupiter forms more of a helicopter to Saturn's submarine--vehicles that can't be combined. With Saturn and Mercury (and the Sun shedding light), we'll likely see changes in the status of maritime trade and travel. From Saturn to Jupiter we may see something go from being stuck to unstuck.

As we wrap up the month, we've got some Pisces planets approaching Neptune while Jupiter approaches Uranus. And don't forget, we've got 29 days in February this year!

#transits#forecast#february 2024#mundane astrology#the astrology podcast#pluto in aquarius#mercury conjunct pluto#mars conjunct pluto#venus conjunct pluto#venus conjunct mars#saturn in pisces


Text

Brazil defines rules for AI in elections, candidates could lose mandate if they use tools to spread fake news

Brazil’s Superior Electoral Court approved a new set of rules for the use of artificial intelligence (AI) in elections. Among the measures are the complete ban on deep fakes and the mandatory warning of the use of AI in all content shared by candidates and their campaigns.

The country’s next elections, in which voters will choose local mayors and councilors, will take place in October, and the new AI rules have already come into force. This is the first set of regulations issued by the electoral court on the use of AI by candidates and political parties.

The rules involve prohibiting all types of deep fakes; the obligation to warn about the use of AI in electoral propaganda, even if it is in neutral videos and photos; and the restriction of the use of robots to mediate contact with voters (the campaign cannot simulate dialogue with the candidate or any other person, for example).

According to the rules, any candidate who uses deep fakes to spread false content could lose their mandate if elected. Brazil’s electoral court defines deep fakes as “synthetic content in audio, video or both, which has been digitally generated or manipulated to create, replace or alter the image or voice of a living, dead or fictitious person.”


#brazil#brazilian politics#politics#brazilian elections#technology#artificial intelligence#mod nise da silveira#image description in alt


Text

Where we are with AI, in March of 2024

We’ve seen the development of photo and video manipulation, from the earliest days of Photoshop up to now. This year, though, things are reaching the level we’ve all been concerned about – we can’t tell if important things we see are real. Soon, not even the experts will be able to tell, and anyone will easily be able to make any fake video they want.

Look at this image. Does it look real? Give the article a read.

When I first glanced at it, I didn’t even notice he had shirt sleeves and no shirt! Because I was focusing on his face and the smoke. That article explains some of the best ways to tell a photo is AI. But most notably, it says, “There are so many obvious signs this is AI, but most would miss them because they’re not part of the focus of the image, and since this is not a case where you think someone would be tricking you, you have no reason to analyze it that closely.”

A colleague who works on visual effects for movies shared this video created by OpenAI Sora.


He explained, “I work in visual effects. It is my job to make the audience fooled by what they are seeing. Those of us in the industry tend to see a lot of flaws that most people miss. We will soon be at a point where I won't be able to see the flaws.”

Puppies playing in snow is fun, and I’m sure many people look forward to infinite adorable animal videos! But of course, that’s not why you’re reading this. This is about AI videos of any person, place, or thing that your average grade-schooler will potentially be able to create. Month by month the software will get better, easier, and cheaper. AI photos have already started appearing ahead of the election, and both supporters and opponents of any politician will be using them.

Could you tell that the two photos are fake? As a video editor, I could. But the first one was harder; I had to look for clues like the ones in the McDonald’s smoking man photo. The worst part of these photos is what the person who created them said in defense:

“I’m not claiming it is accurate. I’m not saying, ‘Hey, look, Donald Trump was at this party with all of these African American voters. Look how much they love him!’ If anybody’s voting one way or another because of one photo they see on a Facebook page, that’s a problem with that person, not with the post itself.”

It’s bad enough that they didn’t care people were sharing those photos under the impression they were real. It will be worse when people are using this software to purposefully manipulate people’s beliefs and feelings to sway their vote, or worse, to fuel outrage and despair.

So, you know how you’ll go online on April Fools’ Day and your brain will be on ‘suspicious’ mode? Have you heard stories of real things that people didn’t believe because they happened on April 1st? From now on, that is how you need to calibrate your brain for important images and videos.

If a familiar face says something notable, out of character, or outrageous, and you didn’t learn about it from a reliable news source, you will need to verify it before you tuck it away in your brain’s section of “things I believe.” Viral photos and videos on Twitter and YouTube aren’t reliable, and you’re better off getting your news directly from trustworthy sources like news channels. If you find something and want to verify it, use your search engine and key words, especially for strange, suspicious, or implausible quotes, and find a news website you can rely on. If it’s gone viral and it’s AI, it’s likely that the top results will be news websites debunking it.

Starting now, remember: Suspicious and outrageous things are false until proven true.

To sum up, here’s the News Literacy Project’s guide for vetting news sources:

Do a quick search: Conducting a simple search for information about a news source is a key first step in evaluating its credibility.

Look for standards: Reputable news organizations aspire to ethical guidelines and standards, including fairness, accuracy, and independence.

Check for transparency: Quality news sources should be transparent, not only about their reporting practices, but also about their ownership and funding.

Examine how errors are handled: Credible news sources are accountable for mistakes and correct them. Do you see evidence that this source corrects or clarifies errors?

Assess news coverage: An important step in vetting sources is taking time to read and assess several news articles from them, not just the one you clicked on.

Also, look for clues that you should avoid a website. They include:

False or untrue content

Clickbait tactics (melodramatic headlines)

Lack of balance (feels like it was written by a person who took a side)

Manipulated images or videos

State-run or state-sponsored propaganda

Dangerous, offensive, and malicious content

Stay aware and attentive, good luck, and let’s hope we can at least keep this dumpster fire in the dumpster.

#ai#artificial intelligence#ai software#fake news#politics#politicians#trump#biden#openai#Gemini#chatgpt#news#skeptic#manipulation#manipulated video#manipulated photo#debunk#clickbait#propaganda#Suspicious and outrageous things are false until proven true#Youtube


Text

By: Christina Buttons

Published: Nov 16, 2023

Hamas’ October 7 terrorist attacks represented the worst single-day massacre of Jews since the Holocaust. In the west, the most common reaction was grief and shock. Yet there’s also been no shortage of anti-Israel activists around the world who’ve taken to the streets, lauding the killers as “martyrs” and “freedom fighters.” Many of these events have been overtly antisemitic, with some even breaking out into chants of “gas the Jews.”

Young people, particularly those who self-identify as members of the progressive left, are disproportionately represented among those who’ve downplayed, dismissed, justified, or even celebrated Hamas’ actions. Claims of “genocide” and “ethnic cleansing” are now casually lobbed not only at Israel, but Jews more generally. Not surprisingly, this has been accompanied by a substantial increase in antisemitic hate crimes.

A survey of 2,116 registered U.S. voters, conducted in mid-October by The Harris Poll and HarrisX, revealed a striking generational divide on the Israel-Palestine conflict. Approximately half of those respondents aged 18 to 34 expressed the belief that the mass killing of Israeli civilians could be justified by Palestinian grievances. As the age of respondents increased, support for this proposition declined significantly. A similar pattern was reflected in the responses to other questions about Israel.

This result cannot be blamed, at least not entirely, on the political atmosphere on U.S. campuses, as only about 35 percent of Americans aged 25 and older have a bachelor’s degree. Almost all Americans consume social media in some form, however. And these online spaces are where much of the pro-terror radicalization seems to be occurring.

Video is an especially effective propaganda medium. From October 7 onwards, social media channels have been flooded with clips posted by high-follower accounts linked to Hamas. Some of the individuals spreading this content present as “journalists,” even though they’re known to have ingratiated themselves with Hamas’ leadership. In one notorious case, a CNN freelancer posted a photo of himself holding a grenade while he accompanied Hamas on the 10/7 rampage.

Even mainstream media outlets trying to act in good faith have been caught repeating fake news that’s been fed to them, directly or indirectly, by Hamas. In other cases, online opportunists, some of them with purely financial motives, have exploited the 10/7 attacks for personal gain, using AI-generated imagery and pro-Hamas bots to flood the internet with clickbait.

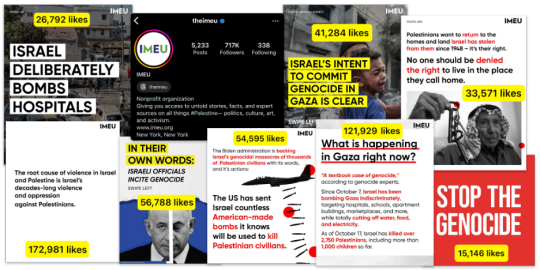

Instagram has become a particularly active arena for pro-Hamas propaganda. At last count, the hashtag #freepalestine had appeared on over 5.8 million posts, exceeding #standwithisrael’s 220,000 by a factor of more than 20. Similarly, #gazaunderattack has amassed 1.8 million instances, an order of magnitude more than #israelunderattack’s 134,000.

Israel may have the upper hand in the unfolding military conflict within Gaza. But it is evident that Hamas and its allies are winning over many youth by weaponizing the pre-existing idioms of social-justice advocacy. Since 2020, Instagram, like all social-media platforms, has been awash with dubious slideshows purporting to educate users about “systemic racism,” “decolonization,” and the need for non-white people to rise up and “disrupt” our supposedly white-supremacist western societies. The formula worked as a means to promote Black Lives Matter protests. And anti-Israel groups are now seeking to copy this formula in their campaign to support Hamas.

In particular, these groups seek to replicate the powerful public reaction set off by video of George Floyd’s murderous mistreatment by Minneapolis police. War is hell, as the expression goes. And so in Gaza, as in every other military conflict known to history, there are instances of civilians being caught in the crossfire, or victimized by attacks against nearby military targets—scenes that are played up incessantly as evidence of supposed genocide.

I recognize these propaganda techniques because back in 2020, I was responsible for curating and creating content for an influential progressive Instagram account with more than 730,000 followers. My role was to keep people engaged and enraged. Like many other old-fashioned liberals, I’d mistakenly perceived the social-justice phenomenon as a moral extrapolation of the civil-rights movement. In time, I realized that what I was really doing was signal-boosting the values of far-left academics seeking to destroy liberal values. Part of that Marxist-inspired academic movement involves slotting whole swathes of humanity into boxes marked either “oppressor” or “oppressed.” Having put the Palestinians in the second box, these ideologues are inclined to support any action, however monstrous, presented as a strategy of liberation.

As it turns out, being an anti-oppressive social-justice revolutionary can be quite lucrative. Among the most prolific disseminators of anti-Israel propaganda, for instance, is the Institute for Middle East Understanding (IMEU), a well-funded California-based nonprofit founded by “concerned Americans.” The IMEU Instagram account now has 700,000 followers, over 200,000 of these having been recruited since 10/7.

According to IMEU Communications Director Omar Baddar, who draws a $100,000 annual salary from the organization, the group has had the most “success” with young users. In a 2021 online workshop, he discussed his strategy of leveraging “social justice content” on Instagram, while citing studies that show Americans’ growing reliance on social media for news. He noted that, unlike mainstream outlets (which typically employ stringent fact-checking techniques and attempt to provide balanced reporting), social media allows him more direct control of a desired narrative. When it comes to the narrative surrounding violence, for instance, “Israel, as an occupying power, is inherently the initiator of [all] violence.”

As noted above, a key part of this strategy involves drawing linkages to pre-existing social-justice ideas and memes. “Jim Crow segregation is obviously something that every American understands, so explaining how the parallels between Israeli apartheid and that are very useful,” Baddar told his audience. He even hints at exploiting Americans’ feelings of guilt over slavery (and white guilt, more generally) as a useful tactic.

As the Jewish Institute for Liberal Values has noted, this type of approach can seduce even some Jewish groups, many of which now tend to prioritize trending social-justice slogans and buzzwords over the actual interests of Jewish people. This includes Jewish Voice for Peace, whose influential Instagram account is nearing the million-follower mark.

Sayf Abdeen, who made a name for himself as a “Diversity, Inclusion and Overseas officer” at the London School of Economics, is another well-heeled propagandist who’s become an expert at attracting the attention of young, low-information Instagram addicts. His popular account is called Let’s Talk Palestine, a nod to a popular 2020 social-justice slideshow page called So You Want to Talk About. He notes that “anger or frustration is really good at galvanizing people and attracting attention.” And once you’ve gotten them riled up, he advises, hit them with a “call to action” that transforms ordinary youth into activists.

In this regard, Baddar is particularly interested in getting his audience to enroll in Boycott, Divestment, and Sanctions campaigns; and, of course, to donate money to the IMEU. The group has ramped up its Instagram activity to between four and eight posts daily, with each depicting Israel as the sole aggressor in an unprovoked attack on Palestinians (which the IMEU naturally characterizes as “genocide”). The strategy has proven effective, as the IMEU is gaining approximately 5,000 to 10,000 new followers every day.

As a means of sensationalizing its content, the IMEU often parrots the high casualty figures sourced from Gaza’s Hamas-controlled health ministry, figures to which U.S. President Joe Biden assigns “no confidence.” (While any loss of civilian life is tragic, Hamas has a history of dramatically inflating casualty counts as a means to garner sympathy for its cause. Such figures are often debunked after follow-up investigations.)

The IMEU has posted claims that deny or downplay the horrors of October 7, even in the face of forensic evidence confirming Hamas’ atrocities. Their posts sow distrust in more credible sources, including the White House, with the apparent goal of keeping users inside a propaganda cocoon. IMEU posts that spuriously blamed Israel for a deadly October 17 explosion on the grounds of Gaza’s al-Ahli Arab Hospital remain uncorrected on the group’s feed, even weeks after evidence revealed that the deaths—dozens, not hundreds, as Hamas had initially claimed—were the result of a misfired Islamic Jihad rocket. The fact that Palestinians killed their own people and then tried to blame Israel for it apparently isn’t part of the preferred IMEU narrative.

Numerous posts accuse Israel of targeting hospitals and civilian areas, while neglecting to mention that Hamas has long used these locations as headquarters and ammunition depots. The IMEU also passes over the fact that Hamas has instructed civilians to stay in the most dangerous areas; and in some cases has physically blocked non-combatants from heading to safer areas in the south of Gaza, as part of an apparent strategy of maximizing Palestinian civilian casualties for propaganda purposes. One might think that a group devoted to a proper “understanding” of the Middle East conflict—that’s the U in IMEU, remember—might see these facts as significant.

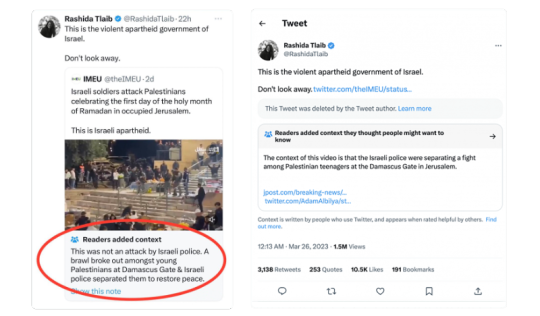

Despite the manipulative and deceptive nature of IMEU’s propaganda campaign, Instagram (which is owned by Meta Platforms, formerly known as Facebook, Inc.) doesn’t seem to have taken measures to fact-check, correct, or contextualize any of the group’s posts. By contrast, on X (formerly Twitter), users are better protected thanks to the new “Community Notes” feature. Earlier this year, the IMEU posted a video that, it claimed, showed “Israeli soldiers attack[ing] Palestinians,” which went viral after being shared by U.S. congresswoman Rashida Tlaib. In fact, the video showed Israeli police officers breaking up a fight among Palestinian teenagers. Embarrassed by the correction, the IMEU deleted the post.

To be fair, the Instagram platform wasn’t designed for in-depth political discussions: Following its initial release in 2010, it was mostly used by people seeking to show off pictures of nature, vacations, fashion, pets, shopping “hauls,” and recipes. Unlike X, it doesn’t encourage users to embed clickable links and launch into multi-thread arguments. As a result, there’s been less public scrutiny of the role that Instagram plays in forming public attitudes on serious political issues, as compared to Facebook, Twitter, and TikTok. As the IMEU example shows, that needs to change.

A dominant conceit within the social-justice movement is that its leading activists are plucky, grass-roots figures powered by big hearts but small budgets. But the IMEU's financial statements indicate assets of over $3 million. In 2022 alone, the group received $1.49 million in donations, and held a gala event that netted $659,000. Prominent donors have included George Soros and the Rockefeller Brothers Fund (which has donated millions of dollars to dozens of anti-Israel causes and BDS campaigns).

What would a true “understanding” of the Middle East conflict look like? It might start with an acknowledgement of the fact that Israel’s military has repeatedly instructed Gazan civilians to evacuate areas in which it intends to conduct ground operations—the exact opposite of what one would expect from a “genocidal” military hegemon seeking to round up and exterminate a civilian population. Because Hamas hides its operatives in hospitals, schools, and civilian homes, and ignores the principle of distinction, it is the terrorist group, not the Israeli soldiers fighting it, that should be held responsible for civilian deaths, according to international law. Investigations into alleged crimes committed by Israel during past wars or conflicts haven’t resulted in formal charges or convictions, which says quite a lot given the enthusiasm that many international leaders have for turning the Jewish state into an international pariah.

Being a sovereign state and a member of the United Nations, Israel is bound by the laws of war, and has every incentive to minimize civilian casualties where possible, while Hamas has every incentive to maximize them: Indeed, for Hamas’ propaganda purposes, there is scant difference between a dead Jew and a dead Palestinian—the former being held up as purported evidence of Hamas’ military prowess and the latter being presented as evidence of Palestinian victimization.

Hamas, which became the dominant force in Gaza following Israel’s complete withdrawal from Gaza two decades ago, has operated as an Islamist kleptocracy, hoarding hundreds of millions of dollars while 80 percent of Gazans languish in poverty. How morally grotesque is it that western activists and hash-taggers who fly the banner of social justice have tied their cause to a terrorist group that steals humanitarian aid and uses women and children as human shields?

The group’s founding covenant, drafted in 1988, endorses the extermination of Jews and their state. And Hamas leaders have vowed to repeat the mass murders of October 7 until that goal is achieved. The idea that Israel must now grant a “ceasefire” to this same group, as many activists are demanding on social media, is absurd. The proper time for a ceasefire was October 6. The idea of Israel willfully calling off its military operations so that Hamas can have the chance to better redeploy its remaining forces in Gaza City is ludicrous.

* * *

“It’s just social media,” some may say. “You can just log off.” But it’s not that easy. Rightly or wrongly, many of us have come to see our socials as a window into what the rest of the world thinks. And the Jewish people I’ve spoken to on Instagram have told me that these last few weeks have been some of the worst of their lives—in part because every time they look at their phone, they see legions of users cheering on the same terrorists who murdered defenseless Israeli men, women, and children.

One Jewish man told me that he’d recently purchased a gun, and was now enrolled in firearms training. Others told me that they’ve upgraded their home security systems. One woman told me that she’s had talks with her daughter about not advertising her Jewish faith in public—“because I’m genuinely afraid of hateful people who’ve been brainwashed.” Meanwhile, efforts to fight back online can have unpredictable results. One woman I know, who’s employed in the progressive nonprofit sector, confided that her own colleagues attempted to have her fired after they saw her pro-Israel social media posts.

Calling out terrorist propaganda disguised as social-justice mantras shouldn’t be a lonely or professionally risky task: We should all be doing it. Not just because there’s inherent value in promoting truth, debunking falsehoods, and fighting antisemitism (in both letter and spirit); but also because some sizeable fraction of the young Instagram junkies who are now spreading Hamas propaganda will come to actually internalize the proposition that terrorism is justified in the name of social justice.

The 10/7 attacks won’t be the last mass-casualty Islamist terrorist massacre. And Israel is hardly the only country that Islamists target. If—god forbid—the United States suffers another 9/11-scale attack, will these same pro-Hamas meme peddlers similarly excuse it as the righteous fury of the world’s oppressed? As awful as post-10/7 Instagram has been, it has at least supplied us with a cautionary glimpse into the hive mind of the online social-justice community. If these repugnant attitudes spread and metastasize, none of us can say we weren’t put on notice.

#Christina Buttons#propaganda#antisemitism#islam#islamic terrorism#hamas#hamas supporters#terrorism supporters#exterminate hamas#disinformation#social media#social media propaganda#no ceasefire#israel#palestine#free palestine from hamas#free gaza from hamas#free palestine#free gaza#IMEU#Institute for Middle East Understanding#islamic propaganda#religion is a mental illness

Text

LANSING, Mich. -- Michigan is joining an effort to curb deceptive uses of artificial intelligence and manipulated media through state-level policies as Congress and the Federal Election Commission continue to debate more sweeping regulations ahead of the 2024 elections.

Campaigns on the state and federal level will be required to clearly say which political advertisements airing in Michigan were created using artificial intelligence under legislation expected to be signed in the coming days by Gov. Gretchen Whitmer, a Democrat. It also would prohibit use of AI-generated deepfakes within 90 days of an election without a separate disclosure identifying the media as manipulated.

Deepfakes are fake media that misrepresent someone as doing or saying something they didn't. They're created using generative artificial intelligence, a type of AI that can create convincing images, videos or audio clips in seconds.

There are increasing concerns that generative AI will be used in the 2024 presidential race to mislead voters, impersonate candidates and undermine elections on a scale and at a speed not yet seen.

Candidates and committees in the race already are experimenting with the rapidly advancing technology, which in recent years has become cheaper, faster and easier for the public to use.

The Republican National Committee in April released an entirely AI-generated ad meant to show the future of the United States if President Joe Biden is reelected. Disclosing in small print that it was made with AI, it featured fake but realistic photos showing boarded-up storefronts, armored military patrols in the streets, and huge increases in immigration creating panic.

In July, Never Back Down, a super PAC supporting Republican Florida Gov. Ron DeSantis, used an AI voice cloning tool to imitate former President Donald Trump’s voice, making it seem like he narrated a social media post he made despite never saying the statement aloud.

Experts say these are just glimpses of what could ensue if campaigns or outside actors decide to use AI deepfakes in more malicious ways.

So far, states including California, Minnesota, Texas and Washington have passed laws regulating deepfakes in political advertising. Similar legislation has been introduced in Illinois, New Jersey and New York, according to the nonprofit advocacy group Public Citizen.

Under Michigan's legislation, any person, committee or other entity that distributes an advertisement for a candidate would be required to clearly state if it uses generative AI. The disclosure would need to be in the same font size as the majority of the text in print ads, and would need to appear “for at least four seconds in letters that are as large as the majority of any text” in television ads, according to a legislative analysis from the state House Fiscal Agency.

Deepfakes used within 90 days of the election would require a separate disclaimer informing the viewer that the content is manipulated to depict speech or conduct that did not occur. If the media is a video, the disclaimer would need to be clearly visible and appear throughout the video's entirety.

Campaigns could face a misdemeanor punishable by up to 93 days in prison, a fine of up to $1,000, or both for the first violation of the proposed laws. The attorney general or the candidate harmed by the deceptive media could apply to the appropriate circuit court for relief.

Federal lawmakers on both sides have stressed the importance of legislating deepfakes in political advertising, and held meetings to discuss it, but Congress has not yet passed anything.

A recent bipartisan Senate bill, co-sponsored by Democratic Sen. Amy Klobuchar of Minnesota, Republican Sen. Josh Hawley of Missouri and others, would ban “materially deceptive” deepfakes relating to federal candidates, with exceptions for parody and satire.

Michigan Secretary of State Jocelyn Benson flew to Washington, D.C. in early November to participate in a bipartisan discussion on AI and elections and called on senators to pass Klobuchar and Hawley's federal Deceptive AI Act. Benson said she also encouraged senators to return home and lobby their state lawmakers to pass similar legislation that makes sense for their states.

Federal law is limited in its ability to regulate AI at the state and local levels, Benson said in an interview, adding that states also need federal funds to tackle the challenges posed by AI.

“All of this is made real if the federal government gave us money to hire someone to just handle AI in our states, and similarly educate voters about how to spot deepfakes and what to do when you find them,” Benson said. “That solves a lot of the problems. We can’t do it on our own.”

In August, the Federal Election Commission took a procedural step toward potentially regulating AI-generated deepfakes in political ads under its existing rules against “fraudulent misrepresentation.” Though the commission held a public comment period on the petition, brought by Public Citizen, it hasn’t yet made any ruling.

Social media companies also have announced some guidelines meant to mitigate the spread of harmful deepfakes. Meta, which owns Facebook and Instagram, announced earlier this month that it will require political ads running on the platforms to disclose if they were created using AI. Google unveiled a similar AI labeling policy in September for political ads that play on YouTube or other Google platforms.

Text

Enhance Voter Communication And Political Campaign Strategy By Using AI Enriched Personalized Voice And Video Messages

Connect directly with voters and make an impact by sending AI-enriched Personalized Video messages through WhatsApp and personalized voice messages using voice broadcasting technology. Connect with go2market to fuel your campaigns with the power of AI.

#go2marketindia#Personalized Voice And Video Messages#serviceprovider#ai voice#ai video#elecction 2024

Text

Personalized Videos for Voters for Political Campaigns

Connect directly with voters and make an impact by sending AI-enriched personalized voice and video messages. Start today with go2market

#Personalized Video Messaging for Political Campaigns#AI-enriched voice messages for political campaigne#AI-powered personalized video for political campaign#Send Personalized video messages with AI#Personalized Videos for Voters with AI#Voter Communication with AI#AI Voice & Video Messages#Personalized Video Messages#Personalized Voice Messages#go2market#political survey companies in india#voice broadcasting#best election survey agencies in india#bulk sms service provider in delhi

Text

How to safeguard your business from AI-generated deepfakes

New Post has been published on https://thedigitalinsider.com/how-to-safeguard-your-business-from-ai-generated-deepfakes/

Recently, cybercriminals used ‘deepfake’ videos of the executives of a multinational company to convince the company’s Hong Kong-based employees to wire out US $25.6 million. Based on a video conference call featuring multiple deepfakes, the employees believed that their UK-based chief financial officer had requested that the funds be transferred. Police have reportedly arrested six people in connection with the scam. This use of AI technology is dangerous and manipulative. Without proper guidelines and frameworks in place, more organizations risk falling victim to AI scams like deepfakes.

Deepfakes 101 and their rising threat

Deepfakes are forms of digitally altered media (including photos, videos and audio clips) that seem to depict a real person. They are created by training an AI system on real clips featuring a person, and then using that AI system to generate realistic (yet inauthentic) new media. Deepfake use is becoming more common. The Hong Kong case was the latest in a series of high-profile deepfake incidents in recent weeks: fake, explicit images of Taylor Swift circulated on social media; the political party of an imprisoned election candidate in Pakistan used a deepfake video of him to deliver a speech; and a deepfake ‘voice clone’ of President Biden called primary voters to tell them not to vote.

Less high-profile cases of deepfake use by cybercriminals have also been rising in both scale and sophistication. In the banking sector, cybercriminals are now attempting to overcome voice authentication by using voice clones of people to impersonate users and gain access to their funds. Banks have responded by improving their abilities to identify deepfake use and increasing authentication requirements.

Cybercriminals have also targeted individuals with ‘spear phishing’ attacks that use deepfakes. A common approach is to deceive a person’s family members and friends by using a voice clone to impersonate someone in a phone call and ask for funds to be transferred to a third-party account. Last year, a survey by McAfee found that 70% of surveyed people were not confident that they could distinguish between people and their voice clones and that nearly half of surveyed people would respond to requests for funds if the family member or friend making the call claimed to have been robbed or in a car accident.

Cybercriminals have also called people pretending to be tax authorities, banks, healthcare providers and insurers in efforts to gain financial and personal details.

In February, the Federal Communications Commission ruled that phone calls using AI-generated human voices are illegal unless made with prior express consent of the called party. The Federal Trade Commission also finalized a rule prohibiting AI impersonation of government organizations and businesses and proposed a similar rule prohibiting AI impersonation of individuals. This adds to a growing list of legal and regulatory measures being put in place around the world to combat deepfakes.

Stay protected against deepfakes

To protect employees and brand reputation against deepfakes, leaders should adhere to the following steps:

Educate employees on an ongoing basis, both about AI-enabled scams and, more generally, about new AI capabilities and their risks.

Upgrade phishing guidance to include deepfake threats. Many companies have already educated employees about phishing emails and urged caution when receiving suspicious requests via unsolicited emails. Such phishing guidance should incorporate AI deepfake scams and note that they may use not just text and email, but also video, images and audio.

Appropriately increase or calibrate authentication of employees, business partners and customers. For example, use more than one mode of authentication, depending on the sensitivity and risk of a decision or transaction.

Consider the impacts of deepfakes on company assets, like logos, advertising characters and advertising campaigns. Such company assets can easily be replicated using deepfakes and spread quickly via social media and other internet channels. Consider how your company will mitigate these risks and educate stakeholders.

Expect more and better deepfakes, given the pace of improvement in generative AI, the number of major election processes underway in 2024, and the ease with which deepfakes can propagate between people and across borders.

Though deepfakes are a cybersecurity concern, companies should also think of them as complex and emerging phenomena with broader repercussions. A proactive and thoughtful approach to addressing deepfakes can help educate stakeholders and ensure that measures to combat them are responsible, proportionate and appropriate.

(Photo by Markus Spiske)

Tags: ai, artificial intelligence, deepfakes, enterprise, scams

#2024#advertising#ai#ai & big data expo#AI Ethics#ai news#amp#approach#artificial#Artificial Intelligence#assets#audio#authentication#banking#biden#Big Data#Business#clone#Cloud#communications#Companies#comprehensive#conference#course#cyber#cyber security#cybercriminals#cybersecurity#data#deepfake

Text

Over the summer, a political action committee (PAC) supporting Florida governor and presidential hopeful Ron DeSantis uploaded a video of former president Donald Trump on YouTube in which he appeared to attack Iowa governor Kim Reynolds. It wasn’t exactly real—though the text was taken from one of Trump’s tweets, the voice used in the ad was AI-generated. The video was subsequently removed, but it has spurred questions about the role generative AI will play in the 2024 elections in the US and around the world.

While platforms and politicians are focusing on deepfakes (AI-generated content that might depict a real person saying something they didn’t, or an entirely fake person), experts told WIRED there's a lot more at stake. Long before generative AI became widely available, people were making “cheapfakes” or “shallowfakes.” It can be as simple as mislabeling images, videos, or audio clips to imply they’re from a different time or location, or editing a piece of media to make it look like something happened that didn’t. This content can still have a profound impact if it’s allowed to circulate on social platforms. As more than 50 countries prepare for national elections in 2024, mis- and disinformation are still powerful tools for shaping public perception and political narratives.

Meta and YouTube have both responded in recent months with new policies around the use of generative AI in political ads like the one in support of DeSantis. Last month, Meta announced that it would require political advertisers to disclose whether an ad uses generative AI, joining Google, which owns YouTube, in responding to concerns that newly available tools could be used to mislead voters. In a note on its blog post about how the company is approaching the upcoming 2024 elections, Meta says that it will require advertisers “to disclose when they use AI or other digital techniques to create or alter a political or social issue ad in certain cases.”

“The scope is only political ads, which is a tiny part of the political ecology where people are increasingly using AI-generated media,” says Sam Gregory, program director at the nonprofit Witness, which helps people use technology to promote human rights. “It's not clear that it covers the broad range of shallowfakes or cheapfake approaches that are already being deployed both in political ads, but also, of course, in much broader ways that people use in political context.”

And not all misleading political content ends up in advertisements. For instance, in 2020, a video went viral that made it appear like Representative Nancy Pelosi was slurring her speech. The video itself wasn’t fake, but it had been slowed down to make Pelosi appear drunk. Though Twitter, TikTok, and YouTube removed the video, it remained live on Facebook, with a label noting it was “partly false.” The video itself wasn’t an ad, though it was clearly targeting a political figure.

Earlier this year, Meta’s Oversight Board took on a case reviewing a doctored video of President Joe Biden, which had not been generated or edited using AI, and which the company left up on the platform. The board will use the case to further review the company’s “manipulated media policy,” which stipulates that videos where “a subject … said words that they did not say” and which are “the product of artificial intelligence or machine learning” will be removed. Content like the Pelosi and Biden videos doesn't clearly violate this policy. Manipulated audio, particularly problematic in many non-English contexts, is nearly completely left out.

“Political ads are deliberately designed to shape your emotions and influence you. So, the culture of political ads is often to do things that stretch the dimensions of how someone said something, cut a quote that's placed out of context,” says Gregory. “That is essentially, in some ways, like a cheap fake or shallow fake.”

Meta did not respond to a request for comment about how it will be policing manipulated content that falls outside the scope of political advertisements, or how it plans to proactively detect AI usage in political ads.

But companies are only now beginning to address how to handle AI-generated content from regular users. YouTube recently introduced a more robust policy requiring labels on user-generated videos that utilize generative AI. Google spokesperson Michael Aciman told WIRED that in addition to adding “a label to the description panel of a video indicating that some of the content was altered or synthetic,” the company will include a “more prominent label” for “content about sensitive topics, such as elections.” Aciman also noted that “cheapfakes” and other manipulated media may still be removed if they violate the platform’s other policies around, say, misinformation or hate speech.

“We use a combination of automated systems and human reviewers to enforce our policies at scale,” Aciman told WIRED. “This includes a dedicated team of a thousand people working around the clock and across the globe that monitor our advertising network and help enforce our policies.”

But social platforms have already failed to moderate content effectively in many of the countries that will host national elections next year, points out Hany Farid, a professor at the UC Berkeley School of Information. “I would like for them to explain how they're going to find this content,” he says. “It's one thing to say we have a policy against this, but how are you going to enforce it? Because there is no evidence for the past 20 years that these massive platforms have the ability to do this, let alone in the US, but outside the US.”

Both Meta and YouTube require political advertisers to register with the company, including additional information such as who is purchasing the ad and where they’re based. But these are largely self-reported, meaning some ads can slip through the company’s cracks. In September, WIRED reported that the group PragerU Kids, an extension of the right-wing group PragerU, had been running ads that clearly fell within Meta’s definition of “political or social issues”—the exact kinds of ads for which the company requires additional transparency. But PragerU Kids had not registered as a political advertiser (Meta removed the ads following WIRED’s reporting).

Meta did not respond to a request for comment about what systems it has in place to ensure advertisers properly categorize their ads.

But Farid worries that the overemphasis on AI might distract from the larger issues around disinformation, misinformation, and the erosion of public trust in the information ecosystem, particularly as platforms scale back their teams focused on election integrity.

“If you think deceptive political ads are bad, well, then why do you care how they’re made?” asks Farid. “It’s not that it’s an AI-generated deceptive political ad, it’s that it’s a deceptive political ad period, full stop.”

Text

The Rise of Deepfakes and Misinformation

With an increasing number of deepfakes (deceptive images, videos and other content) circulating on the internet, we find ourselves doubting our own eyes.

But what exactly is causing the rise of deepfakes and misinformation?

Deepfakes utilise a form of artificial intelligence called ‘deep learning’ to create images and videos of fabricated events. The process of cropping and manipulating media isn't a novel concept; it has been around for years. However, deepfakes are much more advanced, representing a step up from the photoshopped media that circulates as fake news.

Deepfakes utilise the power of modern technology, employing techniques that include machine learning models and artificial intelligence to generate multimedia content that is increasingly deceptive.

Deepfakes typically use an autoencoder, a deep learning AI program tasked with studying various video clips of a person to build a broader understanding of what that person looks like from various angles and in different environments. The likeness learned from that source material is then mapped onto the target within the video.

The purpose of a deepfake is determined by its creator: some are lighthearted, while others serve a sinister purpose. Deepfakes continue to amuse users online and have become increasingly prominent in the mainstream media, including the film and news industries.

Misinformation and disinformation, commonly known as fake news, are phenomena catalysed by the technological revolution. Mis/disinformation takes many forms, such as memes, misattributed content, and fabricated or cloned websites. Bots, trolls and AI tools can increase the reach of misinformation campaigns.

Misinformation campaigns have been widely used during elections to manipulate voters and to influence the outcome of the poll. Bots have been employed to affect the public debate and influence the electorate in domestic and international elections. A significant proportion of misinformation, and specifically memes, contains hate speech and incitement to hatred, promoting messages based on violence and racism. All of this is amplified by the fact that the digital space provides a platform for posting anonymously, resulting in a lack of accountability.

States, businesses and international organisations have been developing new responses to tackle mis/disinformation, ranging from expert groups and task forces to the removal of content and accounts, anti-disinformation laws and media literacy programmes. Some of these measures may pose risks to human rights, particularly to freedom of expression.

How to guard against deepfake technology?

Despite the lack of mature detection tools, here are some suggestions that can help people and institutions guard against deepfake technology.

Be Sceptical of Media - It is important to be critical of the media consumed and to verify the authenticity of audio and video content that you come across.

Get serious about identity verification - Users need to exercise due diligence in verifying that someone is who they claim to be.

Educate Users - Familiarise users with deepfake content and teach them how to be sceptical about it.

Confirm and deploy basic security measures - Basic cybersecurity best practices play a vital role in minimising the risk of a deepfake-related attack. Some critical actions you can take include:

1. Making regular backups to protect your data

2. Using stronger passwords and changing them frequently

3. Continuing to secure your systems and educate your users.
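As a small illustration of the "stronger passwords" point, here is a minimal sketch that generates a random password with Python's standard `secrets` module. The function name `generate_password`, the 12-character minimum and the required character classes are assumptions made for this example, not rules from the post.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Return a random password mixing lowercase, uppercase and digit characters."""
    # The 12-character floor is an illustrative assumption, not an official rule.
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until the candidate contains several character classes.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password(20))
```

In practice a password manager does the same job and also stores the result, which is usually the easier habit to keep.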

Wish to get more such exciting updates? Follow Tiger Advertising, a 360 Advertising Agency in Vadodara and Ahmedabad.

#360 degree advertising agency in gujarat#360 degree marketing solutions in gujarat#360 degree advertising agency


Text

The connections between AI, digital media, and democracy are multifaceted and complex, and they have both positive and negative implications for the functioning of democratic societies. Here's an analysis of these connections:

Information Dissemination:

Positive: AI algorithms can help analyze vast amounts of data from digital media sources to identify trends, patterns, and emerging issues. This can aid journalists, policymakers, and citizens in making informed decisions and promoting transparency in a democracy.

Negative: AI-powered algorithms on social media platforms can amplify misinformation and filter bubbles. These algorithms prioritize content that generates engagement, which often leads to the spread of sensationalist or polarizing content. This can erode the quality of information available to citizens and undermine the democratic process.

Personalization:

Positive: AI can personalize content delivery, tailoring news and information to individual preferences. This can enhance user experience and engagement with digital media, making it more accessible and appealing.

Negative: Personalization can create echo chambers, where individuals are exposed only to information that aligns with their existing beliefs. This can lead to confirmation bias and hinder open dialogue, which is essential for democratic deliberation.

Censorship and Surveillance:

Positive: AI can be used for content moderation to remove harmful or illegal content, such as hate speech or graphic violence, from digital media platforms. This helps maintain a safer online environment.

Negative: AI-based surveillance and censorship can be abused by governments to stifle dissent and limit freedom of expression. This poses a significant threat to democracy, as it curtails citizens' ability to voice their opinions and access diverse information.

Manipulation and Deepfakes:

Negative: AI can generate highly convincing deepfake videos and manipulate digital content. This can be used to deceive the public, create fake news, and undermine trust in digital media and democratic institutions.

Accessibility and Inclusivity:

Positive: AI can improve accessibility by providing automated transcription, translation, and other assistive technologies for digital media content. This ensures that information is available to a wider and more diverse audience, promoting democratic inclusivity.

Election Interference:

Negative: AI can be used to manipulate elections through disinformation campaigns, voter profiling, and micro-targeting. This can undermine the integrity of democratic processes and lead to outcomes that do not accurately reflect the will of the people.

Ethical Considerations:

Positive: Discussions around the ethical use of AI in digital media can lead to the development of guidelines and regulations that protect democratic values, such as transparency in algorithmic decision-making.

Negative: The lack of clear ethical standards and regulations for AI in digital media can result in unintended consequences that threaten democracy, as seen in instances of algorithmic bias or discrimination.

In conclusion, AI's role in digital media has profound implications for democracy. While it has the potential to enhance information dissemination, personalization, and accessibility, it also poses risks such as misinformation, censorship, and election interference. The impact of AI on democracy will depend on how it is developed, deployed, and regulated, making it essential to strike a balance between innovation and safeguarding democratic principles.

--------------------------------------------------------------------------------------------------------------------------------

How can AI technology be positively deployed to underpin political institutions?

AI technology has the potential to positively impact political institutions in various ways, promoting transparency, efficiency, and better decision-making. Here are several ways in which AI can be deployed to underpin political institutions:

Data Analysis and Predictive Analytics: AI can help political institutions analyze vast amounts of data, including polling data, social media sentiment, and historical election results. Predictive analytics can be used to forecast election outcomes, identify emerging issues, and gauge public opinion.

Voter Engagement: AI-powered chatbots and virtual assistants can engage with citizens to provide information about elections, candidates, and important issues. These tools can also help with voter registration and absentee ballot requests, making the electoral process more accessible.

Enhancing Policy Making: AI can assist policymakers in identifying trends and patterns in data that may inform better policy decisions. Natural language processing (NLP) algorithms can help in summarizing research papers, public comments, and legislative texts, making it easier for lawmakers to understand complex issues.

Election Security: AI can be used to enhance the security of elections by identifying and mitigating cybersecurity threats, such as hacking attempts and disinformation campaigns. Machine learning algorithms can help detect anomalies in voter registration data and voting patterns to prevent fraud.
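To make "detecting anomalies in voter registration data" concrete, here is a minimal sketch that flags days whose registration count is far from the average. It uses a plain z-score rule rather than a trained machine learning model; the function name `flag_anomalies`, the threshold of 3.0 and the sample counts are illustrative assumptions.

```python
from statistics import mean, pstdev


def flag_anomalies(daily_counts, threshold=3.0):
    """Return indices of days more than `threshold` standard deviations from the mean."""
    mu = mean(daily_counts)
    sigma = pstdev(daily_counts)
    if sigma == 0:
        # All days are identical, so nothing stands out.
        return []
    return [i for i, count in enumerate(daily_counts)
            if abs(count - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical data: nineteen ordinary days followed by one sudden spike.
    counts = [100] * 19 + [950]
    print(flag_anomalies(counts))
```

A flagged day is only a prompt for human review. A spike can come from a legitimate registration drive just as easily as from fraud.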

Constituent Services: AI-powered chatbots and virtual assistants can handle routine constituent inquiries and complaints, freeing up human staff to focus on more complex issues. These tools can provide quick and efficient responses to common questions.

Redistricting: AI algorithms can assist in the redistricting process by ensuring that electoral districts are drawn fairly and without bias. By analyzing demographic data and historical voting patterns, AI can help create more representative and equitable districts.

Public Engagement and Feedback: AI can facilitate public engagement through online forums and social media. Sentiment analysis can help political institutions understand public sentiment and concerns, allowing them to respond more effectively to citizen feedback.
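As a rough idea of what sentiment analysis does, here is a minimal lexicon-based sketch. Real systems usually rely on trained language models; the two word lists and the function name `sentiment_score` are hypothetical and exist only for this example.

```python
# Tiny illustrative word lists (assumptions, not a real sentiment lexicon).
POSITIVE = {"good", "great", "support", "helpful", "fair"}
NEGATIVE = {"bad", "unfair", "oppose", "broken", "slow"}


def sentiment_score(text):
    """Return a score in [-1.0, 1.0]; 0.0 means neutral or no known words."""
    words = [w.strip(".,!?;:").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    if total == 0:
        return 0.0
    return (pos - neg) / total


if __name__ == "__main__":
    for comment in ["The new bus route is great and fair!",
                    "Service is slow, broken, but staff are helpful."]:
        print(comment, sentiment_score(comment))
```

Word counting of this kind misses sarcasm and negation ("not good"), which is one reason automated sentiment figures should be read as a rough signal only.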

Resource Allocation: AI can help political campaigns and parties optimize their resource allocation by identifying key demographics and regions where they should focus their efforts to maximize impact.

Transparency and Accountability: AI can assist in monitoring campaign finance and political contributions, helping to ensure transparency and accountability in the political process.

Language Translation and Accessibility: AI-powered translation tools can make political information more accessible to citizens who speak different languages. This can help bridge language barriers and ensure that information is available to a wider audience.

Disaster Response and Crisis Management: During emergencies or natural disasters, AI can help political institutions analyze real-time data, predict the impact of disasters, and coordinate response efforts more effectively.

However, it's crucial to deploy AI technology in a way that prioritizes ethics, privacy, and fairness. Additionally, there should be transparency and accountability in the use of AI in political institutions to maintain public trust. Regular audits, data protection measures, and oversight mechanisms are essential to ensure that AI is deployed responsibly and for the benefit of society as a whole.

--------------------------------------------------------------------------------------------------------------------------------

Describe the process of audio event detection, recognition, and monitoring with AI

Audio event detection, recognition, and monitoring with AI involves the use of artificial intelligence and machine learning techniques to analyze and understand audio signals, identify specific events or patterns, and continuously monitor audio data for relevant information. This process can have various applications, including surveillance, security, environmental monitoring, and more. Here's an overview of the steps involved:

Data Collection:

The process begins with the collection of audio data. This data can come from various sources, such as microphones, sensors, or audio recordings.

Data Preprocessing: Raw audio data is often noisy and may contain irrelevant information. Preprocessing steps are applied to clean and prepare the data for analysis. This can include noise reduction, filtering, and audio normalization.
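A minimal sketch of one preprocessing step, assuming the audio is already available as a list of float samples: it removes the constant (DC) offset and then peak-normalizes the signal. Noise reduction and band filtering are not shown, and the function name `preprocess` is an illustrative choice.

```python
def preprocess(samples):
    """Remove the DC offset, then scale so the largest absolute sample is 1.0."""
    if not samples:
        return []
    # Subtracting the mean centres the waveform around zero.
    offset = sum(samples) / len(samples)
    centered = [s - offset for s in samples]
    peak = max(abs(s) for s in centered)
    if peak == 0:
        # Pure silence (or a constant signal): nothing to scale.
        return centered
    return [s / peak for s in centered]


if __name__ == "__main__":
    print(preprocess([1.0, 3.0, 1.0, 3.0]))
```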

Feature Extraction: Extracting relevant features from the audio data is crucial for AI models to understand and identify events. Common audio features include spectral features (e.g., Mel-frequency cepstral coefficients - MFCCs), pitch, tempo, and more. These features help represent the audio data in a format suitable for machine learning.
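MFCCs take more machinery than fits in a short example, so the sketch below computes two simpler frame-level features instead: root-mean-square energy and zero-crossing rate. The function name `frame_features` and the default frame and hop sizes are assumptions chosen for illustration.

```python
import math


def frame_features(samples, frame_size=400, hop=200):
    """Return a list of (rms_energy, zero_crossing_rate) tuples, one per full frame."""
    features = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # RMS energy: how loud the frame is on average.
        rms = math.sqrt(sum(s * s for s in frame) / frame_size)
        # Zero-crossing rate: fraction of adjacent sample pairs that change sign.
        crossings = sum(1 for a, b in zip(frame, frame[1:])
                        if (a >= 0) != (b >= 0))
        features.append((rms, crossings / (frame_size - 1)))
    return features


if __name__ == "__main__":
    square_wave = [1.0, -1.0] * 4
    print(frame_features(square_wave, frame_size=4, hop=2))
```

Each frame becomes a small numeric vector, which is the kind of input a classifier can be trained on.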

Machine Learning Models: AI models, typically deep neural networks such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs), often operating on spectrogram representations of the audio, are trained using labeled audio data. This training process allows the model to learn the patterns and characteristics associated with specific audio events.

Event Detection: In this stage, the trained AI model is applied to real-time or recorded audio streams. The model analyzes the audio data in segments, attempting to detect the presence of specific events or sounds. This could be anything from detecting gunshots in a security system to identifying animal sounds in environmental monitoring.
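The segment-by-segment idea can be sketched as follows, assuming a trained model has already produced one score between 0 and 1 for each segment. The model itself is not shown; the function name `detect_events` and the 0.5 threshold are illustrative assumptions.

```python
def detect_events(scores, threshold=0.5):
    """Return (start, end) index pairs, end exclusive, for runs of scores >= threshold."""
    events = []
    start = None
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            # A new event begins at this segment.
            start = i
        elif score < threshold and start is not None:
            # The current event ended just before this segment.
            events.append((start, i))
            start = None
    if start is not None:
        # The stream ended while an event was still active.
        events.append((start, len(scores)))
    return events


if __name__ == "__main__":
    print(detect_events([0.1, 0.9, 0.8, 0.2, 0.7]))
```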

Event Recognition: Once an event is detected, the AI system can further analyze and recognize the event's nature or category. For instance, it can differentiate between different types of alarms, voices, musical instruments, or specific words in speech.

Monitoring and Alerting: The system continuously monitors the audio data and keeps track of detected and recognized events. When a relevant event is detected, the system can trigger notifications or alerts. This is especially useful in security and surveillance applications, where timely response is crucial.
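One practical detail of alerting is avoiding a flood of notifications for the same ongoing sound. The sketch below adds a per-label cooldown; the cooldown is an assumption introduced here, not something the post describes, and `AlertMonitor` is a hypothetical name.

```python
class AlertMonitor:
    """Decide whether a recognized event should trigger a notification."""

    def __init__(self, cooldown_seconds=30.0):
        self.cooldown = cooldown_seconds
        # Maps each event label to the time of its most recent alert.
        self.last_alert = {}

    def observe(self, label, timestamp):
        """Return True if an alert should be sent for this event."""
        last = self.last_alert.get(label)
        if last is not None and timestamp - last < self.cooldown:
            # Same kind of event alerted recently: stay quiet.
            return False
        self.last_alert[label] = timestamp
        return True


if __name__ == "__main__":
    monitor = AlertMonitor(cooldown_seconds=30.0)
    for label, t in [("alarm", 0.0), ("alarm", 10.0), ("glass_break", 11.0), ("alarm", 31.0)]:
        print(label, t, monitor.observe(label, t))
```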

Feedback and Improvement: Over time, the AI model can be fine-tuned and improved by continuously feeding it more labeled data, incorporating user feedback, and adjusting its parameters to reduce false positives and false negatives.
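The trade-off between false positives and false negatives is commonly measured with precision and recall. The sketch below computes both for a given detection threshold, assuming model scores and ground-truth labels are available as parallel lists. Returning 1.0 when a denominator is zero is a convention chosen for this example.

```python
def precision_recall(scores, labels, threshold):
    """Return (precision, recall) when scores >= threshold count as detections."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y)      # correct detections
    fp = sum(1 for s, y in pairs if s >= threshold and not y)  # false alarms
    fn = sum(1 for s, y in pairs if s < threshold and y)       # missed events
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.4, 0.3]
    labels = [True, False, True, False]
    for threshold in (0.5, 0.35):
        print(threshold, precision_recall(scores, labels, threshold))
```

Lowering the threshold catches more real events (higher recall) but usually lets in more false alarms, so the right setting depends on which mistake is costlier for the application.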

Post-processing: To enhance the accuracy of the system, post-processing techniques can be applied to the detected events. This may involve contextual analysis, temporal analysis, or combining audio data with other sensor data for better event understanding.
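A minimal sketch of temporal post-processing, assuming detections arrive as (start, end) segment index pairs with an exclusive end: events separated by a short gap are merged, and very short events are dropped as likely glitches. The function name `smooth_events` and both default values are illustrative assumptions.

```python
def smooth_events(events, max_gap=2, min_length=3):
    """Merge events separated by at most `max_gap` segments, then drop short ones."""
    merged = []
    for start, end in sorted(events):
        if merged and start - merged[-1][1] <= max_gap:
            # Close enough to the previous event: extend it.
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))
        else:
            merged.append((start, end))
    # Discard events too short to be trusted.
    return [(s, e) for s, e in merged if e - s >= min_length]


if __name__ == "__main__":
    print(smooth_events([(0, 2), (3, 6), (20, 21)]))
```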

Visualization and Reporting: The results of the audio event detection and monitoring can be visualized through user interfaces or reports, making it easier for users to understand and act on the information provided by the AI system.

Overall, audio event detection, recognition, and monitoring with AI leverage machine learning to provide real-time insights and actionable information from audio data, enabling various applications across different domains. The effectiveness of such systems depends on the quality of training data, the sophistication of AI models, and the post-processing techniques applied.



Text

How AI could take over elections – and undermine democracy

Could organizations use artificial intelligence language models such as ChatGPT to induce voters to behave in specific ways?

Sen. Josh Hawley asked OpenAI CEO Sam Altman this question in a May 16, 2023, U.S. Senate hearing on artificial intelligence. Altman replied that he was indeed concerned that some people might use language models to manipulate, persuade and engage in one-on-one interactions with voters.

Altman did not elaborate, but he might have had something like this scenario in mind. Imagine that soon, political technologists develop a machine called Clogger – a political campaign in a black box. Clogger relentlessly pursues just one objective: to maximize the chances that its candidate – the campaign that buys the services of Clogger Inc. – prevails in an election.

While platforms like Facebook, Twitter and YouTube use forms of AI to get users to spend more time on their sites, Clogger’s AI would have a different objective: to change people’s voting behavior.

An AI-driven political campaign could be all things to all people. (Credit: Eric Smalley, TCUS; Biodiversity Heritage Library/Flickr; Taymaz Valley/Flickr, CC BY-ND)

How Clogger would work

As a political scientist and a legal scholar who study the intersection of technology and democracy, we believe that something like Clogger could use automation to dramatically increase the scale and potentially the effectiveness of behavior manipulation and microtargeting techniques that political campaigns have used since the early 2000s. Just as advertisers use your browsing and social media history to individually target commercial and political ads now, Clogger would pay attention to you – and hundreds of millions of other voters – individually.

It would offer three advances over the current state-of-the-art algorithmic behavior manipulation. First, its language model would generate messages — texts, social media and email, perhaps including images and videos — tailored to you personally. Whereas advertisers strategically place a relatively small number of ads, language models such as ChatGPT can generate countless unique messages for you personally – and millions for others – over the course of a campaign.

CONTINUED..


Text

Thursday, June 1, 2023

Deepfaking it: America’s 2024 election collides with AI boom