Text

How NLP can increase Financial Data Efficiency

The finance sector is driven to make a significant investment in natural language processing (NLP) in order to boost financial performance by the quickening pace of digitization. NLP has become an essential and strategic instrument for financial research as a result of the massive growth in textual data that has recently become widely accessible. Research reports, financial statistics, corporate filings, and other pertinent data gleaned from print media and other sources are all subject to the extensive time and resource analysis by analysts. NLP can analyze this data, providing chances to find special and valuable insights.

NLP & AI for Finance

The automation now includes a new level of support for workers provided by AI. If AI has access to all the required data, it can deliver in-depth data analysis to help finance teams with difficult decisions. In some situations, it might even be able to recommend the best course of action for the financial staff to adopt and carry out.

NLP is a branch of AI that uses machine learning techniques to enable computer systems to read and comprehend human language. The most common projects to improve human-machine interactions that use NLP are a chatbot for customer support or a virtual assistant.

Finance is increasingly being driven by data. The majority of the crucial information can be found in written form in documents, texts, websites, forums, and other places. Finance professionals spend a lot of time reading analyst reports, financial print media, and other sources of information. By using methods like NLP and ML to create the financial infrastructure, data-driven informed decisions might be made in real time.

NLP in finance – Use cases and applications

Loan risk assessments, auditing and accounting, sentiment analysis, and portfolio selection are all examples of finance applications for NLP. Here are some examples of how NLP is changing the financial services industry:

Chatbots

Chatbots are artificially intelligent software applications that mimic human speech when interacting with users. Chatbots can respond to single words or carry out complete conversations, depending on their level of intelligence, making it difficult to tell them apart from actual humans. Chatbots can comprehend the nuances of the English language, determine the true meaning of a text, and learn from interactions with people thanks to natural language processing and machine learning. They consequently improve with time. The approach employed by chatbots is two-step. They begin by analyzing the query that has been posed and gathering any data from the user that may be necessary to provide a response. They then give a truthful response to the query.

Risk assessments

Based on an evaluation of the credit risk, banks can determine the possibility of loan repayment. The ability to pay is typically determined by looking at past spending patterns and loan payment history information. However, this information is frequently missing, especially among the poor. Around half of the world’s population does not use financial services because of poverty, according to estimates. NLP is able to assist with this issue. Credit risk is determined using a range of data points via NLP algorithms. NLP, for instance, can be used to evaluate a person’s mindset and attitude when it comes to financing a business. In a similar vein, it might draw attention to information that doesn’t make sense and send it along for more research. Throughout the loan process, NLP can be used to account for subtle factors like the emotions of the lender and borrower.

Stock behavior predictions

Forecasting time series for financial analysis is a difficult procedure due to the fluctuating and irregular data, as well as the long-term and seasonal variations, which can produce major flaws in the study. However, when it comes to using financial time series, deep learning and NLP perform noticeably better than older methods. These two technologies provide a lot of information-handling capacity when utilized together.

Accounting and auditing

Businesses now recognize how crucial NLP is to gain a significant advantage in the audit process after dealing with countless everyday transactions and invoice-like papers for decades. NLP can help financial professionals focus on, identify, and visualize anomalies in commonplace transactions. When the right technology is applied, identifying anomalies in the transactions and their causes requires less time and effort. NLP can help with the detection of significant potential threats and likely fraud, including money laundering. This helps to increase the amount of value-creating activities and spread them out across the firm.

Text Analytics

Text analytics is a technique for obtaining valuable, qualitative structured data from unstructured text, and its importance in the financial industry has grown. Sentiment analysis is one of the most often used text analytics objectives. It is a technique for reading a text’s context to draw out the underlying meaning and significant financial entities.

Using the NLP engine for text analysis, you may combine the unstructured data sources that investors regularly utilize into a single, better format that is designed expressly for financial applicability. This intelligent format may give relevant data analytics, increasing the effectiveness and efficiency of data-driven decision-making by enabling intelligible structured data and effective data visualization.

Financial Document Analyzer

Users may connect their document finance solution to existing workflows using AI technology without altering the present processes. Thanks to NLP, financial professionals may now automatically read and comprehend a large number of financial papers. Businesses can train NLP models using the documentation resources they already have.

The databases of financial organizations include a vast amount of documents. In order to obtain relevant investing data, the NLP-powered search engine compiles the elements, conceptions, and ideas presented in these publications. In response to employee search requests from financial organizations, the system then displays a summary of the most important facts on the search engine interface.

Key Benefits of Utilizing NLP in Finance

Consider the following benefits of utilizing NLP to the fullest, especially in the finance sector:

Efficiency

It can transform large amounts of unstructured data into meaningful insights in real-time.

Consistency

Compared to a group of human analysts, who may each interpret the text in somewhat different ways, a single NLP model may produce results far more reliably.

Accuracy

Human analysts might overlook or misread content in voluminous unstructured documents. It gets eliminated to a greater extent in the case of NLP-backed systems.

Scaling

NLP technology enables text analysis across a range of documents, internal procedures, emails, social media data, and more. Massive amounts of data can be processed in seconds or minutes, as opposed to days for manual analysis.

Process Automation

You can automate the entire process of scanning and obtaining useful insights from the financial data you are analyzing thanks to NLP.

Final Thoughts

The finance industry can benefit from a variety of AI varieties, including chatbots that act as financial advisors and intelligent automation. It’s crucial to have a cautious and reasoned approach to AI given the variety of choices and solutions available for AI support in finance.

We have all heard talk about the potential uses of artificial intelligence in the financial sector. It’s time to apply AI to improve both the financial lives of customers and the working lives of employees. TagX has an expert labeling team who can analyze, transcribe, and label cumbersome financial documents and transactions.

0 notes

Text

MLOps and ML Data pipeline: Key Takeaways

If you have ever worked with a Machine Learning (ML) model in a production environment, you might have heard of MLOps. The term explains the concept of optimizing the ML lifecycle by bridging the gap between design, model development, and operation processes.

As more teams attempt to create AI solutions for actual use cases, MLOps is now more than just a theoretical idea; it is a hotly debated area of machine learning that is becoming increasingly important. If done correctly, it speeds up the development and deployment of ML solutions for teams all over the world.

MLOps is frequently referred to as DevOps for Machine Learning while reading about the word. Because of this, going back to its roots and drawing comparisons between it and DevOps is the best way to comprehend the MLOps concept.

MLOps vs DevOps

DevOps is an iterative approach to shipping software applications into production. MLOps borrows the same principles to take machine learning models to production. Either Devops or MLOps, the eventual objective is higher quality and control of software applications/ML models.

What is MLOps?

Machine Learning Operations is referred to as MLOps. Therefore, the function of MLOps is to act as a communication link between the operations team overseeing the project and the data scientists who deal with machine learning data.

For the development and improvement of machine learning and AI solutions, MLOps is a helpful methodology. By utilizing continuous integration and deployment (CI/CD) procedures with appropriate monitoring, validation, and governance of ML models, data scientists and machine learning engineers can work together and accelerate the speed of model creation and production by using an MLOps approach.

The key MLOps principles are:

Versioning – keeping track of the versions of data, ML model, code around it, etc.;

Testing – testing and validating an ML model to check whether it is working in the development environment;

Automation – trying to automate as many ML lifecycle processes as possible;

Reproducibility – we want to get identical results given the same input;

Deployment – deploying the model into production;

Monitoring – checking the model’s performance on real-world data.

What are the benefits of MLOps?

The primary benefits of MLOps are efficiency, scalability, and risk reduction.

Efficiency: MLOps allows data teams to achieve faster model development, deliver higher quality ML models, and faster deployment and production.

Scalability: Thousands of models may be supervised, controlled, managed, and monitored for continuous integration, continuous delivery, and continuous deployment thanks to MLOps’ extensive scalability and management capabilities. MLOps, in particular, makes ML pipelines reproducible, enables closer coordination between data teams, lessens friction between DevOps and IT, and speeds up release velocity.

Risk reduction: Machine learning models often need regulatory scrutiny and drift-check, and MLOps enables greater transparency and faster response to such requests and ensures greater compliance with an organization’s or industry’s policies.

Data pipeline for ML operations

One significant difference between DevOps and MLOps is that ML services require data–and lots of it. In order to be suitable for ML model training, most data has to be cleaned, verified, and tagged. Much of this can be done in a stepwise fashion, as a data pipeline, where unclean data enters the pipeline, and then the training, validating, and testing data exits the pipeline.

The data pipeline of a project involves several key steps:

Data collection:

Whether you source your data in-house, open-source, or from a third-party data provider, it’s important to set up a process where you can continuously collect data, as needed. You’ll not only need a lot of data at the start of the ML development lifecycle but also for retraining purposes at the end. Having a consistent, reliable source for new data is paramount to success.

Data cleansing:

This involves removing any unwanted or irrelevant data or cleaning up messy data. In some cases, it may be as simple as converting data into the format you need, such as a CSV file. Some steps of this may be automatable.

Data annotation:

Labeling your data is one of the most time-consuming, difficult, but crucial, phases of the ML lifecycle. Companies that try to take this step internally frequently struggle with resources and take too long. Other approaches give a wider range of annotators the chance to participate, such as hiring freelancers or crowdsourcing. Many businesses decide to collaborate with external data providers, who can give access to vast annotator communities, platforms, and tools for any annotating need. Depending on your use case and your need for quality, some steps in the annotation process may potentially be automated.

After the data has been cleaned, validated, and tagged, you can begin training the ML model to categorize, predict, or infer whatever it is that you want the model to do. Training, validation, and hold-out testing datasets are created out of the tagged data. The model architecture and hyperparameters are optimized many times using the training and validation data. Once that is finished, you test the algorithm on the hold-out test data one last time to check if it performs enough on the fresh data you need to release.

Setting up a continuous data pipeline is an important step in MLOps implementation. It’s helpful to think of it as a loop, because you’ll often realize you need additional data later in the build process, and you don’t want to have to start from scratch to find it and prepare it.

Conclusion

MLOps help ensure that deployed models are well maintained, performing as expected, and not having any adverse effects on the business. This role is crucial in protecting the business from risks due to models that drift over time, or that are deployed but unmaintained or unmonitored.

TagX is involved in delivering Data for each step of ML operations. At TagX, we provide high-quality annotated training data to power the world’s most innovative machine learning and business solutions. We can help your organization with data collection, Data cleaning, data annotation, and synthetic data to train your Machine learning models.

0 notes

Text

Implementation of Artificial Intelligence in Gaming

What is AI in Gaming?

AI in gaming is the use of artificial intelligence to create game characters and environments that are capable of responding to a player’s actions in a realistic and dynamic way. AI can be used to create believable characters that can interact with the player, create dynamic levels, and generate new gaming experiences. AI can even be used to create challenging opponents that require the player to think strategically.

AI Development in Gaming

AI development in gaming refers to the use of artificial intelligence (AI) to create non-player characters (NPCs) that can interact with players in a game environment. AI development is used in modern video games to create immersive and realistic gaming experiences. AI development has been used to create NPCs that can respond to players in various ways, such as offering advice and guidance. AI can also be used to create NPCs that can challenge players and offer a more realistic gaming experience. Additionally, AI development is used to create more complex and lifelike game environments, such as virtual worlds and cities. AI can also be used to create more intelligent game enemies that can react to players’ actions and strategies. AI development is also being used to create autonomous game characters that can act on their own or interact with players.

Features in AI Gaming include:

1. Dynamic Environments:

AI games can have dynamic environments that change in real-time. This allows for greater complexity and unpredictability compared to games that have a fixed environment.

2. AI Opponents:

AI opponents can be programmed to use a range of strategies to challenge the player and make the game more interesting.

3. Adaptive Learning:

AI games can learn from their mistakes and adjust their strategies over time to become more challenging.

4. Procedural Generation:

AI games can generate levels and opponents in real-time, making the game more unpredictable and providing an ever-changing challenge.

5. Natural Language Processing:

AI games can use natural language processing to interpret player commands and understand the player’s intent.

6. Real-Time Decision Making:

AI games can make decisions in real-time, allowing the game to be more responsive to the player’s actions.

7. Realistic Physics and Animation:

AI games can use realistic physics and animation to create a believable game world.

8. Audio Recognition:

AI games can use audio recognition to interpret player commands and understand the player’s intent.

How is AI used in Video Games?

Ai is used in video games to bring realism and challenge to the gaming experience. This can include non-player characters (NPCs) that react to the player’s actions, enemy units that use strategic decision-making, environment-specific behaviors, and more. AI can also be used to create and manage dynamic in-game events and levels, as well as to generate opponents that can adapt to the player’s skill level.

Application of AI in Games:

1. Autonomous Opponents:

Autonomous opponents are computer-controlled characters in a video game. AI can be used to create autonomous opponents that can adapt to the player’s behavior and provide a challenging gaming experience.

2. Pathfinding:

Pathfinding is a cornerstone of game AI and is used to help the characters and enemies move around the game environment correctly. AI techniques such as A* search and Dijkstra’s algorithm are used to calculate the best possible routes for characters to take.

3. Natural Language Processing:

Natural language processing (NLP) is a form of artificial intelligence that allows machines to understand and interpret human language. AI can be used to create virtual characters in games that are capable of understanding and responding to the player’s input in natural language.

4. Decision-Making and Planning:

AI can be used to create characters that can make decisions and plan actions based on the current game state. AI techniques such as Monte Carlo Tree Search and Reinforcement Learning are used to help characters make decisions in the most optimal way.

5. Procedural Content Generation:

Procedural content generation is a form of AI that can be used to generate content in games such as levels, items

Advantages of AI in Gaming:

1. Improved User Experiences:

Artificial Intelligence technology can be used to enhance the user experience in gaming by providing players with more engaging and immersive gameplay. AI can be used to generate more interesting scenarios, create more challenging puzzles, and provide better feedback to the player.

2. Increased Realism:

AI can be used to create more realistic environments, characters, and stories. This can lead to a more believable and engaging gaming experience.

3. Improved Performance:

AI can be used to optimize the performance of a game. AI can be used to analyze the user’s gaming experience and provide feedback on how to improve performance.

4. Greater Variety:

AI can be used to generate more diverse and interesting content. This can create more dynamic and exciting gaming experiences.

5. Improved Accessibility:

AI can be used to create more accessible gaming experiences. AI can be used to create more intuitive and user-friendly interfaces, making gaming more accessible to a wider range of players.

Top 5 AI Innovations in the Gaming Industry:

1. Autonomous AI Agents:

Autonomous AI agents are programmed to act independently in a virtual environment. These agents are able to interact with a game’s environment and other characters, as well as make decisions about when and how to act.

2. Natural Language Processing:

Natural language processing (NLP) is the ability of AI to understand and interpret human language. This technology is used in many video games to help players communicate with each other and the game itself.

3. Adaptive Difficulty:

Adaptive difficulty is a feature that allows the game to adjust its difficulty level based on the player’s performance. This helps keep the game interesting, as the challenge can be adjusted to match each player’s skill level.

4. Automated Level Design:

Automated level design is a technology that uses AI to create levels for video games. This allows developers to quickly and easily generate a variety of levels for their games.

5. AI-Driven NPCs:

Non-player characters (NPCs) are characters in a game that are controlled by the AI. This technology allows NPCs to act realistically and react to the player’s actions.

Conclusion:

AI in gaming has come a long way since its early days, and it will continue to evolve in the future. AI has changed the way games are designed, developed, and played, and has opened up new possibilities for gamers. It can help create immersive experiences, create smarter opponents, and create more realistic and varied gaming experiences. It will continue to be used to explore new ways of playing games, providing gamers with ever more exciting and engaging gaming experiences.

AI is being used in various aspects of gaming, from game design and development to helping players with strategy and tactics.AI can help game developers create games with more complex environments and have more intelligent opponents. It can also help players find better strategies and tactics to win games. AI can also be used to create games with more sophisticated storylines and narrative arcs. In the future, AI can be used to create virtual worlds with more diverse and complex populations, allowing for more immersive and dynamic gaming experiences.

0 notes

Text

Intelligent Document Processing Workflow and Use cases

Artificial Intelligence has stepped up to the front line of real-world problem solving and business transformation with Intelligent Document Processing (IDP) becoming a vital component in the global effort to drive intelligent automation into corporations worldwide.

IDP solutions read the unstructured, raw data in complicated documents using a variety of AI-related technologies, including RPA bots, optical character recognition, natural language processing, computer vision, and machine learning. IDP then gathers the crucial data and transforms it into formats that are structured, pertinent, and usable for crucial processes including government, banking, insurance, orders, invoicing, and loan processing forms. IDP gathers the required data and forwards it to the appropriate department or place further along the line to finish the process.

Organizations can digitize and automate unstructured data coming from diverse documentation sources thanks to intelligent document processing (IDP). These consist of scanned copies of documents, PDFs, word-processing documents, online forms, and more. IDP mimics human abilities in document identification, contextualization, and processing by utilizing workflow automation, natural language processing, and machine learning technologies.

What exactly is Intelligent Document Processing?

A relatively new category of automation called “intelligent document processing” uses artificial intelligence services, machine learning, and natural language processing to help businesses handle their papers more effectively. Because it can read and comprehend the context of the information it extracts from documents, it marks a radical leap from earlier legacy automation systems and enables businesses to automate even more of the document processing lifecycle.

Data extraction from complicated, unstructured documents is automated by IDP, which powers both back office and front office business operations. Business systems can use data retrieved by IDP to fuel automation and other efficiencies, including the automated classification of documents. Enterprises must manually classify and extract data from these papers in the absence of IDP. They have a quick, affordable, and scalable option with IDP.

How does intelligent document processing work?

There are several steps a document goes through when processed with IDP software. Typically, these are:

Data collection

Intelligent document processing starts with ingesting data from various sources, both digital and paper-based. For taking in digitized data, most IDP solutions feature built-in integrations or allow developing custom interfaces to enterprise software. When it comes to collecting paper-based or handwritten documents, companies either relies on its internal data or outsource the collection requirement to third party vendor like TagX who can handle the whole collection process for a specific IDP usecase.

Pre-processing

Intelligent document processing (IDP) can only produce trustworthy results if the data it uses is well-structured, accurate, and clean. Because of this, intelligent document recognition software cleans and prepares the data it receives before actually extracting it. For that, a variety of techniques are employed, ranging from deskewing and noise reduction to cropping and binarization, and beyond. During this step, IDP aims to integrate, validate, fix/impute errors, split images, organise, and improve photos.

Classification & Extraction

Enterprise documentation typically has multiple pages and includes a variety of data. Additionally, the success of additional analysis depends on whether the various data types present in a document are processed according to the correct workflow. During the data extraction stage, knowledge from the documents is extracted. Machine learning models extract specific data from the pre-processed and categorised material, such as dates, names, or numbers. Large volumes of subject-matter data are used to train the machine learning models that run IDP software. Each document’s pertinent entities are retrieved and tagged for IDP model training.

Validation and Analytics

The retrieved data is available to ML models at the post-processing phase. To guarantee the accuracy of the processing results, the extracted data is subjected to a number of automated or manual validation tests. The collected data is now put together into a finished output file, which is commonly in JSON or XML format. A business procedure or a data repository receives the file. IDP can anticipate the optimum course of action. IDP can also turn data into insights, automation, recommendations, and forecasts by utilising its AI capabilities.

Top Use Cases of Intelligent Document Processing

Invoice Processing

With remote work, processing bills has never been simpler for the account payable and human resources staff. Invoice collection, routing, and posting via email and paper processes results in high costs, poor visibility, and compliance and fraud risks. Also, the HR and account payable staff shares the lion’s part of their day on manual repetitive chores like data input and chasing information that leads to delay and inaccurate payment. However, intelligent document processing makes sure that all information is gathered is in an organised fashion, and data extraction in workflow only concentrates on pertinent data. Intelligent document processing assists the account payable team in automating error reconciliation, data inputs, and the decision-making process from receipt to payment. IDP ensures organizations can limit errors and reduce manual intervention.

Claims Processing

Insurance companies frequently suffer with data processing because of unstructured data and varying formats, including PDF, email, scanned, and physical documents. These companies mainly rely on a paper-based system. Additionally, manual intervention causes convoluted workflows, sluggish processing, high expenses, increased mistake, and fraud. Both insurers and clients must wait a long time during this entire manual process. However, intelligent document processing is a cutting-edge method that enables insurers to swiftly examine the large amount of structured and unstructured data and spot fraudulent activity. Insurance companies can quickly identify, validate, and integrate the data automatically and offer quicker claims settlement by utilising AI technologies like OCR and NLP.

Fraud Detection

Document fraud instances are increasing as a result of the processing of a lot of data. Additionally, the manual inspection of fraudulent documents and invoices is a time-consuming traditional procedure. Any fraudulent financial activity involving paper records may result in diminished client confidence and higher operating expenses. Therefore, implementing automated workflows for transaction validation and verification is essential to preventing fraudulent transactions. Furthermore, intelligent document processing has the ability to automatically identify and annotate questionable transactions for the fraud team. Furthermore, IDP frees the operational team from manual labour while reducing fraud losses.

Logistics

Every step of the logistics process, including shipping, transportation, warehousing, and doorstep consumer delivery, involves thousands of hands exchanging data. For manual processing by outside parties, this information must be authenticated, verified, cross-checked, and sometimes even re-entered. Companies utilize IDP to send invoices, labels, and agreements to vendors, contractors, and transportation teams at the supply chain level. IDP enables to read unstructured data from many sources, which eliminates the need for manual processing and saves countless hours of work. It also helps to handle the issue of document variability. IDP keeps up with enterprises as they grow and scale to handle larger client user bases due to intelligent automation of various document processing workflow components.

Medical records

It is crucial to keep patient records in the healthcare sector. In a particular situation, quick and easy access to information may be essential; as a result, it is crucial to digitize all patient-related data. IDP can now be used to effectively manage a patient’s whole medical history and file. Many hospitals continue to save patient information in manual files and disorganised paper formats that are prone to being lost. So it becomes a challenge for a doctor to sort through all the papers in the files to find what they’re looking for when they need to access a specific file. All medical records and diagnostic data may be kept in one location using an IDP, and only pertinent data can be accessed when needed.

The technologies behind intelligent document processing

When it comes to processing documents in a new, smart way, it all heavily relies on three cornerstones: Artificial intelligence, optical character recognition, and robotic process automation. Let’s get into a bit more detail on each technology.

Optical Character Recognition

OCR is a narrowly focused technology that can recognize handwritten, typed, or printed text within scanned images and convert it into a machine-readable format. As a standalone solution, OCR simply “sees” what’s there on a document and pulls out the textual part of the image, but it doesn’t understand the meanings or context. That’s why the “brain” is needed. Thus OCR is trained using AI and deep learning algorithms to increase its accuracy.

Artificial intelligence

Artificial intelligence deals with designing, training, and deploying models that mimic human intelligence. AI/ML is used to train the system to identify, classify, and extract relevant information using tags, which can be linked to a position or visual elements or a key phrase. AI is a field of knowledge that focuses on creating algorithms and training models on data so that they can process new data inputs and make decisions by themselves. So, the models learn to “understand” imaging information and delve into the meaning of textual data the way humans do.IDP heavily relies on such ML-driven technologies as

Computer Vision (CV)

CV utilizes deep neural networks for image recognition. It identifies patterns in visual data say, document scans, and classifies them accordingly. Computer vision uses AI to enable automatic extraction, analysis, and understanding of useful information from digital images. Only a few solutions leverage computer vision technology to recognize images/pictures within documents.

Natural Language Processing (NLP)

NLP finds language elements such as separate sentences, words, symbols, etc., in documents, interprets them, and performs a linguistic-based document summary. With the help of NLP, IDP solutions can analyze the running text in documents, understand the context, consolidate the extracted data, and map the extracted fields to a defined taxonomy. It can help in recognizing the sentiments from the text (e.g., from emails and other unstructured data) and in classifying documents into different categories. It also assists in creating summaries of large documents or data from charts using NLG by capturing key data points.

Robotic Process Automation

RPA is designed to perform repetitive business tasks through the use of software bots. The technology has proved to be effective in working with data presented in a structured format. RPA software can be configured to capture information from certain sources, process and manipulate data, and communicate with other systems. Most importantly, since RPA bots are usually rule-based, if there are any changes in the structure of the input, they won’t be able to perform a task.RPA bots can extend the intelligent process automation pipeline, executing such tasks as processing transactions, manipulating the extracted data, triggering responses, or communicating with other enterprises IT systems.

Conclusion

It is needless to say; the number of such documents will keep on piling up and making it impossible for many organizations to manage effectively. Organizations should be able to make use of this data for the benefit of businesses, but when it becomes so voluminous in physical documents gleaning insights from it will become even more tedious. With the use of Intelligent Document Processing, the time-consuming, monotonous, and tedious process is made simpler without any risks of manual errors. This way, data becomes more powerful even in varying formats and also helps organizations to ensure enhanced productivity and operational efficiency.

The implementation of IDP is not as easy. The big challenge is a lack of training data. For an artificial intelligence model to operate effectively, it must be trained on large amounts of data. If you don’t have enough of it, you could still tap into document processing automation by relying on third-party vendors like Tagx who can help you with the collection, classification, Tagging, and data extraction. The more processes you automate, the more powerful AI will become, enabling it to find ways to automate even more.

0 notes

Text

How Training Data is prepared for Computer Vision

As humans, we generally spend our lives observing our surroundings using optic nerves, retinas, and the visual cortex. We gain context to differentiate between objects, gauge their distance from us and other objects, calculate their movement speed, and spot mistakes. Similarly, computer vision enables AI-powered machines to train themselves to carry out these very processes. These machines use a combination of cameras, algorithms, and data to do so. Today, computer vision is one of the hottest subfields of artificial intelligence and machine learning, given its wide variety of applications and tremendous potential. Its goal is to replicate the powerful capacities of human vision.

Computer vision needs a large database to be truly effective. This is because these solutions analyze information repeatedly until they gain every possible insight required for their assigned task. For instance, a computer trained to recognize healthy crops would need to ‘see’ thousands of visual reference inputs of crops, farmland, animals, and other related objects. Only then would it effectively recognize different types of healthy crops, differentiate them from unhealthy crops, gauge farmland quality, detect pests and other animals among the crops, and so on.

How Does Computer Vision Work?

Computer Vision primarily relies on pattern recognition techniques to self-train and understand visual data. The wide availability of data and the willingness of companies to share them has made it possible for deep learning experts to use this data to make the process more accurate and fast.

Generally, computer vision works in three basic steps:

1: Acquiring the image Images, even large sets, can be acquired in real-time through video, photos, or 3D technology for analysis.

2: Processing and annotating the image The models are trained by first being fed thousands of labeled or pre-identified images. The collected data is cleaned according to the use case and the labeling is performed.

3: Understanding the image The final step is the interpretative step, where an object is identified or classified.

What is training data?

Training data is a set of samples such as videos and images with assigned labels or tags. It is used to train a computer vision algorithm or model to perform the desired function or make correct predictions. Training data goes by several other names, including learning set, training set, or training data set. It is used to train the machine learning model to get desired output. The model also scrutinizes the dataset repetitively to understand its traits and fine-tune itself for optimal performance.

In the same way, human beings learn better from examples; computers also need them to begin noticing patterns and relationships in the data. But unlike human beings, computers require plenty of examples as they don’t think as humans do. In fact, they don’t see objects or people in the images. They need plenty of work and huge datasets for training a model to recognize different sentiments from videos. Thus a huge amount of data needs to be collected for training

Types of training data

Images, videos, and sensor data are commonly used to train machine learning models for computer vision. The types of training data used include:

2D images and videos: These datasets can be sourced from scanners, cameras, or other imaging technologies.

3D images and videos: They’re also sourced from scanners, cameras, or other imaging technologies.

Sensor data: It’s captured using remote technology such as satellites.

Training Data Preparation

If you plan to use a deep learning model for classification or object detection, you will likely need to collect data to train your model. Many deep learning models are available pre-trained to detect or classify a multitude of common daily objects such as cars, people, bicycles, etc. If your scenario focuses on one of these common objects, then you may be able to simply download and deploy a pre-trained model for your scenario. Otherwise, you will need to collect and label data to train your model.

Data Collection

Data collection is the process of gathering relevant data and arranging it to create data sets for machine learning. The type of data (video sequences, frames, photos, patterns, etc.) depends on the problem that the AI model aims to solve. In computer vision, robotics, and video analytics, AI models are trained on image datasets with the goal of making predictions related to image classification, object detection, image segmentation, and more. Therefore, the image or video data sets should contain meaningful information that can be used to train the model for recognizing various patterns and making recommendations based on the same.

The characteristic situations need to be captured to provide the ground truth for the ML model to learn from. For example, in industrial automation, image data needs to collected that contains specific part defects. Therefore a camera needs to gather footage from assembly lines to provide video or photo images that can be used to create a dataset.

The data collection process is crucial for developing an efficient ML model. The quality and quantity of your dataset directly affect the AI model’s decision-making process. And these two factors determine the robustness, accuracy, and performance of the AI algorithms. As a result, collecting and structuring data is often more time-consuming than training the model on the data.

Data annotation

The data collection is followed by Data annotation, the process of manually providing information about the ground truth within the data. In simple words, image annotation is the process of visually indicating the location and type of objects that the AI model should learn to detect. For example, to train a deep learning model for detecting cats, image annotation would require humans to draw boxes around all the cats present in every image or video frame. In this case, the bounding boxes would be linked to the label named “cat.” The trained model will be able to detect the presence of cats in new images.

Once you have a good set of images collected you will need to label the images. Several tools exist to facilitate the labeling process. These include open-source tools such as labelImg and commercial tools such as Azure Machine Learning, which support image classification and object detection labeling. For large labeling projects, it is recommended to select a labeling tool that supports workflow management and quality reviews. These features are essential to ensure quality and efficiency in the labeling process. Labeling is a very tedious job. So companies prefer to outsource this to third-party labeling vendors like Tagx who take care of this whole labeling process.

What are the labels?

Labels are what the human-in-the-loop uses to identify and call out features that are present in the data. It’s critical to choose informative, discriminating, and independent features to label if you want to develop high-performing algorithms in pattern recognition, classification, and regression. Accurately labeled data can provide ground truth for testing and iterating your models.

Label Types of Computer Vision Data Annotation

Currently, most computer vision applications use a form of supervised machine learning, which means we need to label datasets to train the applications.

Choosing the correct label type for an application depends on what the computer vision model needs to learn. Below are four common types of computer vision models and annotations.

2D Bounding Boxes

Bounding boxes are one of the most commonly relied-on techniques for computer vision image annotation. It’s simple all the annotator has to do is draw a box around the target object. For a self-driving car, target objects would include pedestrians, road signs, and other vehicles on the road. Data scientists choose bounding boxes when the shape of target objects is less of an issue. One popular use case is recognizing groceries in an automated checkout process.

3D Bounding Boxes

Not all bounding boxes are 2D. Their 3D cousins are called cuboids. Cuboids create object representations with depth, allowing computer vision algorithms to perceive volume and orientation. For annotators, drawing cuboids means placing and connecting anchor points. Depth perception is critical for locomotive robots. Understanding where to place items on shelves involves an understanding of more than just height and width.

Landmark Annotation

Landmark annotation is also called dot/point annotation. Both names fit the process: placing dots or landmarks across an image, and plotting key characteristics such as facial features and expressions. Larger dots are sometimes used to indicate more important areas.

Skeletal or pose-point landmark annotations reveal body position and alignment. These are commonly used in sports analytics. For example, skeletal annotations can show where a basketball player’s fingers, wrist, and elbow are in relation to each other during a slam dunk.

Polygons

Polygon segmentation introduces a higher level of precision for image annotations. Annotators mark the edges of objects by placing dots and drawing lines. Hugging the outline of an object cuts out the noise that other image annotation techniques would include. Shearing away unnecessary pixels becomes critical when it comes to irregularly shaped objects, such as bodies of water or areas of land captured by autonomous satellites or drones.

Final thoughts

Training data is the lifeblood of your computer vision algorithm or model. Without relevant, labeled data, everything is rendered useless. The quality of the training data is also an important factor that you should consider when training your model. The work of the training data is not just to train the algorithms to perform predictive functions as accurately as possible. It is also used to retrain or update your model, even after deployment. This is because real-world situations change often. So your original training dataset needs to be continually updated.

If you need any help, contact us to speak with an expert at TagX. From Data Collection, and data curation to quality data labeling, we have helped many clients to build and deploy AI solutions in their businesses.

0 notes

Text

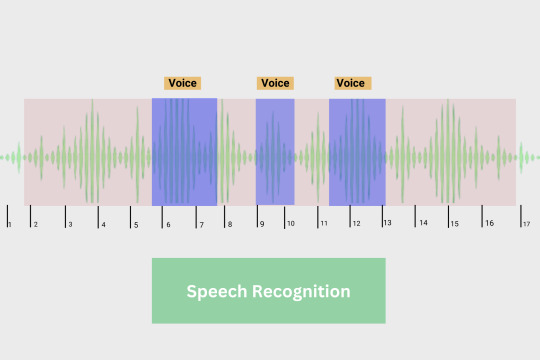

How Data Annotation is used for Speech Recognition

Speech recognition refers to a computer interpreting the words spoken by a person and converting them to a format that is understandable by a machine. Depending on the end goal, it is then converted to text or voice, or another required format. For instance, Apple’s Siri and Google’s Alexa use AI-powered speech recognition to provide voice or text support whereas voice-to-text applications like Google Dictate transcribe your dictated words to text.

Speech recognition AI applications have seen significant growth in numbers in recent times as businesses are increasingly adopting digital assistants and automated support to streamline their services. Voice assistants, smart home devices, search engines, etc are a few examples where speech recognition has seen prominence.

Data is required to train a speech recognition model because it allows the model to learn the relationship between the audio recordings and the transcriptions of the spoken words. By training on a large dataset of audio recordings and corresponding transcriptions, the model can learn to recognize patterns in the audio that correspond to different words and phonemes (speech sounds).

For example, if the model is trained on a large dataset of audio recordings of people speaking English, it will learn to recognize common patterns in the audio that corresponds to English words and phonemes. These patterns might include the frequency spectrum of different phonemes, the duration of different vowel and consonant sounds, and the context in which different words are used. By learning these patterns, the model can then take as input a new audio recording and use what it has learned to transcribe the spoken words in the audio. Without a large and diverse dataset of audio recordings and transcriptions, the model would not have enough data to learn these patterns and would not be able to perform speech recognition accuracy.

What is speech recognition data?

Speech recognition data refers to audio recordings of human speech used to train a voice recognition system. This audio data is typically paired with a text transcription of the speech, and language service providers are well-positioned to help.

The audio and transcription are fed to a machine-learning algorithm as training data. That way, the system learns how to identify the acoustics of certain speech sounds and the meaning behind the words.

There are many readily available sources of speech data, including public speech corpora or pre-packaged datasets, but in most cases, you will need to work with a data services provider to collect your own speech data through the remote collection or in-person collection. You can customize your speech dataset by variables like language, speaker demographics, audio requirements, or collection size.

The data collected need to be annotated for further training of the speech recognition model.

What is Speech or Audio Annotation?

For any system to understand human speech or voice, it requires the use of artificial intelligence (AI) or machine learning. Machine learning models that are developed to react to human speech or voice commands need to be trained to recognize specific speech patterns. The large volume of audio or speech data required to train such systems needs to go through an annotation or labeling process first, rather than being ingested in a raw audio file.

Effectively, audio or speech annotation is the technique that enables machines to understand spoken words, human emotions, sentiments, and intentions. Just like other types of annotations for image and video, audio annotation requires manual human effort where data labeling experts can tag or label specific parts of audio or speech clips being used for machine learning. One common misconception is that audio annotations are simply audio transcriptions, which are the result of converting spoken words into written words. Audio annotation goes beyond audio transcription, adding labeling to each relevant element of the audio clips being transcribed.

Speech annotation is the process of adding metadata to spoken language data. This metadata can include a transcription of the spoken words, as well as information about the speaker’s gender, age, accent, and other characteristics. Speech annotation is often used to create training data for natural language processing and speech recognition systems.

There are several different types of speech or audio annotation, including:

Transcription:

The process of transcribing spoken words into written text.

Part-of-speech tagging:

The process of identifying and labeling the parts of speech in a sentence, such as nouns, verbs, and adjectives.

Named entity recognition:

The process of identifying and labeling proper nouns and other named entities in a sentence, such as people, organizations, and locations.

Dialog act annotation:

The process of labeling the types of actions that are being performed in a conversation, such as asking a question or making a request.

Speaker identification:

The process of identifying and labeling the speaker in an audio recording.

Speech emotion recognition:

The process of identifying and labeling emotions that are expressed through speech, such as happiness, sadness, or anger.

Acoustic event detection:

The process of identifying and labeling specific sounds or events in an audio recording, such as the sound of a car horn or the sound of a person speaking.

These are just a few examples of the types of speech or audio annotation that can be performed. The specific types of annotation that are used will depend on the needs and goals of the natural language processing or speech recognition system being developed. Speech annotation can be a time-consuming and labor-intensive process, but it is an important step in the development of many natural language processing and speech recognition systems.

How to Annotate Speech Data

To perform audio annotation, organizations can use software currently available in the market. Free and open-source annotation tools exist that can be customized for your business needs. Alternatively, you can opt for paid annotation tools that have a range of features to support different types of annotation. Such paid annotation tools are generally supported by a team of professionals, who can configure the tool for your purpose. Another option would be to develop your own customized annotation tool within your organization. However, this can be slow and expensive and requires you to have an in-house team of annotation experts.

Companies that do not want to spend their resources on in-house annotation, can opt to outsource their work to an external service provider specializing in the annotation. Outsourcing may be the best choice for your organization, because service providers:

have a team of available data experts who are skilled in the time-intensive tasks of data cleaning and preparation that are required prior to data annotation

can often start immediately executing the type of labeling that your business needs

deliver high-quality data for your machine learning models and requirements

accelerate the scaling (and ROI) of your resource-intensive annotation initiatives

Use Cases of Speech Recognition

Speech recognition is a technology that allows computers to understand and interpret human speech. It has a wide range of applications, including:

Voice assistants:

Speech recognition is used in voice assistants, such as Apple’s Siri and Amazon’s Alexa, to allow users to interact with their devices using voice commands.

Dictation software:

Speech recognition can be used to transcribe spoken words into written text, making it easier for people to create documents and emails.

Customer service:

Speech recognition is used in customer service centers to allow customers to interact with automated systems using voice commands.

Education:

Speech recognition can be used to provide feedback to students on their pronunciation and speaking skills.

Healthcare:

Speech recognition is used in healthcare settings to transcribe doctors’ notes and to allow patients to interact with their electronic health records using voice commands.

Transportation:

Speech recognition is used in self-driving cars to allow passengers to give voice commands to the vehicle.

Home automation:

Speech recognition is used in smart home systems to allow users to control their appliances and devices using voice commands.

These are just a few examples of the many applications of speech recognition technology. It has the potential to revolutionize how we interact with computers and other devices, making it easier and more convenient for people to communicate with them.

Conclusion

With natural language processing (NLP) becoming more mainstream across business enterprises, the need for high-quality audio annotation services is being realized by organizations looking to build efficient machine-learning data models. Rather than developing in-house expertise, companies are finding that they are better served by outsourcing their annotation work to qualified third-party experts. TagX has extensive experience providing a variety of data annotation, cleansing, and enrichment services to its global clients. Want to know how data labeling could benefit your business? Please contact us anytime.

0 notes

Text

AI and Data Annotation for Manufacturing and Industrial Automation

Industrial automation refers to the use of technology to control and optimize industrial processes, such as manufacturing, transportation, and logistics. This can involve the use of automation equipment, such as robots and conveyor belts, as well as computer systems and software to monitor and control the operation of these machines. The goal of industrial automation is to increase the efficiency, accuracy, and speed of industrial processes while reducing the need for manual labor and minimizing the risk of errors or accidents.

Every manufacturer aims to find fresh ways to save and make money, reduce risks, and improve overall production efficiency. This is crucial for their survival and to ensure a thriving, sustainable future. The key lies in AI-based and ML-powered innovations. AI tools can process and interpret vast volumes of data from the production floor to spot patterns, analyze and predict consumer behavior, detect anomalies in production processes in real time, and more. These tools help manufacturers gain end-to-end visibility of all manufacturing operations in facilities across all geographies. Thanks to machine learning algorithms, AI-powered systems can also learn, adapt, and improve continuously.

Why use AI for the Manufacturing industry

There are several reasons why AI (artificial intelligence) can be helpful in industrial automation:

Improved accuracy:

AI algorithms can analyze large amounts of data and make decisions based on that analysis with a high degree of accuracy. This can help to improve the precision and reliability of industrial processes.

Enhanced efficiency:

AI-powered systems can work continuously without needing breaks, which can help to increase the overall efficiency of industrial operations.

Reduced costs:

By automating tasks that would otherwise need to be performed manually, AI can help to reduce labor costs and increase profitability.

Improved safety:

AI can be used to monitor industrial processes and alert operators to potential hazards or problems, which can help to improve safety in the workplace.

Increased speed:

AI-powered systems can often process and analyze data much faster than humans, which can help to speed up industrial processes

Use cases of Manufacturing AI

There are many potential use cases for AI in manufacturing and industry, including:

Quality control:

AI can be used to inspect products and identify defects or errors, improving the overall quality of the finished product.

Supply chain optimization:

AI can be used to optimize the flow of materials and components through the supply chain, reducing waste and increasing efficiency.

Predictive maintenance:

AI can be used to predict when equipment is likely to fail, allowing maintenance to be scheduled before problems occur.

Process optimization:

AI can be used to optimize manufacturing processes, such as by identifying bottlenecks, improving efficiency, and reducing waste.

Personalized product customization:

AI can be used to customize products to individual customer specifications, increasing the value of the finished product.

Energy management:

AI can be used to optimize the use of energy in industrial processes, reducing costs and improving sustainability.

Data Annotation to implement Manufacturing AI

Data annotation plays a key role in many applications of AI in manufacturing. In order for AI algorithms to be able to accurately analyze and make decisions based on data, the data must be properly labeled and organized. This is where data annotation comes in. By categorizing and labeling data, it becomes easier for AI algorithms to understand and make sense of the data, improving their accuracy and effectiveness.

Data annotation is an essential part of many AI applications in manufacturing, as it allows AI algorithms to effectively analyze and make decisions based on data, leading to improved efficiency, accuracy, and effectiveness.

Quality control:

Data annotation can be used to label images of products according to their defects or errors. This allows an AI algorithm to learn what constitutes a defect, and to identify defects in new images with a high degree of accuracy.

Supply chain optimization:

Data annotation can be used to label data points according to their position in the supply chain and their characteristics, such as their location, type, and quantity. This allows an AI algorithm to learn the patterns that are associated with efficient supply chain management, and to suggest ways to optimize the flow of materials and components.

Predictive maintenance:

Data annotation can be used to label data points according to the type of equipment, the maintenance history of the equipment, and other relevant factors. This allows an AI algorithm to learn the patterns that are associated with equipment failures, and to predict when maintenance will be needed in the future.

Process optimization:

Data annotation can be used to label data points according to the characteristics of the manufacturing process, such as the type of equipment being used, the materials being processed, and the output of the process. This allows an AI algorithm to learn the patterns that are associated with efficient manufacturing, and to suggest ways to optimize the process.

Personalized product customization:

Data annotation can be used to label data according to the specific characteristics and preferences of individual customers. This allows an AI algorithm to learn the patterns that are associated with customer preferences, and to suggest ways to customize products to meet the specific needs of individual customers.

Energy management:

Data annotation can be used to label data points according to the energy usage of different equipment and processes, as well as the factors that influence energy consumption. This allows an AI algorithm to learn the patterns that are associated with efficient energy management, and to suggest ways to optimize energy usage in industrial processes.

Final thoughts

AI will impact manufacturing in ways we have not yet anticipated. As the need for automation in factories continues to grow, factories will increasingly turn to AI-powered machines to improve the efficiency of day-to-day processes. This opens the door to introducing even smarter applications into today’s factories, from smart anomaly detection systems to autonomous robots and beyond. In conclusion, AI and data annotation are increasingly being used in the manufacturing industry to improve efficiency, reduce costs, improve quality, and increase the value of products. As AI and data annotation technologies continue to advance, it is likely that we will see even greater adoption of these technologies in the manufacturing industry in the coming years.

0 notes

Text

How Data Annotation is used for AI-based Recruitment

The ability of AI to assess huge data and swiftly estimate available possibilities makes process automation possible. AI technologies are increasingly being employed in marketing and development in addition to IT. It’s not surprising that some businesses have begun to adopt (or are learning to use) AI solutions in hiring, seeking to automate the hiring process and find novel ways to hire people. You’ll definitely kick yourself for not learning about and utilizing AI as one of the most crucial recruitment technology solutions.

Artificial intelligence has the potential to revolutionize the recruitment process by automating many of the time-consuming tasks associated with recruiting, such as resume screening, scheduling interviews, and sending follow-up emails. This can save recruiters a significant amount of time and allow them to focus on more high-level tasks, such as building relationships with candidates and assessing their fit for the company.

AI-powered recruitment tools use natural language processing (NLP) and machine learning (ML) to better match candidates with job openings. This can be done by analyzing resumes and job descriptions to identify the skills and qualifications that are most important for the position and then matching those with the skills and qualifications of the candidates. AI also facilitates more efficient scheduling, by taking into account the availabilities of the candidates and interviewers and suggesting the best times for an interview.

Applications of Recruitment AI

There are several use cases of AI in the recruitment process, including:

Resume screening: Resume screening is the first step in the recruitment and staffing process. It involves the identification of relevant resumes or CVs for a certain job role based on their qualifications and experience. AI can be used to scan resumes and identify the most qualified candidates based on certain criteria, such as specific skills or qualifications. This can save recruiters a significant amount of time that would otherwise be spent manually reviewing resumes.

Interview scheduling: AI can be used to schedule interviews by taking into account the availability of both the candidates and the interviewers, and suggesting the best times for the interviews.

Pre-interview screening: AI can be used to conduct pre-interview screening by conducting initial screening calls or virtual interviews to shortlist suitable candidates before passing it to the human interviewer.AI can be used to check the references of potential candidates by conducting automated reference checks over the phone or email.

Chatbots for recruitment: AI-powered chatbots can be used to answer candidates’ queries, schedule an interview and help them navigate the hiring process, which can improve the candidate’s experience. The use of bots to conduct interviews is beneficial to recruiters, as they guarantee consistency in the interview process since the same interview experience is meant to provide equal experiences to all candidates.

Interview evaluation: AI-powered video interview evaluation tools can analyze a candidate’s facial expressions, tone of voice, and other nonverbal cues during a video interview to help recruiters evaluate their soft skills and potential cultural fit within the organization. NLP-based reading tools can be used to analyze the speech patterns and written responses of candidates during the interview process. In addition, NLP algorithms can conduct an in-depth sentiment analysis of a candidate’s speech and expressions.

Job & Candidate matching: AI can be used to match candidates with job openings by analyzing resumes, job descriptions, and other data to identify the most qualified candidates for the position. This facet of AI in recruiting focuses on a customized candidate experience. It means the machine understands what jobs and type of content the potential candidates are interested in, monitors their behavior, then automatically sends them content and messages based on their interests.

Predictive hiring: AI can be used to predict which candidates are most likely to be successful in a given role by analyzing data on past hires, such as performance reviews and tenure data.

These are some of the most common ways AI is currently being used in the recruitment process, but as the technology continues to evolve, there will likely be new use cases for AI in the future.

Data Annotation for Recruitment AI

Data annotation is an important step in the process of training AI systems, and it plays a critical role in several cases of AI-based recruitment processes. Here are a few examples of how data annotation is used in AI-based recruitment:

Resume screening: For the implementation of the resume screening model to identify the most qualified candidates based on certain criteria, such as specific skills or qualifications, it is necessary to annotate a large dataset of resumes with relevant information, such as the candidate’s name, education, and work experience. Large volumes of resumes with diverse roles and skills are annotated to specify how much work experience the candidate has for a particular field, what skills, certifications, and education the candidate is qualified and much more.

Job matching: To train an AI system to match candidates with job openings, it is required to annotate large volumes of job descriptions with relevant information, such as the roles and responsibilities of a particular job and the requirements of the job opening.

Interview evaluation: For interview evaluation, different NLP models are trained like sentiment analysis and speech pattern evaluation. To analyze a candidate’s facial expressions, tone of voice, and other nonverbal cues during a video interview, it is necessary to annotate a large dataset of video interviews with labels that indicate the candidate’s level of engagement, energy, and enthusiasm.

Predictive hiring: Based on the job requirement details, the AI model can predict the most relevant candidates from a large pool of resumes. For training of such a model to predict which candidates are most likely to be successful in a given role, it is necessary to first annotate a large dataset of past hires with labels that indicate the candidate’s performance and tenure.

Chatbot Training: A chatbot can mimic a human’s conversational abilities in the sense that it’s programmed to understand written and spoken language and respond correctly. The dataset of questions and answers needs to be annotated appropriately in order to train the AI chatbot to comprehend the candidate’s inquiries and respond appropriately.

The process of data annotation is time-consuming but it is essential to ensure that the AI system is able to learn from the data and make accurate predictions or classifications. It’s also worth mentioning that as a part of data annotation quality assurance is also very crucial, as the model is only as good as the data it’s been trained on. Thus, quality annotation and quality assurance checks on the data are very important to ensure the model’s performance.

Advantages of Recruitment AI

There are several advantages to using AI in the recruitment process, including:

Efficiency: AI can automate many of the time-consuming tasks associated with recruiting, such as resume screening and scheduling interviews. This can save recruiters a significant amount of time, allowing them to focus on more high-level tasks, such as building relationships with candidates and assessing their fit for the company.

Objectivity: AI can help to reduce bias in the recruitment process by removing subjective elements such as personal prejudices. The algorithms are not influenced by personal biases, this can make the selection process more objective and fair, which can lead to better candidate selection.

Increased speed: AI can process resumes and conduct initial screening and job matching much faster than a human can. This can speed up the recruitment process and reduce the time it takes to fill a job opening.

Improved candidate matching: AI can use natural language processing and machine learning to better match candidates with job openings by analyzing resumes and job descriptions to identify the skills and qualifications that are most important for the position.

Increased scalability: AI can handle a high volume of resumes and job openings, which can be challenging for human recruiters. This can allow the companies to expand and increase their recruitment efforts.

Better candidate experience: AI-powered chatbots can be used to answer candidates’ queries, schedule an interview, and help them navigate the hiring process, which can improve the candidate’s experience and helps the company with candidate retention.

However, it’s important to note that AI is not a replacement for human recruiters, instead, it should be viewed as a tool to assist them. It is necessary to keep in mind that AI, despite its advantages, is not able to fully understand the nuances of a job or company culture and that the human touch is still necessary for the recruitment process.

Conclusion

Artificial intelligence in recruitment will grow because it is prominently beneficial for the company, recruiters, and candidates. With the right tools, software and programs, you can develop an automated process that improves the quality of your candidates and their experience. High-quality data annotation is required to train AI systems to effectively automate tasks such as resume screening, job matching, and predictive hiring.

TagX a data annotation company plays a vital role in helping organizations to implement AI-powered recruitment automation by providing them with high-quality annotated data that they can use to train their AI systems. With TagX, organizations can leverage the benefits of AI while still maintaining a high level of human oversight and judgment, leading to an overall more efficient, effective, and objective recruitment process.

0 notes

Text

The Ultimate Guide to Data Ops for AI

Data is the fuel that powers AI and ML models. Without enough high-quality, relevant data, it is impossible to train and develop accurate and effective models.

DataOps (Data Operations) in Artificial Intelligence (AI) is a set of practices and processes that aim to optimize the management and flow of data throughout the entire AI development lifecycle. The goal of DataOps is to improve the speed, quality, and reliability of data in AI systems. It is an extension of the DevOps (Development Operations) methodology, which is focused on improving the speed and reliability of software development.

What is DataOps?

DataOps (Data Operations) is an automated and process-oriented data management practice. It tracks the lifecycle of data end-to-end, providing business users with predictable data flows. DataOps accelerate the data analytics cycle by automating data management tasks.

Let's take the example of a self-driving car. To develop a self-driving car, an AI model needs to be trained on a large amount of data that includes various scenarios, such as different weather conditions, traffic patterns, and road layouts. This data is used to teach the model how to navigate the roads, make decisions, and respond to different situations. Without enough data, the model would not have been exposed to enough diverse scenarios and would not be able to perform well in real-world situations. DataOps needs high-performance and scalable data lakes, which can handle mixed workloads, and different data types audio, video, text, and data from sensors and that have the performance capabilities needed to keep the compute layer fully utilized.

What is the data lifecycle?

Data Generation: There are various ways in which data can be generated within a business, be it through customer interactions, internal operations, or external sources. Data generation can occur through three main methods:

Data Entry: The manual input of new information into a system, often through the use of forms or other input interfaces.

Data Capture: The process of collecting information from various sources, such as documents, and converting it into a digital format that can be understood by computers.

Data Acquisition: The process of obtaining data from external sources, such as through partnerships or external data providers like Tagx.

Data Processing: Once data is collected, it must be cleaned, prepared, and transformed into a more usable format. This process is crucial to ensure the data's accuracy, completeness, and consistency.

Data Storage: After data is processed, it must be protected and stored for future use. This includes ensuring data security and compliance with regulations.

Data Management: The ongoing process of organizing, storing, and maintaining data, from the moment it is generated until it is no longer needed. This includes data governance, data quality assurance, and data archiving. Effective data management is crucial to ensure the data's accessibility, integrity, and security.

Advantages of Data Ops

DataOps enables organizations to effectively manage and optimize their data throughout the entire AI development lifecycle. This includes:

Identifying and Collecting Data from All Sources: DataOps is widely used to identify and collect data from a wide range of sources, including internal data, external data, and public data sets. This is helpful for organizations to have access to the data they need to train and test their AI models.

Automatically Integrating New Data: DataOps enables organizations to automatically integrate new data into their data pipelines. This ensures that data is consistently updated and that the latest information is always available to users.

Centralizing Data and Eliminating Data Silos: Companies focus on Dataops to centralize their data and eliminate data silos. This improves data accessibility and helps to ensure that data is used consistently across the organization.

Automating Changes to the Data Pipeline: DataOps implementation helps to automate changes to their data pipeline. This increases the speed and efficiency of data management and helps to ensure that data is used consistently across the organization.

By implementing DataOps, organizations can improve the speed, quality, and reliability of their data and AI models, and reduce the time and cost of developing and deploying AI systems. Additionally, by having proper data management and governance in place, the AI models developed can be explainable and trustworthy, which can be beneficial for regulatory and ethical considerations.

TagX Data as a Service

Data as a service (DaaS) refers to the provision of data by a company to other companies. TagX provides DaaS to AI companies by collecting, preparing, and annotating data that can be used to train and test AI models.

Here's a more detailed explanation of how TagX provides DaaS to AI companies:

Data Collection: TagX collects a wide range of data from various sources such as public data sets, proprietary data, and third-party providers. This data includes image, video, text, and audio data that can be used to train AI models for various use cases.

Data Preparation: Once the data is collected, TagX prepares the data for use in AI models by cleaning, normalizing, and formatting the data. This ensures that the data is in a format that can be easily used by AI models.

Data Annotation: TagX uses a team of annotators to label and tag the data, identifying specific attributes and features that will be used by the AI models. This includes image annotation, video annotation, text annotation, and audio annotation. This step is crucial for the training of AI models, as the models learn from the labeled data.

Data Governance: TagX ensures that the data is properly managed and governed, including data privacy and security. We follow data governance best practices and regulations to ensure that the data provided is trustworthy and compliant with regulations.