#NvidiaH100


Google Cloud Confidential VMs for Data Protection with AMD

Confidential virtual machines

Organisations that process sensitive data in the cloud can benefit from Confidential Computing, which offers robust confidentiality assurances. Google continues to develop this important technology and is working with leading industry players, including NVIDIA, AMD, and Intel, to advance Confidential Computing solutions.

Google Cloud Confidential VMs

Google Cloud is thrilled to present developments in their Confidential Computing solutions at Google Cloud Next today. These developments include increased hardware options, support for data migrations, and expanded partnerships, all of which have contributed to the recognition of Confidential Computing as a critical solution for data security and confidentiality.

Confidential virtual machines with Intel TDX and an integrated accelerator with AMX

Intel TDX

Google is excited to release a preview of Confidential Computing with Intel Trust Domain Extensions (Intel TDX). For virtual machines, Intel TDX provides a new level of hardware-based confidentiality, integrity, and isolation, enhancing security for your most critical data and programmes.

Anand Pashupathy, vice president and general manager of Intel’s security software and services division, said: “Intel’s collaboration with Google Cloud on Confidential Computing helps organizations strengthen their data privacy, workload security, and compliance in the cloud, especially with sensitive or regulated data. With Google Cloud’s new C3 instances and Confidential Space solution, organizations can easily move their workloads to a confidential environment and work with partners on joint analyses while keeping their data private.”

Intel AMX

Confidential VMs in the new C3 machine series use Intel Trust Domain Extensions (Intel TDX) and 4th Gen Intel Xeon Scalable CPUs. They also work with Intel AMX, a recently introduced integrated accelerator that improves deep learning training and inference performance on the CPU. This makes them well suited to workloads such as image recognition, recommendation systems, and natural language processing.

Now that Confidential Computing is available with Intel TDX and AMX, customers can experience its benefits and provide feedback as Google continues to improve this ground-breaking technology.

“By using Google Cloud’s C3 virtual machines equipped with Intel TDX, Edgeless Systems has been able to improve its Constellation and Contrast offerings even further,” said Moritz Eckert, chief architect of Edgeless Systems. “The addition of Intel TDX now gives our customers greater choice and flexibility, ensuring they have access to the latest Confidential Computing hardware options.”

AMD SEV-SNP

Preview of Confidential VMs on the N2D series with AMD SEV-SNP

Confidential VMs are now available in preview on the general-purpose N2D machine series with AMD Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP), AMD's newest Confidential Computing technology. AMD SEV-SNP builds on the data and memory confidentiality of AMD SEV by adding strong memory integrity protection, which helps thwart malicious hypervisor-based attacks such as data replay and memory remapping. With remote attestation, Confidential VMs with AMD SEV-SNP help you protect your most sensitive data in the cloud.
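Remote attestation lets you check that a VM really is a confidential guest running the image you expect before you send it secrets. The sketch below models only the verifier's decision, under stated assumptions. The platform returns a report that contains a measurement (hash) of the guest image and a nonce chosen by the verifier. The verifier accepts the report only if the signature is genuine, the nonce is fresh, and the measurement matches. This is not Google's or AMD's API, and all function and field names are hypothetical. As a labeled simplification, an HMAC over a random in-process key stands in for the chip-specific asymmetric signing key and certificate chain that real SEV-SNP attestation uses.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass

# SIMPLIFICATION: real SEV-SNP reports are signed by an asymmetric key held in
# the AMD chip and checked against AMD's certificate chain. A shared HMAC key
# stands in for that here so the sketch stays self-contained.
_PLATFORM_KEY = secrets.token_bytes(32)


@dataclass(frozen=True)
class AttestationReport:
    measurement: bytes  # hash of the guest image measured at launch
    report_data: bytes  # nonce supplied by the verifier, echoed back
    signature: bytes    # binds measurement and nonce together


def _sign(measurement: bytes, report_data: bytes) -> bytes:
    return hmac.new(_PLATFORM_KEY, measurement + report_data, hashlib.sha384).digest()


def generate_report(guest_image: bytes, nonce: bytes) -> AttestationReport:
    """Hypothetical platform side: measure the guest and bind the nonce."""
    measurement = hashlib.sha384(guest_image).digest()
    return AttestationReport(measurement, nonce, _sign(measurement, nonce))


def verify_report(report: AttestationReport, expected_measurement: bytes, nonce: bytes) -> bool:
    """Hypothetical verifier side: genuine signature, fresh nonce, expected image."""
    genuine = hmac.compare_digest(report.signature, _sign(report.measurement, report.report_data))
    fresh = hmac.compare_digest(report.report_data, nonce)
    expected = hmac.compare_digest(report.measurement, expected_measurement)
    return genuine and fresh and expected


if __name__ == "__main__":
    image = b"trusted guest image v1"
    expected = hashlib.sha384(image).digest()
    nonce = secrets.token_bytes(32)
    report = generate_report(image, nonce)
    print(verify_report(report, expected, nonce))                    # True
    print(verify_report(report, expected, secrets.token_bytes(32)))  # False: stale nonce
```

The nonce check prevents replay of an old report, and the measurement check rejects a guest that booted a different image. In the real protocol, only the signature step changes from a shared-key check to public-key verification.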

Live migration for Confidential VMs is now generally available

Live Migration for Confidential VMs lets customers use Confidential Computing with their long-lived workloads. It maintains in-use protection while reducing the downtime caused by host maintenance events. Live Migration for Confidential VMs is now available in all regions on the N2D machine series.

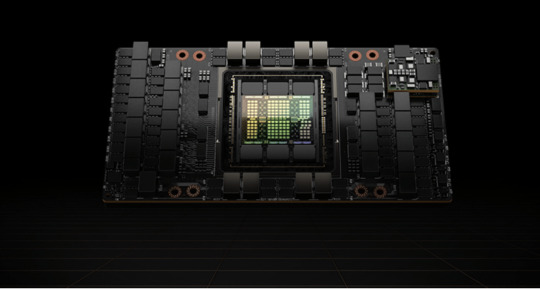

Announcing NVIDIA H100 Tensor Core GPU-Powered Confidential Virtual Machines for AI Tasks

Google is excited to introduce Confidential VMs on the A3 machine series with NVIDIA H100 Tensor Core GPUs, extending its secure compute capabilities. By extending hardware-based data and model protection to GPUs, this offering accelerates workloads that are essential to artificial intelligence (AI), machine learning, and scientific simulation.

It extends confidentiality, integrity, and isolation from the CPU to the GPU. With NVIDIA and Google, customers no longer have to choose between security and performance. NVIDIA Confidential Computing on H100 GPUs lets them use performant GPUs to protect their most valuable workloads, securing data while in use and protecting their AI workloads while they take advantage of GPU-accelerated computing.

Confidential VMs on A3 with NVIDIA H100 GPUs and NVIDIA HGX Protected PCIe can help keep data, AI models, and sensitive code protected even during compute-intensive tasks. Confidential VMs on the accelerator-optimized A3 machine series with NVIDIA H100 GPUs will be available in private preview later this year.

“In the most efficient and secure manner possible, the Confidential VM A3 powered by NVIDIA HGX H100 with Protected PCIe will help power a new era of business innovation driven by generative AI insights,” said Daniel Rohrer, vice president of product security at NVIDIA.

Confidential VMs

Forming important alliances

Google remains committed to fostering a collaborative ecosystem for Confidential Computing. Its alliances with leading industry players, including semiconductor manufacturers, cloud service providers, and software vendors, have grown. These partnerships are crucial to accelerating the development and adoption of Confidential Computing solutions, which will ultimately improve cloud security as a whole.

“There is a growing need to protect the privacy and integrity of data, particularly sensitive workloads, intellectual property, AI models, and valuable information, as more businesses move their data and workloads to the cloud. Through this partnership, businesses can safeguard and manage their data while it’s in use, in transit, and at rest with completely verifiable attestation. Our strong partnership with Google Cloud and Intel boosts our clients’ confidence when moving to the cloud,” said Todd Moore, vice president of Thales’ data security solutions.

Through continuous innovation and collaboration, Google is dedicated to making Confidential Computing the cornerstone of a secure and thriving cloud ecosystem. Google invites you to explore its latest offerings and begin your path to a secure and private cloud computing future.

Read more on Govindhtech.com

#govindhtech#news#technews#technology#TechTrends#GoogleCloud#confidentialvms#virtualmachines#NvidiaH100#AMD#aimodels



Roughly 80 times weaker than the Nvidia H100, but 200 to 400 times cheaper

While the most capable AI accelerators cost tens of thousands of dollars, the Chinese company Intellifusion has unveiled a very inexpensive alternative.

Its AI chip, called Deep Eyes, comes in the form of the DeepEdge10Max SoC and will cost customers only $140. At that price, of course, performance is very modest: 48 TOPS. That is several times more than current Intel and AMD processors offer, although the Snapdragon X Elite SoC will have up to 75 TOPS of NPU performance. As a reminder, running a number of Copilot AI features locally on a PC processor requires an NPU with at least 40 TOPS.

For comparison, the Nvidia H100 delivers almost 4,000 TOPS in the same INT8 mode, roughly 80 times more, but it costs 200 to 400 times as much. That said, such a comparison is only meaningful as an illustration of how different these classes of chip are.

The company is also preparing to launch the DeepEdge10Pro SoC with a 24 TOPS AI engine and, later, the DeepEdge10Ultra with 96 TOPS.

All of these solutions are the company's own technology and are based on a custom AI chip, the Intellifusion NNP400T. The SoC configuration also includes a 10-core RISC-V processor running at 1.8 GHz and a GPU running at 800 MHz, and everything will be manufactured on a 14 nm process.
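Dividing the figures quoted in this post is a quick way to sanity-check the headline ratios. The sketch assumes that "almost 4,000 TOPS" means NVIDIA's published H100 SXM INT8 figure of 3,958 TOPS, which is quoted with sparsity. The H100 price range is derived from the post's "200 to 400 times" claim; it is not a quoted price. The 40 TOPS Copilot threshold is the one the post mentions.

```python
# Figures quoted in the post above.
DEEPEDGE10MAX_TOPS = 48
DEEPEDGE10MAX_PRICE_USD = 140
H100_INT8_TOPS = 3958              # assumed meaning of "almost 4,000 TOPS"
H100_PRICE_MULTIPLES = (200, 400)  # "costs 200 to 400 times as much"
COPILOT_NPU_MIN_TOPS = 40

LINEUP_TOPS = {
    "DeepEdge10Pro": 24,
    "DeepEdge10Max": 48,
    "DeepEdge10Ultra": 96,
    "Snapdragon X Elite (NPU)": 75,
}


def implied_h100_price_range(base_price, multiples=H100_PRICE_MULTIPLES):
    """Price range implied by the post's multiples (derived, not quoted)."""
    low, high = multiples
    return base_price * low, base_price * high


def meets_copilot_threshold(tops, threshold=COPILOT_NPU_MIN_TOPS):
    return tops >= threshold


if __name__ == "__main__":
    print(f"Throughput ratio: {H100_INT8_TOPS / DEEPEDGE10MAX_TOPS:.1f}x")  # 82.5x
    low, high = implied_h100_price_range(DEEPEDGE10MAX_PRICE_USD)
    print(f"Implied H100 price: ${low:,} to ${high:,}")  # $28,000 to $56,000
    print(f"TOPS per dollar, DeepEdge10Max: {DEEPEDGE10MAX_TOPS / DEEPEDGE10MAX_PRICE_USD:.2f}")
    print(f"TOPS per dollar, H100: {H100_INT8_TOPS / high:.2f} to {H100_INT8_TOPS / low:.2f}")
    for name, tops in LINEUP_TOPS.items():
        verdict = "meets" if meets_copilot_threshold(tops) else "falls short of"
        print(f"{name}: {tops} TOPS {verdict} the 40 TOPS Copilot threshold")
```

On these numbers, the cheap chip delivers more raw TOPS per dollar. Peak TOPS says nothing about memory bandwidth, software support, or achievable utilization, however, which is the post's point about the comparison being only illustrative.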




Upgrade Your Computing Power: NVIDIA H100 80GB Stock Available Now at VIPERA!

Are you ready to elevate your computing experience to unparalleled heights? Look no further, as VIPERA proudly announces the availability of the NVIDIA H100 80GB, a game-changer in the world of high-performance GPUs. Don’t miss out on this opportunity to supercharge your computational capabilities — order your NVIDIA H100 80GB now exclusively at VIPERA!

Specifications:

Form factors: H100 SXM and H100 PCIe

Performance (H100 SXM / H100 PCIe):

FP64: 34 / 26 teraFLOPS
FP64 Tensor Core: 67 / 51 teraFLOPS
FP32: 67 / 51 teraFLOPS
TF32 Tensor Core: 989 / 756 teraFLOPS
BFLOAT16 Tensor Core: 1,979 / 1,513 teraFLOPS
FP16 Tensor Core: 1,979 / 1,513 teraFLOPS
FP8 Tensor Core: 3,958 / 3,026 teraFLOPS
INT8 Tensor Core: 3,958 / 3,026 TOPS

The TF32, BFLOAT16, FP16, FP8, and INT8 Tensor Core figures are NVIDIA's peak values with sparsity; dense throughput is half of each.

GPU memory: 80GB
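Peak figures become more useful when you turn them into time estimates. The sketch below halves the sparse Tensor Core peaks from the spec list to get dense peaks. It then bounds the time for one matrix multiply using the standard count of about 2·m·n·k floating-point operations. The 8192-cubed matrix size and the 60% efficiency factor are hypothetical inputs for illustration, not measured H100 results.

```python
# Peak Tensor Core throughput from the spec list above, in teraFLOPS.
# These are quoted with 2:4 structured sparsity; dense peaks are half.
PEAK_SPARSE_TFLOPS = {
    "SXM": {"TF32": 989, "BF16": 1979, "FP16": 1979, "FP8": 3958},
    "PCIe": {"TF32": 756, "BF16": 1513, "FP16": 1513, "FP8": 3026},
}


def dense_peak_tflops(form_factor: str, precision: str) -> float:
    return PEAK_SPARSE_TFLOPS[form_factor][precision] / 2


def gemm_time_ms(m: int, n: int, k: int, tflops: float, efficiency: float = 1.0) -> float:
    """Lower-bound time for an (m x k) @ (k x n) matrix multiply.

    A dense GEMM needs about 2*m*n*k floating-point operations (one multiply
    and one add per term). `efficiency` is an assumed fraction of peak.
    """
    flops = 2 * m * n * k
    return flops / (tflops * 1e12 * efficiency) * 1e3


if __name__ == "__main__":
    bf16_sxm = dense_peak_tflops("SXM", "BF16")    # 989.5
    bf16_pcie = dense_peak_tflops("PCIe", "BF16")  # 756.5
    print(f"SXM vs PCIe (BF16): {bf16_sxm / bf16_pcie:.2f}x")  # 1.31x
    print(f"8192^3 BF16 GEMM on SXM at peak: {gemm_time_ms(8192, 8192, 8192, bf16_sxm):.2f} ms")
    print(f"Same GEMM at an assumed 60% of peak: {gemm_time_ms(8192, 8192, 8192, bf16_sxm, 0.6):.2f} ms")
```

Real kernels rarely reach peak, so the efficiency factor is the main knob in any such estimate.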

Unmatched Power, Unparalleled Possibilities:

The NVIDIA H100 80GB is not just a GPU; it’s a revolution in computational excellence. Whether you’re pushing the boundaries of scientific research, diving into complex AI models, or unleashing the full force of graphics-intensive tasks, the H100 stands ready to meet and exceed your expectations.

Why Choose VIPERA?

Exclusive Availability: VIPERA is your gateway to securing the NVIDIA H100 80GB, ensuring you stay ahead in the technological race.

Unrivaled Performance: Elevate your projects with the unprecedented power and speed offered by the H100, setting new standards in GPU capabilities.

Cutting-Edge Technology: VIPERA brings you the latest in GPU innovation, providing access to state-of-the-art technologies that define the future of computing.

Don’t miss out on the chance to revolutionize your computing experience. Order your NVIDIA H100 80GB now from VIPERA and unlock a new era of computational possibilities!

M.Hussnain


#nvidia#nvidiah100#nvidiah10080gb#availablestock#h100available#nvidiah100stock#viperatech#vipera#stockavailable



"Unleashing Innovation: NVIDIA H100 GPUs and Quantum-2 InfiniBand on Microsoft Azure"

#aiinnovation#highperformancecomputing#nvidiah100#azureai#quantum2infiniband#microsoftazure#futureoftechnology#gpucomputing#innovationinai#techupdates#aIoncloud#cloudcomputing#hpc#aiinsights#cuttingedgetech#supercomputing#nvidiaquantum2#aIonazure#techbreakthrough#jjbizconsult



NVIDIA H100 and Quantum-2 Systems Announced


NVIDIA announced the adoption of its next-generation NVIDIA H100 Tensor Core GPUs and Quantum-2 InfiniBand, highlighted by new cloud offerings on Microsoft Azure and more than 50 new partner systems to accelerate scientific discovery.

NVIDIA's partners unveiled the new offerings at Supercomputing 2022 (SC22), where NVIDIA released major updates to its…




Intel Gaudi 3 and Dell PowerEdge Give AI Possibilities

Intel Gaudi 3 AI Accelerator

The Dell PowerEdge XE9680 server has become a vital component for machine learning, deep learning training, HPC modelling, and AI and generative AI acceleration. This server portfolio has advanced significantly with the addition of the Intel Gaudi 3 AI Accelerator, which offers an improved set of capabilities tailored to demanding, data-intensive tasks. This development gives developers and enterprise experts more choice as they push the boundaries of GenAI acceleration while accommodating a wider variety of workloads.

Intel Gaudi 3 release date

The Intel Gaudi 3 AI accelerator, which debuted on April 9, 2024, is intended primarily for enterprise applications, especially those that use generative AI (GenAI). Here is a brief summary of what is known so far:

Intel Gaudi 3 Performance

Provides significant gains in performance over Gaudi 2, its predecessor.

Compared to Nvidia’s H100, a top rival, Intel says it offers 40% greater power efficiency and 50% quicker inference at a more affordable price.

Compared with Gaudi 2, it promises 4x the AI compute for the BF16 format, 1.5x the memory bandwidth, and double the networking bandwidth for large-scale systems.

Emphasis on Generative AI

Gaudi 3 is intended to address the challenges businesses face in deploying and scaling GenAI projects, such as multimodal models and large language models (LLMs).

In 2023, only 10% of businesses successfully moved GenAI initiatives into production. With Gaudi 3, Intel hopes to close this gap.

Choice and Openness

Intel highlights Gaudi 3’s open architecture, which enables flexible integration with a range of hardware and applications.

In addition to giving businesses greater autonomy, this may lessen vendor lock-in.

Intel Gaudi 3 architecture

Using Silicon Diversity to Create Tailored Solutions

As Dell’s first platform to combine eight-way GPU acceleration with an x86 server architecture, the PowerEdge XE9680 stands out for its performance in AI-centric operations. The addition of the Intel Gaudi 3 accelerator further enhances this ecosystem, giving customers the option to tailor their systems to specific processing requirements, notably GenAI workloads. This deliberate addition reflects a commitment to providing strong, adaptable, no-compromise AI acceleration solutions.

Technical Details Increasing Client Success

The XE9680 architecture is built for scalability and reliability and is engineered to operate at temperatures as high as 35°C. The inclusion of Intel Gaudi 3 accelerators expands the server's configuration options. The system offers eight PCIe Gen 5.0 slots for more connectivity and bandwidth, up to 32 DDR5 memory DIMM slots for higher data throughput, and 16 EDSFF3 storage drives for more storage options. With two 4th Generation Intel Xeon Scalable processors of up to 56 cores each, the XE9680 is designed to perform well in complex AI and ML tasks, giving it a competitive advantage in data processing and analysis.

Strategic Developments for AI Understanding

With the addition of more accelerators, the PowerEdge XE9680 surpasses the capabilities of traditional hardware and becomes an indispensable tool for companies looking to use AI to get deep data insights. By combining cutting-edge processing power with an effective, air-cooled architecture, this system redefines AI acceleration and produces quick, actionable insights that improve business results.

Technological Transparency Promotes Innovation

The Intel Gaudi 3 AI accelerator brings performance features that are essential for generative AI workloads. These include 128 GB of HBM2e memory, 64 custom and programmable tensor processor cores (TPCs), 3.7 TB/s of memory bandwidth, and 96 MB of on-board static random-access memory (SRAM). Gaudi 3’s open ecosystem is supported by a strong set of model libraries and partnerships, and its development tools make it easy to migrate existing codebases, often with just a few lines of code.
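The memory numbers above translate into a useful rule of thumb for generative AI. When a model generates one token at a time, every weight is read from memory once per token, so bandwidth divided by weight size bounds the decoding speed. The sketch below is a rough roofline-style estimate under stated assumptions: decimal units, peak bandwidth, batch size 1, and a hypothetical 8-billion-parameter model stored in BF16. KV-cache traffic and compute time are ignored, and none of this is an Intel benchmark.

```python
# Gaudi 3 memory figures quoted above; decimal units (1 TB = 1000 GB).
HBM_CAPACITY_GB = 128
HBM_BANDWIDTH_GB_S = 3700  # 3.7 TB/s


def full_memory_sweep_ms(capacity_gb=HBM_CAPACITY_GB, bandwidth_gb_s=HBM_BANDWIDTH_GB_S):
    """Time to read every byte of HBM once at the quoted peak bandwidth."""
    return capacity_gb / bandwidth_gb_s * 1000


def decode_tokens_per_s_bound(params_billions, bytes_per_param, bandwidth_gb_s=HBM_BANDWIDTH_GB_S):
    """Rough upper bound for batch-1 LLM decoding when weight reads dominate.

    Each generated token streams all weights from memory once; this ignores
    KV-cache traffic, compute time, and below-peak achievable bandwidth.
    """
    weights_gb = params_billions * bytes_per_param
    if weights_gb > HBM_CAPACITY_GB:
        raise ValueError("weights do not fit in a single accelerator's HBM")
    return bandwidth_gb_s / weights_gb


if __name__ == "__main__":
    print(f"Full HBM sweep: {full_memory_sweep_ms():.1f} ms")  # 34.6 ms
    # Hypothetical 8B-parameter model stored in BF16 (2 bytes per parameter).
    print(f"8B BF16 decode bound: {decode_tokens_per_s_bound(8, 2):.0f} tokens/s")  # 231
```

The bound explains why memory bandwidth, not just peak compute, is a headline number for GenAI accelerators.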

Specialized Networking and Video Decoder Features

With Intel Gaudi 3 accelerators, the PowerEdge XE9680 gains new networking features that are built into the accelerators and exposed through six OSFP 800GbE ports. These links allow direct connections to an external accelerator fabric, which removes the need to install external NICs in the system. This simplifies the infrastructure and aims to reduce its total cost of ownership and complexity. In addition, Intel Gaudi 3’s specialized media decoders are designed for AI vision applications. They can handle heavy pre-processing jobs, speeding up video-to-text conversion and improving the efficiency of enterprise AI applications.
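To put the six 800GbE ports in perspective, the arithmetic below converts the aggregate line rate to bytes per second. It then estimates an idealized transfer time. The 16 GB payload is a hypothetical example, and protocol overhead and achievable throughput are ignored, so real transfers will be slower.

```python
PORTS = 6
GBITS_PER_PORT = 800  # 800GbE OSFP ports, as quoted above


def aggregate_bandwidth_gbytes_s(ports=PORTS, gbits_per_port=GBITS_PER_PORT):
    """Aggregate line rate in gigabytes per second (8 bits per byte)."""
    return ports * gbits_per_port / 8


def transfer_time_ms(size_gb, bandwidth_gbytes_s):
    """Idealised transfer time at line rate, ignoring protocol overhead."""
    return size_gb / bandwidth_gbytes_s * 1000


if __name__ == "__main__":
    bw = aggregate_bandwidth_gbytes_s()
    print(f"Aggregate: {PORTS * GBITS_PER_PORT} Gb/s = {bw:.0f} GB/s")  # 4800 Gb/s = 600 GB/s
    # Hypothetical 16 GB payload, e.g. BF16 weights of an 8B-parameter model.
    print(f"16 GB at line rate: {transfer_time_ms(16, bw):.1f} ms")  # 26.7 ms
```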

With the Intel Gaudi 3, the Dell PowerEdge XE9680 represents a revolutionary advancement in AI development.

Dell and Intel’s partnership, embodied in the Dell PowerEdge XE9680 with the Intel Gaudi 3 AI accelerator, marks a turning point in AI computing. It meets the demands of today’s AI workloads while anticipating the industry’s future requirements. Through this relationship, technology experts will have access to cutting-edge tools that push the envelope in AI research and set new benchmarks for computational excellence and efficiency.

Are you prepared to take off with the Gaudi Accelerator? Through their Intel Developer Cloud offering, a limited number of clients may now start testing Intel’s accelerators thanks to a partnership between Dell and Intel. Find out more.

In the latest Forrester Wave study, Dell was named as a leader in artificial intelligence. Dell provides complete solutions for IT and data scientists to use AI and increase productivity, which creates end-to-end GenAI results. Dell can be your go-to counsel in accelerating your AI goals, regardless of where you are in the process.

Availability:

Intel is already sending samples to prospective clients, but wide availability is expected in Q3 2024.

PCIe add-in cards, a popular form factor for AI accelerators, are expected to be released in Q4 2024.

All things considered, Intel Gaudi 3 looks like a formidable competitor in the AI accelerator market, especially for companies that want to adopt generative AI. With its emphasis on performance, efficiency, and openness, it has the potential to reshape this rapidly developing field.

Read more on Govindhtech.com

#govindhtech#AI#intelgaudi3#gaudi#GenAI#NvidiaH100#news#TechNews2024#technologynews#technews#technology



NVIDIA Triton Speeds Oracle Cloud Inference!

Triton Speeds Inference

Thomas Park is an avid cyclist who understands the value of having multiple gears to keep a ride fast and smooth.

So when the software architect built an AI inference platform to serve predictions for Oracle Cloud Infrastructure’s (OCI) Vision AI service, he chose NVIDIA Triton Inference Server. Its appeal was its ability to handle almost any AI model, framework, hardware, and operating mode quickly and efficiently, shifting up, down, or sideways as needed.

The NVIDIA AI inference platform, according to Park, a competitive cyclist and computer engineer based in Zurich who has worked for four of the biggest cloud service providers in the world, “gives our worldwide cloud services customers tremendous flexibility in how they build and run their AI applications.”

Specifically, for OCI Vision and Document Understanding Service models that were migrated to Triton, inference latency fell by 51%, prediction throughput rose by 76%, and OCI’s total cost of ownership dropped by 10%. Park and a colleague reported these results in a blog post on Oracle’s site earlier this year. The services are available worldwide across more than 45 regional data centers.

Computer Vision Quickens Understanding

For a wide range of object identification and image classification tasks, customers rely on OCI Vision AI. To avoid making busy truckers wait at toll booths, a U.S.-based transportation agency, for example, utilizes it to automatically determine the number of vehicle axles going by to calculate and bill bridge tolls.

Oracle NetSuite, a suite of business applications used by more than 37,000 organizations worldwide, also uses OCI AI. One application is automating invoice recognition.

Park’s efforts have led other OCI services to adopt Triton as well.

A Triton-Aware Data Service

Tzvi Keisar, a director of product management for OCI’s Data Science service, which manages machine learning for Oracle’s internal and external users, stated, “We’ve built a Triton-aware AI platform for our customers.”

“We will save customers time by automatically completing the configuration work in the background and launching a Triton-powered inference endpoint for them if they want to use Triton,” added Keisar.

His team also plans to make it even easier for its other users to adopt the fast, flexible inference server. Triton is part of NVIDIA AI Enterprise, a platform available on OCI Marketplace that provides the security and support enterprises require.

An Enormous SaaS Platform

The machine learning foundation for NetSuite and Oracle Fusion software-as-a-service applications is provided by OCI’s Data Science service.

“These platforms are enormous, with tens of thousands of users building their work on top of our service,” he said.

The users are a broad mix, mostly enterprises in manufacturing, retail, transportation, and other sectors, and they are building and using AI models of almost every size and shape.

Inference was one of the group’s first offerings, and shortly after its launch, Triton caught the team’s attention.

An Unmatched Inference Framework

“We saw Triton rising in popularity as a best-in-class serving framework, so we started experimenting with it,” Keisar said. “We saw really strong performance, and it filled a gap in our existing offerings, especially for multi-model inference. It’s the most sophisticated and flexible inferencing framework available today.”

Since its launch on OCI in March, Triton has drawn interest from many internal Oracle teams that want to use it for inference jobs that require serving predictions from several AI models at the same time.

He said that Triton performed exceptionally well with several models deployed on a single endpoint.
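At its core, serving several models from one endpoint means routing each request by model name to the right loaded model. The toy class below is an illustration only and not Triton's code or client API. It routes on a URL path shaped like the KServe v2 inference protocol that Triton's HTTP endpoint follows (`/v2/models/<name>/infer`). The class name, the model names, and the lambda "models" are hypothetical placeholders. Real Triton also handles model versions, dynamic batching, and concurrent execution on GPUs, none of which is modeled here.

```python
from typing import Callable, Dict, List

Model = Callable[[List[float]], List[float]]


class MultiModelEndpoint:
    """Toy single endpoint that serves several named models (hypothetical)."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}

    def load(self, name: str, model: Model) -> None:
        self._models[name] = model

    def handle(self, path: str, inputs: List[float]) -> List[float]:
        # Accept only the unversioned form /v2/models/<name>/infer.
        parts = path.strip("/").split("/")
        if len(parts) != 4 or parts[:2] != ["v2", "models"] or parts[3] != "infer":
            raise ValueError(f"unsupported path: {path}")
        name = parts[2]
        if name not in self._models:
            raise KeyError(f"model not loaded: {name}")
        return self._models[name](inputs)


if __name__ == "__main__":
    endpoint = MultiModelEndpoint()
    # Hypothetical stand-ins for a vision model and a document model.
    endpoint.load("axle_counter", lambda xs: [float(sum(1 for x in xs if x > 0.5))])
    endpoint.load("invoice_scaler", lambda xs: [x / 100 for x in xs])
    print(endpoint.handle("/v2/models/axle_counter/infer", [0.9, 0.2, 0.7]))  # [2.0]
    print(endpoint.handle("/v2/models/invoice_scaler/infer", [150.0]))        # [1.5]
```

Keeping one process and one URL scheme for all models is what lets a platform add models without standing up new endpoints.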

Accelerating the Future

Going forward, Keisar’s group is testing the NVIDIA TensorRT-LLM program to accelerate inference on the intricate large language models (LLMs) that have piqued the interest of numerous users.

Keisar is a prolific blogger, and his most recent post described innovative quantization methods for using NVIDIA A10 Tensor Core GPUs to run a Llama 2 LLM with an astounding 70 billion parameters.

“The quality of model outputs is still quite good, even at four bits,” he said. “I can’t explain all the math, but we found a good balance, and I haven’t seen anyone else do this yet.”
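Four-bit weights matter here mostly because of memory. The sketch below counts weight memory for a 70-billion-parameter model at 16 and 4 bits and compares it with the 24 GB on an NVIDIA A10. It then shows a generic symmetric "absmax" 4-bit quantizer on one small group of weights. That quantizer is a textbook technique chosen for illustration and is not the method in Keisar's post, which this page does not describe. The GPU counts cover weights only. KV cache, activations, and quantization scales need extra memory, so real deployments need more.

```python
import math

GB = 1e9  # decimal gigabytes
A10_MEMORY_GB = 24
LLAMA2_70B_PARAMS = 70e9


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone (no KV cache, activations, or scales)."""
    return params * bits_per_weight / 8 / GB


def min_gpus_for_weights(params: float, bits_per_weight: int, gpu_gb: float = A10_MEMORY_GB) -> int:
    return math.ceil(weight_memory_gb(params, bits_per_weight) / gpu_gb)


def quantize_int4_absmax(values):
    """Generic symmetric 4-bit quantization of one group of weights."""
    scale = max(abs(v) for v in values) / 7 or 1.0  # map the largest |value| to 7
    q = [max(-8, min(7, round(v / scale))) for v in values]  # int4 range is [-8, 7]
    return q, scale


def dequantize(q, scale):
    return [x * scale for x in q]


if __name__ == "__main__":
    print(weight_memory_gb(LLAMA2_70B_PARAMS, 16))      # 140.0
    print(weight_memory_gb(LLAMA2_70B_PARAMS, 4))       # 35.0
    print(min_gpus_for_weights(LLAMA2_70B_PARAMS, 16))  # 6
    print(min_gpus_for_weights(LLAMA2_70B_PARAMS, 4))   # 2
    group = [0.12, -0.5, 0.33, 0.07]
    q, scale = quantize_int4_absmax(group)
    print(q)  # [2, -7, 5, 1]
    print(dequantize(q, scale))  # each value within scale/2 of the original
```

Cutting weights from 16 to 4 bits shrinks the minimum A10 count for the weights from six to two. The rounding error per weight stays within half a quantization step, which is why output quality can hold up.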

This is just the beginning of further acceleration efforts, following announcements this fall that Oracle is deploying the newest NVIDIA H100 Tensor Core GPUs, H200 GPUs, L40S GPUs, and Grace Hopper Superchips.

Read more on Govindhtech.com



Learning Azure’s GPU Future Strategy

Azure’s GPU future is confidential

Microsoft Azure continually innovates to improve security. One pioneering effort is its collaboration with hardware partners to create a silicon-based foundation that protects data in memory using confidential computing.

Data is created, computed on, stored, and moved. Customers already encrypt their data at rest and in transit, but they have not had the means to protect data in use at scale. Confidential computing is the missing third stage: it protects data in hardware-based trusted execution environments (TEEs), securing it throughout its lifecycle.

Microsoft co-founded the Confidential Computing Consortium (CCC) in September 2019 to protect data in use with hardware-based TEEs. TEEs protect data at all times by preventing unauthorized access to, or modification of, applications and data during computation. They guarantee data integrity, data confidentiality, and code integrity. Attestation and a hardware-based root of trust prove the system’s integrity and prevent administrators, operators, and hackers from accessing it.

For workloads that want extra security in the cloud, confidential computing is a foundational defense in depth capability. Verifiable cloud computing, secure multi-party computation, and data analytics on sensitive data sets can be enabled by confidential computing.

Confidential computing has recently become available for CPUs, but it is also needed for GPU-based scenarios that require high-performance computing and parallel processing, such as 3D graphics and visualization, scientific simulation and modeling, and AI and machine learning. Confidential computing makes it possible to run GPU workloads that process sensitive data and code in the cloud, in sectors including healthcare, finance, government, and education.

Azure has worked with NVIDIA for years to bring confidentiality to GPUs. As a result, Microsoft previewed Azure confidential VMs with NVIDIA H100 PCIe Tensor Core GPUs at Microsoft Ignite 2023. These virtual machines, together with the growing number of Azure confidential computing (ACC) services, will enable more public cloud innovations that use sensitive and restricted data.

GPU confidential computing unlocks use cases involving highly restricted datasets and model protection. In scientific simulation and modeling, confidential computing can run simulations and models on sensitive data such as genomic, climate, and nuclear data without exposing the data or code (including model weights) to unauthorized parties. This can help scientists collaborate and innovate while keeping their data protected.

Medical image analysis is another use case. Confidential computing allows healthcare professionals to analyze medical images such as X-rays, CT scans, and MRI scans with advanced image processing methods like deep learning, without exposing patient data or proprietary algorithms. Keeping data private and secure can improve the accuracy and efficiency of diagnosis and treatment, for example when models detect tumors, fractures, and other anomalies in medical images.

Given AI’s massive potential, confidential AI refers to a set of hardware-based technologies that provide cryptographically verifiable protection of data and models throughout their lifecycle, including while they are in use. Confidential AI covers several scenarios across the AI lifecycle:

Inference confidentiality. Protects model IP and inferencing requests and responses from model developers, service operations, and cloud providers.

Private multi-party computation. Without sharing models or data, organizations can train and run inferences on models and enforce policies on how outcomes are shared.

Training confidentiality. Model builders can hide model weights and intermediate data like checkpoints and gradient updates exchanged between nodes during training with confidential training. Confidential AI can encrypt data and models to protect sensitive information during AI inference.

Confidential computing building blocks

Meeting global data security and privacy demands requires a robust platform with confidential computing capabilities. That platform starts with innovative hardware, with confidential virtual machines and containers forming the core infrastructure service layers. These layers are essential for services to move to confidential AI, and these building blocks will enable an ecosystem of confidential GPU applications and AI models in the coming years.

Confidential Virtual Machines

Confidential Virtual Machines encrypt data in use, keeping sensitive data safe while being processed. Azure was the first major cloud to offer confidential Virtual Machines powered by AMD SEV-SNP CPUs with memory encryption that protects data while processing and meets the Confidential Computing Consortium (CCC) standard.

In the DCe and ECe virtual machines, Intel TDX-powered Confidential Virtual Machines protect data in use. These virtual machines use 4th Gen Intel Xeon Scalable processors to boost performance and enable seamless application onboarding without code changes.

Azure extends confidential virtual machines with confidential GPUs. Azure is the sole provider of confidential virtual machines with 4th Gen AMD EPYC processors, SEV-SNP technology, and NVIDIA H100 Tensor Core GPUs, offered in its NCC H100 v5 series. Two mechanisms protect data during processing: the encrypted, verifiable connection between the CPU and GPU, and memory protection. Together they keep data safe while it is processed, and outside CPU and GPU memory it is visible only as ciphertext.

Confidential containers

Containers are essential for confidential AI scenarios because they are modular, accelerate development/deployment, and reduce virtualization overhead, making AI/machine learning workloads easier to deploy and manage.

Azure innovated CPU-based confidential containers:

Serverless confidential containers in Azure Container Instances reduce the infrastructure that organizations must manage. Because the service manages infrastructure for them, it lowers the barrier to entry for burstable CPU-based AI workloads while protecting data privacy with container group-level isolation and AMD SEV-SNP-encrypted memory.

Azure now offers confidential containers in Azure Kubernetes Service (AKS) to meet customer needs. Organizations can use pod-level isolation and security policies to protect their container workloads while benefiting from Kubernetes’ cloud-native standards. Azure’s hardware partners AMD, Intel, and now NVIDIA have invested in the open-source Kata Confidential Containers project, a growing community.

These innovations must eventually be applied to GPU-based confidential AI.

Road ahead

Hardware innovations mature and replace existing infrastructure over time. Microsoft aims to integrate confidential computing seamlessly across Azure, including all virtual machine SKUs and container services. This includes data-in-use protection for confidential GPU workloads in more data and AI services.

Pervasive memory encryption across Azure’s infrastructure will enable organizations to verify cloud data protection throughout the data lifecycle eventually making confidential computing the norm.

Read more on Govindhtech.com

#Azure#GPU#MicrosoftAzure#Microsoft#CPUs#MachineLearning#NVIDIAH100#AI#Intel#VirtualMachines#AzureKubernetesService#AMD#4thGenIntelXeonScalableprocessors#technews#technology#govindhtech
