
to 12 year old me who wrote “i’m not worried about illumination doing the mario movie one bit at all anymore oh man oh yeah oh heck mr krabs” on a 3DS painting of the grinch .....we made it.....

#older pic is from oct/nov 2018!!! i was also 12 haha #these were both made on colors 3D for the 3DS (costs less than 10 dollars but isn’t free!) #i’m still sooooooo happy 🥺🥺🥺 #super mario #luigi #redraw/repaint? #vics silly art zone 2022 #vics silly art zone 2018 #10/10/22 #edited because the read more feature on tumblr mobile hates me


Industrial AI Applications – How Time Series and Sensor Data Improve Processes

(This article was written by TechEmergence Research and originally appeared on: https://www.techemergence.com/industrial-ai-applications-time-series-sensor-data-improve-processes/)

Over the past decade, the industrial sector has seen major advancements in automation and robotics applications. Automation in continuous process and discrete manufacturing and the use of robots for repetitive tasks are both relatively standard in most large manufacturing operations (this is especially true in industries like automotive and electronics).

One useful byproduct of this increased adoption has been that industrial spaces have become data rich environments. Automation has inherently required the installation of sensors and communication networks which can both double as data collection points for sensor or telemetry data.

According to Remi Duquette from Maya Heat Transfer Technologies (Maya HTT), an engineering products and services provider, huge industrial data volumes don’t necessarily lead to insight:

“Industrial sectors over the last two decades have become very data rich in collecting telemetry data for production purposes… but this data is what I call ‘wisdom-poor’… there is certainly lots of data, but little has been done to put it into productive business use… now with AI and machine learning in general we see huge impacts for leveraging that data to help increase throughput and reduce defects.”

Remi’s work at Maya HTT involves leveraging artificial intelligence to make valuable use of otherwise messy industrial data. From preventing downtime to reducing unplanned maintenance to reducing manufacturing error – he believes that machine learning can be leveraged to detect patterns and refine processes beyond what was possible with previous statistical-based software approaches and analytics systems.

During our interview, he explored a number of use-cases that Maya HTT is currently working on – each of which highlights a different kind of value that AI can bring to an existing industrial process.

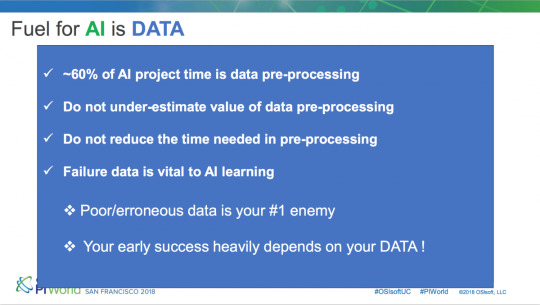

This point is reiterated in an important slide from one of his recent presentations at the OsiSoft PIWorld Conference in San Francisco:

Use Case: Discrete Manufacturing

Increasing throughputs and reducing turnaround times are some of the biggest business challenges faced by the manufacturing sector today – irrespective of industry.

One interesting trend here is that individual machine optimization has been the most common form of early engagement with AI for most manufacturers, but much more value can be gleaned from simultaneously optimizing the combined efficiencies of all the machines in a manufacturing line.

“There has been little that has been done to really help that industry to go beyond automation and statistical-based procedures, or optimizing individual machines.

What we find in our AI engagements is that – at the individual machine level – there is little that can be done, as it’s been fairly optimized… if we look at where AI can help significantly, it’s at looking at the production chain as a whole or combining different type of data to further optimize a single machine or a few machines together.”

In a typical discrete manufacturing environment today, we would likely find that there are over ten manufacturing stages and that data is collected at each stage from a plethora of devices. Remi explains that in such a scenario AI can today track correlations between many machines and identify patterns in the industrial data which can potentially lead to overall improved efficiency.

He adds some color here with a real-life example from one of Maya HTT’s clients. He elaborates that for one particular discrete manufacturer’s industrial process which faced high rejection rates, Maya HTT collected around 20,000 real-time telemetry variables and by analysing the data along with manufacturing logs and other non real-time production data, the AI platform was able to find what led to the high rejection rates.

“A discrete manufacturing process with over 10 manufacturing stages – each collecting tons of data. Yet towards the end of the process there were still high rejection rates… In this case we applied AI to look at various processes throughout this ‘food chain’.

There were 20,000 real-time telemetry variables collected, and by correlating them and combining them with production data, the AI was able to learn which combinations and variance led to the rejection rate. In this particular case the AI was able to remove over 70% of the rejection issues.”
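The idea of correlating telemetry variables with rejections can be sketched in a few lines. This is only a minimal illustration, not Maya HTT's method: it assumes a plain Pearson correlation as a stand-in for whatever the AI platform actually learned, and the variable names (`oven_temp_c`, `line_speed_mps`, `vibration_mm_s`) and all readings are invented for the example.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / math.sqrt(var_x * var_y)


def rank_by_rejection_correlation(telemetry, rejected):
    """Rank telemetry variables by |correlation| with the rejection flag."""
    scores = {name: pearson(values, rejected) for name, values in telemetry.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical readings, one value per produced piece (invented data).
telemetry = {
    "oven_temp_c": [200, 201, 199, 215, 218, 200, 216, 199],
    "line_speed_mps": [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 1.1],
    "vibration_mm_s": [2.0, 2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.0],
}
rejected = [0, 0, 0, 1, 1, 0, 1, 0]  # 1 = piece was rejected at the end of the line

ranking = rank_by_rejection_correlation(telemetry, rejected)
for name, score in ranking:
    print(f"{name}: {score:+.2f}")
```

With 20,000 variables, a pairwise ranking like this would at best be a first screening step; the quote describes learning combinations of variables, which a single-variable correlation cannot capture.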

This particular AI solution was able to quickly recognize faulty pieces so that machine operators could remove them from the process, reducing the work that the quality assurance team had to handle and leading to saved material and fewer work hours spent detecting or correcting faulty pieces.

What this means in real business terms is that the manufacturer used fewer raw materials and spent far fewer hours on rework, which translated to savings of about half a million dollars per production line per year. “We estimated a savings of over $500,000 per year in this particular case”, said Remi.

Use Case: Fleet Management

Another such use-case that Remi explored was in fleet management or freight operations in the logistics sector where maintaining high fuel efficiency for vehicles like trucks or ships is essential for cost-savings in the long run.

Remi explains that AI can enable ‘real-time’ fleet management which would otherwise be difficult to achieve with plain automation software. Patterns that AI identifies in data such as weather or temperature conditions, operational usage, and mileage can potentially be used to pick out anomalies and recommend the best route or speed for operating at the highest efficiency levels.

Remi agreed that no matter the mode of transportation used (trucks or ships, for example), some of the more common emerging use-cases for AI in logistics are around overall fuel consumption analysis for fleets.

“When you have a large fleet, it’s possible to mitigate a lot of loss by finding refinements in fuel use.” In cases of very large fleets, AI can simulate and potentially mitigate huge loss exposure by reducing fuel consumption for the fleet.

Use Case: Engineering Simulation

The last use-case that Remi touched on was 3D engineering simulations for design in the field of computational fluid dynamics.

Traditional simulation software can be fed input criteria like flow rates of fluids and can generate a 3D simulation which usually takes around 15 minutes to be rendered and involves a long, iterative, and often manual process.

Maya HTT’s team claims that it was able to deliver an AI tool for this situation that cut the time to get simulation results to less than 1 second. Maya HTT claims that this speedup of over 900x (from 15 minutes to 1 second) enabled design teams to explore far more design variants during the design process than was previously possible.
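The 900x figure follows directly from the two durations quoted above. A short sketch of the arithmetic, assuming results are produced one after another and ignoring any setup time (both assumptions of this example, not claims from the interview):

```python
# Durations quoted in the article.
baseline_seconds = 15 * 60  # traditional simulation: about 15 minutes
ai_seconds = 1              # AI tool: upper bound of "less than 1 second"

speedup = baseline_seconds / ai_seconds

# Design variants a team could evaluate in one hour, back to back.
variants_per_hour_before = 3600 // baseline_seconds
variants_per_hour_after = 3600 // ai_seconds

print(f"speedup: {speedup:.0f}x")
print(f"variants per hour: {variants_per_hour_before} before, {variants_per_hour_after} after")
```

Because the AI result arrives in under a second, 900x is a lower bound on the speedup.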

Phases of Implementation for Industrial AI

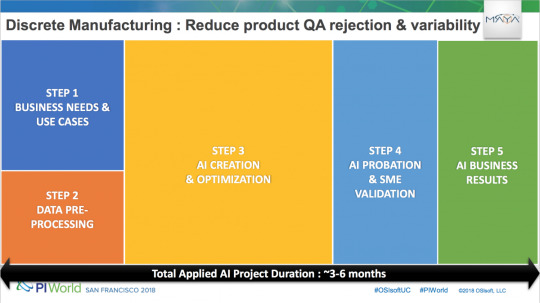

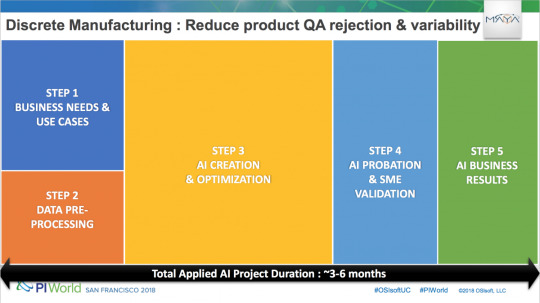

When asked about what business leaders in the manufacturing sector interested in adopting AI would need to do, Remi outlines a 5 step approach used at Maya HTT:

The first step would be a business assessment to make sure any target AI project will eventually lead to significant business impact.

Next, businesses would need to understand data source access and any data manipulations that may be needed in order to train and configure the AI platform. This may also involve restructuring the way data is collected in order to enhance compatibility.

The actual creation and optimization of an AI application.

Training and conclusions from AI need to be properly explained and understood. The operational AI agent is put to work and final tweaks are made to improve accuracy to desired levels.

Delivery and measurement of tangible business results from the AI solution.

In almost all sectors we cover, data preprocessing is an underappreciated (and often complex) part of an AI application process – and it seems evident that in the industrial sector, the challenges in this step could be significant.

A Look to the Future

Remi believes that for the time being, industrial AI applications are safely in the realm of augmenting workers’ skills as opposed to wholly replacing jobs:

“At least for the short-medium timeframe… we augment people on the shop floor in order to increase throughput and production rates.”

He also stated that the longer-term implications of AI in heavy industry involve more complete automation – situations where machines not only collect and find patterns in the data, but take direct action from those found patterns. For example:

A manufacturing line might detect tell-tale signs of error in a given product on the line (possibly from a visual analysis of the item, or from other information such as temperature and vibration as it moves through the manufacturing process), and remove that product from the line – possibly for human examination, or simply to recycle or throw out the faulty item.

A fleet management system might detect a truck with unusual telemetry data coming from its brakes, and automatically prompt the driver to pull into the nearest repair shop on the way to the truck’s destination – prompting the driver with an exact set of questions for the diagnosing mechanics.
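As a rough illustration of what detecting "unusual telemetry" could mean in the brake example, here is a minimal sketch using a z-score against recent history. The z-score rule, the threshold of 3, and the brake temperature readings are all assumptions made for this example; a production system would likely use a learned model over many signals.

```python
from statistics import mean, stdev


def is_anomalous(history, reading, z_threshold=3.0):
    """Flag a reading that lies more than z_threshold standard deviations from the history mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return reading != mu  # flat history: any deviation is unusual
    return abs(reading - mu) / sigma > z_threshold


# Hypothetical brake temperature history for one truck, in degrees Celsius.
brake_temp_history_c = [148, 152, 150, 149, 151, 150, 153, 147]

print(is_anomalous(brake_temp_history_c, 151))  # an ordinary reading
print(is_anomalous(brake_temp_history_c, 190))  # a reading far outside the usual range
```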

He mentions that these further developments towards autonomous AI action will involve much more development in the core technologies involved in industrial AI, and a greater period of time to flesh out the applications and train AI systems on huge sets of data over time.

Many industrial AI applications are still somewhat new and bespoke. This is due partially to the unique nature of manufacturing plants or fleets of vehicles (no two are the same), but it’s also due to the fact that AI hasn’t been applied in the domain of manufacturing or heavy industry for as long as it has been in the domains of marketing or finance.

As the field matures and the processes for using data and delivering specific results become more established, AI systems will be able to expand their function from merely detecting and “flagging” anomalies (and possibly suggesting next steps), to fully taking next steps autonomously when a specific degree of certainty has been achieved.
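The shift from flagging to acting can be pictured as a simple threshold policy on the system's confidence. The sketch below is hypothetical: the function name `next_action` and both threshold values are invented for illustration and do not come from the interview.

```python
def next_action(anomaly_confidence, act_threshold=0.95, flag_threshold=0.60):
    """Decide what the system does with a detected anomaly.

    Thresholds are illustrative assumptions: above act_threshold the system
    acts on its own, above flag_threshold it only alerts a human operator.
    """
    if anomaly_confidence >= act_threshold:
        return "act"     # e.g. remove the item from the line automatically
    if anomaly_confidence >= flag_threshold:
        return "flag"    # e.g. notify an operator and suggest next steps
    return "ignore"


for confidence in (0.97, 0.70, 0.20):
    print(confidence, next_action(confidence))
```

Lowering `act_threshold` over time is one way to picture the gradual move toward autonomy described above: the same detector is trusted with more decisions as its track record grows.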

About Maya HTT

Maya HTT is a leading developer of simulation CAE and DCIM software. The company provides training and digital product development, analysis, testing services, as well as IIoT, real-time telemetry system integrations, and applied AI services and solutions.

Maya HTT are a team of specialized engineering professionals offering advanced services and products for the engineering and datacenter world. As a Platinum level VAR and Siemens PLM development partner and OSIsoft OEM and PI System Integration partner, Maya HTT aims to deliver total end-to-end solutions to its clients.

The company is also a provider of specialized engineering services such as Advanced PLM Training, Specialized Engineering Consulting for Thermal, Flow and Structural projects as well as Software Development with extensive experience implementing NX Customization projects.


At the base $6,000 price, the 2019 Mac Pro is a terrible value, packing minimal specs like an 8-core processor, 580X graphics, 32 gigabytes of RAM, and a measly 256 gigs of storage.

So the question is: why in the world would Apple sell this computer at that price, who would pay up to fifty-three thousand dollars for it, and why? In this video, we're not only going to answer those questions, we'll also tell you seven specific things the Mac Pro offers that you can't find anywhere else.

But before we get into that and what we wish Apple did differently, we want to take a minute to show off some of the features that make it seriously impressive. First off, the design looks very unique and it's also very solid. Built out of mostly high-strength aluminum and stainless steel, the tower is smaller than expected yet weighs over 40 pounds on our lower-end config. Not only does the lattice pattern on the front and back look really cool and do an okay job at grating cheese, it also allows a massive amount of air to flow through the case while retaining its strength.

Taking the case off is shockingly easy, and the internals look really clean. The various locking mechanisms are engineered incredibly well, making it easier and quicker to replace components than on basically any other computer. You literally won't find a cable on the inside, because the Mac Pro doesn't use them: every single connection, from powering the fans to the graphics cards, happens with metal contact connectors. Even with that, the Mac Pro scored an impressive 9 out of 10 repairability score from iFixit.

The fans have a design that we haven't seen on any other machine, and it led to whisper-quiet performance even when we pushed our Mac Pro to the max in our thermal performance video. It packs a beast of a motherboard with 8 PCI Express card slots, 4 of them supporting full 16x speeds, as well as dual 10 Gigabit Ethernet. It supports up to 12 Thunderbolt 3 ports, 1.5 terabytes of RAM, and not just one but two of the most powerful graphics cards ever made.

The Mac Pro is basically the Lambo of computers. It's got the Lambo design, it's got Lambo performance, it's over-engineered like a Lambo, it's got a state-of-the-art system like a Lambo, and it's also priced like a Lambo. And just like a Lambo is obviously not meant for regular people who need to get from point A to point B, the Mac Pro isn't meant for the average or maybe even above-average computer user.

There's a reason coffee shops don't use consumer-grade espresso machines that you can buy for five hundred dollars. Sure, they can get the job done, but they're not going to perform as well and they're not going to be anywhere near as reliable. Even a coffee stand run by a small business is going to be using a commercial-grade espresso machine that could cost up to $10,000 and beyond. And that's what a workstation computer like the Mac Pro is: a commercial-grade machine that gets things done as fast and reliably as possible, and it doesn't come cheap.

There are companies, like studios that render movies like Frozen or Jumanji, that will literally spend millions of dollars every year just on paying their employees. So for any company that can utilize the power of the Mac Pro, it's actually not expensive. If buying a high-priced, reliable Mac Pro can help their employees save time by running their programs faster, they will actually save money in the long run through higher efficiency alone.

Now you might be thinking, why not just buy a reliable Windows workstation instead of a ripoff Mac Pro, right? Well, we actually made a video where we compared the Mac Pro to six other Windows workstation brands, and surprisingly the Mac Pro is actually a bit cheaper in most of the comparisons. On top of that, there are seven specific things the Mac Pro offers that you can't get anywhere else, things that we think will sell a lot of Mac Pros.

But first, let me give a huge shout out to Micro Center for making our Mac Pro content possible. Micro Center has 25 stores nationwide with an impressive variety of electronics, from gaming and VR to computer parts like processors, graphics cards, and everything else needed to build or upgrade a PC or Mac. Micro Center has been an Apple-authorized dealer since 1980, and they have a dedicated Apple department with highly trained Apple sales associates.

Aside from the iPhone, Micro Center carries the full line of Apple products, and they have the largest selection of third-party products made for Mac. Come into a local Micro Center today and talk to one of their Apple experts to order the specific Mac Pro configuration that best suits your needs. Check the link to find a location near you or browse all of Micro Center's Apple products.

Back to the Mac Pro. For those people or companies who use macOS-based apps and need the most performance they can get, the new Mac Pro is an answered prayer. Previously, the best performance they could get was an iMac Pro with an 18-core processor, 256 gigs of RAM, and a limiting Vega 64X graphics card. The new Mac Pro raises the bar to what most people would call overkill, like supporting up to a massive 1.5 terabytes of RAM and up to a 28-core processor. Even the Mac Pro's mid-range 16-core processor now outperforms the best 18-core option in the iMac Pro. Yes, the iMac Pro is a better value at the base price, but if you spec them up, the Mac Pro actually gives you better performance at a lower price.

Another thing that's exclusive to the Mac Pro is the Afterburner card, which boosts video editing performance when working with ProRes, the industry-standard professional codec for editing video. We tested it out with our $13,000 Mac Pro, and during playback of 8K ProRes footage we noticed that CPU usage went from around 70 percent down to 2 percent, meaning that the Afterburner card was taking care of the workload. We also saw improved render times in various tests, especially with 8K ProRes. And of course, you can't get the Afterburner card on any other machine, and it won't work in an eGPU either.

If you want to see a detailed review of the Afterburner card, use the link. Another thing you can't get anywhere else is an incredible amount of expandability together with the great reliability of macOS, like getting up to 12 Thunderbolt 3 ports and dual 10 Gigabit Ethernet while still having extra PCI Express slots left over.

Now some of you may disagree that macOS is more reliable than Windows, but last year Rescuecom, a computer repair service, revealed data showing the number of repair calls they received per computer brand, and Apple had the highest reliability score by far. And in 2015, IBM switched out 90,000 PCs for Macs and found that employees had to call in for tech support around half as often as when they previously used PCs, and 73% of their employees said they wanted another Mac when it was time to upgrade.

There's also the option of building a Hackintosh to get upgradeability and macOS together, but companies won't do that since it's illegal, and for small businesses like ours a Hackintosh is a pain in the reliability department. We actually used two of them a few years ago and spent way too much time troubleshooting instead of working, so we switched them out for genuine Macs.

Another thing you can't get on any other branded system is an incredibly silent cooling system. In our testing, our mid-range Mac Pro model was the only computer we've ever tested that can run both a CPU stress test and a graphics stress test at the same time without the fans audibly kicking up at all. It's so quiet that it almost feels unnatural, and there are people who will pay for that.

We also think Apple's $6,000 Pro Display XDR is going to sell a lot of Mac Pros, simply because it's a better deal than a lot of the other professional reference monitors out there and is packing a much higher 6K resolution. Apple's graphics cards are the only ones out there that have the Thunderbolt 3 ports required to power the Pro Display XDR, so even if you were to somehow connect the display to a Windows workstation PC, it would most likely be limited in one way or another, like only working at 4K resolution or not supporting 10-bit color or HDR, defeating the purpose of the display.

And finally, AMD built the most powerful single graphics card in the world, the Vega II Duo, exclusively for the Mac Pro. It offers 64 gigabytes of HBM2 memory and 28.4 teraflops of performance, compared to the more expensive Quadro RTX 8000 with 48 gigabytes of slower memory and 16.3 teraflops of performance. Technically the Vega II Duo has two GPUs on one card, but with Infinity Fabric Link and Apple's Metal 2 framework they work as a single GPU, similar to a RAID 0 hard drive setup, doubling both the memory and the bandwidth. So basically, if you want this level of graphics and memory performance, you have to get a Mac, and it has to be the Mac Pro.

Apple sent a 3D graphics studio, Lunar Animation, a new Mac Pro to test out for their work, and that's actually what they used to do 3D animation for the latest Jumanji movie. They said they love the Mac Pro because the 32 gigs of memory in the graphics card allowed them to get over five times more frames per second during playback, which allowed them to review, change, and preview all of their work at, quote, "lightning speed" while avoiding the need to create proxy textures and models.

And the best part is that they weren't using a $53,000 Mac Pro, which a lot of people are led to believe is the price of the Mac Pro. The model that they received was actually under $20,000. So in reality, if you're working with graphics, a twelve- or sixteen-core with 192 gigs of RAM will honestly be enough, just like what Lunar Animation received. For programmers, photo editors, or Logic Pro users, the base 580X graphics will be perfectly fine, and if you're connecting to a NAS server like we do, the base 256 gigs of storage is enough for most. For example, our iMac Pro is still only using around 110 gigabytes of storage, but we still think Apple should have given us a one-terabyte SSD as standard. Realistically, most high-end professional configurations will likely average around $15,000. Now that's not cheap, but it's far from the outcry about the $53,000 Mac Pro, and a decent value compared to other workstations.

The biggest complaints we hear from PC enthusiasts are that the Mac Pro doesn't use PCI Express 4.0 and that the Xeon CPUs' price-to-performance is much worse than that of the just-released chips from AMD, and we completely agree with that. Now, this really isn't Apple's fault but Intel's, for not innovating while charging a ton of money. While we wish Apple used those brand-new AMD processors, this Mac Pro has been in the works for multiple years, so Apple would have had to delay the Mac Pro to switch to AMD processors. But for the future, we seriously hope Apple will make the switch.

Now, the low-end $6,000 model is honestly a horrible value unless you plan on upgrading it yourself, but there's a reason for that price. Adjusting for inflation, Apple's high-end desktops have always started at around $3,000, but this year it starts at double the price with specs that seem a bit underwhelming. There's actually a sensible reason for it, so let me explain. The previous Mac Pros ran into various limitations, like the 2012 model not having enough PCI Express slots or having a power supply so weak that it forced users to rig up external power supplies to run multiple graphics cards. The 2013 trashcan Mac Pro also ran into major thermal limitations, not being able to keep the graphics cards cool. But this time around, Apple made sure that every Mac Pro comes equipped to properly sustain a maxed-out system, featuring a massive 1,400-watt power supply, which is utterly overkill for the base model.

Every Mac Pro also packs a top-of-the-line motherboard with tons of room for upgradeability and a cooling system that's built to properly cool a 28-core, four-GPU system while staying quiet. Yes, the Mac Pro is expensive, but it gives you a lot of options, both now and later. If you're a small business owner who wants to get the most value, you can buy the base model, upgrade it yourself, and save thousands of dollars, while big corporations can configure the Mac Pro how they want it right out of the gate. And in terms of upgradeability, it's incredibly quick and easy. Like we showed in our "Everything You Can Upgrade on the Mac Pro" video, we were able to easily add in our own M.2 SSD, save over $2,000 on 192 gigs of RAM, and add in our own graphics cards, and we even removed the socketed CPU and found better-value options you can upgrade to.

A part of the high base price is Apple trying to get a little extra profit, since they are losing money by allowing you to upgrade everything yourself. But even then, it's not that high once you consider market-value prices for all the parts. A similar motherboard packing close to the same features would cost two thousand dollars alone. The Mac Pro's custom-built and extremely solid case is comparable to cases priced close to $1,000, and those are still cheaper and easier to manufacture than the Mac Pro's. That's close to $3,000 already without mentioning any of the performance or silent cooling components, and adding in the rest of the base parts brings the total price up to about five thousand dollars. So the Apple tax isn't really as bad as people think.

Whether you're buying a base model and upgrading it yourself or buying an already specced-up model from Apple, the beauty of the Mac Pro is that you're getting a combination of three different things: high performance right now, upgradeability for years down the road, and reliable macOS software to keep your work running efficiently. Based on the feedback from businesses with a high budget and workloads demanding enough to warrant such powerful machines, along with the fact that both the Mac Pro and the Pro Display XDR are back-ordered by between three weeks and two months, we would say that the new Mac Pro is a big success for Apple.

If you enjoyed this review, make sure to check out some of our other reviews right over here and below, in case you have any queries on this review. We'd like to thank Micro Center for helping make this review possible. Thanks for reading this blog, and we'll see you in the next interesting review on the latest products.


Industrial AI Applications – How Time Series and Sensor Data Improve Processes

(This article was written by TechEmergence Research and originally appeared on: https://www.techemergence.com/industrial-ai-applications-time-series-sensor-data-improve-processes/)

Over the past decade the industrial sector has seen major advancements in automation and robotics applications. Automation in both continuous process and discrete manufacturing, as well as the use of robots for repetitive tasks are both relatively standard in most large manufacturing operations (this is especially true in industries like automotive and electronics).

One useful byproduct of this increased adoption has been that industrial spaces have become data rich environments. Automation has inherently required the installation of sensors and communication networks which can both double as data collection points for sensor or telemetry data.

According to Remi Duquette from Maya Heat Transfer Technologies (Maya HTT), an engineering products and services provider, huge industrial data volumes don’t necessarily lead to insight:

“Industrial sectors over the last two decades have become very data rich in collecting telemetry data for production purposes… but this data is what I call ‘wisdom-poor’… there is certainly lots of data, but little has been done to put it into productive business use… now with AI and machine learning in general we see huge impacts for leveraging that data to help increase throughput and reduce defects.”

Remi’s work at Maya HTT involves leveraging artificial intelligence to make valuable use of otherwise messy industrial data. From preventing downtime to reducing unplanned maintenance to reducing manufacturing error – he believes that machine learning can be leveraged to detect patterns and refine processes beyond what was possible with previous statistical-based software approaches and analytics systems.

During our interview, he explored a number of use-cases that Maya HTT is currently working on – each of which highlights a different kind of value that AI can bring to an existing industrial process.

This point is reiterated in an important slide from one of his recent presentations at the OsiSoft PIWorld Conference in San Francisco:

Use Case: Discrete Manufacturing

Increasing throughputs and reducing turnaround times are some of the biggest business challenges faced by the manufacturing sector today – irrespective of industry.

One interesting trend here was that individual machine optimization was the most common form of early engagement with AI for most manufacturers – and much more value can be gleaned from simultaneously optimizing the combined efficiencies of all the machines in a manufacturing line.

“There has been little that has been done to really help that industry to go beyond automation and statistical-based procedures, or optimizing individual machines.

What we find in our AI engagements is that – at the individual machine level – there is little that can be done, as it’s been fairly optimized… if we look at where AI can help significantly, it’s at looking at the production chain as a whole or combining different type of data to further optimize a single machine or a few machines together.”

In a typical discrete manufacturing environment today, we would likely find that there are over ten manufacturing stages and that data is collected at each stage from a plethora of devices. Remi explains that in such a scenario AI can today track correlations between many machines and identify patterns in the industrial data which can potentially lead to overall improved efficiency.

He adds some color here with a real-life example from one of Maya HTT’s clients. He elaborates that for one particular discrete manufacturer’s industrial process which faced high rejection rates, Maya HTT collected around 20,000 real-time telemetry variables and by analysing the data along with manufacturing logs and other non real-time production data, the AI platform was able to find what led to the high rejection rates.

“A discrete manufacturing process with over 10 manufacturing stages – each collecting tons of data. Yet towards the end of the process there were still high rejection rates… In this case we applied AI to look at various processes throughout this ‘food chain’.

There were 20,000 real-time telemetry variables collected, and by correlating them and combining them with production data, the AI was able to learn which combinations and variance led to the rejection rate. In this particular case the AI was able to remove over 70% of the rejection issues.”

This particular AI solution was able to quickly recognize faulty pieces so the machine operators could remove them from the process thereby reducing the work that the quality assurance team had to handle and leading to saved material and less real work hours spent detecting or correcting faulty pieces.

What this means in real business terms is that the manufacturer used fewer raw materials, had a lot less rework hours, which essentially translated to saving of half a million dollars per production line per year. “We estimated a savings of over $500,000 per year in this particular case”, said Remi.

Use Case: Fleet Management

Another such use-case that Remi explored was in fleet management or freight operations in the logistics sector where maintaining high fuel efficiency for vehicles like trucks or ships is essential for cost-savings in the long run.

Remi explains that AI can enable ‘real-time’ fleet management which would otherwise be difficult to achieve with plain automation software. Various patterns identified by AI from data, like weather or temperature conditions, operational usage, mileage, etc can potentially be used to pick out anomalies and recommend what the best route or speed would be in order to operate at the highest efficiency levels.

Remi agreed that no matter the mode of transportation used (trucks or ships, for example), some of the more common emerging use-cases for AI in logistics are around overall fuel consumption analysis for fleets.

“When you have a large fleet, it’s possible to mitigate a lot of loss by finding refinements in fuel use.” In cases of very large fleets, AI can simulate and potentially mitigate huge loss exposure by reducing fuel consumption for the fleet.

Use Case: Engineering Simulation

The last use-case that Remi touched on was 3D engineering simulations for design in the field of computational fluid dynamics.

Traditional simulation software takes input criteria such as fluid flow rates and generates a 3D simulation, which usually takes around 15 minutes to render. Exploring a design this way is a long, iterative, and often manual process.

Maya HTT’s team claims that it delivered an AI tool for this situation that cut the time needed to get simulation results to less than 1 second. Maya HTT claims that this speedup of over 900x (from 15 minutes to under 1 second) enabled design teams to explore far more design variants than previously possible.
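The 900x figure follows directly from the two run times quoted above. A short check, assuming exactly 15 minutes per traditional run and exactly 1 second per AI-assisted run (the claimed upper bound):

```python
baseline_seconds = 15 * 60   # traditional simulation: about 15 minutes
ai_seconds = 1.0             # AI tool: claimed to take under 1 second

speedup = baseline_seconds / ai_seconds

# Design variants a team could evaluate per hour of compute in each case.
variants_per_hour_before = 3600 / baseline_seconds
variants_per_hour_after = 3600 / ai_seconds

print(f"speedup: {speedup:.0f}x")
print(f"variants per hour: {variants_per_hour_before:.0f} -> {variants_per_hour_after:.0f}")
```

Because the claimed AI run time is below 1 second, 900x is a lower bound on the speedup under these assumptions.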

Phases of Implementation for Industrial AI

When asked what business leaders in the manufacturing sector would need to do to adopt AI, Remi outlined a five-step approach used at Maya HTT:

The first step would be a business assessment to make sure any target AI project will eventually lead to significant business impact.

Second, businesses would need to understand what data source access and data manipulation may be needed in order to train and configure the AI platform. This may also involve restructuring the way data is collected in order to enhance compatibility.

The third step is the actual creation and optimization of an AI application.

Fourth, the AI’s training and conclusions need to be properly explained and understood. The operational AI agent is then put to work, and final tweaks are made to bring accuracy up to the desired level.

The final step is the delivery and measurement of tangible business results from the AI solution.

In almost all sectors we cover, data preprocessing is an underappreciated (and often complex) part of an AI application process – and it seems evident that in the industrial sector, the challenges in this step could be significant.

A Look to the Future

Remi believes that for the time being, industrial AI applications are safely in the realm of augmenting workers’ skills as opposed to wholly replacing jobs:

“At least for the short-medium timeframe… we augment people on the shop floor in order to increase throughput and production rates.”

He also stated that the longer-term implications of AI in heavy industry involve more complete automation – situations where machines not only collect and find patterns in the data, but take direct action from those found patterns. For example:

A manufacturing line might detect tell-tale signs of error in a given product on the line (possibly from a visual analysis of the item, or from other information such as temperature and vibration as it moves through the manufacturing process), and remove that product from the line – possibly for human examination, or simply to recycle or throw out the faulty item.

A fleet management system might detect a truck with unusual telemetry data coming from its brakes, and automatically prompt the driver to pull into the nearest repair shop on the way to the truck’s destination, supplying the driver with an exact set of questions for the diagnosing mechanics.

He mentions that these further developments towards autonomous AI action will involve much more development in the core technologies involved in industrial AI, and a greater period of time to flesh out the applications and train AI systems on huge sets of data over time.

Many industrial AI applications are still somewhat new and bespoke. This is due partially to the unique nature of manufacturing plants or fleets of vehicles (no two are the same), but it’s also due to the fact that AI hasn’t been applied in the domain of manufacturing or heavy industry for as long as it has been in the domains of marketing or finance.

As the field matures and the processes for using data and delivering specific results become more established, AI systems will be able to expand their function from merely detecting and “flagging” anomalies (and possibly suggesting next steps), to fully taking next steps autonomously when a specific degree of certainty has been achieved.
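The move from flagging to acting once a degree of certainty is reached can be sketched as a simple threshold rule. The function name, action labels, and threshold values below are hypothetical and chosen only for illustration; real systems would set them per process and risk level.

```python
def decide_action(anomaly_probability, flag_threshold=0.5, act_threshold=0.95):
    """Map a model's anomaly probability to an action.

    Below flag_threshold the item continues down the line; between the two
    thresholds a human operator is alerted; at or above act_threshold the
    system acts on its own.
    """
    if not 0.0 <= anomaly_probability <= 1.0:
        raise ValueError("anomaly_probability must be between 0 and 1")
    if anomaly_probability >= act_threshold:
        return "remove_from_line"
    if anomaly_probability >= flag_threshold:
        return "flag_for_operator"
    return "continue"

for probability in (0.10, 0.70, 0.99):
    print(probability, decide_action(probability))
```

Keeping the autonomous threshold well above the flagging threshold reflects the article’s point that full automation is reserved for cases with high certainty.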

About Maya HTT

Maya HTT is a leading developer of simulation CAE and DCIM software. The company provides training and digital product development, analysis, testing services, as well as IIoT, real-time telemetry system integrations, and applied AI services and solutions.

Maya HTT is a team of specialized engineering professionals offering advanced services and products for the engineering and datacenter world. As a Platinum level VAR and Siemens PLM development partner and OSIsoft OEM and PI System Integration partner, Maya HTT aims to deliver total end-to-end solutions to its clients.

The company is also a provider of specialized engineering services such as Advanced PLM Training, Specialized Engineering Consulting for Thermal, Flow and Structural projects as well as Software Development with extensive experience implementing NX Customization projects.
