Text

AI and ML in the European Pharmaceutical Industry: Recent Applications, Challenges, and Response to the Pandemic

Innovations in the pharmaceutical industry proved to be a tall order, as drug development's success rate has been traditionally tremendously low. However, the COVID-19 pandemic whipped up R&D centers, markets, and leaders to develop the response to the crisis faster than ever. AI and ML bring to the table a toolbox to overcome new challenges in the whole industry. Significant that the Healthcare Artificial Intelligence market is expected to reach $51.3 billion by 2027 at CAGR (compound annual growth rate) of 41.4% starting from 2020. Check out this fresh guide on the latest updates that AI and machine learning brought to the pharmaceutical market in the EU, including recent applications and the most significant challenges.

AI and Pharma at the “Slope of Enlightenment”

Recent AI and ML applications in healthcare and pharma proved that these technologies are reaching the 'Slope of Enlightenment' in the Gartner Hype Cycle. It seems like the industry has no choice at the moment. Per Subroto Mukherjee, Head of Innovation and Emerging Technology at GlaxoSmithKline, the development of the new vaccines against coronavirus would take from eight to ten years before. In contrast, the ones available now took 300 days from the start of development to the first testings. The rationale of using ML in the industry is to lower attrition and costs while increasing the success rate for new drug development.

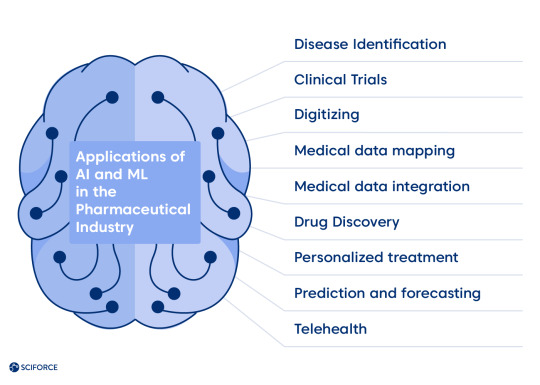

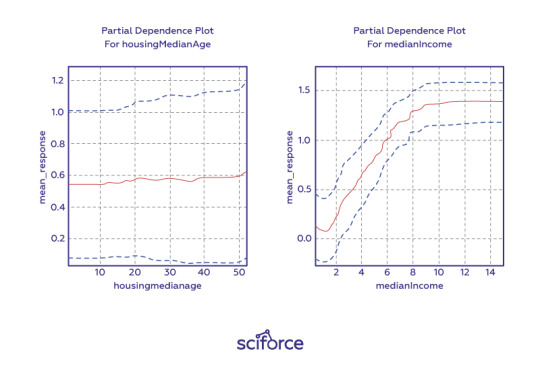

Hence, the power of using ML algorithms to parse significant amounts of data to learn from it and make determination or prediction about the future of the new data sets come to the scene. The algorithms show higher performance with the increase of quantity and quality of data, leading to the question of data regulation, which we cover further. Data collected like images, texts, biometrics, assay, and other information from wearables stand as the field for developing the new models or formulas that are still unknown but could bring crucial changes. Practically there has been next proven applications of AI and ML in the market:

Disease Identification — reaching all the possible areas where data is available.

Clinical Trials — matching the ideal candidate for a trial based on their data.

Digitizing — facilitating conversion from paper/image medical info into fully structured digital data via optical character recognition (OCR) and natural language processing (NLP).

Medical data mapping — enabling medical research and development by standardizing data formats.

Medical data integration — helping medical data owners sell/give access to their data to research pharma companies via Extract/Transform/Load (ETL) technology.

Drug Discovery — components screening, RNA and DNA fast measurement, personalized medication development.

Personalized treatment — enhancing the diagnostic accuracy of healthcare providers.

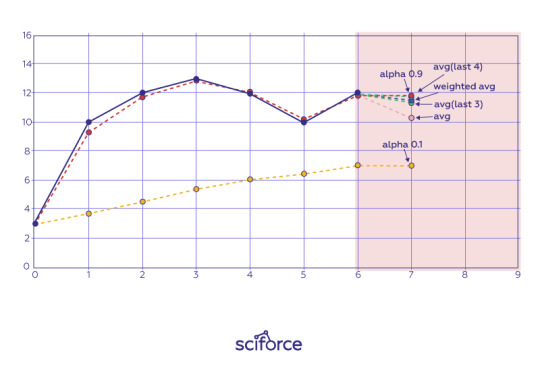

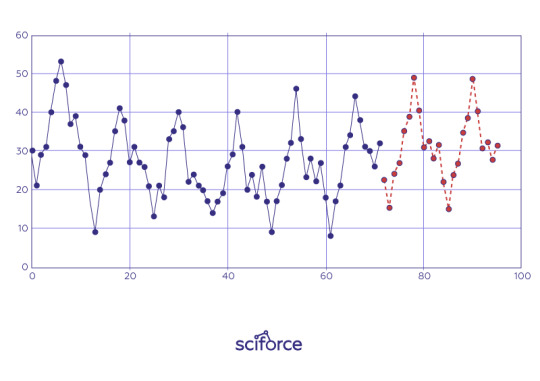

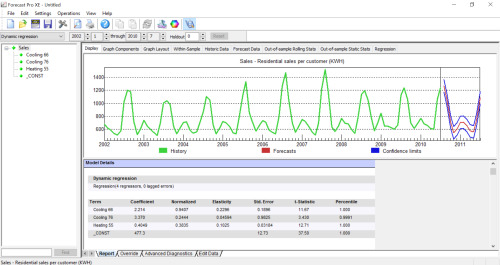

Prediction and forecasting — monitoring the seasonal illnesses globally and facilitating early diagnostics and precision treatment via data analysis.

Telehealth — helping caretakers in delivering treatment remotely via mobile/web development

Recent Use Cases in the European Pharma and Healthcare

To illustrate the mentioned applications, see how the European companies resolve the scientific problems delivering faster pharmaceutical, bispecific target diseases treatments, and rare disease patients treatment matching using machine learning. The UK and France-based companies are on our list this time.

Tessella is a data science consultancy offering AI and data science services by building machine learning models. They claim to have helped GlaxoSmithKline (GSK) improve the salt and polymorph screening process of the drugs' development. It helps to find the best physical form of the new drug substance. The company developed an ML model that automates medicine preparation processes like liquid addition and mixing, heating and cooling, shaking, sample transfer, and solid dispensing.

Healx has developed HealNet, software helping in matching rare disease patients with appropriate drug treatments. Their ML algorithms are built on a database consisting of publicly available data and specific sources, including clinical trials, symptoms data, chemical structure, drug targets, patents, and scientific literature.

Exscientia claims their software can discover small molecules and compounds treating bispecific target diseases. This solution is using an ML model that predicts the specific development of the bispecific diseases.

Owkin claims their solution, Socrates database helps in creating predictive models for drug development optimization.

GlaxoSmithKline, a UK-based multinational company, applied the ML in predictive forecasting for some popular seasonal brands. It helps to foresee the possible peaks and troughs of coming cold and flu or allergy in a specific region, assisting local authorities to deliver an effective health communications campaign.

Challenges of Big Data for Big Pharma

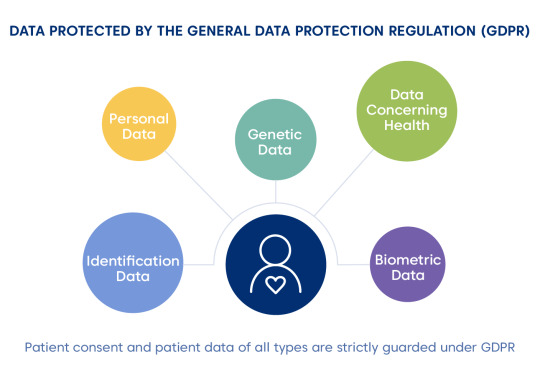

Statistical technology depends on the data quality to generate meaningful and impactful results. Hence, pharmaceutical and healthcare companies should balance the EU's General Data Protection Regulation (GDPR), starting from 2018. It is also still applicable to the UK, which is leaving the EU. The data, which is under GDPR patient's consent, includes the following categories:

Identification data: any information identifies an individual (name, address, ID number, email, social media accounts, etc.).

Personal data: data relating to a person's physical, genetic, physiological, mental, cultural, economic, or social identity.

Biometric data: any data including an individual's physical, behavioral or physiological information.

Genetic data: data of the acquired genetic or the inherited characteristics, any data from a biologic sample.

These regulations hold the extraterritorial reach, which means that any global business dealing with EU customers should consider this aspect. Noncompliance to the GDPR leads to 4% of annual global revenue or €20 million fine. It does not sound inspirational, is it?

Per David Champagne, a member of the McKinsey Digital Practice, et al. in their article devoted to realizing machine learning potential, there is a way to escape a bottleneck. Pharmaceutical companies can move through the regulatory landscape effectively. In the meantime, all the actors should consider these points:

Data quality is a crucial success factor, and we all need judicious consideration of data usage.

Transparency: leaving behind the 'black box' approach and explaining the 'magic' elements to the key stakeholders.

Credibility: results should be consistent with domain expertise and established science.

Fairness: avoiding the biases of the clinical and social environments.

Impact: ability to quantify the results as more productive than previous practices.

Reproducibility of the results: algorithms should still perform while dealing with real-time data.

AI and ML vs. COVID-19

Recent studies worldwide are experimenting with AI and ML techniques for decision-making in treatment, recovery prediction, and patients' prioritizing. The pandemic also triggered telehealth development, as a recent case in Spain shows the effect of using patients' data for predicting whether a person needs immediate intensive care unit admission. Read more on AI and ML grappling pandemic in our recent post.

Per Subroto Mukherjee, AI and machine learning can hold their role in the fight with the pandemic by finding out coronavirus's biological secret. The crisis affected not only drug development but also a global supply chain. AI's power of planning, forecasting, automation, and collaboration can also unleash supply companies' management.

Such applications as natural language processing and computer vision apply to current initiatives. The US White House, with the help of the AI community, started the process of medical literature mining to understand a coronavirus's nature. Medical imaging companies are already using CT image processing to detect coronavirus-induced pneumonia.

Wrapping Up

Machine learning and AI are demonstrating transformative power on the European pharmaceutical and healthcare market. Meanwhile, it is a domain that still needs to find out optimal modus operandi concerning privacy and clarity to all the actors. To meet all the criteria and ensure progress in a current global fight with the pandemic, we should apply the known tools considering the guidelines. Daring to bring positive results in exponential growth using AI and machine learning is real. We have checked it out.

#artificial intelligence#machine learning#healthcare#health tech#data science#pharmaindustry#pharmacy#covid 19

0 notes

Text

Reinforcement Learning and Asynchronous Actor-Critic Agent (A3C) Algorithm, Explained

While supervised and unsupervised machine learning is a much more widespread practice among enterprises today, reinforcement learning (RL), as a goal-oriented ML technique, finds its application in mundane real-world activities. Gameplay, robotics, dialogue systems, autonomous vehicles, personalization, industrial automation, predictive maintenance, and medicine are among RL's target areas. In this blog post, we provide a concrete explanation of RL, its applications, and Asynchronous Actor-Critic Agent (A3C), one of the state-of-the art algorithms developed by Google's DeepMind.

Key Terms and Concepts

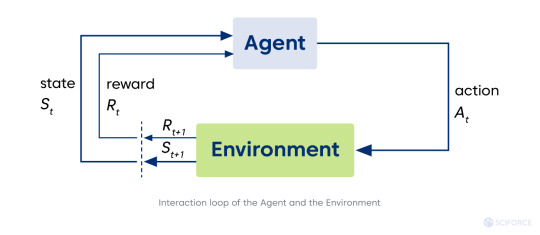

Reinforcement learning refers to the type of machine learning technique enabling an agent to learn to interact with an environment (area outside the agent's borders) by trial and error using reward (feedback from its actions and experiences). The agent seeks ways to maximize the reward via interacting with the environment instead of analyzing the data provided.

The agent is a learning controller taking actions in the environment and receives feedback in the form of reward.

The environment, space where the agent gets everything needed from a given state. The environment can be static or dynamic, and its changes can be stochastic and deterministic correspondingly. It is usually formulated as Markov decision process (MDP), a mathematical framework for decision-making development.

However, real-world situations often do not convey information to commit a decision (some context is left behind the currently observed scene). Hence, the Partially Observable Markov Decision Processes (POMDPs) framework comes on the scene. In POMDP the agent needs to take into account probability distribution over states. In cases where it’s impossible to know that distribution, RL researchers use a sequence of multiple observations and actions to represent a current state (i.e., stack of image frames from a game) to better understand a situation. It makes possible to use RL methods as if we are dealing with MDP.

The reward is a scalar value that agents receive from the environment, and it depends on the environment’s current state (St ), the action the agent has performed grounding on the current state (At ), and the following state of the environment (St+1):

Policy (π) stands for an agent’s strategy of behavior at a given time. It is a mapping from the state to the actions to be taken to reach the next state. Speaking formally, it is a probability distribution over actions in a given state, meaning the likelihood of every action in a particular state. In short, policy holds an answer to the “How to act?” question for an agent.

State-value function and action-value function are the ways to assess the policy, as RL aims to learn the best policy. Value function V holds an answer to the question “How good current state is?”, namely an expected return starting from the state (S) and following policy (π).

Sebastian Dittert defines the action-value of a state as “the expected return if the agent chooses action A according to a policy π.” Correspondingly, it is the answer to “How good current action is?”

Thus, the goal of an agent is to find the policy () maximizing the expected return (E[R]). Through the multiple iterations, the agent’s strategy becomes more successful.

One of the most crucial trade-offs for RL is balancing between exploration and exploitation. In short, exploration in RL aims at collecting experience from new, previously unseen regions. It potentially holds cons like a risk, nothing new to learn, and no guarantee to get any useful further information. On the contrary, exploitation updates model parameters according to gathered experience. In its turn, it does not provide any new data and could not be efficient in case of scarce rewards. An ideal approach is making an agent explore the environment until being able to commit an optimal decision.

Reinforcement Learning vs. Supervised and Unsupervised Learning

Comparing RL with AI planning, the latter does cover all aspects, but not the exploration. It leads to computing the right sequence of decisions based on the model indicating the impact on the environment.

Supervised machine learning involves only optimization and generalization via learning from the previous experience, guided with the correct labels. The agent is learning from its experience based on the given dataset. This ML technique is more task-oriented and applicable for recognition, predictive analytics, and dialogue systems. It is an excellent option to solve the problems having the reference points or ground truth.

Similarly, unsupervised machine learning also involves only optimization and generalization but having no labels referring to the environment. It is data-oriented and applicable for anomaly and pattern discovery, clustering, autoencoders, association, and hyper-personalization pattern of AI.

Asynchronous Advantage Actor-Critic (A3C) Algorithm

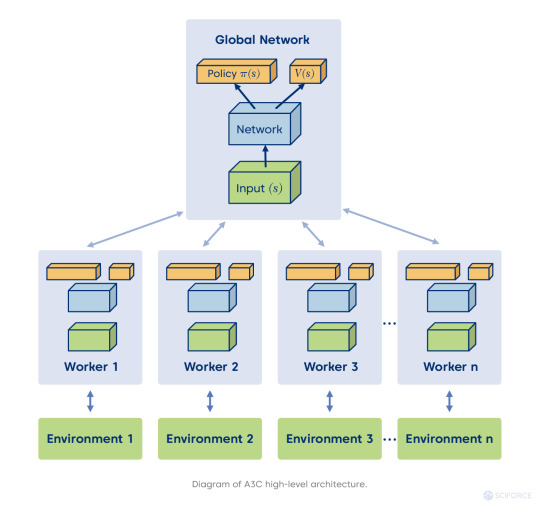

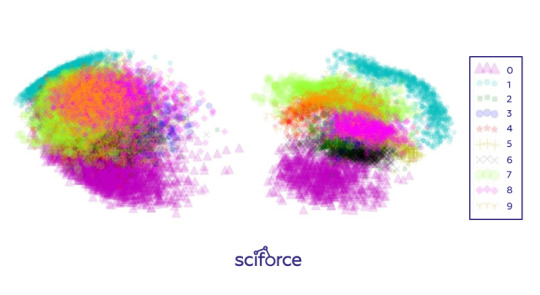

The A3C algorithm is one of RL's state-of-the-art algorithms, which beats DQN in few domains (for example, Atari domain, look at the fifth page of a classic paper by Google Deep Mind). Also, A3C can be beneficial in experiments that involve some global network optimization with different environments in parallel for generalization purposes. Here is the magic behind it:

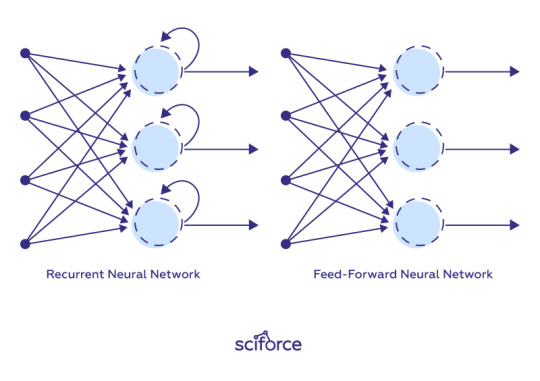

Asynchronous stands for the principal difference of this algorithm from DQN, where a single neural network interacts with a single environment. On the contrary, in this case, we've got a global network with multiple agents having their own set of parameters. It creates every agent's situation interacting with its environment and harvesting the different and unique learning experience for overall training. That also deals partially with RL sample correlation, a big problem for neural networks, which are optimized under the assumption that input samples are independent of each other (not possible in games).

Actor-Critic stands for two neural networks — Actor and Critic. The goal of the first one is in optimizing the policy (“How to act?”), and the latter aims at optimizing the value (“How good action is?”). Thus, it creates a complementary situation for an agent to gain the best experience of fast learning.

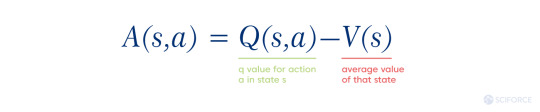

Advantage: imagine that advantage is the value that brings us an answer to the question: “How much better the reward for an agent is than it could be expected?” It is the other factor of making the overall situation better for an agent. In this way, the agent learns which actions were rewarding or penalizing for it. Formally it looks like this:

Q(s, a) stands for the expected future reward of taking action at a particular state

V(s) stands for the value of being in a specific state

Challenges and Opportunities

Reinforcement learning’s first application areas are gameplay and robotics, which is not surprising as it needs a lot of simulated data. Meanwhile, today RL applies for mundane tasks like planning, navigation, optimization, and scenario simulation in various verticals chains. For instance, Amazon used it for their logistics and warehouse operations’ optimization and for developing autonomous drone delivery.

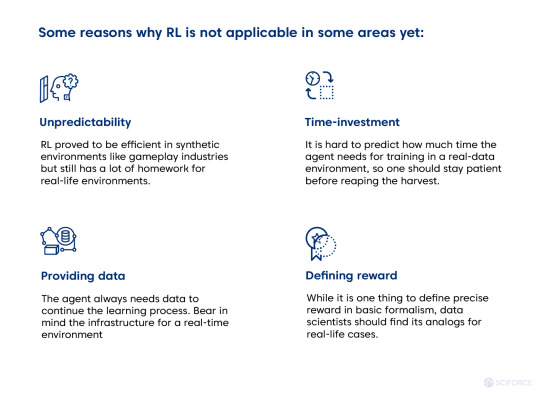

Simultaneously, RL still poses challenging questions for industries to answer later. Given its exploratory nature, it is not applicable in some areas yet. Here are some reasons:

Meanwhile, RL seems to be worth time and resources investment as industry players like Amazon show. Just give it some time, since investment in knowledge always requires it.

#reinforcement learning#machine learning#supervised learning#unsupervised learning#artificial intelligence#data science#ai

0 notes

Text

Potential of Using Machine Learning & AI During COVID-19

The medical application of machine learning & AI is one of the most principal and fast-growing fields of our expertise. Following the global rush for minimizing the coronavirus crisis effects, SciForce can not stay behind in the tremendous eHealth development.

The Severe Acute Respiratory Syndrome CoronaVirus 2 (SARS-CoV-2), popularly known as COVID-19, has become a matter of great concern globally since the end of 2019. While Pfizer/BioNTech, Moderna, AstraZeneca, and Sinovac vaccines have become household names and bring some light at the end of the tunnel, we've got more tasks to solve. Patients' diagnosis, treatment, and monitoring cause enormous pressure on healthcare providers worldwide. Healthcare software development turns out to be on the frontline. Given the pandemic peculiarities, the latest approaches of ML & AI have come to tackle the challenge.

Read on to find out the latest AI & ML corona-driven applications for the eHealth industry that help understand and battling the virus.

1. Diagnosis: Coping with the Challenge at Its Infancy

The efficient testing of the population is a key to curbing the spread of coronavirus. However, the available tests are not meeting the demand and resolving the problem to the end. RT-RCP aimed at the viral RNA is a time- and costs-consuming variant and is not widely applicable. Rapid antigen test detects the viral proteins but still is not a precise and guaranteed answer. The antibody test is only relevant to people who have already undergone the COVID-19.

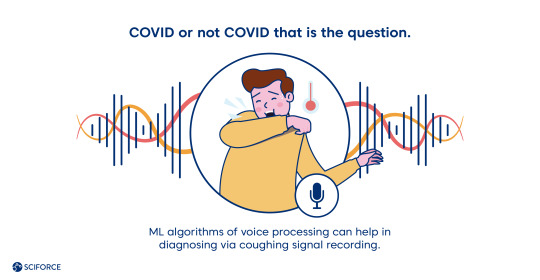

Per recent researches, AI algorithms can diagnose the virus without using the RT-RCP test. Thus, machine learning algorithms detect coronavirus via blood or urine tests, analyzing the samples to predict the COVID-19. The data received from the blood test of the patients stands as the base for neural network analysis for RT-RCP test prediction based on the blood test parameters. ML algorithms of voice processing can help in diagnosing via coughing signal recording. Medical wearables are also widely used in telehealth systems and could be helpful for diagnostics. Hence, virtual visits to the health care providers become possible thanks to the natural language processing algorithm. The Health Center of the Medical University of South Carolina stands as a good example.

Mobile phones serve as great data-generating tools providing immediate access to the potential coronavirus hosts, opening the window of opportunities for epidemiological control as some Pacific Asian governments demonstrate it.

2. AI Assistance for a Treatment: Decision-Making, Recovery Prediction, and Patients' Prioritizing

In constant vital resource scarcity, machine learning algorithms can assist in multi-criteria decision-making for healthcare providers. It is beneficial when it goes about detecting and predicting the disease severity. Thus, medical personnel can prioritize the patients to respond to coronavirus disease and its outcomes. Text processing can help to compare the treatment plans and predict the patient's recovery. Patients prioritizing is particularly crucial in the state of the breathing equipment scarcity.

ML algorithms assist the selection criteria for such existent treatment practice as antibodies circulating blood transfusion. In this case, ML helps to detect whether a subject meets blood donor selection criteria and selects the most suitable plasma.

3. Monitoring Patients: Overcoming Limitations with Telehealth

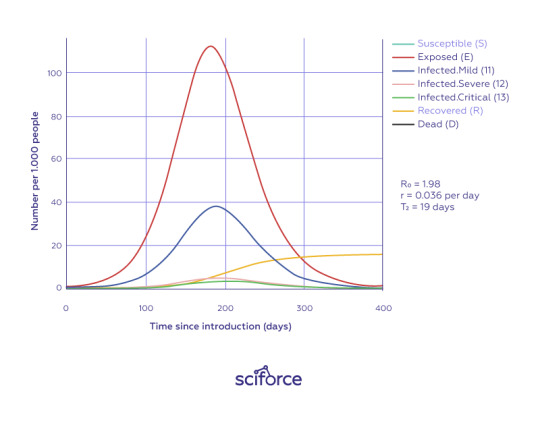

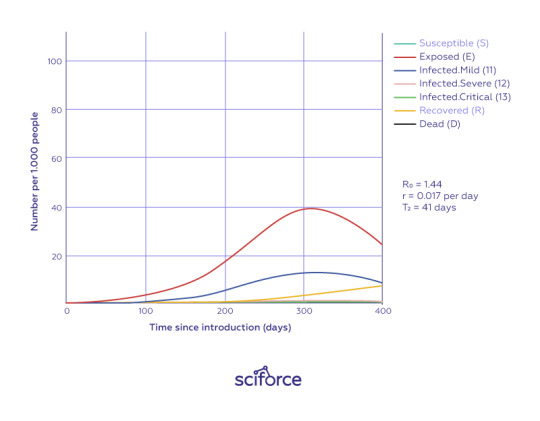

Deep learning algorithms demonstrate efficacy in predicting patients' severity, mortality, and recovery. In March 2020, the researchers in Wuhan, PRC used the clinical variables of almost 200 hospitalized patients to develop a deep-learning algorithm and risk stratification score system to predict mortality.

One can use the same idea for predicting the disease severity of the patient. It helps forecast the coronavirus effects using clinical and laboratory data like a blood test or even voice signal.

Thus, telehealth gets the new dimension and scope with the pandemic outbreak and promises to show even more tremendous application soon. Combining classical epidemiological methods with deep learning algorithms, natural language processing to process electronic health records using other sensors (temperature, color sensor, camera, and microphone) can cause a paradigm shift for the eHealth industry. So, researchers in Spain show that using clinical variables of more than 10000 patients like age, fever, and tachypnea (abnormal respiration rate) to detect whether a person needs immediate intensive care unit admission.

4. Tackling the Pandemic and Control Regaining

Social distancing, contact tracing, and identifying COVID-19 cases are the crucial steps to keep the reproduction rate small. AI methods of large amounts of data processing come as a powerful tool for large-scale problem-solving. Tracing the person's contact and offering tools for a self-assessment come as one of the possible applications.

Policymakers can use different AI techniques to provide physical distancing among the population, along with surveillance video analysis (PRC), gathering spatio-temporal data provided by mobile phones, and identifying whether a person wears a facemask automatically. Interesting that deep learning methods like computer vision for facemask identification applied for the first time.

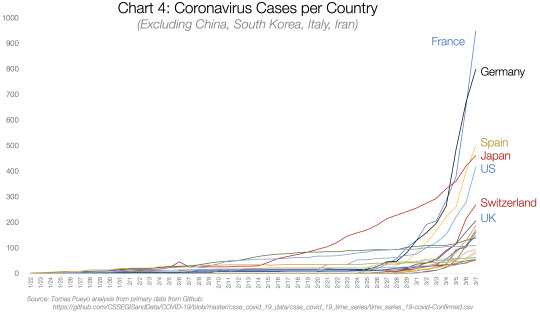

Image source

The hidden danger of this pandemic is in the fact that a lot of COVID-19 cases are asymptomatic. ML methods help create mobilized assessment centers based on spatio-temporal data of individuals analyzed. Developing self-organizing feature maps (SOFM) to monitor the epidemic in live time and a particular place is possible. Thus, one can build a projection model for a community spread among the population of a city.

Summing It Up

It is better to predict the problem than to deal with its consequences. However, 2020 showed the globe that we still have a lot to work on for a reliable prognosis. There is not much data on the AI and ML methods to predict the pandemic humankind can face in the future. Meanwhile, the tremendous development of the vaccine and lateral applications for coronavirus diagnosis, treatment, and monitoring shed some light on the overall picture.

AI-based technologies have become great solutions for COVID-19 detecting without RT-RCP testing. They assist in multi-criteria decision-making for healthcare providers, overcome the limitations, and predict the upcoming epidemiological scenarios. It is all already happening now.

SciForce is ready to face the challenge and join the frontline to develop the solutions for coming challenges. The power of science has always empowered people to strive, especially during the last couple of years.

#healthcare#covid19#coronavirus#flattenthecurve#machine learning#data science#artificial intelligence#data

0 notes

Text

Artificial Intelligence and the Shift to the Circular Economy

Over the past 200 years, a model backing by extracting and consuming vast quantities of finite materials and fossil fuels has been shaping the global economy. Such a linear economic model has allowed humanity to build an impressive industrial economy and reach unprecedented prosperity. At the same time, this type of economy is responsible for current environmental issues, depletion of resources, and climate change.

Per McKinsey Global Institute, AI could add $13 trillion to the global economic activity by 2030, yet some issues may constrain its application for social good. At present, the linear economic system requires changes to sustain the growth of the global middle class omitting negative environmental and social impacts.

Circular Economy, Explained

Let us start with the principal difference between the linear and circular economy models. The economic growth is not intertwining with finite resource consumption in the circular economic model. Conversely, it endeavors to eliminate waste and pollution, keep products and materials in use, and regenerate natural systems. The advantages of this approach are substantial not only for the planet but to economic growth. The circular economy can spur innovations, resolve growing environmental challenges, and create new jobs. McKinsey predicts that a net benefit of the circular economy for Europe can reach €1.8 trillion by 2030. The EU adopted the package of policies for the development of a circular economy in December 2015. Witnessing this endeavor's positive effect, the European Commission published The Circular Economy Action Plan in March 2020, which promises more changes.

Why AI?

When we think of the circular economy, we often imagine waste management and recycling, such as dealing with food waste, single use-plastics, packaging, and straws. However, the circular economy is a broader concept of being sustainable, as it embraces renewable energy, design for longevity, upgrading, disassembly, water stewardship, social responsibility, and disassembly.

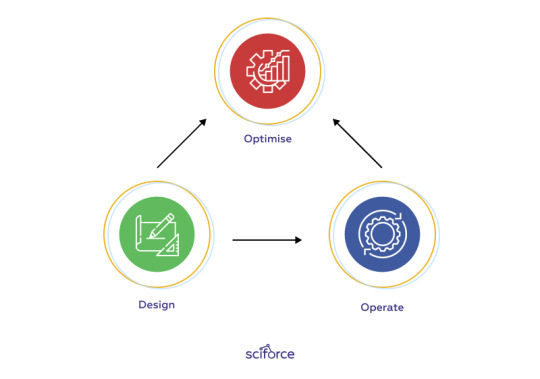

Artificial intelligence is supposed to play an essential role in enabling the circular economy's systemic shift. It is to enhance and facilitate circular economy innovation across industries in three main ways:

1. Design circular products, components, and materials. It is well-known that AI can accelerate the development of new products, features, and materials fit for a circular economy thanks to the rapid ML-driven prototyping and testing.

2. Operate circular business models. AI increases product circulation by intellegent inventory management, pricing and demand prediction, and predictive maintenance.

3. Optimize circular infrastructure. AI can improve sorting and disassembling products, components remanufacturing, and recycling materials that can build the reverse logistics infrastructure required to 'close the loop' on products and materials.

The key idea underlying all AI applications for the circular economy is to manage resources efficiently, compliant, and sustainable. The AI technologies apply to collate, analyze and interpret complex environmental data and information to understand the issues and prioritize action. More importantly, AI can become a platform to democratize sustainability knowledge, enabling us to drive changes in our behavior that benefit the planet on all levels and scales.

Field Applications

From the manufacturing industry to healthcare, the scope of AI application to curb waste is endless, and the principle is relatively similar for all fields. To grasp the extent of AI applications, we can mention just several examples.

Design of New Materials

The European Space Agency deployed circulated economy models to produce and test novel alloy models in their Accelerated Metallurgy project. The circular economy principles in alloys design bring the following results: materials are non-toxic, can be reused, and can be made using additive manufacturing and processing methods to minimize waste. Accelerated Metallurgy uses AI algorithms to analyze big data to design and test alloy composition systematically.

Infrastructure Optimization

A vital feature of the circular economy is that materials and products are not disposed of after the first use but reused multiple times, which requires optimization of the infrastructure to ensure circular product and material flows. Effective recovery of valuable materials requires homogeneous, pure flows of material and products. However, used material streams are usually far from being pristine: from kitchen waste to used computers, and these streams are mixed and heterogeneous in materials, products, and by-products, both biological and technical. AI shows how it can enable enhanced valorization of materials and products by sorting post-consumer mixed material streams through visual recognition techniques. ZenRobotics, for example, works with cameras and sensors, whose imagery input allows AI to control intelligent waste sorting robots. These robots can reach an accuracy level of 98% in sorting myriad material streams, from plastic packaging to construction waste.

Smart Farming

Two mutually opposing trends are currently putting more pressure on agriculture, calling for immediate action. Already severely depleted soils need to provide food for an ever-growing global population, and at the same time, roughly a third of food remains never eaten. AI offers multiple opportunities to make farming smarter by using image recognition to determine fruit ripeness, food supply, demand-effective matching, and increasing food by-products valorization. Our company, for instance, uses computer vision to monitor the growth and development of plants.

Designing Healthier Food Products

AI techniques can help in reducing waste, eliminate unsafe additives, and develop regenerative grown ingredients. Recent applications include alternative egg-free products, plant-based meat, and fish to decrease dependence on natural resources. A Chilean food technology company called NotCo (The Not Company), for example, is trying to replace foods made with animal products using vegetable-based foods that taste the same. They have developed the Giuseppe artificial intelligence program that takes the molecular structure of meat and can replicate it using plant-based ingredients to create a unique flavor and texture.

Predictive Maintenance

AI algorithms may be able to radically improve the assessment of a product's condition, enabling predictive maintenance and the ability to determine the secondary value of a used device more accurately. By using IoT sensors and AI-driven analytics, manufacturers and service operators can know in advance when equipment needs service. Predictive maintenance help to replace the required detail in advance. This solution predicts machine conditions leading to the failure and provides time estimation to plan and minimize downtime.

Conclusion

AI can be an enabler and accelerator of the global transition to the circular economy. Digital technologies are already driving a profound transformation of our economy and way of life. If such modification embraces circular economy principles, it can create value and generate more comprehensive benefits for society. However, AI production requires a clear understanding of the actual problem to solve. Moreover, the circular economy transition involves a network of trusted partners — it cannot be done by one company alone, even having the smartest AI tool.

The data generation, collection, and sharing are the implications of cooperation between all the stakeholders. It is only together with the community AI can transform our global economy and minimize waste.

0 notes

Text

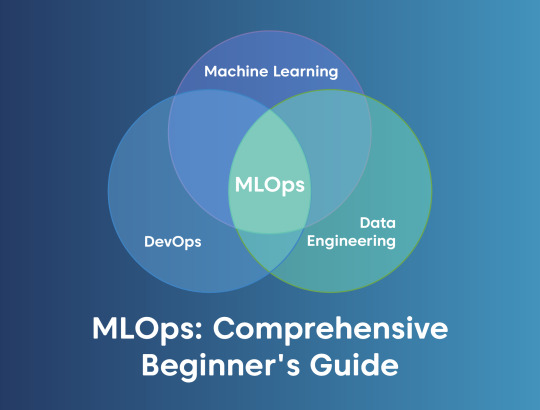

MLOps: Comprehensive Beginner's Guide

MLOps, AIOps, DataOps, ModelOps, and even DLOps. Are these buzzwords hitting your newsfeed? Yes or no, it is high time to get tuned for the latest updates in AI-powered business practices. Machine Learning Model Operationalization Management (MLOps) is a way to eliminate pain in the neck during the development process and delivering ML-powered software easier, not to mention the relieving of every team member's life.

Let's check if we are still on the same page while using principal terms. Disclaimer: DLOps is not about IT Operations for deep learning; while people continue googling this abbreviation, it has nothing to do with MLOps at all. Next, AIOps, the term coined by Gartner in 2017, refers to the applying cognitive computing of AI & ML for optimizing IT Operations. Finally, DataOps and ModelOps stand for managing datasets and models and are part of the overall MLOps triple infinity chain Data-Model-Code.

While MLOps seems to be the ML plus DevOps principle at first glance, it still has its peculiarities to digest. We prepared this blog to provide you with a detailed overview of the MLOps practices and developed a list of the actionable steps to implement them into any team.

MLOps: Perks and Perils

Per Forbes, the MLOps solutions market is about to reach $4 billion by 2025. Not surprisingly that data-driven insights are changing the landscape of every market's verticals. Farming and agriculture stand as an illustration with AI's value of 2,629 million in the US agricultural market projected for 2025, which is almost three times bigger than it was in 2020.

To illustrate the point, here are two critical rationales of ML's success — it is the power to solve the perceptive and multi-parameters problems. ML models can practically provide a plethora of functionality, namely recommendation, classification, prediction, content generation, question answering, automation, fraud and anomaly detection, information extraction, and annotation.

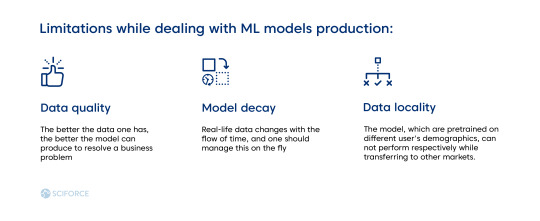

MLOps is about managing all of these tasks. However, it also has its limitations, which we recommend to bear in mind while dealing with ML models production:

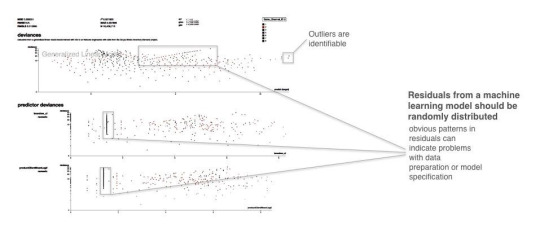

Data quality. The better the data one has, the better the model can produce to resolve a business problem.

Model decay. Real-life data changes with the flow of time, and one should manage this on the fly.

Data locality. The model, which are pretrained on different user's demographics, can not perform respectively while transferring to other markets.

Meanwhile, MLOps is particularly useful when experimenting with the models undergoing an iterative approach. MLOps is ready to go through as many iterations as necessary as ML is experimental. It helps to find the right set of parameters and achieve replicable models. Any change in data versions, hyper-parameters, and code versions leads to the new deployable model versions that ensure experimentation.

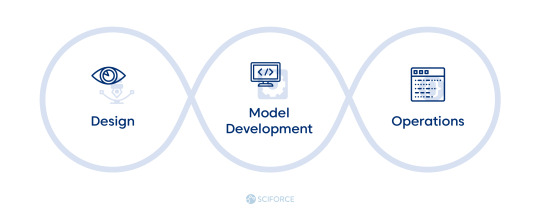

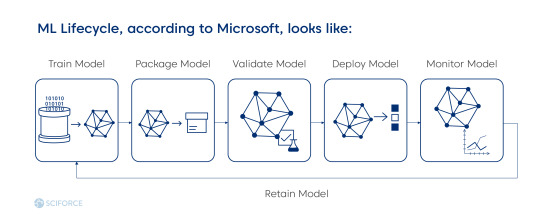

ML Workflow Lifecycle

Every ML project aims to build a statistical model out of the data, applying a machine learning algorithm. Hence, Data and ML Model come out as two different artifacts to the software development of the Code Engineering part. In general, ML Lifecycle consists of three elements:

Data Engineering: supplying and learning datasets for ML algorithms. It includes data ingestion, exploration and validation, cleaning, labeling, and splitting (into the training, validation, and test dataset).

Model Engineering: preparing a final model. It includes model training, evaluation, testing, and packaging.

Model Deployment: integrating the trained model into the business application. Includes model serving, performance monitoring, and performance logging.

Source: Microsoft

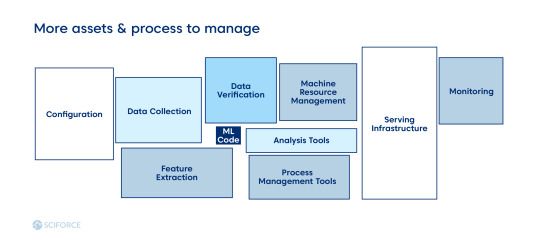

MLOps, Explained: When Data & Model Meet Code

As ML introduces two extra elements into the software development lifecycle, everything becomes more complicated than the use of DevOps for any software development. While MLOps still seeks for source control, unit and integration testing, and continuous delivery of the package, it brings some new differences, compared to DevOps:

Continuous integration (CI) applies to the testing and validating data, schemas, and models, not only refers to the code and components.

Continuous deployment (CD) refers to the whole system, which is to deploy another ML-provided service, but not to the single software or service.

Continuous training (CT) is unique to the ML models and stands for model service and retraining.

Source: Google Cloud

The level of each step of data engineering automation, model engineering, and deployment define the overall maturity of MLOps. Ideally, CI and CD pipeline should be automated to define the mature MLOps system. Hence, there are three levels of MLOps, categorized and based on the level of processes automation:

MLOps level 0: a process of building and deploying of ML model is entirely manual. It is sufficient for the models that are rarely changed or trained.

MLOps level 1: continuous training of the model by automating the ML pipeline, good fit for models based on the new data, but not for new ML ideas.

MLOps level 2: CI/CD automation lets work with new ideas of feature engineering, model architecture, and hyperparameters.

In contrast to DevOps, model reuse is a different story as it needs manipulations with data and scenarios, unlike software reuse. As the model decays over time, there is a need for model retraining. In general, data and model versioning is “code versioning” in MLOps, which seeks more effort compared to DevOps.

Benefits and Costs

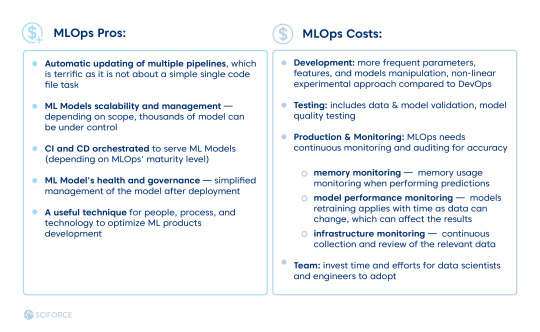

To think through the MLOps hybrid approach for a team, which is implementing it, one needs to assess the possible outcomes. Hence, we've developed a generalized pros-and-cons list, which may not apply to every scenario.

MLOps Pros:

Automatic updating of multiple pipelines, which is terrific as it is not about a simple single code file task

ML Models scalability and management — depending on scope, thousands of model can be under control

CI and CD orchestrated to serve ML Models (depending on MLOps' maturity level)

ML Model's health and governance — simplified management of the model after deployment

A useful technique for people, process, and technology to optimize ML products development

We assume that it might take some time for any team to adapt to the MLOps and develop its modus operandi. Hence, we are proposing a list of possible “stumbling stones” to foresee:

MLOps Costs:

Development: more frequent parameters, features, and models manipulation, non-linear experimental approach compared to DevOps

Testing: includes data and model validation, model quality testing

Production and Monitoring: MLOps needs continuous monitoring and auditing for accuracy

memory monitoring — memory usage monitoring when performing predictions

model performance monitoring — models retraining applies with time as data can change, which can affect the results

infrastructure monitoring — continuous collection and review of the relevant data

Team: invest time and efforts for data scientists and engineers to adopt

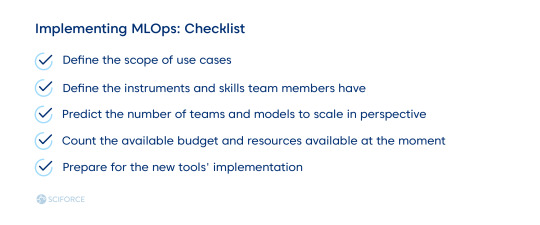

Getting Started with MLOps: Actionable Steps

MLOps requires knowledge about data biases and needs high discipline within the organization, which decides to implement it.

As a result, every company should develop its own set of practices to adjust MLOps to its development and automation of the AI force. We hope that the guidelines mentioned contribute to the smooth adoption of this philosophy into your team.

2 notes

·

View notes

Text

The Strength and Beauty of GraphQL in Use

The Strength and Beauty of GraphQL in Use

Facebook developed GraphQL as a major problem-solver for more efficient mobile data loading in 2012 and released it as an open-source solution three years later. Since that time, it mistakenly associates with PHP only and lacks trust given the Facebook's reputation (if you know what I mean). However, a recent Netflix case that finds GraphQL as a game-changer to power the API layer and increase the scalability and operability of the studio ecosystem attracts attention. This specification already gained popularity — given State of JavaScript 2019 Report, 50.6% of respondents have heard of GraphQL and would like to learn it. However, The New York Times, Airbnb, Atlassian, Coursera, NBC, GitHub, Shopify, and Starbucks are already among the GraphQL users. We decided to dwell on the beauty, strength, and some constructions of GraphQL in its scalability, performance, and security aspects and tell about our use cases for a banking sphere and a platform of commercial targeting. See the list of useful toolkits added in the end as a bonus.

GraphQL: the Beans Spilled

GraphQL is a convenient way of communication between a client and a server first. Sometimes one can see it as an opponent to REST API given the main difference that GraphQL brings to the table — only endpoint to fetch the data by one call from multiple sources. Meanwhile, we are to provide the space for consideration whether this specification is relevant to particular tasks or REST API is the silver bullet for your case.

Both REST and GraphQL APIs are stateless, supported by any server-side language and any frontend framework, exchange the data through the JSON. But the one and the only endpoint containing the query expression to define the data that should be returned creates the what-you-see-is-what-you-get principle to optimize the work. Let's deep dive into the specification's main advantages and disadvantages.

Performance and Security

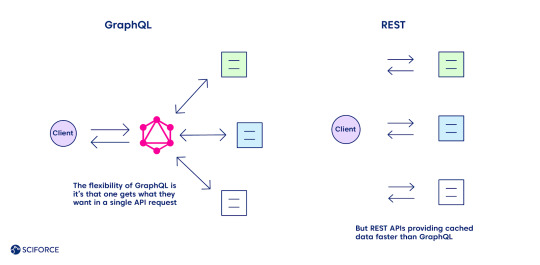

The flexibility of GraphQL is its main advantage over REST, as one gets what they want in a single API request. Define the structure of the information to receive back, and it goes back in the format requested, no under-fetching or over-fetching.

Meanwhile, caching seems to be one of the GraphQL downsides compared to REST (see the complete list of all the pros and cons further). REST APIs use the HTTP caching mechanism, providing cached data faster. It leverages its community-powered and time-tested feature, leaving GraphQL behind at the moment.

Security is another area of improvement for GraphQL while comparing it with REST, which boasts of a more mature system. The latter leverages HTTP authentication, JSON Web Tokens (JWT), or OAUth 2.0 mechanisms.

Pros and Cons: All Things Considered

Unlike REST API, GraphQL has detailed documentation and supports the function of nested queries that contributes to the principle "no over fetching and under fetching data," which happened while using the first specification. Query and mutation are the joint GraphQL operations. Thus, the CRUD (create, read, update, delete) model is not relevant for GraphQL as the create operation executes through the query command (other ones are implemented with mutations).

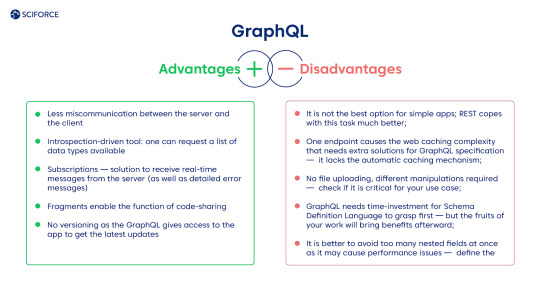

Advantages

Less miscommunication between the server and the client.

Introspection-driven tool: one can request a list of data types available.

Subscriptions — solution to receive real-time messages from the server (as well as detailed error messages).

Fragments enable the function of code-sharing.

No versioning as the GraphQL gives access to the app to get the latest updates.

Disadvantages

It is not the best option for simple apps; REST copes with this task much better.

One endpoint causes the web caching complexity that needs extra solutions for GraphQL specification — it lacks the automatic caching mechanism.

No file uploading, different manipulations required — check if it is critical for your use case.

GraphQL needs time-investment for Schema Definition Language to grasp first — but the fruits of your work will bring benefits afterward.

It is better to avoid too many nested fields at once as it may cause performance issues — define the architecture of the query beforehand.

Our Use Cases with GraphQL

GraphQL provides developers with higher scalability of the system that applies in any sphere. We want to share our experience of functions diversification for a commercial targeting platform and solving the banking application's two-fold task.

The Platform for a Commercial Targeting

GraphQL became a convenient solution for one of our clients who needed to develop a platform for commercial targeting, providing a straightforward approach for searching the potential customers in any national institution or facility. Using it, the client can direct the ads straight to the audience of interest using geolocation data and a set of filters. The platform consists of two primary services: one for geo-based consumers extraction based on PlaceIQ dataset usage and one for attribute-based (consumers identity graph) with consumer dataset. The project can be extended by adding the missing residential dataset to retrieve residents at requested addresses. Also, the services could be wrapped into the REST API to provide the ability to trigger them using web requests.

Risk Reduction and Resilience Boosting Financial Platform

An average bank encounters no more than 100K transactions a day. Moreover, it also faces malicious actions and the risk of cyberattack. One of our clients needed to empower their software platform to encounter higher transaction pressure and provide a higher risk-management system to avoid financial crimes. As a result, we have developed a solution that stands for the high amount of transactions and provides the reports while detecting anomalies based on the transactions' data in real-time.

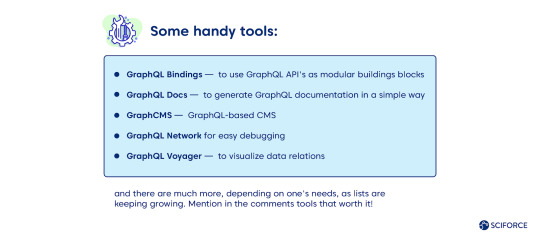

GraphQL: Useful Toolkit

Check out the growing GraphQL community to find the latest updates on this solution. There are many horizontally and vertically developed solutions for GraphQL client, GraphQL gateway, GraphQL server, and database-to-GraphQL server. Add some of the tools that you enjoy using while working with GraphQL in comments to this blog.

GraphQL's servers are available for languages like JavaScript, Java, Python, Perl, Ruby, C#, Go, etc.

Apollo Server for JavaScript applications and GraphQL Ruby are some of the most popular choices.

Apollo Client, DataLoader, GraphQL Request, and Relay are among popular GraphQL clients. Graphiql, GraphQL IDE, and GraphQL Playground for IDE's respectively.

Some handy tools:

GraphQL Bindings — to use GraphQL API's as modular buildings blocks

GraphQL Docs — to generate GraphQL documentation in a simple way

GraphCMS — GraphQL-based CMS

GraphQL Network for easy debugging

GraphQL Voyager — to visualize data relations

and there are much more, depending on one's needs, as lists are keeping growing. Mention in the comments tools that worth it!

0 notes

Text

SEO in Times of Voice-Activated Apps: How to Make Voice Search Work for You

Probably when Siri appeared in our life for the first time, the idea of talking to your device sounded like a whim or a funny activity to test Artificial Intelligence. By now, the use of voice-activated assistants has become an everyday experience. Siri, Google Assistant, and Cortana from our mobile devices and Amazon Echo, Google Home and Apple’s HomePod in our house are always ready to answer our question of complete a task. Such spreading of voice-activated applications has changed the way we perform a search in the Internet shifting it from typing keywords to asking direct

Voice search is being increasingly used by customers to find businesses around them, complete tasks, or just help them go about their day-to-day life. By 2020, Gartner predicts that 30% of all searches will take place without a screen altogether, meaning voice or image search. Andrew Ng, then Chief Scientist at Baidu stated in September 2014 that “In five years’ time, at least 50% of all searches are going to be either through images or speech” — the prediction that has spread all over the market.

Accurate or not, we already see how the voice search is changing our way of communication with our devices and our ideas and expectations of search. Check out a few of these stats that substantiate how much voice search has changed life as we know it, and how it’ll shape the future as well.

What it means for users

The key to unlocking the power of voice search is its convenience. Throughout history, we can see that as technology develops and advances, it makes life easier for people and this, in turn, changes the behavior of consumers.

Similarly in our search habits, with more people using their smartphones to search for products and services, many will begin to use the voice capability to speak into the search engine rather than trying to type on the go. New voice technology makes it easier than ever for people to gather information and answers from search results.

Voice-activated applications are already changing our habits in many aspects.

The most important difference in voice search query behavior is that the query string tends to be longer than keyboard written text queries. 41% of people admit they talk to voice assistants as if they were a friend(5). Obviously, voice search behavior similarly differs in comparison to manual searches: if you are typing a search for places to buy pizza, you might input something like “Best Pizza near me.” However, using voice search, we’ll end up in a conversational style, asking “Where are the closest pizza restaurants to me?”

We’re already seeing consumer expectations migrating from links to answers. And virtual assistants will also continue to evolve from answers to actions, making our search more individualized, accurate, and based on voice and visual inputs.

What it means for businesses

In the present-day search, as in the case with many new technologies, businesses need to make sure they can be found by voice or they risk being left on the sidelines. However, new challenges can open new opportunities for growth is addressed intelligently:

Businesses should see voice search as an opportunity to develop a conversation with their audience. Forward-thinking brands will adapt their websites content to engage in conversational search, rather than just short Q&A exchanges to engage with the public and build up interest in their products and loyalty.

It is a powerful and rapidly growing area of search marketing. To get in front of the consumer, businesses should answer their questions in a way that is accessible to voice search and try to understand how your users will be searching given that voice is faster and more convenient than typing.

Thanks to the growing demand for location-based queries, plus the newer ‘near me’, ‘close by’ and other voice-based queries, online market opens its doors to SMEs and empowers them to compete more effectively in markets where they have premises, experts and audiences in place.

Changes in user experience will inevitably shape future advertising models. To be able to answer the new demands, businesses will have to move from text ads to voice ads, and from investing in clicks to answers and actions.

New SEO for voice search

If a business spends time, money, and effort on keyword optimization and potentially spends money on PPC advertising it is missing a growing sector in the search market. As both small businesses and chain companies start taking voice search queries into consideration, they are looking to the ways to optimize SEO for voice queries.

Typed and spoken searches will output different results. Besides, unlike with regular search results, where the second result for a search query is likely to attract a lot of valuable traffic, voice search only returns one answer to searchers from the number one best-optimized result. Therefore, optimizing a website for a traditional search doesn’t always look the same as optimizing the same site for voice search. Here we will try to explore the most essential factors to consider when optimizing for voice search:

Using assistants. You can start simply from getting a voice-activated application and asking questions relevant to your products and business. You can learn what the assistants can answer and what the gaps in their answers are and how you can rewrite your product description accordingly. Further, you can bulk query assistants and classify the results by type to pick a key phrase where we could provide an answer either with a web answer box or using a Google action. If you don’t have a device you can use https://echosim.io/ welcome or https://allo.google.com/ which are web versions of the two assistants.

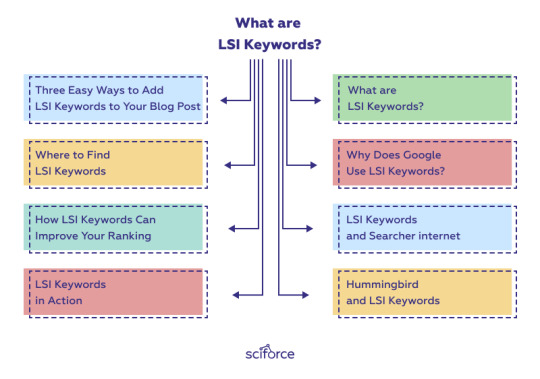

Conversational approach. In order to optimize content for voice search, you need to understand how your audience speaks about your products and services and what questions they ask. The content you create should answer those questions or include these more natural language queries, including conversational keywords or long-tail keywords . Modify your SEO strategy to include researching and understanding LSI (latent semantic indexing) keywords. LSI keywords are closely related to or synonyms of your main keyword and are natural language variations that can help your content rank for your main keyword and more. So, using synonyms and trying to give the searcher all the information they can consume related to their query, is likely to increase the site visibility. These tricks will enable you to create content that helps search engines determine the context of the page so it can deliver the right content based on user intent.

Data structuring. Structuring of data for a growing number of informational queries will be a logical evolution of SEO. Structured data is a code added to HTML markup that is used by search engines to better understand a site’s content. Therefore, structured data can help search engines crawl and read your content efficiently. With schema markup, you can better control the way you provide information about your brand, and the way machines interpret it. Implementing structured data results in more powerful snippets.

Featured snippets. Featured snippets are selected search results that are featured on top of Google’s organic results below the ads in a box and are believed to provide the most relevant content or to give a direct answer. They are known to increase click-through rate, drive traffic, and bring you competitive advantages. You can be sure that if the results include a featured snippet, your voice assistant will pull its answer from there.

So if you optimize for voice search what you’re really optimizing for is featured snippets. This means looking at the featured snippets that appear for queries you’d like to rank for and considering whether the format of that snippet is ideal. If it looks optimal, you can mimic it with your content to take its place (same format but better quality) and if not, produce content that would rank but in a format that makes more sense. For example, if a question can be better answered by a list — make content that includes a list, not a long description.

User intent. User intent tells us the reason a person entered a query into a search engine: to buy a product or to look for a recommendation, to compare different options, or to get new information? Sometimes the intent is obvious and clearly expressed in the query with words such as “buy,” “price,” “how-to,” “what is,” etc. But other times, intent hides only in a user’s mind. To enhance the relevance of your pages to specific search queries you should always consider user intent when creating content. To succeed, businesses should seek to answer questions, and provide details wherever possible. At the same time, the same steps you take to optimize for answer boxes are going to help you in voice search.

Mobile-friendly is voice-friendly. The recent course of development of search algorithms has already made search engine marketing a “mobile-first” environment. Since most voice searches come from mobile devices, it might be beneficial to focus specifically on voice search optimization. Besides, much of what you can do to optimize for voice search is also beneficial for mobile-first optimization.

It is still dubious whether one day we’ll see that search engines switch to prioritizing voice-friendly results or our habits of typing queries into search engines fade away, but voice commands will undoubtedly have a big impact on search marketing. Since almost every effort you can take to optimize for voice search might be beneficial to your SEO efforts overall, it is a good idea to start focusing on voice optimization. Incorporating voice search optimization strategies into your overall optimization efforts can help you ensure you’re prepared for the voice-driven future.

0 notes

Text

Computational Aesthetics: shall We Let Computers Measure Beauty?

As we all know, tastes differ and change over time. However, each epoch tried to define its own criteria for beauty and aesthetics. As science was developing, so was the urge to measure beauty quantitatively. Not surprisingly, the recent advancements in Artificial Intelligence pushed forward the question of whether intelligent models can overcome what seems to be human subjectivity.

A separate subfield of artificial intelligence (AI), called ‘computational aesthetics’, was created to assess beauty in domains of human creative expression such as music, visual art, poetry, and chess problems. Typically, it uses mathematical formulas that represent aesthetic features or principles in conjunction with specialized algorithms and statistical techniques to provide numerical aesthetic assessments. Computational aesthetics merges the study of art appreciation with analytic and synthetic properties to bring into view the computational thinking artistic outcome.

Brief History of Computational Aesthetics

Though we are used to thinking about Artificial Intelligence as a recent development, computational aesthetics can be traced back as far as 1933, when American mathematician George David Birkhoff in “Aesthetic Measure” proposed the formula M = O/C where M is the “aesthetic measure,” O is order, and C is complexity. This implies that orderly and simple objects appear to be more beautiful than chaotic and/or complex objects. Order and complexity are often regarded as two opposite aspects, thus, order plays a positive role in aesthetics while complexity often plays a negative role. Birkhoff applied that formula to polygons and artworks as different as vases and poetry, and is considered to be the forefather of modern computational aesthetics.

In the 1950s, German philosopher Max Bense and French engineer Abraham Moles independently combined Birkhoff’s work with Claude Shannon’s information theory to develop a scientific means of grasping aesthetics. These ideas found their niche in the first computer-generated art but did not feel close to human perception.

In the early 1990s, the International Society for Mathematical and Computational Aesthetics (IS-MCA) was founded. This organization is specialized in design with an emphasis on functionality and aesthetics and attempts to be a bridge between science and art.

In the 21st century, computational aesthetics is an established field with its own specialized conferences, workshops, and special issues of journals uniting researchers from diverse backgrounds, particularly AI and computer graphics.

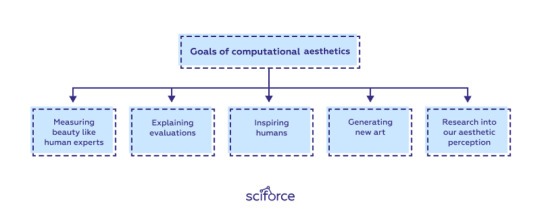

Objectives of Computational Aesthetics

The ultimate goal of computational aesthetics is to develop fully independent systems that have or exceed the same aesthetic “sensitivity” and objectivity as human experts. Ideally, machine assessments should correlate with human experts’ assessment and even go beyond it, overcoming human biases and personal preferences.

Additionally, those systems should be able to explain their evaluations, inspire humans with new ideas, and generate new art that could lie beyond typical human imagination.

Finally, computing aesthetics can also provide a deeper understanding of our aesthetic perception.

In practical terms, computational aesthetics can be applied in various fields and for various purposes. To name a few, aesthetics can be used in the following applications:

as one of the ranking criteria for image retrieval systems;

in image enhancement systems;

managing image or music collections;

improving the quality of amateur art;

distinguishing between videos shot by professionals and by amateurs;

aiding human judges to avoid controversies, etc.

Features

The backbone of all classifiers is a robust selection of features that can be associated with the perception of a certain form of art. In the search for correlation with human perception, aesthetic systems apply specific sets of features for visual art and music that are developed by theorists in arts and domain experts.

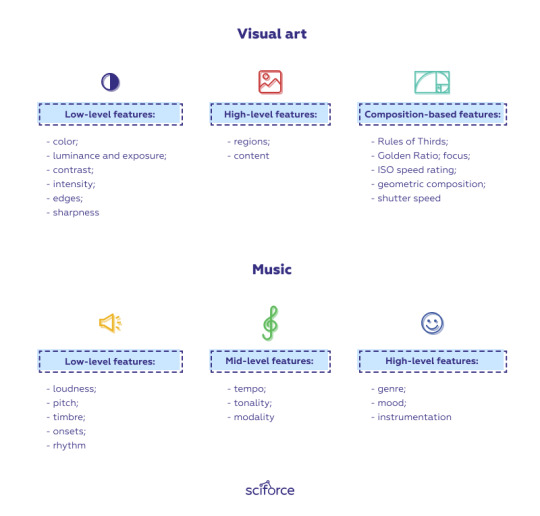

Visual Art

Image aesthetic features could be categorized as low-level or high-level plus composition-based. However, some research is based on features related to saliency (Zhang and Sclaroff, 2013), object (Roy et al., 2018), and information theory (Rigau,1998). The selection of features largely depends on the type of art and the level of abstraction, as well as the algorithm applied. For instance, photography assessment relies heavily on the compositional aspects, while measurement of the beauty of abstract art requires another approach assessing color harmony or symmetry (Nishiyama et al.,2011).

Low-level features try to describe an image objectively and intuitively with relatively low time and space complexity. They include color, luminance and exposure, contrast, intensity, edges, and sharpness.

High-level features include regions and contents as aspects that make great contributions to overall human aesthetic judgment and try to establish the regions of an image that seem to be more important for human judgment and find the correlation between the content and human reaction.

Composition-based features differ for photography and artwork and may include depending on the form of art a range of features, such as Rules of Thirds, Golden Ratio (Visual Weight Balance), focus and focal length, ISO speed rating, geometric composition and shutter speed (Aber et al., 2010).

Music

Similarly to image analysis, music aesthetics assessments try to combine research in human perception and cognition of basic dimensions of sound, such as loudness or pitch and in higher-level concepts related to music, including the perception of its emotive content (Juslin and Laukka, 2004), as well as performance specific traits (Palmer, 1997) to develop a comprehensive set of features that would be able to assess a piece of music.

In 2008, Gouyon et al. offered a hierarchy organized in three levels of abstraction starting from the most fundamental acoustic features, to be extracted directly from the signal, and progressively building on top of them to get to model more complex concepts derived from music theory and even from cognitive and social phenomena:

Low-level features are related to the physical aspect of the signal and include loudness, pitch, timbre, onsets, and rhythm (e.g., see Justus and Bharucha, 2002).

Mid-level features move to a higher level of abstraction within the music theory and cover tempo, tonality, modality, etc.

High-level features try to establish a correlation between abstract music descriptors like genre, mood, and instrumentation and human perception.

Methods and Algorithms

At its broadest, we can speak of computational aesthetics as a tool to assess aesthetics in visual art or music and as a means to generate new art.

For aesthetics assessment, various algorithms have been proposed over the past few years based either on classification or clusterization.

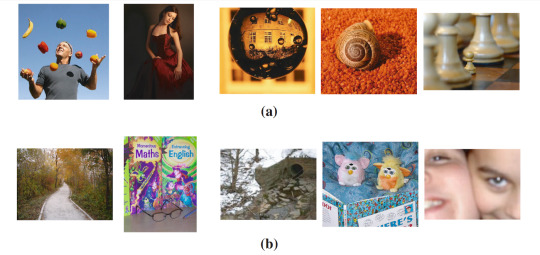

Classification approach

There are a number of algorithms that are extensively used to assess image aesthetics by means of classification. Among the most popular are AdaBoost, Naive Bayes, and Support Vector Machine, and substantial work is also conducted using Random Forests and Artificial Neural Networks (ANNs).

AdaBoost in computational aesthetics is a widely used method that is believed to render the best results. It was first offered in 2008 by Luo and Tang who conducted a study on photo quality evaluation, with the unique characteristic of focusing on the subject. They utilized Gentle AdaBoost (Torralba et al., 2004), a variant of AdaBoost that uses a specific way of weighting its data, applying less weight to outliers. The success rate obtained was 96%. However, when Khan and Vogel (2012) utilized their proposed set of features for photographic portraiture aesthetic classification, the accuracy rate with the multiboosting variant (multi-class version) of AdaBoost fell to 59.14% (Benbouzid et al., 2012).

Naïve Bayes is another popular method that was used in the same study by Luo and Tang (2008). In 2009, Li and Chen utilized the Naïve Bayes classifier to aesthetically classify paintings in which the results were described as robust. The success rate achieved utilizing a Bayesian classifier was 94%.

Support Vector Machine is probably the most wide-spread algorithm for binary classification in computational aesthetics. It has been used since 2006 when Datta et al. studied the correlation between a defined set of features and their aesthetic value, by using a previously rated set of photographs and showed up to 76% of accuracy. Other studies that rested on the same classifier include Li and Chen (2009) who aesthetically classified paintings; Wong and Low (2009) who built a classification system of professional photos and snapshots, Nishiyama et al. (2011) who conducted a research on the aesthetic classification of photographs based on color harmony, and others, with an average accuracy rate of about 75% and higher.

Random Forest, though usually showing lower results as compared to Bayesian classifiers or AdaBoost, were used in a number of studies of photograph aesthetics. For instance, Ciesielski et al. (2013) achieved a 73% accuracy to assess photograph aesthetics. Khan and Vogel (2012) utilizing their proposed set of features for photographic portraiture aesthetic classification, achieved an accuracy of 59.79% by making use of random forests (Breiman, 2001).

Artificial Neural Networks (ANNs) rendered extremely good results when used with compression-based features by Machado et al. (2007) and Romero et al. (2012). The former research aimed at the identification of the author of a set of paintings and reported a success rate from 90.9% to 96.7%. The latter work used an ANN classifier to predict the aesthetic merit of photographs at a success rate of 73.27%.

Convolutional Neural Networks (CNNs) are state-of-the-art deep learning models for rating image aesthetics that have been extensively used in the past few years. CNNs learn a hierarchy of filters, which are applied to an input image in order to extract meaningful information from the input. For example, Denzler et al. (2016) applied the AlexNet model (Krizhevsky et al., 2012) on different datasets to experimentally evaluate how well pre-learned features of different layers are suited to distinguish art from non-art images using an SVM classifier. They report the highest discriminatory power with a Network trained on the ImageNet dataset, which outperforms a network solely trained on natural scenes.

Clustering

Image clustering is a very popular unsupervised learning technique. By grouping sets of image data in a particular way, it maximizes the similarity within a cluster, simultaneously minimizing the similarity between clusters. In computational aesthetics, researchers use K-Means, Fuzzy Clustering, and Spectral Clustering in image analysis.

K-Means Clustering is widely used to analyze the color scheme of an image. For instance, Datta et al. (2006) used k-means to compute two features to measure the number of distinct color blobs and disconnected large regions in a photograph. Lo et al. (2012) utilized this method to find dominant colors in an image.

Fuzzy Clustering is a form of clustering in which each data point can belong to more than one cluster, therefore it is used in multi-class classification (see, for example, Felci Rajam and Valli (2011)). Celia and Felci Rajam (2012) utilized FCM clustering for effective image categorization and retrieval.

Spectral Clustering is used to identify communities of nodes in a graph based on the edges connecting them. In computational aesthetics, a spectral clustering technique named normalized cuts (Ncut) was used to organize images with similar feature values (Zakariya et al., 2010).

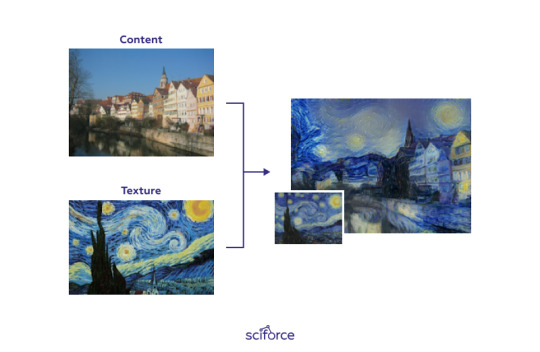

Generative models

A separate task of computational aesthetics is to generate artwork independently from human experts. At present, the algorithm that is best known for directly learning the transformations between images from the training data is Generative Adversarial Network(GAN). GANs automatically learn the appropriate operations from the training data and, therefore, have been widely adopted for many image-enhancement applications, such as image super-resolution and image denoising. Machado et al. (2015) also used GANs for automatically enhancing image aesthetics by performing mainly tone adjustment.

Example that combines the content of a photo with a well-known artwork

Conclusion: Restrictions and Limitations

Aspiring to reach objectivity, research in computational aesthetics tries to reduce the focus to form, rather than to content and its associations to a person’s mind and memories. However, from a psychophysiological viewpoint, it is not clear whether we can have a dichotomy here or whether aesthetics is intrinsically subjective.

Besides, it is difficult to ascertain whether a system that performs on the same level as a human expert is actually using similar mechanisms as the human brain and, therefore, whether it reveals something about human intelligence.

It might be that in the future we will rely on machines in our artistic preferences, but for now, human experts will dictate their opinions and try to get machines simulate their choices.

34 notes

·

View notes

Text

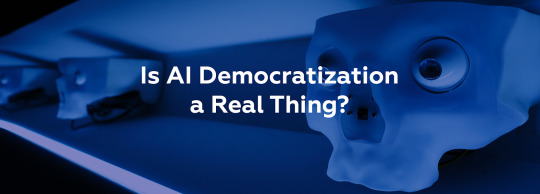

Is AI Democratization a Real Thing?

In the last decades, we’ve seen tremendous advancements in Artificial Intelligence (AI) and related fields. It is viewed not only as a ground-breaking technology, but as a step forward to the future having the means to change our society. We expect AI to use hardware and software to see and hear patterns, make predictions, learn and improve, and take action with this intelligence. Some enthusiasts believe that AI can be a tool that will guide us to a better world showing how to achieve more. In this context, new questions have been raised of power and pervasiveness of such technologies. However, many IT companies claim that AI will democratize AI and will make it available “for every person and every organization”, open to developers and researchers around the world. But does AI really democratize our world? And is it only a positive development?

What does AI democratization mean?

When we say that something is democratized, we imply that all people can access it and benefit from it. In enterprise IT, to democratize AI means to make it accessible for every organization or even to every person within the organization. Such dissemination of AI is indeed beneficial in many respects:

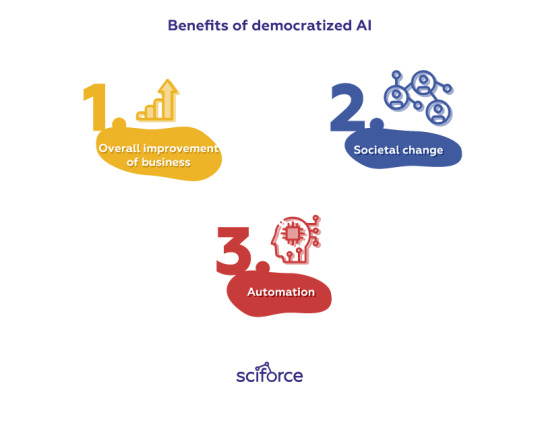

Benefits of democratized AI

Overall improvement of business — AI currently encompasses cloud suites, NLP-based virtual assistants to run business, improve customer’s experience and increase stats;

Societal change — as the awareness and the usage of AI grows, it gains more power to address global issues, like fighting climate change, tracking police work to avoid unfair targeting, or developing new standards in healthcare;

Automation — Gartner predicts that many of these tools will be automated, offering a completely new level of self-service that will free up companies’ potential.

What do we see today?

It is true that many companies, first of all tech startups and market giants, deploy artificial intelligence of some kind: machine learning does their predictions, natural language processing is improving their communication with clients, and sophisticated deep learning algorithms detect anomalies in their processes. However, many companies do not have the resources to build AI of their own and have to rely on cloud technologies to fill the gaps. For companies that are not tech-savvy, AI often feels complicated or overwhelming and it can be downright expensive, especially when engaging data science.

In this context, big tech firms end up way ahead of the curve, other enterprises can hardly catch them up, or even benefit from AI. To understand whether true AI democratization is achievable, let’s discuss what aspects of the market and inherent characteristics of AI add to inequality and what measures are taken to make AI available for everyone:

Data is the King

Data is the key to successful AI-driven intelligence and the more data we create, fuelling the so-called new “data economy”, the smarter products and services we can develop. Data seems to be an increasingly important asset and often the only significant advantage over the competition and as a result it is vigorously kept private. Undeniably, having access to all data produced by your devices or every trace of your digital presence and being able to cross-correlate them across different platforms, services and people give an enormous power. To understand the scale, we can think of the monopolizing presence of IT giants like Facebook or Google in our daily digital life. This is completely rightful and strategically sound, but unfortunately completely in contrast with the AI democratization process.

We are promised that the monopolizing effect is mitigated by new algorithms that offer insightful predictions even with limited datasets. However, best results are still achieved with gigantic amounts of data, so to make AI available to everyone, we first need to make data available to every interested party via open and shared datasets. AI democratization can not work if we do not democratize data first.

At the same time, access to data is only the first step towards AI democratization, since it is not the amount of data that wins the competition, but the ability to use it.

Technical Illiteracy

In many cases, we do not think of the amount of digital data we produce in the world where every “like” on Facebook counts. At the enterprise-level, companies that are not technically savvy, are easily misled by complicated Ai solutions. Major AI companies intentionally take advantage of the common illiteracy in AI by oversimplifying and even undermining more serious AI democratization approaches. Similarly to data ownership, concerns are raised on the dangerous monopolization of AI technologies by only a few organizations.

Network Effect

An extension of such monopolization is the “network effect”, a social and economic phenomenon where an increased number of users improves the value of a good or service in turn attracting more participants and triggering a virtuous cycle. This effect makes the situation even more unequal, as the tech giants gather most of the participants, their data and, as a result, intelligence — just think of Google and Baidu that detain ~86% the entire market share.

AI Talents

As big companies have more resources, they open up their doors to the best scientists, creating advanced labs for AI research with infinite resources. This has led to great advancements and speed-up in AI research. At the same time, tech giants tend to open up their AI research labs, giving the researchers the freedom to collaborate with other institutions or make everything open-source.

Does it add to democratization of AI? From the inspirational point of view, yes, since everyone now can download the sample code and try to use it in any project.

However, in reality the results are not always reproducible, and what tech giants achieve is free improvement and testing of their open-source tools.

Cloud

Most companies who successfully deploy AI have to invest heavily on the tech side. The enterprises that have more limited resources or that have no wish to engage in the full circle of AI research and development can get more affordable cloud solutions. The cheaper cloud tech gets, more AI tools can exist offering immediate solutions. Besides, as clouds make AI more accessible, more people can specialize in it, decreasing salary costs to companies for these data-specific jobs.

As we can see, democratization in reality is a myth as the access to AI is limited, on the one hand, by the resources and knowledge a company has and, on the other hand, by the amount of data and technology tech giants are eager to share. However, the lack of accessibility is not necessarily totally bad. Data quality is often uncertain, to say the least. When relying on a combination of entry-level AI specialists typical for smaller companies and automated or self-service AI tools, companies may be relying on data that is poor and produces unstable solutions. Results from poor data can ripple across the business, with unanticipated outcomes not evident until it’s too late.

Another problem we may face as AI democratizes is the bureaucracy inherent to many enterprises that will prevent companies and employees from acting quickly. Yet, it is the only way that intelligence can actually make a difference — if it is applied at the right time, which often arrives faster than a board can enact a decision.

To sum up, AI is indeed our future, and we’ll see the technology getting more accessible to everyone. However, like in human democracy, we’ll need a system of checks and balances to ensure that the AI-driven world remains afloat.

1 note

·

View note

Text

Text Preprocessing for NLP and Machine Learning Tasks

As soon as you start working on a data science task you realize the dependence of your results on the data quality. The initial step — data preparation — of any data science project sets the basis for effective performance of any sophisticated algorithm.

In textual data science tasks, this means that any raw text needs to be carefully preprocessed before the algorithm can digest it. In the most general terms, we take some predetermined body of text and perform upon it some basic analysis and transformations, in order to be left with artefacts which will be much more useful for a more meaningful analytic task afterward.

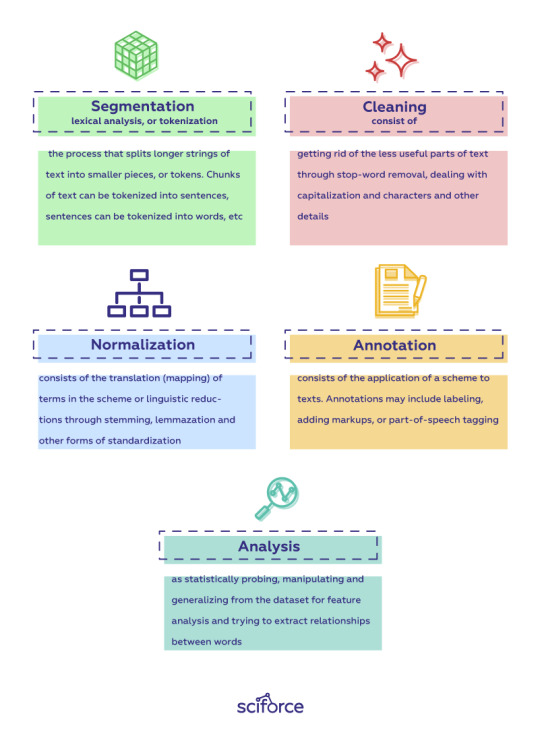

The preprocessing usually consists of several steps that depend on a given task and the text, but can be roughly categorized into segmentation, cleaning, normalization, annotation and analysis.

Segmentation, lexical analysis, or tokenization, is the process that splits longer strings of text into smaller pieces, or tokens. Chunks of text can be tokenized into sentences, sentences can be tokenized into words, etc.

Cleaning consists of getting rid of the less useful parts of text through stop-word removal, dealing with capitalization and characters and other details.