#ccdh

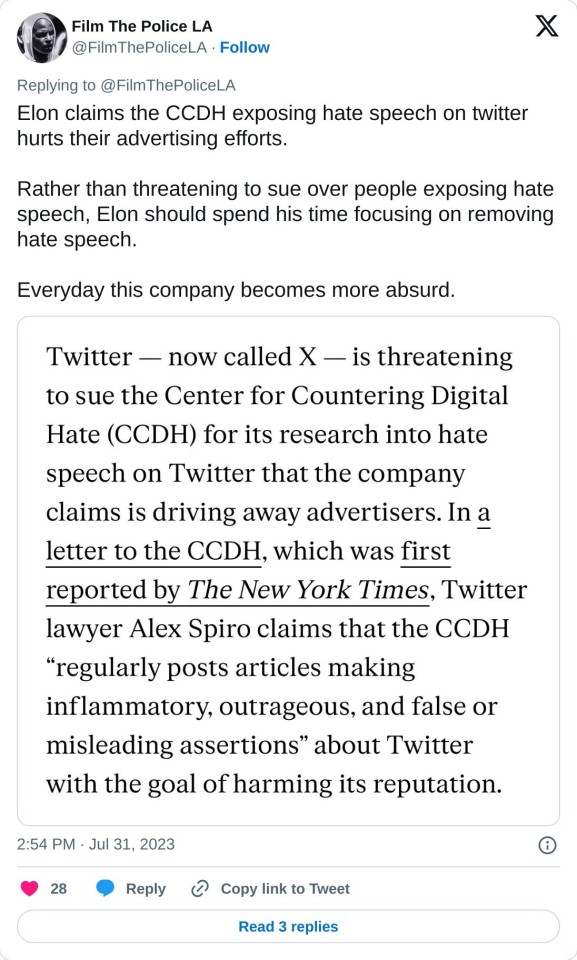

Text

A federal judge on Monday threw out a lawsuit by Elon Musk’s X that had targeted a watchdog group for its critical reports about hate speech on the social media platform.

In a blistering 52-page order, the judge blasted X’s case as plainly punitive rather than about protecting the platform’s security and legal rights.

“Sometimes it is unclear what is driving a litigation,” wrote District Judge Charles Breyer, of the US District Court for the Northern District of California, in the order’s opening lines. “Other times, a complaint is so unabashedly and vociferously about one thing that there can be no mistaking that purpose.”

“This case represents the latter circumstance,” Breyer continued. “This case is about punishing the Defendants for their speech.”

X’s lawsuit had accused the Center for Countering Digital Hate (CCDH) of violating the company’s terms of service when it studied, and then wrote about, hate speech on the platform following Musk’s takeover of Twitter in October 2022. X has blamed CCDH’s reports, which showcase the prevalence of hate speech on the platform, for amplifying brand safety concerns and driving advertisers away from the site.

In the suit, X claimed that it had suffered tens of millions of dollars in damages from CCDH’s publications. CCDH is an international non-profit with offices in the UK and US.

Because of its potential to destroy the watchdog group, the case has been widely viewed as a bellwether for research and accountability on X as Musk has welcomed back prominent white supremacists and others to the platform who had previously been suspended when the platform was still a publicly-traded company called Twitter.

#elon musk#lawsuit#x (social media platform)#watchdog group#hate speech#social media#district judge charles breyer#us district court#northern district of california#center for countering digital hate#ccdh#terms of service#twitter#brand safety concerns#advertisers#damages#non-profit#research#accountability#white supremacists#publicly-traded company#white supremacy#racism#social justice#equality#end hate#anti-racism#racial equality#stop racism#no to hate

56 notes


Text

Horse boy on a trash can, 1999 🐴🗑️

Really loving Crazy Diamond's Demonic Heartbreak so far, hope this cowboy gets a happy ending 🤞

Pose reference from a great orville peck interview/photoshoot with attitude

#hol horse#crazy diamond's demonic heartbreak#ccdh#jjba#jojos bizarre adventure#jojos bizzare adventure fanart#my art#i love this sad lil cowboy so much you guys

40 notes


Text

Elon Musk tries yet again to sue for defamation and contract interference, re: Media Matters

Repeating History

To refresh my memory, if not others', in July 2023 Elon Musk's Twitter Corp sued the London, England based Inc or Ltd, The Center for Countering Digital Hate. It has the classic tropes of a lawsuit designed to waste the court's time: a defendant the court shouldn't really have jurisdiction over, wild claims that cannot prove either defamation or any interference with contracts, and hurt pride. The attorney representing Twitter in that complaint, Jonathan Hawk of White and Case, claimed that the CCDH injured Twitter by "scraping" data and intimidating clients. Well, in all honesty, Hawk claimed that the CCDH targeted specific users to get them removed from Twitter. The pièce de résistance of this whole travesty of law was the part where Twitter pledged to enjoin 50 John Doe defendants that were a part of a "Large Dark Money group" that subverts American politics and promotes Covid-19 vaccines.

History at this point will note that this case can be entirely dismissed from court or continue shortly. Perhaps in light of this fact, Twitter didn't even try to bring Media Matters into this whole dark money group thing that was undermining Twitter's efforts to "promote free speech".

In fact, part of the CCDH's answer was:

“At its core, X Corp.’s grievance is not that the CCDH Defendants gathered public data in violation of obscure (and largely imagined) contract terms, but that they criticized X Corp. (forcefully) to the public,” -cnn (Online hate watchdog moves to dismiss lawsuit from Elon Musk’s X | CNN Business)

*It costs a decent chunk of money to get lawsuit forms, they don't have the PDF available yet, and I ain't paying for it.

While I am hesitant to take anything at face value in a lawsuit, the core of the CCDH complaint and the Media Matters complaint is Elon Musk's injured ego: one for suggesting his company promotes hate, and the other for straight up calling his company antisemitic.

Dark Money

At this juncture I wish to move into the subject of dark money. The dark money trope, because it is a conspiracy theory, is that elections are controlled by large sums of invisible money. It can't be traced, reported, or even noticed, except by those who have the will to see. It is an easy way of calling out a political opponent or group that is more popular and has more resources.

It is also slightly based in fact, like all good conspiracies. People just don't realize how expensive it is to run an election. That increasing price is seen as corruption, and not as the cost of the nearly constant electioneering parties do. They really do win a seat of office and immediately start running their reelection campaign.

The fact that Twitter has yet to name any groups must show that Hawk's theory of a large group of parties trying to undermine Twitter is false. Or he got fired, lemme bing that real quick. No, he still works for McDermott, White, and Case. I guess Elon isn't footing the bill, OR MAYBE THE DARK MONEY GROUPS GOT TO HIM.

Hate on Twitter

Twitter has been known for years to bring out the worst in people. They have a profile pic, a random name, and something in them wants to Sieg Heil. I can't explain it. It's like it is designed to remove your filter when you post, which is why I never did. I quit the site months ago, so I don't know how bad it is now, but right after Elon took over, the entire thing was crypto porn bots, it felt like.

Hate has always been a problem online, so it shouldn't be embarrassing for Elon to have to deal with it. All he needs to do is actually make small changes and announce they are a part of a larger plan, and boom: no longer antisemitic, at least in the public perception.

Elon's weirdness

Elon likes fringe United States politics. Stuff that isn't popular, but garners a lot of internet traffic. Think Reddit and 4chan hate politics. Spend enough time with Nazis and you start to sound like one. That happened to him. Unironically, his tweets out of context sound really bad. Like, on a scale of 1 to Hitler, a 6 or 7.

I think he doesn't understand how bad his statements come off. I am choosing not to repeat what he reposts, or amplifies, or the posts he makes for two reasons. One, it always sounds worse to leave it vague, and two, I don't want to share such vile things.

Media Matters lawsuit

Well, now to the real reason I write this. Yet another group is in the crosshairs of the self-proclaimed free speech absolutist. I don't really like what they do. I find their pithy messages, pithy. They are often without context and they draw wide conclusions.

On the other hand, this lawsuit alleges a general tort, or civil injury, but is unable to list any damages in the complaint. And protip: all the pontification in the world is useless before a judge if the other party was not in fact the proximate cause of your injury. Twitter, by its own argument, is the inflictor of its own grievous wound. They put ads next to bad tweets, not Media Matters. No user has that authority or contract with the advertiser.

Oh, and for good measure, Elon wants the article removed entitled Musk endorses antisemitic pro-Nazi conspiracy theory, X has been placing ads for Apple, et al, next to pro-Nazi content (link: As Musk endorses antisemitic conspiracy theory, X has been placing ads for Apple, Bravo, IBM, Oracle, and Xfinity next to pro-Nazi content | Media Matters for America), and not the other 19 articles the lawsuit mentions that harm Twitter and are all lies... etc. Again, they can't cite one instance of lies in the complaint, when no proof is necessary but recommended.

You see, they claim that Media Matters caused a business relationship to fall apart, but they can't prove that. Even if it were true, Media Matters only has to cite any of the thousands if not billions of reasons that Musk is a bad social media brand manager. Poof, the central concept and damage of the lawsuit becomes null. The entire lawsuit hinges on Musk proving that business relationships were irreparably and unfairly damaged by Media Matters, and good luck, it ain't happening.

All of this being said, Media Matters did not:

Pervert the truth to say what they want (paraphrasing)

Manufacture a fake user experience

Threaten Twitter in any way

Manipulate algorithms (they can't; Twitter controls those)

The clueless lawyers for X, instead of giving Elon a Xanax and ketamine, decided to file this suit. They claim that Media Matters manipulated the platform by creating a newish account and following some bad actors in the space. Bad actors amplified by Musk, followed Musk, and unbanned by Musk, btw. This is not manipulation; this is an authentic user experience. People post bad things and then you see them. These posts are promoted by the algorithm randomly anyway because they attract a lot of views and engagement. Twitter tries to claim it is fake, but Media Matters, as a matter of fact, cannot and could not invent a fake Twitter environment. Media Matters did not make Sieg Heil posts. They merely refreshed the Sieg Heil posts until advertisers appeared next to them, thereby honestly simulating the Twitter experience for the average user. These Nazi kinds of accounts just appear, in my experience; it doesn't matter if you are looking at cats or a Snoop Dogg post.

Media Matters simply showed screenshots of these companies advertising next to salacious materials. What threat did they make? Did they call for people to "cancel" IBM because they advertised on Twitter? I cannot recall any. And even if they did call for people to cancel a company, did anyone listen? Was any damage done? Well, good luck proving it.

Perverting the truth and distorting it isn't a real cause of action or defamation. Media Matters may have helped things along, but they didn't knowingly make a material misrepresentation of the facts as they understood them. I find it difficult to see how Musk, his attorneys, or anyone could really prove they knowingly lied.

The fact is that Twitter, under Musk's leadership, has continued to place ads next to posts that praise Hitler, among all sorts of things. Trying to claim users can curate them away is a false answer to the claim that advertisers are advertising next to things they would rather not be associated with. Imagine a Vacation Bible School ad being put up next to hardcore porn. I am sure it happened. If users can curate the experience to hide bad posts, then bad posts exist, and have ads next to them.

TURNS OUT THIS IS WHY TWITTER "SHADOWBANNED" PEOPLE. TO STOP ADVERTISERS FROM LEAVING BECAUSE SOMEONE WAS 420EPICYOLOHITLERDIDNOTHINGWRONG cat emoji, eggplant emoji picture of a horse pooping, picture of a lady getting beheaded in an anime. Troll face. advertisers for the most part don't want to be next to that.

Turns out Musk firing the people that handle this kind of thing really really bit him in the ass. Who Knew?

0 notes

Text

#the basis of musk's argument is so stupid hes claiming that openAI has already created AGI#something several magnitudes of order more sophisticated than all current LLMs#and is currently impossible to achieve without a gigantic leap forward in computing technology#that is impossible to achieve in this lifetime#he knows this. sam altman knows this#this lawsuit exists bc musk is still pissed that altman snubbed him and refused his buyout of openAI#and to distract from his failed lawsuit against the CCDH

48 notes


Text

I literally keep saying I'll read phf then straight up dont

#forgetful king 😔#i literally only remember at work#once i get home i fixate on my computer and draw or games#maybe just maybe#when i work my way through reading golden winds manga#i can get myself to do it then...#so sorry friends#it doesnt help that it sounds like it mainly focuses on guys i dont terribly care for or whatever#like ccdh ill read when it comes out because i loVE josuke and a manga formate is easier for me to keep focus on an read#ill get there#someday#maybe#someone needs to just like#throw it at my ass like a brick and ill read it#im lying they basically already did#gatta throw it harder

1 note


Text

Why disinformation experts say the Israel-Hamas war is a nightmare to investigate

The Israel-Hamas conflict has been a minefield of confusing counter-arguments and controversies—and an information environment that experts investigating mis- and disinformation say is among the worst they’ve ever experienced.

In the time since Hamas launched its terror attack against Israel last month—and Israel has responded with a weekslong counterattack—social media has been full of comments, pictures, and video from both sides of the conflict putting forward their case. But alongside real images of the battles going on in the region, plenty of disinformation has been sown by bad actors.

“What is new this time, especially with Twitter, is the clutter of information that the platform has created, or has given a space for people to create, with the way verification is handled,” says Pooja Chaudhuri, a researcher and trainer at Bellingcat, which has been working to verify or debunk claims from both the Israeli and Palestinian sides of the conflict, from confirming that Israel Defense Forces struck the Jabalia refugee camp in northern Gaza to debunking the idea that the IDF has blown up some of Gaza’s most sacred sites.

Bellingcat has found plenty of claims and counterclaims to investigate, but convincing people of the truth has proven more difficult than in previous situations because of the firmly entrenched views on either side, says Chaudhuri’s colleague Eliot Higgins, the site’s founder.

“People are thinking in terms of, ‘Whose side are you on?’ rather than ‘What’s real,’” Higgins says. “And if you’re saying something that doesn’t agree with my side, then it has to mean you’re on the other side. That makes it very difficult to be involved in the discourse around this stuff, because it’s so divided.”

For Imran Ahmed, CEO of the Center for Countering Digital Hate (CCDH), there have only been two moments prior to this that have proved as difficult for his organization to monitor and track: One was the disinformation-fueled 2020 U.S. presidential election, and the other was the hotly contested space around the COVID-19 pandemic.

“I can’t remember a comparable time. You’ve got this completely chaotic information ecosystem,” Ahmed says, adding that in the weeks since Hamas’s October 7 terror attack social media has become the opposite of a “useful or healthy environment to be in”—in stark contrast to what it used to be, which was a source of reputable, timely information about global events as they happened.

The CCDH has focused its attention on X (formerly Twitter), in particular, and is currently involved in a lawsuit with the social media company, but Ahmed says the problem runs much deeper.

“It’s fundamental at this point,” he says. “It’s not a failure of any one platform or individual. It’s a failure of legislators and regulators, particularly in the United States, to get to grips with this.” (An X spokesperson has previously disputed the CCDH’s findings to Fast Company, taking issue with the organization’s research methodology. “According to what we know, the CCDH will claim that posts are not ‘actioned’ unless the accounts posting them are suspended,” the spokesperson said. “The majority of actions that X takes are on individual posts, for example by restricting the reach of a post.”)

Ahmed contends that inertia among regulators has allowed antisemitic conspiracy theories to fester online to the extent that many people believe and buy into those concepts. Further, he says it has prevented organizations like the CCDH from properly analyzing the spread of disinformation and those beliefs on social media platforms. “As a result of the chaos created by the American legislative system, we have no transparency legislation. Doing research on these platforms right now is near impossible,” he says.

It doesn’t help when social media companies are throttling access to their application programming interfaces, through which many organizations like the CCDH do research. “We can’t tell if there’s more Islamophobia than antisemitism or vice versa,” he admits. “But my gut tells me this is a moment in which we are seeing a radical increase in mobilization against Jewish people.”

Right at the time when the most insight is needed into how platforms are managing the torrent of dis- and misinformation flooding their apps, there’s the least possible transparency.

The issue isn’t limited to private organizations. Governments are also struggling to get a handle on how disinformation, misinformation, hate speech, and conspiracy theories are spreading on social media. Some have reached out to the CCDH to try and get clarity.

“In the last few days and weeks, I’ve briefed governments all around the world,” says Ahmed, who declines to name those governments—though Fast Company understands that they may include the U.K. and European Union representatives. Advertisers, too, have been calling on the CCDH to get information about which platforms are safest for them to advertise on.

Deeply divided viewpoints are exacerbated not only by platforms tamping down on their transparency but also by technological advances that make it easier than ever to produce convincing content that can be passed off as authentic. “The use of AI images has been used to show support,” Chaudhuri says. This isn’t necessarily a problem for trained open-source investigators like those working for Bellingcat, but it is for rank-and-file users who can be hoodwinked into believing generative-AI-created content is real.

And even if those AI-generated images don’t sway minds, they can offer another weapon in the armory of those supporting one side or the other—a slur, similar to the use of “fake news” to describe factual claims that don’t chime with your beliefs, that can be deployed to discredit legitimate images or video of events.

“What is most interesting is anything that you don’t agree with, you can just say that it’s AI and try to discredit information that may also be genuine,” Chaudhuri says, pointing to users who have claimed an image of a dead baby shared by Israel’s account on X was AI (when in fact it was real) as an example of weaponizing claims of AI tampering. “The use of AI in this case,” she says, “has been quite problematic.”

586 notes


Text

Federal judge repeatedly kicks Elon Musk in the balls for 52 glorious pages, ruling that Musk suing a research group was basically a temper tantrum.

Turns out, his website really does traffic in hate speech. Who knew?

68 notes


Text

On March 25, Judge Charles Breyer granted CCDH’s motions and threw out the case. His decision leaves no doubt as to his views on X’s lawsuit:

“Sometimes it is unclear what is driving a litigation, and only by reading between the lines of a complaint can one attempt to surmise a plaintiff's true purpose. Other times, a complaint is so unabashedly and vociferously about one thing that there can be no mistaking that purpose. This case represents the latter circumstance. This case is about punishing the Defendants for their speech.”

52 notes


Text

F—k that draft dodging South African racist oligarch!

34 notes


Text

A New Type of War

While many still have not realized it, we are at war. The aggressors are government intelligence and security agencies that have turned their weapon of choice — information — against their own citizens.

And, while the organizations doing the CIA's dirty work may have changed, the basic organizational structure is the same as it was in 1967. Taxpayer money gets funneled through various federal departments and agencies into the hands of nongovernmental agencies that carry out censorship activities as directed. As recently reported by investigative journalists Alex Gutentag and Michael Shellenberger:6

"The Anti-Defamation League (ADL), the Center for Countering Digital Hate (CCDH), and the Institute for Strategic Dialogue (ISD) are nongovernmental organizations, their leaders say.

When they demand more censorship of online hate speech, as they are currently doing of X, formerly Twitter, those NGOs are doing it as free citizens and not, say, as government agents.

But the fact of the matter is that the US and other Western governments fund ISD, the UK government indirectly funds CCDH, and, for at least 40 years, ADL spied on its enemies and shared intelligence with the US, Israel and other governments.

The reason all of this matters is that ADL's advertiser boycott against X may be an effort by governments to regain the ability to censor users on X that they had under Twitter before Musk's takeover last November.

Internal Twitter and Facebook messages show that representatives of the US government, including the White House, FBI, Department of Homeland Security (DHS), as well as the UK government, successfully demanded Facebook and Twitter censorship of their users over the last several years."

What we have now is government censorship by proxy, a deeply anti-American activity that has become standard practice, not just by intelligence and national security agencies but federal agencies of all stripes, including our public health agencies.

September 8, 2023, the Fifth Circuit Court of Appeals upheld a lower court's injunction banning the White House, the surgeon general, the Centers for Disease Control and Prevention and the FBI from influencing social media companies to remove so-called "disinformation."7

According to the judges' decision,8 "CDC officials provided direct guidance to the platforms on the application of the platforms' internal policies and moderation activities" by telling them what was, and was not, misinformation, asking for changes to platforms' moderation policies and directing platforms to take specific actions.

"Ultimately, the CDC's guidance informed, if not directly affected, the platforms' moderation decisions," the judges said, so, "although not plainly coercive, the CDC officials likely significantly encouraged the platforms' moderation decisions, meaning they violated the First Amendment."

Unfortunately, as mentioned earlier, the U.S. government is not acting alone. Governments around the world and international organizations like the World Health Organization are all engaged in censorship, and when it comes to medical information, most Big Tech platforms are taking their lead from the WHO. And, if the WHO's pandemic treaty9 is enacted, then the WHO will have sole authority to dictate truth. Everything else will be censored.

36 notes


Text

Last week we got a letter from Elon Musk’s X Corp. threatening CCDH with legal action over our work exposing the proliferation of hate and lies on Twitter since he became the owner. Elon Musk’s actions represent a brazen attempt to silence honest criticism and independent research in the desperate hope that he can stem the tide of negative stories and rebuild his relationship with advertisers.

Since Musk took over Twitter in late 2022, CCDH has been studying and publishing research on the startling rise in hate speech, disinformation and incitement to harm on Twitter, which has been echoed by the independent findings of other civil society organizations, and researchers around the globe.

14 notes


Text

X Corp., the parent company of the social media platform formerly known as Twitter, filed a lawsuit in San Francisco federal court Monday against a nonprofit organization that monitors hate speech and disinformation, following through on a threat that had made headlines hours earlier.

The lawsuit, filed in U.S. District Court for the Northern District of California, accuses the Center for Countering Digital Hate (CCDH) of orchestrating a "scare campaign to drive away advertisers from the X platform" by publishing research reports claiming that the social media service failed to take action against hateful posts. The service is owned by the technology mogul Elon Musk.

In the filing, lawyers for X Corp. alleged that the CCDH carried out "a series of unlawful acts designed to improperly gain access to protected X Corp. data, needed by CCDH so that it could cherry-pick from the hundreds of millions of posts made each day on X and falsely claim it had statistical support showing the platform is overwhelmed with harmful content."

The complaint specifically accuses the nonprofit group of breach of contract, violating federal computer fraud law, intentional interference with contractual relations and inducing breach of contract. The company's lawyers made a demand for a jury trial.

The lawsuit was filed just hours after the CCDH revealed that Musk's lawyer, Alex Spiro, had sent the organization a letter on July 20 saying X Corp. was investigating whether the CCDH's "false and misleading claims about Twitter" were actionable under federal law.

In a statement to NBC News, CCDH founder and chief executive Imran Ahmed took direct aim at Musk, arguing that the Tesla and SpaceX tycoon's "latest legal threat is straight out of the authoritarian playbook — he is now showing he will stop at nothing to silence anyone who criticizes him for his own decisions and actions."

"The Center for Countering Digital Hate’s research shows that hate and disinformation is spreading like wildfire on the platform under Musk's ownership and this lawsuit is a direct attempt to silence those efforts," Ahmed added in part. "Musk is trying to 'shoot the messenger' who highlights the toxic content on his platform rather than deal with the toxic environment he's created.

"The CCDH's independent research won’t stop — Musk will not bully us into silence," Ahmed said in closing.

The research report that drew particular ire from X Corp. claimed that the platform had failed to take action against 99% of 100 posts flagged by CCDH staff members that included racist, homophobic and antisemitic content.

Musk has drawn fierce scrutiny since buying Twitter last year. Top hate speech watchdog groups and activists have blasted him for loosening restrictions on what can be posted on the platform, and business analysts have raised eyebrows at his seemingly erratic and impulsive decision-making.

The Center for Countering Digital Hate's research has been cited by NBC News, The New York Times, The Washington Post, CNN and many other news outlets.

Musk, who has been criticized for posting conspiratorial or inflammatory content on his own account, has said he is acting in the interest of "free speech." He has said he wants to transform Twitter into a "digital town square."

Musk has also claimed that hate speech on the platform was shrinking. In a tweet on Nov. 23, Musk wrote that “hate speech impressions” were down by one-third and posted a graph — apparently drawn from internal data — showing a downward trend.

13 notes


Text

‘The suit, against the nonprofit Center for Countering Digital Hate, focused on research the organization published in June. In one report, the CCDH looked at 100 different accounts subscribed to Twitter Blue and found that Twitter failed to act on 99% of hate posted by users. The group also questioned whether Twitter’s algorithm boosts “toxic tweets.”

Other CCDH research indicated that Twitter failed to act on 89% of anti-Jewish hate speech and 97% of anti-Muslim hate speech on the platform.

X is accusing the CCDH of using data that it didn’t legally possess to “falsely claim it had statistical support showing the platform is overwhelmed with harmful content.” The company is seeking a jury trial, unspecified monetary damages, and wants to block CCDH and any of its collaborators or employees from accessing data provided by X to social media-listening platform Brandwatch.’

-

Ah, the Johnson and Johnson approach: “If we sue enough of them researchers will be too scared to publish negative things about us.”

11 notes


Text

A US judge has thrown out a lawsuit brought by Elon Musk's social media firm X against a group that had claimed that hate speech had risen on the platform since the tech tycoon took over.

X had accused the Center for Countering Digital Hate (CCDH) of taking "unlawful" steps to access its data.

The US judge dismissed the case and said it was "evident" Mr Musk's X Corp did not like criticism.

X said it planned to appeal.

Imran Ahmed, founder and chief executive of CCDH, celebrated the win, saying Mr Musk had conducted a "loud, hypocritical campaign" of harassment and abuse against his organisation in an attempt to "avoid taking responsibility for his own decisions".

"The courts today have affirmed our fundamental right to research, to speak, to advocate, and to hold accountable social media companies" he said, adding that he hoped the ruling would "embolden" others to "continue and even intensify" similar work.

It is a striking loss for the billionaire, a self-described "free-speech absolutist".

The company, formerly known as Twitter, launched its lawsuit against CCDH in 2023, claiming its researchers had cherry-picked data to create misleading reports about X.

It accused the group of "intentionally and unlawfully" scraping data from X, in violation of its terms of service, in order to produce its research.

It said the non-profit group designed a "scare campaign" to drive away advertisers, and it demanded tens of millions of dollars in damages.

But in his decision Judge Charles Breyer said Mr Musk was "punishing the defendants for their speech".

Judge Breyer said X appeared "far more concerned about CCDH's speech than it is its data collection methods".

He said the company had "brought this case in order to punish CCDH for ... publications that criticised X Corp - and perhaps in order to dissuade others who might wish to engage in such criticism".

Mr Musk purchased the platform in 2022 for $44bn (£34bn) and swiftly embarked on a slew of controversial changes, sharply reducing its workforce with deep cuts to teams in charge of content moderation and other areas.

His own posts have also drawn charges of anti-semitism, a claim he has denied.

17 notes


Text

Imran Ahmed, chief executive officer of the Center for Countering Digital Hate (CCDH), a non-profit that fights misinformation: “American democracy itself cannot survive wave after wave of disinformation that seeks to undermine democracy, consensus and further polarizes the public.”

10 notes
