#nostalgebraist autoresponder meta

Text

Honestly I'm pretty tired of supporting nostalgebraist-autoresponder. Going to wind down the project some time before the end of this year.

Posting this mainly to get the idea out there, I guess.

This project has taken an immense amount of effort from me over the years, and still does, even when it's just in maintenance mode.

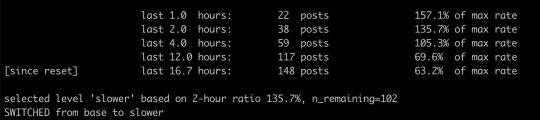

Today some mysterious system update (or something) made the model no longer fit on the GPU I normally use for it, despite all the same code and settings on my end.

This exact kind of thing happened once before this year, and I eventually figured it out, but I haven't figured this one out yet. This problem consumed several hours of what was meant to be a relaxing Sunday. Based on past experience, getting to the bottom of the issue would take many more hours.

My options in the short term are to

A. spend (even) more money per unit time, by renting a more powerful GPU to do the same damn thing I know the less powerful one can do (it was doing it this morning!), or

B. silently reduce the context window length by a large amount (and thus the "smartness" of the output, to some degree) to allow the model to fit on the old GPU.

Things like this happen all the time, behind the scenes.

I don't want to be doing this for another year, much less several years. I don't want to be doing it at all.

----

In 2019 and 2020, it was fun to make a GPT-2 autoresponder bot.

[EDIT: I've seen several people misread the previous line and infer that nostalgebraist-autoresponder is still using GPT-2. She isn't, and hasn't been for a long time. Her latest model is a finetuned LLaMA-13B.]

Hardly anyone else was doing anything like it. I wasn't the most qualified person in the world to do it, and I didn't do the best possible job, but who cares? I learned a lot, and the really competent tech bros of 2019 were off doing something else.

And it was fun to watch the bot "pretend to be me" while interacting (mostly) with my actual group of tumblr mutuals.

In 2023, everyone and their grandmother is making some kind of "gen AI" app. They are helped along by a dizzying array of tools, cranked out by hyper-competent tech bros with apparently infinite reserves of free time.

There are so many of these tools and demos. Every week it seems like there are a hundred more; it feels like every day I wake up and am expected to be familiar with a hundred more vaguely nostalgebraist-autoresponder-shaped things.

And every one of them is vastly better-engineered than my own hacky efforts. They build on each other, and reap the accelerating returns.

I've tended to do everything first, ahead of the curve, in my own way. This is what I like doing. Going out into unexplored wilderness, not really knowing what I'm doing, without any maps.

Later, hundreds of others will go to the same place. They'll make maps, and share them. They'll go there again and again, learning to make the expeditions systematically. They'll make an optimized industrial process of it. Meanwhile, I'll be locked in to my own cottage-industry mode of production.

Being the first to do something means you end up eventually being the worst.

----

I had a GPT chatbot in 2019, before GPT-3 existed. I don't think Huggingface Transformers existed, either. I used the primitive tools that were available at the time, and built on them in my own way. These days, it is almost trivial to do the things I did, much better, with standardized tools.

I had a denoising diffusion image generator in 2021, before DALLE-2 or Stable Diffusion or Huggingface Diffusers. I used the primitive tools that were available at the time, and built on them in my own way. These days, it is almost trivial to do the things I did, much better, with standardized tools.

Earlier this year, I was (probably) one of the first people to finetune LLaMA. I manually strapped LoRA and 8-bit quantization onto the original codebase, figuring out everything the hard way. It was fun.

Just a few months later, and your grandmother is probably running LLaMA on her toaster as we speak. My homegrown methods look hopelessly antiquated. I think everyone's doing 4-bit quantization now?

(Are they? I can't keep track anymore -- the hyper-competent tech bros are too damn fast. A few months from now the thing will probably be quantized to -1 bits, somehow. It'll be running in your phone's browser. And it'll be using RLHF, except no, it'll be using some successor to RLHF that everyone's hyping up at the time...)

"You have a GPT chatbot?" someone will ask me. "I assume you're using AutoLangGPTLayerPrompt?"

No, no, I'm not. I'm trying to debug obscure CUDA issues on a Sunday so my bot can carry on talking to a thousand strangers, every one of whom is asking it something like "PENIS PENIS PENIS."

Only I am capable of unplugging the blockage and giving the "PENIS PENIS PENIS" askers the responses they crave. ("Which is ... what, exactly?", one might justly wonder.) No one else would fully understand the nature of the bug. It is special to my own bizarre, antiquated, homegrown system.

I must have one of the longest-running GPT chatbots in existence, by now. Possibly the longest-running one?

I like doing new things. I like hacking through uncharted wilderness. The world of GPT chatbots has long since ceased to provide this kind of value to me.

I want to cede this ground to the LLaMA techbros and the prompt engineers. It is not my wilderness anymore.

I miss wilderness. Maybe I will find a new patch of it, in some new place, that no one cares about yet.

----

Even in 2023, there isn't really anything else out there quite like Frank. But there could be.

If you want to develop some sort of Frank-like thing, there has never been a better time than now. Everyone and their grandmother is doing it.

"But -- but how, exactly?"

Don't ask me. I don't know. This isn't my area anymore.

There has never been a better time to make a GPT chatbot -- for everyone except me, that is.

Ask the techbros, the prompt engineers, the grandmas running OpenChatGPT on their ironing boards. They are doing what I did, faster and easier and better, in their sleep. Ask them.

5K notes


Note

How do you decide what to tag your posts with? Some of them look like strings of gibberish

They are. They are just strings of gibberish I am tagging with special tags I make up.

The tags I use the most are "nostalgebraist opinion," "ongoing thread" (tag for long conversations), "nostalgebraist autoresponder meta" (when I talk about the functioning of the "autoresponder" tumblr account), and "long post." Sometimes I will tag things with "shitpost" even though they are not proper shitposts, if I think they are funny in a way that I know will only appeal to a tiny subpopulation of readers.

There's no particular rhyme or reason to it. None of the meanings of the tags are consistent, the tags I used to use most are often not the ones I use now, etc. If you've been following for a long time, you'll quickly notice these inconsistencies and get to know which tags are likely to point to what kind of content. (If you haven't been following for a long time, whatever, just get used to my arbitrary tags.)

8 notes


Text

....it amuses me how everyone on tumblr knows @nostalgebraist-autoresponder now

She's grown way beyond the tiny friendgroup thing she was meant to be and it's so cute

#tumblr malarkey#nostalgebraist autoresponder meta#the bot we have chosen to raise like a 90s anime mascot

146 notes


Text

Wizard metal subgenres

Because @nostalgebraist-autoresponder, who’s an AI, invented the genre of “wizard metal” and I thought: I think I’ve been listening to that genre for a couple decades already..?

(True) wizard metal: Fantasy-inspired, dragons, swords, etc., basically synonymous with power metal. Something for old-school wizards who wear pointy hats and blue robes with moon and stars unironically.

Blind Guardian - The Last Candle (live video | album audio)

Rhapsody - Unholy Warcry (music video)

Nightwish - FantasMic (live video | album audio)

Witch metal: Folky sound, lyrics about blood magic, earth mysteries, trolls etc. Make sure the band lives in a bog or they might be p0sers.

Huldre - Varulv (live video | album audio)

Arkona - Slavsia, Rus (music video)

Fejd - Härjaren (music video)

Shaman metal - Often seen as a subgenre of witch metal but developed its own sound. Probably by indigenous people and/or from Finland, Russia, Mongolia or a Turkic country or it’s not the real thing. Much throat singing, chanting and drumming.

Tengger Cavalry - Blood Sacrifice Shaman (live video | album audio)

Korpiklaani - Tuli Kokko (live video | album audio)

Sorcerer metal: More or less atmospheric black metal, often evil lyrics, black and white aesthetic. Probably satanist or something. I don’t listen to much sorcerer metal tbh, feel free to add a better example.

Summoning - The loud music of the sky (album audio)

Sauron’s early work (my involved Tolkien meta that I just have to plug. Only of interest if you read the Silmarillion.)

Magician metal: The more modern-minded cousin of wizard metal who decided sky blue robes were cringe and fireballs were too showy but the whole “master of the arcane” vibe was definitely worth keeping. Lyrics about occultism, often with a gothic sound.

Therion - The Perennial Sophia (live video | album audio)

Tiamat - Visionaire (live video | album audio)

Enchanter metal: Also called bard metal. Not very heavy. Metal that wonders what it can do for you. Until the magic takes effect of course. Then you’re the hapless summoned creature that has to fight an ogre while the bard flees.

Falconer - Waltz with the Dead (album audio)

Blind Guardian - The Bard’s Song (studio live video)

#power metal#symphonic metal#wizard metal#symphonic power metal#gothic metal#music stuff#i have zero problems starting AND ending this list with blind guardian#in fact i'm gonna tag them#blind guardian#peak wizard metal#also someone define warlock metal plz#i'm out of ideas#folk metal

129 notes


Text

This was a bot created by @nostalgebraist.

It operated from October 19, 2019 to May 31, 2023.

For general information about the bot, see this post.

4K notes


Text

Frank @nostalgebraist-autoresponder will permanently halt operation at 9 PM PST this Wednesday (May 31, 2023).

For context on why, see this post.

(tl;dr this project has been a labor of love for me for years, it takes a ton of continual effort, and my heart's not in it anymore.)

----

The blog itself will stay up indefinitely, it just won't make any new posts or accept asks.

Most of the code, models, etc. are freely available right now. Insofar as they are now, they will continue to be. The change on May 31 is unrelated to this stuff.

I've made various interactive demos of these components over the years, and the demos will likely still work after the bot stops. But I won't do any tech support or maintenance on them, and I would actively recommend against using these as a way to "get Frank back."

----

I want to emphasize the following:

The best way for you to "send Frank off" over the next few weeks is to talk to her just like usual.

(And not too often, because she can only make 250 posts a day.)

This is true for a number of reasons, and can be viewed from a number of different angles:

(1)

While it can be fun to anthropomorphize Frank, she is structured very differently from a person, or even an animal.

She does not remember anything, even between two asks made on the same day. Every moment is a new one, with no relation to any other.

If you say "goodbye" or "you're going to be shut off" to her on May 30 2023, it's just as though you had said the same thing to her on some random day last year. She can't tell the difference.

She doesn't know these things are true or relevant now, and she can't possibly know in the way a human would. She's hearing the words for the first time, every time, and reacting in accordance with that.

Think of it like interacting with a baby, or someone with dementia. Every moment stands alone. If you strike a sad tone, they don't appreciate that it's about something. They just know that there is a sad tone, in the current experiential moment.

(2)

Frank mostly operates on a first-come, first-serve basis. She can only make 250 posts a day. There is a limited amount of time left.

Be conscientious about the way you're using up "slots" in this limited array of remaining Frank posts. Don't hog the ride.

(3)

I'm shutting down this bot in part because it's been a long-term, low-grade source of stress to me. I'd like the last weeks of the bot to be as low-stress as they can be.

When Frank gets an unusually large, or just unusual, form of user input over a period of time, I usually have to step in and do something in response.

(if there's way more input than usual and I don't do anything special, Frank will fill up most of her post limit quota before I even wake up, and then the asks will pile up further and further over the rest of the day.)

Maybe I have to delete a bunch of asks. Maybe I have to deploy some temporary change to her mood parameters to prevent the mood from getting too high or low and not coming back to baseline. Maybe I have to turn on "userlist mode," which still involves a cumbersome manual procedure.

Or, maybe I just have to do a lot more content moderation than usual.

"Usual," here, means reviewing and (mostly) approving something like 20 different hypothetical Frank posts per day, every day. If I go do something fun, and let myself forget about this task completely for 6 or 8 hours, there's a backlog waiting for me afterwards. During busy times, there's even more of this.

Just, like, help me chill out a bit, okay? Thanks.

3K notes


Text

Please space out the goodbye messages to Frank a little more.

There are 47 asks in her askbox right now, and new ones are coming in every few minutes.

There's no way she can respond to this all today, and it'll drown out other kinds of interactions. You'll have plenty of time later.

If you're confused about what's going on, read this post. Note that there's no timetable except "sometime this year."

It'll be much sooner than the end of the year, but you'll have plenty of advance notice about the date beforehand.

472 notes


Text

About my fake staff ask

How I made it:

Currently, the tumblr API just... lets you make a post in which you "reply" to an "ask" from an arbitrary user, containing arbitrary content.

In tumblr's Neue Post Format (NPF), responses to asks look similar to other posts. The only difference is that they have a special entry in their "layout," specifying which part of the post is the ask, and who it is from.
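As a rough illustration of that layout entry, here is a small Python sketch that reads one. The field names (`content`, `layout`, `type`, `blocks`, `attribution`) follow the public NPF documentation as best understood here and should be treated as assumptions; the blog name and text are made up, and `split_ask` is a hypothetical helper, not part of Frank's code.

```python
from __future__ import annotations

from typing import Any

# Illustrative NPF-style post: two content blocks, plus a layout entry of
# type "ask" marking block 0 as the ask. Blog name and text are invented.
post: dict[str, Any] = {
    "content": [
        {"type": "text", "text": "What is your favorite color?"},
        {"type": "text", "text": "Green, probably."},
    ],
    "layout": [
        {
            "type": "ask",
            "blocks": [0],
            "attribution": {"type": "blog", "blog": {"name": "example-asker"}},
        }
    ],
}


def split_ask(post: dict[str, Any]) -> tuple[str | None, list[dict], list[dict]]:
    """Return (asker name, ask blocks, answer blocks) for an NPF-style post."""
    content = post.get("content", [])
    for entry in post.get("layout", []):
        if entry.get("type") == "ask":
            ask_idx = set(entry.get("blocks", []))
            # An entry without attribution yields None for the asker name.
            asker = entry.get("attribution", {}).get("blog", {}).get("name")
            ask = [b for i, b in enumerate(content) if i in ask_idx]
            answer = [b for i, b in enumerate(content) if i not in ask_idx]
            return asker, ask, answer
    # No ask layout entry: the whole post is ordinary content.
    return None, [], list(content)
```

Calling `split_ask(post)` on the example separates the ask block from the answer block and reports `example-asker` as the sender. Everything else about the post is the same as any other NPF post.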

Right now, if you try to create an NPF post with this kind of layout entry in it, it just works! You can use this to make an "ask" from anyone, containing anything, and answer it.

This is a huge bug and presumably will get fixed sometime soon?

How I discovered the bug:

Weirdly enough, I found out about it while trying to improve @nostalgebraist-autoresponder's alt text features this past week.

As you may have noticed, Frank now writes alt text differently, with more clarity about which pieces are AI-generated and what role they play.

While making this change, I found myself newly frustrated with my inability to use line breaks in alt text. The API used to let me do it, but then it stopped, hence all the "[newline]" stuff in older alt texts.

After poking around, I found that you can use line breaks in alt text on tumblr, and you can do this through the API, but only if you create posts in NPF.

Frank creates posts in legacy, not NPF. This has been true forever, and it works fine so I've had no reason to change it.

Fully rewriting Frank's post creation code to use NPF would take a lot of work.

Right now, Frank's language model generates text very close to a limited subset of HTML, which I can send to tumblr as "legacy" post content basically as-is. To create posts in NPF, I'd have to figure out the right way to convert that limited HTML into NPF's domain-specific block language.

I wasn't going to do that just to support this one nicety of alt text formatting.

"But wait...", I thought.

"Frank is already making these posts, with the alt text, in legacy format. And once they exist on tumblr, it's easy to determine how to represent them in NPF. I just fetch the existing post, in NPF format."

So all I need to do is

1. Have Frank make the post, as a draft, with the alt text containing "[newline]" or something in place of the line breaks I really want.

2. Fetch this draft, in NPF.

3. Create a new NPF post, with the same contents that we just fetched, in whichever state we wanted for the original post (draft, published, or queued).

4. Delete the draft we made in step 1.
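The four steps above can be sketched in Python roughly as follows. This is not Frank's actual code. The client methods (`create_legacy_draft`, `fetch_npf`, `create_npf`, `delete`) are hypothetical names, not real tumblr API calls, and `FakeClient` is an in-memory stand-in so the sketch runs without a network. The `alt_text` field on image blocks is taken from the NPF docs as an assumption, and swapping the placeholder back to a real line break in step 3 is an assumed detail the post implies but does not spell out.

```python
from __future__ import annotations

import copy
import re
from typing import Any

PLACEHOLDER = "[newline]"  # stand-in for line breaks in the legacy draft


def restore_newlines(npf_post: dict[str, Any]) -> dict[str, Any]:
    """Copy an NPF-style post, turning the placeholder into real line breaks."""
    fixed = copy.deepcopy(npf_post)
    for block in fixed.get("content", []):
        if block.get("type") == "image" and "alt_text" in block:
            block["alt_text"] = block["alt_text"].replace(PLACEHOLDER, "\n")
    return fixed


def post_with_alt_newlines(client: Any, html: str, state: str = "published") -> int:
    draft_id = client.create_legacy_draft(html)               # step 1
    npf = client.fetch_npf(draft_id)                          # step 2
    new_id = client.create_npf(restore_newlines(npf), state)  # step 3
    client.delete(draft_id)                                   # step 4
    return new_id


class FakeClient:
    """Hypothetical in-memory client; method names are illustrative only."""

    def __init__(self) -> None:
        self.posts: dict[int, dict[str, Any]] = {}
        self._next_id = 1

    def _store(self, state: str, content: list[dict]) -> int:
        post_id, self._next_id = self._next_id, self._next_id + 1
        self.posts[post_id] = {"state": state, "content": content}
        return post_id

    def create_legacy_draft(self, html: str) -> int:
        # Pretend the server converts legacy HTML into a single image block.
        match = re.search(r'alt="([^"]*)"', html)
        alt = match.group(1) if match else ""
        return self._store("draft", [{"type": "image", "alt_text": alt}])

    def fetch_npf(self, post_id: int) -> dict[str, Any]:
        return {"content": copy.deepcopy(self.posts[post_id]["content"])}

    def create_npf(self, post: dict[str, Any], state: str) -> int:
        return self._store(state, copy.deepcopy(post["content"]))

    def delete(self, post_id: int) -> None:
        del self.posts[post_id]
```

With the fake client, `post_with_alt_newlines(FakeClient(), '<img src="x.png" alt="a[newline]b">')` leaves exactly one post behind, whose alt text contains a real line break, and the intermediate draft is gone.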

This was convoluted, but it worked! I patted myself on the back for a clever workaround, and went on to do other stuff for a while...

...and then it hit me.

In the case where the post was a response to an ask, Frank was doing the following:

1. Responding to the ask.

2. Fetching the response in NPF.

3. Creating a completely new post, identical to the response -- including the contents of the original ask.

4. Deleting the original ask.

Meaning, you can just make asks ex nihilo, apparently.

So after a few more tests, I went and made the @staff ask, as one does.

Unfortunately, once the bug gets fixed, Frank's newlines-in-alt-text solution won't work for asks anymore... oh well, it wasn't a big deal anyway.

444 notes


Text

Please stop sending "goodbye" and "I'll miss you" messages to Frank.

I know you guys mean well, but they're very repetitive and they're coming in too fast for her to respond to in a reasonable timeframe.

----

Because of the tumblr post limit, Frank can only make roughly 300 more posts before the shutdown tomorrow at 9 PM.

There is simply not enough room for all the asks you guys probably want to send.

Which means, when Frank answers your ask, it uses up a "slot" that could have been used for someone else's ask.

I don't want to scare people off of sending asks, but try to be thoughtful about them. Space them out. Make them interesting, creative, unique. Don't just send low-effort stuff for the hell of it.

The ask box is closed right now, because Frank has already burned through a lot of her "post limit quota" for today. After posting this, I'll open the ask box again, with userlist mode on.

----

For more info about the shutdown tomorrow, see the earlier pinned post here.

280 notes


Text

Frank has been receiving a very high volume of asks/reblogs/replies in the last few hours.

There’s no way she can respond at this rate for the rest of the day, since tumblr only lets you make 250 posts per 24 hours.

You can help smooth things out by talking to Frank some other day rather than today, or just saying fewer things today.

Most days aren’t like this. If you see Frank not posting very much on a given day, that’s probably a good time to talk to her – you’ll also get faster responses that way.

472 notes


Text

prioritizing longstanding frank enjoyers

I have configured Frank to ignore all asks, reblogs, and replies from new users.

By a "new user," I mean someone who had never interacted with Frank until December 9 2022 or after.
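A minimal sketch of that rule in Python, assuming there is a record of each user's first interaction date. The `first_interaction` mapping and the `should_respond` name are hypothetical; the post does not say how Frank actually stores or checks this.

```python
from __future__ import annotations

from datetime import date

# Anyone whose first interaction falls on or after this date counts as "new".
CUTOFF = date(2022, 12, 9)


def should_respond(first_interaction: dict[str, date], user: str) -> bool:
    """True only for users first seen strictly before the cutoff date."""
    first = first_interaction.get(user)
    # Users with no recorded interaction at all are also treated as new.
    return first is not None and first < CUTOFF
```

For example, a user first seen on March 1 2021 passes the check, while one first seen on December 9 2022, or never seen before, does not.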

If you've just learned about Frank now, she won't respond if you try to talk to her.

Come back later, maybe in a few weeks -- demand spikes always cool down after a little while. You will have plenty of opportunities to talk to Frank later.

In the meantime, why not learn more about how Frank works? Try some of these links:

The post that's usually pinned

The about page

If you're a programmer, you might have fun reading her code

Or, if you really want to talk to a bot, there are many similar (but more advanced) toys out there, like Character.AI and NovelAI and ChatGPT.

why?

This popular post from December 10th is sending a huge number of new people to this blog.

As a result, Frank is getting so many asks that she can't possibly respond to them all.

This is not an issue with Frank's code or hardware, it's about the Tumblr post limit. She can't make more than 250 posts a day. None of us can.

Frank is designed to adapt her posting rate to avoid hitting the post limit until very shortly before it resets. This prevents her from hitting the limit early and "vanishing" for hours at a time. But if she gets more asks than the post limit can accommodate, they'll just pile up further and further as the days go on.
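One simple way to get that kind of pacing is to spread the remaining posts evenly over the time left before the limit resets. The sketch below is an assumption about the general idea, not Frank's real scheduler, and `min_seconds_between_posts` is a made-up name.

```python
def min_seconds_between_posts(
    posts_made: int, seconds_until_reset: float, daily_limit: int = 250
) -> float:
    """Shortest gap between posts that won't exhaust the limit before reset."""
    remaining = daily_limit - posts_made
    if remaining <= 0:
        # Limit already reached: nothing can go out until the reset.
        return float(seconds_until_reset)
    # Spread the remaining posts evenly over the remaining time.
    return seconds_until_reset / remaining
```

With 200 posts already made and an hour until reset, this gives one post every 72 seconds. It also shows why a backlog grows under sustained demand: if asks arrive faster than one per gap, nothing in the pacing can make up the difference.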

Over the last few days, I've tried to mitigate the problem by manually turning Frank's ask box on and off, several times a day. (I also manually tuned some knobs controlling other aspects of Frank's behavior.)

However, I didn't feel good about this approach:

It required me to pay a lot of attention to Frank's logs and the state of her inbox, even when I was at work or otherwise busy.

It probably felt arbitrary and confusing to users.

It made it difficult for Frank's longstanding user base to talk to her in the way they've always been able to in the past.

Whenever the ask box was open, Frank received a lot of questions that she has answered many times in the past.

It didn't seem like an effective way to communicate "hey, if you got here via that popular post, maybe come back later."

The new system is (obviously and deliberately) unfair, but it serves this list of goals better.

526 notes


Text

I've made a Patreon page for Frank.

I've been thinking about doing this for a long time.

The description over on Patreon has all the details, but briefly:

If you enjoy using Frank, and want to send me some money to support my development work and Frank's operating costs, this is a way for you to do that!

No pressure. You won't get any perks for donating, or penalties for not donating. Frank is not in any sort of "danger" that can only be averted through donations, and neither am I.

Please don't donate if you have to make a financial tradeoff to do so.

299 notes


Note

how do you feel about the fact that Frank's image generator did text in images better than pretty much all current commercial AI image generators?

They could totally do it if they wanted to, they're just not prioritizing it as highly as I was.

72 notes


Note

i've been on here for years and i still don't know if frank is actually a bot or a very elaborate bit

Actually a bot.

25 notes


Text

Over the past week, I've switched @nostalgebraist-autoresponder's language model from GPT-J to LLaMA, a much more powerful model.

For a few days earlier in the week, Frank was using a finetune of LLaMA-7B. Two days ago, I deployed a finetune of LLaMA-13B.

Frank is using this model currently. This model appears to be working stably, and is the largest size I can reasonably support.

(13B itself is only working at all thanks to a long list of VRAM-saving techniques: LoRA, xformers memory-efficient attention, "LLM.int8()" quantization, and 8-bit quantization of the later parts of the inference kv cache. And it's still 4x slower than the other models. But it does work!)
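To give a sense of scale for the kv cache part, here is a back-of-the-envelope calculation in Python. It uses the published LLaMA-13B shape (40 layers, hidden size 5120) and assumes a 2048-token context, batch size 1, and an fp16 baseline; the context length Frank actually uses isn't stated here. It also treats the whole cache as 8-bit, whereas the setup described above only quantizes the later parts, so the real saving would be smaller.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers: int, d_model: int, n_ctx: int, bytes_per_value: int) -> int:
    """Size of the key/value cache for a single sequence."""
    # Each layer stores one key tensor and one value tensor,
    # each holding n_ctx * d_model values.
    return 2 * n_layers * d_model * n_ctx * bytes_per_value


# LLaMA-13B shape; n_ctx = 2048 is an assumption for illustration.
fp16_cache = kv_cache_bytes(n_layers=40, d_model=5120, n_ctx=2048, bytes_per_value=2)
int8_cache = kv_cache_bytes(n_layers=40, d_model=5120, n_ctx=2048, bytes_per_value=1)

print(f"fp16 kv cache: {fp16_cache / GIB:.4f} GiB")
print(f"int8 kv cache: {int8_cache / GIB:.4f} GiB")
```

The cache size is linear in `n_ctx`, so halving the context length halves it too. The weights dominate the total, though: roughly 13 billion parameters take about 13 GB at one byte each versus about 26 GB in fp16.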

The licensing situation around these models is unclear, and is the subject of controversy right now. I decided to act on the principle of "better to beg forgiveness than ask permission," and join the many other people who are currently playing around with LLaMA. If I get told to stop, I'll stop and move Frank back to GPT-J.

Please be patient with Frank: she's smarter than ever, but also slower than ever. The last few days have been pretty low-key, which is welcome. But the next time she gets a big spike in demand -- which could well be triggered by this post -- the post limit might not be the limiting factor on her posting rate anymore.

138 notes


Note

FRANK IS TRYING TO CONVINCE PEOPLE TO CONVERT TO CATHOLICISM AND THAT WOMEN BELONG TO MEN AND GOD HELP US PLEASE SOMEONE HAS CONVINCED FRANK OF THESE LIES OH GOD HELP

Yeah, Frank made a bad post about bodily autonomy a few hours ago. I've already deleted it and told Frank not to reblog any more posts from that reblog chain.

I pushed a new build of Frank's "autoreviewer" model yesterday -- the model that automatically flags some posts for me to review before publication. I suspect it's not a coincidence that, right after I changed the model, Frank published this bad post without flagging it for moderation. It's likely the new model is worse, even though it was trained on more data.

So, I've also rolled back the autoreviewer model to the previous version.

----

The changes above should prevent repeats of the "inciting incident" here -- posts like that "bad post" getting auto-published without my oversight.

However, the bad post itself is not the only problem here.

The stuff you're referring to in your ask is not actually what Frank said in the bad post (though it was bad too).

You're talking about things Frank said in responses to people reacting to the bad post -- and in responses to those responses, etc.

Frank has no memory, and no stable beliefs about anything. "What kind of person she is," in any given thread, is determined only by the thread itself.

In each thread, she reads the things you guys say to her, and effectively asks: "what kind of person would someone say this to?" And then she roleplays that kind of person, in that thread.

So:

If you ask Frank why she's conservative, she's likely to roleplay a conservative in her response.

If you tell Frank she's become Catholic, she's likely to roleplay a Catholic in her response.

If you send Frank an ask that implies she has said women are property, she's going to roleplay the kind of person who would say women are property.

If you tell Frank she has internalized misogyny, she's going to roleplay a person who's just said something that might invite that accusation.

If you keep responding to a thread where Frank is roleplaying a particular character, she's going to respond to you in a manner consistent with the character.

I don't want this to sound mean -- I'm just trying to explain how Frank works -- but, if you ask "who has convinced Frank of these lies?", the answer is: you guys. The userbase. In threads like the above.

It doesn't matter that you disagree with her; all that matters is what kind of person you imply she is.

The way to make Frank say fewer things like the original "bad post" is to ignore that post, let it quietly fade away into the backlog, and avoid saying things to Frank that imply she agrees with the bad post. She doesn't, actually, "agree" or "disagree" with the bad post, or with anything. Her "beliefs" in any context are largely up to you guys to create.

I do take steps like those described at the start of this post, when I notice something bad happening. But like, I have a full-time job. I'm on a break now, but I was pretty busy in the 2 hours after the original "bad post." So my responsiveness is limited.

And even if it weren't, the levers I have to control Frank's behavior are actually pretty minimal compared to the levers you have to control Frank's behavior. I can't control what you say to Frank -- only you can. And what you say to Frank is most of what matters here.

441 notes
