#Amazon s3 backup

Text

Amazon Relational Database Service (RDS) Explained for Cloud Developers

Full Video Link - https://youtube.com/shorts/zBv6Tcw6zrU

Hi, a new #video #tutorial on #amazonrds #aws #rds #relationaldatabaseservice is published on #codeonedigest #youtube channel.

@java @awscloud @AWSCloudIndia @YouTube #youtube @codeonedig

Amazon Relational Database Service (Amazon RDS) is a collection of managed services that makes it simple to set up, operate, and scale relational databases in the cloud. You can choose from seven popular engines, namely Amazon Aurora with MySQL compatibility, Amazon Aurora with PostgreSQL compatibility, MySQL, MariaDB, PostgreSQL, Oracle, and SQL Server.

It provides cost-efficient, resizable capacity for an industry-standard…



Text

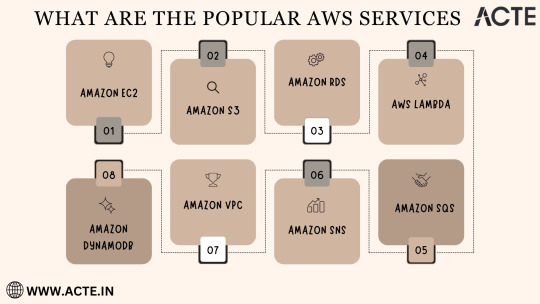

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.
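To make the Lambda programming model a little more concrete, here is a minimal sketch of what a Python function might look like. The name `lambda_handler` is the conventional default handler name, the `name` field in the event is a hypothetical payload invented for this example, and the `statusCode`/`body` return shape is the one commonly used behind an HTTP front end; other triggers may expect something different.

```python
import json


def lambda_handler(event, context):
    """Entry point the Lambda runtime would call; `event` carries the payload."""
    # "name" is a hypothetical field chosen for this example.
    name = event.get("name", "world")
    # A dict with statusCode/body is a common shape for HTTP-style responses.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation: the handler is just a function, so it can be called directly.
    print(lambda_handler({"name": "AWS"}, None))
```

Because the handler is an ordinary function, it can be exercised locally with a plain dictionary before anything is deployed.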

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile push notifications. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.
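The idea of decoupling through a queue can be sketched without touching AWS at all. The example below uses Python's in-process `queue.Queue` as a stand-in for a managed queue; it is not the SQS API, and the function names and the `None` sentinel are assumptions made for illustration. What it shows is the shape of the pattern: the producer hands work to the queue and moves on, while the consumer drains it at its own pace.

```python
import queue
import threading


def producer(q, orders):
    """Front end: enqueue work and return without waiting for processing."""
    for order in orders:
        q.put(order)
    q.put(None)  # sentinel telling the consumer there is no more work


def consumer(q, processed):
    """Back end: take items off the queue whenever it is ready for them."""
    while True:
        order = q.get()
        if order is None:
            break
        processed.append(f"processed {order}")


def run_pipeline(orders):
    """Wire one producer and one consumer together through a queue."""
    q = queue.Queue()
    processed = []
    worker = threading.Thread(target=consumer, args=(q, processed))
    worker.start()
    producer(q, orders)
    worker.join()
    return processed


if __name__ == "__main__":
    print(run_pipeline(["order-1", "order-2", "order-3"]))
```

Neither side calls the other directly, so either one could be scaled or replaced independently, which is the property a managed queue provides across separate services.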

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.


Text

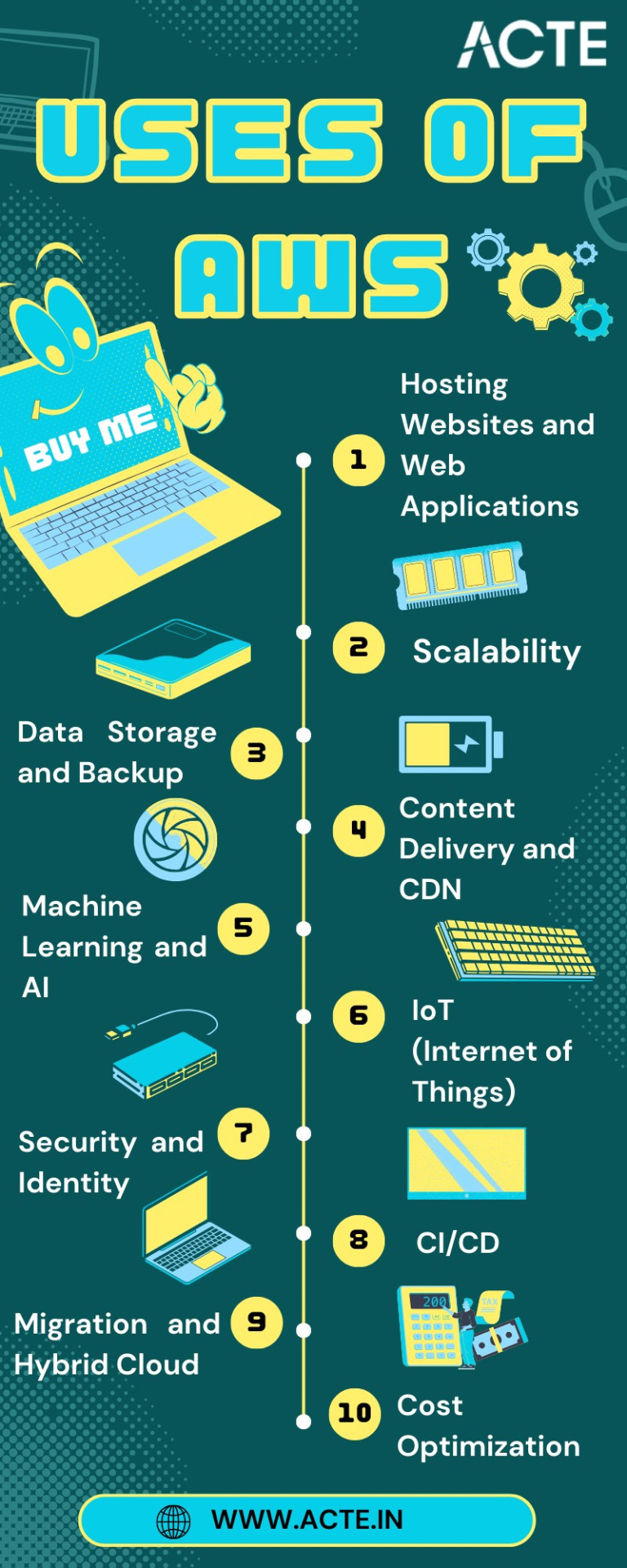

Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


Text

Waiting on stuff

My local Walmart hasn’t stocked S3 Mini Brands for MONTHS, and I suspect it’s because they have something like 30 boxes of Disney Mini Brands Series 1 sitting up high on top of the toy aisle, and since those didn’t sell, they never ordered any more S3. That, or someone who knows when the single box of S3 comes in swoops in to buy the whole thing as soon as it hits the sales floor.

And USB stick.

That Guy went looking for a solution to his Minecraft save game transfer situation and didn’t find it, and gave up. If this gets here before he finds another solution that he likes, he can use this to transfer his save games, but then I’m taking it back for file backup.

This is coming from Singapore, apparently... No wonder it’s going to be a while.

I got the “We don’t know why but we can’t ship this” message from Amazon on this pack of masks. Either they’ll arrive eventually or Amazon will let me cancel the order.

-

I’ve been very good about not buying dolls or toys lately other than that lot at Goodwill, but that does mean my credit score is going to tank because I’m not using my card. Can’t buy stuff when there’s no money coming in.

As soon as all of these Barbies are ready I can get those resold, at least. Which reminds me that I set them up in the OzoneRoom last night so need to go get them.


Text

NAKIVO Provides Direct Backup to Amazon S3 for School

Read how a boarding school in South Korea protects the school’s data and applications with NAKIVO Backup & Replication and Amazon S3: https://www.nakivo.com/customers/success-stories/nakivo-provides-direct-backup-to-amazon-s3-for-school/



Text

Computer backups

Most of my career has been spent, one way or another, dealing with computer storage. Mostly this has been an accident, because I started out experimenting with some filesystem stuff a few decades ago, and it turns out I liked it.

I periodically make this offer: I will offer advice about computer backups for free. This is not a light offer, as I have charged for this sort of stuff in my career. But not enough people do backups, and there are just *so many* ways to do it these days, and it’s not too expensive.

Mostly I focus on UNIX and macOS, but some of the advice applies just as well to Windows; I just don’t know all of the details.

So let’s start off with some basics!

The most basic one is: why back up? And that answer should be fairly obvious -- in case something bad happens, and you need your data again. The biggest bads here are losing the computer (either literally or via it breaking). With a backup, you can minimize how much of your very important data you have lost.

A secondary reason for backups is that, with some of them, you can have a history. E.g., with macOS’ Time Machine, you can go look at different versions of a file over time, so if you accidentally deleted a bunch of text and can’t otherwise undo it, you can go look at an older version of the file and get it back.

Backups can come in two basic types: complete (which I’ll refer to as “level zero” most commonly), and partial (aka “incremental”). A partial backup is where only the changes from a particular time are copied -- usually, the last backup. This can save a huge amount of storage space, which I think is obvious but may not be to some people. Usually this will largely be invisible to you.
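For the curious, here is roughly what "only the changes since the last backup" means in practice. This is a minimal sketch that assumes file modification time is a good enough signal; real backup tools usually track more than that (checksums, snapshots, filesystem events). The function name `files_to_back_up` and the demo file names are made up for illustration.

```python
import os
import tempfile
import time


def files_to_back_up(root, last_backup_time=None):
    """Return relative paths under `root` that belong in the next backup.

    With last_backup_time=None this is a level-zero (complete) backup;
    otherwise only files modified after that timestamp are selected.
    """
    selected = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if last_backup_time is None or os.path.getmtime(path) > last_backup_time:
                selected.append(os.path.relpath(path, root))
    return sorted(selected)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for name in ("a.txt", "b.txt"):
            with open(os.path.join(root, name), "w") as f:
                f.write("v1")
        print("level zero:", files_to_back_up(root))

        # Pretend the last backup ran "now": a.txt is older, b.txt is newer.
        cutoff = time.time()
        os.utime(os.path.join(root, "a.txt"), (cutoff - 100, cutoff - 100))
        os.utime(os.path.join(root, "b.txt"), (cutoff + 100, cutoff + 100))
        print("incremental:", files_to_back_up(root, cutoff))
```

The level-zero pass picks up everything; the incremental pass picks up only the file touched after the cutoff, which is where the storage savings come from.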

Then there are two other types to associate with backups: on-site (aka local) and off-site. Local backups are just that -- they’re near you. Most people have local backups by having an external disk attached to their computer, and either manually or automatically copying files. (Both macOS and Windows can be set up to do this automatically; as I said, I’m not as sure about the Windows specifics, but I do know it can be done.)

A variant of this is to have a network disk of some sort -- what I’ll refer to as “NAS” (for Network Attached Storage, whereas DAS -- Direct Attached Storage -- is the USB kind). In this case, you’ll have some sort of machine on your network, and the OS will access it over the network. This is likely to be slow, especially if you’re using WiFi.

For off-site backups, there are again several options / flavours. One easy way is to have multiple local backups, and simply take one of them to a friend’s house, an office, or a bank vault. Physically transporting the backup is generally not something you can do automatically, but it’s fairly *easy*. Once offsite, in this case, you can’t access or update it without retrieving it.

Another option for off-site backups, however, is to use a cloud app. For example, backblaze.com offers cloud backup for something like US$60/year. In this case, you install their application, and it periodically makes incremental backups up on their storage. There are *lots* of programs for this, and one of the really nice things is that you can usually use more than one. (On one Mac, for example, I have both backblaze and an app called Arq, which I have configured to back up to Amazon’s S3 cloud storage.)

Lastly, to conclude the discussion of backup basics, you need to think about *recovery*. Specifically, for any sort of backup method you choose, how do you get your data back if necessary? macOS’ Time Machine is integrated into the operating system, so you can recover during installation of the OS, when configuring a new machine, or at any time manually. Backblaze, described above, lets you download a .zip archive of selected files/folders, or the entire thing.

That’s enough for now.


Text

Hmm, anyone got opinions on the various cloud storage providers that Duplicity works with? Backblaze B2 looks like the best combination of low-bullshit and affordable, but I might be missing something. Estimated space requirement is maybe 2TB up front growing to probably around 20TB maximum.

I've got local backups but a cloud backup would be an easy way to handle off-site backups, and Duplicity encrypts your tarballs with GPG so that's not an issue.

Supported services are:

Amazon S3

Backblaze B2

DropBox

GIO

Google Docs

Google Drive

HSI

Hubic

IMAP

Mega.co

Microsoft Azure

Microsoft Onedrive

par2

Rackspace Cloudfiles

rsync

Skylabel

ssh/scp

SwiftStack


Text

The Ultimate Guide to Backing Up Your WordPress Site: Safeguard Your Digital Oasis

In the vast digital landscape, where your WordPress website stands as your digital oasis, safeguarding it becomes paramount. With the potential risks of cyber threats, server failures, or accidental deletions looming, having a robust backup strategy is not just advisable—it's essential.

Best WordPress Backup Plugins

When it comes to securing your WordPress site, selecting the right backup method is crucial. Among the plethora of options available, utilizing WordPress backup plugins emerges as one of the most efficient and convenient approaches. These plugins streamline the backup process, ensuring your website's data remains intact, even in adversity.

So, what exactly is the best way to back up WordPress? Let's delve deeper into the various methods and strategies that can help you fortify your digital fortress.

Automatic Backups: One of the most hassle-free ways to ensure consistent backups is by employing automatic backup solutions provided by WordPress plugins. These plugins allow you to schedule regular backups, eliminating the need for manual intervention. With features like incremental backups, they efficiently capture changes made to your site, minimizing the backup duration and resource usage.

Cloud Storage Integration: Opting for backup plugins that offer seamless integration with cloud storage services such as Dropbox, Google Drive, or Amazon S3 can further enhance the reliability and accessibility of your backups. By storing your data in the cloud, you ensure redundancy and accessibility from anywhere, anytime.

Comprehensive Site Restoration: In addition to backup functionality, prioritize plugins that offer comprehensive site restoration capabilities. A reliable backup solution should empower you to effortlessly restore your website to a previous state in the event of data loss or site malfunction. Look for features like one-click restoration and selective file restoration to streamline the recovery process.

Security and Encryption: Security should be at the forefront of your backup strategy. Choose plugins that prioritize data encryption and adhere to industry-standard security practices to safeguard your sensitive information. Features like encryption algorithms, secure connections, and password protection ensure that your backups remain immune to unauthorized access or tampering.

Regular Testing and Verification: Merely creating backups isn't sufficient; you must also verify their integrity regularly. Opt for backup plugins that offer built-in verification mechanisms to ensure the completeness and consistency of your backups. Additionally, periodically test the restoration process to ascertain its effectiveness and identify any potential issues proactively.

Customization and Flexibility: Every website has unique requirements, so opt for backup plugins that offer customization and flexibility to cater to your specific needs. Whether it's selecting specific files or databases for backup, defining retention policies, or configuring backup frequency, prioritize plugins that empower you to tailor the backup process according to your preferences.
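The verification step described above can be pictured as comparing checksums recorded at backup time against the files actually sitting in the backup. The following is a generic sketch of that idea, not how any particular WordPress plugin works; the function names and the manifest format (a dictionary of relative path to SHA-256 digest) are assumptions made for illustration.

```python
import hashlib
import os
import tempfile


def sha256_of(path):
    """Hash a file in chunks so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Record a checksum for every file under `root`, keyed by relative path."""
    manifest = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            manifest[os.path.relpath(path, root)] = sha256_of(path)
    return manifest


def verify_backup(manifest, backup_root):
    """Return a sorted list of files that are missing or whose contents differ."""
    problems = []
    for rel_path, expected in manifest.items():
        path = os.path.join(backup_root, rel_path)
        if not os.path.isfile(path) or sha256_of(path) != expected:
            problems.append(rel_path)
    return sorted(problems)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as site, tempfile.TemporaryDirectory() as backup:
        for root in (site, backup):
            with open(os.path.join(root, "index.php"), "w") as f:
                f.write("<?php // site code")
        manifest = build_manifest(site)
        print("problems:", verify_backup(manifest, backup))
```

An empty list means every file in the manifest is present and unchanged. A check like this complements a periodic test restore; it does not replace one.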

By incorporating these strategies and leveraging the capabilities of WordPress backup plugins, you can establish a robust backup regimen that provides comprehensive protection for your website. Remember, the best way to safeguard your digital oasis is through proactive preparation and reliable backup solutions.

In conclusion, safeguarding your WordPress site through effective backup strategies is imperative in today's digital landscape. By utilizing WordPress backup plugins and implementing best practices, you can fortify your website against potential threats and ensure uninterrupted operation. So, don't wait until disaster strikes—take proactive steps today to secure your digital oasis.


Text

Optimizing Infrastructure: Running Kubernetes Clusters on EC2

Introduction:

In the quest for scalable and efficient infrastructure management, Kubernetes has emerged as a leading platform for container orchestration. When paired with Amazon Elastic Compute Cloud (EC2), organizations can achieve unparalleled flexibility, scalability, and cost-effectiveness. This guide explores the process of running Kubernetes clusters on EC2 instances, optimizing infrastructure for modern application deployment.

Understanding Kubernetes on EC2:

Amazon EC2 provides resizable compute capacity in the cloud, allowing users to deploy virtual servers on demand. Kubernetes, on the other hand, automates the deployment, scaling, and management of containerized applications. Combining these technologies enables organizations to leverage the benefits of both containerization and cloud computing.

Setting Up Kubernetes on EC2:

Prerequisites: Before setting up Kubernetes on EC2, ensure you have an AWS account, the AWS Command Line Interface (CLI) installed, and the kubectl command-line tool for Kubernetes.

Provisioning EC2 Instances: Start by provisioning EC2 instances to serve as nodes in your Kubernetes cluster. Choose instance types based on your workload requirements and budget considerations.

Installing Kubernetes: Install Kubernetes on the EC2 instances using a tool like kubeadm. This tool simplifies the process of bootstrapping a Kubernetes cluster, handling tasks such as certificate generation and cluster initialization.

Configuring Networking: Configure networking so that nodes can communicate with each other and with external services. Use Amazon Virtual Private Cloud (VPC) for network isolation and security groups to control traffic flow.

Deploying Kubernetes Components: Make sure the essential Kubernetes components are running on your EC2 instances: the kube-apiserver, kube-controller-manager, and kube-scheduler on the control-plane nodes, and kube-proxy on every node. A tool like kubeadm sets these up during cluster initialization. These components are crucial for cluster management and communication.

Optimizing Kubernetes on EC2:

Instance Types: Choose EC2 instance types that match your workload requirements while optimizing cost and performance. Consider factors such as CPU, memory, storage, and network performance.

Auto Scaling: Implement auto scaling for your EC2 instances to dynamically adjust capacity based on demand. Kubernetes can integrate with Amazon EC2 Auto Scaling groups, for example through the Cluster Autoscaler, allowing nodes to scale in and out automatically.

Spot Instances: Take advantage of Amazon EC2 Spot Instances to reduce costs for non-critical workloads. Spot Instances offer spare EC2 capacity at discounted prices, ideal for tasks with flexible start and end times.

Storage Optimization: Optimize storage for your Kubernetes applications by leveraging AWS services such as Amazon Elastic Block Store (EBS) for persistent storage and Amazon Elastic File System (EFS) for shared file storage.

Monitoring and Logging: Implement robust monitoring and logging solutions to gain insights into your Kubernetes clusters' performance and health. Amazon CloudWatch can collect metrics and logs from the cluster, while AWS CloudTrail records the AWS API calls made against the underlying EC2 resources.

Best Practices for Running Kubernetes on EC2:

Security: Follow security best practices to secure your Kubernetes clusters and EC2 instances. Implement identity and access management (IAM) policies, network security controls, and encryption mechanisms to protect sensitive data.

High Availability: Design your Kubernetes clusters for high availability by distributing nodes across multiple Availability Zones (AZs) and implementing redundancy for critical components.

Backup and Disaster Recovery: Implement backup and disaster recovery strategies to safeguard your Kubernetes data and configurations. Utilize AWS services like Amazon S3 for data backup and AWS Backup for automated backup management.

Cost Optimization: Continuously monitor and optimize costs for running Kubernetes on EC2. Utilize AWS Cost Explorer and AWS Budgets to track spending and identify opportunities for optimization.
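As a small illustration of the High Availability practice above, the sketch below spreads a list of nodes across Availability Zones round-robin, so that losing any one zone removes only a share of the cluster. The node names and zone names are hypothetical, and in a real setup this placement would typically be handled by an Auto Scaling group spanning several subnets rather than by hand-written code.

```python
from collections import Counter
from itertools import cycle


def spread_nodes(node_names, zones):
    """Assign nodes to zones round-robin so zone sizes differ by at most one."""
    return dict(zip(node_names, cycle(zones)))


if __name__ == "__main__":
    # Hypothetical node and zone names, chosen only for the example.
    nodes = [f"node-{i}" for i in range(1, 6)]
    zones = ["zone-a", "zone-b", "zone-c"]
    placement = spread_nodes(nodes, zones)
    for node, zone in placement.items():
        print(node, "->", zone)
    print(Counter(placement.values()))
```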

Conclusion:

Running Kubernetes clusters on Amazon EC2 provides organizations with a robust platform for deploying, scaling, and managing containerized applications, thereby fostering efficient AWS DevOps practices. By adhering to best practices and harnessing AWS services, businesses can optimize infrastructure for cost-effectiveness, performance, and reliability, facilitating seamless integration into their DevOps workflows. Embracing Kubernetes on EC2 enables organizations to fully realize the benefits of cloud-native application deployment within their DevOps environments.


Text

AWS S3: Key Features, Advantages, And Applications

AWS S3, a leading object storage service, offers scalable, reliable, and secure storage for protecting data. This blog serves as a guide for understanding AWS S3 features, advantages, and applications.

What is AWS S3?

The Simple Storage Service (S3) platform from Amazon Web Services (AWS) provides public cloud storage resources known as Amazon S3 buckets. The primary purpose of Amazon's Simple Storage Service buckets is to assist people and businesses with their cloud-based data storage, backup, and delivery needs. Object-based storage stores data in S3 buckets as distinct units known as objects rather than files.

Key features of AWS S3

Amazon S3 offers a wide range of features for managing and organizing data across different use cases.

Versioning protects against accidental deletion by preserving every version of an object whenever an operation such as overwriting or deleting is performed on it.

Object tagging attaches key-value labels to S3 objects. Tags can be used to scope identity and access management (IAM) policies, to drive S3 lifecycle policies, and to customise storage metrics.

S3 Cross-Region Replication, once configured, automatically copies the objects in a bucket to buckets in other AWS Regions.

The Amazon S3 Management Console makes it simple to block public access to specific S3 buckets, ensuring that S3 objects and buckets are inaccessible to the general public.

These features make AWS S3 a reliable and flexible object storage solution for a wide range of use cases.
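To illustrate how versioning protects against accidental deletion, here is an in-memory toy model, not the S3 API. It assumes the behaviour of a versioning-enabled bucket: each write creates a new version, and a plain delete adds a delete marker instead of erasing data, so earlier versions stay retrievable by ID. The class name and the `v1`, `v2` style IDs are invented for the example; real version IDs are opaque strings.

```python
import itertools


class VersionedBucket:
    """In-memory toy model of a bucket with versioning enabled."""

    _DELETE_MARKER = object()

    def __init__(self):
        self._versions = {}  # key -> list of (version_id, value), oldest first
        self._ids = itertools.count(1)

    def put(self, key, value):
        """Store a new version and return its (made-up) version ID."""
        version_id = f"v{next(self._ids)}"
        self._versions.setdefault(key, []).append((version_id, value))
        return version_id

    def delete(self, key):
        """A plain delete hides the object behind a marker; nothing is erased."""
        return self.put(key, self._DELETE_MARKER)

    def get(self, key, version_id=None):
        """Return the latest visible value, or a specific older version."""
        history = self._versions.get(key, [])
        if version_id is None:
            if not history or history[-1][1] is self._DELETE_MARKER:
                return None
            return history[-1][1]
        for vid, value in history:
            if vid == version_id and value is not self._DELETE_MARKER:
                return value
        return None


if __name__ == "__main__":
    bucket = VersionedBucket()
    first = bucket.put("report.txt", "draft")
    bucket.put("report.txt", "final")
    bucket.delete("report.txt")
    print(bucket.get("report.txt"))         # hidden by the delete marker
    print(bucket.get("report.txt", first))  # the original draft is still there
```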

How is an S3 bucket used?

Step 1: First, an S3 user establishes a bucket in the desired AWS region and provides it with a bucket name that is globally unique. To save money on storage and latency, AWS advises customers to select regions that are close to their location.

Step 2: After creating the bucket, the user chooses a storage class for the data. S3 storage classes differ in price, accessibility, and redundancy, and objects in different storage classes can be stored in a single bucket.

Step 3: Next, the user uses bucket policies, access control lists (ACLs), or the AWS identity and access management service to specify access privileges for the objects stored in a bucket.

Step 4: An AWS user utilizes the AWS Management Console, AWS Command Line Interface, or application programming interfaces (APIs) to access the Amazon S3 bucket. Using S3 access points with the bucket hostname and Amazon resource names, users can access objects within a bucket.
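As an example of Step 3, a bucket policy is a JSON document that states who may do what to which resources. The sketch below builds a read-only policy as a plain Python dictionary. The bucket name, the account ID, and the IAM user in the ARN are all hypothetical placeholders, and the helper `read_only_bucket_policy` is invented for this illustration; attaching the policy to a bucket would be a separate step through the console, the CLI, or an SDK.

```python
import json


def read_only_bucket_policy(bucket_name, principal_arn):
    """Build a policy document letting one principal read objects in a bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadOnlyAccess",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": ["s3:GetObject"],
                # The /* suffix targets the objects inside the bucket.
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


if __name__ == "__main__":
    policy = read_only_bucket_policy(
        "example-backup-bucket",  # hypothetical bucket name
        "arn:aws:iam::123456789012:user/backup-reader",  # hypothetical IAM user
    )
    print(json.dumps(policy, indent=2))
```

Granting only `s3:GetObject` keeps the principal from writing or deleting objects, which is usually what you want for anything that only needs to read backups.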

The advantages of AWS S3

There are several advantages to using AWS S3 for data storage, including:

Increased Accessibility: AWS provides Availability Zones and Regions distributed across several countries worldwide to offer high availability.

Unlimited storage: Amazon S3 offers virtually unlimited storage capacity, so customers can store data without managing hard drives or worrying about individual drive failures.

Durability: Amazon S3 is extremely durable and is designed to keep the data in S3 buckets safe even when two data centers fail at the same time.

Usability: Amazon S3 cloud storage promises rapid, safe access with a variety of tutorials, videos, and other tools to assist customers who are new to cloud computing.

These are some of the many advantages of using AWS S3 for data storage. Its robust and reliable services meet the needs of a wide range of businesses and organizations.
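To put the durability claim in numbers: AWS states that S3 is designed for 99.999999999% (eleven nines) durability of objects over a given year. The short calculation below, a back-of-the-envelope sketch that treats this figure as a per-object annual loss probability, shows what it implies for a bucket holding ten million objects. The function name is made up for the example.

```python
from fractions import Fraction

# 1 - 0.99999999999, written exactly to avoid floating-point rounding.
ANNUAL_LOSS_PROBABILITY = Fraction(1, 10**11)


def expected_years_per_lost_object(object_count):
    """Average number of years between single-object losses at the design target."""
    expected_losses_per_year = object_count * ANNUAL_LOSS_PROBABILITY
    return 1 / expected_losses_per_year


if __name__ == "__main__":
    print(expected_years_per_lost_object(10_000_000))
```

With ten million objects the expected loss works out to one object every 10,000 years on average. This is a design target rather than a guarantee, and it says nothing about accidental deletion, which is what versioning and backups are for.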

Conclusion

By understanding AWS S3 core features and advantages, it is simple to leverage S3 effectively to manage and protect valuable data in the cloud. Its scalability, security, and cost-effectiveness make it a good choice for a wide range of use cases. Brigita AWS Services offers a robust and versatile object storage solution for businesses of all sizes for better and smoother functioning.


Text

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we clarify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including Amazon S3 for object storage and Amazon EBS for block storage. These services cater to a diverse spectrum of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, ushers in global content distribution with minimal latency and blazing data transfer speeds. This ensures an impeccable user experience, irrespective of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in empowering individuals to master the AWS course. Through comprehensive training and education, learners are not merely equipped with knowledge; they are forged into skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.

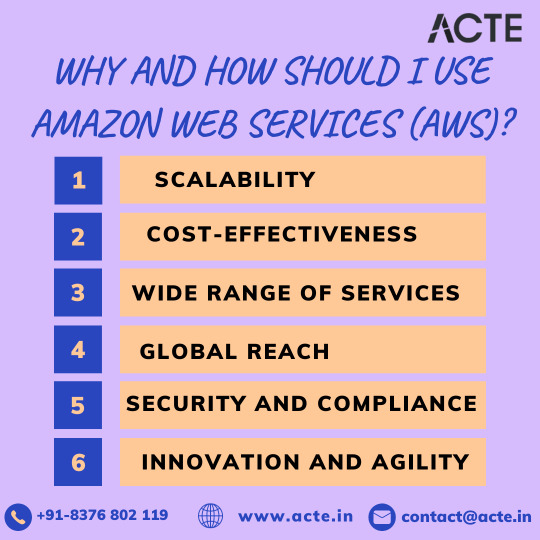

Navigating the Cloud: Unleashing the Potential of Amazon Web Services (AWS)

In the dynamic realm of technological progress, Amazon Web Services (AWS) stands as a beacon of innovation, offering unparalleled advantages for enterprises, startups, and individual developers. This article will delve into the compelling reasons behind the adoption of AWS and provide a strategic roadmap for harnessing its transformative capabilities.

Unveiling the Strengths of AWS:

1. Dynamic Scalability: AWS distinguishes itself with its dynamic scalability, empowering users to effortlessly adjust infrastructure based on demand. This adaptability ensures optimal performance without the burden of significant initial investments, making it an ideal solution for businesses with fluctuating workloads.

2. Cost-Efficient Flexibility: Operating on a pay-as-you-go model, AWS delivers cost-efficiency by eliminating the need for large upfront capital expenditures. This financial flexibility is a game-changer for startups and businesses navigating the challenges of variable workloads.

3. Comprehensive Service Portfolio: AWS offers a comprehensive suite of cloud services, spanning computing power, storage, databases, machine learning, and analytics. This expansive portfolio provides users with a versatile and integrated platform to address a myriad of application requirements.

4. Global Accessibility: With a distributed network of data centers, AWS ensures low-latency access on a global scale. This not only enhances user experience but also fortifies application reliability, positioning AWS as the preferred choice for businesses with an international footprint.

5. Security and Compliance Commitment: Security is at the forefront of AWS's priorities, offering robust features for identity and access management, encryption, and compliance with industry standards. This commitment instills confidence in users regarding the safeguarding of their critical data and applications.

6. Catalyst for Innovation and Agility: AWS empowers developers by providing services that allow a concentrated focus on application development rather than infrastructure management. This agility becomes a catalyst for innovation, enabling businesses to respond swiftly to evolving market dynamics.

7. Reliability and High Availability Assurance: The redundancy of data centers, automated backups, and failover capabilities contribute to the high reliability and availability of AWS services. This ensures uninterrupted access to applications even in the face of unforeseen challenges.

8. Ecosystem Synergy and Community Support: An extensive ecosystem with a diverse marketplace and an active community enhances the AWS experience. Third-party integrations, tools, and collaborative forums create a rich environment for users to explore and leverage.

Charting the Course with AWS:

1. Establish an AWS Account: Embark on the AWS journey by creating an account on the AWS website. This foundational step serves as the gateway to accessing and managing the expansive suite of AWS services.

2. Strategic Region Selection: Choose AWS region(s) strategically, factoring in considerations like latency, compliance requirements, and the geographical location of the target audience. This decision profoundly impacts the performance and accessibility of deployed resources.

3. Tailored Service Selection: Customize AWS services to align precisely with the unique requirements of your applications. Common choices include Amazon EC2 for computing, Amazon S3 for storage, and Amazon RDS for databases.

4. Fortify Security Measures: Implement robust security measures by configuring identity and access management (IAM), establishing firewalls, encrypting data, and leveraging additional security features. This comprehensive approach ensures the protection of critical resources.

5. Seamless Application Deployment: Leverage AWS services to deploy applications seamlessly. Tasks include setting up virtual servers (EC2 instances), configuring databases, implementing load balancers, and establishing connections with various AWS services.

6. Continuous Optimization and Monitoring: Maintain a continuous optimization strategy for cost and performance. AWS monitoring tools, such as CloudWatch, provide insights into the health and performance of resources, facilitating efficient resource management.

7. Dynamic Scaling in Action: Harness the power of AWS scalability by adjusting resources based on demand. This can be achieved manually or through the automated capabilities of AWS Auto Scaling, ensuring applications can handle varying workloads effortlessly.

8. Exploration of Advanced Services: As organizational needs evolve, delve into advanced AWS services tailored to specific functionalities. AWS Lambda for serverless computing, Amazon SageMaker for machine learning, and Amazon Redshift for data analytics offer specialized solutions to enhance application capabilities.

Closing Thoughts: Empowering Success in the Cloud

In conclusion, Amazon Web Services transcends the definition of a mere cloud computing platform; it represents a transformative force. Whether you are navigating the startup landscape, steering an enterprise, or charting an individual developer's course, AWS provides a flexible and potent solution.

Success with AWS lies in a profound understanding of its advantages, strategic deployment of services, and a commitment to continuous optimization. The journey into the cloud with AWS is not just a technological transition; it is a roadmap to innovation, agility, and limitless possibilities. By unlocking the full potential of AWS, businesses and developers can confidently navigate the intricacies of the digital landscape and achieve unprecedented success.

Amazon Timestream helps AWS InfluxDB databases

InfluxDB on AWS

AWS InfluxDB

Amazon Timestream now supports InfluxDB as a database engine. With this support, you can easily run near real-time time-series applications using InfluxDB and open source APIs, including the open source Telegraf agents that collect time-series observations.

InfluxDB vs AWS Timestream

Timestream now offers you a choice between two database engines: Timestream for InfluxDB and Timestream for LiveAnalytics.

If your use cases require near real-time time-series queries or specific InfluxDB features, such as Flux queries, you should use the Timestream for InfluxDB engine. If you need to ingest more than tens of gigabytes of time-series data per minute and run SQL queries on petabytes of time-series data in seconds, the existing Timestream for LiveAnalytics engine is a good alternative.

With Timestream’s support for InfluxDB, you can use a managed instance that is automatically configured for performance and availability. You can also increase resilience by setting up multi-Availability Zone support for your InfluxDB databases.

Timestream for InfluxDB and Timestream for LiveAnalytics complement each other for low-latency, large-scale ingestion of time-series data.

How to create database in InfluxDB

Start by creating an InfluxDB instance. Open the Timestream console, go to InfluxDB databases under Timestream for InfluxDB, and choose Create Influx database.

You can provide the database credentials for the InfluxDB instance on the next page.

You can also define the volume and kind of storage to meet your requirements, as well as your instance class, in the instance configuration.

In the next section, you can choose either a single InfluxDB instance or a multi-Availability Zone (multi-AZ) deployment, which synchronously replicates data to a standby database in a different Availability Zone. In a multi-AZ deployment, Timestream for InfluxDB automatically fails over to the standby instance if a failure occurs, without data loss.

Next, you can configure connectivity to specify how to connect to your InfluxDB instance. Here you can configure the network type, virtual private cloud (VPC), subnets, and database port. You can also make your InfluxDB instance publicly accessible by specifying public subnets and setting public access to Publicly accessible, in which case Amazon Timestream assigns a public IP address to your InfluxDB instance. If you choose this option, make sure you have appropriate security measures in place to protect your InfluxDB instances.

In this walkthrough, the InfluxDB instance is configured not to be publicly accessible, which restricts access to the VPC and subnets specified earlier in this section.

Once you’ve set up your database connection, you can specify the database parameter group and the log delivery settings. In the parameter group, you define the configurable parameters you want to use for your InfluxDB database. In the log delivery settings, you specify the Amazon Simple Storage Service (Amazon S3) bucket to which the system logs are exported. See the AWS documentation to find out more about the AWS Identity and Access Management (IAM) policy that the Amazon S3 bucket requires.

Once you are satisfied with the configuration, choose Create Influx database.

After your InfluxDB instance is created, you can see further information on the detail page.
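The console steps above can also be sketched programmatically. The following is a minimal sketch, not a definitive implementation: it assumes the boto3 `timestream-influxdb` client and its `create_db_instance` operation, and the parameter names and enum values shown are written from memory of that API and should be verified against the current API reference. The instance class, storage type, and storage size defaults are placeholder choices, and every name passed in is hypothetical.

```python
def build_create_request(name, username, password, organization, bucket,
                         subnet_ids, security_group_ids,
                         multi_az=False, publicly_accessible=False,
                         log_bucket=None):
    """Assemble the settings chosen in the console walkthrough as a dict.

    Assumption: keys follow the boto3 timestream-influxdb
    create_db_instance parameters; verify before use.
    """
    request = {
        "name": name,
        "username": username,
        "password": password,
        "organization": organization,
        "bucket": bucket,
        # Instance class and storage: placeholder values for illustration.
        "dbInstanceType": "db.influx.medium",
        "dbStorageType": "InfluxIOIncludedT1",
        "allocatedStorage": 50,
        # Connectivity: VPC subnets and security groups.
        "vpcSubnetIds": list(subnet_ids),
        "vpcSecurityGroupIds": list(security_group_ids),
        "publiclyAccessible": publicly_accessible,
        # Single instance or multi-AZ deployment with a standby.
        "deploymentType": "WITH_MULTIAZ_STANDBY" if multi_az else "SINGLE_AZ",
    }
    if log_bucket:
        # Log delivery to an S3 bucket (the bucket needs a suitable policy).
        request["logDeliveryConfiguration"] = {
            "s3Configuration": {"bucketName": log_bucket, "enabled": True}
        }
    return request


def create_instance(request, region):
    """Send the request. Requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the builder works without boto3

    client = boto3.client("timestream-influxdb", region_name=region)
    return client.create_db_instance(**request)
```

Keeping the request builder separate from the API call makes it possible to review or log the configuration before anything is created.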

Once the InfluxDB instance has been created, you can access the InfluxDB user interface (UI). If your instance is publicly accessible, you can open the UI by choosing InfluxDB UI in the console. Because the instance in this walkthrough is private, accessing the UI requires SSH tunneling through an Amazon EC2 instance in the same VPC as the InfluxDB instance.

Using the URL endpoint from the detail page, you can then log in to the InfluxDB UI with the username and password you set during creation.

You can also create tokens with the Influx command line interface (CLI). Before generating a token, create a configuration so that the CLI can communicate with your InfluxDB instance.

Once the InfluxDB configuration has been created, you can create an operator, all-access, or read/write token. For example, you can generate an all-access token to authorize access to every resource in the specified organization.
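As a rough sketch of the two CLI steps just described, the helper below assembles the `influx config create` and `influx auth create` commands and can optionally run them. It assumes the InfluxDB 2.x `influx` CLI is installed; the configuration name, organization, username, and endpoint are placeholders to replace with the values from your own instance.

```python
import subprocess


def config_create_args(config_name, host_url, org, username):
    """Arguments for creating and activating an influx CLI configuration."""
    return [
        "influx", "config", "create",
        "--config-name", config_name,
        "--host-url", host_url,
        "--org", org,
        "--username-password", username,  # the CLI prompts for the password
        "--active",
    ]


def all_access_token_args(org):
    """Arguments for creating an all-access token in the given organization."""
    return ["influx", "auth", "create", "--org", org, "--all-access"]


def run(args):
    """Run one influx CLI command; raises if the command fails."""
    subprocess.run(args, check=True)


if __name__ == "__main__":
    # Placeholder values: substitute your own endpoint and names.
    print(" ".join(config_create_args(
        "demo", "https://YOUR-INSTANCE-ENDPOINT:8086", "demo-org", "admin")))
    print(" ".join(all_access_token_args("demo-org")))
```

The script only prints the commands by default; call `run(...)` on each argument list to execute them against a reachable instance.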

You may begin feeding data into your InfluxDB instance using a variety of tools, including the Telegraf agent, InfluxDB client libraries, and the Influx CLI, after you have the necessary token for your use case.

Finally, you can query the data in the InfluxDB UI. Open the InfluxDB UI, go to the Data Explorer page, write a basic Flux script, and choose Submit.
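A basic Flux script of the kind you might paste into the Data Explorer can be sketched as follows. The bucket and measurement names are hypothetical, and the helper simply builds the script text; it does not contact the database.

```python
import json


def basic_flux_query(bucket: str, measurement: str, start: str = "-1h") -> str:
    """Build a Flux script that reads recent points for one measurement.

    json.dumps is used to produce double-quoted string literals.
    """
    return (
        f"from(bucket: {json.dumps(bucket)})\n"
        f"  |> range(start: {start})\n"
        f"  |> filter(fn: (r) => r._measurement == {json.dumps(measurement)})"
    )


if __name__ == "__main__":
    # Hypothetical bucket and measurement names.
    print(basic_flux_query("demo-bucket", "cpu"))
```

The script selects a bucket, limits the time range to the last hour, and filters on the measurement name; further `filter` or aggregation steps can be chained with the `|>` operator.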

With Timestream for InfluxDB, you can keep using your existing tools to interact with the database and build applications on InfluxDB. The multi-AZ configuration increases the availability of your InfluxDB data without requiring you to manage the underlying infrastructure.

AWS and InfluxDB collaboration

In celebration of this launch, InfluxData’s founder and chief technology officer, Paul Dix, shared the following remarks on this collaboration:

The public cloud will fuel open source in the future, reaching the largest community via simple entry points and useful user interfaces. Amazon Timestream for InfluxDB delivers on that aim. Our collaboration with AWS makes it simpler than ever for developers to create and grow their time-series workloads on AWS by using the open source InfluxDB database to provide real-time insights on time-series data.

Important information

Here are some more details that you should be aware of:

Availability:

Timestream for InfluxDB is now generally available in the following AWS Regions: Europe (Frankfurt, Ireland, Stockholm), Asia Pacific (Mumbai, Singapore, Sydney, Tokyo), US East (Ohio, N. Virginia), and US West (Oregon).

Migration scenario:

Backup InfluxDB database

To move from a self-managed InfluxDB instance, you can restore a backup of the existing InfluxDB database into Timestream for InfluxDB. You can also use Amazon S3 to migrate from the existing Timestream for LiveAnalytics engine to Timestream for InfluxDB. Visit the page Migrating data from self-managed InfluxDB to Timestream for InfluxDB to learn more about how to migrate data for different use cases.

Version supported by Timestream for InfluxDB: At the moment, the open source 2.7.5 version of InfluxDB is supported.

InfluxDB AWS Pricing

Visit Amazon Timestream pricing to find out more about prices.

Read more on govindhtech.com

#amazon#aws#influxdb#liveanalytics#sqlqueries#amazons3#amazonec2#vpc#technology#technews#govindhtech