#amazon rds aurora

Text

Amazon Relational Database Service (RDS) Explained for Cloud Developers

Full Video Link - https://youtube.com/shorts/zBv6Tcw6zrU

Hi, a new #video #tutorial on #amazonrds #aws #rds #relationaldatabaseservice is published on #codeonedigest #youtube channel.

@java @awscloud @AWSCloudIndia @YouTube #youtube @codeonedig

Amazon Relational Database Service (Amazon RDS) is a collection of managed services that makes it simple to set up, operate, and scale relational databases in the cloud. You can choose from seven popular engines: Amazon Aurora with MySQL compatibility, Amazon Aurora with PostgreSQL compatibility, MySQL, MariaDB, PostgreSQL, Oracle, and SQL Server.

It provides cost-efficient, resizable capacity for an industry-standard…


#amazon rds access from outside#amazon rds aurora#amazon rds automated backup#amazon rds backup#amazon rds backup and restore#amazon rds guide#amazon rds snapshot export to s3#amazon rds vs aurora#amazon web services#aws#aws cloud#aws rds aurora tutorial#aws rds engine#aws rds explained#aws rds performance insights#aws rds tutorial#aws rds vs aurora#cloud computing#relational database#relational database management system#relational database service


Text

Amazon RDS Extended Support for MySQL 5.7 & PostgreSQL 11

Your MySQL 5.7 and PostgreSQL 11 database instances on Amazon Aurora and Amazon RDS will automatically enroll in Amazon RDS Extended Support commencing February 29, 2024.

Automatic enrollment prevents the unanticipated downtime and compatibility problems that automated major version upgrades can cause, and gives you more control over when you upgrade your database's major version.

Because enrollment is automatic, you may incur additional charges for RDS Extended Support. To avoid them, upgrade your database before RDS Extended Support begins.

What is Amazon RDS Extended Support?

Amazon RDS Extended Support, announced in September 2023, lets you run your database on a major engine version on Amazon Aurora or Amazon RDS after its end of standard support date for a fee.

The MySQL and PostgreSQL open source communities identify CVEs, generate patches, and resolve bugs until a major version reaches community end of life (EoL). Until then, the communities release a minor version each quarter with security and bug fixes. After the community EoL date, CVE patches and bug fixes are no longer available, and the community considers such engines unsupported. The communities ended support for MySQL 5.7 in October 2023 and for PostgreSQL 11 in November 2023. AWS is grateful to the communities for supporting these major versions and for providing a transparent process and timeline for moving to the latest major version.

With RDS Extended Support, Amazon Aurora and Amazon RDS take on the engineering of critical CVE patches and bug fixes for up to three years after a major version’s community EoL. During that period, Amazon Aurora and RDS identify CVEs and bugs in the engine, create patches, and release them as quickly as possible. Under RDS Extended Support, AWS continues to support an engine’s major version after the open source community ends support, protecting your applications from critical security vulnerabilities and unresolved bugs.

You may wonder why AWS charges for RDS Extended Support rather than including it in the RDS service. To keep engines secure and functional past community EoL, AWS must invest developer resources in critical CVE patches and bug fixes. For that reason, RDS Extended Support is charged only to customers who need the flexibility to stay on a version past its community EoL.

If your applications depend on a specific MySQL or PostgreSQL major version for plug-in compatibility or bespoke functionality, RDS Extended Support may help you satisfy your business objectives. If you’re running on-premises database servers or self-managed Amazon Elastic Compute Cloud (Amazon EC2) instances, you can migrate to Amazon Aurora MySQL-Compatible Edition, Amazon Aurora PostgreSQL-Compatible Edition, Amazon RDS for MySQL, and Amazon RDS for PostgreSQL beyond the community EoL date and use them with RDS Extended Support in a managed service. RDS Extended Support lets you phase a large database transfer to ensure a smooth transition without straining IT resources.

RDS Extended Support will be provided in 2024 for RDS for MySQL 5.7, RDS for PostgreSQL 11, Aurora MySQL-compatible version 2, and Aurora PostgreSQL-compatible version 11. For the list of all future supported versions, see the pages on supported MySQL major versions on Amazon RDS and on Amazon Aurora major versions in the AWS documentation.

Why are all databases automatically enrolled in Amazon RDS Extended Support?

AWS had originally announced that RDS Extended Support would provide opt-in APIs and console features in December 2023, and that any database not opted in to RDS Extended Support would be automatically upgraded to a newer engine version starting on March 1, 2024. For example, you would be upgraded from Aurora MySQL 2 or RDS for MySQL 5.7 to Aurora MySQL 3 or RDS for MySQL 8.0, and from Aurora PostgreSQL 11 or RDS for PostgreSQL 11 to Aurora PostgreSQL 15 or RDS for PostgreSQL 15.

However, several customers told AWS that these automatic upgrades could break their applications and cause other unpredictable behavior, because behavior changes between major versions of the community DB engines. If applications are not ready for MySQL 8.0 or PostgreSQL 15, an unplanned major version upgrade could cause compatibility issues or downtime.

Automatic enrollment in RDS Extended Support allows you more time and control to organize, schedule, and test database upgrades on your own timeline while receiving essential security and bug fixes from AWS.

Upgrade before RDS standard support ends to prevent automatic enrollment in RDS Extended Support and associated fees.
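To see which databases would be enrolled, you could scan your instances for the affected major versions. The following is a minimal sketch, not an official AWS tool: the helper names are hypothetical, the engine and version pairs are taken from the text above, and the optional `fetch_instances` function assumes boto3 is installed and AWS credentials are configured.

```python
# Engine names as reported by the RDS API, mapped to the major versions
# that the article says fall under RDS Extended Support.
EXTENDED_SUPPORT_PREFIXES = {
    "mysql": ("5.7",),
    "postgres": ("11",),
    "aurora-mysql": ("5.7",),        # Aurora MySQL 2 is MySQL 5.7-compatible
    "aurora-postgresql": ("11",),
}


def needs_upgrade(engine: str, engine_version: str) -> bool:
    """Return True if the engine/version pair is on an affected major version."""
    prefixes = EXTENDED_SUPPORT_PREFIXES.get(engine, ())
    return any(
        engine_version == prefix or engine_version.startswith(prefix + ".")
        for prefix in prefixes
    )


def instances_to_upgrade(db_instances):
    """Filter DescribeDBInstances-style dicts down to affected identifiers."""
    return [
        instance["DBInstanceIdentifier"]
        for instance in db_instances
        if needs_upgrade(instance["Engine"], instance["EngineVersion"])
    ]


def fetch_instances():
    """Optional: read all DB instances in the current Region via boto3."""
    import boto3  # imported lazily so the helpers above work without AWS access

    rds = boto3.client("rds")
    instances = []
    for page in rds.get_paginator("describe_db_instances").paginate():
        instances.extend(page["DBInstances"])
    return instances
```

Calling `instances_to_upgrade(fetch_instances())` would list the identifiers to plan upgrades for; the pure helpers can also be run against an exported inventory.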

Upgrade your database to avoid RDS Extended Support fees

RDS Extended Support helps you schedule your upgrade, but staying on older versions means missing out on the best price-performance for your database workload and paying more for support.

Global Database, Amazon RDS Proxy, Performance Insights, Parallel Query, and Serverless v2 deployments are supported in Aurora MySQL 8.0, also known as Aurora MySQL 3. RDS for MySQL 8.0 supports AWS Graviton2 and Graviton3-based instances, Multi-AZ cluster deployments, and Optimized Reads and Writes for up to three times better performance than MySQL 5.7.

Aurora PostgreSQL 15 supports Aurora I/O-Optimized, Aurora Serverless v2, Babelfish for Aurora PostgreSQL, the pgvector extension, Trusted Language Extensions for PostgreSQL (TLE), AWS Graviton3-based instances, and the community's enhancements. RDS for PostgreSQL 15 adds Multi-AZ DB cluster deployments, RDS Optimized Reads, the HypoPG extension, the pgvector extension, TLE, and AWS Graviton3-based instances.

Major version upgrades can introduce changes that are incompatible with existing applications. RDS does not apply them on its own: you must modify your database manually to move to the new major version. Test any major version upgrade on non-production instances before applying it to production to verify application compatibility. The AWS documentation describes the in-place upgrade from MySQL 5.7 to 8.0, including the incompatibilities between the two versions, the Aurora MySQL in-place major version upgrade, and RDS for MySQL upgrades. For an in-place upgrade from PostgreSQL 11 to 15, use the pg_upgrade method.

AWS recommends fully managed Blue/Green Deployments in Amazon Aurora and Amazon RDS to reduce upgrade downtime. With a few clicks, Amazon RDS Blue/Green Deployments create a synchronized, fully managed staging environment that mirrors production. The green environment runs in parallel and holds higher-version copies of your lower-version production databases. After validation, you can switch traffic over to the green environment and then decommission the blue environment. Blue/Green Deployments are the best way to reduce downtime in most circumstances, apart from a limited number of cases in Amazon Aurora and Amazon RDS.

Now available

Amazon RDS Extended Support is now available to customers running MySQL 5.7, PostgreSQL 11, and higher major versions past the end of standard support in 2024, in AWS Regions including AWS GovCloud (US). You do not need to opt in to RDS Extended Support, and you keep the flexibility to upgrade your databases while receiving continued support for up to 3 years.
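As a rough illustration of the Blue/Green flow described above, the sketch below builds the request for creating a deployment and shows where the switchover would happen. The function names, the deployment name, and the ARN are hypothetical; the two boto3 calls are only reached if you invoke `create_deployment` or `switch_over` yourself with valid credentials.

```python
def blue_green_request(name: str, source_arn: str, target_engine_version: str) -> dict:
    """Build the parameters for a Blue/Green deployment that upgrades the engine."""
    return {
        "BlueGreenDeploymentName": name,
        "Source": source_arn,                     # ARN of the blue (production) database
        "TargetEngineVersion": target_engine_version,
    }


def create_deployment(params: dict) -> str:
    """Create the green environment and return the deployment identifier."""
    import boto3  # lazy import: the request builder above needs no AWS access

    rds = boto3.client("rds")
    response = rds.create_blue_green_deployment(**params)
    return response["BlueGreenDeployment"]["BlueGreenDeploymentIdentifier"]


def switch_over(deployment_id: str, timeout_seconds: int = 300) -> None:
    """Promote green to production; call only after validating the green side."""
    import boto3

    rds = boto3.client("rds")
    rds.switchover_blue_green_deployment(
        BlueGreenDeploymentIdentifier=deployment_id,
        SwitchoverTimeout=timeout_seconds,
    )


# Hypothetical example values for illustration only.
request = blue_green_request(
    "mysql57-to-80",
    "arn:aws:rds:us-east-1:123456789012:db:example-db",
    "8.0.35",
)
```

Keeping creation and switchover as separate steps mirrors the text: validation of the green environment sits between them.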

Read more on Govindhtech.com


Text

Using the ETL Tool in AWS Database Migration

AWS (Amazon Web Services) is a cloud platform that provides infrastructure, platform, and packaged software as a service. One of its most important offerings is AWS Database Migration Service (AWS DMS), which migrates data between relational databases, data warehouses, and NoSQL databases, and for which ETL in AWS is the most optimized tool.

To understand why ETL in AWS is preferred for migrating databases, one should know how the ETL tool works. ETL is short for Extract, Transform, Load. Through these three steps data is combined from several sources into a centralized data warehouse. In the ETL in AWS process, data is extracted from a source, transformed into a format that matches the structure of the target database, and then loaded into a data warehouse or a storage repository.
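The three steps can be shown with a small, self-contained sketch. It is not AWS-specific: an in-memory CSV string stands in for the source, and an in-memory SQLite database stands in for the target warehouse. The table and column names are made up for illustration.

```python
import csv
import io
import sqlite3

# Stand-in source data; note the inconsistent spacing and casing.
RAW_CSV = "id,name,signup_date\n1, Alice ,2024-01-05\n2,bob,2024-02-10\n"


def extract(text: str) -> list:
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list) -> list:
    """Transform: cast types and normalize names to match the target schema."""
    return [
        (int(row["id"]), row["name"].strip().title(), row["signup_date"])
        for row in rows
    ]


def load(conn: sqlite3.Connection, records: list) -> None:
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(text: str) -> sqlite3.Connection:
    """Run all three steps against a fresh in-memory target database."""
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(text)))
    return conn
```

In a real pipeline each step would be swapped for a managed component, but the shape of the flow stays the same.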

The Benefits of ETL in AWS

There are several benefits of ETL in AWS for database migration.

· Organizations need not install and configure additional drivers and applications or change the structure of the source database when migration is done with ETL in AWS. The process is initiated directly through the AWS Management Console whereby any change or update is replicated to the target database through its Change Data Capture (CDC) feature.

· Changes that occur in the source database are updated at regular intervals to the target database by ETL in AWS. However, for this to happen, the source and the target databases should always be kept in sync. Most importantly, the migration process can take place even when the source database is fully functional. Hence, there is no system shutdown, a big help for large data-driven enterprises where any downtime upsets operating schedules.

· Most common and popular databases in use today are supported by AWS. Hence, ETL in AWS can handle any migration activity regardless of the structure of the databases. These include both homogeneous and heterogeneous migration. In the first, the database engines, data structure, data type and code, and the schema structures of the source and the target databases are similar while in the second case, each is different from the other.

· ETL in AWS is also widely used for migrating on-premises databases to Amazon RDS, Amazon EC2, or Aurora, and for moving databases running on EC2 to RDS or vice versa. Beyond these cases, AWS DMS can also migrate data between SQL, text-based, and NoSQL sources.
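The Change Data Capture behavior mentioned in the list above can be pictured as replaying a stream of change events against the target while the source stays online. The sketch below is a conceptual model only, not how AWS DMS is implemented; the event format (`op`, `key`, `data`) is an assumption made for illustration.

```python
def apply_changes(target: dict, events: list) -> dict:
    """Replay insert/update/delete events, in order, against a target table.

    `target` maps a primary key to a row dictionary.
    """
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge changed columns into the existing row (or create the row).
            target[key] = {**target.get(key, {}), **event["data"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical changes captured from the source after the initial load.
target_table = {1: {"name": "alice", "plan": "free"}}
captured = [
    {"op": "update", "key": 1, "data": {"plan": "pro"}},
    {"op": "insert", "key": 2, "data": {"name": "bob", "plan": "free"}},
    {"op": "delete", "key": 2},
]
apply_changes(target_table, captured)
```

Because events are applied in commit order, the target converges on the source's state without the source ever being taken offline.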

High-Performing AWS Glue

There are various tools for ETL in AWS, but the most high-performing one is AWS Glue. It has a fully managed ETL platform that eases data processing for analysis. The tool is very user-friendly and can be set up and running with only a few clicks on the AWS Management Console. The advantage here is that AWS Glue discovers data automatically and stores the connected metadata in the AWS Glue Data Catalog. Later, the data can be searched and queried instantly.


Text

AWS RDS Vs Aurora: Everything You Need to Know

Delve into the nuances of Amazon RDS and Aurora in this concise comparison guide. Uncover their unique strengths, weaknesses, and suitability for diverse use cases. Whether it's performance benchmarks, cost considerations, or feature differentiators, gain the insights you need to navigate between these two prominent AWS database solutions effectively.

Also read AWS RDS Vs Aurora


Text

Data Engineering Course in Hyderabad | AWS Data Engineer Training

Overview of AWS Data Modeling

Data modeling in AWS involves designing the structure of your data to effectively store, manage, and analyse it within the Amazon Web Services (AWS) ecosystem. AWS provides various services and tools that can be used for data modeling, depending on your specific requirements and use cases. Here's an overview of key components and considerations in AWS data modeling:

Understanding Data Requirements: Begin by understanding your data requirements, including the types of data you need to store, the volume of data, the frequency of data updates, and the anticipated usage patterns.

Selecting the Right Data Storage Service: AWS offers a range of data storage services suitable for different data modeling needs, including:

Amazon S3 (Simple Storage Service): A scalable object storage service ideal for storing large volumes of unstructured data such as documents, images, and logs.

Amazon RDS (Relational Database Service): Managed relational databases supporting popular database engines like MySQL, PostgreSQL, Oracle, and SQL Server.

Amazon Redshift: A fully managed data warehousing service optimized for online analytical processing (OLAP) workloads.

Amazon DynamoDB: A fully managed NoSQL database service providing fast and predictable performance with seamless scalability.

Amazon Aurora: A high-performance relational database compatible with MySQL and PostgreSQL, offering features like high availability and automatic scaling.

Schema Design: Depending on the selected data storage service, design the schema to organize and represent your data efficiently. This involves defining tables, indexes, keys, and relationships for relational databases or determining the structure of documents for NoSQL databases.

Data Ingestion and ETL: Plan how data will be ingested into your AWS environment and perform any necessary Extract, Transform, Load (ETL) operations to prepare the data for analysis. AWS provides services like AWS Glue for ETL tasks and AWS Data Pipeline for orchestrating data workflows.

Data Access Control and Security: Implement appropriate access controls and security measures to protect your data. Utilize AWS Identity and Access Management (IAM) for fine-grained access control and encryption mechanisms provided by AWS Key Management Service (KMS) to secure sensitive data.

Data Processing and Analysis: Leverage AWS services for data processing and analysis tasks, such as:

Amazon EMR (Elastic MapReduce): Managed Hadoop framework for processing large-scale data sets using distributed computing.

Amazon Athena: Serverless query service for analysing data stored in Amazon S3 using standard SQL.

Amazon Redshift Spectrum: Extend Amazon Redshift queries to analyse data stored in Amazon S3 data lakes without loading it into Redshift.

Monitoring and Optimization: Continuously monitor the performance of your data modeling infrastructure and optimize as needed. Utilize Amazon CloudWatch for monitoring and AWS Trusted Advisor for recommendations on cost optimization, performance, and security best practices.

Scalability and Flexibility: Design your data modeling architecture to be scalable and flexible to accommodate future growth and changing requirements. Utilize AWS services like Auto Scaling to automatically adjust resources based on demand.

Compliance and Governance: Ensure compliance with regulatory requirements and industry standards by implementing appropriate governance policies and using AWS services like AWS Config and AWS Organizations for policy enforcement and auditing.

By following these principles and leveraging AWS services effectively, you can create robust data models that enable efficient storage, processing, and analysis of your data in the cloud.

Visualpath is the Leading and Best Institute for AWS Data Engineering Online Training, in Hyderabad. We at AWS Data Engineering Training provide you with the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit: https://www.visualpath.in/aws-data-engineering-with-data-analytics-training.html

#AWS Data Engineering Online Training#AWS Data Engineering Training#Data Engineering Training in Hyderabad#AWS Data Engineering Training in Hyderabad#Data Engineering Course in Ameerpet#AWS Data Engineering Training Ameerpet#Data Engineering Course in Hyderabad#AWS Data Engineering Training Institute


Text

How does AWS manage database backups and disaster recovery?

In the fast-paced digital landscape, businesses in Pune are increasingly turning to Amazon Web Services (AWS) to power their operations. As the demand for skilled professionals grows, the importance of AWS Training in Pune becomes evident. One crucial aspect that AWS professionals need to master is how AWS manages database backups and disaster recovery. In this blog post, we'll delve into the intricacies of AWS's robust strategies for safeguarding data, making it a fundamental topic for anyone pursuing AWS classes in Pune and aiming to excel in the Best AWS Certification Course in Pune.

AWS offers a comprehensive set of tools and services to ensure the security and resilience of databases. One key element is the AWS Backup service, designed to centralize and automate the backup of data across various AWS services. For those enrolled in AWS classes in Pune, understanding how to leverage AWS Backup is crucial. This service simplifies the backup process, providing a unified interface to schedule, manage, and monitor backups across databases, file systems, and more. By grasping these functionalities, students in AWS Training in Pune can implement efficient backup strategies in diverse AWS environments.

Disaster recovery is another critical aspect covered in AWS courses in Pune. AWS ensures high availability and quick recovery with services like Amazon RDS (Relational Database Service) and Amazon Aurora. These managed database services automatically create and retain backups, allowing for point-in-time recovery. During AWS classes in Pune, students learn to configure automated backups and set retention policies, tailoring disaster recovery solutions to specific business needs. The Best AWS Certification Course in Pune equips professionals with the skills to implement and manage resilient architectures that minimize downtime and data loss.
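A retention policy and a point-in-time restore can be expressed as two small requests. The sketch below only builds the parameter dictionaries; the helper names and instance identifiers are hypothetical. The dictionaries are shaped for the boto3 RDS calls `modify_db_instance` and `restore_db_instance_to_point_in_time`, and Aurora clusters use cluster-level equivalents instead.

```python
from datetime import datetime, timezone

MAX_RETENTION_DAYS = 35  # upper limit for RDS automated backup retention


def backup_retention_request(instance_id: str, days: int) -> dict:
    """Build parameters that set the automated backup retention period."""
    if not 0 <= days <= MAX_RETENTION_DAYS:
        raise ValueError(f"retention must be 0-{MAX_RETENTION_DAYS} days, got {days}")
    return {
        "DBInstanceIdentifier": instance_id,
        "BackupRetentionPeriod": days,   # 0 disables automated backups
        "ApplyImmediately": True,
    }


def point_in_time_restore_request(source_id: str, target_id: str, restore_time=None) -> dict:
    """Build parameters that restore a NEW instance from the source's backups."""
    params = {
        "SourceDBInstanceIdentifier": source_id,
        "TargetDBInstanceIdentifier": target_id,
    }
    if restore_time is None:
        params["UseLatestRestorableTime"] = True
    else:
        params["RestoreTime"] = restore_time.astimezone(timezone.utc)
    return params


# Hypothetical identifiers for illustration.
retention = backup_retention_request("orders-db", 14)
restore = point_in_time_restore_request(
    "orders-db",
    "orders-db-restored",
    datetime(2024, 3, 1, 12, 30, tzinfo=timezone.utc),
)
# With credentials configured, these could be passed to
# boto3.client("rds").modify_db_instance(**retention) and
# boto3.client("rds").restore_db_instance_to_point_in_time(**restore).
```

Note that a point-in-time restore creates a new instance rather than overwriting the source, which is why the request carries a separate target identifier.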

For those embarking on an AWS Course in Pune, understanding multi-region backup and recovery strategies is essential. AWS enables businesses to distribute data across multiple geographic locations, enhancing resilience against region-specific failures. Services like Amazon S3 cross-region replication and AWS Backup support the creation of redundant backups in different regions, allowing for seamless recovery in the face of disasters. This advanced level of disaster recovery planning is a key focus in AWS Training in Pune, emphasizing the importance of a well-rounded skill set for professionals in the field.

Conclusion: In conclusion, mastering AWS's database backup and disaster recovery mechanisms is a pivotal aspect of AWS Training in Pune. The dynamic nature of the digital landscape demands skilled professionals who can navigate and implement robust data protection strategies. Aspirants seeking the Best AWS Certification Course in Pune will find that a deep understanding of AWS's backup and recovery services positions them as valuable assets in the industry. By enrolling in AWS classes in Pune, professionals can unlock the full potential of AWS, ensuring data resilience and business continuity in the face of unforeseen challenges.

Website:- https://www.ssdntech.com

Contact Number:- 9999111686


Text

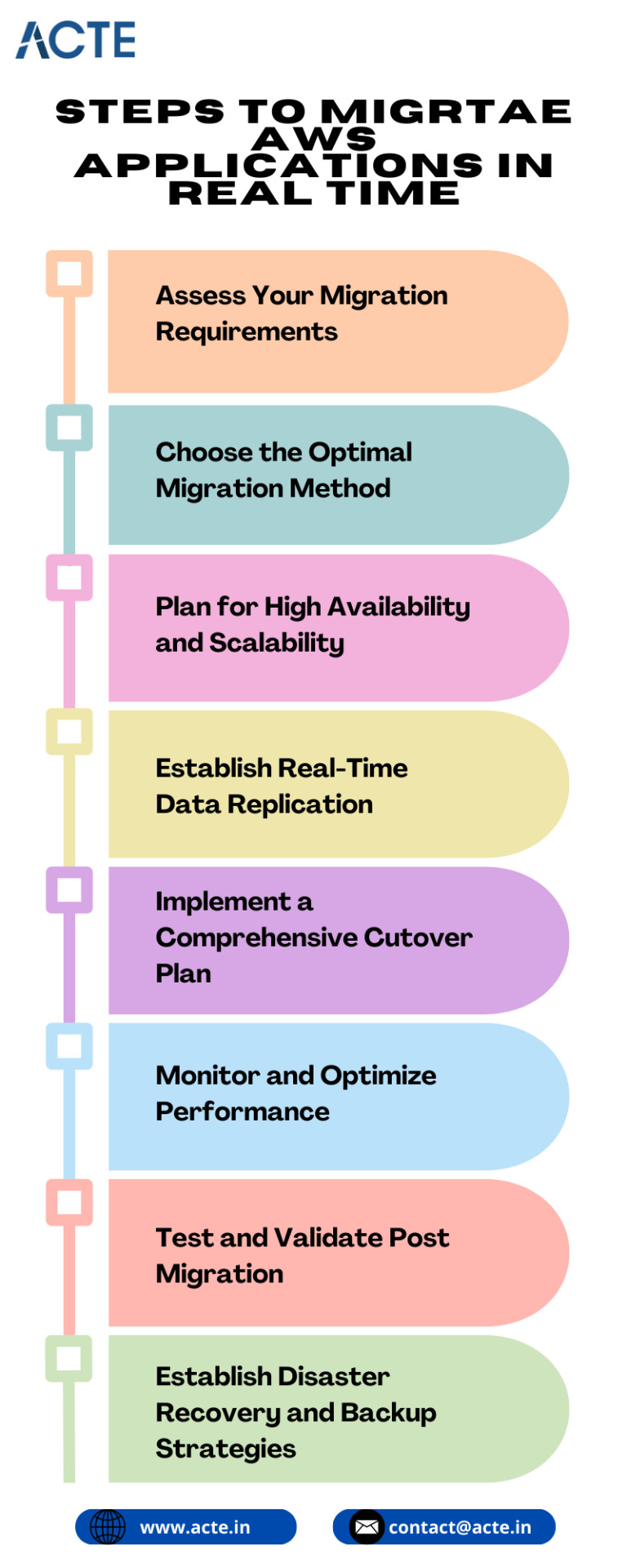

Migrating Your AWS Applications, Data, and Databases in Real Time: A Step-by-Step Guide

Migrating your AWS applications, data, and databases in real time can be a complex process. However, with careful planning and the right approach, you can achieve a seamless transition while ensuring minimal downtime and data integrity. We will walk you through the step-by-step process of migrating your AWS components in real time.

Step 1: Assess Your Migration Requirements:

Begin by thoroughly assessing your current environment. Identify the applications, data, and databases that need to be migrated and evaluate their dependencies. Consider the complexity, criticality, and compatibility of each component to devise an appropriate migration strategy.

Step 2: Choose the Optimal Migration Method:

AWS offers various migration methods to suit different scenarios. Evaluate options such as AWS Database Migration Service (DMS), AWS Server Migration Service (SMS), or AWS DataSync. Each method has its own strengths and considerations, so select the one that aligns with your specific needs.

Step 3: Plan for High Availability and Scalability:

Ensure high availability and scalability of your applications during the migration process. Utilize AWS services like Amazon EC2 Auto Scaling, Amazon RDS Multi-AZ, and Amazon Aurora Global Database to maintain optimal performance and handle increased traffic. Implement redundancy and failover mechanisms to minimize potential disruptions.

Step 4: Establish Real-Time Data Replication:

Real-time data replication is crucial for maintaining continuous availability during migration. Leverage AWS services like AWS DMS or utilize third-party tools to enable bi-directional data replication between the source and target environments. This ensures that data changes are synchronized in real time.
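For the AWS DMS route, ongoing replication is typically configured as a task that performs a full load and then keeps applying changes. The sketch below builds such a task definition for a one-way, source-to-target task. The helper names and ARNs are hypothetical placeholders; the resulting dictionary is shaped for the boto3 DMS call `create_replication_task`, which is not invoked here.

```python
import json


def table_mappings(schema: str = "%", table: str = "%") -> str:
    """Return a DMS selection rule (as JSON) that includes matching tables."""
    rules = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-tables",
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
            }
        ]
    }
    return json.dumps(rules)


def replication_task_request(task_id, source_arn, target_arn, instance_arn, schema="%"):
    """Build parameters for a task that does a full load, then continuous CDC."""
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load-and-cdc",
        "TableMappings": table_mappings(schema),
    }


# Placeholder ARNs for illustration only.
task = replication_task_request(
    "orders-migration",
    "arn:aws:dms:us-east-1:123456789012:endpoint:SOURCE",
    "arn:aws:dms:us-east-1:123456789012:endpoint:TARGET",
    "arn:aws:dms:us-east-1:123456789012:rep:INSTANCE",
    schema="sales",
)
```

The `%` wildcard selects every schema or table; narrowing it, as with `sales` here, limits what the task replicates.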

Step 5: Implement a Comprehensive Cutover Plan:

Develop a detailed cutover plan to smoothly transition from the source to the target environment. Include steps for final data synchronization, DNS updates, application switchover, and post-migration functionality verification. Test the plan in a controlled environment to identify and address any potential issues before the actual migration.

Step 6: Monitor and Optimize Performance:

Monitor the performance and health of your applications, data, and databases throughout the migration process. Utilize Amazon CloudWatch to track key metrics, set up alerts, and ensure optimal performance. Continuously optimize your AWS resources by right-sizing instances, optimizing database configurations, and leveraging services like AWS Lambda and Amazon CloudFront.

Step 7: Test and Validate Post-Migration:

Thoroughly test and validate the functionality of your applications, data, and databases in the new environment. Conduct comprehensive testing, including functional, performance, and security testing, to ensure everything is working as expected. Monitor the post-migration environment closely to identify and address any lingering issues.

Step 8: Establish Disaster Recovery and Backup Strategies:

Ensure robust disaster recovery and backup strategies are in place. Implement automated backup mechanisms, leverage AWS services like AWS Backup, and regularly test your disaster recovery processes to ensure business continuity and data protection.

Migrating your AWS applications, data, and databases in real time requires careful planning, a well-defined strategy, and meticulous execution. By following these step-by-step guidelines, you can achieve a successful migration with minimal disruptions. Remember to assess your migration requirements, choose the right migration method, establish real-time data replication, and thoroughly test and validate the post-migration environment. With proper planning and execution, you can seamlessly migrate your AWS components in real time.

Don’t miss this opportunity to advance your career in the dynamic field of cloud computing!

If you are searching for the AWS course, I recommend exploring the official AWS Training and Certification website at ACTE Technologies. They offer a wealth of resources, including study guides, practice exams, and training courses that cater to different learning styles.


Text

AWS Cloud Practitioner - study notes

Databases

------------------------------------------------------

Amazon Relational Database Service (RDS):

Service which makes it easy to launch and manage relational databases.

Supports popular databases

High availability and fault tolerance when using Multi-AZ deployments

AWS manages automatic software patching, backups, operating system maintenance, and more

Enhance performance and durability by launching read replicas across regions

Use case: migrate an on-premises (Oracle/PostgreSQL) database to the cloud

Amazon Aurora:

Relational database compatible with MySQL and PostgreSQL.

Supports MySQL and PostgreSQL

Up to 5x faster than standard MySQL and up to 3x faster than standard PostgreSQL

Scales automatically

Managed by RDS

Use case: migrate an on-premises PostgreSQL database to the cloud.

Amazon DynamoDB:

Fully managed NoSQL key-value and document database.

NoSQL key-value database

Fully managed and serverless

Scales automatically

Non-relational

Use case: NoSQL database fast enough to handle millions of requests per second.

Amazon DocumentDB:

Fully managed document database which supports MongoDB.

Document database

MongoDB compatible

Fully managed and serverless

Non-relational

Use case: Operate MongoDB workloads at scale.

Amazon ElastiCache:

Fully managed in-memory datastore compatible with Redis or Memcached.

In-memory datastore

Compatible with Redis or Memcached

Data can be lost

Offers high performance and low latency

Use case: Alleviate database load for data that is accessed often.
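One common way to use an in-memory store for this use case is the cache-aside pattern: check the cache first and fall back to the database only on a miss. The sketch below is illustrative only; a plain dictionary stands in for Redis or Memcached, and the class and parameter names are hypothetical.

```python
import time


class CacheAside:
    """Serve hot keys from memory and hit the database only on a miss."""

    def __init__(self, load_from_db, ttl_seconds=60.0, clock=time.monotonic):
        self._load = load_from_db      # function that reads one key from the database
        self._ttl = ttl_seconds
        self._clock = clock            # injectable so expiry can be tested
        self._entries = {}             # key -> (value, expiry time)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is not None and entry[1] > self._clock():
            return entry[0]                        # cache hit
        value = self._load(key)                    # miss or expired: go to the database
        self._entries[key] = (value, self._clock() + self._ttl)
        return value
```

Because cached data can be lost or expire, the database remains the source of truth and the cache only absorbs repeated reads.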

Amazon Neptune:

Fully managed graph database that supports highly connected datasets.

Graph database service

Supports highly connected datasets

Fully managed and serverless

Fast and reliable

Use case: Process large sets of user profiles and social interactions.

Text

What is Amazon Aurora?

Explore Amazon Aurora and its features in detail.

Amazon Aurora is a fully managed relational database service offered by Amazon Web Services (AWS). It is designed to provide high performance, availability, and durability while being compatible with MySQL and PostgreSQL database engines.

Amazon Aurora, a powerful and scalable database solution provided by AWS, is a fantastic choice for businesses looking to streamline their data management. When it comes to optimizing your AWS infrastructure, consider leveraging professional AWS consulting services. These services can help you make the most of Amazon Aurora and other AWS offerings, ensuring that your database systems and overall cloud architecture are finely tuned for performance, reliability, and security.

Here are some of the key features of Amazon Aurora:

1. Compatibility: Amazon Aurora is compatible with both MySQL and PostgreSQL database engines, which means you can use existing tools, drivers, and applications with minimal modification.

2. High Performance: It offers excellent read and write performance due to its distributed, fault-tolerant architecture. Aurora uses a distributed storage engine that replicates data across multiple Availability Zones (AZs) for high availability and improved performance.

3. Replication: Aurora supports both automated and manual database replication. You can create up to 15 read replicas, which can help offload read traffic from the primary database and improve read scalability.

4. Global Databases: You can create Aurora Global Databases, allowing you to replicate data to multiple regions globally. This provides low-latency access to your data for users in different geographic locations.

5. High Availability: Aurora is designed for high availability and fault tolerance. It replicates data across multiple AZs, and if the primary instance fails, it automatically fails over to a replica with minimal downtime.

6. Backup and Restore: Amazon Aurora automatically takes continuous backups and offers point-in-time recovery. You can also create manual backups and snapshots for data protection.

7. Security: It provides robust security features, including encryption at rest and in transit, integration with AWS Identity and Access Management (IAM), and support for Virtual Private Cloud (VPC) isolation.

8. Scalability: Aurora allows you to scale your database instances up or down based on your application's needs, without impacting availability. It also offers Amazon Aurora Auto Scaling for automated instance scaling.

9. Performance Insights: You can use Amazon RDS Performance Insights to monitor the performance of your Aurora database, making it easier to identify and troubleshoot performance bottlenecks.

10. Serverless Aurora: AWS also offers a serverless version of Amazon Aurora, which automatically adjusts capacity based on actual usage. This can be a cost-effective option for variable workloads.

11. Integration with AWS Services: Aurora can be integrated with other AWS services like AWS Lambda, AWS Glue, and Amazon SageMaker, making it suitable for building data-driven applications and analytics solutions.

12. Cross-Region Replication: You can set up cross-region replication to have read replicas in different AWS regions for disaster recovery and data locality.

These features make Amazon Aurora a powerful and versatile database solution for a wide range of applications, from small-scale projects to large, mission-critical systems.
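To benefit from read replicas, an application has to send reads and writes to different endpoints. An Aurora cluster exposes a cluster (writer) endpoint and a reader endpoint; the sketch below shows one naive way to choose between them. The hostnames are made-up placeholders and the keyword-based routing rule is an assumption for illustration, not an AWS feature.

```python
# Hypothetical endpoint hostnames for illustration only.
WRITER = "example.cluster-abc123.us-east-1.rds.amazonaws.com"
READER = "example.cluster-ro-abc123.us-east-1.rds.amazonaws.com"

READ_KEYWORDS = ("select", "show", "explain")


def pick_endpoint(sql: str, writer: str = WRITER, reader: str = READER,
                  in_transaction: bool = False) -> str:
    """Send plain reads to the reader endpoint and everything else to the writer."""
    words = sql.lower().split()
    first_word = words[0] if words else ""
    normalized = " ".join(words)
    is_read = first_word in READ_KEYWORDS and " for update" not in normalized
    # Statements inside a transaction stay on the writer for consistency.
    if is_read and not in_transaction:
        return reader
    return writer
```

Replicas can lag slightly behind the writer, so reads that must see a just-committed write are safer on the writer endpoint.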


Text

Amazon Aurora Database Explained for AWS Cloud Developers

Full Video Link - https://youtube.com/shorts/4UD9t7-BzVM

Hi, a new #video #tutorial on #amazonrds #aws #aurora #database #rds is published on #codeonedigest #youtube channel.

@java @awscloud @AWSCloudIndia @YouTube #youtube @codeonedigest #cod

Amazon Aurora is a relational database management system (RDBMS) built for the cloud & gives you the performance, availability of commercial-grade databases at one-tenth the cost. Aurora database comes with MySQL & PostgreSQL compatibility.

Amazon Aurora provides built-in security, continuous backups, serverless compute, up to 15 read replicas, automated multi-Region replication, and…


#amazon aurora#amazon aurora mysql#amazon aurora postgresql#amazon aurora serverless#amazon aurora tutorial#amazon web services#amazon web services tutorial#aurora database aws#aurora database tutorial#aurora database vs rds#aurora db#aurora db aws#aurora db aws tutorial#aurora db cluster#aurora db with spring boot#aws#aws aurora#aws aurora mysql#aws aurora postgresql#aws aurora tutorial#aws aurora vs rds#aws cloud#what is amazon web services


Text

AWS CDK database queries in PostgreSQL and MySQL

With support for the AWS Cloud Development Kit (AWS CDK), you can now use AWS Amplify to connect to and query your existing MySQL and PostgreSQL databases. This new feature lets you build a secure, real-time GraphQL API for your relational database, whether it runs inside or outside of Amazon Web Services (AWS). With just your database endpoint and login credentials, you can generate the full API for all relational database operations. Whenever your database schema changes, you can run a command to apply the latest table schema changes.

With the release of AWS Amplify GraphQL Transformer version 2, which was announced in 2021, developers can now create GraphQL-based app backends that are more feature-rich, adaptable, and extensible with little to no prior cloud experience. In order to create extensible pipeline resolvers that can route GraphQL API requests, apply business logic like authorization, and interact with the underlying data source like Amazon DynamoDB, this new GraphQL Transformer was completely redesigned.

But in addition to Amazon DynamoDB, users also desired to leverage relational database sources for their GraphQL APIs, including their Amazon RDS or Amazon Aurora databases. Amplify GraphQL APIs now support @model types for relational and DynamoDB data sources. Data from relational databases is produced into a different file called schema.sql.graphql. You may still build and maintain DynamoDB-backed types with standard schema.graphql files.

Given the connection information for any MySQL or PostgreSQL database, whether it is publicly accessible online or sits inside a virtual private cloud (VPC), AWS Amplify automatically produces a modifiable GraphQL API that securely connects to your database tables and exposes CRUD (create, read, update, or delete) queries and mutations. To make your data models more frontend-friendly, you may also rename them. For instance, a database table with the name “todos” (plural, lowercase) may be exposed to the client as “ToDo” (singular, PascalCase).

You can add any of the existing Amplify GraphQL authorization rules to your API with a single line of code, which makes it straightforward to build use cases such as owner-based authorization or public read-only patterns. Because the generated API is built on AWS AppSync’s GraphQL capabilities, secure real-time subscriptions are available out of the box. With a few lines of code, you can subscribe to any CRUD event from any data model.

Getting started with your MySQL database in the AWS CDK

The AWS CDK lets you build reliable, scalable, and cost-effective cloud applications with the considerable expressive power of a programming language. To begin, install the AWS CDK on your local machine.

To print the AWS CDK version number and confirm that the installation is correct, use the following command.

$ cdk --version

Next, create a new directory for your app and change into it.

Use the cdk init command to set up a CDK application.

Add the GraphQL API construct from Amplify to the newly created CDK project.

Open your CDK project’s main stack file, which is usually located at lib/<your-project-name>-stack.ts, and import the Amplify GraphQL API construct at the top of the file.

To generate a GraphQL schema for a new relational database API, first run a SQL query on your MySQL database that exports its table schema.

Make sure the results are written to a .csv file with column headers included, and replace <database-name> in the query with the name of your database, schema, or both.

Run the following command, replacing <path-to-schema.csv> with the path to the .csv file created in the previous step.

$ npx @aws-amplify/cli api generate-schema \
    --sql-schema <path-to-schema.csv> \
    --engine-type mysql --out lib/schema.sql.graphql

To view the imported data model from your MySQL database schema, open the schema.sql.graphql file.

If you haven’t already done so, create parameters for your database’s connection information, including the hostname or URL, database name, port, username, and password, in the Parameter Store of the AWS Systems Manager console. Amplify needs these in the next step to connect to your database and run GraphQL queries or mutations against it.

To define a new GraphQL API, add the following code to the main stack class. Replace the dbConnectionConfig options with the parameter paths created in the previous step.

This configuration assumes that your database is accessible from the internet. In addition, the default authorization mode is set to API key for AWS AppSync, and sandbox mode is enabled to allow public access on all models. This is useful for testing your API before you add more fine-grained authorization rules.
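As a rough illustration of the connection settings described above, the TypeScript sketch below builds one Parameter Store path per connection value under a shared prefix. It is not the stack code itself: the DbConnectionConfig interface is declared locally, its field names are an assumption about what the Amplify construct expects, and the /amplify-cdk-app prefix is a made-up example, so check all of them against the construct's documentation.

```typescript
// Shape of the connection settings, modeled locally so this sketch has no
// dependencies. The field names are an assumption; verify them against the
// documentation of the Amplify GraphQL API construct before relying on them.
interface DbConnectionConfig {
  hostnameSsmPath: string;
  portSsmPath: string;
  databaseNameSsmPath: string;
  usernameSsmPath: string;
  passwordSsmPath: string;
}

// Builds the five Parameter Store paths under a common prefix.
// The prefix passed in below is a hypothetical example.
function buildDbConnectionConfig(prefix: string): DbConnectionConfig {
  const base = prefix.replace(/\/+$/, ""); // drop any trailing slashes
  return {
    hostnameSsmPath: `${base}/hostname`,
    portSsmPath: `${base}/port`,
    databaseNameSsmPath: `${base}/database`,
    usernameSsmPath: `${base}/username`,
    passwordSsmPath: `${base}/password`,
  };
}

const dbConnectionConfig = buildDbConnectionConfig("/amplify-cdk-app/");
console.log(dbConnectionConfig.hostnameSsmPath);
```

Keeping every value under one prefix makes it easier to grant the API read access to just that part of the Parameter Store, and the password never appears in the stack source.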

Lastly, deploy your GraphQL API to the AWS Cloud.

In the AWS AppSync console, choose your project and then the Queries menu. The newly created GraphQL APIs, such as getMeals to retrieve a single item or listRestaurants to list all items, correspond to the tables in your MySQL database.

For instance, when you select items with fields such as address, city, name, and phone number, a new GraphQL query appears. Choose the Run button to view the query results from your MySQL database.

Running the same query directly on your MySQL database returns identical results.
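Outside the console, the same kind of query can be sent over HTTPS. The TypeScript sketch below only assembles the request, assuming API key authorization (AppSync reads the key from the x-api-key header). The endpoint URL, the key, and the field names name, address, city, and phone are placeholders rather than values from a real API.

```typescript
// Plain description of an HTTP request, so the sketch needs no libraries.
interface GraphqlRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Assembles a listRestaurants query for an AppSync endpoint that uses
// API key authorization. The selected fields are hypothetical.
function buildListRestaurantsRequest(endpoint: string, apiKey: string): GraphqlRequest {
  const query = `
    query ListRestaurants {
      listRestaurants {
        items { name address city phone }
      }
    }`;
  return {
    url: endpoint,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "x-api-key": apiKey, // header AppSync checks in API key mode
    },
    body: JSON.stringify({ query }),
  };
}

// Placeholder endpoint and key, for illustration only.
const listRequest = buildListRestaurantsRequest(
  "https://example.appsync-api.us-east-1.amazonaws.com/graphql",
  "da2-exampleapikey"
);
console.log(listRequest.method, listRequest.url);
```

To send it, pass the pieces to fetch, for example fetch(listRequest.url, { method: listRequest.method, headers: listRequest.headers, body: listRequest.body }), and read the data field of the JSON response.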

Now available

Relational database support for AWS Amplify now works with any MySQL or PostgreSQL database hosted anywhere within an Amazon VPC or even outside of the AWS Cloud.

Read more on Govindhtech.com

#aws#mysql#postgresql#api#GraphQLAPI#database#CDK#VPC#cloudcomputing#technology#technews#govindhtech

0 notes

Text

What is necessary to learn AWS?

To learn Amazon Web Services (AWS) effectively, it is essential to follow a structured learning path that includes the following key elements:

Cloud Computing Fundamentals: Start by understanding the basic concepts of cloud computing, such as the advantages of cloud services, different cloud models (public, private, hybrid), and essential cloud terminologies.

AWS Core Services: Familiarize yourself with the core AWS services, such as Amazon EC2 (Elastic Compute Cloud), Amazon S3 (Simple Storage Service), Amazon RDS (Relational Database Service), AWS Lambda, and Amazon VPC (Virtual Private Cloud). These services form the foundation of most AWS solutions.

AWS Architecture and Design: Learn how to design scalable, reliable, and cost-effective architectures using AWS services. Understand various design patterns and best practices for building cloud applications.

Security and Compliance: Gain knowledge about AWS security features, identity and access management (IAM), encryption, and compliance frameworks. Learn how to secure AWS resources and meet industry-specific regulations.

AWS Networking: Explore AWS networking concepts, including Virtual Private Cloud (VPC), subnets, Internet Gateways, and Elastic Load Balancing (ELB). Understand how to set up and configure networking in the AWS environment.

AWS Compute Services: Dive deeper into AWS compute services, such as Amazon EC2, AWS Lambda (serverless computing), Amazon ECS (Elastic Container Service), and Amazon EKS (Elastic Kubernetes Service).

AWS Storage Services: Learn about various AWS storage options, including Amazon S3, Amazon EBS (Elastic Block Store), Amazon Glacier (for archival storage), and Amazon FSx (managed file system).

AWS Databases: Familiarize yourself with AWS database services like Amazon RDS, Amazon DynamoDB (NoSQL database), Amazon Aurora (MySQL and PostgreSQL compatible database), and Amazon Redshift (data warehousing).

0 notes

