#SN X P5

Text

Ok, I’ve had a Persona 5 X Shining Nikki AU rattling around in my head for a while now.

If you are interested in my ramblings, continue onwards; otherwise, I hope y’all have a good day/night!

The only reason this AU exists is because I think P5 and SN’s stories are surprisingly similar in theme, what with the “let’s believe in humanity and save it while using powers that come from a place between reality and myth” thing going on. Also, I’m not super into angst or lore in this AU. I could conjure up some if people are interested, but most of this is fluff headcanoning. Also also, this is a chaotic mess of a post, so apologies for very disjointed thoughts at times, but I couldn’t care less about organization right now.

In my own personal headcanon of SN, the way styling power works is that stylists can use and/or make their own Designer Reflections (DRs) freely; however, they cannot use other people’s. (Ex: Nikki can use her own, but she can’t use Momo’s DR.) The only person who can use DRs made by another stylist is the player. (My player is named Lily Kayla, so that is what I will be referring to her as!) Nikki simply has more styling power than most people, and Lily Kayla is the one who channels other DRs for Nikki to use.

Now, I don’t particularly care whether Nikki, Lily Kayla, and Momo go to the P5 universe, or if the Phantom Thieves go to the SN universe. I have ideas for both, which I will now ramble about. SN in P5 is here if you scroll down a bit.

Phantom Thieves in the Shining Nikki Universe

If the PTs go to the SN universe, I think they would be considered stylists and their Personas would transfer over into having a main style. Here’s a list that I will make up on the spot now, along with which nation style they would best fit into!

Joker: cool, north or apple

Mona: cool or cute, apple

Skull: fresh, apple

Panther: sexy or cute, apple

Fox: elegant, cloud

Queen: cool or sexy, north

Oracle: cool, wasteland or ruin

Noir: cute, pigeon or Ninir

Crow: elegant and then cool, Ninir change to north

Violet: elegant or cute, Ninir or pigeon

There’s a lot of “cool” in there I know, but I just think it fits.

And now jumping into character interactions because that’s the main reason why I like crossover stuff in the first place.

I think Lily Kayla would totally show the ark off to the PTs. She would also show off a lot of the DRs she has acquired. Sorika (SN’s version of the gentlewoman thief) would definitely be on the list to show off. I think Lily would also show off or meet up with Ai, simply for Futaba’s sake. I could also see something possibly coming up with the Justiciars, and the PTs could go and meet Zoey’s crew. Maybe Zoey could show Ann more ways to use her whip along with general gun skills. I’d like to think that Nikki would surprise them with how good her gun skills are, or something of the sort.

Lily Kayla, Nikki, and Momo would definitely take the PTs on, like, a small tour or something (I have no idea how big Miraland actually is). Yusuke would definitely get lost looking at something, and I think he would just have a good time in general. I also want the cat-not-cats to talk to each other, just to see how that conversation would go. I think Futaba would also freak out at the prospect of Ruin Island until the Phantom Thieves come to find out what happened.

The entire Glow arc, alongside Modric having the ability to remove people’s memories with music, could definitely be connected with Maruki’s entire thing. The PTs and the Shining Gang would have some interesting topics that they could convene on.

Oh! Nikki and Lily Kayla would definitely dress the PTs up with their extensive wardrobe. I actually have a picture I drew of the PTs in casual outfits, but I won’t add it here because I’m not even halfway through and I could still keep going. I also think Lily would like to show off the model guns and swords they own for the purpose of ✨fashion✨. That’s mostly the gist of this half of the AU. There’s definitely more that I’ve thought of, but I’ll cut this part short before it turns into a small fanfiction.

Shining Nikki in the Persona 5 Universe

I think that Nikki and Lily Kayla would be in the P5 universe thanks to one of Aeon’s experiments. They would initially end up in Mementos, and whether they meet the PTs while they are in Mementos or after they escape from it, I don’t really care. I’m just kinda open to anything really.

Because Lily Kayla is the only one who has access to multiple DRs, I think she would be placed in the wildcard/velvet attendant spot. Specifically, I think she would be Nikki’s velvet attendant and Nikki would be considered the wild card. I think that upon first arrival, Justine and Caroline would throw her in a jail cell and Joker would have to bargain with them (maybe a plea deal or something) to get her out. From then on Lily doesn’t go near the Velvet Room anymore. (I also have a drawing of Lily in an attendant outfit, but as stated before, unless someone asks for it I’ll leave it for a different post.) (I should also make Nikki a PT outfit!!)

DRs would change from having style elements to Metaverse Elements. Here are a few outfits I think would get used often and what element I think they would have. (Btw, I’m going off of my own personal collection, so if there’s a better DR which would fit an element that I don’t have, that’s why I didn’t mention it! :)) (also please keep in mind that I’ve never actually played P5 or P5R, I’ve just watched RTgame play it on twitch/youtube)

Zoey•Bloodthirsty Queen: Gun/Physical

Caprico•Cold Flame: Nuke/Electric

Yunikina•Song of Dawn: Ice

Erinka•Banquet of Passion: Fire

Qin Yi•Phoenix out of Palace: Fire

Yuntan•Fleeting Dream: Wind

Mercury•Elves’ Prayer: Bless/Light

Nikki•Guides of Star: Bless/Light

Sorika•Night Camouflage: Curse/Dark

Ai•Virtual Adventure: Electric

Aeon•Sea Mist: Psychic

Etc. I think you get the idea because I could list DR and what metaverse element I think they could be forever, but I’m going to stop myself here. I think Lily Kayla would often switch who she’s using just to get a feel for who’s good for what.

But, general overview, most of Zoey’s DRs are gonna be Gun/Physical, Caprico’s or anyone from Ruin is gonna be Nuke or Electric, Lilith’s are Curse/Dark, Nikki, Stars, and Light elves are Bless/Light, etc. etc. (if you want to know more then ask and I’ll respond!)

MOVING ON!!! —->

I think Lily Kayla in the real world (outside of Mementos) would be incorporeal. Kinda like Morgana, where unless you’ve seen them in the Metaverse or know that they exist, they’ll be a ghost or Phantom (haha).

Speaking of Lily Kayla!! Because she is basically my OC at this point, I have some extra points I’d like to bring up. She’d probably make the connection from the Velvet Room to the Ark and talk to either Justine and Caroline or Joker about the Sea of Souls vs the Ocean of Memories. I think Lily Kayla would rope Nikki into telling the PTs about how they defeated the Goddess of Desire from their world sometime after the PTs explain how Mementos and Palaces work. Also, she’d be absolutely horrified by the fusions. Perhaps even traumatized. Lily’s way of summoning DRs in this world is through her mirror thing. As long as she has that, I kinda think it would work like a compendium of sorts.

When the DRs get summoned in Mementos, Lily and Nikki can control what elements from the outfit and the memory get summoned. Key word: can. Most of the time I think they would just summon the whole outfit and memory to save the trouble, but I do think that at certain times specific parts of an outfit could be summoned on their own. (Ex: only summoning Zoey’s Gun, Netga’s Mech, or any other accessory to an outfit. With only summoning memories, it’d only be so that Lily or Nikki could talk to them or get advice from them.)

Now for outside stuff that could happen!! Maybe once Lily Kayla becomes more physical (or maybe not I’m not picky) she and Nikki would probably just hang around the PTs and maybe crash at someone’s place. (Probably Haru’s). Joker (I’m calling P5 Protag Joker because I don’t have a preference on his name) would make them curry and I know that Nikki would fall in love with it. She’d probably want to cook with Joker sometime, but Lily Kayla would stop her before she could make something that even Yexiao couldn’t swallow.

Depending on how you interpret NG+, Nikki and Joker could get along with being tortured time travel buddies. Joker and Lily could get along with being the respective “wildcard” of their groups too. Lily would still probably introduce the thieves to a few of their friends from back home by summoning their memories.

Okay!! This post is long enough, I’m definitely cutting myself off here!! If even one person asks more about this insane crossover, then I’d be happy to write more about it, but for now this will satisfy me! If anyone gets to the bottom of this post, congrats! I’m glad you found me and my very niche demographic of P5 and SN fans who also happen to like fluffy crossover stuff! I hope to see you again soon!

#sn#shining nikki#persona 5#p5#I mention like#all of the main persona characters#but I don’t really go into detail about any one of them specifically#persona 5 crossover#shining Nikki crossover#I don’t know what to tag this as#SN X P5


Text

my ship tags by media

ANIMANGA:

Akagami no Shirayukihime = ans ships, Beastars = beastars ships, Bleach = bleach ships, Boku no Hero Academia = bnha ships, Code Geass = cg ships, Death Note = dn ships, Fullmetal Alchemist = fma ships, Gekkan Shoujo Nozaki-kun = gsnk ships, JoJo’s Bizarre Adventure = jjba ships, Kimetsu no Yaiba = kny ships, Kuroko no Basuke = knb ships, Naruto = naruto ships, One Punch Man = opm ships, Otome Game no Hametsu Flag... = hamefura ships, Ouran High School Host Club = ohshc ships, Sailor Moon = sm ships, Shingeki no Kyojin = snk ships, Spy x Family = sxf ships, Studio Ghibli = sg ships, Tokyo Ghoul = tg ships, Yamada-kun and the Seven Witches = ykatsw ships, Yu Yu Hakusho = yyh ships, Yu-Gi-Oh! = ygo ships, Zankyou no Terror = znt ships

VIDEO GAMES:

Bendy and the Ink Machine = batim ships, Bravely Default = bd ships, Catherine = catherine ships, Corpse Party = corpse party ships, Danganronpa = dr ships, Final Fantasy = ff ships, Fire Emblem = fe ships, Life is Strange = lis ships, NieR: Automata = na ships, Persona 5 = p5 ships, Pokemon = pokemon ships, Resident Evil = re ships, Rune Factory = rf ships, Soul Nomad & the World Eaters = sn ships, The Legend of Zelda = tloz ships, The Letter = tl ships, Yandere Simulator = ys ships

MOVIES: Any other movie ship = movie ships

Star Wars = sw ships, The Lord of the Rings = tlotr ships, Titanic = titanic ships.

SHOWS:

Doctor Who = drw ships, Friends = friends ships, Merlin = merlin ships, The Big Bang Theory = tbbt ships, The Vampire Diaries = tvd ships

WESTERN ANIMATION:

Avatar: The Last Airbender = atla ships, Castlevania = castlevania ships, Class of the Titans = cott ships, Codename Kids Next Door = cknd ships, Danny Phantom = dp ships, Disney = disney ships, Dreamworks = dw ships, Futurama = futurama ships, Kim Possible = kp ships, Kung Fu Panda = kfp ships, Miraculous Ladybug = mlb ships, Nijiiro Days = nd ships, Pixar = pixar ships, RWBY = rwby ships, Scooby Doo = sd ships, Total Drama = td ships, Totally Spies = ts ships, W.I.T.C.H = w.i.t.c.h ships, Winx Club = wc ships

COMICS:

DC = dc ships, Marvel = marvel ships

BOOKS:

Harry Potter = hp ships, Little Women = lw ships, Percy Jackson & the Olympians = pjo ships, Septimus Heap = sh ships, Twilight = twilight ships

DRAMA SHIPS = drama ships (any jdrama or kdrama ships)

Mainly from: Flower of Evil, Goblin & Faith

CROSSOVER SHIPS = crossover ships

Mainly: Jack x Elsa, Kuzco x Chel, Hades x Eris

MISC. SHIPS = misc. ships (any other ship basically):

Gomez x Morticia, Roger Rabbit x Jessica Rabbit, Merlin x Snow White, Veronica x Jughead, Nega Wanda x Nega Cosmo, Cam x Brandy, Nadia x Lucio, Jack x Rose, Anastasia x Dimitri, Shark Boy x Lava Girl & others.

GENERAL = shipping (tag about ships/shipping in fandom)


Note

how did you get into persona 5, hunter x hunter, naruto, and ace attorney?

oo thats a lot ok

p5: violet was a fan, it seemed interesting + people compared it to aa w the whole. hey man politicians kinda suck + i kept on getting assigned joker so eventually i gave in and just bought it

hxh: um. my phones always on mute for reasons so im not often all that caught up on trends? like ive never heard the ps5 guy to this day.

but one day i was on post limit but scrolling the dash anyways, and then this. misery x cpr x reeses puffs vid w kurapika, leorio, and killua and gon showed up and i think i just sat there watching it for almost an hour 😭😭 i never did find the video again sadly but thats what convinced me to officially start

naruto: i was gonna start this in grade 6 when everyone was obsessed but at that point i had Never watched a show on my own mostly bc i wasnt allowed to have interests and uh. 720 episodes was pretty intimidating for a beginner.

but then (a year ago yeah! happy one year anniversary to me and naruto) blablabla some kids in my science class named their lab group after naruto and got me thinking ab it again, my sister couldnt choose a new show to watch and i knew naruto was long so i recommended that, as a JOKE.

we watched the first few episodes but stopped when sakuras daydreaming ab sasuke kissing her forehead, at this point i had been on tumblr long enough that i had seen the what i THOUGHT was exaggerated sns stuff, so i thought itd be funny if i jokingly called it a family experience. ironic fortnite sasuke theme.

it was not funny. im still stuck here what the FUCK. stuck with watching it with my PARENTS OF ALL THINGS its like when you watched movies w your parents as a kid and every time the lead and the Girl looked at each other youd internally chant "dont kiss dont kiss dont kiss-" and then theyd kiss. except now its "dont use the strangest wording to talk about your Bond naruto dont look fondly back at him dont be obsessed with him all the time dont connect this back to sasuk-" and then he does.

aa: kris was liveblogging and i like mystery stuff so it seemed up my alley and uh. it was! started this back on faerociousbeast so its been two years of that now i think 😭😭 lawyers. this was one of the more normal ways for me to get into a series


Text

Source: NESARC 2001 study

I want to talk about the frequency of smoking and nicotine dependence. I will focus on the 18-25 age group.

Below is the program I have created to present data.

import pandas as pd

import numpy as np

import seaborn as sns

# import entire dataset to memory

data = pd.read_csv('nesarc_pds.csv', low_memory=False)

# Uppercase all DataFrame column names

data.columns = map(str.upper, data.columns)

# bug fix for display formats to avoid run time errors

pd.set_option('display.float_format', lambda x:'%f' %x)

print(len(data)) # Number of observations (rows)

print(len(data.columns)) # Number of variables (columns)

print(len(data.index))

print ("counts for TAB12MDX - nicotine dependence in the past 12 months, yes=1")

c1 = data["TAB12MDX"].value_counts(sort=False).sort_values(ascending=True)

print (c1)

print("percentages for TAB12MDX nicotine dependence in the past 12 months, yes=1")

p1 = data["TAB12MDX"].value_counts(sort=False, normalize=True).sort_values(ascending=True)

print (p1)

print ("counts for CHECK321 smoked in the past year, yes=1")

c2 = data["CHECK321"].value_counts(sort=False).sort_values(ascending=True)

print (c2)

print ("percentages for CHECK321 smoked in the past year, yes=1")

p2 = data["CHECK321"].value_counts(sort=False, normalize=True).sort_values(ascending=True)

print (p2)

print ("counts for S3AQ3B1 - usual frequency when smoked cigarettes")

c3 = data["S3AQ3B1"].value_counts(sort=False).sort_values(ascending=True)

print (c3)

print ("percentages for S3AQ3B1 - usual frequency when smoked cigarettes")

p3 = data["S3AQ3B1"].value_counts(sort=False, normalize=True).sort_values(ascending=True)

print (p3)

print ("counts for S3AQ3C1 usual quantity when smoked cigarettes")

c4 = data["S3AQ3C1"].value_counts(sort=False, dropna=False).sort_values(ascending=True)

print(c4)

print("percentages for S3AQ3C1 usual quantity when smoked cigarettes")

p4 = data["S3AQ3C1"].value_counts(sort=False, normalize=True).sort_values(ascending=True)

print (p4)

ct1 = data.groupby('TAB12MDX').size()

print(ct1)

pt1 = data.groupby('TAB12MDX').size() * 100 / len(data)

print(pt1)

sub1=data[(data['AGE']>=18) & (data['AGE']<=25) & (data['CHECK321']==1)]

sub2=sub1.copy()

print('counts for AGE')

c5 = sub2['AGE'].value_counts(sort=False)

print(c5)

print('percentages for AGE')

p5 = sub2['AGE'].value_counts(sort=False, normalize=True)

print(p5)

print('counts for CHECK321')

c6 = sub2['CHECK321'].value_counts(sort=False)

print(c6)

print('percentages of CHECK321')

p6 = sub2['CHECK321'].value_counts(sort=False, normalize=True)

print(p6)

Here is the output of the program when executed:

counts for TAB12MDX - nicotine dependence in the past 12 months, yes=1

1 4962

0 38131

Name: TAB12MDX, dtype: int64

percentages for TAB12MDX nicotine dependence in the past 12 months, yes=1

1 0.115146

0 0.884854

Name: TAB12MDX, dtype: float64

counts for CHECK321 smoked in the past year, yes=1

9.000000 22

2.000000 8078

1.000000 9913

Name: CHECK321, dtype: int64

percentages for CHECK321 smoked in the past year, yes=1

9.000000 0.001221

2.000000 0.448454

1.000000 0.550325

Name: CHECK321, dtype: float64

counts for S3AQ3B1 - usual frequency when smoked cigarettes

9.000000 102

5.000000 409

2.000000 460

3.000000 687

4.000000 747

6.000000 772

1.000000 14836

Name: S3AQ3B1, dtype: int64

percentages for S3AQ3B1 - usual frequency when smoked cigarettes

9.000000 0.005663

5.000000 0.022706

2.000000 0.025537

3.000000 0.038139

4.000000 0.041470

6.000000 0.042858

1.000000 0.823627

Name: S3AQ3B1, dtype: float64

counts for S3AQ3C1 usual quantity when smoked cigarettes

39.000000 1

21.000000 1

34.000000 1

33.000000 1

57.000000 1

66.000000 1

75.000000 2

23.000000 2

37.000000 2

27.000000 2

55.000000 2

28.000000 3

29.000000 3

19.000000 5

24.000000 7

45.000000 8

22.000000 10

70.000000 12

98.000000 15

17.000000 22

11.000000 23

14.000000 25

35.000000 30

13.000000 34

16.000000 40

80.000000 47

9.000000 49

18.000000 59

50.000000 106

25.000000 155

12.000000 230

60.000000 241

99.000000 262

7.000000 269

8.000000 299

6.000000 463

4.000000 573

15.000000 851

2.000000 884

30.000000 909

3.000000 923

1.000000 934

40.000000 993

5.000000 1070

10.000000 3077

20.000000 5366

nan 25080

Name: S3AQ3C1, dtype: int64

percentages for S3AQ3C1 usual quantity when smoked cigarettes

39.000000 0.000056

21.000000 0.000056

34.000000 0.000056

33.000000 0.000056

57.000000 0.000056

66.000000 0.000056

75.000000 0.000111

55.000000 0.000111

23.000000 0.000111

27.000000 0.000111

37.000000 0.000111

28.000000 0.000167

29.000000 0.000167

19.000000 0.000278

24.000000 0.000389

45.000000 0.000444

22.000000 0.000555

70.000000 0.000666

98.000000 0.000833

17.000000 0.001221

11.000000 0.001277

14.000000 0.001388

35.000000 0.001665

13.000000 0.001888

16.000000 0.002221

80.000000 0.002609

9.000000 0.002720

18.000000 0.003275

50.000000 0.005885

25.000000 0.008605

12.000000 0.012769

60.000000 0.013379

99.000000 0.014545

7.000000 0.014934

8.000000 0.016599

6.000000 0.025704

4.000000 0.031810

15.000000 0.047244

2.000000 0.049076

30.000000 0.050464

3.000000 0.051241

1.000000 0.051851

40.000000 0.055127

5.000000 0.059402

10.000000 0.170821

20.000000 0.297896

Name: S3AQ3C1, dtype: float64

TAB12MDX

0 38131

1 4962

dtype: int64

TAB12MDX

0 88.485369

1 11.514631

dtype: float64

counts for AGE

18 161

19 200

20 221

21 239

22 228

23 231

24 241

25 185

Name: AGE, dtype: int64

percentages for AGE

18 0.094373

19 0.117233

20 0.129543

21 0.140094

22 0.133646

23 0.135404

24 0.141266

25 0.108441

Name: AGE, dtype: float64

counts for CHECK321

1.000000 1706

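In summary, I believe there is a relationship between how frequently one smokes and nicotine dependence, although the frequency tables above describe each variable separately and do not test that relationship directly.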
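The tables above count each variable on its own. One way to look at smoking frequency and nicotine dependence together is to compute the share of dependent respondents within each frequency code. Here is a minimal sketch in plain Python, so it runs without the data file. The rows are made up and only stand in for the `S3AQ3B1` and `TAB12MDX` columns, and the helper name `dependence_rate_by_group` is hypothetical; what each frequency code means should be checked against the NESARC codebook.

```python
from collections import defaultdict

# Made-up rows: (S3AQ3B1 frequency code, TAB12MDX dependence flag where 1 = yes).
# These values are illustrative only and are NOT taken from NESARC.
rows = [(1, 1), (1, 1), (1, 0), (1, 1), (6, 0), (6, 0), (6, 1), (6, 0)]


def dependence_rate_by_group(rows):
    """Return the share of dependent respondents within each frequency code."""
    totals = defaultdict(int)
    dependent = defaultdict(int)
    for freq_code, dep_flag in rows:
        totals[freq_code] += 1
        dependent[freq_code] += dep_flag
    return {code: dependent[code] / totals[code] for code in totals}


rates = dependence_rate_by_group(rows)
print(rates)
```

If the rates differ a lot between frequency codes in the real subset, that would be evidence for the relationship; if they are about the same, the frequency tables alone were not telling us much.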


Text

Assignment 4

Code:

import pandas

import numpy

import matplotlib.pyplot as plt

import seaborn as sns

data = pandas.read_excel('nesarc.xlsx')

#making individual ethnicity variables numeric

data['S1Q1E'] = data['S1Q1E'].astype(int)

data['S1Q16'] = data['S1Q16'].astype(int)

data['S8Q1A2'] = data['S8Q1A2'].astype(int)

data['AGE'] = data['AGE'].astype(int)

#sub1=data[(data['AGE']>=18) & (data['AGE']<=25)]

sub2=data.copy()

c1 = sub2['S1Q16'].value_counts(sort=False, dropna=False)

c3 = sub2['S8Q1A2'].value_counts(sort=False, dropna=False)

#Set missing data to NaN

sub2['S1Q16']=sub2['S1Q16'].replace(9, numpy.nan)

sub2['S8Q1A2']=sub2['S8Q1A2'].replace(9, numpy.nan)

c4 = sub2['S1Q16'].value_counts(sort=False, dropna=False)

c6 = sub2['S8Q1A2'].value_counts(sort=False, dropna=False)

#print(c6)

#To collect the data

sub1=data[(data['AGE']>=18) & (data['AGE']<=25) & ((data['S8Q1A2']==1) | (data['S8Q1A2']==2))]

# make a copy of my new subsetted data

sub2 = sub1.copy()

#print('Counts for AGE')

c5 = sub2.groupby('AGE').size()

#print(c5)

#print('Percentages for AGE')

p5 = sub2.groupby('AGE').size() * 100 / len(data)

#print(p5)

#print("****************** Counts and Percentage of Fear of Height **********************")

#print('Counts for S8Q1A2')

c6 = sub2.groupby('S8Q1A2').size()

#print(c6)

#print('Percentages for S8Q1A2')

p6 = sub2.groupby('S8Q1A2').size() * 100 / len(data)

#print(p6)

#print("*********************************************")

#print("***************** American Indian and Fear of Heights *****************")

#American Indian, German, Italian

sub3=data[(data['S1Q1E']==3) & ((data['S8Q1A2']==1) | (data['S8Q1A2']==2)) ]

sub4 = sub3.copy()

#print('counts for S1Q1E')

c7 = sub4.groupby('S1Q1E').size()

#print(c7)

#print('percentages for S1Q1E')

p7 = sub4.groupby('S1Q1E').size() * 100 / len(data)

#print(p7)

#print("**************** Counts and Percentage of Fear of Height *****************")

#print('counts for S8Q1A2')

c8 = sub4.groupby('S8Q1A2').size()

#print(c8)

#print('percentages for S8Q1A2')

p8 = sub4.groupby('S8Q1A2').size() * 100 / len(data)

#print(p8)

#print("*********************************************")

#print("***************** German and Fear of Heights *****************")

sub5=data[(data['S1Q1E']==19) & ((data['S8Q1A2']==1) | (data['S8Q1A2']==2)) ]

sub6 = sub5.copy()

#print('counts for S1Q1E')

c9 = sub6.groupby('S1Q1E').size()

#print(c9)

#print('percentages for S1Q1E')

p9 = sub6.groupby('S1Q1E').size() * 100 / len(data)

#print(p9)

#print("***************** Counts and Percentage of Fear of Height *****************")

#print('counts for S8Q1A2')

c10 = sub6.groupby('S8Q1A2').size()

#print(c10)

#print('percentages for S8Q1A2')

p10 = sub6.groupby('S8Q1A2').size() * 100 / len(data)

#print(p10)

#print("*********************************************")

#print("***************** Italian and Fear of Heights *****************")

sub7=data[(data['S1Q1E']==29) & ((data['S8Q1A2']==1) | (data['S8Q1A2']==2)) ]

sub8 = sub7.copy()

#print('counts for S1Q1E')

c11 = sub8.groupby('S1Q1E').size()

#print(c11)

#print('percentages for S1Q1E')

p11 = sub8.groupby('S1Q1E').size() * 100 / len(data)

#print(p11)

#print("***************** Counts and Percentage of Fear of Height *****************")

#print('counts for S8Q1A2')

c12 = sub8.groupby('S8Q1A2').size()

#print(c12)

#print('percentages for S8Q1A2')

p12 = sub8.groupby('S8Q1A2').size() * 100 / len(data)

#print(p12)

#print("********************************************")

#print("*********** SELF-PERCEIVED CURRENT HEALTH is Excellent and Fear of Heights ****************")

sub9=data[(data['S1Q16']==1) & ((data['S8Q1A2']==1) | (data['S8Q1A2']==2)) ]

sub10 = sub9.copy()

#print('counts for S1Q16')

c13 = sub10.groupby('S1Q16').size()

#print(c13)

#print('percentages for S1Q16')

p13 = sub10.groupby('S1Q16').size() * 100 / len(data)

#print(p13)

#print("***************** Counts and Percentage of Fear of Height *****************")

#print('counts for S8Q1A2')

c14 = sub10.groupby('S8Q1A2').size()

#print(c14)

#print('percentages for S8Q1A2')

p14 = sub10.groupby('S8Q1A2').size() * 100 / len(data)

#print(p14)

#print("*********************************************")

#print("*********** SELF-PERCEIVED CURRENT HEALTH is Poor and Fear of Heights ****************")

sub11=data[(data['S1Q16']==5) & ((data['S8Q1A2']==1) | (data['S8Q1A2']==2)) ]

sub12 = sub11.copy()

#print('counts for S1Q16')

c15 = sub12.groupby('S1Q16').size()

#print(c15)

#print('percentages for S1Q16')

p15 = sub12.groupby('S1Q16').size() * 100 / len(data)

#print(p15)

#print("***************** Counts and Percentage of Fear of Height *****************")

#print('counts for S8Q1A2')

c16 = sub12.groupby('S8Q1A2').size()

#print(c16)

#print('percentages for S8Q1A2')

p16 = sub12.groupby('S8Q1A2').size() * 100 / len(data)

#print(p16)

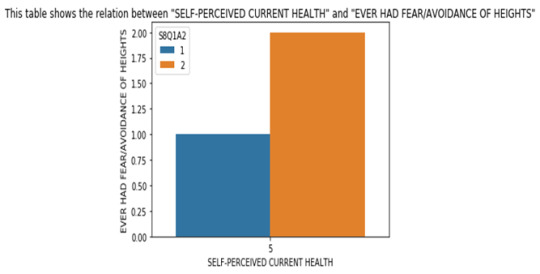

sns.barplot(x='S1Q16',y='S8Q1A2',data=sub11,hue='S8Q1A2')

plt.xlabel('SELF-PERCEIVED CURRENT HEALTH')

plt.ylabel('EVER HAD FEAR/AVOIDANCE OF HEIGHTS')

plt.title('This table shows the relation between "SELF-PERCEIVED CURRENT HEALTH" and "EVER HAD FEAR/AVOIDANCE OF HEIGHTS"')

plt.show()

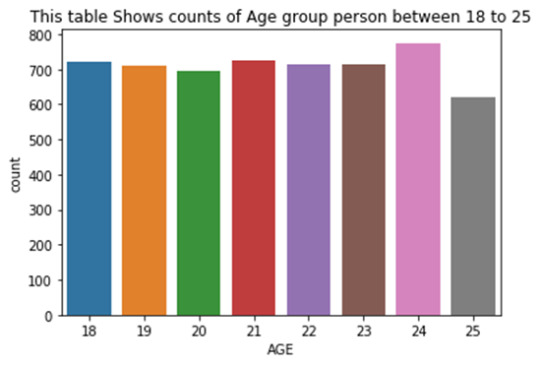

sns.countplot(x='AGE',data=sub1)

plt.title('This table Shows counts of Age group person between 18 to 25')

plt.show()

sns.countplot(x='S1Q16',hue='S8Q1A2',data=sub2)

plt.xlabel('SELF-PERCEIVED CURRENT HEALTH')

plt.title('Counts of "SELF-PERCEIVED CURRENT HEALTH" with respect to fear of height')

plt.show()

sns.distplot(data['AGE'],bins=50)

plt.ylabel('Proportions')

plt.title('This table shows the proportions of all the "AGE" persons participate')

plt.show()

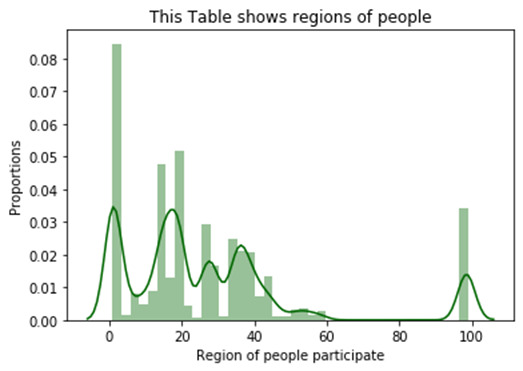

sns.distplot(data['S1Q1E'],bins=40,color='#006600')

plt.xlabel('Region of people participate')

plt.ylabel('Proportions')

plt.title('This Table shows regions of people')

plt.show()

Output :

Output 1 :

Output 2 :

Output 3 :

Output 4 :

Output 5 :

Summary :

1) The 1st output is a bivariate graph of the variables ‘S1Q16’ (SELF-PERCEIVED CURRENT HEALTH) and ‘S8Q1A2’ (EVER HAD FEAR/AVOIDANCE OF HEIGHTS). The graph shows that persons whose self-perceived current health is poor mainly do not have a fear of heights. The counts from the code agree: most persons in this category do not have a fear of heights.

2) The 2nd output is a univariate graph of the variable ‘AGE’, drawn as a ‘countplot’. It shows the counts of participants between ages 18 and 25; within this group, the largest number of participants are age 24.

3) The 3rd output is a graph of the variable ‘S1Q16’, split by ‘S8Q1A2’. It shows the counts of SELF-PERCEIVED CURRENT HEALTH for each category from excellent to poor health. Most persons in every category do not have a fear of heights, since the counts for 2 are much higher than those for 1.

4) The 4th output is a univariate graph of the variable ‘AGE’, drawn as a ‘distplot’ with bins = 50 and the kde curve turned on. It shows the proportions of all persons aged 18 to 99, and most participants are around age 40.

5) The 5th output is a univariate graph of the variable ‘S1Q1E’ (ORIGIN OR DESCENT). It is also a distplot, with bins = 40 and an integrated ‘kdeplot’. It shows that most persons belong to regions 1 to 20, which the code also confirms: the counts for those regions are higher than for the others.
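One thing to keep in mind when reading the percentages in the code: they divide each subgroup count by `len(data)`, the full sample, so they give each group's share of all respondents and not its share of the subset. A small sketch of the difference in plain Python, with made-up counts (the helper `percentages` and every number here are assumptions for illustration, not NESARC results):

```python
def percentages(counts, denominator):
    """Turn a dict of counts into percentages of the given denominator."""
    return {code: 100 * n / denominator for code, n in counts.items()}


# Made-up numbers: 1000 respondents overall, 200 of them in the subset.
total_respondents = 1000
subset_counts = {1: 50, 2: 150}  # S8Q1A2 codes counted inside the subset

# Dividing by the full sample, as the code above does with len(data).
share_of_everyone = percentages(subset_counts, total_respondents)

# Dividing by the subset size instead.
share_of_subset = percentages(subset_counts, sum(subset_counts.values()))

print(share_of_everyone)
print(share_of_subset)
```

Both are valid numbers, but they answer different questions, so it helps to say which denominator a percentage uses.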


Text

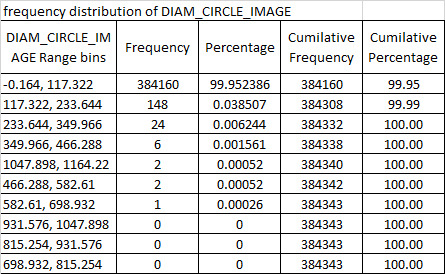

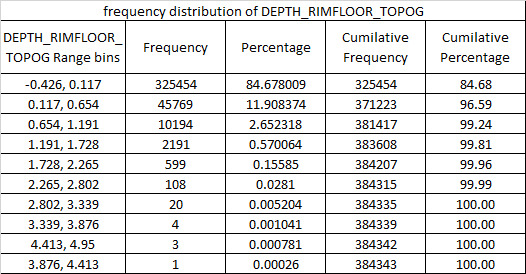

Mars Craters Study Raw Data Frequency distribution

A total of 384,343 records are available in the Mars crater study data set. The variables are CRATER_ID, CRATER_NAME, LATITUDE_CIRCLE_IMAGE, LONGITUDE_CIRCLE_IMAGE, DIAM_CIRCLE_IMAGE, DEPTH_RIMFLOOR_TOPOG, MORPHOLOGY_EJECTA_1, MORPHOLOGY_EJECTA_2, MORPHOLOGY_EJECTA_3, NUMBER_LAYERS.

The CRATER_NAME variable has 986 values available.

The objective is to find the relation between crater depth and crater diameter.

Currently, the frequency distributions of the variables are studied.

99.9% of DIAM_CIRCLE_IMAGE values fall within a 117 km range.

95% of craters have a NUMBER_LAYERS value of zero (0).

85% of DEPTH_RIMFLOOR_TOPOG values fall within a 0.117 range.
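Percentages like these presumably come from splitting each variable into ten equal-width bins, which is what the `value_counts(bins=10)` calls in the code do. A rough plain-Python sketch of equal-width binning follows, with made-up diameters; the function name `equal_width_bins` is hypothetical and the edge handling is simplified, so it is not identical to what pandas does. It shows why one very large crater pushes almost everything into the first bin.

```python
from collections import Counter


def equal_width_bins(values, n_bins):
    """Count values in n_bins equal-width intervals between min and max.

    Assumes the values are not all identical (the bin width would be zero).
    Returns a list of (lower_edge, upper_edge, count) tuples.
    """
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    counts = Counter()
    for v in values:
        # The maximum value is placed in the last bin, not in an extra one.
        idx = min(int((v - lo) / width), n_bins - 1)
        counts[idx] += 1
    return [(lo + i * width, lo + (i + 1) * width, counts[i]) for i in range(n_bins)]


# Made-up diameters in km: many small craters and one very large one.
diameters = [1.0, 1.2, 1.5, 2.0, 2.4, 3.1, 4.0, 5.5, 8.0, 1000.0]
bins = equal_width_bins(diameters, 10)
share_first = 100 * bins[0][2] / len(diameters)
print(bins[0], share_first)
```

With a skewed variable like crater diameter, equal-width bins say little about the small craters, so narrower bins or a log scale may be worth trying.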

#****************Python Code starts*********************************************

from pandas import DataFrame, read_csv

import matplotlib.pyplot as plt

import pandas as pd

import seaborn as sns

import numpy as np

print(pd.__version__)

data = pd.read_csv('marscrater_pds.csv', index_col=False)

print(len(data))

print(len(data.columns))

print (data.columns)

values = data['DIAM_CIRCLE_IMAGE'].values

#to convert column titles to upper

data.columns=map(str.upper,data.columns)

#data.columns=map(str.lower,data.columns)

pd.set_option('display.float_format', lambda x:'%f'%x)

#data types of the non-coordinate columns

print(data[['CRATER_ID','CRATER_NAME','MORPHOLOGY_EJECTA_1','NUMBER_LAYERS']].dtypes)

print('CRATER_ID1')

c6 = data['CRATER_ID'].value_counts(sort=False)

p6 = data['CRATER_ID'].value_counts(sort=False, normalize=True)

#df[['First','Last']] = df.Name.str.split("_",expand=True)

# split CRATER_ID at the hyphen first, so CRATER_ID1 exists before it is counted

data[['CRATER_ID1','CRATER_ID2']] = data["CRATER_ID"].str.split("-",expand=True)

# convert_objects was removed from pandas; pd.to_numeric does the same job

data["CRATER_ID1"] = pd.to_numeric(data["CRATER_ID1"], errors='coerce')

data["CRATER_ID2"] = pd.to_numeric(data["CRATER_ID2"], errors='coerce')

#data["S1Q2I"] = data["S1Q2I"].convert_objects(convert_numeric=True)

c7 = data['CRATER_ID1'].value_counts(sort=False)

p7 = data['CRATER_ID1'].value_counts(sort=False, normalize=True)

ct7=data.groupby('LATITUDE_CIRCLE_IMAGE').size()

print(c7)

print(p7)

print('LATITUDE_CIRCLE_IMAGE')

c1 = data['LATITUDE_CIRCLE_IMAGE'].value_counts(sort=False)

p1 = data['LATITUDE_CIRCLE_IMAGE'].value_counts(sort=False, normalize=True)

pc1=data['LATITUDE_CIRCLE_IMAGE'].value_counts(bins=10)

pp1=data['LATITUDE_CIRCLE_IMAGE'].value_counts(bins=10, normalize=True)*100

print(pc1)

print(pp1)

ct1=data.groupby('LATITUDE_CIRCLE_IMAGE').size()

print(c1)

print(p1)

print('LONGITUDE_CIRCLE_IMAGE')

c2 = data['LONGITUDE_CIRCLE_IMAGE'].value_counts(sort=False)

p2 = data['LONGITUDE_CIRCLE_IMAGE'].value_counts(sort=False, normalize=True)

ct2=data.groupby('LONGITUDE_CIRCLE_IMAGE').size()

pc2=data['LONGITUDE_CIRCLE_IMAGE'].value_counts(bins=10)

pp2=data['LONGITUDE_CIRCLE_IMAGE'].value_counts(bins=10, normalize=True)*100

print(pc2)

print(pp2)

print(c2)

print(p2)

print('DIAM_CIRCLE_IMAGE')

c3 = data['DIAM_CIRCLE_IMAGE'].value_counts(sort=False)

p3 = data['DIAM_CIRCLE_IMAGE'].value_counts(sort=False, normalize=True)

ct3=data.groupby('DIAM_CIRCLE_IMAGE').size()

print(c3)

print(p3)

pc3=data['DIAM_CIRCLE_IMAGE'].value_counts(bins=10)

pp3=data['DIAM_CIRCLE_IMAGE'].value_counts(bins=10, normalize=True)*100

print(pc3)

print(pp3)

print('DEPTH_RIMFLOOR_TOPOG')

c4 = data['DEPTH_RIMFLOOR_TOPOG'].value_counts(sort=False)

cs4 = data['DEPTH_RIMFLOOR_TOPOG'].value_counts(sort=True)

p4 = data['DEPTH_RIMFLOOR_TOPOG'].value_counts(sort=False, normalize=True)

ct4=data.groupby('DEPTH_RIMFLOOR_TOPOG').size()

print(c4)

print(p4)

pc4=data['DEPTH_RIMFLOOR_TOPOG'].value_counts(bins=10)

pp4=data['DEPTH_RIMFLOOR_TOPOG'].value_counts(bins=10, normalize=True)*100

print(pc4)

print(pp4)

print('NUMBER_LAYERS')

c5 = data['NUMBER_LAYERS'].value_counts(sort=False)

p5 = data['NUMBER_LAYERS'].value_counts(sort=False, normalize=True)

print(c5)

print(p5)

ct5=data.groupby('NUMBER_LAYERS').size()*100/len(data)

ct5=data.groupby('NUMBER_LAYERS').size()

#data['DIAM_CIRCLE_IMAGE']=data['DIAM_CIRCLE_IMAGE'].convert_objects(convert_numeric=True)

dfi1=data.dropna()

print(len(dfi1))

print(len(data))

sub1=data[(data['NUMBER_LAYERS']>2)&(data['DIAM_CIRCLE_IMAGE']>2)]

sub2=sub1.copy()

c8 = data['CRATER_NAME'].value_counts(sort=False)

p8 = data['CRATER_NAME'].value_counts(sort=False, normalize=True)

print(p8)

print(c8)

#****************Code Ends*********************************

Python output is arranged in tabular format
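The repeated count and percentage pairs above could also be gathered into one table per column. This is only a minimal sketch: the helper name `frequency_table` and the small sample frame are made up for illustration and are not part of the original script or the crater data.

```python
import pandas as pd


def frequency_table(series, bins=None):
    """Return counts and percentages for one column as a single table."""
    # Same calls as in the script above; bins=None counts distinct values.
    counts = series.value_counts(sort=False, bins=bins)
    percents = series.value_counts(sort=False, bins=bins, normalize=True) * 100
    return pd.DataFrame({"count": counts, "percent": percents})


# Hypothetical stand-in rows, NOT the real crater dataset.
data = pd.DataFrame({
    "NUMBER_LAYERS": [0, 0, 1, 2, 0, 1, 3, 0],
    "DIAM_CIRCLE_IMAGE": [1.2, 3.4, 2.2, 10.5, 1.1, 5.0, 7.7, 1.9],
})

layers_table = frequency_table(data["NUMBER_LAYERS"])
diam_table = frequency_table(data["DIAM_CIRCLE_IMAGE"], bins=4)

print(layers_table)
print(diam_table)
```

Calling the helper in a loop over the column names would replace the c1/p1, c2/p2, ... pairs with one table each.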

Video

youtube

Charmed S8 Ep22-Le futur des soeurs Halliwell

3 hours ago, it was 3:20 p.m. French Polynesia time (Polynésie: (Po)(ly)(ne)(sie)) on 30 January 2018.

https://www.youtube.com/watch?v=qZSx6nGQR6A (A) (q)

added on 8 Nov. 2015, 22 days and 2 months and 2 years ago.

https://www.youtube.com/watch?v=HBwZPozCmGA (A)(po) (h)

added on 8 Dec. 2014, 22 days and 1 month and 3 years ago.

https://www.youtube.com/watch?v=vGxOs9OrLYk (ly)

added on 1 Dec. 2009, 29 days and 1 month and 8 years ago. (18)

https://www.youtube.com/watch?v=i-TiNC3zpPw

added on 18 Apr. 2016 (18), 12 days (12) and 9 months and 1 year ago.

https://www.youtube.com/watch?v=RMnZnEemOWg (ne)

added on 18 May 2017 (18), 12 days (12) and 8 months ago.

https://www.youtube.com/watch?v=SiEWZcE0wY0 (sie) published on a 12 May (12)

https://www.youtube.com/watch?v=MQHmU8zHYNg (q) (h) (g)

added on 19 May 2011, 11 days and 8 months and 6 years ago. (8/6)

https://www.youtube.com/watch?v=iu3Wfx-mgjE (g)

added on 8 June 2017 (8/6), 22 days and 7 months ago (22/7).

https://www.youtube.com/watch?v=sJ6-asFmbYk (6) (b)

https://www.youtube.com/watch?v=oWnYtyNKPsA (A) (p)(o)

added on 22 July 2017 (22/7), 8 days and 8 months ago.

https://www.youtube.com/watch?v=E3GBpSrHSDI (b)(p) (e) (d)

The video has a duration of 2:16.

added on 13 Sept. 2008, 17 days and 4 months and 9 years ago.

https://www.youtube.com/watch?v=iOe6dI2JhgU (o) (e) (g)

added on 22 Sept. 2016, 1 year and 4 months and 8 days ago. (14/8)

https://www.facebook.com/14-ao%C3%BBt-2017-315423672252313/ (14/8)

https://www.youtube.com/watch?v=BAKI70e0yDg (d) (g)

added on 9/6/2017, 21 days (21) and 007 months ago.

https://www.youtube.com/watch?v=EWUY5hQomOA (A) (5) (h) (u)

added on 21 Feb. 2017 (21) (21/2), 9 days and 11 months ago. (9/11)

The lunar phenomenon of 31 January 2018 will last 5 hours. (5)

https://www.youtube.com/watch?v=K6ghuG8Ch7c (h)(u) (g) (8) (7) (6)

added on 9 Nov. 2017 (9/11), 21 days and 2 months ago (21/2).

https://www.youtube.com/watch?v=YGUgd2ngj_4 (u) (g) (2)

added on 8 May 2010, 22 days and 8 months and 7 years ago. (8) (7)

https://www.youtube.com/watch?v=3DN6gTh2-6M (6) (g) (2) (t)

added on 10 Oct. 2009, 20 days and 3 months and 8 years ago. (38)

https://www.youtube.com/watch?v=FHG2oizTlpY (g) (2) (t) (o)

added on 8 Dec. 2009, 21 days and 1 month and 8 years ago. (21/8)

https://www.youtube.com/watch?v=XM1FexW2tk0 (2) (t) (e) (x)

added on 15 June 2015, 15 days and 7 months and 2 years ago. (2)

https://www.youtube.com/watch?v=iEBD7HOE6Jw (o) (e) (i) (h)

added on 13 Oct. 2017, 17 days and 3 months ago.

https://www.youtube.com/watch?v=99_BOsxIt6w (x) (i) (s)

added on 28 Feb. 2009, 2 days and 11 months and 8 years ago. (2/8)

(2/8) Since 2 August 2017, a single planet is no longer enough to meet the consumption of the world's population.

https://www.youtube.com/watch?v=QbCkB5HSknc (h) (s) (5)

added on 6 Apr. 2008, 24 days (24) and 9 months and 9 years ago. (99)

For 99 years, Americans waited for the total solar eclipse in the USA.

https://www.youtube.com/watch?v=jgvfstfnIKA (A) (s) (g)

added on 24 Nov. 2009 (24), 6 days (6) and 2 months and 8 years ago (2/8)

https://www.youtube.com/watch?v=CqBtS6BIP1E (s) (6) (q)

added on 24 Nov. 2009, 6 days (6) and 2 months (2) and 8 years ago (2/8) (6/8)

https://www.youtube.com/watch?v=qZSx6nGQR6A&t=174s (g) (q)

added on 8 Nov. 2015, 22 days and 2 months and 2 years ago. (2)

https://www.youtube.com/watch?v=7gPrNpHaFX8 (007) (x) (p) film released in cinemas on a (6/8)

added on 7 May 2014, 23 days and 007 months and 3 years ago. (23/3)

https://www.youtube.com/watch?v=1bKuXbnGDqI (x) (b)

added on 23 March 2011 (23/3), 7 days and 10 months and 6 years ago (7/10) or (6/10)

https://www.youtube.com/watch?v=SN-a1vPBSXo (x) (p) (b) (s) (p)

added on 20 Nov. 2014, 10 days and 2 months and 3 years ago. (3)

https://www.youtube.com/watch?v=OY3SBj2rzrg (3) (s) (2) (s)

added on 15 July 2017, 15 days and 6 months ago.

https://www.youtube.com/watch?v=yDMyc7a3p20 (p) (2) (y) (7)

added on 14 Apr. 2013 (14/4), 16 days and 9 months and 4 years ago.

https://www.youtube.com/watch?v=aFPcsYGriEs (s) (y) (g) (s)

added on 21 Nov. 2016, 9 days and 2 months and 1 year ago.

https://www.youtube.com/watch?v=pIgZ7gMze7A (7) (g) (a) (z)

added on 24 Oct. 2009, 6 days and 3 months and 8 years ago. (38)

https://www.youtube.com/watch?v=EocLKUzsaoc (s) (a) (L) (e)

added on 4 May 2009, 26 days and 8 months and 8 years ago.

https://www.youtube.com/watch?v=RJEYSZLVZ6g&t=188s (z) (L) (r) (z)

added on 19 July 2014, 11 days and 6 months and 3 years ago.

https://www.youtube.com/watch?v=9b8erWuBA44 (e) (r) (b) (8) (4)

added on 16 June 2009, 14 days and 7 months (14/7) and 8 years ago. (8)

https://www.youtube.com/watch?v=qnZbxuI_M-M (z) (b) (X) (z)

added on 14 Feb. 2010, 16 days and 11 months and 7 years ago. (11/7)

https://www.youtube.com/watch?v=D4XZLlSXg6E (4) (X) (L)

added on 9 Sept. 2015, 21 days and 4 months and 2 years ago.

https://www.youtube.com/watch?v=zXa7Z06x-5Q (z) (x) (Z) (z)

added on 12 May 2017, 18 days and 8 months ago. (18/8, Mika's birthday)

https://www.youtube.com/watch?v=k_ZDZ3ZlzXI (L) (Z) (k) (x)

added on 28 Jan. 2016, 2 days and 1 year ago. (21)

https://www.youtube.com/watch?v=5Cw8YYdZKW8 (z) (k) (z) (8)

added on 10 Sept. 2014, 20 days and 4 months and 3 years ago. (20/3) and (4)

https://www.youtube.com/watch?v=XbxZargtXug (x) (z) (r) (g)

added on 5 Feb. 2017, 25 days and 11 months ago.

https://www.youtube.com/watch?v=YYpr8RubbyE (8) (r) (8)

added on 11 Dec. 2015, 19 days (19) and 2 years and 1 month ago. (21)

https://www.youtube.com/watch?v=tiy7peMH3g8 (g) (8)

added on 5 Apr. 2012, 25 days and 5 years and 9 months ago. (59)

https://www.youtube.com/watch?v=5yD9NpUUlKE (59) (5)(K) (5) (k) (9)

https://www.youtube.com/watch?v=55KH1ei-WD0&index=11&list=PL87ECAFCB42546FA5 (5)(5) (k)

added on 10 Oct. 2006, 20 days and 3 months (20/3) and 11 years ago.

https://www.youtube.com/watch?v=55qUevLP1OQ (5) (5)

added on 10 Oct. 2013, 20 days and 3 months (20/3) and 4 years ago. (4)

https://www.youtube.com/watch?v=6SgnOYK9HuI (k) (9)

added on 26 Aug. 2016, 4 days (4) and 5 months and 1 year ago. (51)

https://www.youtube.com/watch?v=PHQg51mvyHA (51) (p1) (p5)(pa)

https://www.youtube.com/watch?v=z1P15YrUg8Y&index=20&list=PL87ECAFCB42546FA5 (p1)

added on 23 Jan. 2011, 7 days (7) and 6 months ago.

https://www.youtube.com/watch?v=VMp55KH_3wo (p5) (k)

added on 5 June 2015, 25 days and 7 months (7) and 2 years ago.

https://www.youtube.com/watch?v=8sSYknnAApA (pa) (8A) (a)

added on 13 June 2014, 17 days and 7 months (17/7) and 3 years ago.

17/7, release date of the series Les Champions.

https://www.youtube.com/watch?v=8AF-Sm8d8yk (8A) (88) (k) (a)

added on 8 Sept. 2017, 22 days and 4 months ago.

https://www.space.com/18880-moon-phases.html?utm_source=twitter&utm_medium=social#?utm_source=twitter&utm_medium=social&utm_campaign=2016twitterdlvrit (88) (t)

https://www.youtube.com/watch?v=KfWgodekkA4 (k) (a)

added on 20 Nov. 2014, 10 days and 2 months and 3 years ago.

https://www.youtube.com/watch?v=tksqqmQ5_XY (t) (k) (q)

added on 1 Sept. 2017, 29 days and 4 months ago.

https://www.youtube.com/watch?v=H1Fv67HKdkQ (1) (k)(q) (q) (h)

added on 5 Dec. 2014, 25 days and 1 month (1) and 3 years ago. (25/3)

https://www.youtube.com/watch?v=MQHmU8zHYNg (q) (h) (m)

added on 19 May 2011, 11 days and 8 months and 6 years ago. (6)

https://www.youtube.com/watch?v=WKzm6AZFbdc&t=69s (m) (6) (AZ)

added on 3 August 2012, 27 days (27) and 5 months and 5 years ago. (5 and 5) (5 + 5) = (10)

https://www.youtube.com/watch?v=SF_GVbV_DeI (5 and 5)

https://www.youtube.com/watch?v=AzaTyxMduH4 (AZ) (U) (4)

added on 24 August 2012, 6 days (6) and 5 months and 5 years ago. (5 and 5)

https://www.youtube.com/watch?v=UXf6UZ9L328 (6) (U) (8) (U)

added on 30 March 2009, 10 months (10) and 8 years ago. (8)

https://www.youtube.com/watch?v=AI6tE1kua4U (4) (U) (6) (K) (4)

added on 8 May 2017, 22 days and 8 months ago. (8)

https://www.youtube.com/watch?v=YBA26k1Qy-Q (6) (K) (B)

added on 9 Nov. 2017, 21 days and 2 months ago.

https://www.youtube.com/watch?v=reSXR4BhJ_k (4) (B) (s)(h)

added on 27 Jan. 2014 (27), 3 days and 3 years ago.

https://www.youtube.com/watch?v=lVS9oON8NBs (B) (s) (n) (S) (8) (nb)(VS)(ob) (8o) (8s) (Sn) (vb) (ss)

https://www.youtube.com/watch?v=h6P5jK4HnQQ (h) (n)

https://www.youtube.com/watch?v=Oei7OKqadS8 (S) (8)

added on 25 Apr. 2012, 5 days and 9 months and 5 years ago. (59)

https://www.youtube.com/watch?v=puQJbvnbycE (nb)

added on 18 July 2009, 12 days and 6 months and 8 years ago. (12/6, Saint Guy's day) (6/8)

https://www.youtube.com/watch?v=FSD72x6Zvs4 (VS)

added on 7 Jan. 2008, 23 days and 9 months ago.

https://www.youtube.com/watch?v=Tvvg9mOb8WM (ob)

added on 15 March 2011, 15 days and 10 months and 6 years ago. (6/10)

https://www.youtube.com/watch?v=vnNXiO48oTY (8o)

added on 4 Feb. 2015, 26 days and 10 months and 2 years ago.

https://www.youtube.com/watch?v=9B0WhLhs8eA&t=18s (8s)

https://www.youtube.com/watch?v=Xlc3hSNvpsQ (sn)

added on 18 Nov. 2016, 12 days and 1 year and 2 months ago (12)

https://www.youtube.com/watch?v=SPK9fxvvbfk (vb) (s)

added on 7 June 2017, 23 days and 7 months ago. (23/7)

https://www.youtube.com/watch?v=Zn-ssP1uGfo (ss)(1) (z)

added on 13 Jan. 2017, 17 days and 1 year ago. (1)

https://www.youtube.com/watch?v=3_TvpBwSZDM (s) (z) (DM) (m)

added on 2 Dec. 2016, 28 days (2/8) and 1 month and 1 year ago. (28/11)

https://www.youtube.com/watch?v=DMxHLIUTlCc (DM) (d)

added on 28 Nov. 2017 (28/11), 2 days and 2 months ago.

https://www.youtube.com/watch?v=WmdCFKoss0A (m) (d) (wa)

added on 8 June 2017, 23 days and 7 months ago (23/7), France time on 31 January 2018.

https://www.youtube.com/watch?v=UCeWAOzSmIo (wa) (c)

added on 10 March 2017, 20 days and 10 months ago. (2010)

https://www.youtube.com/watch?v=bTUeD0KQc_c (c) (b)

added on 5 Oct. 2010 (2010), 25 days and 3 months (25/3) and 7 years ago. (7)

https://www.youtube.com/watch?v=64M7pBiSB7A (b) (7)

added on 12 May 2016, 18 days and 8 months (18/8, Mika's birthday) and 1 year ago. (8/1)

https://www.youtube.com/watch?v=7TWhyTW8tRw (7)

added on 8 Jan. 2016 (8/1), 22 days and 2 years ago, or 23 days (23) and 2 years ago Australia time on 31 January 2018.

The video has a duration of 4:43.

In 23 minutes (23), it will be 4:43 a.m. in Australia.
