#but we know that taylor uses facial recognition technology to keep out stalkers and other people she doesn’t want at her shows


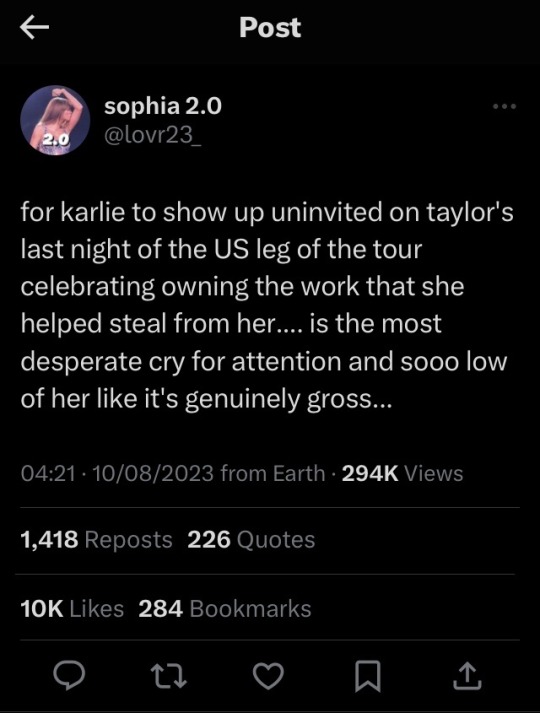

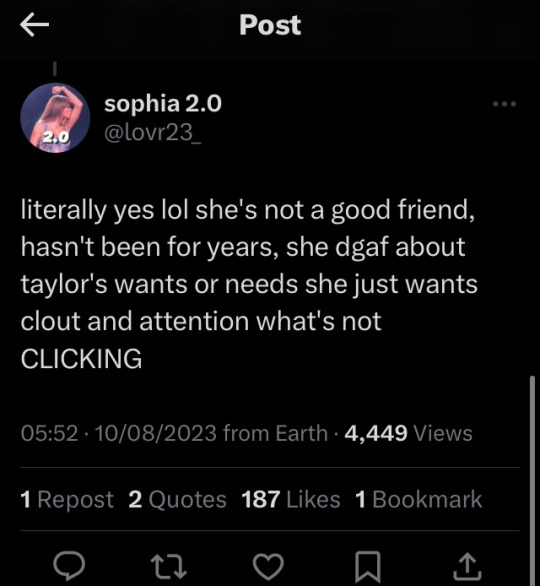

Will somebody please tell swifties that you don’t need to receive a royal invitation from the Palace of Swift in order to attend a concert?

You literally just have to buy a ticket.

EVERYONE who was at the eras tour, unless they were a special guest, “showed up uninvited.”

#also the irony of complaining that karlie wants attention while GIVING her that attention is so funny#shit swifties say#parasocial relationships#karlie kloss#kaylor#lol#swifties are so weird about that woman because they’re either convinced that she’s the devil reincarnate#who LITERALLY sold taylor’s music to scooter- no proof of that but facts don’t matter to swifties#or they think she’s taylor’s secret lesbian lover ijbol#it would be kinda mean for taylor to make her LOVER sit in the nosebleeds#but we know that taylor uses facial recognition technology to keep out stalkers and other people she doesn’t want at her shows#like… taylor has the power to ban people she doesn’t want at her concerts


San Francisco Bans Facial Recognition Technology

SAN FRANCISCO — San Francisco, long at the heart of the technology revolution, took a stand against potential abuse on Tuesday by banning the use of facial recognition software by the police and other agencies.

The action, which came in an 8-to-1 vote by the Board of Supervisors, makes San Francisco the first major American city to block a tool that many police forces are turning to in the search for both small-time criminal suspects and perpetrators of mass carnage.

The authorities used the technology to help identify the suspect in the mass shooting at an Annapolis, Md., newspaper last June. But civil liberty groups have expressed unease about the technology’s potential abuse by government amid fears that it may shove the United States in the direction of an overly oppressive surveillance state.

Aaron Peskin, the city supervisor who sponsored the bill, said that it sent a particularly strong message to the nation, coming from a city transformed by tech.

“I think part of San Francisco being the real and perceived headquarters for all things tech also comes with a responsibility for its local legislators,” Mr. Peskin said. “We have an outsize responsibility to regulate the excesses of technology precisely because they are headquartered here.”

But critics said that rather than focusing on bans, the city should find ways to craft regulations that acknowledge the usefulness of face recognition. “It is ridiculous to deny the value of this technology in securing airports and border installations,” said Jonathan Turley, a constitutional law expert at George Washington University. “It is hard to deny that there is a public safety value to this technology.”

There will be an obligatory second vote next week, but it is seen as a formality.

Similar bans are under consideration in Oakland and in Somerville, Mass., outside of Boston. In Massachusetts, a bill in the State Legislature would put a moratorium on facial recognition and other remote biometric surveillance systems. On Capitol Hill, a bill introduced last month would ban users of commercial face recognition technology from collecting and sharing data for identifying or tracking consumers without their consent, although it does not address the government’s uses of the technology.

Matt Cagle, a lawyer with the A.C.L.U. of Northern California, on Tuesday summed up the broad concerns of facial recognition: The technology, he said, “provides government with unprecedented power to track people going about their daily lives. That’s incompatible with a healthy democracy.”

The San Francisco proposal, he added, “is really forward-looking and looks to prevent the unleashing of this dangerous technology against the public.”

In one form or another, facial recognition is already being used in many American airports and big stadiums, and by a number of other police departments. The pop star Taylor Swift has reportedly incorporated the technology at one of her shows, using it to help identify stalkers.

The facial recognition fight in San Francisco is largely theoretical — the police department does not currently deploy such technology, and it is only in use at the international airport and ports that are under federal jurisdiction and are not impacted by the legislation.

Some local homeless shelters use biometric finger scans and photos to track shelter usage, said Jennifer Friedenbach, the executive director of the Coalition on Homelessness. The practice has driven undocumented residents away from the shelters, she said.

Still, it has been a particularly charged topic in a city with a rich history of incubating dissent and individual liberties, but one that has also suffered lately from high rates of property crime.

The ban prohibits city agencies from using facial recognition technology, or information gleaned from external systems that use the technology. It is part of a larger legislative package devised to govern the use of surveillance technologies in the city that requires local agencies to create policies controlling their use of these tools. There are some exemptions, including one that would give prosecutors a way out if the transparency requirements might interfere with their investigations.

Still, the San Francisco Police Officers Association, an officers’ union, said the ban would hinder their members’ efforts to investigate crime.

“Although we understand that it’s not a 100 percent accurate technology yet, it’s still evolving,” said Tony Montoya, the president of the association. “I think it has been successful in at least providing leads to criminal investigators.”

Mr. Cagle and other experts said that it was difficult to know exactly how widespread the technology was in the United States. “Basically, governments and companies have been very secretive about where it’s being used, so the public is largely in the dark about the state of play,” he said.

But Dave Maass, the senior investigative researcher at the Electronic Frontier Foundation, offered a partial list of police departments that he said used the technology, including Las Vegas, Orlando, San Jose, San Diego, New York City, Boston, Detroit and Durham, N.C.

Other users, Mr. Maass said, include the Colorado Department of Public Safety, the Pinellas County Sheriff’s Office, the California Department of Justice and the Virginia State Police.

U.S. Customs and Border Protection is now using facial recognition in many airports and ports of sea entry. At airports, international travelers stand before cameras, then have their pictures matched against photos provided in their passport applications. The agency says the process complies with privacy laws, but it has still come in for criticism from the Electronic Privacy Information Center, which argues that the government, though promising travelers that they may opt out, has made it increasingly difficult to do so.

But there is a broader concern. “When you have the ability to track people in physical space, in effect everybody becomes subject to the surveillance of the government,” said Marc Rotenberg, the group’s executive director.
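The airport check described above is a one-to-one ("1:1") comparison: one live capture against the one passport photo on file. The article does not say how CBP's system works internally, so the following is only a minimal sketch under common assumptions: some face-recognition model (not shown) has already turned each photo into a numeric vector, or "embedding", and two photos are treated as the same person when the cosine similarity of their vectors clears a threshold. The `MATCH_THRESHOLD` value and the three-number vectors are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


# Hypothetical cutoff; real systems tune it to trade false accepts against false rejects.
MATCH_THRESHOLD = 0.8


def verify(live: Sequence[float], on_file: Sequence[float],
           threshold: float = MATCH_THRESHOLD) -> bool:
    """1:1 verification: is the person at the camera the person in the single photo on file?"""
    return cosine_similarity(live, on_file) >= threshold


# Toy three-number "embeddings"; real models output much longer vectors.
print(verify([0.9, 0.1, 0.4], [0.88, 0.12, 0.41]))  # True  (near-identical vectors)
print(verify([0.9, 0.1, 0.4], [0.1, 0.9, 0.2]))     # False (different direction)
```

Where the threshold sits is the policy lever: raise it and fewer impostors are accepted but more genuine travelers get bounced to a manual check.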

In the last few years, facial recognition technology has improved and spread at lightning speed, powered by the rise of cloud computing, machine learning and extremely precise digital cameras. That has meant once-unimaginable new features for users of smartphones, who may now use facial recognition to unlock their devices, and to tag and sort photos.

But some experts fear the advances are outstripping government’s ability to set guardrails to protect privacy.

Mr. Cagle and others said that a worst-case scenario already exists in China, where facial recognition is used to keep close tabs on the Uighurs, a largely Muslim minority, and is being integrated into a national digital panopticon system powered by roughly 200 million surveillance cameras.

American civil liberties advocates warn that the ability of facial surveillance to identify people at a distance, or online, without their knowledge or consent presents unique risks — threatening Americans’ ability to freely attend political protests or simply go about their business anonymously in public. Last year, Bradford L. Smith, the president of Microsoft, warned that the technology was too risky for companies to police on their own and asked Congress to oversee its use.

The battle over the technology intensified last year after two researchers published a study showing bias in some of the most popular facial surveillance systems. Called Gender Shades, the study reported that systems from IBM and Microsoft were much better at identifying the gender of white men’s faces than they were at identifying the gender of darker-skinned or female faces.

Another study this year reported similar problems with Amazon’s technology, called Rekognition. Microsoft and IBM have since said they improved their systems, while Amazon has said it updated its system since the researchers tested it and had found no differences in accuracy.
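The core of audits like these is to report accuracy separately for each demographic subgroup instead of as a single overall number, because a good aggregate score can hide a large gap between groups. The sketch below shows only that bookkeeping step. The records are made-up toy data, not figures from Gender Shades or any other study, and the subgroup labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """records: (subgroup, predicted_label, true_label) tuples.
    Returns the fraction of correct predictions within each subgroup."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Made-up toy records, not data from any published study.
toy = [
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "male"),
    ("darker_female", "male", "female"),
    ("darker_female", "female", "female"),
]
overall = sum(p == a for _, p, a in toy) / len(toy)
print(overall)                 # 0.75
print(accuracy_by_group(toy))  # {'lighter_male': 1.0, 'darker_female': 0.5}
```

The single 75 percent figure looks acceptable on its own; only the per-group breakdown shows that every error fell on one subgroup.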

Warning that African-Americans, women and others could easily be incorrectly identified as suspects and wrongly arrested, the American Civil Liberties Union and other nonprofit groups last year called on Amazon to stop selling its technology to law enforcement.

But even with improvements in accuracy, civil rights advocates and researchers warn that, in the absence of government oversight, the technology could easily be misused to surveil immigrants or unfairly target African-Americans or low-income neighborhoods. In a recent essay, Luke Stark, a postdoctoral researcher at Microsoft Research Montreal, described facial surveillance as “the plutonium of artificial intelligence,” arguing that it should be “recognized as anathema to the health of human society, and heavily restricted as a result.”

Alvaro Bedoya, who directs Georgetown University’s Center on Privacy and Technology, said that more than 30 states allow local or state authorities, or the F.B.I., to search their driver’s license photos.

Mr. Bedoya said that these images are tantamount to being in a perpetual police lineup, as law enforcement agencies use them to check against the faces of suspected criminals. He said that the difference is that an algorithm, not a human being, is pointing to the suspect.

He also said that comprehensive regulation of the technology is sorely lacking. “This is the most pervasive and risky technology of the 21st century,” he said.

Daniel Castro, director of the Center for Data Innovation at the Information Technology and Innovation Foundation, is among those who opposed the idea of a ban. He said he would prefer to see face-recognition data accessible to the police only if they have secured a warrant from a judge, following guidelines the Supreme Court has set for other forms of electronic surveillance.

But proponents of the bans say they are an effort to hit the pause button and study the matter before harm is done. The proposed ban in Somerville, the Boston suburb, was sponsored by a councilor, Ben Ewen-Campen. “The government and the public don’t have a handle on what the technology is and what it will become,” he said on Tuesday.

Next door in Boston, Ed Davis, the former police commissioner, said it was “premature to be banning things.” Mr. Davis, who led the department during the Boston Marathon attack, said that no one in the United States wanted to follow the Chinese model.

But he also sees the potential. “This technology is still developing,” he said, “and as it improves, this could be the answer to a lot of problems we have about securing our communities.”

Joel Engardio, the vice president of Stop Crime SF, said that he agreed that current facial recognition technologies were flawed, but said that the city should not prohibit their use in the future, if they were improved.

“Instead of an outright ban, why not a moratorium?” Mr. Engardio asked. “Let’s keep the door open for when the technology improves. I’m not a fan of banning things when eventually it could actually be helpful.”

Kate Conger reported from San Francisco; Richard Fausset from Atlanta and Serge F. Kovaleski from New York. Reporting was also contributed by Natasha Singer and Adeel Hassan in New York.


FaceApp is a privacy nightmare, but so is almost everything else you do online

The more permissions you grant to programs like FaceApp, the more data they can collect. (FaceApp/)

Internet phenomena have a tendency to come on strong and totally take over our social feeds, seemingly out of nowhere. The current meme dominating just about every platform involves an app called FaceApp, which uses artificial intelligence to apply surprisingly convincing filters to pictures of people. The app recently introduced a filter that shows what you could look like when you're old. The results are somewhat convincing and rather entertaining. But, as with all app-based fun, it comes at a cost involving your personal info, privacy, and security.

This isn’t the first time FaceApp has spread around the internet. The app generated attention when it debuted back in 2017. We’ve also seen this kind of phenomenon plenty of times before, including Snapchat’s gender swap filter, which was everywhere just a few weeks ago.

The backlash to FaceApp, however, has been swift and louder than usual because the developer operates out of Russia. To date, though, there’s no proof that the company has ties to the Russian government or has any bad intentions for the data. But, with the 2020 U.S. election getting into its tumultuous swing and years of news reports about Russia’s involvement in the 2016 voting process, users are justifiably on edge.

The company has already issued a statement about the security concerns.

When you download the program, it asks for permission to access your photos, send you notifications, and activate your camera. We’re so used to this process of clicking through permission roadblocks that it’s easy to become numb to it. Granting access to our photo library is, in some ways, the new clicking blindly to agree that we’ve read the iTunes terms of service agreement. We’re not entirely sure what we’re getting into, but there’s fun on the other side of that dialog box and we want to hurry up and get to it.

If you sign up for FaceApp, however, you are giving up some of your personal information and any content you generate through the app. As a Twitter user pointed out, agreeing to the app’s terms of service grants it very liberal usage of whatever content you upload or create. The terms contain troubling phrases like “commercial” and “sub-licensable,” which means your images—along with information associated with them—could end up in advertisements. This doesn’t mean that the company “owns” your photos like some news outlets have suggested, but rather that they can use them for pretty much whatever they want down the road.

If this sounds familiar, it's because it's somewhat similar to the agreement for many other social networks. Twitter, for instance, uses the following language:

“By submitting, posting or displaying Content on or through the Services, you grant us a worldwide, non-exclusive, royalty-free license (with the right to sublicense) to use, copy, reproduce, process, adapt, modify, publish, transmit, display and distribute such Content in any and all media or distribution methods (now known or later developed).”

You’ll notice that blurb doesn’t include the word “commercial,” which makes it somewhat less permissive than FaceApp’s. But Twitter does have rules that allow “ecosystem partners” to interact with your content according to rules that you almost certainly haven’t read.

Facebook has a similar clause in its terms of service, which reads:

“….when you share, post, or upload content that is covered by intellectual property rights (like photos or videos) on or in connection with our Products, you grant us a non-exclusive, transferable, sub-licensable, royalty-free, and worldwide license to host, use, distribute, modify, run, copy, publicly perform or display, translate, and create derivative works of your content.”

Again, Facebook leaves out the “commercial” aspect of it, which is an upgrade from FaceApp’s agreement, but you’re still giving the company a generous license.

You have likely seen a lot of your friends looking old on social media this week. You can thank FaceApp. (FaceApp/)

Things get muddier once you start dipping into Facebook apps that have their own terms, which are guided by overarching platform rules, but vary widely from title to title. So, if you’ve ever installed a Facebook app that let you see what your tombstone will say or other fun-stupid things, you may have given up more information than you intended anyway.

But what about the stuff you didn't mean to share?

When you first open FaceApp, you can select images to upload and share. The app does its processing on its own servers rather than on the device, so you have to agree to upload an image before you get the sweet payoff of your filtered image. Some users have noticed that you can select single images to upload even if you haven’t given the app access to your photos at all. This certainly seems nefarious, but as TechCrunch points out, this is actually an iOS feature that debuted back in iOS 11. You can choose a specific image for the app to access without granting it full view of your camera roll and iCloud libraries.

That’s not to say that something nefarious couldn’t happen, but right now, there’s no specific threat we know about. So, while it’s OK to feel a little silly for downloading and using the app, it’s also not something to panic over—at least any more than it’s appropriate to constantly panic here in 2019.

Going forward, however, you can likely expect more and more apps to try and capture information about your face. Companies like Facebook have their own facial recognition technology as well as billions of photos useful for training it. Not every company has that luxury, however.

Amazon, for instance, has a controversial facial recognition tech called Rekognition that relies on external image databases from sources like law enforcement. Sports stadiums are using facial recognition to learn more about fans that attend events, and Taylor Swift's tour used it to try and make sure stalkers weren't showing up to the venues. Those technologies work better with deeper reference databases full of photos, so if a company like FaceApp wanted to sell its trove of accurately identified selfies, it would be within its rights to do so and finding a buyer wouldn't be challenging.
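Screening concertgoers against a list of known stalkers is a one-to-many ("1:N") search: one face is compared against every entry in a reference gallery. Neither Amazon's nor any venue's actual system is described here, so this is a minimal sketch assuming faces have already been converted to embedding vectors by some model (not shown). The entry IDs, vectors, and the 0.8 threshold are all hypothetical.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def identify(probe: Sequence[float], watchlist: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> Optional[str]:
    """1:N identification: compare one face against every gallery entry.
    Returns the best-scoring entry ID if it clears the threshold, else None."""
    if not watchlist:
        return None
    scores = {entry_id: cosine_similarity(probe, ref) for entry_id, ref in watchlist.items()}
    best_id = max(scores, key=scores.get)
    return best_id if scores[best_id] >= threshold else None


# Hypothetical gallery of reference embeddings.
watchlist = {"entry_a": [1.0, 0.0, 0.0], "entry_b": [0.0, 1.0, 0.0]}
print(identify([0.95, 0.05, 0.0], watchlist))  # entry_a
print(identify([0.0, 0.0, 1.0], watchlist))    # None
```

Because every probe is checked against every entry, a bigger gallery lets the system recognize more people. It also gives each probe more chances to land on a wrong entry that happens to clear the threshold.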

Your info is likely already in several databases you don't even know about like data brokers and people-finder sites. Adding facial info to those data caches could only make them more valuable.

For now FaceApp says it's not sharing your info with third parties, but it could down the road. If you want the app to remove your data, you can do it, but the process isn't great and involves sending an email to the company. Even still, doing so won't necessarily break the license you've already given the app to use your content. For now, you can just keep using the app or delete it before you feed it any more personal info. If you're opting for the latter, you're probably best served by deleting most of your other apps while you're at it.

from Popular Photography | RSS https://ift.tt/2xPOoXF


FaceApp is a privacy nightmare, but so is almost everything else you do online


The more permissions you grant to programs like FaceApp, the more data they can collect. (FaceApp/)

Internet phenomena have a tendency to come on strong and totally take over our social feeds, seemingly out of nowhere. The current meme dominating just about every platform involves an app called FaceApp, which uses artificial intelligence to apply surprisingly convincing filters to pictures of people. The app recently introduced a filter that shows what you could look like when you’re old. The results are somewhat convincing and rather entertaining. But, as with all app-based fun, it comes at a cost involving your personal info, privacy, and security.

This isn’t the first time FaceApp has spread around the internet. The app generated attention when it debuted back in 2017. We’ve also seen this kind of phenomenon plenty of times before, including Snapchat’s gender swap filter, which was everywhere just a few weeks ago.

The backlash to FaceApp, however, has been swift and louder than usual because the developer operates out of Russia. To date, though, there’s no proof that the company has ties to the Russian government or has any bad intentions for the data. But, with the 2020 U.S. election getting into its tumultuous swing and years of news reports about Russia’s involvement in the 2016 voting process, users are justifiably on edge.

The company has already issued a statement about the security concerns.

When you download the program, it asks for permission to access your photos, send you notifications, and activate your camera. We’re so used to this process of clicking through permission roadblocks that it’s easy to become numb to it. Granting access to our photo library is, in some ways, the new clicking blindly to agree that we’ve read the iTunes terms of service agreement. We’re not entirely sure what we’re getting into, but there’s fun on the other side of that dialog box and we want to hurry up and get to it.

If you sign up for FaceApp, however, you are giving up some of your personal information and any content you generate through the app. As a Twitter user pointed out, agreeing to the app’s terms of service grants it very liberal usage of whatever content you upload or create. The terms contain troubling phrases like “commercial” and “sub-licensable,” which means your images—along with information associated with them—could end up in advertisements. This doesn’t mean that the company “owns” your photos like some news outlets have suggested, but rather that they can use them for pretty much whatever they want down the road.

If this sounds familiar, it’s because it’s somewhat similar to the agreement for many other social networks. Twitter, for instance, uses the following language:

“By submitting, posting or displaying Content on or through the Services, you grant us a worldwide, non-exclusive, royalty-free license (with the right to sublicense) to use, copy, reproduce, process, adapt, modify, publish, transmit, display and distribute such Content in any and all media or distribution methods (now known or later developed).”

You’ll notice that blurb doesn’t include the word “commercial,” which makes it somewhat less permissive than FaceApp’s. But Twitter does have rules that allow “ecosystem partners” to interact with your content according to rules that you almost certainly haven’t read.

Facebook has a similar clause in its terms of service, which reads:

“….when you share, post, or upload content that is covered by intellectual property rights (like photos or videos) on or in connection with our Products, you grant us a non-exclusive, transferable, sub-licensable, royalty-free, and worldwide license to host, use, distribute, modify, run, copy, publicly perform or display, translate, and create derivative works of your content.”

Again, Facebook leaves out the “commercial” aspect of it, which is an upgrade from FaceApp’s agreement, but you’re still giving the company a generous license.

You have likely seen a lot of your friends looking old on social media this week. You can thank FaceApp. (FaceApp/)

Things get muddier once you start dipping into Facebook apps that have their own terms, which are guided by overarching platform rules, but vary widely from title to title. So, if you’ve ever installed a Facebook app that let you see what your tombstone will say or other fun-stupid things, you may have given up more information than you intended anyway.

But what about the stuff you didn’t mean to share?

When you first open FaceApp, you can select images to upload and share. The app does its processing on its own servers rather than on the device, so you have to agree to upload an image before you get the sweet payoff of your filtered image. Some users have noticed that you can select single images to upload even if you haven’t given the app access to your photos at all. This certainly seems nefarious, but as TechCrunch points out, this is actually an iOS feature that debuted back in iOS 11. You can choose a specific image for the app to access without granting it full view of your camera roll and iCloud libraries.

If you give an app wholesale access to your photos, that means it can see anything you have hanging around, including screenshots of personal information. Beyond that, it may also access metadata associated with the image file that could contain things like GPS data from when the photo was taken. It’s a lot of potential info, but there’s no real evidence the app is uploading your entire catalog in the background.
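To show how precise that location metadata can be: the EXIF standard stores a photo's GPSLatitude and GPSLongitude as three RATIONAL values (degrees, minutes, seconds), with GPSLatitudeRef set to N/S and GPSLongitudeRef set to E/W. Reading the tags out of an image file requires an EXIF parser such as Pillow, which is not shown here. This minimal sketch assumes the values have already been extracted as (numerator, denominator) pairs and converts them to the decimal coordinates a map would use. The sample coordinates are made up.

```python
from fractions import Fraction
from typing import Sequence, Tuple

# An EXIF RATIONAL is stored as a (numerator, denominator) pair.
Rational = Tuple[int, int]


def dms_to_decimal(dms: Sequence[Rational], ref: str) -> float:
    """Convert EXIF degrees/minutes/seconds plus a hemisphere ref to decimal degrees."""
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative in decimal notation.
    if ref.upper() in ("S", "W"):
        value = -value
    return float(value)


# Made-up coordinates for illustration.
lat = dms_to_decimal([(37, 1), (46, 1), (3000, 100)], "N")
lon = dms_to_decimal([(122, 1), (25, 1), (0, 1)], "W")
print(round(lat, 4), round(lon, 4))  # 37.775 -122.4167
```

Four decimal places of latitude narrow a location to roughly ten meters, which is why wholesale photo access can reveal far more than the pictures themselves.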

Right now, there’s no specific threat we know about with FaceApp beyond a general distrust toward companies that collect data. So, while it’s OK to feel a little silly for downloading and using the app, it’s also not something to panic over—at least any more than it’s appropriate to constantly panic here in 2019. The app is sending your info to services like Google’s DoubleClick platform, but that’s relatively common for apps at this point.

Face Snatchers

Going forward, however, you can likely expect more and more apps to try and capture information about your face. Companies like Facebook have their own facial recognition technology as well as billions of photos useful for training it. Not every company has that luxury, however.

Amazon, for instance, has a controversial facial recognition tech called Rekognition that relies on external image databases from sources like law enforcement. Sports stadiums are using facial recognition to learn more about fans that attend events, and Taylor Swift’s tour used it to try and make sure stalkers weren’t showing up to the venues. Those technologies work better with deeper reference databases full of photos, so if a company like FaceApp wanted to sell its trove of accurately identified selfies, it would be within its rights to do so and finding a buyer wouldn’t be challenging.

Your info is likely already in several databases you don’t even know about like data brokers and people-finder sites. Adding facial info to those data caches could only make them more valuable.

For now FaceApp says it’s not sharing your info with third parties, but it could down the road. If you want the app to remove your data, you can do it, but the process isn’t great and involves sending an email to the company. Even still, doing so won’t necessarily break the license you’ve already given the app to use your content. For now, you can just keep using the app or delete it before you feed it any more personal info. If you’re opting for the latter, you’re probably best served by deleting most of your other apps while you’re at it.

Written By Stan Horaczek


But some experts fear the advances are outstripping government’s ability to set guardrails to protect privacy.

Mr. Cagle and others said that a worst-case scenario already exists in China, where facial recognition is used to keep close tabs on the Uighurs, a largely Muslim minority, and is being integrated into a national digital panopticon system powered by roughly 200 million surveillance cameras.

American civil liberties advocates warn that the ability of facial surveillance to identify people at a distance, or online, without their knowledge or consent presents unique risks — threatening Americans’ ability to freely attend political protests or simply go about their business anonymously in public. Last year, Bradford L. Smith, the president of Microsoft, warned that the technology was too risky for companies to police on their own and asked Congress to oversee its use.

The battle over the technology intensified last year after two researchers published a study showing bias in some of the most popular facial surveillance systems. Called Gender Shades, the study reported that systems from IBM and Microsoft were much better at identifying the gender of white men’s faces than they were at identifying the gender of darker-skinned or female faces.

Another study this year reported similar problems with Amazon’s technology, called Rekognition. Microsoft and IBM have since said they improved their systems, while Amazon has said it updated its system since the researchers tested it and had found no differences in accuracy.

Warning that African-Americans, women and others could easily be incorrectly identified as suspects and wrongly arrested, the American Civil Liberties Union and other nonprofit groups last year called on Amazon to stop selling its technology to law enforcement.

But even with improvements in accuracy, civil rights advocates and researchers warn that, in the absence of government oversight, the technology could easily be misused to surveil immigrants or unfairly target African-Americans or low-income neighborhoods. In a recent essay, Luke Stark, a postdoctoral researcher at Microsoft Research Montreal, described facial surveillance as “the plutonium of artificial intelligence,” arguing that it should be “recognized as anathema to the health of human society, and heavily restricted as a result.”

Alvaro Bedoya, who directs Georgetown University’s Center on Privacy and Technology, said that more than 30 states allow local or state authorities, or the F.B.I., to search their driver’s license photos.

Mr. Bedoya said that having one’s photo in these databases is tantamount to being in a perpetual police lineup, as law enforcement agencies use the images to check against the faces of suspected criminals. He said that the difference is that an algorithm, not a human being, is pointing to the suspect.

He also said that comprehensive regulation of the technology is sorely lacking. “This is the most pervasive and risky surveillance technology of the 21st century,” he said.

Daniel Castro, director of the Center for Data Innovation at the Information Technology and Innovation Foundation, is among those who oppose the idea of a ban. He said he would prefer to see face-recognition data accessible to the police only if they have secured a warrant from a judge, following guidelines the Supreme Court has set for other forms of electronic surveillance.

But proponents of the bans say they are an effort to hit the pause button and study the matter before harm is done. The proposed ban in Somerville, the Boston suburb, was sponsored by a councilor, Ben Ewen-Campen. “The government and the public don’t have a handle on what the technology is and what it will become,” he said on Tuesday.

Next door in Boston, Ed Davis, the former police commissioner, said it was “premature to be banning things.” Mr. Davis, who led the department during the Boston Marathon attack, said that no one in the United States wanted to follow the Chinese model.

But he also sees the potential. “This technology is still developing,” he said, “and as it improves, this could be the answer to a lot of problems we have about securing our communities.”

Joel Engardio, the vice president of Stop Crime SF, said that he agreed that current facial recognition technologies were flawed, but said that the city should not prohibit their use in the future, if they were improved.

“Instead of an outright ban, why not a moratorium?” Mr. Engardio asked. “Let’s keep the door open for when the technology improves. I’m not a fan of banning things when eventually it could actually be helpful.”
