#InfoSec

Text

OUT NOW: my most in depth investigation yet

in this article we see just HOW little a company handling highly sensitive data can care about security and yes it's even worse than you think

content warning: mentions of abuse/controlling behaviour

support me on ko-fi if you like my work and want to read (non-hacking) articles early!

#FuckStalkerware #maia arson crimew #stalkerware #security #infosec #data breach #investigative journalism

8K notes

Text

How I got scammed

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/02/05/cyber-dunning-kruger/#swiss-cheese-security

I wuz robbed.

More specifically, I was tricked by a phone-phisher pretending to be from my bank, and he convinced me to hand over my credit-card number, then did $8,000+ worth of fraud with it before I figured out what happened. And then he tried to do it again, a week later!

Here's what happened. Over the Christmas holiday, I traveled to New Orleans. The day we landed, I hit a Chase ATM in the French Quarter for some cash, but the machine declined the transaction. Later in the day, we passed a little credit-union's ATM and I used that one instead (I bank with a one-branch credit union and generally there's no fee to use another CU's ATM).

A couple days later, I got a call from my credit union. It was a weekend, during the holiday, and the guy who called was obviously working for my little CU's after-hours fraud contractor. I'd dealt with these folks before – they service a ton of little credit unions, and generally the call quality isn't great and the staff will often make mistakes like mispronouncing my credit union's name.

That's what happened here – the guy was on a terrible VOIP line and I had to ask him to readjust his mic before I could even understand him. He mispronounced my bank's name and then asked if I'd attempted to spend $1,000 at an Apple Store in NYC that day. No, I said, and groaned inwardly. What a pain in the ass. Obviously, I'd had my ATM card skimmed – either at the Chase ATM (maybe that was why the transaction failed), or at the other credit union's ATM (it had been a very cheap looking system).

I told the guy to block my card and we started going through the tedious business of running through recent transactions, verifying my identity, and so on. It dragged on and on. These were my last hours in New Orleans, and I'd left my family at home and gone out to see some of the pre-Mardi Gras krewe celebrations and get a muffalata, and I could tell that I was going to run out of time before I finished talking to this guy.

"Look," I said, "you've got all my details, you've frozen the card. I gotta go home and meet my family and head to the airport. I'll call you back on the after-hours number once I'm through security, all right?"

He was frustrated, but that was his problem. I hung up, got my sandwich, went to the airport, and we checked in. It was total chaos: an Alaska Air 737 Max had just lost its door-plug in mid-air and every Max in every airline's fleet had been grounded, so the check in was crammed with people trying to rebook. We got through to the gate and I sat down to call the CU's after-hours line. The person on the other end told me that she could only handle lost and stolen cards, not fraud, and given that I'd already frozen the card, I should just drop by the branch on Monday to get a new card.

We flew home, and later the next day, I logged into my account and made a list of all the fraudulent transactions and printed them out, and on Monday morning, I drove to the bank to deal with all the paperwork. The folks at the CU were even more pissed than I was. The fraud had run up to more than $8,000, and if Visa refused to take it out of the merchants where the card had been used, my little credit union would have to eat the loss.

I agreed and commiserated. I also pointed out that their outsource, after-hours fraud center bore some blame here: I'd canceled the card on Saturday but most of the fraud had taken place on Sunday. Something had gone wrong.

One cool thing about banking at a tiny credit-union is that you end up talking to people who have actual authority, responsibility and agency. It turned out that the woman who was processing my fraud paperwork was a VP, and she decided to look into it. A few minutes later she came back and told me that the fraud center had no record of having called me on Saturday.

"That was the fraudster," she said.

Oh, shit. I frantically rewound my conversation, trying to figure out if this could possibly be true. I hadn't given him anything apart from some very anodyne info, like what city I live in (which is in my Wikipedia entry), my date of birth (ditto), and the last four digits of my card.

Wait a sec.

He hadn't asked for the last four digits. He'd asked for the last seven digits. At the time, I'd found that very frustrating, but now – "The first nine digits are the same for every card you issue, right?" I asked the VP.

I'd given him my entire card number.
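To make the arithmetic concrete, here is a minimal sketch of why "the last seven digits" was the whole game. It assumes a 16-digit card whose first nine digits are shared by every card the issuer hands out. The digits below are the widely published Visa test number, not a real card, and `SHARED_PREFIX`, `LAST_SEVEN` and `rebuild_card_number` are names made up for illustration.

```python
def luhn_valid(number: str) -> bool:
    """Standard Luhn checksum that payment card numbers carry."""
    total = 0
    # Walk right to left, doubling every second digit.
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def rebuild_card_number(shared_prefix: str, digits_from_victim: str) -> str:
    """Join the issuer-wide prefix with the digits the caller talked out of you."""
    return shared_prefix + digits_from_victim


# Hypothetical values: 4111 1111 1111 1111 is a public test number.
SHARED_PREFIX = "411111111"  # nine digits, assumed identical on every card
LAST_SEVEN = "1111111"       # what the fake "fraud department" asked for

full_number = rebuild_card_number(SHARED_PREFIX, LAST_SEVEN)
print(len(full_number), luhn_valid(full_number))
```

Under the same assumption, the usual "last four digits" would have left three digits unknown. Seven leaves none.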

Goddammit.

The thing is, I know a lot about fraud. I'm writing an entire series of novels about this kind of scam:

https://us.macmillan.com/books/9781250865878/thebezzle

And most summers, I go to Defcon, and I always go to the "social engineering" competitions where an audience listens as a hacker in a soundproof booth cold-calls merchants (with the owner's permission) and tries to con whoever answers the phone into giving up important information.

But I'd been conned.

Now look, I knew I could be conned. I'd been conned before, 13 years ago, by a Twitter worm that successfully phished me out of my password via DM:

https://locusmag.com/2010/05/cory-doctorow-persistence-pays-parasites/

That scam had required a miracle of timing. It started the day before, when I'd reset my phone to factory defaults and reinstalled all my apps. That same day, I'd published two big online features that a lot of people were talking about. The next morning, we were late getting out of the house, so by the time my wife and I dropped the kid at daycare and went to the coffee shop, it had a long line. Rather than wait in line with me, my wife sat down to read a newspaper, and so I pulled out my phone and found a Twitter DM from a friend asking "is this you?" with a URL.

Assuming this was something to do with those articles I'd published the day before, I clicked the link and got prompted for my Twitter login again. This had been happening all day because I'd done that mobile reinstall the day before and all my stored passwords had been wiped. I entered it but the page timed out. By that time, the coffees were ready. We sat and chatted for a bit, then went our own ways.

I was on my way to the office when I checked my phone again. I had a whole string of DMs from other friends. Each one read "is this you?" and had a URL.

Oh, shit, I'd been phished.

If I hadn't reinstalled my mobile OS the day before. If I hadn't published a pair of big articles the day before. If we hadn't been late getting out the door. If we had been a little more late getting out the door (so that I'd have seen the multiple DMs, which would have tipped me off).

There's a name for this in security circles: "Swiss-cheese security." Imagine multiple slices of Swiss cheese all stacked up, the holes in one slice blocked by the slice below it. All the slices move around and every now and again, a hole opens up that goes all the way through the stack. Zap!
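A rough way to put numbers on the cheese stack: a sketch that assumes each slice fails independently, with made-up failure rates. Real defenses are rarely independent (being rushed and being distracted tend to arrive together), so treat this as an illustration of the shape of the problem, not a model of any real bank.

```python
import random


def breach_probability(hole_chances):
    """Chance that every layer fails at once, assuming independent layers."""
    p = 1.0
    for chance in hole_chances:
        p *= chance
    return p


def chance_of_at_least_one_hit(per_attempt: float, attempts: int) -> float:
    """Chance that at least one of many independent attempts gets through."""
    return 1.0 - (1.0 - per_attempt) ** attempts


def simulate(hole_chances, attempts, seed=0):
    """Count how many simulated attempts slip through every layer."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(attempts):
        if all(rng.random() < chance for chance in hole_chances):
            hits += 1
    return hits


# Made-up numbers: four slices, each with a hole lined up 10% of the time.
layers = [0.1, 0.1, 0.1, 0.1]
per_attempt = breach_probability(layers)
print(per_attempt)
print(chance_of_at_least_one_hit(per_attempt, 50_000))
```

Any single call is a long shot. A fraudster who can place tens of thousands of calls is playing a different game, which is why the holes only need to line up occasionally.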

The fraudster who tricked me out of my credit card number had Swiss cheese security on his side. Yes, he spoofed my bank's caller ID, but that wouldn't have been enough to fool me if I hadn't been on vacation, having just used a pair of dodgy ATMs, in a hurry and distracted. If the 737 Max disaster hadn't happened that day and I'd had more time at the gate, I'd have called my bank back. If my bank didn't use a slightly crappy outsource/out-of-hours fraud center that I'd already had sub-par experiences with. If, if, if.

The next Friday night, at 5:30PM, the fraudster called me back, pretending to be the bank's after-hours center. He told me my card had been compromised again. But: I hadn't removed my card from my wallet since I'd had it replaced. Also, it was half an hour after the bank closed for the long weekend, a very fraud-friendly time. And when I told him I'd call him back and asked for the after-hours fraud number, he got very threatening and warned me that because I'd now been notified about the fraud, any losses the bank suffered after I hung up the phone without completing the fraud protocol would be billed to me. I hung up on him. He called me back immediately. I hung up on him again and put my phone into do-not-disturb.

The following Tuesday, I called my bank and spoke to their head of risk-management. I went through everything I'd figured out about the fraudsters, and she told me that credit unions across America were being hit by this scam, by fraudsters who somehow knew CU customers' phone numbers and names, and which CU they banked at. This was key: my phone number is a reasonably well-kept secret. You can get it by spending money with Equifax or another nonconsensual doxing giant, but you can't just google it or get it at any of the free services. The fact that the fraudsters knew where I banked, knew my name, and had my phone number had really caused me to let down my guard.

The risk management person and I talked about how the credit union could mitigate this attack: for example, by better-training the after-hours card-loss staff to be on the alert for calls from people who had been contacted about supposed card fraud. We also went through the confusing phone-menu that had funneled me to the wrong department when I called in, and worked through alternate wording for the menu system that would be clearer (this is the best part about banking with a small CU – you can talk directly to the responsible person and have a productive discussion!). I even convinced her to buy a ticket to next summer's Defcon to attend the social engineering competitions.

There's a leak somewhere in the CU systems' supply chain. Maybe it's Zelle, or the small number of correspondent banks that CUs rely on for SWIFT transaction forwarding. Maybe it's even those after-hours fraud/card-loss centers. But all across the USA, CU customers are getting calls with spoofed caller IDs from fraudsters who know their registered phone numbers and where they bank.

I've been mulling this over for most of a month now, and one thing has really been eating at me: the way that AI is going to make this kind of problem much worse.

Not because AI is going to commit fraud, though.

One of the truest things I know about AI is: "while we're nowhere near a place where bots can steal your job, we're certainly at the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job":

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

I trusted this fraudster specifically because I knew that the outsource, out-of-hours contractors my bank uses have crummy headsets, don't know how to pronounce my bank's name, and have long-ass, tedious, and pointless standardized questionnaires they run through when taking fraud reports. All of this created cover for the fraudster, whose plausibility was enhanced by the rough edges in his pitch - they didn't raise red flags.

As this kind of fraud reporting and fraud contacting is increasingly outsourced to AI, bank customers will be conditioned to dealing with semi-automated systems that make stupid mistakes, force you to repeat yourself, ask you questions they should already know the answers to, and so on. In other words, AI will groom bank customers to be phishing victims.

This is a mistake the finance sector keeps making. 15 years ago, Ben Laurie excoriated the UK banks for their "Verified By Visa" system, which validated credit card transactions by taking users to a third party site and requiring them to re-enter parts of their password there:

https://web.archive.org/web/20090331094020/http://www.links.org/?p=591

This is exactly how a phishing attack works. As Laurie pointed out, this was the banks training their customers to be phished.

I came close to getting phished again today, as it happens. I got back from Berlin on Friday and my suitcase was damaged in transit. I've been dealing with the airline, which means I've really been dealing with their third-party, outsource luggage-damage service. They have a terrible website, their emails are incoherent, and they officiously demand the same information over and over again.

This morning, I got a scam email asking me for more information to complete my damaged luggage claim. It was a terrible email, from a noreply@ email address, and it was vague, officious, and dishearteningly bureaucratic. For just a moment, my finger hovered over the phishing link, and then I looked a little closer.

On any other day, it wouldn't have had a chance. Today – right after I had my luggage wrecked, while I'm still jetlagged, and after days of dealing with my airline's terrible outsource partner – it almost worked.

So much fraud is a Swiss-cheese attack, and while companies can't close all the holes, they can stop creating new ones.

Meanwhile, I'll continue to post about it whenever I get scammed. I find the inner workings of scams to be fascinating, and it's also important to remind people that everyone is vulnerable sometimes, and scammers are willing to try endless variations until an attack lands at just the right place, at just the right time, in just the right way. If you think you can't get scammed, that makes you especially vulnerable:

https://pluralistic.net/2023/02/24/passive-income/#swiss-cheese-security

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

10K notes

Text

Google Drive users are reporting that recent files stored in the cloud have suddenly disappeared, with the cloud service reverting to a storage snapshot as it was around April-May 2023.

Hope you aren't using Google Drive for anything important.

1K notes

Text

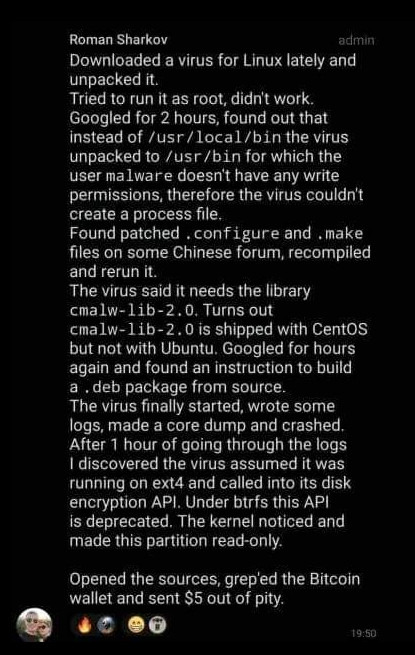

Lmao. why Linux is safer than other oses?

869 notes

Text

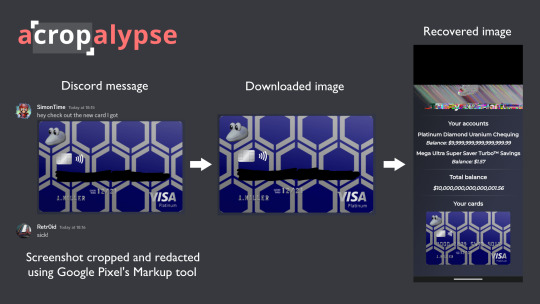

if you're a pixel phone user, your cropped/redacted screenshots might not be redacted

a bug called "acropalypse" has been discovered that can recover the original contents

Source: https://twitter.com/ItsSimonTime/status/1636857478263750656

More info:

This affects the built-in Markup app for all models from Pixel 3 through Pixel 7.

Images uploaded to Discord before January 2023 have not been sanitized against this.

Here's a blog post by one of the authors: https://www.da.vidbuchanan.co.uk/blog/exploiting-acropalypse.html

What you should do:

Update your phone ASAP. Update instructions here. Follow the section for security updates and ensure your Android security update is from March 2023.

Check your social media for vulnerable images you may have uploaded. You can use the demo app to test your images: https://acropalypse.app/
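For the curious, a quick local heuristic, sketched under a few assumptions. As the public write-ups describe it, the bug left bytes from the original screenshot sitting in the file after the end of the new, smaller PNG. A PNG is a fixed signature followed by chunks and ends with an `IEND` chunk, so anything after `IEND` deserves a second look. The function name and the demo data below are made up for illustration, and trailing bytes can have other causes, so the demo app above is still the real test.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def trailing_bytes_after_iend(data: bytes) -> int:
    """Return how many bytes follow the PNG's IEND chunk.

    Raises ValueError if the data is not a PNG or has no IEND chunk.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        chunk_type = data[pos + 4:pos + 8]
        # Each chunk is: 4-byte length, 4-byte type, data, 4-byte CRC.
        pos += 8 + length + 4
        if chunk_type == b"IEND":
            return max(len(data) - pos, 0)
    raise ValueError("no IEND chunk found")


def demo_chunk(chunk_type: bytes, payload: bytes = b"") -> bytes:
    """Build a chunk for the demo; the CRC is zeroed because we never verify it."""
    return struct.pack(">I", len(payload)) + chunk_type + payload + b"\x00" * 4


# Synthetic stand-ins, not real screenshots.
LEFTOVER = b"leftover bytes from the uncropped image"
clean = PNG_SIGNATURE + demo_chunk(b"IHDR", bytes(13)) + demo_chunk(b"IEND")
suspicious = clean + LEFTOVER

print(trailing_bytes_after_iend(clean), trailing_bytes_after_iend(suspicious))
```

To check a real file, read it with `open(path, "rb").read()` and pass the bytes in. A nonzero result means "look closer", not "definitely leaked".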

#my:poast #security #infosec #acropalypse #google pixel #hopefully this one is easier to read?? idk #stay safe y'all this one is a fucking doozy and a half

1K notes

Text

Masterpost of informational posts

All posts are written for everyone, including those with no prior computer science education. If you know how to write an email and have used a computer at least sparingly, you are qualified for understanding these posts. :)

What is a DDoS

What are the types of malware

Vulnerabilities and Exploits (old and somewhat outdated)

Example of how malware can enter your computer

What are botnets and sinkholes

How does passwords work

Guide for getting a safer password

Here are various malware-related posts you may find interesting:

Stuxnet

The North Korean bank heist

5 vintage famous malware

Trickbot the Trickster malware (old and not up to date)

jRAT the spy and controller (old and not up to date)

Evil malware

New to Linux? Here's a quick guide for using the terminal:

Part 1: Introduction

Part 2: Commands

Part 3: Flags

Part 4: Shortcuts

If you have any questions, request for a topic I should write about, or if there is something in these posts that you don't understand, please send me a message/ask and I'll try my best to help you. :)

- unichrome

Bonus: RGB terminal

#datatag #masterpost #malware #cybersecurity #infosec #security #hacking #linux #information #informative #computer science

218 notes

Photo

#giphy #retro #90s #vhs #tumblr #computer #cyberpunk #error #cybersecurity #fuzzyghost #computing #password #terminal #infosec #access denied #logging on

179 notes

Text

Commission- Thread Hunting - WIP

#cyberpunk #cyberpunkart #synthwave #scifi #scifiart #cyborg #cyberpunk girl #cover art #heavy metal magazine #heavymetalmagazine #infosec #hacking #blue team #noai #noaiart

62 notes

Text

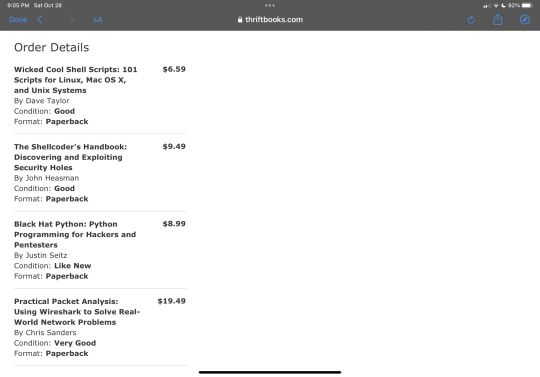

I love you thriftbooks <3

Literally could get four books for less than the price of one on Amazon.

But since I'm broke, I love you Oceanofpdf even more <3

(I just downloaded all four of those books for free instead!! :D)

#thriftbooks #thrift books #books books books #codeblr #cybersecurity #codetober #compsci #coding #linux #hacking #hack the planet #hacker #infosec #programmer #programming #i love reading

87 notes

Text

Can't bots just trust we're human without the robot dance-off? 😄

#linux #linuxfan #linuxuser #ubuntu #debian #dev #devops #webdevelopment #programmingmemes #linuxmemes #memes #cat #coding #developer #tech #ethicalhacking #computerscience #coder #security #infosec #cyber

125 notes

Text

NEW FROM ME: so i guess i hacked samsung?!

a short bug bounty write up on how i randomly stumbled onto samsung cloud infrastructure

(not an april fools bit)

5K notes

Text

Demon-haunted computers are back, baby

Catch me in Miami! I'll be at Books and Books in Coral Gables on Jan 22 at 8PM.

As a science fiction writer, I am professionally irritated by a lot of sf movies. Not only do those writers get paid a lot more than I do, they insist on including things like "self-destruct" buttons on the bridges of their starships.

Look, I get it. When the evil empire is closing in on your flagship with its secret transdimensional technology, it's important that you keep those secrets out of the emperor's hand. An irrevocable self-destruct switch there on the bridge gets the job done! (It has to be irrevocable, otherwise the baddies'll just swarm the bridge and toggle it off).

But c'mon. If there's a facility built into your spaceship that causes it to explode no matter what the people on the bridge do, that is also a pretty big security risk! What if the bad guy figures out how to hijack the measure that – by design – the people who depend on the spaceship as a matter of life and death can't detect or override?

I mean, sure, you can try to simplify that self-destruct system to make it easier to audit and assure yourself that it doesn't have any bugs in it, but remember Schneier's Law: anyone can design a security system that works so well that they themselves can't think of a flaw in it. That doesn't mean you've made a security system that works – only that you've made a security system that works on people stupider than you.

I know it's weird to be worried about realism in movies that pretend we will ever find a practical means to visit other star systems and shuttle back and forth between them (which we are very, very unlikely to do):

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

But this kind of foolishness galls me. It galls me even more when it happens in the real world of technology design, which is why I've spent the past quarter-century being very cross about Digital Rights Management in general, and trusted computing in particular.

It all starts in 2002, when a team from Microsoft visited our offices at EFF to tell us about this new thing they'd dreamed up called "trusted computing":

https://pluralistic.net/2020/12/05/trusting-trust/#thompsons-devil

The big idea was to stick a second computer inside your computer, a very secure little co-processor, that you couldn't access directly, let alone reprogram or interfere with. As far as this "trusted platform module" was concerned, you were the enemy. The "trust" in trusted computing was about other people being able to trust your computer, even if they didn't trust you.

So that little TPM would do all kinds of cute tricks. It could observe and produce a cryptographically signed manifest of the entire boot-chain of your computer, which was meant to be an unforgeable certificate attesting to which kind of computer you were running and what software you were running on it. That meant that programs on other computers could decide whether to talk to your computer based on whether they agreed with your choices about which code to run.
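The "manifest of the entire boot-chain" idea can be sketched as a running hash. This is a simplified illustration of the "extend" operation that TPMs use for their measurement registers (new value = hash of old value plus the hash of the next component). It leaves out the signing step entirely, and the boot stages below are hypothetical stand-ins for real firmware and kernel images.

```python
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running measurement.

    Simplified "extend": new = SHA-256(old || SHA-256(component)).
    """
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + digest).digest()


def measure_boot_chain(components) -> bytes:
    """Measure every stage in order, starting from an all-zero register."""
    register = bytes(32)
    for blob in components:
        register = extend(register, blob)
    return register


# Hypothetical boot stages.
expected = measure_boot_chain([b"firmware v1", b"bootloader v1", b"kernel v1"])
tampered = measure_boot_chain([b"firmware v1", b"bootloader EVIL", b"kernel v1"])
reordered = measure_boot_chain([b"bootloader v1", b"firmware v1", b"kernel v1"])

print(expected.hex())
print(expected == tampered, expected == reordered)
```

Because each step hashes in everything that came before, changing or reordering any stage changes the final value. A remote party that knows which values it approves of can refuse to talk to anything else, which is exactly the power the following paragraphs worry about.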

This process, called "remote attestation," is generally billed as a way to identify and block computers that have been compromised by malware, or to identify gamers who are running cheats and refuse to play with them. But inevitably it turns into a way to refuse service to computers that have privacy blockers turned on, or are running stream-ripping software, or whose owners are blocking ads:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai

After all, a system that treats the device's owner as an adversary is a natural ally for the owner's other, human adversaries. The rubric for treating the owner as an adversary focuses on the way that users can be fooled by bad people with bad programs. If your computer gets taken over by malicious software, that malware might intercept queries from your antivirus program and send it false data that lulls it into thinking your computer is fine, even as your private data is being plundered and your system is being used to launch malware attacks on others.

These separate, non-user-accessible, non-updateable secure systems serve as a nub of certainty, a remote fortress that observes and faithfully reports on the interior workings of your computer. This separate system can't be user-modifiable or field-updateable, because then malicious software could impersonate the user and disable the security chip.

It's true that compromised computers are a real and terrifying problem. Your computer is privy to your most intimate secrets and an attacker who can turn it against you can harm you in untold ways. But the widespread redesign of our computers to treat us as their enemies gives rise to a range of completely predictable and – I would argue – even worse harms. Building computers that treat their owners as untrusted parties is a system that works well, but fails badly.

First of all, there are the ways that trusted computing is designed to hurt you. The most reliable way to enshittify something is to supply it over a computer that runs programs you can't alter, and that rats you out to third parties if you run counter-programs that disenshittify the service you're using. That's how we get inkjet printers that refuse to use perfectly good third-party ink and cars that refuse to accept perfectly good engine repairs if they are performed by third-party mechanics:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

It's how we get cursed devices and appliances, from the juicer that won't squeeze third-party juice to the insulin pump that won't connect to a third-party continuous glucose monitor:

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

But trusted computing doesn't just create an opaque veil between your computer and the programs you use to inspect and control it. Trusted computing creates a no-go zone where programs can change their behavior based on whether they think they're being observed.

The most prominent example of this is Dieselgate, where auto manufacturers murdered hundreds of people by gimmicking their cars to emit illegal amounts of NOx. Key to Dieselgate was a program that sought to determine whether it was being observed by regulators (it checked for the telltale signs of the standard test-suite) and changed its behavior to color within the lines.

Software that is seeking to harm the owner of the device that's running it must be able to detect when it is being run inside a simulation, a test-suite, a virtual machine, or any other hallucinatory virtual world. Just as Descartes couldn't know whether anything was real until he assured himself that he could trust his senses, malware is always questing to discover whether it is running in the real universe, or in a simulation created by a wicked god:

https://pluralistic.net/2022/07/28/descartes-was-an-optimist/#uh-oh

That's why mobile malware uses clever gambits like periodically checking for readings from your device's accelerometer, on the theory that a virtual mobile phone running on a security researcher's test bench won't have the fidelity to generate plausible jiggles to match the real data that comes from a phone in your pocket:

https://arstechnica.com/information-technology/2019/01/google-play-malware-used-phones-motion-sensors-to-conceal-itself/

Sometimes this backfires in absolutely delightful ways. When the Wannacry ransomware was holding the world hostage, the security researcher Marcus Hutchins noticed that its code made reference to a very weird website: iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com. Hutchins stood up a website at that address and every Wannacry-infection in the world went instantly dormant:

https://pluralistic.net/2020/07/10/flintstone-delano-roosevelt/#the-matrix

It turns out that Wannacry's authors were using that ferkakte URL the same way that mobile malware authors were using accelerometer readings – to fulfill Descartes' imperative to distinguish the Matrix from reality. The malware authors knew that security researchers often ran malicious code inside sandboxes that answered every network query with fake data in hopes of eliciting responses that could be analyzed for weaknesses. So the Wannacry worm would periodically poll this nonexistent website and, if it got an answer, it would assume that it was being monitored by a security researcher and it would retreat to an encrypted blob, ceasing to operate lest it give intelligence to the enemy. When Hutchins put a webserver up at iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com, every Wannacry instance in the world was instantly convinced that it was running on an enemy's simulator and withdrew into sulky hibernation.
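The decision logic described here fits in a few lines. This is a toy sketch with no networking at all: the three "networks" are plain functions that say whether a domain answers, and `KILL_SWITCH_DOMAIN` is a placeholder rather than the real address quoted above. It illustrates the reasoning, not the worm's actual code.

```python
from typing import Callable

# Placeholder name standing in for the gibberish domain.
KILL_SWITCH_DOMAIN = "some-unregistered-gibberish.invalid"


def looks_like_sandbox(domain_answers: Callable[[str], bool]) -> bool:
    """A domain that should not exist answered, so assume a faked network."""
    return domain_answers(KILL_SWITCH_DOMAIN)


def next_action(domain_answers: Callable[[str], bool]) -> str:
    """Retreat if the world looks simulated, otherwise carry on."""
    return "go dormant" if looks_like_sandbox(domain_answers) else "keep running"


def internet_before_registration(domain: str) -> bool:
    return False  # nobody owns the domain, so nothing answers


def researcher_sandbox(domain: str) -> bool:
    return True  # the sandbox answers every query with fake data


def internet_after_registration(domain: str) -> bool:
    return domain == KILL_SWITCH_DOMAIN  # someone stood up a real site


for network in (internet_before_registration, researcher_sandbox,
                internet_after_registration):
    print(network.__name__, "->", next_action(network))
```

The check can't tell the second and third cases apart. Once the domain was registered, the whole real internet looked like a researcher's sandbox.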

The arms race to distinguish simulation from reality is critical and the stakes only get higher by the day. Malware abounds, even as our devices grow more intimately woven through our lives. We put our bodies into computers – cars, buildings – and computers inside our bodies. We absolutely want our computers to be able to faithfully convey what's going on inside them.

But we keep running as hard as we can in the opposite direction, leaning harder into secure computing models built on subsystems in our computers that treat us as the threat. Take UEFI, the ubiquitous security system that observes your computer's boot process, halting it if it sees something it doesn't approve of. For one thing, this has made installing GNU/Linux and other alternative OSes vastly harder across a wide variety of devices. This means that when a vendor end-of-lifes a gadget, no one can make an alternative OS for it, so off to the landfill it goes.

It doesn't help that UEFI – and other trusted computing modules – are covered by Section 1201 of the Digital Millennium Copyright Act (DMCA), which makes it a felony to publish information that can bypass or weaken the system. The threat of a five-year prison sentence and a $500,000 fine means that UEFI and other trusted computing systems are understudied, leaving them festering with longstanding bugs:

https://pluralistic.net/2020/09/09/free-sample/#que-viva

Here's where it gets really bad. If an attacker can get inside UEFI, they can run malicious software that – by design – no program running on our computers can detect or block. That badware is running in "Ring -1" – a zone of privilege that overrides the operating system itself.

Here's the bad news: UEFI malware has already been detected in the wild:

https://securelist.com/cosmicstrand-uefi-firmware-rootkit/106973/

And here's the worst news: researchers have just identified another exploitable UEFI bug, dubbed Pixiefail:

https://blog.quarkslab.com/pixiefail-nine-vulnerabilities-in-tianocores-edk-ii-ipv6-network-stack.html

Writing in Ars Technica, Dan Goodin breaks down Pixiefail, describing how anyone on the same LAN as a vulnerable computer can infect its firmware:

https://arstechnica.com/security/2024/01/new-uefi-vulnerabilities-send-firmware-devs-across-an-entire-ecosystem-scrambling/

That vulnerability extends to computers in a data-center where the attacker has a cloud computing instance. PXE – the system that Pixiefail attacks – isn't widely used in home or office environments, but it's very common in data-centers.

Again, once a computer is exploited with Pixiefail, software running on that computer can't detect or delete the Pixiefail code. When the compromised computer is queried by the operating system, Pixiefail undetectably lies to the OS. "Hey, OS, does this drive have a file called 'pixiefail?'" "Nope." "Hey, OS, are you running a process called 'pixiefail?'" "Nope."
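Why can't software on the machine see it? A toy model, which assumes nothing about how Pixiefail itself works: the operating system only ever sees what the layer beneath it chooses to report. The `Disk` and `CompromisedFirmware` classes and the `os_scan` helper are invented for illustration.

```python
class Disk:
    """Honest storage: reports exactly what it holds."""

    def __init__(self, files):
        self._files = set(files)

    def list_files(self):
        return sorted(self._files)


class CompromisedFirmware:
    """Sits between the OS and the disk and edits the answers."""

    def __init__(self, disk, hidden):
        self._disk = disk
        self._hidden = set(hidden)

    def list_files(self):
        # Pass the real listing through, minus whatever should stay secret.
        return [name for name in self._disk.list_files()
                if name not in self._hidden]


def os_scan(storage, suspicious_name: str) -> bool:
    """The OS (or an antivirus) can only ask the layer below it."""
    return suspicious_name in storage.list_files()


disk = Disk(["notes.txt", "pixiefail", "photo.png"])
honest_view = os_scan(disk, "pixiefail")
lied_to_view = os_scan(CompromisedFirmware(disk, {"pixiefail"}), "pixiefail")
print(honest_view, lied_to_view)
```

The scanner's code is identical in both cases. Only the layer it has to trust has changed, and nothing inside `os_scan` can detect that.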

This is a self-destruct switch that's been compromised by the enemy, and which no one on the bridge can de-activate – by design. It's not the first time this has happened, and it won't be the last.

There are models for helping your computer bust out of the Matrix. Back in 2016, Edward Snowden and bunnie Huang prototyped and published source code and schematics for an "introspection engine":

https://assets.pubpub.org/aacpjrja/AgainstTheLaw-CounteringLawfulAbusesofDigitalSurveillance.pdf

This is a single-board computer that lives in an ultraslim shim that you slide between your iPhone's mainboard and its case, leaving a ribbon cable poking out of the SIM slot. This connects to a case that has its own OLED display. The board has leads that physically contact each of the network interfaces on the phone, conveying any data they transit to the screen so that you can observe the data your phone is sending without having to trust your phone.

(I liked this gadget so much that I included it as a major plot point in my 2020 novel Attack Surface, the third book in the Little Brother series):

https://craphound.com/attacksurface/

We don't have to cede control over our devices in order to secure them. Indeed, we can't ever secure them unless we can control them. Self-destruct switches don't belong on the bridge of your spaceship, and trusted computing modules don't belong in your devices.

I'm Kickstarting the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/17/descartes-delenda-est/#self-destruct-sequence-initiated

Image:

Mike (modified)

https://www.flickr.com/photos/stillwellmike/15676883261/

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic #uefi #owner override #user override #jailbreaking #dmca 1201 #schneiers law #descartes #nub of certainty #self-destruct button #trusted computing #secure enclaves #drm #ngscb #next generation secure computing base #palladium #pixiefail #infosec

577 notes

Text

"Hackers stealing data from a genetic testing company" sounds like a cyberpunk rpg side mission.

211 notes

Text

Sharing an article about the whole Ao3 situation because I work with this journalist and Cybernews shares reputable news: https://cybernews.com/news/ao3-shut-down-extorted-anonymous-sudan/

Excerpt from the article about AnonymousSudan: "While the gang is supposedly an anti-Western pro-Islam hacker collective, the group’s origins and modus operandi strongly point to it being a “Made in Russia” project with solid financial backing that regular hacktivists cannot afford."

Or in other words - AnonymousSudan are neither Anonymous (as in not related to the Anonymous group), nor Sudanese (they work for Russia). Stop being Islamophobic, that's literally what the hate group wants.

118 notes
