#deepfake video scandal

Text

JUST found out about the kajol, katrina kaif and rashmika deepfake vid thingy that’s been circulating and goddamn why can’t people just mind their own business and exist 😭 did anyone ASK you to do that?? I don’t fucking think so. It’s like the hands to yourself policy. If someone doesn’t want you bothering them or misusing them in a harmful way, just don’t gosh. It’s not that hard. Keep to yourself everyone. Don’t misuse the internet like that. If everyone can just coexist on this platform and not harm anyone, that would be lovely.

12 notes


Text

The U.S. Wants to Ban TikTok for the Sins of Every Social Media Company

On Wednesday, the House of Representatives will likely vote to force ByteDance to divest from TikTok, which sets the stage for a possible full ban of the platform in the United States (Update: it did). The move will come after a slow but steady drumbeat from politicians on both sides of the aisle to ban the platform for some combination of potential and real societal harms algorithmically inflicted upon American teens by a Chinese-owned company.

The situation is an untenable mess. A TikTok ban will have the effect of further entrenching and empowering gigantic, monopolistic American social media companies that have nearly all of the same problems that TikTok does. A ban would highlight, again, that people who use mainstream social media platforms run by corporations do not actually own their followers or their audiences, and that any businesses/jobs/livelihoods created on these platforms can be stripped away at any moment by the platforms or, in this case, by the United States government.

ByteDance and TikTok itself have been put into an essentially impossible situation that is perhaps best exemplified by a 60 Minutes clip from 2022 that went viral this weekend, in which Tristan Harris, a big tech whistleblower who has turned the attention he got from the documentary The Social Dilemma into a self-serving career as a guy who talks about how social media is bad, explains that China is exporting the “opium” version of TikTok to American children.

“In [the Chinese] version of TikTok, if you’re under 14 years old, they show you science experiments you can do at home, museum exhibits, patriotism videos, and educational videos,” Harris said. “And they also limit it to only 40 minutes per day. They don’t ship that version of TikTok to the rest of the world. So it’s almost like they recognize TikTok is influencing kids’ development, and they make their domestic version a spinach version of TikTok, while they ship the opium version to the rest of the world.” FCC Commissioner Brendan Carr quote tweeted this and said “In America, TikTok pushes videos to kids that promote self-harm, eating disorders, and suicide.”

Put simply: Every social media platform pushes awful shit to users of all ages. This is not a defense of TikTok, but a simple fact that has made up a huge portion of tech reporting for the last decade. Mere weeks ago, the New York Times published an exposé on underage girls being pushed into “child influencing,” a world which is full of pedophiles. Instagram’s effects on teens have been widely documented by Meta’s own employees, and without really trying we have been able to document the sale of guns and drugs, hacking services, and counterfeit services in ads displayed on the platform. Discord is full of communities used for organizing by Neo Nazis and paramilitaries, criminal hackers, crypto scammers, deepfake peddlers, teens who kidnap each other, etc. Facebook is full of AI-generated bullshit that people think is real, was used by foreign adversaries to attempt to influence an election, was credibly accused of being abused to facilitate a genocide in Myanmar, and has had innumerable scandals over the years. Twitter is full of malware and has essentially gotten rid of all of its rules. YouTube is a place that has been used by ISIS terrorists, white supremacists, mass shooters, and child brainwashers. Telegram was founded by Russians, is now based in the United Arab Emirates, and is full of criminals, hackers, and Russian disinformation. We have reported endlessly that all of these platforms are monitored by governments, militaries, surveillance agencies, and commercial interests around the world using "social listening," "social media monitoring," and OSINT tools.

Meta, Google, and Twitter have all moved resources away from content moderation in recent years, and have laid off huge numbers of employees as Republicans have cried “social media censorship.” As Elon Musk’s Twitter has become more of a cesspool in the absence of good content moderation, Google and Meta have realized that they can keep advertisers as long as their platforms are ever so slightly less toxic than Twitter. I am unaware of any political pushes to ban Instagram, Facebook, YouTube, or Twitter, and efforts to meaningfully regulate them to be less harmful seemingly have no political will. The only actual regulation of these platforms has come from laws passed by conservatives in Florida and Texas, which give the platforms even less ability to moderate their content and which are the subject of a Supreme Court case.

This is just to say that TikTok and the specter of China’s control of it have become a blank canvas on which anyone with a complaint about social media can paint their argument, and a punching bag receiving scrutiny we should also be applying to every other social media giant.

When Uber, Airbnb, DoorDash and Bird ignore local laws or face the specter of bans or regulation, they use push notifications, email, and popups within their apps asking customers to complain to legislators. When these American apps do this, they are simply leveraging their popularity to “mobilize users.” When TikTok does the same, it is Chinese interference in American politics. When American TikTok users use their platform to share their progressive or leftist politics and TikTok’s algorithms allow them to go viral, that’s Chinese interference. When TikTok deletes content that violates its terms of service, that’s Chinese censorship. When Facebook and Google allow advertisers to create psychographic, biographic, and behavioral-based profiles of their users to target ads to them, that’s “personalized advertising.” When TikTok does ads, it’s Chinese spying. When TikTok users see content that promotes suicide, eating disorders, and makes people feel bad about themselves, it’s China brainwashing our children, undermining America, and threatening our existence. When Facebook, Instagram, and YouTube users see the same, it’s inconvenient and unfortunate, but can be solved with a blasé spokesperson statement that these platforms care about safety and will strive to do better.

In the clip above, Harris explains that polls show American children want to be “social media influencers” and that Chinese children want to be “astronauts,” the subtext being that it is like this because bad stuff is not allowed on Douyin, the Chinese version of TikTok. Banning TikTok is not going to change this (and Harris does not mention that China has tons of social media influencers as well). Harris says this with some derision, the subtext being that we should not want our children to grow up to be social media influencers.

This should not need to be explained, but because Harris and 60 Minutes did not explain it: Douyin (the Chinese version of TikTok) is not actually a sterile place that consists only of people doing science experiments and math equations, just as TikTok and all social media in America are not only an unmitigated shithole devoid of intellectual value. But Harris has this idea of Douyin being a safe place for kids because China does not have a free internet. The internet is widely and famously censored by the Chinese government, and ByteDance is complying with Chinese law in China. It is possible to argue (though I would not) that this makes the internet “safer,” and it is possible to argue (though I would not) that a “safer” internet is “better.” If Harris wants Chinese-style censorship of the internet in the United States, then he should argue for that. But in the United States, we have the First Amendment and a host of other regulations that have fostered something resembling an open internet. That open internet allowed for the rise of Facebook, Twitter, Instagram, and YouTube.

This general principle of not censoring the global internet also allowed for the rise of TikTok, which has millions of users in the United States because people like using it. TikTok is not perfect—in fact, I believe lots of the things on TikTok are very bad. Despite what I have just written, I understand that Chinese interference via algorithmic warfare or spying or any other tactic is a possible threat. China has been accused of using accounts on TikTok to spread influence, in the exact same way as the U.S. government has been caught spreading pro-U.S. influence abroad on Facebook and Twitter.

Like I mentioned, I think that this entire situation is actually very complicated, and is in fact a huge mess. I can understand why some people want to ban TikTok, but I am not sure how the government can do so without violating the free speech rights of millions of Americans and setting us on a path where a relatively open, global internet becomes one that is increasingly geographically siloed. I don’t think we should ban a platform because it competed too hard and became popular, especially when the direct beneficiaries of a ban are companies that are doing most of the same apparent algorithmic poisoning of America, just from within America’s borders. I also do not think it is constitutional, ethical, or good for the government to decide to unilaterally cut millions of Americans off from one of the largest social media platforms in the world and to effectively force its users and more importantly the people who make a living on TikTok to use a balkanized internet dominated by American megacorporations.

9 notes


Text

April 10 was a very bad day in the life of celebrity gamer and YouTuber Atrioc (Brandon Ewing). Ewing was broadcasting one of his usual Twitch livestreams when his browser window was accidentally exposed to his audience. During those few moments, viewers were suddenly face-to-face with what appeared to be deepfake porn videos featuring female YouTubers and gamers QTCinderella and Pokimane—colleagues and, to my understanding, Ewing’s friends. Moments later, a quick-witted viewer uploaded a screenshot of the scene to Reddit, and thus the scandal was a fact.

Deepfakes refer broadly to media doctored by AI, commonly to superimpose a person’s face onto that of, say, an actor in a movie or video clip. But sadly, as reported by Vice journalist Samantha Cole, its primary function has been to create porn starring female celebrities, and perhaps more alarmingly, to visualize sexual fantasies of friends or acquaintances. Given its increasing sophistication and availability, anyone with a picture of your face now can basically turn it into a porno. “We are all fucked,” as Cole concisely puts it.

For most people, I believe, it is obvious that Ewing committed some kind of misconduct in consuming the fictive yet nonconsensual pornography of his friends. Indeed, the comments on Reddit, and the strong (justified) reactions from the women whose faces were used in the clips, testify to a deep sense of disgust. This is understandable, yet specifying exactly where the crime lies is a surprisingly difficult undertaking. In fact, the task of doing so brings to the fore a philosophical problem that forces us to reconsider not only porn, but the very nature of human imagination. I call it the pervert’s dilemma.

On the one hand, one may argue that by consuming the material, Ewing was incentivizing its production and dissemination, which, in the end, may harm the reputation and well-being of his fellow female gamers. But I doubt that the verdict in the eyes of the public would have been much softer had he produced the videos by his own hand for personal pleasure. And few people see his failure to close the tab as the main problem. The crime, that is, appears to lie in the very consumption of the deepfakes, not the downstream effects of doing so. Consuming deepfakes is wrong, full stop, irrespective of whether the people “starring” in the clips, or anyone else, find out about it.

At the same time, we are equally certain that sexual fantasies are morally neutral. Indeed, no one (except perhaps some hard-core Catholics) would have blamed Ewing for creating pornographic pictures of QTCinderella in his mind. But what is the difference, really? Both the fantasy and the deepfake are essentially virtual images produced by previous data input, only one exists in one’s head, the other on a screen. True, the latter can more easily be shared, but if the crime lies in the personal consumption, and not the external effects, this should be irrelevant. Hence the pervert’s dilemma: We think sexual fantasies are fine as long as they are only ever generated and contained in a person’s head, and abhorrent the moment they exist in the brain with the aid of somewhat realistic representation—yet we struggle to identify any morally relevant distinction to justify this assessment.

In the long run, it is likely that this will force us to reevaluate our moral attitudes to both deepfakes and sexual fantasies, at least insofar as we want to maintain consistency in our morality. There are two obvious ways in which this could go.

The first is that we simply begin to accept pornographic deepfakes as a normal way of fantasizing about sex, only that we outsource some of the work that used to happen in the brain to a machine. Considering the massive supply of (sometimes stunningly realistic) pornographic deepfakes and the ease with which they can be customized for one’s own preferences (how long before there is a DALL-E for porn?), this may be a plausible outcome. Knowing that people probably use your photos to create fictive porn may assume the same status as knowing that some people probably think of you (or look at your most recent Instagram selfie) when they masturbate—not a huge deal unless they tell it to your face. At the very least, we can imagine the production of deepfakes assuming the same status as drawing a highly realistic picture of one’s sexual fantasy—weird, but not morally abhorrent.

The second, and arguably more interesting, option is that we begin to question the moral neutrality of sexual fantasies altogether. Thinking about sex was, for a long time, considered deeply sinful in Christian Europe, and has continued to be a stigma for some. It was only after the Enlightenment that whatever goes on in a person’s mind became a “private matter” beyond moral evaluation. But this is definitely an exception, historically speaking. And to some extent, we still moralize over people’s fantasies. For instance, several ethicists (and many other people, I reckon) hold that sexual fantasies involving children or brutal violence are morally objectionable.

But deepfakes may give us reason to go even further, to question dirty thoughts as a general category. Since the advent of the internet, we’ve been forming a new attitude to the moral status of our personal data. Indeed, most Westerners today take it for granted that one should be in full control over information pertaining to one’s person. But wouldn’t this, strictly interpreted, also include data stored in other people’s heads? Wouldn’t it grant me a level of control over other people’s imagination? The idea is not as wild as it first appears. Consider the Friends episode “The one with a chick and a duck,” in which Ross teases Rachel by picturing her naked against her will, claiming that it is one of the “uh, rights of the ex-boyfriend, huh?” Rachel repeatedly begs him to stop, but Ross merely responds by closing his eyes saying, “Wait, wait, now there’s 100 of you, and I’m the king.” The joke is portrayed as completely uncontroversial, with added audience laughter and all. But now, some two decades later, doesn’t it leave you with a rather bitter taste in your mouth? Indeed, in the age of information, the moral neutrality of the mind seems to be increasingly under siege. Perhaps, in another 20 years, the thought that I can do whatever I want to whomever I want in my head may strike people as morally disgusting too.

We are probably going to see some of both scenarios. There will be calls to moralize over people’s imagination. And people will probably react with less and less shock when learning about the deepfake phenomenon, even when it happens to themselves. Just compare the media coverage of deepfake porn today with that of two years ago. The (legitimate) moral panic that characterized the initial reports has almost completely vanished, despite the galloping technological development that has taken place in the meanwhile. Yet, we will probably not arrive at any moral consensus regarding deepfakes anytime soon. Indeed, it has taken us thousands of years to learn to live with human imagination, and the arrival of deepfakes puts most of those cultural protocols on their heads.

So, which of the options is preferable from the viewpoint of moral philosophy? There is no simple answer. This is in part because both options make sense, or at least have the potential to make sense (otherwise there wouldn’t be any dilemma to begin with). But it is also due to the very nature of moral judgements. Moral truths cannot be stated once and for all. On the contrary, we need to begin every day by asking them anew.

Think of it like this: We know how many electrons are in a hydrogen atom, and so we never need to ask that question again. Questions like “Who should we be?”, “What is a good human life?”, or “Can we blame people for their fantasies?”, on the other hand, are questions that need to be asked again and again by every generation. This is because moral philosophy is an activity that dies the moment we stop doing it. For our moral lifeworlds to make sense, we must consciously reevaluate them, because this activity is always dependent on the social, technological, and cultural contexts in which it takes place. So, the moment we arrive at a definitive answer to the question of which option is preferable from the standpoint of moral philosophy, moral philosophy ceases to be.

Where does all this put us in relation to Ewing, Pokimane, and QTCinderella? There is no doubt that the feelings of shame and humiliation expressed by the targets of the videos are real. And I personally do not find any reason to question the authenticity of the shame and regret expressed by Ewing. But our moral sensemaking of the situation is a different matter. And we should be open to the fact that, in 20 years, we may think very differently about these things. It all depends on how we continue to build and reevaluate our moral lifeworlds. A good first step is taking a step back and reconsidering what exactly it is we find objectionable about deepfakes.

I think the best place to start is to assess the social context in which deepfakes are used, and compare this to the context around sexual fantasies. Today, it is clear that deepfakes, unlike sexual fantasies, are part of a systemic technological degrading of women that is highly gendered (almost all pornographic deepfakes involve women). And the moral implications of this system are larger than the sum of its parts (the individual acts of consumption). Fantasies, on the other hand, are not gendered—at least we have no reliable evidence of men engaging more with sexual imagination than women do—and while the content of individual fantasies may be misogynist, the category is not so in and of itself. The immoral aspect of Ewing’s actions therefore lies not primarily in the damage it caused to the individuals portrayed, but in the partaking of a technically-supported systemic degrading of women, a system that amounts to something more than the sum of its parts.

While this is the beginning of an answer, it is not the answer. How the technology is used and fitted into our social and cultural protocols will continue to change. What Ewing did wrong cannot be answered once and for all. For tomorrow, we will need to ask again.

5 notes


Note

what do you think about lizzie olsen deepfakes and ai porn? like when they put her face on pics or vids of pornstars to make it look like it’s her?

i hate it it’s so disgusting i really saw one on TIKTOK a few months ago. people who watch deepfake porn of anyone r so fucking deprived and detached from reality it’s honestly just so sick. like how desperate and lonely can u get 😭 it is beyond me how people do not feel embarrassed for that

there was a problem a few days ago where a twitch streamer was caught watching deepfake porn of his FRIENDS, then cried ab it on stream apologizing whilst his supporters said there was nothing wrong with deepfake porn. firstly pasting someone else’s face into a pornstar’s body without their consent is so objectifying and should be illegal and classified under the same category as sexual assault. secondly, and this is more ab that streamer’s situation, but watching deepfake porn of your friends is so fucked up— that man is seriously sick in the head. i honestly wasn’t surprised when men responded to the friends’ videos where they were crying and speaking out about it by saying they were being overdramatic and that women are too sensitive etc etc and that it’s no different from them posting ‘scandalous’ pictures of themselves online. i was more surprised when i actually saw men supporting the victims lol

4 notes


Text

Shocking news! Italian PM Giorgia Meloni got caught up in a huge scandal. A very bad deepfake video of her spread online fast. It showed her in an embarrassing situation. But it was totally fake! Made using smart AI technology. This “Giorgia Meloni Viral Video” showed how dangerous deepfakes can be. They can ruin someone’s privacy and reputation. Even famous leaders aren’t safe from these AI-made fakes. The explicit video went viral with millions of views. It’s a scary example of AI being used to hurt and humiliate people. Meloni and others were really mad about the video. Legal action …

0 notes

Text

Akshay Kumar becomes target of deepfake video; actor plans legal action

Akshay Kumar becomes target of deepfake video. Image Source: IANS News

Mumbai, Feb 2 : In a concerning turn of events, actor Akshay Kumar finds himself in the crosshairs of a deepfake scandal, as a fabricated video has surfaced online, featuring the superstar promoting a game application.

“The actor has never indulged in promotions of any such activity. The source of this video is being looked…


0 notes

Text

SAG-AFTRA on AI Taylor Swift deepfakes, George Carlin special

The Screen Actors Guild – American Federation of Television and Radio Artists (SAG-AFTRA) put out a statement on Jan. 26, 2024, hitting back at two AI scandals in recent weeks: explicit AI deepfakes of musician Taylor Swift circulating on X (formerly Twitter) and the wider internet, and a comedy special YouTube video that impersonates the late comedian George Carlin and was marketed as AI…


0 notes

Video

youtube

"Breaking: Delhi Police Nabs Deepfake Culprit Behind Rashmika Mandanna Scandal!"
"Shorts News: Arrest Made in #Deepfake Case Involving Rashmika Mandanna"
"Legal Action Unleashed: #DelhiPolice Busts Deepfake Creator - Shorts Update!"
"Cybercrime Crackdown: Rashmika Mandanna's Deepfake Mastermind Arrested - Shorts Edition!"
"Quick Update: Delhi Police Reveals Perpetrator in #Deepfake Scandal - Shorts News!"
"Behind Bars: Suspect Linked to Rashmika Mandanna Deepfake Video in Custody - Shorts Brief!"
"Privacy Protection: Delhi Police Takes Down #Deepfake Offender - Shorts Update!"
"Digital Forensics Triumph: Arrest in Rashmika Mandanna Deepfake Case - Shorts Recap!"
"From Screen to Cell: Delhi Police Breaks Deepfake Conspiracy - Shorts News Flash!"
"Justice Served: Perpetrator of Rashmika Mandanna Deepfake Video Apprehended - Shorts Update!"
"Breaking: Delhi Police Nabs Deepfake Culprit Behind #rashmikamandanna

#youtube#DelhiPoliceArrests DeepfakeArrest RashmikaMandanna DigitalForensics CybercrimeBust ShortsNews PrivacyProtection LegalAction JusticeServed So

0 notes

Note

I wholeheartedly agree with your stance on AI "art" being violating and problematic.

However, I do have a problem with the way you stated that even though you know MBB to be an adult, she is still Eleven from ST and a minor in your eyes.

She's 19. She's engaged. Because of her career she is probably more independent than anyone of us was at that age. And I do think that perpetual viewing of actors as 'stuck' as a character they once portrayed is problematic.

This is what happened when people thought "omg Hannah Montana has gone nuts" or "what happened to the sweet Demi Lovato?" Or anything else involving child actors.

What happened? They grew up.

Other than that, your point about AI generated images (I'm not even calling it 'art' anymore) stands. It's creepy and violating, especially because AI is getting 'better' (= more accurate) and I'm honestly afraid that before long we will have full-on deepfake porn videos with actors who never asked for that shit.

That said; it does raise the question with me whether or not a similar stance is warranted against NSFW artwork or smut with the actors rather than portrayed characters as the subject. (I've never been a big fan of RPF, and I don't think I've come across "real person fan art", so I can't really say with certainty if it would make me uncomfortable. But the AI things... They just make my skin crawl.)

You make a valid point!!! Warning long ass post reply below.

The Millie thing I think is more my personal view of her physically. I think I view Millie as still a young child because of her youthful face and the characters she plays. I'm 22. It's still so hard for me to think she's only 2-3 years younger than me. To me she still looks 16. Very young. If she had a sex scene in the next 5-10 years I might still be inclined to turn away. She definitely has accomplished a lot.

I just cannot physically imagine her in a sexual light at this present time. She has a childlike appearance other than when she's dolled up for a magazine shoot with Photoshop and then appears borderline age appropriate.

The same can be said for actor Bella Ramsey who is 20 years old, they have a very young look in appearance and have played many roles depicting them as younger than their actual age. But both of them definitely deserve to be admired and respected for the work they've contributed.

So being told there's ai images with "white sauce" on her face makes my insides churn. Either a very young fan has generated that or someone's computer that might need to be investigated.

I never really knew who Hannah Montana was until wrecking ball (wasn't allowed to watch tv a lot growing up as a kid. And I was always told she was a slut in the tv show which is why I wasn't allowed to watch it -by gross male family members.) and I didn't know who Demi Lovato was AT ALL until the drug scandal- that's how I learnt about her music because they played it on the news. I was probably 13-14 at this time. But I think I understand what you're comparing...I think?

Like how Billie Eilish showed her body off when she was 18 and the internet went crazy, but to be fair I also thought she was in her 20s and was shocked when I found out she was only a little bit younger than me.

I still find myself uncomfortable even sexualising 33 year old Thomas Brodie-Sangster 😵💫 he still looks 13 to me!!!

REGARDLESS AS WE BOTH AGREE, THESE ACTORS AND PERFORMERS DONT DESERVE THIS AI PORN DISRESPECT.

For years people used to Photoshop actors' faces into photos of pornos, I had issues with that.

The difference when it comes to art? Yes I think some people can be disturbing. We have Rule 34 for a reason guys. However the difference is that the art is usually not "realistic." We can tell it's hand drawn whether digital or hardcopy.

AI is luckily easy to spot usually. But it's too close in resemblance to these real people. A lot of porn websites have resorted to using AI, which is why I have a massive issue with this too. AI is two minutes of writing a description and having a computer generate. It's impulsive and dangerous.

Deepfakes, they've already made pornos with Scarlett Johansson, Taylor Swift and Katy Perry's faces I believe. There was a massive scandal when a man used the face of his female best friend in a deepfake porno because he had an "unrequited crush" on her and when she discovered the deepfake video on his computer while he was busy, she left and took him to court over sexual violation and privacy policies. It was HUGE. i hate AI and Deepfakes.

There's even a constant debate on pedophiles using AI and Deepfakes as a means of gaining CP without soliciting CP... (I say guillotine their cock, hands, burn off their clits and spoon out their eyes with a melon ball scooper spoon but hey that's me ✌️ barbaric barbie.)

The difference between NSFW smut fics and AI porn, is:

1. Smut takes effort and time to write. AI writes and steals content for you. There's no creativity.

2. Because it's a written fic, they should have warnings and tags. It should be noted in RPF that the actors are not the same as you write them to be. This is why I changed a lot of my rpf into the characters they play.

3. Fics require imagination. AI is visual. No one can police your genuine imagination.

4. There are actors who have been uncomfy or flushed at the sight of themselves or the character version of them in NSFW situations. Loki pole dancing art and mpreg were shown to Tom Hiddleston, who had a chuckle and many emotions of confusion with disgust and horror. I can imagine many actors would prefer to see an effort in art and literature when it comes to evil things instead of just some half baked computer program that creates lewd and anticlimactic imagery.

.

The only time I have ever excused its use was for the chance to see my dad's face move again because I don't have many videos of him, and as morbid as it is there's a comfort to seeing him smile in ai moveable photo scanning apps. But to many others this would be considered disrespectful to the dead and to be honest I would side with them if it came down to banning ai and Deepfakes forever.

0 notes

Text

How Social Media Marketing Companies Shape Political Narratives

In today’s digital age, the influence of a social media marketing company should not be underestimated, particularly in the realm of politics. These firms offer tailored strategies and a vast array of services to shape public opinion and mould political narratives. The toolset includes, but is not limited to, targeted advertising, curated content, and sentiment analysis.

While traditional forms of campaigning like door-to-door canvassing, public rallies, and television advertisements are still prevalent, the shift towards digital marketing for politicians has accelerated sharply in recent years. The reason? The ubiquity of smartphones and the massive user base of platforms like Facebook, Twitter, Instagram, and YouTube. In these spaces, crafting and propagating a message is both efficient and swift.

However, merely having a presence on these platforms is not enough. With the surge in information, making one’s voice heard amid the cacophony can be challenging. That’s where specialized agencies come into play. Beyond merely presenting a candidate’s vision, they use sophisticated strategies and data-driven insights to drive political advertising that resonates with target audiences, thereby shaping narratives in favour of their clients.

A Deeper Dive: Crafting the Narrative

The first step in shaping a political narrative is understanding the target audience. Social media marketing companies employ advanced analytics tools to segment populations based on demographics, location, interests, and even behavioural patterns. Once these segments are identified, content is tailor-made to appeal to each group’s sentiments, concerns, and aspirations.

Videos, memes, articles, and tweets are created and disseminated, presenting the politician or political party in a favourable light. These messages might emphasize accomplishments, elucidate policies, or even challenge competitors. The aim is to generate engagement: likes, shares, and comments, all of which amplify reach.

Monitoring and Adapting in Real-time

Unlike traditional forms of advertising, where feedback loops can be slow, social media allows for real-time monitoring. This means that companies can assess the efficacy of a campaign instantly and make modifications if necessary. A message not resonating with the masses? It can be tweaked or replaced swiftly.

This real-time feedback also provides an opportunity for crisis management. In the fast-paced world of social media, misinformation or scandals can spread rapidly. By keeping a finger on the pulse, marketing agencies can quickly address these issues, either by countering them with facts or crafting damage control narratives.

The Ethical Dimension

Of course, with such power comes responsibility. There’s a fine line between persuasive messaging and manipulation. While these companies are hired to present politicians in the best possible light, there’s a societal expectation that this be done ethically.

The use of misinformation, deepfakes, or divisive content to stir emotions is a growing concern. Platforms themselves are taking steps to curtail such practices, but the onus is also on marketing agencies to uphold ethical standards, ensuring that the democratic process remains transparent and untainted.

The Future of Political Narratives on Social Media

As technology advances, so will the tools and techniques employed by social media marketing companies. Augmented reality (AR), virtual reality (VR), and AI-driven content creation are just a few of the innovations on the horizon.

While the mediums and methods might evolve, the core principle remains the same: to connect with the audience and shape perceptions. The challenge for both politicians and marketing agencies will be to harness these tools in ways that maintain the integrity of political discourse.

Micro-Targeting: The Art of Precision

A significant advantage of social media platforms is the ability to micro-target audiences. Gone are the days when politicians had to appeal to broad demographics with a generic message. With the depth of data available, a social media marketing company can tailor messages to very specific segments of the population. This precision means a policy regarding local infrastructure can target a city’s residents, while a proposal about educational reforms can be directed at parents of school-going children in specific regions.

The Role of Influencers in Political Narratives

Another emerging trend in digital marketing for politicians is the collaboration with social media influencers. With their massive and dedicated following, these individuals can sway public opinion significantly. By partnering with influencers whose brand aligns with their political vision, politicians can tap into a ready audience, reaching segments of the population that traditional campaigns might miss.

It’s crucial, however, for such collaborations to be transparent. Hidden political endorsements can erode trust and backfire if the audience feels manipulated.

Balancing Authenticity with Strategy

While strategic narrative crafting is essential, authenticity remains a core component of any successful political campaign. The public is increasingly discerning about manufactured images and messages. They crave genuine interactions and authentic glimpses into a politician’s character and beliefs.

Live streaming sessions, Q&A rounds, and candid behind-the-scenes content can humanize politicians, making them more relatable to the average voter. A blend of strategic messaging with authentic interactions ensures a holistic digital presence, strengthening the bond between politicians and their constituents.

The Global Impact

It’s essential to recognize that while political advertising on social media might be region-specific, its impact can be global. In an interconnected world, a political event in one country can ripple across borders. Social media marketing companies, therefore, must also be aware of global perceptions and narratives, especially for politicians on the international stage.

The Ever-Evolving Digital Landscape

The confluence of politics and social media is dynamic, with both entities influencing the other continually. As we move forward, the role of social media marketing companies in shaping political narratives will only become more pronounced. The key will be to navigate this digital landscape ethically and responsibly, ensuring that the essence of democracy, the voice of the people, remains loud, clear, and uncorrupted.

In brief, social media has undeniably transformed the political landscape, amplifying the importance of narrative crafting in the digital realm. Armed with advanced tools and insights, social media marketing companies play a pivotal role in this transformation. As with all power structures, balance is needed to ensure that this influence is wielded ethically. As citizens and netizens, staying informed and critical of the information we consume is vital in this age of digital politics.

Reference link (originally posted): https://qrius.com/how-social-media-marketing-companies-shape-political-narratives/

0 notes

Text

Government may bring new rules on deepfakes; Ashwini Vaishnaw holds meeting with social media companies

In recent times, the menace of Deepfake Technology has escalated, impacting not only Bollywood stars but also leaving big businessmen and cricketers concerned about their digital identities. Responding to this growing challenge, the central government is poised to take a substantial stride in addressing the issue.

Union Communications and Information Technology Minister Ashwini Vaishnaw convened a meeting with representatives from social media platforms on Thursday to deliberate on the deepfake problem. Calling deepfakes a new threat to democracy, he discussed the need to change regulations to combat them effectively.

Ashwini Vaishnaw revealed that the companies involved have committed to taking decisive action, including reinforcing mechanisms to detect and handle deepfakes while also raising public awareness of the matter. Furthermore, he announced that new rules specifically tailored to counter deepfakes will be introduced in the near future. These regulations could take the form of either amendments to existing rules or entirely new ones.

Addressing the gravity of the situation, Ashwini Vaishnaw emphasized that deepfake technology poses a significant threat to democratic processes. A range of issues was discussed during the meeting with social media representatives, setting the stage for a follow-up meeting scheduled for the first week of December.

Understanding Deepfake Technology

Deepfake technology, powered by artificial intelligence (AI), enables the manipulation or alteration of images, video, and audio. It uses AI to create deceptive content, for example by superimposing one person's face onto another's photo or video. Essentially, deepfake technology facilitates the production of seemingly authentic videos that are, in fact, entirely fabricated.

Identifying Deepfakes

Distinguishing deepfakes from genuine content requires a discerning eye. Observing subtle inconsistencies, such as irregular movements of hands and legs in a video, can often reveal the artificial nature of the content. Some platforms have taken proactive measures by incorporating watermarks or disclaimers on AI-generated content to notify viewers of its artificial origin. Individuals are advised to scrutinize such marks attentively to discern the authenticity of multimedia content.

Safeguarding Against Deepfakes

To fortify defenses against the rising tide of deepfakes, individuals are encouraged to implement protective measures. This includes adjusting social media privacy settings to restrict access and using robust, unique passwords for heightened security. Enabling two-factor authentication adds an additional layer of protection, significantly reducing the risk of unauthorized access to personal information.

As the government gears up to confront the challenges posed by deepfake technology, it becomes imperative for individuals to remain vigilant and adopt proactive measures to safeguard their digital identities. The collaboration between the government and social media platforms marks a crucial step toward curbing the proliferation of deepfakes and ensuring the integrity of online information. The impending rules and regulations are anticipated to provide a comprehensive framework for addressing the multifaceted threats posed by deepfake technology in the digital age.

Source: https://www.the420.in/deepfake-centre-may-introduce-new-regulations-ashwini-vaishnaw-meeting-social-media-firms/

0 notes

Text

Months after scandal, Atrioc celebrates progress combating deepfake content

Atrioc, a Twitch streamer who was caught in January with lewd deepfakes of female content creators open on his computer, gave an update on his recent activities today. Although Atrioc recently returned to streaming, he hasn't posted anything to his primary YouTube channel since the initial incident, which sparked fervent reactions from many other broadcasters. Shortly afterwards, Atrioc apologised profusely, and he has since invested time and resources in reducing the prevalence and accessibility of deepfake content online.

In a YouTube video update, Atrioc announced that over the last several months, he has spent about $120,000 to get more than 200,000 infringing videos down, just over double his original goal. He did so using a company called Ceartas, which utilizes AI to automatically issue DMCA takedown notices, both increasing the number of videos that can be taken down at one time and decreasing the price of doing so. Atrioc said that Ceartas estimates that using traditional legal resources, he would have had to spend nearly $9 million to take down the same number of videos.

https://www.youtube.com/watch?v=U3mFwr-MbEw

Atrioc also said that he and others managed to get “massive” subreddits taken down that he said were targeting Twitch streamers. Additionally, he shared that a woman named Genevieve has successfully blocked the payment provider for many of these deepfake content creators, creating another roadblock in reaping the rewards for making such material.

The number of delisted videos should continue to grow; Atrioc revealed that more than 290,000 videos had been reported through Ceartas. Any more than that, though, will likely have to be pursued by others as he said that his initial $100,000 “budget” has been completely expended.

Atrioc said he and his team of editors will return to posting regular YouTube video content very soon.

0 notes

Text

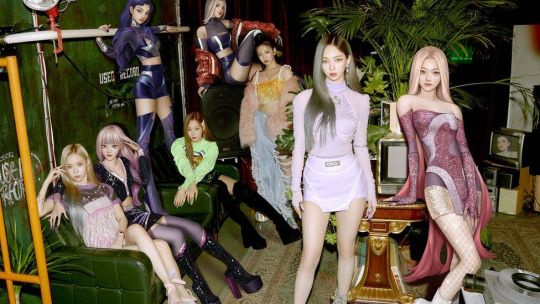

K-pop: The rise of the virtual girl bands

Since releasing their debut single I'm Real in 2021, K-pop girl group Eternity have racked up millions of views online.

They sing, dance and interact with their fans just like any other band.

In fact, there's only one big difference between them and any other pop group you might know - all 11 members are virtual characters.

Non-humans, hyper-real avatars made with artificial intelligence.

"The business we are making with Eternity is a new business. I think it's a new genre," says Park Jieun, the woman behind Eternity.

"The advantage of having virtual artists is that, while K-pop stars often struggle with physical limitations, or even mental distress because they are human beings, virtual artists can be free from these."

The cultural tidal wave of Korean pop has become a multibillion-dollar force over the last decade. With its catchy tunes, high-tech production and slinky dance routines, K-pop has smashed into the global mainstream, becoming one of South Korea's most lucrative and influential exports.

But the top K-pop stars, their legions of loyal fans, and the business-owners looking to capitalise on their success are all looking to the future.

With the explosion of artificial intelligence (AI), deepfake and avatar technologies, these pop idols are taking their fame into a whole new dimension.

Image source: YG Entertainment

K-pop superstars Blackpink are also using the metaverse to reach a wider audience

The virtual faces of Eternity's members were created by deep learning tech company Pulse9. Park Jieun is the organisation's CEO.

Initially the company generated 101 fantasy faces, dividing them into four categories according to their charms: cute, sexy, innocent and intelligent.

Fans were asked to vote on their favourites. In-house designers then set to work animating the winning characters according to the preferences of the fans.

For live chats, videos and online fan meets, the avatar faces can be projected onto anonymous singers, actors and dancers, contracted in by Pulse9.

The technology acts like a deepfake filter, bringing the characters to life.

"Virtual characters can be perfect, but they can also be more human than humans," Park Jieun tells BBC 100 Women.

As deepfake technology moves into the mainstream, there have been concerns that it could be used to manipulate people's images without permission or generate dangerous misinformation.

Women have reported having their faces put into pornographic films, while deepfakes of Russian President Vladimir Putin and President Volodymyr Zelensky of Ukraine have been shared on social media sites.

"I'm always trying to make it clear that these are fictional characters," says the CEO.

She says Pulse9 uses the European Union's draft ethical AI guidelines when making their avatars.

Image source: Pulse9

Virtual characters, like this one from Eternity, can be 'more human than humans,' says tech businesswoman Park Jieun

And Park Jieun sees other advantages in virtual bands where each avatar can be controlled by their creators.

"The scandal created by real human K-pop stars can be entertaining, but it's also a risk to the business," says the CEO.

She believes she can put these new technologies to good use and minimise risks for overstressed and pressurised K-pop artists trying to keep up with the demands of the industry.

Over the past years, K-pop made headlines for various social issues - from dating gossip to online trolling, fat-shaming and extreme dieting of band members.

The genre has also spurred a conversation about mental health and cyberbullying in South Korea, after the tragic death of young K-pop stars, which many believe had a significant impact on their following.

In 2019, singer and actress Sulli was found dead in her apartment, aged 25. She had taken a break from the entertainment industry, after reportedly "suffering physically and mentally from malicious and untrue rumours spreading about her".

Her close friend Goo Hara, another bright K-pop artist, was also found dead at her home in Seoul soon after. Before taking her own life, Goo was fighting for justice after secretly being filmed by a boyfriend, and was being viciously abused online for that.

Threat or aid?

For the human stars working around the clock to train, perform and interact with their fans, having some avatar assistance in the virtual world could provide some relief.

Han Yewon, 19, is the lead vocalist of newly launched girl group mimiirose, managed by YES IM Entertainment in South Korea.

She spent almost four years as a trainee, waiting for her opportunity to be thrust into the limelight, and was one of many candidates who had to undertake monthly evaluations. Those who didn't show sufficient progress were let go.

"I worried a lot about not being able to debut," says Yewon.

Han Yewon is a human lead vocalist of K-pop girl group mimiirose

Becoming a K-pop star doesn't happen overnight. And with new groups making their debut every year, it can be hard to stand out.

"I went to work around ten in the morning and did my vocal warm-ups for an hour. After that, I sang for two or three hours, I danced for three to four hours and worked out for another two hours", says the vocalist.

"We practised for more than 12 hours in total. But if you aren't good enough, you end up staying longer."

Yet the prospect of virtual avatars flooding the industry worries Yewon, who says that fans appreciate her authenticity.

"Because technology has improved so much lately, I'm afraid that virtual characters will take the place of human idols," she says.

Image source: Pulse9

Band members' faces are created using AI technology

Other K-pop groups, however, have been quick to adopt new avatar technologies - and the business is forecasted to grow steadily.

The digital human and avatar market size is estimated to reach $527.58bn (£429bn) globally by 2030, according to projections by market consulting company Emergen Research.

At least four of K-pop's biggest entertainment companies are investing heavily in virtual elements for their stars, and five of the top-earning K-pop groups of 2022 are getting in on the trend.

Using virtual copies of themselves allows them to reach fans across time zones and language barriers - in ways that flesh-and-blood artists would never be able to do.

Girl band aespa, for instance, consists of four human singers and dancers (Karina, Winter, Giselle and Ningning) and their four virtual counterparts - known as ae-Karina, ae-Winter, ae-Giselle and ae-Ningning. The avatars can explore virtual worlds with the fans and be used across multiple platforms.

Image source: SM Entertainment

Girl band aespa has four human members and four virtual avatars

Chart-topping girl band Blackpink, meanwhile, made history with the help of their virtual twins, winning the first-ever MTV award for Best Metaverse Performance in 2022.

More than 15 million people from around the world tuned in to popular online gaming platform PUBGM to watch the group's avatars perform in real time.

During the Covid-19 pandemic Moon Sua and her K-pop group Billlie had to cancel their live performances and fan meets. Instead, the band's management company created virtual copies of band members, to throw a party for fans in the virtual world.

"Since it was our first time doing it, we were a bit clumsy," says Sua.

Image source: Mystic Story

All-human band Billlie took advantage of the metaverse to communicate with fans during the pandemic

"But as time went by, we got used to it, talking with the fans while adapting to the virtual world. We had such a good time."

Moon Sua was impressed by how real the group's avatars looked, but says she still prefers to meet with their followers in person.

"I don't think it's something threatening. Maybe we can learn skills from watching them? I don't think they are a threat that can replace us," says the band's main rapper.

THE RISE OF THE VIRTUAL K-POP PRINCESS

With the rise of K-pop music globally, there has also been a growing phenomenon of hyper-realistic avatars in girl bands. BBC 100 Women takes a look at the impact of these flawless fantasy artists.

But there are also some concerns in the wider industry about ethical and copyright issues that avatar technologies can present.

"There's a lot of unknowns when it comes to artists in the metaverse, virtual versions, icons of themselves, whatever it might be," Jeff Benjamin, Billboard's K-pop columnist, tells BBC 100 Women.

"It might be the fact that the artists themselves might not be in control of their image and that can create an exploitative situation."

'Too soon to know'

For fans like Lee Jisoo, 19, who studies at an engineering college, K-pop has been a welcome distraction during times of stress. She has been a dedicated Billlie fan since the group launched in 2019.

"Their love for their fans is amazing. You cannot help but love them more," says Jisoo.

Jisoo collects fan albums and merchandise, while also interacting with the band online and in the virtual world.

"I feel emotions through Billlie that I wouldn't have felt if I didn't like them," she says.

"And I'm fangirling even more because I want to give back those feelings to Billlie. I think this is a positive thing for me."

Image source: SK Telecom

Billlie threw a party for their fans in the virtual world

But the virtual world can also be an unwelcoming space for K-pop stars and fans alike, with regulations to prevent cyberbullying or abuse lacking or rarely being enforced. The industry has been rocked by online bullying and smear campaigns waged against successful stars.

"I get more stressed out when I see mean comments on Billlie online. Because it's also an insult to the things I like, so I get stressed out and heartbroken," says Jisoo.

Child and adolescent psychiatrist Jeong Yu Kim, who works in Seoul, says it's too soon to know how virtual technology and the rise of AI characters will affect young people.

"I see the real problem is that we're not seeing each other in an authentic way," Jeong Yu says.

"In virtual worlds, we could be more free and do things that you can't do outside, you can be someone else," explained Jeong Yu. "This K-pop industry is really responsive to what the public wants, and they would want their artists to fulfil that."

Jisoo has been a dedicated Billlie fan since the group launched in 2019

"Just like any entertainment industry, there are so many pressures," says Jeff Benjamin.

"The artists are really expected to always show a good image, they're supposed to be that shining example for their fans."

But this is changing, he says, and there has been an industry-wide shift taking place in order to better serve the mental health needs of the stars and reduce the intensive workload.

"The artists themselves are also opening up about what's going on with their mental health, and that's actually forging a deeper connection with those fans."

In the fast-changing K-pop industry, it might be too soon to say whether virtual idols are a short-term fad or the future of the music industry.

But for now, for fans like Jisoo the choice of who to follow is an easy one.

"Honestly, if someone asks me, 'Do you want to watch Billlie on the metaverse for 100 minutes or in real life for ten minutes?', I'll choose to see Billlie for ten minutes in real life."

She believes "people who like real idols and people who like virtual idols are completely different" - and for many like her, it would be "hard" to fall for the avatars at the expense of human K-pop stars.

1 note

Text

Deepfakes scare the fuck outta me. I've never seen a deepfake meme video without thinking about how They are going to use this to cover up whichever scandal they're embroiled in. I can just imagine people saying "no that's not me in those videos with Epstein, those are deepfakes" 😨

3 notes

Text

Akshay Kumar becomes target of deepfake video

Image source: IANS News

Mumbai, Feb 2: In a concerning turn of events, actor Akshay Kumar finds himself in the crosshairs of a deepfake scandal, as a fabricated video has surfaced online, featuring the superstar promoting a game application.

“The actor has never indulged in promotions of any such activity. The source of this video is being looked…

0 notes

Text

"Since 2016, the special counsel’s investigation and two Senate Intelligence Committee reports have spelled out in great detail that Russian disinformation warfare against the 2016 election was extraordinarily concerted, insidious, broad in scope and deliberately crafted to divide the country along racial and social lines. Numerous top intelligence officials have warned that Russia and other outside actors will strike again.

"The entire Ukraine scandal, for which [t]rump has been impeached, is largely about disinformation. [t]rump extorted Ukraine to get it to announce public statements that would smear Biden with disinformation and help validate conspiracy theories that Ukraine, not Russia, interfered in 2016.

"In effect, [t]rump was trying to pressure a foreign power to help manufacture more disinformation to help mislead U.S. voters about a domestic political opponent and absolve Russia of its original disinformation warfare campaign against this country.

..."[t]rump has retweeted accounts from the far-right conspiracy theorist QAnon. What’s more, [t]rump and his prominent supporters have played an active role in spreading disinformation against Democrats.

..."All this is a sign of what the Democratic nominee could face. It’s no wonder that some Democrats are worried we might even see 'deepfake' media manipulations."

This is why this year, prior to November, is not the time to "bring this country together." We need resilient tribalism.

We need to reach additional voters, not as centrists willing to give way to Republicons, but as people who have come to understand that nothing less than trump can disrupt the hardened agendas of Republicons, and trump makes them more extreme.

People may be dissatisfied with politics and with both political parties. People may not like some Democrats, or may disapprove of some Democratic track records. But the Democratic Party remains encouraging of and highly responsive to the voting democracy, whereas Republicons are set in their agendas, have spent the last decades evolving them toward immovable permanence, and are hostile to democracy.

For anyone who is not happy with where this country is headed under trump, or who hesitates to see the country fully committed to trump's regime with no going back, the only hope is to vote, and vote Blue, regardless of what messaging is passing around.

If there is a legitimate problem, Democrats will insist it be corrected. Republicons, obviously, will adopt it and worship it.

Democrats are responsive to voters.

Republicon voters are responsive to whatever the leader or agenda of the Party is. Their obedience makes this dangerous when Republicon policies are increasingly extremist and unpopular among all Americans.

Republicons have cheated and manipulated themselves into disproportionate power over the last half century and more, and they have held too much influence over the country. Favoring business and companies after World War II, for example, was smart as an initial measure to help the country recover post-war. Continuing to favor corporations increasingly at every level, while tearing down workers and election integrity, has helped no one except the top 1%, who are functionally Republicon clients.

We should give democracy and Democrats a chance before giving them up.

26 notes
