#large dataset

Text

Graeme Callister is a historian of the Napoleonic & Revolutionary era, and he says that Napoleon was above average height for the infantry, and average among the grenadiers.

#pretty interesting#large dataset#not that I personally care#I don’t judge people by their height#I like him either way#But it does clear things up#napoleon#napoleonic#napoleonic era#napoleon bonaparte#first french empire#french empire#Napoleon’s height#Napoleon short myth

81 notes

·

View notes

Text

Well in the end it comes down to recognizing when you can’t have a good faith conversation. I can understand the arguments being made and where it comes from but I fundamentally disagree with some of the foundations of these beliefs and will not budge. Nuance necessitates some alignment, I think.

#my ramblings#at this moment I don’t think you can separate the conversation of ai as a tool from the CURRENT ONGOING ethical issues#this isn’t theoretical it is right now for real being used in a specific way#if you’re curating your own datasets (??) for training (???) obviously there are ways to address it#but the major tools being used#are largely unethical full stop#and go beyond ‘unethical but you kind of can accept it and it’s necessary for day-to-day life’#in my opinion at least#there are ai tools that can be used without exploiting artists#like yknow filters or some of those color tools or van gogh generator#van gogh’s dead so it’s not really exploitation of his art unless it’s like. I dunno. sold as if it were a commission#instead of an ai generated product#plus I feel like some of the arguments I’ve read frame things in kinda weird way#like artists are gatekeeping art rather than developing their skills#and there are unproductive ways to frame arguments that raise concerns about ai#but making it about the argument rather than the tool is missing the forest for the trees#well yknow how it is#I simply won’t see those takes anymore

9 notes

·

View notes

Text

#this is absolutely important in its own right but i think its very useful to remind people just how language based ML is#it doesnt 'know' anything its not 'thinking' its literally copying arrangements of characters it's been trained on#it can do this to a relatively complex degree with a large enough dataset#but it doesnt like 'know' what countries are in africa its copying text#which is why it constantly hallucinates information lol

3 notes

·

View notes

Text

researchers in [redacted field] will publish papers on how a major problem in [subfield] is that there's little-to-no available data and thus they've spent a lot of time collecting a new dataset (and look! these are the cool features and annotations our dataset has! and these are all the tasks we've used it for! isn't this cool!) and then they will. simply. not release the dataset for [insert low-resource scenario here] that they just went on and on about

#frankly it's wild that people have just been getting away with publishing papers about data without sharing the data as well#(and similarly for methods and code)#(the field at large is getting a lot better at this all at least)#special shoutout to the authors of papers who then secretly upload the datasets (and sharing the data is of course great! but why would you#not indicate so in the very paper where you wrote an entire introduction about how hard it is to find resources for [x])#(you would think people would be motivated to release their data so others can use them and then cite the authors. but apparently not.)

5 notes

·

View notes

Text

I knew someone whose strat for avoiding theft was to only post wips and blurry zoomed in shots, and honestly what a 5head move.

#it's too late for artists now because everything's out on the internet already#and any kind of large commercial facing usage of their work would inevitably become part of a dataset#it's just a shame#i wonder how many people have stopped putting stuff online as a result

3 notes

·

View notes

Text

The Complexities of AI-Human Collaboration

Introduction

In recent years, artificial intelligence (AI) has been revolutionizing many facets of our lives, from improving our ability to make decisions to automating repetitive jobs. The cooperation of AI systems and people is one of the most important advances in this sector. Despite all of its potential, this alliance is not without its difficulties and complexity. The complexities of AI-human collaboration will be discussed in this blog post, along with its advantages, disadvantages, ethical implications, and prospects for peaceful cohabitation with machine intelligence.

I. The Potential for AI and Human Cooperation

1.1 Improved Judgment-Making

AI systems can process and analyze data faster and on a larger scale than humans can because of their sophisticated algorithms and large datasets. They can offer priceless insights in group settings, assisting others in making better judgments. This is especially true in industries like healthcare, where AI can help physicians diagnose more difficult-to-treat illnesses by examining patient information and medical imaging.

1.2 Efficiency and Automation

Automation powered by AI has the potential to optimize workflows in a variety of sectors, lowering labor costs and boosting output. For instance, in manufacturing, humans can concentrate on more sophisticated and creative aspects of the work while robots and AI-powered machinery perform repetitive and labor-intensive jobs with precision.

1.3 Customized Activities

Many internet services now come with AI-driven customization as standard functionality, from customized marketing campaigns to streaming platform recommendation algorithms. Businesses may increase user pleasure and engagement by working with AI to provide highly tailored experiences for their customers.

II. The Difficulties of AI-Human Coordination

2.1 Moral Conundrums

AI creates ethical concerns about privacy, data security, and justice as technology gets more and more ingrained in our daily lives. Ethical standards must be followed by collaborative AI systems to guarantee responsible data utilization, non-discrimination, and transparency. The difficulties in upholding moral AI-human cooperation are demonstrated by the Cambridge Analytica controversy and the ongoing discussions about algorithmic bias and data privacy.

2.2 Loss of Employment

There has been a lot of talk about the threat of automation leading to job displacement. Although AI can replace repetitive activities, it also begs the question of what a human's place in the workplace is. Businesses must carefully weigh the advantages of efficiency brought about by AI against the social and economic ramifications of job displacement.

2.3 Diminished Contextual Awareness

Despite their strength, AI systems frequently fail to comprehend the larger context of human relationships and emotions. This restriction may cause miscommunications, erroneous interpretations, and even harmful choices, particularly in delicate or emotional situations such as healthcare or customer service.

2.4 Accountability and Trust

For AI systems to be successfully integrated into a variety of disciplines, trust is essential. Establishing and preserving trust in AI calls for openness, responsibility, and resolving the possibility of biases and mistakes. Trust-related issues may make it more difficult for AI and people to work together seamlessly.

III. AI-Human Collaboration in Real-world Locations

3.1 Medical Care

Healthcare practitioners have benefited from the usage of AI-powered diagnostic systems, such as IBM's Watson, to help with disease diagnosis and therapy recommendations. AI and medical professionals working together may result in quicker and more precise diagnosis and treatment—possibly saving lives.

3.2 Self-Driving Cars

AI-human cooperation is essential to the development of self-driving cars. The AI system drives, but humans are needed for supervision, making decisions in difficult circumstances, and handling unforeseen circumstances. The goal of this collaboration is to increase traffic safety and lessen accidents.

3.3 Client Assistance

Chatbots and virtual assistants are widely used by organizations to respond to standard client inquiries. Artificial intelligence (AI) can effectively respond to routine inquiries, but human agents are on hand to handle trickier problems and add a personal touch, resulting in a flawless customer care experience.

3.4 Producing Content

AI is being used to create content, including music compositions, news articles, and even artwork. AI systems work with journalists and artists to discover new avenues for creativity. However, questions remain regarding the validity and uniqueness of information created by AI.

IV. Getting Past the Difficulties

4.1 Development of Ethics in AI

It is imperative that organizations and developers give ethical AI development top priority by integrating values like accountability, transparency, justice, and data privacy into their operations. Strong legal frameworks, moral standards, and continuous supervision can help achieve this.

4.2 Training using Human-AI

Promoting effective collaboration requires educating people about AI and its capabilities. Users can operate more productively with AI technologies by understanding the advantages and disadvantages of these systems with the aid of training programs.

4.3 Combo Positions

Fears of job displacement can be reduced by designing employment positions that combine human and AI activities. By utilizing AI's potential while retaining human supervision and knowledge, this strategy creates a hybrid workforce that benefits from the best aspects of both approaches.

4.4 Creating Collaborative Designs

AI-human collaboration should be considered while designing user interfaces and systems. This entails making certain that AI systems are simple to operate, offer understandable feedback, and blend in with current workflows.

V. AI-Human Collaboration's Future

5.1 Intelligent Augmentation

The idea of enhanced intelligence, in which AI systems complement human abilities rather than replace them, holds the key to the future of AI-human collaboration. AI will become a crucial tool to unlock human potential across a range of domains as it develops.

5.2 AI as an Adjunct Complement

AI will increasingly function as a human providing support, insight, and automating repetitive chores. This collaboration could increase productivity and efficiency in a variety of fields.

5.3 Frameworks for Ethics and Regulations

The establishment of moral and legal frameworks that uphold user rights and promote responsible AI use will be necessary for the growth of AI-human collaboration. These frameworks will aid in addressing the issues of algorithmic bias, accountability, and data privacy.

5.4 Ongoing Education

To properly interact, humans and AI systems will both need to constantly adapt and learn new things. While AI systems will need constant training to increase their comprehension of human context and emotions, humans will need to stay informed about AI capabilities and limitations.

In summary

There is no denying the complexity of AI-human collaboration; it raises issues with ethics, potential job displacement, and comprehension and trust. But by working together, AI and humans can create tailored experiences, increased decision-making, and automation, which makes this an interesting direction for the future. We can create the conditions for peaceful coexistence of human and machine intelligence by tackling these difficulties through ethical growth, training, and hybrid employment roles. This will open up a world of opportunities that will benefit people individually as well as society at large. Future AI-human cooperation has the potential to be a revolutionary force that ushers in a period of expanded human capabilities and augmented intelligence.

If all of my readers want to know more about Artificial intelligence at the current time please read This book to enhance your knowladge: https://amzn.to/3Sr5Tbo

#Pleasure and Engagement#Algorithmic Bias and Data Privacy.#AI-powered machinery#AI systems and people#AI-human collaboration#Sophisticated Algorithms and Large Datasets#Cambridge Analytica controversy#artificial intelligence#relationships#music#mindfulness#personal development#SEO#SMO#Digital Marketing

0 notes

Text

There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence like Data from star trek, or the terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): is an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive, and instead the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most technologically people call AI)

Language model: (LM or LLM) is a probabilistic model of a natural language that can generate probabilities of a series of words, based on text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): is a class of machine learning framework and a prominent framework for approaching generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion Models: Models that generate the probability distribution of a given dataset. In image generation, a neural network is trained to denoise images with added gaussian noise by learning to remove the noise. After the training is complete, it can then be used for image generation by starting with a random noise image and denoise that. (This is the more common technology behind AI images, including Dall-E and Stable Diffusion. I added this one to the post after as it was brought to my attention it is now more common than GANs.)

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take it's power away and let people see it for what it really is.

12K notes

·

View notes

Link

#Generative ai services#generative ai data#generative ai solutions#Text to Speech Services#large text dataset

0 notes

Text

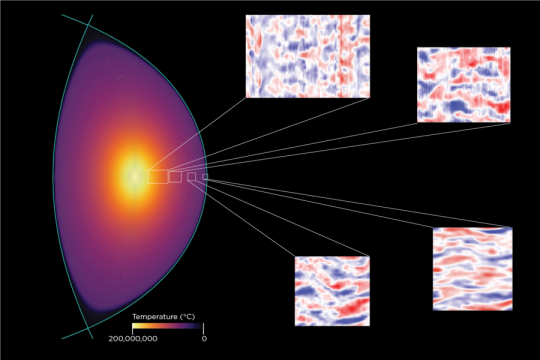

Houston Texas Appliance Parts: GPU-Based Analytics Platform Interprets Large Datasets

Houston Texas Appliance Parts

GPU-Based Analytics Platform Interprets Large Datasets

by Houston Texas Appliance Parts on Tuesday 14 February 2023 03:21 PM UTC-05

SQream Technologies has created a relational database management system that uses graphics processing units (GPUs) to perform big data analytics by means of structured query language (SQL). SQream was founded in 2010 by CEO Ami Gal and CTO and VP of R&D Razi Shoshani and is headquartered in Tel Aviv, Israel. The company joined the Google Cloud Partner Advantage program as a build partner via its no-code ETL and analytics platform, Panoply.

By using the computational power of GPUs, SQream's analytics platform can ingest, transform and query very large datasets on an hourly, daily or yearly basis. This platform enables SQream's customers to get complex insights out of their very large datasets.

Ami Gal, CEO and co-founder of SQream

"What we are doing is enabling organizations to reduce the size of their local data center by using fewer servers," Gal told EE Times. "With our software, the customer can use a couple of machines with a few GPUs each instead of a large number of machines and do the same job, achieving the same results."

According to SQream, the analytics platform can ingest up to 1,000× more data than conventional data analytics systems, doing it 10× to 50× faster, at 10% of the cost. Additionally, this is done with 10% of carbon consumption, because if it had been done using other powerful techniques based on conventional CPUs as opposed to GPUs, it would have needed many more computing nodes and would have consumed more carbon for doing the same workload.

SQreamDB

SQream's flagship product is SQreamDB, a SQL database that allows customers to execute complex analytics on a petabyte scale of data (up to 100 PB), gaining time-sensitive business insights faster and cheaper than from competitors' solutions.

As shown in Figure 1, the analytics platform can be deployed in the following ways:

Query engine: This step performs the analysis of data from any source (either internal or external) and in any format, on top of existing analytical and storage solutions. Data to be analyzed doesn't need to be duplicated.

Data preparation: Raw data is transformed through denormalization, pre-aggregation, feature generation, cleaning and BI processes. After that, it is ready to be processed by machine-learning, BI and AI algorithms.

Data warehouse: In this step, data is stored and managed on an enterprise scale. Decision-makers, business analysts, data engineers and data scientists can analyze this data and gain valuable insights from BI, SQL clients and other analytics apps.

Figure 1: SQream's analytics platform is based on three main deployments: query engine, data preparation and data warehouse. (Source: SQream Technologies)

Due to its modest hardware requirements and use of compression, SQream addresses the petabyte-scale analytics market, helping companies to save money and reduce carbon emissions. SQream did a benchmark with the help of the GreenBook guide statistics and found out that running standard analytics on 300 terabytes of data saved 90% of carbon emissions.

By taking advantage of the computational power and parallelism offered by GPUs, the software enables SQream to use much fewer resources in the data center to view and analyze the data.

"Instead of having six racks of servers, we can use only two servers to do the same job, and this allows our customers to save resources on the cloud," Gal said.

According to SQream, there are quite a few semiconductor manufacturing companies that have several IoT sensors in production. In general, the IoT is a use case that creates a lot of data and, consequently, a lot of derived analytics at scale.

Another factor that contributes to creating massive datasets is the fact that a lot of data analytics run in data centers use machine-learning algorithms: To achieve a high level of accuracy, these algorithms have to be run on big datasets. For running the algorithms on much bigger datasets, you need more storage, more computational power, more networking and more analytics.

"The more data you give machine-learning algorithms, the more accurate they are and the more satisfied the customer becomes," Gal said. "We're seeing how manufacturing, telecoms, banking, insurance, financial, healthcare and IoT companies are creating huge datasets that require a large data center. We can help in any of those use cases."

In data analytics, a crucial factor is scalability. SQream is always working on the platform architecture to make sure it will always be scalable for bigger datasets. That involves being continuously updated on future designs of policy bottlenecks, computing, processors, networking, storage and memory.

Another aspect the company is also looking into is to enable the whole product as a service. To achieve that, SQream is working together with the big cloud providers.

According to Gal, the customer often does not care about what needs to be done behind the scenes (such as required computers, networking, storage and memory) to enable the workloads. As a result, we might be in a situation where a lot of energy consumption, cooling consumption and carbon consumption are created. That's an extremely inefficient process.

"By releasing the same software, but as a service, the customer will continue with his mindset of not caring how the process is performed behind the scenes, and we will make the process efficient for him under the hood of the cloud platform," Gal said.

Millions of computers are added every year to the cloud platforms. This trend is growing exponentially, and companies are not going to stop doing analytics.

"I think one of the things we need to do as people solving architectural and computer problems for the customers is to make sure the architecture we offer them is efficient, robust, cost-effective and scalable," Gal said.

The post GPU-Based Analytics Platform Interprets Large Datasets appeared first on EE Times.

Pennsylvania Philadelphia PA Philadelphia

February 14, 2023 at 03:00PM

Hammond Louisiana Ukiah California Dike Iowa Maryville Missouri Secretary Maryland Winchester Illinois Kinsey Alabama Edmundson Missouri Stevens Village Alaska Haymarket Virginia Newington Virginia Edwards Missouri

https://unitedstatesvirtualmail.blogspot.com/2023/02/houston-texas-appliance-parts-gpu-based.html

February 14, 2023 at 04:31PM

Gruver Texas Glens Fork Kentucky Fork South Carolina Astoria Oregon Lac La Belle Wisconsin Pomfret Center Connecticut Nason Illinois Roan Mountain Tennessee

https://coloradovirtualmail.blogspot.com/2023/02/houston-texas-appliance-parts-gpu-based.html

February 14, 2023 at 05:41PM

from https://youtu.be/GuUaaPaTlyY

February 14, 2023 at 06:47PM

0 notes

Text

using public data for stuff feels like cheating sometimes

like, google maps and friends charge money and rate limit their APIs like hell and so on, but i can go download a half a gig of county parcel bounds and spam the county real estate database 10 times/sec for free

i can get the name of the owner of any property in my area

for free

it's so wild

it's not even that difficult either, especially if you've got moderate programming skill! but even if you don't they all have maps and human readable versions!! it's fun to just explore around sometimes!!

there is legitimately an absurd amount of govt.-maintained data, just, free to look at!!! fuck them aggregators that charge licensing fees and shit, go find out who the last owner of your house was! go see if your landlord pays their taxes!!!

or just ignore it! but there's a wealth of data out there to be played with for free, and i think that's kinda crazy

#im so sane and normal and have normal feelings abt large datasets#anyways uhhhhhhhhhhhhh#public data#sure that's a good tag

0 notes

Text

US Department of Energy Offers $33 Million in Funding for AI and Machine Learning in Fusion Energy and Plasma Sciences Research

US Department of Energy Offers $33 Million in Funding for AI and Machine Learning in Fusion Energy and Plasma Sciences Research

The US Department of Energy (DoE) is offering $33 million in funding for researchers who can apply artificial intelligence (AI) and machine learning (ML) to the analysis and simulation of fusion energy and plasma sciences.

The DoE is seeking proposals for the use of AI/ML on existing public data, with a focus on approaches that could support the development of a fusion pilot plant in the near…

View On WordPress

#AI#AI/ML#data resources#DoE#energy#funding#fusion energy#fusion ignition#fusion pilot plant#large datasets#Lawrence Livermore National Laboratory#machine learning#plasma sciences#research#simulation

0 notes

Text

This morning, as I was reading the news, I thought I should probably find some non-Tumblr coverage of the no-fly list leak, which I hadn’t had time to read up on yet. I was elbows-deep in actual news coverage of that story when I felt my brain grind to a halt, because I discovered that the data was found on an unsecured server in a CSV file.

I think it says something profound and not entirely flattering about me that I then thought, Everyone’s yelling about the polyamorous bisexual lesbian catgirl and nobody’s talking about the fact that the leak came from a motherfucking CSV file?

For context, a CSV file is the most basic possible way to store large datasets, where each data point is simply demarcated by a comma. You really only ever see it when trying to import/export to/from a database because otherwise it’s a fucking pain in the ass to use. Even if this was simply an export file from a secure database, the fact that it was the most widely accessible form of file, not even an Excel spreadsheet but like the himbo version of an Excel spreadsheet, is astonishingly bad data practice when handling that level of sensitive information.

A CSV! I thought to myself. Who cares what a bisexual lesbian is, it was in a CSV!

I’ve....I’ve maybe been working with databases and Excel for too long.

8K notes

·

View notes

Note

How exactly do you advance AI ethically? Considering how much of the data sets that these tools use was sourced, wouldnt you have to start from scratch?

a: i don't agree with the assertion that "using someone else's images to train an ai" is inherently unethical - ai art is demonstrably "less copy-paste-y" for lack of a better word than collage, and nobody would argue that collage is illegal or ethically shady. i mean some people might but i don't think they're correct.

b: several people have done this alraedy - see, mitsua diffusion, et al.

c: this whole argument is a red herring. it is not long-term relevant adobe firefly is already built exclusively off images they have legal rights to. the dataset question is irrelevant to ethical ai use, because companies already have huge vaults full of media they can train on and do so effectively.

you can cheer all you want that the artist-job-eating-machine made by adobe or disney is ethically sourced, thank god! but it'll still eat everyone's jobs. that's what you need to be caring about.

the solution here obviously is unionization, fighting for increased labor rights for people who stand to be affected by ai (as the writer's guild demonstrated! they did it exactly right!), and fighting for UBI so that we can eventually decouple the act of creation from the act of survival at a fundamental level (so i can stop getting these sorts of dms).

if you're interested in actually advancing ai as a field and not devils advocating me you can also participate in the FOSS (free-and-open-source) ecosystem so that adobe and disney and openai can't develop a monopoly on black-box proprietary technology, and we can have a future where anyone can create any images they want, on their computer, for free, anywhere, instead of behind a paywall they can't control.

fun fact related to that last bit: remember when getty images sued stable diffusion and everybody cheered? yeah anyway they're releasing their own ai generator now. crazy how literally no large company has your interests in mind.

cheers

2K notes

·

View notes

Text

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to sort of carefully selected "archetypal" images, ideally ones that were already generated using generative AI using a prompt for the generic concept to be attacked (which is what the authors did in their paper). Also, the image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, in order to attack a large model there would have to be many thousands of samples in the training data. Obviously if you have a webpage that you created specifically to host a massive gallery poisoned images, that can be fairly easily blacklisted, so you'd have to have a lot of patience and resources in order to hide these enough so they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large scale use case for me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running through another resource heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like a small fry in comparison to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists for that is a talking point hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than the massive corporate monoliths who are probably working to secure proprietary data sets, like I believe Adobe Firefly did. I don't like how these attacks concentrate the power up.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. I suppose with public data scraping that sort of thing is fair game I guess.

Additionally, since making my first reply I've had a look at their website:

Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible for models to be created and trained by without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to help counter the economic displacement without worker protection that is the real issue with AI systems deployment, but will exacerbate the problem of the benefits of those systems being more constrained to said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).

1K notes

·

View notes

Text

Palantir’s NHS-stealing Big Lie

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in TUCSON (Mar 9-10), then SAN FRANCISCO (Mar 13), Anaheim, and more!

Capitalism's Big Lie in four words: "There is no alternative." Looters use this lie for cover, insisting that they're hard-nosed grownups living in the reality of human nature, incentives, and facts (which don't care about your feelings).

The point of "there is no alternative" is to extinguish the innovative imagination. "There is no alternative" is really "stop trying to think of alternatives, dammit." But there are always alternatives, and the only reason to demand that they be excluded from consideration is that these alternatives are manifestly superior to the looter's supposed inevitability.

Right now, there's an attempt underway to loot the NHS, the UK's single most beloved institution. The NHS has been under sustained assault for decades – budget cuts, overt and stealth privatisation, etc. But one of its crown jewels has been stubbournly resistant to being auctioned off: patient data. Not that HMG hasn't repeatedly tried to flog patient data – it's just that the public won't stand for it:

https://www.theguardian.com/society/2023/nov/21/nhs-data-platform-may-be-undermined-by-lack-of-public-trust-warn-campaigners

Patients – quite reasonably – do not trust the private sector to handle their sensitive medical records.

Now, this presents a real conundrum, because NHS patient data, taken as a whole, holds untold medical insights. The UK is a large and diverse country and those records in aggregate can help researchers understand the efficacy of various medicines and other interventions. Leaving that data inert and unanalysed will cost lives: in the UK, and all over the world.

For years, the stock answer to "how do we do science on NHS records without violating patient privacy?" has been "just anonymise the data." The claim is that if you replace patient names with random numbers, you can release the data to research partners without compromising patient privacy, because no one will be able to turn those numbers back into names.

It would be great if this were true, but it isn't. In theory and in practice, it is surprisingly easy to "re-identify" individuals in anonymous data-sets. To take an obvious example: we know which two dates former PM Tony Blair was given a specific treatment for a cardiac emergency, because this happened while he was in office. We also know Blair's date of birth. Check any trove of NHS data that records a person who matches those three facts and you've found Tony Blair – and all the private data contained alongside those public facts is now in the public domain, forever.

Not everyone has Tony Blair's reidentification hooks, but everyone has data in some kind of database, and those databases are continually being breached, leaked or intentionally released. A breach from a taxi service like Addison-Lee or Uber, or from Transport for London, will reveal the journeys that immediately preceded each prescription at each clinic or hospital in an "anonymous" NHS dataset, which can then be cross-referenced to databases of home addresses and workplaces. In an eyeblink, millions of Britons' records of receiving treatment for STIs or cancer can be connected with named individuals – again, forever.

Re-identification attacks are now considered inevitable; security researchers have made a sport out of seeing how little additional information they need to re-identify individuals in anonymised data-sets. A surprising number of people in any large data-set can be re-identified based on a single characteristic in the data-set.

Given all this, anonymous NHS data releases should have been ruled out years ago. Instead, NHS records are to be handed over to the US military surveillance company Palantir, a notorious human-rights abuser and supplier to the world's most disgusting authoritarian regimes. Palantir – founded by the far-right Trump bagman Peter Thiel – takes its name from the evil wizard Sauron's all-seeing orb in Lord of the Rings ("Sauron, are we the baddies?"):

https://pluralistic.net/2022/10/01/the-palantir-will-see-you-now/#public-private-partnership

The argument for turning over Britons' most sensitive personal data to an offshore war-crimes company is "there is no alternative." The UK needs the medical insights in those NHS records, and this is the only way to get at them.

As with every instance of "there is no alternative," this turns out to be a lie. What's more, the alternative is vastly superior to this chumocratic sell-out, was Made in Britain, and is the envy of medical researchers the world 'round. That alternative is "trusted research environments." In a new article for the Good Law Project, I describe these nigh-miraculous tools for privacy-preserving, best-of-breed medical research:

https://goodlawproject.org/cory-doctorow-health-data-it-isnt-just-palantir-or-bust/

At the outset of the covid pandemic Oxford's Ben Goldacre and his colleagues set out to perform realtime analysis of the data flooding into NHS trusts up and down the country, in order to learn more about this new disease. To do so, they created Opensafely, an open-source database that was tied into each NHS trust's own patient record systems:

https://timharford.com/2022/07/how-to-save-more-lives-and-avoid-a-privacy-apocalypse/

Opensafely has its own database query language, built on SQL, but tailored to medical research. Researchers write programs in this language to extract aggregate data from each NHS trust's servers, posing medical questions of the data without ever directly touching it. These programs are published in advance on a git server, and are preflighted on synthetic NHS data on a test server. Once the program is approved, it is sent to the main Opensafely server, which then farms out parts of the query to each NHS trust, packages up the results, and publishes them to a public repository.

This is better than "the best of both worlds." This public scientific process, with peer review and disclosure built in, allows for frequent, complex analysis of NHS data without giving a single third party access to a a single patient record, ever. Opensafely was wildly successful: in just months, Opensafely collaborators published sixty blockbuster papers in Nature – science that shaped the world's response to the pandemic.

Opensafely was so successful that the Secretary of State for Health and Social Care commissioned a review of the programme with an eye to expanding it to serve as the nation's default way of conducting research on medical data:

https://www.gov.uk/government/publications/better-broader-safer-using-health-data-for-research-and-analysis/better-broader-safer-using-health-data-for-research-and-analysis

This approach is cheaper, safer, and more effective than handing hundreds of millions of pounds to Palantir and hoping they will manage the impossible: anonymising data well enough that it is never re-identified. Trusted Research Environments have been endorsed by national associations of doctors and researchers as the superior alternative to giving the NHS's data to Peter Thiel or any other sharp operator seeking a public contract.

As a lifelong privacy campaigner, I find this approach nothing short of inspiring. I would love for there to be a way for publishers and researchers to glean privacy-preserving insights from public library checkouts (such a system would prove an important counter to Amazon's proprietary god's-eye view of reading habits); or BBC podcasts or streaming video viewership.

You see, there is an alternative. We don't have to choose between science and privacy, or the public interest and private gain. There's always an alternative – if there wasn't, the other side wouldn't have to continuously repeat the lie that no alternative is possible.

Name your price for 18 of my DRM-free ebooks and support the Electronic Frontier Foundation with the Humble Cory Doctorow Bundle.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/03/08/the-fire-of-orodruin/#are-we-the-baddies

Image:

Gage Skidmore (modified)

https://commons.m.wikimedia.org/wiki/File:Peter_Thiel_(51876933345).jpg

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/deed.en

#pluralistic#peter thiel#trusted research environment#opensafely#medical data#floss#privacy#reidentification#anonymization#anonymisation#nhs#ukpoli#uk#ben goldacre#goldacre report#science#evidence-based medicine#goldacre review#interoperability#transparency

521 notes

·

View notes