#relu

Text

85 notes

Text

Merkara and Relu are in Stardew Valley now. I found this character creator and couldn't resist. I've already decided that Merkara is a rogue magician; she's here to cause mischief and steal some artifacts. And Relu is a doctor who used to work in a war zone and escaped to a small town to heal from his traumatizing experience.

58 notes

Text

youtube

Sludge by Relu feat. flower

12 notes

Text

SPIRIT - StarLight PolaRis [Kan/Rom/Esp]

SPIRIT - すたぽら

Vocal: すたぽら

Words: Relu

Music: Relu

❀❀❀❀

subete wo okizari ni shite seigi mo aku mo boku ni wa iranai

mata hitori sore de iinda darenimo wakaru hazu nai kara

"shiawase nante machigai" to warau koto wo yamete shimau kurai

kurushikute omoitsumete kore ga boku no ikiru sube datta

全てを置き去りにして 正義も悪も僕には要らない

また一人 それでいいんだ 誰にもわかるはずないから

"幸せなんて間違い"と笑うことをやめてしまうくらい

苦しくて 思い詰めて これが僕の生きる術だった

I left behind the things I don't need, like justice and evil,

and ended up alone again, but that's fine, since no one could understand anyway.

"Happiness is a mistake": it all hurt so much that I stopped smiling.

After thinking it over, I realized this was how I had been living.

daijoubu dayo hora te wo totte

koko ni wa atatakai basho ga aru

kurayami ni hikaru ano hoshi made

大丈夫だよ ほら手を取って

ここには暖かい居場所がある

暗闇に光るあの星まで

It's okay, come on, take my hand,

there's a warm place here where you belong.

Until you leave the darkness behind and reach that star.

tada oikakete tada oikakete iza yubisasu saki he

suuji to USO ni mamireta sekai de hitasura tatakaitai

kimi ga ite boku ga ireba hora nanimo kowakunai

deaeta kiseki ga ima kiseki wo yobu

ただ追いかけて ただ追いかけて いざ指差す先へ

数字とウソにまみれた世界でひたすら戦いたい

君がいて 僕がいれば ほら何も怖くない

出会えた奇跡が 今 奇跡を呼ぶ

Just keep chasing, just keep chasing, on to where I'm pointing.

I want to fight with all I have in a world full of numbers and lies.

If you're here, and I'm here too, look, there's nothing to fear.

The miracle of having met now calls forth another miracle.

todokeru no ga chiisakute mo omoi made ga chiisai wake jyanai

dareka ni wa kantan na koto doushite dareka to kuraberu no?

seikai dake de ii no ni dokonimo kotae nante nai you de

kowainda shinjiru no ga kokochi warui yononaka datta

届けるのが小さくても思いまでが小さいわけじゃない

誰かには 簡単なこと どうして誰かと比べるの?

正解だけでいいのに どこにも答えなんてないようで

怖いんだ 信じるのが 心地悪い世の中だった

Even if what reaches you is small, that doesn't mean the feelings behind it are small too.

Some things are easier for someone else, but why compare yourself?

The right answer is all I need, but there seems to be no answer anywhere.

I was scared, living in a world where it's hard to believe.

nee nakanaide utsumukanai

kimi no mikata wa koko ni iru

kanashimi no saki ni aru ano hoshi made

ねえ 泣かないで 俯かないで

君の味方はここにいる

悲しみの先にある あの星まで

Hey, don't cry, don't look down,

you have an ally right here.

Until you leave the sadness behind and reach that star.

tada oikakete tada oikakete iza yubisasu saki he

suuji to USO ni mamireta sekai de hitasura tatakaitai

kimi ga ite boku ga ireba hora nanimo kowakunai

deaeta kiseki ga ima kiseki wo yobu

ただ追いかけて ただ追いかけて いざ指差す先へ

数字とウソにまみれた世界でひたすら戦いたい

君がいて 僕がいれば ほら何も怖くない

出会えた奇跡が 今 奇跡を呼ぶ

Just keep chasing, just keep chasing, on to where I'm pointing.

I want to fight with all I have in a world full of numbers and lies.

If you're here, and I'm here too, look, there's nothing to fear.

The miracle of having met now calls forth another miracle.

utagai kata mo shirazu ni kizu darake mou hitori ni shite oite

soredemo te wo nobashite ita sukui agerareru koto wo nozondeta

kitto minna sou nandayo

sou yatte bokutachi wa koko ni iru

kurushimi wo norikoete saa ima

疑い方も知らずに 傷だらけ もう一人にしておいて

それでも手を伸ばしていた 掬い上げられることを望んでた

きっとみんなそうなんだよ

そうやって僕たちはここにいる

苦しみを乗り越えて さあ 今

Without realizing it, I was covered in scars; I wanted to be left alone.

Even so, I kept reaching out, hoping to be saved.

Surely everyone is like that.

That's how we came to be here.

Let's overcome the pain, come on, now.

oikakete tada oikakete iza yubisasu saki he

suuji to USO ni mamireta sekai de hitasura tatakaitai

kimi ga ite boku ga ireba hora nanimo kowakunai

deaeta kiseki ga ima kiseki wo yobu

追いかけて ただ追いかけて いざ指差す先へ

数字とウソにまみれた世界でひたすら戦いたい

君がいて 僕がいれば ほら何も怖くない

出会えた奇跡が 今 奇跡を呼ぶ

Keep chasing, just keep chasing, on to where I'm pointing.

I want to fight with all I have in a world full of numbers and lies.

If you're here, and I'm here too, look, there's nothing to fear.

The miracle of having met now calls forth another miracle.

❀❀❀❀

In the chorus, 数字 refers to numerical values (digits), not moral values.

#utaites#utaite#traduccion al español#歌い手#letra de canción#starlight polaris#すたぽら#starpola#starpola trad#relu#coe#kottaro#yu#kisaragi yu#kuni#letra en español

7 notes

Text

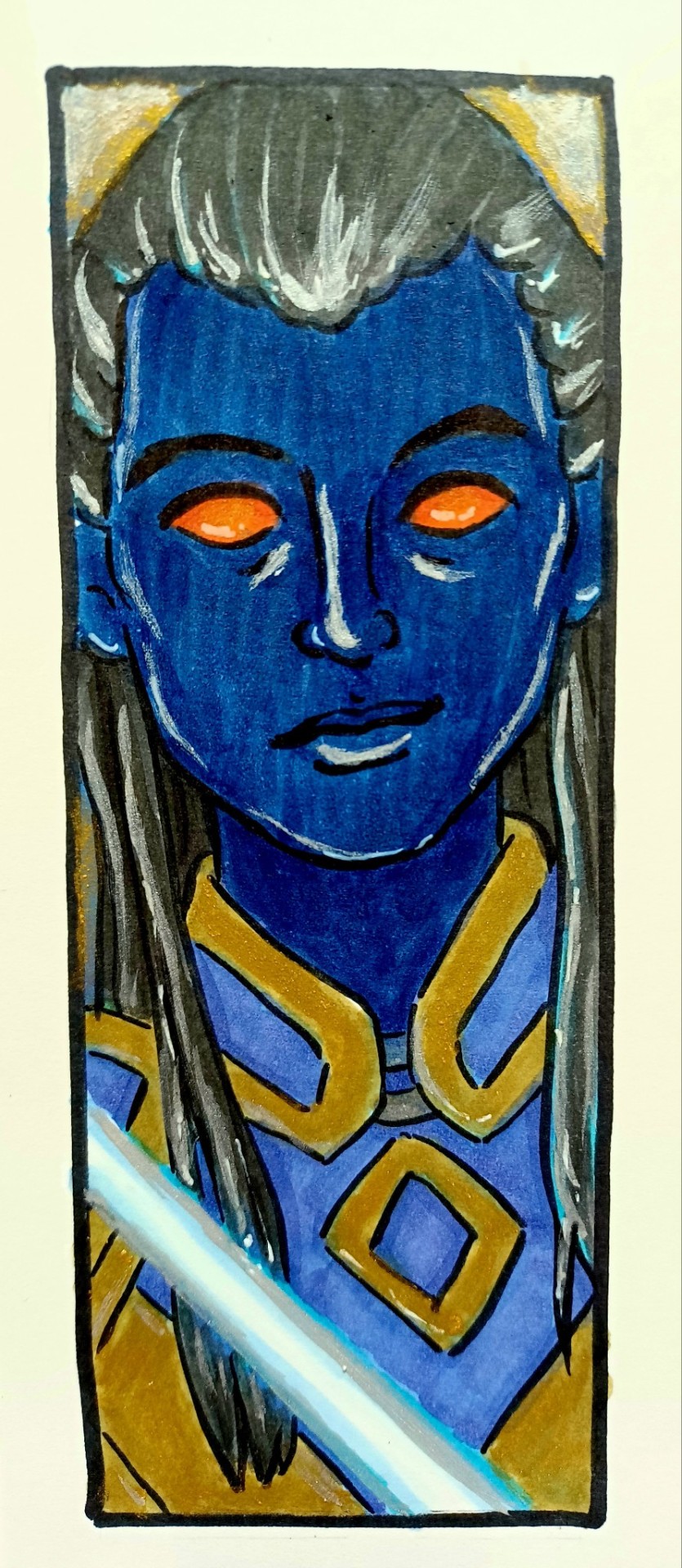

New profile pic! I can't remember if I ever shared this lovely @moosopp-art commission here, but this is my darling Relu.

#dnd character#dnd oc#retiring oneki because the campaign is over and she's retiring to wander the world with a drow#a baby#and a de-godified demi god#Do not be concerned about the beaker in her hand#She is a healer#Mostly#relu

1 note

Text

ReLU Activation Function: A Powerful Tool in Deep Learning

Introduction

In the vast field of deep learning, activation functions play a crucial role in determining the output of a neural network. One popular activation function is the Rectified Linear Unit (ReLU). In this article, we will delve into the details of the ReLU activation function, its benefits, and its applications in the realm of artificial intelligence.

Table of Contents

1. What is an Activation Function?

2. Understanding ReLU Activation

2.1 Definition of ReLU

2.2 How ReLU Works

2.3 Mathematical Representation

3. Advantages of ReLU

3.1 Simplicity and Efficiency

3.2 Addressing the Vanishing Gradient Problem

3.3 Non-linear Transformation

4. ReLU in Practice

4.1 ReLU in Convolutional Neural Networks

4.2 ReLU in Recurrent Neural Networks

4.3 ReLU in Generative Adversarial Networks

5. Limitations of ReLU

5.1 Dead ReLU Problem

5.2 Gradient Descent Variants

6. Conclusion

7. FAQs

7.1 How does ReLU differ from other activation functions?

7.2 Can ReLU be used in all layers of a neural network?

7.3 What are the alternatives to ReLU?

7.4 Does ReLU have any impact on model performance?

7.5 Can ReLU be used in regression tasks?

1. What is an Activation Function?

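Before diving into the specifics of ReLU, it's essential to understand the concept of an activation function. An activation function is applied to the weighted sum of a neuron's inputs and introduces non-linearity into the neuron's output. That non-linearity is what lets neural networks learn complex patterns: without it, a stack of linear layers collapses into a single linear transformation, no matter how deep the network is. The activation function determines whether, and how strongly, a neuron is activated based on the input it receives.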
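Why non-linearity matters can be shown with a minimal Python sketch. It uses hypothetical one-dimensional layers of the form y = w * x + b; the values `W1`, `B1`, `W2`, `B2` are made up for illustration and do not come from any real model. Without an activation, two stacked linear layers are exactly one linear layer; with ReLU in between, they are not.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: x for positive inputs, 0 otherwise."""
    return max(0.0, x)


# Hypothetical 1-D layers of the form y = w * x + b (illustrative values only).
W1, B1 = 2.0, -1.0
W2, B2 = -3.0, 0.5


def two_linear_layers(x: float) -> float:
    """Two stacked linear layers with no activation in between."""
    return W2 * (W1 * x + B1) + B2


def collapsed_single_layer(x: float) -> float:
    """One linear layer with weight W2*W1 and bias W2*B1 + B2."""
    return (W2 * W1) * x + (W2 * B1 + B2)


def two_layers_with_relu(x: float) -> float:
    """The same two layers with ReLU applied to the hidden value."""
    return W2 * relu(W1 * x + B1) + B2
```

For every `x`, `two_linear_layers(x)` equals `collapsed_single_layer(x)`. With ReLU, the outputs at x = -1 and x = 0 are both 0.5 while the output at x = 1 is -2.5; an affine function y = w * x + b that takes the same value at two different points must be constant, so no single linear layer reproduces this.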

2. Understanding ReLU Activation

2.1 Definition of ReLU

ReLU, short for Rectified Linear Unit, is an activation function that maps any negative input value to zero and passes positive input values as they are. In other words, ReLU outputs zero if the input is negative and the input value itself if it is positive.

2.2 How ReLU Works

ReLU is a simple yet powerful activation function that has contributed to the success of deep learning models. It acts as a threshold: a neuron passes its input on only when that input is positive and outputs zero otherwise. Because all negative inputs map to exactly zero, only a subset of neurons is active for any given input. This sparsity lets different inputs be represented by different sets of active features, and it can reduce computation.
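The sparsity effect can be measured directly. The minimal sketch below assumes, purely for illustration, that a layer's pre-activations are drawn from a standard normal distribution; roughly half of them are then negative, so roughly half of the ReLU outputs are exactly zero. The helpers `fraction_inactive` and `simulated_layer` are hypothetical names.

```python
import random
from typing import List


def relu(x: float) -> float:
    """ReLU: pass positive values through, map everything else to 0."""
    return max(0.0, x)


def fraction_inactive(pre_activations: List[float]) -> float:
    """Fraction of neurons whose ReLU output is exactly zero."""
    outputs = [relu(z) for z in pre_activations]
    return sum(1 for y in outputs if y == 0.0) / len(outputs)


def simulated_layer(n: int, seed: int = 0) -> List[float]:
    """Hypothetical pre-activations: n samples from a standard normal."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]
```

For example, `fraction_inactive([-2.0, -0.5, 0.0, 0.3, 1.7, -1.1])` is 4/6, and `fraction_inactive(simulated_layer(10_000))` comes out close to 0.5. In a trained network the fraction depends on the learned weights and biases rather than on this toy distribution.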

2.3 Mathematical Representation

Mathematically, ReLU can be defined as follows:

$$f(x) = \max(0, x)$$

where x represents the input to the activation function, and max(0, x) returns the larger of 0 and x.
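The formula translates directly into code. Here is a minimal pure-Python sketch of ReLU and its derivative applied element-wise to a list. ReLU has no derivative at exactly x = 0; the sketch uses 0 there, which is a valid subgradient and a common convention in deep learning frameworks.

```python
from typing import List


def relu(xs: List[float]) -> List[float]:
    """Element-wise f(x) = max(0, x)."""
    return [x if x > 0 else 0.0 for x in xs]


def relu_grad(xs: List[float]) -> List[float]:
    """Element-wise derivative: 1 for x > 0, 0 for x < 0.

    At x == 0 ReLU is not differentiable; 0 is used as the subgradient.
    """
    return [1.0 if x > 0 else 0.0 for x in xs]
```

For example, `relu([-2.0, 0.0, 3.5])` returns `[0.0, 0.0, 3.5]` and `relu_grad([-2.0, 0.0, 3.5])` returns `[0.0, 0.0, 1.0]`.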

3. Advantages of ReLU

ReLU offers several advantages that contribute to its widespread use in deep learning models. Let's explore some of these benefits:

3.1 Simplicity and Efficiency

ReLU's simplicity makes it computationally efficient. Evaluating it requires only a comparison with zero, whereas sigmoid and tanh require computing exponentials, and its gradient is simply 0 or 1. This makes it easy to implement and cheap to compute, even in networks that process large amounts of data.

3.2 Addressing the Vanishing Gradient Problem

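One significant challenge in training deep neural networks is the vanishing gradient problem. Activation functions such as sigmoid and tanh saturate: for inputs of large magnitude, their derivatives are close to zero. Even at its peak, the sigmoid's derivative is only 0.25, so backpropagating through many sigmoid layers multiplies many small factors together and the gradient shrinks exponentially with depth. ReLU's derivative is exactly 1 for every positive input, so it preserves gradients along active paths, enabling effective backpropagation and often faster convergence.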
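A minimal sketch of why saturation matters, under a deliberately simplified assumption: every weight on the backpropagation path is 1, so the gradient reaching the first layer is just the product of the activation derivatives along that path. The helper `path_gradient` is a hypothetical name.

```python
import math
from typing import Callable, List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid, s(x) * (1 - s(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def path_gradient(act_grad: Callable[[float], float], pre_activations: List[float]) -> float:
    """Product of local activation derivatives across layers (weights assumed to be 1)."""
    product = 1.0
    for z in pre_activations:
        product *= act_grad(z)
    return product
```

Through 10 layers, even in the sigmoid's best case (every pre-activation at 0), `path_gradient(sigmoid_grad, [0.0] * 10)` is 0.25 ** 10, about 9.5e-7, whereas `path_gradient(relu_grad, [1.0] * 10)` is exactly 1.0 as long as every unit on the path is active.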

3.3 Non-linear Transformation

ReLU introduces non-linearity, enabling the network to learn complex relationships between inputs and outputs. Although each ReLU is linear on either side of zero, combining many of them yields piecewise linear functions with many segments, which can approximate complex non-linear mappings. This makes it suitable for a wide range of tasks, including image and speech recognition, natural language processing, and more.
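Each ReLU is piecewise linear, yet a handful of them can already build genuinely non-linear shapes. A minimal sketch with hypothetical hand-picked weights rather than learned ones: two ReLU units reproduce the absolute value, and three produce a triangular "hat" bump.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def abs_via_relu(x: float) -> float:
    """|x| = relu(x) + relu(-x): two hidden units, output weights (1, 1)."""
    return relu(x) + relu(-x)


def hat(x: float) -> float:
    """Triangle peaking at 1 when x = 0 and zero outside [-1, 1].

    Three hidden units with biases 1, 0, -1 and output weights (1, -2, 1).
    """
    return relu(x + 1.0) - 2.0 * relu(x) + relu(x - 1.0)
```

Summing many such bumps with different positions and heights is one way to see why ReLU networks can approximate a wide range of continuous functions.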

4. ReLU in Practice

ReLU finds applications in various deep learning architectures. Let's explore how ReLU is used in different types of neural networks:

4.1 ReLU in Convolutional Neural Networks

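Convolutional Neural Networks (CNNs) excel in image classification tasks. ReLU is the most common activation function in CNNs, typically applied right after each convolution so that only positive filter responses (the features a filter detects) are passed on. AlexNet (2012) helped popularize this choice, reporting that its ReLU network trained several times faster than an equivalent network with tanh units.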
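A minimal 1-D stand-in for the convolution-then-ReLU pattern (real CNNs use 2-D convolutions over image channels). The kernel `[-1.0, 1.0]` is a hypothetical difference filter: it responds positively to rising edges and negatively to falling ones, and ReLU keeps only the positive responses. As in most deep learning libraries, the "convolution" here is really cross-correlation (the kernel is not flipped).

```python
from typing import List


def relu(xs: List[float]) -> List[float]:
    return [max(0.0, x) for x in xs]


def conv1d_valid(signal: List[float], kernel: List[float]) -> List[float]:
    """'Valid' 1-D cross-correlation, as used in CNN layers (no padding, stride 1)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def conv_relu(signal: List[float], kernel: List[float]) -> List[float]:
    """Convolution followed by ReLU, the basic CNN building block."""
    return relu(conv1d_valid(signal, kernel))
```

On the signal `[0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]`, the raw filter response is `[0.0, 1.0, 0.0, 0.0, -1.0, 0.0]`; after ReLU only the rising edge survives: `[0.0, 1.0, 0.0, 0.0, 0.0, 0.0]`.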

4.2 ReLU in Recurrent Neural Networks

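Recurrent Neural Networks (RNNs) are widely used in sequential data processing, such as language modeling and speech recognition. ReLU is less common here than in CNNs: LSTMs and GRUs rely on sigmoid and tanh internally, and because ReLU is unbounded, a simple RNN that uses it can see its hidden state and gradients explode over long sequences. With careful initialization, such as setting the recurrent weights to the identity matrix, ReLU-based RNNs have nonetheless been shown to learn long-term dependencies.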
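A minimal pure-Python sketch of a simple (Elman-style) recurrent layer that uses ReLU, h_t = ReLU(W_hh h_{t-1} + W_xh x_t + b). The weights in the usage example are hypothetical: the recurrent matrix is the identity, so positive inputs accumulate in the hidden state over time instead of fading away. Because ReLU is unbounded, recurrent weights that amplify the state could instead make it grow without limit.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for row-major nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_step(h: Vector, x: Vector, w_hh: Matrix, w_xh: Matrix, b: Vector) -> Vector:
    """One step: h_t = ReLU(W_hh h_{t-1} + W_xh x_t + b)."""
    recurrent = matvec(w_hh, h)
    inp = matvec(w_xh, x)
    return relu([r + i + bias for r, i, bias in zip(recurrent, inp, b)])


def run_rnn(xs: List[Vector], w_hh: Matrix, w_xh: Matrix, b: Vector) -> Vector:
    """Run the recurrence over a sequence, starting from a zero hidden state."""
    h = [0.0] * len(b)
    for x in xs:
        h = rnn_step(h, x, w_hh, w_xh, b)
    return h
```

With `identity = [[1.0, 0.0], [0.0, 1.0]]` for both weight matrices and a zero bias, `run_rnn([[1.0, -1.0], [2.0, 0.5], [0.5, 0.5]], identity, identity, [0.0, 0.0])` returns `[3.5, 1.0]`: the first unit sums its inputs, while the second unit's negative first input is clipped to zero by ReLU.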

4.3 ReLU in Generative Adversarial Networks

Generative Adversarial Networks (GANs) are used for tasks such as image synthesis and style transfer. ReLU is often used in the hidden layers of the generator to introduce non-linearity; for example, the DCGAN architecture uses ReLU in every generator layer except the output, which uses tanh, and Leaky ReLU in the discriminator.

5. Limitations of ReLU

While ReLU offers significant advantages, it also has some limitations that researchers and practitioners should be aware of:

5.1 Dead ReLU Problem

In certain scenarios, ReLU neurons can become "dead" and stop activating. If a neuron's weights and bias end up such that its input is negative for every training example (for example, after a large gradient update), its output is always zero, so the gradient flowing back through it is also zero and its weights are never updated again. This issue can be addressed by using variants of ReLU, such as Leaky ReLU or Parametric ReLU, which keep a small nonzero slope for negative inputs.
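A minimal sketch of a dead neuron, using a single hypothetical neuron y = act(w * x + b) and assuming, for simplicity, that the upstream gradient dL/dy is 1 for every example. With a large negative bias, every pre-activation is negative, so ReLU passes back a zero gradient and the weight can never change. Leaky ReLU, with slope `alpha` for negative inputs (0.01 here, a common default), still produces a small nonzero gradient.

```python
from typing import Callable, List


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0


def leaky_relu(z: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: z for positive inputs, alpha * z otherwise."""
    return z if z > 0 else alpha * z


def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    return 1.0 if z > 0 else alpha


def weight_gradient(inputs: List[float], w: float, b: float,
                    act_grad: Callable[[float], float]) -> float:
    """dL/dw summed over a batch, assuming dL/dy = 1 for every example."""
    total = 0.0
    for x in inputs:
        z = w * x + b              # pre-activation
        total += act_grad(z) * x   # chain rule: dy/dz * dz/dw
    return total
```

With inputs `[0.0, 1.0, 2.0, 3.0]`, `w = 0.5` and `b = -10.0`, the ReLU gradient is exactly 0.0, while the Leaky ReLU gradient is about 0.06, enough for gradient descent to move the weight.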

5.2 Gradient Descent Variants

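ReLU is not differentiable at zero. For ordinary first-order gradient descent this rarely matters in practice, since frameworks simply use a subgradient (usually 0) at that point, but some variants, such as second-order methods that rely on curvature information, may not perform as well, because ReLU's second derivative is zero wherever it is defined. Careful weight initialization, such as He initialization, which was designed for ReLU networks, together with suitable learning rate schedules, helps mitigate these issues.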
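He (Kaiming) initialization, proposed for ReLU networks, draws each weight from a zero-mean normal distribution with variance 2 / fan_in, where fan_in is the number of inputs to the layer. The factor 2 compensates for ReLU zeroing roughly half of its inputs, which keeps the variance of activations roughly stable from layer to layer. A minimal pure-Python sketch; `he_normal_init` is a hypothetical helper, not a library function.

```python
import math
import random
from typing import List


def he_normal_init(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """Return a fan_out x fan_in weight matrix with entries ~ N(0, 2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]
```

For example, `he_normal_init(256, 128, random.Random(0))` gives 128 rows of 256 weights whose sample standard deviation is close to sqrt(2 / 256), about 0.088. Passing an explicit `random.Random` instance keeps the initialization reproducible.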

6. Conclusion

ReLU has emerged as a fundamental building block in deep learning models, offering simplicity, efficiency, and non-linearity. Its ability to mitigate the vanishing gradient problem and its successful application across various neural network architectures have made it one of the most widely used tools in artificial intelligence.

7. FAQs

7.1 How does ReLU differ from other activation functions?

ReLU differs from activation functions such as sigmoid and tanh by setting negative inputs to zero while passing positive inputs through unchanged, with no upper bound. This introduces sparsity and preserves gradients for positive inputs during backpropagation, whereas sigmoid and tanh saturate at both ends.

7.2 Can ReLU be used in all layers of a neural network?

ReLU can be used in most hidden layers of a neural network. It is usually avoided in the output layer, however, because it cannot produce negative values. The output activation should match the task instead: a linear (identity) output for regression, sigmoid for binary classification, and softmax for multi-class classification.

7.3 What are the alternatives to ReLU?

Some popular alternatives to ReLU include Leaky ReLU, Parametric ReLU (PReLU), and Exponential Linear Unit (ELU). These variants keep a nonzero output and gradient for negative inputs, which mitigates the dead ReLU problem; whether they also improve the overall performance of a network depends on the task and architecture.
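A minimal sketch of two of these alternatives, written from their standard definitions (Leaky ReLU is PReLU with the slope fixed instead of learned). ELU uses alpha * (exp(x) - 1) for negative inputs, so its output approaches -alpha smoothly; PReLU uses a * x with a learned slope a, so training also needs its gradient with respect to a. The ELU default alpha = 1.0 is a common choice, assumed here.

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: x for x > 0, alpha * (exp(x) - 1) otherwise (saturates at -alpha)."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def prelu(x: float, a: float) -> float:
    """PReLU: x for x > 0, a * x otherwise, where the slope a is learned."""
    return x if x > 0 else a * x


def prelu_grad_x(x: float, a: float) -> float:
    """d prelu / d x: used to backpropagate to earlier layers."""
    return 1.0 if x > 0 else a


def prelu_grad_a(x: float) -> float:
    """d prelu / d a: used to update the learned slope itself."""
    return 0.0 if x > 0 else x
```

For example, `elu(-50.0)` is essentially -1.0, `prelu(-2.0, 0.25)` is -0.5, and `prelu_grad_a(-2.0)` is -2.0, so negative inputs keep contributing to learning instead of being silenced.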

7.4 Does ReLU have any impact on model performance?

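ReLU can have a significant impact on model performance. Because it does not saturate for positive inputs, deep networks that use it are usually easier and faster to train than comparable networks using sigmoid or tanh, and its sparsity and non-linearity help them capture complex patterns and generalize. The size of the effect depends on the architecture and task.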

7.5 Can ReLU be used in regression tasks?

ReLU can be used in the hidden layers of regression models without any problem; the concern is the output layer. A ReLU output can never be negative, which can suit targets that are non-negative by nature (such as counts or prices), but if the target variable can take negative values, a linear output should be used instead.

0 notes

Text

Infermedica launches Intake API to improve patient care, reduce clinician burnout, and provide intake data before care

- By InnoNurse Staff -

Infermedica, an AI-powered digital health platform that provides solutions for symptom analysis and patient triage, has expanded its API to incorporate Intake features. The new Intake API features are an expansion of the company's Medical Guidance Platform, which builds on its clinically verified Triage product.

The new API features are intended to increase clinician productivity while also personalizing the patient experience.

Read more at Infermedica/PRNewswire

///

Other recent news and insights

Kayentis, a French medtech firm delivering electronic Clinical Outcome Assessment solutions, has raised €5 million to expand its operations in the United States (Tech.eu)

Relu raises €2 million for dental treatment planning automation (Relu/PRNewswire)

#infermedica#health tech#medtech#data science#api#computing#triage#health it#kayentis#france#ecoa#clinical trials#belgium#data management#automation#dental care#relu#ai#artificial intelligence#imaging#medical imaging#usa

0 notes

Text

towards first-principles architecture design – The Berkeley Artificial Intelligence Research Blog

2022-08-29 09:00:00

Foundational works showed how to find the kernel corresponding to a wide network. We find the inverse mapping, showing how to find the wide network corresponding to a given kernel.

Deep neural networks have enabled technological wonders ranging from voice recognition to machine translation to protein engineering, but their design and application is nonetheless notoriously…

0 notes

Text

Lihle Relu (@ amzo.xx)

8 notes

Text

61 notes

Text

Thank you @chaosandwonder, you're an angel and your art gives me so much joy 💜

#swtor#swtor art#submission#you're like Santa Claus of swtor fandom#swtor oc#relu#merkara#emotional support art 💜

66 notes

Text

youtube

Your by Relu feat. Kagamine Rin

2 notes

Text

SWTOR Headcanon: I Ship Jaymizu Poh and Achitan

For those who don't remember, these two were involved in the "Reclaiming What's Ours" mission on Republic-side Taris.

Jaymizu Poh

Achitan

Jaymizu and her blustering brother, Relus, are looking to reclaim their family's lands (from 300 years ago) on Taris. When they can't get the Republic military to help, they turn to the player for help.

Of course, the player discovers that the squatters are a group of non-humans led by Achitan, whose ancestors had an even older claim. Their land had been repossessed by the Senate at some point, apparently due to anti-non-human bigotry.

(Mind you, that would have been long before KOTOR1, much less SWTOR. There are VERY few examples of Republic anti-alien bigotry in the SWTOR stories.)

In my Halcyon Legacy, Ulannium Kaarz, my Barsen'thor, encountered these two and brokered a settlement.

And... I'm shipping them. I headcanon that both of them evacuate Taris when the Empire attacks, with Relus and most of Achitan's friends not making it.

And they find comfort with each other, eventually forming an organization to help refugees.

Anyone like this?

#swtorpadawan talks#swtor talks#swtorpadawan headcanons#swtor headcanon#jaymizu poh#achitan#taris#Reclaiming What's Ours#oc: ulannium kaarz#relus poh

18 notes

Text

Heads up, Seven up!

Tagged by @tales-from-nocturnaliss :)

Tagging: @isabellebissonrouthier and OPEN TAG. I've never actually tagged 7 people in this.

Rules (which exist, apparently): post 7 lines (or so) from a WIP, then tag 7 people (or 6 with an open tag).

Let's go with... well, the latest bits of Hélianthe and Atropa, which I wrote, you guessed it, in French.

-

The other woman stayed silent for a few moments. Then Atropa heard her get up and walk away, and thought she would finally be left in peace, until she added:

– I hope I won't have killed as many people as you have by that age.

– You picked the wrong job for that, guardian. Your gig's the same as mine, except you don't get to choose your employer and most of the people you off are innocent. Me, sometimes I get lucky and I get rid of assholes.

Margot bolted, the sound of her running like a song to Atropa's ears.

#heads up seven up#tag games#writing games#my writing#ok real talk. first time i was tagged in a heads up seven up i just assumed it was a 7 last linesyou wrote thing and that's#how i've been doing it ever since#i don't KNOW 7 people i can consistently tag anyway#bref#hélianthe et atropa#encore une fois c'est un premier jet non relu et non édité voilààà

9 notes

Note

For the mini-fics: "dream" (Four Swords, any AU, maybe some Red/Blue if you feel like it? Only if you feel like it though, I do remember you mentioning it wasn't one of your ships, and I'm happy to read any interaction between the two <3)

It... it wasn't one of my ships. And then your writing, and then this, and uh. Anyway, I guess this is some kind of tragic fairytale AU. (it isn't long!)

---

"I don't like this," Blue muttered, shadowing Red as they stalked through the castle halls. His heels clicked on the polished floors, and glints of armor and a crown kept catching Red's eye in the mirrors.

"I, Red, the prince and heir of Hyrule, do solemnly invoke the sacred Wall of Thorns."

Red tried to ignore the warm presence at his shoulder. This was not the time or place for dreams, especially silly dreams like taking Blue's hand, or leaning against Blue's chest, or dragging him into one of the closets they passed and—

Army, approaching. Danger, imminent. Blue, bodyguard, not boyfriend. Not now, not ever. They'd run out of time. It hurt.

"This castle and all therein will be protected from harm by the Thorns of Nayru, caught in time just as their prince."

"Nobody likes it, Blue," Red answered. He hoped his voice didn't shake, or that Blue would take it to be fear of the coming storm rather than anything else. They slowed in front of the large door just ahead, decorated with wrought iron in the shape of thorny rosevines.

"I don't want to let you go through with this."

"But you will, because there's no other way. You agreed about that." Red unlocked the door, and Blue helped him to push it open. It felt heavy, in more than one way. His steps slowed as he approached the raised dais in the center of the dim, circular room.

"The land will be blessed and forgotten by all but its inhabitants until I may take possession of the throne once again."

It wasn't strictly tall enough for him to need help, but Red held his hand out anyway. "Help me up?"

Blue's hand was warm under the soft leather of the glove. After Red stepped up, Blue followed, and Red had to actively avoid his eyes, or he'd get lost. They didn't have the time to waste.

Red sat on the richly appointed bed and looked at his hand, still clutched in Blue's. "You don't have to be here right now."

"You're not going to keep me away."

"I know." He'd been so sure that he'd have time with Blue someday. But Blue couldn't be… he couldn't lift the spell if he was trapped inside it with the rest of the castle. With the rest of the kingdom.

"The spell will lift only when one who can be considered a soulmate bestows upon me true love's first kiss."

Red picked up the small book on the night table and lit the candle, all with one hand.

"This spell is irrevocable, impenetrable, and absolutely binding."

He'd been so sure. He started reading the spell's activation, even as hoofbeats sounded in the windows.

---

If I wrote out this whole thing, there would be a loophole or something where Blue doesn't actually fall asleep but ends up being captured by the enemy and has to fight his way out—or maybe Green and Vio show up and one of them could be considered a platonic soulmate—or magic blips and just Blue wakes up, minutes at a time every few years, watching the world out the windows change, battling with his feelings and taking a long time to get over himself. There are a lot of options!

#my writing#3sent#four swords#whats the ship tag#uhhhh#ive never tagged this ship before#red x blue#lets go with that#fsa red#fsa blue#their ship name would be bled or rue#which are cool but neither are quite the right vibe#blred#relue#its fine

20 notes
