#neuroslug

Text

IT LIVES

IT LIIIIIIVEEEESSSSSSS

That's it, I'm gonna make my own damn cartoons. With blackjack and bugs

372 notes


Text

Helping Neuroslug help me

Admittedly it took me an embarrassing amount of time to figure out and start using inpainting, but now that I've had a taste of it my head is spinning with possibilities. And so I'm making this post to show the process and maybe encourage more artists to try their hand at generating stuff. It really can be an amazing teammate when you know how to apply it.

For those who didn't see my first post on this, I've trained an AI on my artworks, because base Stable Diffusion doesn't understand what anthropomorphic insects are.

That out of the way, here we go:

I noticed that a primarily character-focused LoRA often botches backgrounds (probably because few images of the dataset have them), so I went with generating a background separately and roughly blocking out a character over it in Procreate. Since it was a first experiment I got really generous with proper shading and even textures. Unsurprisingly, SD did its job quite well without much struggle.

Basically masked out separate parts such as fluff, skirt, watering can, etc. and changed the prompt to focus on that specific object to add detail.
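A minimal sketch of what the mask does conceptually, assuming the simplest possible behavior: generated pixels are kept only where the mask is set, and everything else stays as drawn. The function name `composite` and the tiny 2x2 "images" are made up for illustration; real inpainting tools also blend the mask edges and look at the surroundings while generating.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0, take generated pixels where mask is 1."""
    return [
        [gen if m else orig for orig, gen, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Hypothetical 2x2 example: 1 = hand-drawn pixel, 9 = freshly generated pixel.
original = [[1, 1], [1, 1]]
generated = [[9, 9], [9, 9]]
mask = [[0, 1], [1, 0]]  # only the masked spots get replaced
print(composite(original, generated, mask))
```

Masking one object at a time keeps each pass from touching anything outside that object.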

There were some bloopers too. She's projecting her inner spider.

Of course it ate the hands. Not inpainting those, it's the one thing I'll render correctly faster than the AI does. Some manual touchups to finish it off and voila:

The detail that would have taken me hours is done in 10-20 minutes of iterating through various generations. And nothing significant got lost in translation from the block out, much recommend.

But that was easy mode: my rough sketch could be passed off as finished on one of my lazier days, so it's not hard to complete something like that. Let's try rough rough.

I got way fewer chuckles out of this than I expected, it took only 4-5 iterations for the bot to offer me something close to the sketch.

>:C

It ate the belly. I demand the belly back.

Scribble it in...

Much better.

Can do that with any bit actually, very nice for iterating a character design.

Opal eyes maybe?

Lol

Okay, no, it's kind of unsettling. Back to red ones.

Now, let's give her thigh highs because why not?

It should be fancier. Give me a lace trim.

Now we're talking. Since we've started playing dress-up anyway, why not try a dress too. Please don't render my scribble like a trash bag. I know you want to.

Phew

I crave more details.

Cute. Perhaps I'll clean it up later.

...

..

.

SHRIMP DRESS

#neuroslug#slug's experiments#ai assisted art#moth#I need to retrain neuroslug on a more artsy checkpoint#base model leans more to realism and it affects the style a lot#not complaining but i want it to mimic my usual style better

442 notes


Text

NEUROSLUG IS OUT OF CONTAINMENT

You can grab it here

It's a little dumb but it's trying its best so treat it well.

yes I did just use hand drawn art to announce an AI model, don't apply human logic to me, I'm a slug

Brief list of features: imitation of three different coloring styles of mine, knows anthro butterflies, moths, beetles and flies, can create about 8 different body types on both male and female characters if you ask hard enough.

Guidelines on its application and fun details will be attached in a reblog. Have fun with my spawn.

287 notes


Text

There's something indescribable about routinely finding nodes like this in workflows that are like a month old.

On that note, getting the perfect control I want has been a real struggle. There's a ton of workflows for animations, but the moment you peek outside the mainstream you're basically on your own.

About 50 nodes in I realized that I need to start simple and tried the basic text to video just to see how Neuroslug would fare with zero guidance on my part. Honestly expected the shapes to absolutely fall apart because AnimateDiff is trained on mostly humans.

But-

It figured out how to rotate their heads. This is honestly incredible, I didn't go out of my way to put all the head angles into the training data and it still figured this out.

Obviously they're not perfect, but it's awesome that it can do this much on its own. There are prettier examples too, the movement just isn't as extreme/complex. Behold my little collection

It's really surreal to be able to peek into a world that I created without controlling and knowing every detail of the image/video. Brings so many ideas too.

I planned to just hand draw the backgrounds for what I'm planning and leave them static, but looking at these makes me want to run some of the areas through AnimateDiff with low denoising to introduce some instability there. Honestly loving this kind of imperfection.

Kay, back to trying control methods. One of them is bound to click at some point.

214 notes


Text

More quick and dirty fluffies for the data set. Neuroslug will learn what an anthro butterfly lying down should look like, whether it wants to or not.

478 notes


Text

Not quite what I want from this, but it's making for a rather cute post-process already. I like how it brings in the color variation typical for my style and tints the shadows to something more lively.

Let's see what high res fix can do about the details and if it fails I can always just draw in some lines as guides

#slug's experiments#im doing this without depth control net for now#ill try that tomorrow cuz it'll allow for higher denoising without losing proportions#neuroslug

163 notes


Text

Neuroslug evolved into some kind of (body horror-y) insect waifu generator

Look at them all. Much sexeh

Also yes, that's a rococo bunk bed, for when you have lots of money but little space

221 notes


Text

I'm a sucker for punishment.

Challenged myself to achieve exactly what I want purely in AI without cheating by finishing the pic off in a proper drawing program. Behold the struggle of making this fine gentleman.

Here's the base I drew in all its MS Paint-looking glory.

What I surmised from the about 2 hours of struggle this took me:

1. The statement that "it's as simple as writing some prompts" is a fat lie.

2. AI art has a stage of looking like ass before it starts to look good similar to normal art.

3. The algorithm can be unimaginably, remarkably, monumentally dumb. Artificial Stupidity is a thing and it's pervasive.

Mother of God

Did...did you just draw a dog there?

STOP

I'm putting both dog and cat in the negative prompt.

Screw you too, bot, screw you too.

Honestly can't imagine what it must be like to try getting your exact vision in SD without having at least some art skills to help the algorithm through its mental deficiencies.

#neuroslug#slug's experiments#AI is like a stormy sea and your own skill is the boat you get to use#Go in unprepared and you get a little raft#learn some anatomy and you got yourself an aircraft carrier#the result can look good either way#but the amount of control you have is affected drastically#stable diffusion

125 notes


Text

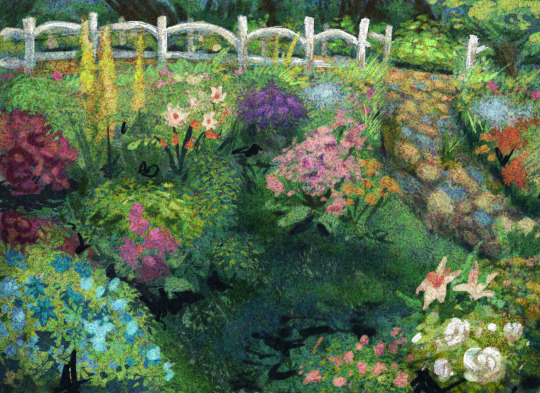

Denoiser wisdom

Since a lot of people showed interest in my workflow of using SD like a renderer for existing sketches, I'll be sharing the little tricks I find while exploring the capabilities of SD with Neuroslug. Read the inpainting post to understand this one.

When inpainting, the model takes into consideration what is already in the area it regenerates and in the areas around it. How exactly it'll follow these guidelines is determined by denoising strength. At low values it'll stick closely to the areas of color it sees and won't create anything radically different from the base. At high denoising strength it'll gladly insert colors, shapes and silhouettes that weren't there originally.

Basically the more you trust your sketch the smaller your denoising strength should be. It doesn't mean you won't need the high denoising at some point. Let me explain it using yesterday's artwork.
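One way to picture why low strength sticks to the sketch: in common img2img implementations, the strength sets how much noise is added to the base and how many of the sampling steps actually run on it. The sketch below assumes the rule `min(int(steps * strength), steps)`, which is how the Hugging Face diffusers img2img pipeline picks its starting point; other UIs may count steps differently, and the function name and the 30-step default are made up for illustration.

```python
def steps_actually_run(strength: float, num_steps: int = 30) -> int:
    """Approximate how many sampling steps rework the image at a given strength.

    Assumption: mirrors the rule min(int(num_steps * strength), num_steps).
    Real tools may round or schedule differently.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_steps * strength), num_steps)


# Compare a low, a medium and a high denoising strength on the same step budget.
for s in (0.4, 0.5, 0.7):
    print(f"strength {s}: {steps_actually_run(s)} of 30 steps")
```

Fewer steps on a lightly noised base means less room to wander away from the colors and shapes already there.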

It all starts with a rough sketch.

Since I have a particular composition in mind and want it to be maintained, I'll be using a low denoising strength to fully regenerate this image.

It means that the algorithm won't have enough freedom to fix my large-scale mistakes, it's simply not allowed to change the areas of color too dramatically. So if you want to do this yourself make sure to set the image to black and white first and check that your values are working and contrast is good.
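If you'd rather check values numerically than by eye, here is a minimal sketch of the same black-and-white test. It assumes the widely used Rec. 601 luma weights for converting RGB to grey; the function names and the sample colors are hypothetical, not taken from the actual sketch.

```python
def luminance(rgb):
    """Perceived brightness (0-255) of an (r, g, b) pixel, using Rec. 601 weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def value_range(pixels):
    """Return (darkest, lightest) luminance over an iterable of RGB pixels."""
    values = [luminance(p) for p in pixels]
    return min(values), max(values)


# Hypothetical colors sampled from a sketch: shadow, midtone, highlight.
sketch_pixels = [(40, 30, 60), (120, 140, 90), (240, 230, 200)]
darkest, lightest = value_range(sketch_pixels)
print(f"values span {darkest:.0f} to {lightest:.0f} out of 255")
```

A narrow span means the sketch will read as flat once the color is gone, and a low denoising pass won't be able to rescue it.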

To make sure the result isn't too cartoony and flat I used brushes with strong color jitter and threw a rather aggressive noise texture over the whole thing. This'll give the denoiser a little wiggle room to sprout details out of thin air.
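The noise here was painted in with brushes and a texture overlay, but the idea can be sketched in code too. This is only an illustration of "flat color plus jitter", assuming uniform random noise per channel; `add_noise`, its `amount` default and the sample color are made up.

```python
import random


def add_noise(pixels, amount=25, seed=0):
    """Jitter each channel of each (r, g, b) pixel by up to +/- amount, clamped to 0-255."""
    rng = random.Random(seed)  # seeded so the result is repeatable
    noisy = []
    for pixel in pixels:
        noisy.append(tuple(
            max(0, min(255, channel + rng.randint(-amount, amount)))
            for channel in pixel
        ))
    return noisy


flat_fill = [(200, 80, 80)] * 4  # a flat block of a single color
print(add_noise(flat_fill))
```

The flat block turns into a handful of slightly different colors, which is the kind of variation the denoiser can grow detail from.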

It kept the composition and the suggested lighting, and the majority of flowers kept their intended colors too. This was a denoising strength of 0.4. To contrast that, same base image with denoising at 0.7:

It's pretty, but it's neither the style nor composition I wanted.

Let's refine the newly redrawn base to include the details that were lost in transition.

These were intended to be roses.

It's here where I learned a little trick. You can mix and match different models to achieve the look you desire.

Neuroslug is good at detailed moths and painterly environments. It's not good at spitting out really detailed flowers, they end up looking very impressionist, which is not what I want in the foreground.

So, I switched to an anime focused model and let it run wild on this bush with high (0.7) denoising strength.

Nice definition, but it looks too smooth and isn't in line with what I want.

Switching back to Neuroslug with denoising at 0.5 and letting it work over these roses.

This way, I get both the silhouette and contrast of the anime model (counterfeitV30) and the matching style of Neuroslug. It's also useful in cases where the model doesn't know a particular flower. You can generate an abstract flower cluster with the anime model and use the base model to remind the AI that what you want is in fact a phlox specifically.

So I did this to basically every flower cluster on the image to arrive at this:

It's still a bit of a mess but it has taken me about 80% of the way there, the rest I'll be fixing up myself.

My "Lazy Foliage" brush set was really helpful for this. I'll release that one once it accumulates enough brushes to be really versatile.

Now we block in the character.

Yes, I left the hands wonky since I intend to be drawing them manually later, same about the foot. There's so much opportunity for the AI to mess them up that I'd rather have all the control on these details.

When it renders the face it can really mess up everything, so I do it with low (0.45) denoising strength to discourage new eyes popping up in inappropriate places. Take note that I kept the antennae out of the mask. AI is easily confused when one subject overlaps the other.

Good, good.

Wait. Why are your eyes hairy?

Now, mask out the eyes, remove all mention of fur from the prompt and

That's about right. Since the eyes are all one color block I can afford to raise the denoising strength for more wild results.
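Stripping every fur-related tag from a long prompt by hand is easy to get wrong, so here is a small helper sketch for it. It assumes a comma-separated tag prompt; the function name and the example prompt are hypothetical, since the real prompt isn't shown here.

```python
def drop_terms(prompt, unwanted):
    """Remove comma-separated prompt tags that contain any unwanted word."""
    tags = [tag.strip() for tag in prompt.split(",")]
    kept = [
        tag for tag in tags
        if tag and not any(word in tag.lower() for word in unwanted)
    ]
    return ", ".join(kept)


# Hypothetical prompt for illustration only.
full_prompt = "anthro moth, fluffy fur, red eyes, garden, soft fur collar"
print(drop_terms(full_prompt, ["fur", "fluff"]))
```

The match is a plain substring check, so a tag like "furniture" would be dropped too; tighten it if your prompts need that.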

Same for areas of just fluff on the entire body, it's all one texture and having the denoiser at 0.6-0.75 is beneficial because it's going to add locks, stray hairs and other fluffy goodness. Just make sure to not make the mask too tight to the silhouette, it needs some space to add hairs sticking out.
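Loosening a tight mask amounts to growing it outward by a few pixels, which image editors usually call dilation or "expand selection". A minimal sketch on a tiny 0/1 grid, assuming a square neighborhood; `dilate_mask` and the 5x5 example are made up for illustration.

```python
def dilate_mask(mask, radius=1):
    """Grow the 1-regions of a 2D 0/1 mask by `radius` pixels (square neighborhood)."""
    height, width = len(mask), len(mask[0])
    grown = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                # Mark every pixel within `radius` of a masked pixel, staying in bounds.
                for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                        grown[ny][nx] = 1
    return grown


tight = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
for row in dilate_mask(tight, radius=1):
    print(row)
```

The extra ring around the silhouette is where stray hairs get room to stick out.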

With the skirt it was back to really low denoising. The folds I blocked in make sense with the position of her legs under it, so I didn't want them to be lost.

Lastly, I drew in a flower that she's planting and ran over it with moderately high denoising to make it match the surrounding style. Ignore the biblically accurate roots there, I'll fix them by hand.

One last pass over the whole thing in Procreate. I draw the hands and add details such as the round pseudopupils, face ridges and wing markings to keep the character consistent with the previous image of her. And a bit of focal blur for a feeling of depth.

Phew, even with generous use of AI this whole thing took an entire day of work. In the end what determines quality isn't the tool you use but the attention you choose to pay to finding inconsistencies and fixing them.

#neuroslug#ai assisted art#stable diffusion#anthro#moth#tutorial#I guess it counts as a tutorial at least#what are these long posts even#slug's experiments

127 notes


Note

I got this slightly upsetting "ask" from someone who saw one of your images I shared and assumed it was "AI Art." I tagged you in my response: tumblr.com/futurebird/744514952775286784

I think the way you are using AI in your art is really neat. I think calling any of your work "AI Art (derogatory)" is wrong but wanted to know what you thought about it and if the particular drawing used any AI.

My guess is it's pure illustration (whatever that means, is a gradient "using AI"?)

In any case keep making beautiful drawings however you do it, I hope you keep sharing your process --it's so helpful for all us amateurs.

And please maybe draw more ants.

Man, I wish I was skilled/experienced enough to train a model that produced images this coherent with a dataset as small as mine. Alas, Neuroslug isn't there yet. I'm trying though, gonna do some nonconventional training methods for the next iteration.

But back to the original question, that drawing is fully hand drawn, no AI touched it directly. There is some on the inspiration board though. One of the random gens produced a cute orange outfit with a shorter skirt part than I'd usually draw. I refined that further and the result is the outfit on the mom in that drawing. The moths themselves I did just bullshit together from memory without referencing any particular species.

Lately I've taken to using Neuroslug as turbo-Pinterest tailored to my specific needs. It's very neat when the outfits I'm browsing through for inspiration are already fitting for an anthro insect. In terms of reference, there's AI on almost all my reference boards now - for mood, for lighting, outfits, fluff colors etc. And I don't tag images that only use AI for reference because why? It isn't much different than just yanking something on google images for the same purpose.

Whenever I do tag with "neuroslug" it means that there's a substantial amount of work done directly on the image through AI generation, and not just as reference or minor superimposed textures.

I hear you about the ants, gonna need to develop a culture for them as well at some point.

56 notes


Text

Big brain idea for video workflow with neuroslug: use a hand drawn first frame for style transfer onto a whole 3d animation. Right now I'm at the mercy of what AI assumes my style is like, but that way I could give it a more direct pointer to what I want.

I'll show off what it'll look like if it doesn't fail miserably

54 notes


Text

My evil plan is working. On single images at least, it'll be a whole adventure when the input becomes video instead. The biggest concern is the temporal stability on thin overlapping parts such as fingers, toes and antennae, there's basically 0 chance they won't get messed up there.

Maybe Grease pencil lines can help that issue if I throw them in as a second control net in addition to the depth map.

If all else fails, I could do a 2d mimic shader on the model, throw on some finer grease pencil linework on them and then use the hands off of that to marry back into the generated output with really low denoising. Should be doable if I render out animated masks for the hands and other problem bits.

A mask like that might be too tight though. Perhaps a looser mask in after effects? Decisions, decisions.

I'm in for months with this experiment, aren't I?

81 notes


Text

This is how I look when I eat sushi too. What an absolute creature.

For context: I'm stress testing a new version of Neuroslug and documenting all of its blunders

116 notes


Note

I think it’s kind of a question, kind of a statement but, there seem to be a lot of people upset about you utilizing ai art recently. Correct me if I’m wrong, but if you’re training an ai on your OWN art, doesn’t that cut out a lot of the unethical things about mainstream ai art generators? And if I may ask, how do you feel about mainstream ai art generators and the way it utilizes others’ art? I apologize if this comes off as rude, I’ve not seen someone train an ai specifically on their own art and I’m curious about your thoughts. Thank you for reading, I hope you have a lovely day. Your world building and art is phenomenal and inspiring.

My opinion is that the only unethical bits stem from how an operator uses a tool, not the tool itself. Stable Diffusion isn't a person, it isn't good or evil, it is incapable of acting on its own without a human's input.

I could do some extremely unethical things with oil and canvas if I bothered to dig them up from the closet. I have the skills to theoretically mimic the style of a known artist and then sell it as if it's genuine. I could use the same traditional tools to straight up copy an artwork and claim that I came up with the composition and plot myself.

I then could come up with an original plot and composition in my head and then achieve that with prompts and inpainting using Stable Diffusion. The prompt might have some artist's name in it to achieve a particular style, but the end result won't match anything that artist has drawn before. You can't steal a style after all.

If I did all that it doesn't make oil and canvas evil and an AI good. The only thing that mattered was my intent. If your intent is foul anything you create with any tool can be unethical.

My attitude towards mainstream AI art isn't all that different from that towards normal art. The majority of both is unoriginal, boring, poor quality or all three in that order.

On AI's side it'd be big titty babes just standing around or Midjourney stuff (I hate MJ's style with a passion), on normal art's side it'd be what I call "face in flowers" types of drawings. You'll see that exact type infesting all of Instagram.

Should these artworks not exist? No, they can stay, they have their fans so whatever. I just personally don't find them interesting.

And then a small percentage of both is truly interesting. It has surprising plot, style, other quirks or is just genuinely funny. Good art is memorable regardless of what it's made with. It's just my opinion though.

If you haven't seen anything memorable made with AI yet, I recommend you search for "Will Smith eating spaghetti checkpoint". It's burned into my mind and still causes an ugly laugh each time I remember it exists.

Or "Anime rock paper scissors" for something less meme-y.

Thanks for the compliments btw, nothing is more rewarding than inspiring others.

41 notes


Text

The desire to kick the hornet's nest is winning. I'll go show neuroslug to twitter

31 notes


Note

Your use of artificial intelligence reduces the value of your work and your credibility as an "artist". You're no more than someone who can type words into a machine.

My beloved credibility, gonna miss it.

:V

33 notes
