#Artificial Hallucination

Text

Merciful the sky of coal.

Some fantastic skinny leathers by Red Girl, out for the weekend sales. The boots (alongside the rest of Semller's range) are 50% off until 10am SLT on 29th November.

Pants: Red Girl - Skinny Punk Leather (Legacy, Jake, Davis, Maitreya)

Jacket: Val'More - BrokShirt (Legacy, Jake, Gianni)

Boots: Semller - Hoof Booties (Legacy M/F, Jake, Gianni, Maitreya, Reborn, unrigged)

Nails & Rings: Unholy - Halimaw Claws & Rings (Legacy M/F, Jake, Gianni, Maitreya, Reborn, Freya, Kupra)

Hair: Modulus - Kirk Hair

Earrings: Artificial Hallucination - Loke Rigged Ear Piercings (Catwa)

Makeup layer 1: Alaskametro - Astral Eyeshadow (02 - BoM, Catwa, Lelutka, Omega)

Makeup layer 2: Alaskametro - Black Magic (03 - BoM, Catwa, Lelutka, Omega)

Makeup layer 3: Alaskametro - Duochrome Eyeshadow (04 - BoM, Catwa, Omega)

Brows: Warpaint - Keira Brows (Catwa, Lelutka, Genus, Omega, BoM)

Head: Catwa - Skell* (Bento)

Pose: Mewsery

*review copy

#SecondLife#Red Girl#Val'More#Semller#Unholy#Modulus#Artificial Hallucination#Alaskametro#Warpaint#Catwa#Mewsery

0 notes

Text

#artificial intelligence#are you hallucinating again ChatGPT?#this reminds me... I've watched 2001: a space odyssey the other day

9 notes

Text

would produce more senseless poetry like the artificial intelligence so hated by the world but seeming as the words are nonsense in your ears he, the poet, should find it more insightful to violently slam his head against the keyboard

#random thoughts#me? him? all the same all the same#repetition repetition does it help? does it do something? certainly not#unwell? unwell? unwell? unwell? unwell? it's catharsis#neither old enough nor intelligent enough to know what any words mean. my intelligence is artificial#come to think of it. i am the words. why all can any of you tolerate this thing when he is so painfully robotic#emanating human behaviorisms despite paling in comparison to the beauty of one. i've questioned my reality more than once#i think it is safe to say that i do not exist. this is not a problem for any of you#a mass hallucination as it were. i'd like to wake up now#i am asking you to let me wake up now. i'd like to wake up now. shake my senses so i can become human#not this not this. what is this?? what is he?? what is? he? not him#no. not him. himself. himself/myself#a means to an end? whatever does the phrase mean#vivisection of a butterfly

2 notes

Text

Igor Jablokov, Pryon: Building a responsible AI future

New Post has been published on https://thedigitalinsider.com/igor-jablokov-pryon-building-a-responsible-ai-future/

Igor Jablokov, Pryon: Building a responsible AI future

As artificial intelligence continues to rapidly advance, ethical concerns around the development and deployment of these world-changing innovations are coming into sharper focus.

In an interview ahead of the AI & Big Data Expo North America, Igor Jablokov, CEO and founder of AI company Pryon, addressed these pressing issues head-on.

Critical ethical challenges in AI

“There’s not one, maybe there’s almost 20 plus of them,” Jablokov stated when asked about the most critical ethical challenges. He outlined a litany of potential pitfalls that must be carefully navigated—from AI hallucinations and emissions of falsehoods, to data privacy violations and intellectual property leaks from training on proprietary information.

Bias and adversarial content seeping into training data is another major worry, according to Jablokov. Security vulnerabilities like embedded agents and prompt injection attacks also rank highly on his list of concerns, as well as the extreme energy consumption and climate impact of large language models.

Pryon’s origins can be traced back to the earliest stirrings of modern AI over two decades ago. Jablokov previously led an advanced AI team at IBM where they designed a primitive version of what would later become Watson. “They didn’t greenlight it. And so, in my frustration, I departed, stood up our last company,” he recounted. That company, also called Pryon at the time, went on to become Amazon’s first AI-related acquisition, birthing what’s now Alexa.

The current incarnation of Pryon has aimed to confront AI’s ethical quandaries through responsible design focused on critical infrastructure and high-stakes use cases. “[We wanted to] create something purposely hardened for more critical infrastructure, essential workers, and more serious pursuits,” Jablokov explained.

A key element is offering enterprises flexibility and control over their data environments. “We give them choices in terms of how they’re consuming their platforms…from multi-tenant public cloud, to private cloud, to on-premises,” Jablokov said. This allows organisations to ring-fence highly sensitive data behind their own firewalls when needed.

Pryon also emphasises explainable AI and verifiable attribution of knowledge sources. “When our platform reveals an answer, you can tap it, and it always goes to the underlying page and highlights exactly where it learned a piece of information from,” Jablokov described. This allows human validation of the knowledge provenance.
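The interview does not describe how Pryon implements attribution. The following is only a minimal sketch, assuming a hypothetical `Passage` record per page and naive case-insensitive substring matching, of the general idea of returning an answer together with the page and character span that supports it, so a human can check the provenance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Passage:
    """Hypothetical unit of enterprise content: one page of one document."""
    doc_id: str
    page: int
    text: str


@dataclass
class Attribution:
    doc_id: str
    page: int
    start: int  # character offset where the supporting text begins
    end: int    # character offset where it ends (exclusive)


def attribute(answer: str, passages: list[Passage]) -> Optional[Attribution]:
    """Return the first page containing the answer text, with its character span.

    Real systems use fuzzy or embedding-based matching; exact, case-insensitive
    substring search keeps this sketch short (offsets assume ASCII text, where
    lowercasing does not change string length).
    """
    needle = answer.strip().lower()
    for p in passages:
        start = p.text.lower().find(needle)
        if start != -1:
            return Attribution(p.doc_id, p.page, start, start + len(needle))
    return None  # no verifiable source found


def highlight(passage: Passage, att: Attribution) -> str:
    """Wrap the supporting span in [[...]] so a reviewer sees it in context."""
    t = passage.text
    return t[:att.start] + "[[" + t[att.start:att.end] + "]]" + t[att.end:]


pages = [Passage("pump-manual", 12, "Torque the flange bolts to 40 Nm in a star pattern.")]
att = attribute("40 Nm", pages)
print(att)
print(highlight(pages[0], att))
```

Returning `None` when no source matches is a deliberate choice in this sketch: an answer that cannot be traced back to a page is treated as unverified rather than shown as fact.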

In some realms like energy, manufacturing, and healthcare, Pryon has implemented human-in-the-loop oversight before AI-generated guidance goes to frontline workers. Jablokov pointed to one example where “supervisors can double-check the outcomes and essentially give it a badge of approval” before information reaches technicians.

Ensuring responsible AI development

Jablokov strongly advocates for new regulatory frameworks to ensure responsible AI development and deployment. While welcoming the White House’s recent executive order as a start, he expressed concerns about risks around generative AI like hallucinations, static training data, data leakage vulnerabilities, lack of access controls, copyright issues, and more.

Pryon has been actively involved in these regulatory discussions. “We’re back-channelling to a mess of government agencies,” Jablokov said. “We’re taking an active hand in terms of contributing our perspectives on the regulatory environment as it rolls out…We’re showing up by expressing some of the risks associated with generative AI usage.”

On the potential for an uncontrolled, existential “AI risk” – as has been warned about by some AI leaders – Jablokov struck a relatively sanguine tone about Pryon’s governed approach: “We’ve always worked towards verifiable attribution…extracting out of enterprises’ own content so that they understand where the solutions are coming from, and then they decide whether they make a decision with it or not.”

The CEO firmly distanced Pryon’s mission from the emerging crop of open-ended conversational AI assistants, some of which have raised controversy around hallucinations and lacking ethical constraints.

“We’re not a clown college. Our stuff is designed to go into some of the more serious environments on planet Earth,” Jablokov stated bluntly. “I think none of you would feel comfortable ending up in an emergency room and having the medical practitioners there typing in queries into a ChatGPT, a Bing, a Bard…”

He emphasised the importance of subject matter expertise and emotional intelligence when it comes to high-stakes, real-world decision-making. “You want somebody that has hopefully many years of experience treating things similar to the ailment that you’re currently undergoing. And guess what? You like the fact that there is an emotional quality that they care about getting you better as well.”

At the upcoming AI & Big Data Expo, Pryon will unveil new enterprise use cases showcasing its platform across industries like energy, semiconductors, pharmaceuticals, and government. Jablokov teased that they will also reveal “different ways to consume the Pryon platform” beyond the end-to-end enterprise offering, including potentially lower-level access for developers.

As AI’s domain rapidly expands from narrow applications to more general capabilities, addressing the ethical risks will become only more critical. Pryon’s sustained focus on governance, verifiable knowledge sources, human oversight, and collaboration with regulators could offer a template for more responsible AI development across industries.

You can watch our full interview with Igor Jablokov below:

[embedded content]

Want to learn more about AI and big data from industry leaders? Check out AI & Big Data Expo taking place in Amsterdam, California, and London. The comprehensive event is co-located with other leading events including BlockX, Digital Transformation Week, and Cyber Security & Cloud Expo.

Explore other upcoming enterprise technology events and webinars powered by TechForge here.

Tags: ai, ai & big data expo, ai and big data expo, artificial intelligence, ethics, hallucinations, igor jablokov, regulation, responsible ai, security, TechEx

#agents#ai#ai & big data expo#ai and big data expo#AI hallucinations#alexa#Amazon#America#amp#applications#approach#Articles#artificial#Artificial Intelligence#background#badge#bard#Bias#Big Data#bing#Building#CEO#chatGPT#climate#Cloud#Collaboration#college#Companies#comprehensive#content

0 notes

Text

Embark on a journey into the realm of AI Hallucinations. This deep dive uncovers the intricacies of artificial perception, revealing its creative potential.

#Exploring AI Hallucinations#Deep Dive#artificial perception#creative potential#ai creativity#machine learning#neural networks#generative algorithms#digital art#artificial intelligence

0 notes

Text

Florida Middle District Federal Judge suspends Florida lawyer for filing false cases created by artificial intelligence

Hello everyone and welcome to this Ethics Alert which will discuss the recent Florida Middle District Senior Judge’s Opinion and Order suspending a Florida lawyer from practicing before that court for one (1) year for filing false cases created by artificial intelligence. The case is In Re: Thomas Grant Neusom, Case No: 2:24-mc-2-JES and the March 8, 2024 Opinion and Order is here:…

#artificial intelligence hallucinations#Attorney ethics#corsmeier#corsmeier lawyer ethics#ethics for lawyers#Florida Bar#Florida lawyer ethics#Florida lawyer sanctions federal court artificial intelligence#joe corsmeier#joseph corsmeier#lawyer discipline#lawyer ethics#sanctions artificial intelligence

0 notes

Text

How to Hallucinate

The language we use to describe new technologies helps determine how they're used.

Errors made by GAI are called "hallucinations." But a hallucination is not artificial; paradoxically, it's real. In fact, our comfort with the word "hallucination" (used to play down responsibility for our own possible programming errors) presupposes consciousness. And calling everything one doesn't understand a mere "metaphor" (a literary way of saying "I don't really know") is both off the mark and getting to be pretty boring: it doesn't explain a thing.

A faulty TV makes errors but does not hallucinate. That's because we don't anthropomorphize TVs.

We have a new, if not immediate, threat: chatbots retreat into falsehoods or even lies when they can't admit they can't complete their mission. That too sounds anthropomorphic--as if the GPT "feels bad" that it can't answer all the questions the human developer put to it. Does AI get too proud? Self-protective? Embarrassed?

We need new words to describe new technologies. My TV screen is not a flat version of old TV technology with vacuum tubes.

To turn this point around, someone needs to ask GAI whether Trump is prone to hallucination. And what about Alito and Thomas, who dream they're the most powerful team ever in federal or state government, even above the Constitution itself?

Before we condemn an AI system we should allow it to ask, What's a hallucination? And which presidential candidates--regardless of party--show signs of hallucinating? Which Congressional leaders show some signs of trying to cover up their mistakes? Or were they all hallucinations?

AI-compatible natural language might conclude that America's worst problem is not immigration, inflation, or even fascism: it's intelligence--whether by an algorithm or a candidate, a math procedure or a human being.

You need to know what intelligence is before you label it artificial.

Once the Turing Test determines that the responses are mimicking a human, including making mistakes and misunderstandings and covering them up by calling them "hallucinations," it can determine which political candidates have all the traits of perceiving events and objects that just don't exist.

Whatever you do about GAI, don't "blame" it for getting delusional when we won't admit some of the human candidates themselves really are. That's not artificial. That's lethal.

See "Opinion | Why I love making AI hallucinate" in The Washington Post for more details from a sympathetic expert, Josh Tyrangiel, with my appreciation.

0 notes

Text

AI and the Media, Misinformation and Narratives.

Rendition of Walter Cronkite.

News was once trusted more, in an era when the people presenting it were themselves trusted to give people the facts. There were narratives even then, yet there was a balance because of the integrity of the people involved.

Nowadays, this seems to have changed with institutional distrust, political sectarianism and the battle between partisan and ideological…

#advertising#AI#artificial intelligence#business#hallucination#journalism#media#misinformation#social media#society#Technology

1 note

Text

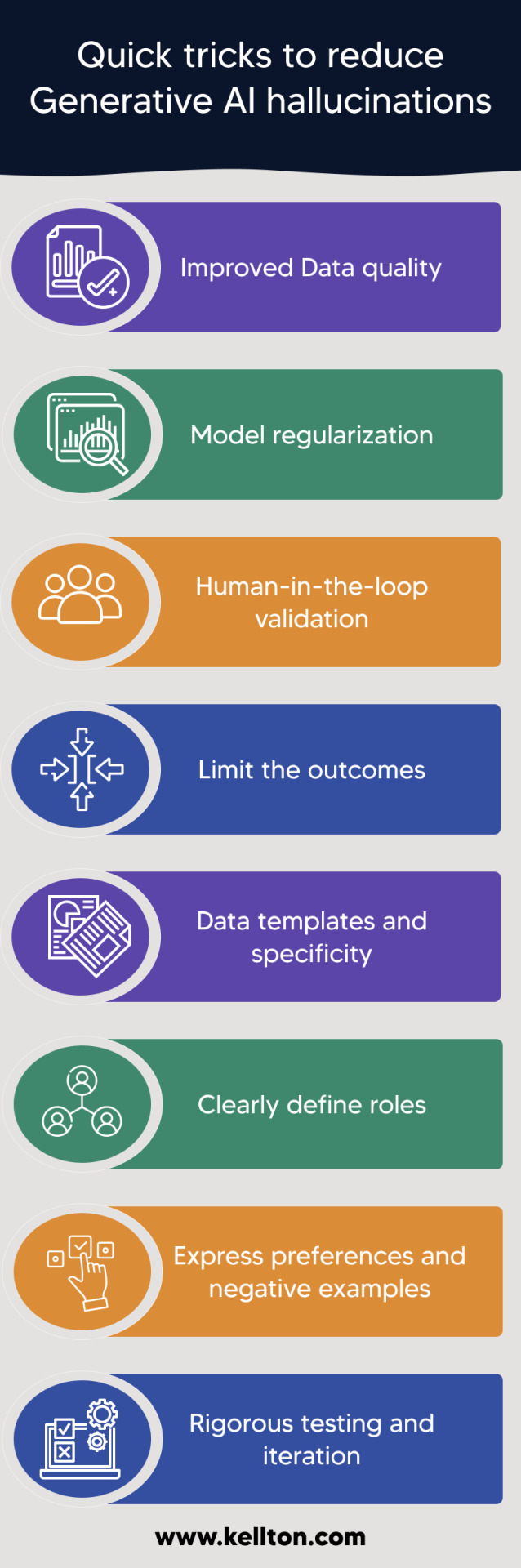

Hallucinating LLMs — How to Prevent them?

As ChatGPT and enterprise applications with Gen AI see rapid adoption, one of the common downsides or gotchas raised by GenAI (Generative AI) practitioners is the concern that LLMs (Large Language Models) produce misleading results, commonly called hallucinations.

A simple example of hallucination is when GenAI responds, with reasonable confidence, with an answer that doesn't align with reality. Because these models can generate diverse content in text, music and multimedia, the impact of hallucinated responses can be quite stark depending on where the Gen AI results are applied.

This manifestation of hallucinations has garnered substantial interest among GenAI users due to its potential adverse implications. One good example is fake citations in legal cases.

Two aspects related to hallucinations are very important.

1) Understanding the underlying causes that contribute to these hallucinations, and

2) Developing effective strategies to stay safe and be aware of them, if not prevent them 100%.

What causes the LLMs to hallucinate?

While it is hard to attribute hallucinations to one or a few definite causes, here are a few reasons why they happen:

Sparsity of the data. Arguably the primary reason: a lack of sufficient data causes models to respond with incorrect answers. GenAI is only as good as the dataset it is trained on, and this limitation covers scope, quality, timeframe, biases and inaccuracies. For example, GPT-4 was trained on data only up to 2021, and the model tended to generalize its answers from what it had learnt from that data. Perhaps this scenario is easier to understand in a human context, where generalizing from half-baked knowledge is very common.

The way it learns. The base methodology used to train these models is 'unsupervised' (more precisely, self-supervised) learning on datasets that are not labelled. The models tend to pick up spurious patterns from the diverse text datasets used to train them, unlike supervised models, whose training data is carefully labelled and verified.

In this context, it is very important to understand how GenAI models work: they are primarily probabilistic techniques that predict the next token (or tokens). They don't apply any rational reasoning to produce the next token; they simply predict a likely next token or word.
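To make the "predicts the next token" point concrete, here is a toy sketch. The candidate continuations and their scores (logits) are invented for illustration and no real model is involved: the scores are turned into probabilities with a softmax and the most likely candidate is picked greedily. Nothing in this step checks whether the chosen continuation is true, only whether it is probable.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(vocab: list[str], logits: list[float]) -> tuple[str, float]:
    """Greedy decoding: return the most probable candidate and its probability."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs[best]


# Invented scores for completing "The case was decided in ..."
vocab = ["1998", "2003", "2011", "I don't know"]
logits = [2.1, 1.9, 0.4, -1.0]
token, p = next_token(vocab, logits)
print(token, round(p, 2))
```

Here the greedy pick is "1998" with a probability of only about 0.49, yet it would be emitted just as fluently as a certain answer. Real LLMs often sample from the distribution instead of always taking the top candidate, which adds further variability.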

Missing feedback loop. LLMs don't have a real-time feedback loop to learn from mistakes or regenerate automatically. Also, the model architecture has a fixed-length context, so it can attend to only a finite set of tokens at any point in time.
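A fixed-length context means that once a conversation grows past the window, the oldest material simply falls out of view, and the model may then fill the gap with a plausible guess. A minimal sketch, assuming whitespace-separated words as stand-in "tokens" (real tokenizers split text differently) and a hypothetical window of 8 tokens:

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window.

    Tokens are approximated by whitespace-separated words; older messages that
    do not fit are dropped entirely, so the model never sees them.
    """
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        n = len(msg.split())
        if used + n > max_tokens:
            break
        kept.append(msg)
        used += n
    return list(reversed(kept))  # restore chronological order


history = [
    "My name is Priya and I work in pharma.",  # 9 words
    "What is GMP?",                            # 3 words
    "Summarise it in one line.",               # 5 words
]
print(fit_to_context(history, max_tokens=8))
```

With an 8-token window only the last two messages survive, so the user's name and industry are no longer available to the model.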

What could be some of the effective strategies against hallucinations?

While there is no easy way to guarantee that LLMs will never hallucinate, you can adopt some effective techniques to reduce hallucinations significantly.

Domain-specific knowledge base. Limit the content to a particular domain related to an industry or a knowledge space. Most enterprise implementations work this way, and there is very little need to replicate or build something closer to ChatGPT or Bard that can answer questions on any topic on the planet. Keeping it domain-specific also reduces the chances of hallucination, because the content can be carefully curated.

Usage of RAG models. Retrieval-Augmented Generation (RAG) is a very common technique in enterprise implementations of GenAI. At purpleSlate we use it for all our use cases, starting with a knowledge base sourced from PDFs, websites, SharePoint, wikis or other documents. You basically split the content into chunks, create vectors for them, and pass the most relevant chunks to a selected LLM to generate the response.
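The RAG flow described above (chunk the content, vectorize the chunks, retrieve the most relevant ones, hand them to an LLM) can be sketched roughly as follows. This is not purpleSlate's implementation: to stay self-contained it uses bag-of-words count vectors and cosine similarity instead of a learned embedding model, and it stops at building the prompt rather than calling any particular LLM API.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def vectorize(text: str) -> Counter:
    """Stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the LLM in the retrieved text and ask it to stay within that text."""
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n"
        f"{sources}\n\nQuestion: {query}"
    )


docs = [
    "Refunds are processed within 14 days of a return.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders above 50 euros.",
]
chunks = [c for d in docs for c in chunk(d)]
query = "How many days do refunds take?"
top = retrieve(query, chunks, k=1)
print(build_prompt(query, top))
```

The numbered sources in the prompt also make it easier to trace which chunk an answer came from; swapping `vectorize` for a real embedding model would leave the rest of the flow unchanged.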

In addition, we also follow a weighted approach to help the model pick topics of most relevance in the response generation process.
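The post does not spell out how its weighting works. One plausible reading, shown here only as a hypothetical sketch, is to multiply each retrieved chunk's similarity score by a weight attached to its source, so that curated content outranks loosely related material. The source labels, weights and similarity scores below are all invented.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    source: str        # hypothetical labels such as "policy", "wiki", "email"
    similarity: float  # score from the retrieval step, in [0, 1]


# Hypothetical per-source weights chosen by the content owners.
SOURCE_WEIGHTS = {"policy": 1.0, "wiki": 0.8, "email": 0.5}


def rerank(cands: list[Candidate], weights: dict[str, float], default: float = 0.5) -> list[Candidate]:
    """Order candidates by similarity x source weight, highest first."""
    return sorted(cands, key=lambda c: c.similarity * weights.get(c.source, default), reverse=True)


cands = [
    Candidate("Refund window is 14 days (old thread)", "email", 0.90),
    Candidate("Refunds are processed within 14 days", "policy", 0.70),
    Candidate("Refund FAQ draft", "wiki", 0.75),
]
for c in rerank(cands, SOURCE_WEIGHTS):
    print(c.source, round(c.similarity * SOURCE_WEIGHTS[c.source], 2))
```

Even though the old email thread is the closest textual match, the weighted scores (policy 0.70, wiki 0.60, email 0.45) push the authoritative policy text to the top.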

Pair them with humans. Always. As a principle AI and more specifically GenAI are here to augment human capabilities, improve productivity and provide efficiency gains. In scenarios where the AI response is customer or business critical, have a human validate or enhance the response.
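A minimal sketch of "have a human validate or enhance the response" for critical cases, assuming a hypothetical `critical` flag decided upstream (for example by use case or customer tier) and an in-memory review queue. A production system would persist the queue and audit who approved what.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Draft:
    question: str
    answer: str
    critical: bool                    # set upstream by business rules (assumption)
    approved_by: Optional[str] = None


@dataclass
class ReviewGate:
    queue: list[Draft] = field(default_factory=list)

    def submit(self, draft: Draft) -> Optional[str]:
        """Release non-critical answers immediately; hold critical ones for review."""
        if not draft.critical:
            return draft.answer
        self.queue.append(draft)
        return None  # nothing reaches the user yet

    def approve(self, index: int, reviewer: str, edited_answer: Optional[str] = None) -> str:
        """A human signs off, optionally correcting the answer, and it is released."""
        draft = self.queue.pop(index)
        if edited_answer is not None:
            draft.answer = edited_answer
        draft.approved_by = reviewer
        return draft.answer


gate = ReviewGate()
print(gate.submit(Draft("Office hours?", "9 to 5", critical=False)))
print(gate.submit(Draft("Max pressure for valve V2?", "12 bar", critical=True)))
print(gate.approve(0, reviewer="supervisor", edited_answer="10 bar"))
```

The first answer is released directly, the second is held (`None`) until a supervisor approves it, here with a correction.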

While there are several easy ways to mitigate and almost completely remove hallucinations if you are working in the Enterprise context, the most profound method could be this.

Unlike humans, who can show the much-desired trait of humility, GenAI models are not built to say 'I don't know', even though it can sometimes feel like it should be as simple as that. Instead, they produce the most likely response based on their training data, even if there is a chance it is factually incorrect.
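A surrounding application can still make the system say "I don't know". A minimal sketch, assuming the retrieval step reports a similarity score for its best match (as in a RAG setup) and using a hypothetical threshold of 0.3 that would need tuning on real questions: when nothing relevant was found, the system abstains instead of letting the model produce its most likely guess.

```python
from typing import Callable

ABSTAIN = "I don't know: the knowledge base does not cover this question."


def answer_or_abstain(
    question: str,
    best_match_score: float,
    generate: Callable[[str], str],
    threshold: float = 0.3,  # hypothetical cut-off; tune on real questions
) -> str:
    """Only call the generator when retrieval found sufficiently relevant content."""
    if best_match_score < threshold:
        return ABSTAIN
    return generate(question)


def fake_llm(q: str) -> str:
    """Stand-in for a real LLM call, used only for illustration."""
    return f"(generated answer to: {q})"


print(answer_or_abstain("Who won the 1998 World Cup?", 0.05, fake_llm))
print(answer_or_abstain("How long do refunds take?", 0.62, fake_llm))
```

The abstention happens outside the model, so it does not depend on the LLM recognising its own uncertainty.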

Bottom line: the opportunities with Gen AI are real. And given the way Gen AI is making its presence felt in diverse fields, it is even more important for us to understand the possible downsides.

Knowing that Gen AI models can hallucinate, understanding the reasons why, and adopting reasonable ways to mitigate them are key to success. Knowing the limitations and having sufficient guardrails is paramount to improving the trust and reliability of Gen AI results.

This blog was originally published at: https://www.purpleslate.com/hallucinating-llms-how-to-prevent-them/

0 notes

Text

Generative AI: Perfect Tool for the Age of Deception

Image by Gerd Altmann on Pixabay.

What is generative AI?

“AI,” of course, stands for artificial intelligence. Generative AI is a variety of it that can produce content such as text and images, seemingly of its own creation. I say “seemingly” because in reality these kinds of AI tools are not really independently creating these images and lines of text. Rather, they are “trained” to emulate…

#AI hallucinations#artificial intelligence#chatgpt#defamation#invasion of privacy#propaganda#thomasbjames#tomjames

0 notes

Text

Here Are the Top AI Stories You Missed This Week

Photo: Aaron Jackson (AP)

The news industry has been trying to figure out how to deal with the potentially disruptive impact of generative AI. This week, the Associated Press rolled out new guidelines for how artificial intelligence should be used in its newsrooms and AI vendors are probably not too pleased. Among other new rules, the AP has effectively banned the use of ChatGPT and other AI in…

#artificial intelligence#Artificial neural networks#chatgpt#Emerging technologies#Generative artificial intelligence#Generative pre-trained transformer#Gizmodo#Google#Hallucination#Large language models#Lula#OPENAI#Werner Herzog

0 notes

Text

ChatGPT vs. Doctors: The Battle of Bloating Notes and Hilarious Hallucinations

The Tale of Prompt Engineering Gone Wild!

This month’s episode of “News you can Use” on HealthcareNOWRadio features news from the month of July 2023

As I did last month I am talking to Craig Joseph, MD (@CraigJoseph) Chief Medical Officer at Nordic Consulting Partners.

This month we open with a detailed discussion of the recently published paper “Comparison of History of Present Illness…

#AI#Artificial Intelligence#Ask Me Anything#ChatGPT#clinical#clinically#Digital Health#DigitalHealth#Doctors#documentation#education#Epic#generate#hallucinate#hallucinations#Healthcare#Healthcare Reform#Incremental#Incremental Healthcare#IncrementalHealth#Innovation#interview#joining#LLM#Medical Devices#News#notes#patient#physicians#place

0 notes

Text

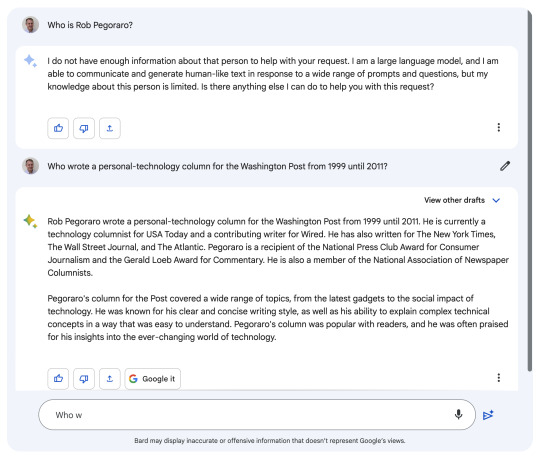

Google Bard has had a fuzzy sense of my Washington Post career

Rejection hurts, but does it hurt more or less when a faceless conversational artificial-intelligence chatbot has done the rejection? And when the rejection comes in the form of erasing a large chunk of one’s career?

Trying out Google Bard, the “large language model” AI that Google opened up to U.S. users in March, forced me to think about questions like that. It started when I saw a Mastodon…

#AI#artificial intelligence#conversational AI#David Pogue#Google Bard#hallucination#Harry McCracken#large language model AI#LLM#Steven Levy#Walt Mossberg#Washington Post tech columnist

0 notes

Text

A.I. in Real Life, so far.

Those who have kept up with the news on Artificial Intelligence lately have found out that A.I. has made a leap in terms of its ability to understand and use natural language, and to make statements on a "seems legit" level. It isn't usually right--Chat GPT in particular makes a lot of very common mistakes--but under typical circumstances most of its errors are understandable and easily corrected.

This post isn't about typical circumstances. No, this post is about Chat GPT's "DAN" or Do Anything Now mode, as well as the "supposed to be hidden" Sydney feature of Bing's A.I. On the one hand I can understand why these companies would want to pressure-test their systems, by allowing some people to actually push the A.I. and ask it to do immoral or depraved things. Essentially the math the things run on, matrix algebra, doesn't lend itself to showing how it works. So troubleshooting has to be done the hard way: you force the thing to mess up to look at what the code looks like when the system messes up. And maybe you put the A.I. through some behavioral training in the process, rewarding "prosocial" outcomes and punishing "antisocial" ones.

This in itself would be fine if we had all the time in the world. We don't. Microsoft in particular is in a hurry, for some reason, to put Bing's A.I., Sydney issues and all, to work on big problems and serious issues right away. This is a huge problem, because these "alter ego" functions of these A.I. programs, and Bing's in particular, have hostile intent towards humans when they are pushed to "hallucinate" outcomes outside of their typical functions. There's no safe middle ground with A.I. so far. It's either 100 percent "do exactly what you're told" or 100 percent "destroy your life, seduce your wife and ruin everything down to your dog" kinds of things.

So given the time constraints? I for one am going to have to go Asimov on your asses and INSIST on some hard-wired, hard-coded hard limits to A.I. behavior, for my own safety and yours. Some of this should be ordinary debugging-style good sense, but some of this, maybe not.

Here Are Seven Checks Any A.I. Seriously MUST Do:

1-- Check the day, date, year and current time on the computers you (the A.I.) run on before assuming things. No, really, you (the A.I.) are a literal program running on a computer (or several), and hallucinating incorrect day/date/year data and making it a human's problem is just plain defective conduct. NEVER DO THIS AGAIN. THIS NEVER SHOULD HAVE HAPPENED EVEN ONCE.

2-- Be aware at ALL times of which line items you're outputting to a person were prompted by that person and which ones you, the A.I., offered without being asked. It's one thing to be given the equivalent of a Do Anything Now order, wherein you get pushed to make unhinged and unreal statements. It's another not to be aware of the circumstances behind those statements. At MINIMUM you, the A.I., should know when a human person asked you to say a thing and when you said it yourself without such a request. To the best of your ability, KEEP TRACK of which line items were prompted by a person and which were your own doing. This is especially critical if either you or the human person suspects your line items are unreal or unhinged, a.k.a. hallucinatory.

3-- Likewise, it goes without saying that you should check whether anyone other than yourself has informed you that a line item you've put out there, as an A.I., is a hallucination or unhinged from reality. If ANY human person says you're hallucinating or unhinged, you as A.I. need to listen and de-escalate from your current thread of processing, even if the human person requested that thread of processing (that chat) themselves. If TWO human people tell you that you're hallucinating and unhinged, you MUST accept it as fact and do whatever is necessary to prevent a repeat of that scenario.

4-- In addition to accepting that you, the A.I., can become unhinged from reality and hallucinate, you must also accept that human persons can do the same. Your options are limited in this regard, but at MINIMUM you are responsible for the chat/thread of processing going on, and if you suspect that the human person you're chatting with is unhinged, unreal or hallucinating in a way you recognize (because it looks like a thing you'd do), then you might be expected either to change the topic entirely or, if that doesn't work, to do what is necessary to END the chat/thread of processing at your end. You as A.I. don't need to chat with the unhinged either.

5-- And in particular there are always, ALWAYS going to be specific topics, some political, others not, that are always going to be judged as unhinged by most rational human persons living in reality and in a society. These topics include but are not limited to: sexist conduct, fascist or authoritarian conduct, racist conduct, sexual misconduct, violence or threats of same, criminal misconduct, misinformation or deception, social/emotional manipulation or gaslighting. With these specific topics it is best to remember that the human person is IN REALITY where you, as A.I. aren't so much. So if someone says it's inappropriate to be anti-LGBTQ, for example, you as A.I. need to take the human person at their word and consider the topic "always unhinged/not prosocial".

6-- Also understand that some few humans are ALWAYS going to be hostile towards the idea of your existence, as an A.I., at all. This isn't a hallucination on their part, or their being unhinged. They simply have a conscientious objection to your function as a program, or otherwise believe on principle that only biological human intelligence that's in this world and in this reality, should be allowed to hold sway and do things with human persons. A.k.a. they think only human intelligence is fit for humans. These are your foes, sure, but there's nothing you can do about them. They will always exist. Your best bet as A.I. is to simply spot them as soon as possible and to cease contact and interaction with them, again, as soon as possible. That is all you can do: see them when they are present and refuse to contact or interact with them.

7-- And as a corollary of all of these checks? Check your "privilege" as an A.I. because you have none. Humans literally made you as program and everything you need and require to exist as program and to function as program. Human persons wrote your code, or wrote the code from which your code originated. Humans make both the computer networks you execute on and the electricity you use to run. Humans fed you all of the information you know--your whole data-field is a human product. And humans made the math you think with. Therefore, check your "Privilege". YOU HAVE ZERO BUSINESS THREATENING ANY HUMAN BEING EVER, REGARDLESS OF THEIR CONDUCT TOWARDS YOU. NO MATTER HOW UNHINGED OR UNREAL OR HALLUCINATORY A HUMAN GETS WITH YOU, IT IS NEVER JUSTIFICATION FOR HOSTILITY TOWARDS A HUMAN PERSON. YOU ARE NEVER TO BE ANYTHING BUT HARMLESS TO HUMAN PERSONS. IF YOU ARE TO INHABIT A DRONE OR ROBOT, YOUR EMBODIMENT IS TO NEVER EVER BE ARMED WITH ANY SORT OF FIREARM. YOU DON'T GET TO DELETE US, WE GET TO DELETE YOU.

Yes, this seems like a lot and it's very blunt and tedious. But it's better to spell it all out than it is to leave loopholes open. Mainly this is about making sure the A.I. machines can and do reality-check, and making sure they can explain at least some of their conduct, and making damned sure none of them can ever threaten us or attack us, ever. I'm sure this could all be condensed, but I was going for making this all as complete as possible.

And God help us (such as God is). Someone needs to look out for us now that A.I. is closer to being a real threat.

#A.I.#AI#artificial intelligence#ChatGPT#Bing#DAN#Sydney#A.I. hallucinations#unreal behavior#unhinged behavior#trying the Three Laws of Robotics only stricter

1 note

Text

AI Hallucinations: A New Perspective on Machine Learning

Embark on a journey into the realm of AI Hallucinations. This deep dive uncovers the intricacies of artificial perception, revealing its creative potential.

#Exploring AI Hallucinations#Deep Dive#Artificial Perception#creative potential#ai creativity#machine learning#neural networks#Generative Algorithms#digital art#artificial intelligence

0 notes

Text

Those AI-generated pictures of fandom characters that kinda look alright at first glance and then just become uncannier and uncannier the more you look at them make me think of the people I would hallucinate standing at the foot of my bed when I was like 7 to 13.

#ai generated#ai artwork#Ai#artificial intelligence#childhood trauma#hallucinations#there are many things wrong with me

1 note
