#ObjectDetection

Text

Whitepaper: 7 Usecases for Touchscreen Object Recognition

Object recognition on large-scale touchscreens takes the familiar principle of barcode scanning at checkout systems to a new level: objects equipped with a special marker chip or printed code can be detected and processed by multi-touch displays in real time.

In this article:

Instant information

Personalization

Product Comparison

Special access

Save & transfer data

Natural user interface

Games & effects

Read the full article here:

Whitepaper: 7 Usecases for Touchscreen Object Recognition

Find more information here:

Interactive Signage Touchscreen Solutions XXL (eyefactive GmbH)

Touchscreen Software App Platform

Touchscreen Object Recognition

Smart Retail Signage Solutions


Text

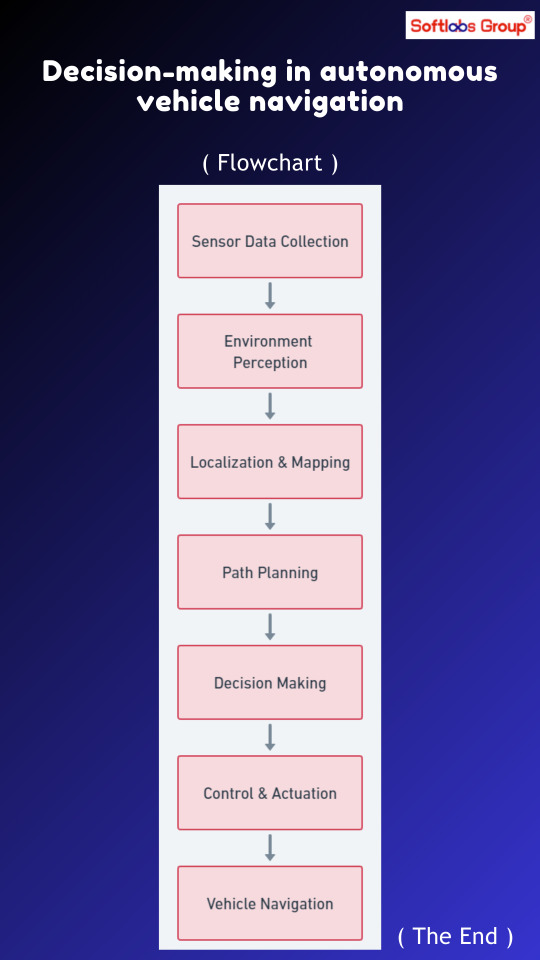

Explore the decision-making process in autonomous vehicle navigation with our informative flowchart. Follow steps including perception, localization, mapping, path planning, and control. Simplify the complex process of ensuring safe and efficient navigation for autonomous vehicles. Perfect for engineers, researchers, and enthusiasts in autonomous driving technology. Stay informed with Softlabs Group for more insights into the future of transportation!


Text

Exploring the Canvas of Wonders! Discover the magic of object detection. (Note: This is a test on stylized AI Animation using the pretrained model)


Text

Image Annotation For Person Detection

Introducing cutting-edge image annotation technology for precise person detection. Keep a watchful eye on your surroundings with unparalleled accuracy and efficiency. Our system employs state-of-the-art algorithms to identify individuals swiftly and reliably, ensuring maximum security and peace of mind.

Experience the power of advanced person detection today and elevate your surveillance capabilities to new heights! Contact us now for a personalized demo and take the first step towards a safer tomorrow.

#personannotation #persondetection #imageannotation #boundingbox #objectdetection #machinelearning #computervision #wisepl #annotationservice #AI


Text

How to Annotate Images in 3 Easy Steps for Object Detection

Annotating images for object detection involves organizing the dataset, defining class labels, drawing bounding boxes around objects, and assigning class labels to each box for accurate model training and inference.

That is how images are annotated for object detection. Curious to learn more? Explore our article for insights and guidance on annotating images for object detection in three simple steps.
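As a rough illustration of the steps described above, here is a minimal Python sketch of one annotation record: a fixed list of class labels, a bounding box in pixel coordinates, and a label assigned to that box. The class list, the `BoxAnnotation` type, and the helper names are assumptions made for this example, not part of any particular annotation tool. The `to_yolo` helper converts the box to the normalized center/width/height layout used by YOLO-style label files.

```python
from dataclasses import dataclass

# Hypothetical class list; a real project defines its own labels.
CLASS_LABELS = ["person", "car", "bicycle"]


@dataclass
class BoxAnnotation:
    """One labeled object: a class label plus pixel corner coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def validate(ann, image_width, image_height):
    """Return a list of problems found in one annotation (empty if valid)."""
    problems = []
    if ann.label not in CLASS_LABELS:
        problems.append(f"unknown label: {ann.label}")
    if not (0 <= ann.x_min < ann.x_max <= image_width):
        problems.append("x coordinates out of order or outside the image")
    if not (0 <= ann.y_min < ann.y_max <= image_height):
        problems.append("y coordinates out of order or outside the image")
    return problems


def to_yolo(ann, image_width, image_height):
    """Convert to (class_id, x_center, y_center, width, height), normalized to 0..1."""
    class_id = CLASS_LABELS.index(ann.label)
    x_center = (ann.x_min + ann.x_max) / 2 / image_width
    y_center = (ann.y_min + ann.y_max) / 2 / image_height
    width = (ann.x_max - ann.x_min) / image_width
    height = (ann.y_max - ann.y_min) / image_height
    return (class_id, x_center, y_center, width, height)


annotation = BoxAnnotation("car", 100, 50, 300, 150)
print(validate(annotation, 400, 200))
print(to_yolo(annotation, 400, 200))
```

Checking every record before training is a cheap way to catch swapped corners or misspelled labels early.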


Text

Detect construction workers, identify vehicles, and ensure PPE compliance with precision. Enhance workplace safety, streamline operations, and boost productivity. Click here https://www.opticvyu.com/products/custom-object-detection-image-processing-ai to discover more about OpticVyu's AI solution.


Text

Data Annotation Types to execute Autonomous Driving

Autonomous vehicles are still working towards full autonomy. A fully functioning and safe autonomous vehicle must be competent in a wide range of machine learning tasks before it can be trusted to drive on its own. From processing visual data in real time to safely coordinating with other vehicles, AI is essential. Self-driving cars could not do any of this without a huge volume of different types of training data, created and tagged for specific purposes.

Because of their many sensors and cameras, advanced automobiles generate a tremendous amount of data. These datasets cannot be used effectively unless they are correctly labeled for subsequent processing. Labeling can range from simple 2D bounding boxes all the way to more complex annotation methods, such as semantic segmentation.

Several image annotation types can be used for autonomous vehicles, including polygons, bounding boxes, 3D cuboids, semantic segmentation, lines, and splines. These annotation methods help autonomous driving algorithms achieve greater accuracy. Which method suits you best depends on the requirements of your project.

Types of Annotation for Autonomous Driving

Below we discuss the types of annotation required to make a vehicle autonomous.

2D Bounding Box Annotation

The bounding box annotation technique maps objects in an image or video to build datasets that enable ML models to identify and localize objects. A 2D box is rectangular and, of all the annotation types, it is the simplest and the cheapest. It is preferred in less complex cases and when the budget is limited. It is not the most accurate type of annotation, but it saves a lot of labeling time. Commonly labeled objects include vehicles, pedestrians, obstacles, road signs, signal lights, buildings, and parking zones.
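One common way to compare two 2D boxes, for example an annotator's box against a reviewer's reference box, is intersection over union (IoU). The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples in pixels. That layout and the function names are choices made for this example.

```python
def box_area(box):
    """Area of an (x_min, y_min, x_max, y_max) box; empty boxes have area 0."""
    x_min, y_min, x_max, y_max = box
    return max(0.0, x_max - x_min) * max(0.0, y_max - y_min)


def iou(a, b):
    """Intersection over union of two axis-aligned boxes, in the range 0..1."""
    # The overlap rectangle; it is empty when the boxes do not intersect.
    overlap = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    intersection = box_area(overlap)
    union = box_area(a) + box_area(b) - intersection
    return intersection / union if union > 0 else 0.0


annotator_box = (0, 0, 10, 10)
reference_box = (5, 5, 15, 15)
print(round(iou(annotator_box, reference_box), 3))
```

A value near 1 means the two boxes nearly coincide; a value of 0 means they do not overlap at all.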

3D Cuboid Annotation

As with the 2D bounding boxes discussed above, the annotator draws boxes around the objects in an image. In this type of annotation the boxes are, as the name implies, three-dimensional, so objects are annotated in depth, width, and length (X, Y, and Z axes). After forming a box around the object, the annotator places an anchor point at each edge of the object. If an edge is missing or blocked by another object, the annotator estimates where it may be, based on the characteristics of the object and the angle of the image. This estimate plays a vital role in judging the object's distance from the car based on depth, and in detecting the object's volume and position.
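To make the geometry concrete, here is a small sketch of a cuboid stored as a center point, three dimensions, and a heading angle. This is one common parameterization, assumed here for illustration. From it the eight corner points, the volume, and the distance from the sensor origin can be derived. The `Cuboid` type and field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """A 3D box in the vehicle frame (metres), rotated about the vertical axis."""
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float  # heading in radians


def corners(c):
    """Return the 8 corner points of the cuboid as (x, y, z) tuples."""
    cos_y, sin_y = math.cos(c.yaw), math.sin(c.yaw)
    points = []
    for dx in (-c.length / 2, c.length / 2):
        for dy in (-c.width / 2, c.width / 2):
            for dz in (-c.height / 2, c.height / 2):
                # Rotate the horizontal offset by the yaw, then shift to the center.
                x = c.cx + dx * cos_y - dy * sin_y
                y = c.cy + dx * sin_y + dy * cos_y
                points.append((x, y, c.cz + dz))
    return points


def volume(c):
    return c.length * c.width * c.height


def distance_from_origin(c):
    """Straight-line distance from the sensor origin to the cuboid center."""
    return math.sqrt(c.cx ** 2 + c.cy ** 2 + c.cz ** 2)


car = Cuboid(cx=3.0, cy=4.0, cz=0.0, length=4.0, width=2.0, height=1.5, yaw=0.0)
print(len(corners(car)), volume(car), distance_from_origin(car))
```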

Polygon Annotation

It can be challenging to fit bounding boxes around some items in an image because of their shapes and sizes. In images and videos with irregularly shaped objects, polygons provide precise object detection and localization. This precision makes polygon annotation one of the most popular techniques, but the accuracy comes at a price: it takes longer than other approaches. Irregular shapes such as people, animals, and bicycles need more than a 2D or 3D bounding box. Because polygonal annotation lets the annotator mark additional details such as the sides of a road, a sidewalk, and obstructions, it can be a valuable tool for algorithms used in autonomous vehicles.
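The precision gain of a polygon over a box can be put in numbers. The sketch below computes a polygon's area with the shoelace formula and compares it with the area of the smallest axis-aligned box around the same points. The vertex list is made-up example data.

```python
def polygon_area(points):
    """Area of a simple polygon given as a list of (x, y) vertices (shoelace formula)."""
    twice_area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2


def enclosing_box(points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the vertices."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


outline = [(0, 0), (4, 0), (0, 3)]  # hypothetical triangular object
x_min, y_min, x_max, y_max = enclosing_box(outline)
box_area = (x_max - x_min) * (y_max - y_min)
print(polygon_area(outline), box_area, polygon_area(outline) / box_area)
```

Here the box covers twice the area of the object it encloses, which is the kind of background a polygon annotation leaves out.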

Semantic Segmentation

So far we have looked at outlining objects in images; semantic segmentation is more precise than those methods because it assigns a class to every pixel in an image. For a self-driving car to function well in a real-world setting, it must comprehend its surroundings. The method divides the scene into classes such as bicycles, people, cars, walkways, and traffic signals. Typically, the annotator works from a predefined list of these classes. In short, semantic segmentation locates, detects, and classifies items for computer vision. This form of annotation demands a high degree of accuracy: the annotation must be pixel-perfect.
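A segmentation label can be pictured as a grid with one class ID per pixel. The toy sketch below uses a hypothetical class list and a tiny 3x4 mask invented for this example. It counts pixels per class and measures how many pixels of a second mask agree with the first.

```python
from collections import Counter

# Hypothetical class list; real projects define their own.
CLASS_NAMES = {0: "road", 1: "sidewalk", 2: "person", 3: "car"}

# A toy 3x4 "image": every pixel carries exactly one class ID.
mask = [
    [0, 0, 0, 1],
    [0, 3, 3, 1],
    [0, 3, 3, 2],
]


def class_pixel_counts(mask):
    """Count how many pixels belong to each class name."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in counts.items()}


def pixel_accuracy(predicted, truth):
    """Fraction of pixels where two masks of equal size agree."""
    total = sum(len(row) for row in truth)
    matches = sum(
        p == t
        for pred_row, truth_row in zip(predicted, truth)
        for p, t in zip(pred_row, truth_row)
    )
    return matches / total


print(class_pixel_counts(mask))
```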

Lines and Splines Annotation

In addition to object recognition, models need to be trained on boundaries and lanes. To assist in training the model, annotators draw lines in the image along the lanes and edges. These lines allow the car to recognize lanes, which is essential for autonomous driving because it lets the car move through traffic with ease while maintaining lane discipline and preventing accidents.
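A lane annotation is often stored as a handful of clicked control points. One way to turn those into a smooth curve is a uniform Catmull-Rom spline, which passes through every control point. This choice of spline, the function names, and the sample points are assumptions for illustration. Annotation tools may use other curve types.

```python
def catmull_rom_point(p0, p1, p2, p3, t):
    """Point on the uniform Catmull-Rom segment between p1 and p2, for t in 0..1."""
    def coord(a, b, c, d):
        return 0.5 * (
            2 * b
            + (-a + c) * t
            + (2 * a - 5 * b + 4 * c - d) * t * t
            + (-a + 3 * b - 3 * c + d) * t ** 3
        )
    return (coord(p0[0], p1[0], p2[0], p3[0]), coord(p0[1], p1[1], p2[1], p3[1]))


def lane_curve(control_points, samples_per_segment=4):
    """Densify annotated lane points into a smooth curve through all of them."""
    # Repeat the end points so the first and last segments have neighbours.
    pts = [control_points[0]] + list(control_points) + [control_points[-1]]
    curve = []
    for i in range(1, len(pts) - 2):
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            curve.append(catmull_rom_point(pts[i - 1], pts[i], pts[i + 1], pts[i + 2], t))
    curve.append(tuple(control_points[-1]))
    return curve


clicked = [(0.0, 0.0), (1.0, 2.0), (2.0, 3.0), (3.0, 3.5)]  # hypothetical lane points
print(len(lane_curve(clicked)))
```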

Video Annotation

The purpose of video annotation is to identify and track objects over a sequence of frames. Most video annotations are used to train predictive algorithms for automated driving. Videos are divided into thousands of individual images, and the target object is annotated in each frame. In complicated situations, single-frame annotation is always employed because it can ensure quality. In basic circumstances, streamed frame annotation is always employed. Machine learning-based object tracking algorithms already assist with video annotation: the annotator labels the objects in the initial frame, and the algorithm tracks them through the following frames. The annotator needs to correct the annotation only when the algorithm does not work properly. As labor costs decrease, clients save more money.
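The text above describes machine-learning trackers that carry annotations from one frame to the next. As a much simpler stand-in that shows the same workflow, the sketch below linearly interpolates bounding boxes between manually annotated keyframes. It is not a learned tracker, and the data layout is an assumption for this example.

```python
def interpolate_boxes(keyframes):
    """Fill in boxes for every frame between annotated keyframes.

    keyframes maps a frame index to an (x_min, y_min, x_max, y_max) box.
    Returns a dict covering every frame from the first to the last keyframe.
    """
    frames = sorted(keyframes)
    result = {}
    for start, end in zip(frames, frames[1:]):
        box_a, box_b = keyframes[start], keyframes[end]
        for frame in range(start, end):
            t = (frame - start) / (end - start)
            # Move each coordinate a fraction t of the way from box_a to box_b.
            result[frame] = tuple(a + t * (b - a) for a, b in zip(box_a, box_b))
    result[frames[-1]] = tuple(keyframes[frames[-1]])
    return result


manual = {0: (0, 0, 10, 10), 4: (8, 4, 18, 14)}  # two hand-labeled frames
for frame, box in interpolate_boxes(manual).items():
    print(frame, box)
```

The annotator then only needs to correct the frames where the interpolated box drifts off the object, which mirrors the review step described above.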

Use Cases of Autonomous Driving

The main goal of data annotation in automotive is to classify and segment objects in an image or video. Annotations help achieve precision, which matters in automotive because it is a mission-critical industry, and accuracy in turn determines the user experience. This process is essential because of the use cases it enables:

Object and vehicle detection: This crucial function allows an autonomous vehicle to identify obstacles and other vehicles and navigate around them. Various types of annotation are required to train the object detection model of autonomous driving so that it can detect persons, vehicles, and other obstacles coming in its way.

Environmental perception: Annotators use semantic segmentation techniques to create training data that labels every pixel in a video frame. This vital context allows the vehicle to understand its surroundings in more detail. To drive safely, the vehicle needs a complete understanding of its location and everything around it.

Lane detection: Autonomous vehicles need to be able to recognize road lanes so that they can stay inside of them. This is very important to avoid any accidents. Annotators support this capability by locating road markings in video frames.

Understanding signage: The vehicle must be able to recognize all the signs and signals on the road to predict when and where to stop, turn, and so on. Autonomous vehicles should automatically detect road signs and respond to them accordingly. Annotation services can enable this use case with careful video labeling.

Conclusion

Although it takes a lot of effort, delivering Ground Truth quality annotation for self-driving cars is crucial to the project’s overall success. Get the best solutions by using precise annotations created by TagX to train and validate your algorithms.

We are the data annotation experts for autonomous driving. We can help with any use case for your automated driving function, whether you’re validating or training your autonomous driving stack. Get in contact with our specialists to learn more about our automobile and data annotation services as well as our AI/ML knowledge.

#ObjectDetection #LaneMarkingAnnotation #SemanticSegmentation #3DAnnotation #artificial intelligence #machinelearning #dataannotation


Text

youtube

Step into the captivating world of computer vision as we embark on a transformative journey to explore the cutting-edge realm of intelligent systems. Join us for an immersive talk that delves deep into the art and science of building advanced technologies through computer vision.

#VisualIntelligence #ComputerVision #AI #MachineLearning #DeepLearning #ImageProcessing #ImageRecognition #ImageAnalysis #ObjectDetection #ComputerVisionAI #Youtube


Text

From enhancing road safety to revolutionizing retail and healthcare, object detection technology is reshaping industries.

Explore the diverse domains benefiting from this groundbreaking innovation.

#ObjectDetection #ComputerVision #Innovation #AI #MachineLearning #TechTrends #DigitalTransformation #VisualRecognition #Nexgits #Infographic


Text

Object recognition is now also possible on display sizes of 86 and 98 inches. The technology newly developed by eyefactive works on touchscreens with infrared (IR) frames and matching marker chips.

Until now, object recognition on touchscreens has mainly been implemented on capacitive displays. With eyefactive's newly developed technology, objects can now also be recognized on touchscreens with infrared (IR) frames, a world first.

Objects are recognized on multi-touch displays by means of so-called marker chips. eyefactive has now adapted these specifically for recognition on touchscreens with IR technology. The marker chips are passive and therefore need no battery for continuous operation.

#objectrecognition #objectdetection #touchsoftware #touchapps #multitouchsoftware #touchscreensoftware #touchscreenapps #touchscreens #touchtables #touchterminal #touchmonitor #videowall


Text

Enhance Safety and Performance: Introducing the KUS3100 Ultrasonic Proximity Sensor

In today's fast-paced and technology-driven world, ensuring safety and optimizing performance are paramount for businesses across various industries. To address these crucial needs, we are excited to introduce the KUS3100 Ultrasonic Proximity Sensor from KC Sensor. This cutting-edge sensor offers advanced features and capabilities to revolutionize proximity sensing applications, enhancing safety measures and boosting overall operational performance. Let's delve into the exceptional features of the KUS3100 and explore how it can benefit your business.

Unmatched Precision and Reliability:

The KUS3100 Ultrasonic Proximity Sensor is engineered with utmost precision and reliability. It utilizes ultrasonic technology to accurately detect and measure the distance between objects, providing unparalleled accuracy even in challenging environmental conditions. Whether it's a fast-paced manufacturing line or a busy warehouse, the KUS3100 ensures precise proximity detection, minimizing the risk of collisions and accidents.
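For readers unfamiliar with ultrasonic ranging, the general principle is time of flight: the sensor emits a pulse, times the echo, and halves the round-trip distance. The sketch below shows that arithmetic only. It is not taken from the KUS3100 datasheet or any KC Sensor API, and the function names are hypothetical. The speed of sound uses the usual linear approximation for dry air.

```python
def speed_of_sound_m_s(temperature_c):
    """Approximate speed of sound in dry air at the given temperature (deg C)."""
    return 331.3 + 0.606 * temperature_c


def echo_distance_m(round_trip_s, temperature_c=20.0):
    """Distance to the target from the round-trip time of an ultrasonic pulse."""
    # The pulse travels to the target and back, so halve the path length.
    return speed_of_sound_m_s(temperature_c) * round_trip_s / 2


# A 10 ms round trip at 20 deg C corresponds to a target about 1.7 m away.
print(round(echo_distance_m(0.010), 3))
```

Because the speed of sound changes with temperature, the same echo time maps to a slightly different distance in a cold warehouse than in a warm one.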

Adaptable and Versatile:

The KUS3100 sensor is designed to be highly adaptable and versatile, making it suitable for a wide range of applications. It can be seamlessly integrated into various industrial processes, including automated machinery, robotics, and security systems. With its adjustable detection range and customizable settings, the KUS3100 can cater to diverse operational requirements, offering flexibility and ease of use.

Intelligent and User-Friendly Features:

Equipped with intelligent features, the KUS3100 Ultrasonic Proximity Sensor simplifies installation and enhances user experience. It boasts a user-friendly interface that allows for effortless configuration and calibration, saving valuable time and resources. Additionally, the sensor incorporates intelligent self-diagnostic capabilities, providing real-time feedback and alerts to ensure optimal performance and reduce downtime.

Durability and Longevity:

When it comes to industrial-grade equipment, durability is crucial. The KUS3100 is built to withstand the harshest working conditions, thanks to its rugged construction and high-quality materials. It offers exceptional resistance to dust, moisture, vibrations, and temperature variations, ensuring long-lasting performance and minimal maintenance requirements. With the KUS3100, you can rely on a sensor that can endure the demands of your industry.

Seamless Integration and Connectivity:

The KUS3100 Ultrasonic Proximity Sensor is designed with seamless integration in mind. It supports various output options, including analog and digital interfaces, allowing for easy connectivity to your existing systems and equipment. This ensures a hassle-free integration process, enabling you to quickly incorporate the sensor into your workflow without disruptions or extensive modifications.

Conclusion:

The KUS3100 Ultrasonic Proximity Sensor from KC Sensor is a game-changer for businesses seeking to enhance safety and performance in their operations. With its unmatched precision, adaptability, intelligent features, durability, and seamless integration capabilities, the KUS3100 provides a reliable solution for proximity sensing needs. By investing in this cutting-edge sensor, you can optimize your workflow, prevent accidents, and improve overall efficiency. Embrace the future of proximity sensing with the KUS3100. Visit KC Sensor to learn more and explore the endless possibilities it offers for your business.

#UltrasonicProximitySensor #SafetyandPerformance #IndustrialSensors #UltrasonicTechnology #ProximityDetection #DistanceMeasurement #ObjectDetection


Text

Object recognition is now also possible on display sizes of 86 and 98 inches. The new technology developed by eyefactive works on touch screens with infrared frames (IR) and corresponding marker chips.

Until now, object recognition on touch screens has mainly been implemented on capacitive displays. With eyefactive's newly developed technology, objects can now also be recognized on touch screens with infrared frames (IR), a world first.

Objects are recognized on multi-touch displays by means of so-called marker chips. eyefactive has now adapted these specifically for recognition on touch screens with IR technology. The marker chips are passive and therefore need no battery for continuous operation.

#objectrecognition #objectdetection #touchsoftware #touchapps #multitouchsoftware #touchscreensoftware #touchscreenapps #touchscreens #touchtables #touchterminal #touchmonitor #videowall


Text

Walkway Segmentation Dataset for Machine Learning

New Walkway Segmentation Dataset Enables Accurate Detection of Pedestrian Pathways for Improved Navigation and Safety

Visit:

https://gts.ai/

#machinelearning #computervision #deeplearning #segmentation #imageprocessing #objectdetection #artificialintelligence #datascience #autonomousvehicles #navigation #safety #pedestriandetection #dataset


Text

Arguably, the most crucial task of Deep Learning-based Multiple Object Tracking (MOT) is not to identify an object but to re-identify it after occlusion. There are a plethora of trackers available to use, but not all of them have a good re-identification pipeline. In this blog post, we will focus on one such tracker, FairMOT, that revolutionized the joint optimization of detection and re-identification tasks in tracking.
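To give a feel for what re-identification after occlusion involves, here is a generic sketch. Each lost track keeps an appearance embedding, and a new detection is matched to the most similar one by cosine similarity. This is not FairMOT's actual pipeline, which learns the embeddings jointly with detection. The function names, the threshold, and the tiny three-dimensional vectors are assumptions for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def reidentify(lost_tracks, detection_embedding, threshold=0.7):
    """Return the ID of the most similar lost track, or None if none is close enough.

    lost_tracks maps a track ID to the last appearance embedding seen for it.
    """
    best_id, best_similarity = None, threshold
    for track_id, embedding in lost_tracks.items():
        similarity = cosine_similarity(embedding, detection_embedding)
        if similarity >= best_similarity:
            best_id, best_similarity = track_id, similarity
    return best_id


lost = {7: [1.0, 0.0, 0.0], 9: [0.0, 1.0, 0.0]}  # hypothetical embeddings
print(reidentify(lost, [0.9, 0.1, 0.0]))
print(reidentify(lost, [0.0, 0.0, 1.0]))
```

When no lost track is similar enough, the detection would start a new track instead of reusing an old ID.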


Text

Data Labeling Services For Airlines & Aviation Industry

Optimize your flight data analysis with our cutting-edge data labeling services. We specialize in accurately labeling flight data, ensuring precision and reliability for your analytics needs. Enhance safety protocols, improve predictive maintenance, and streamline operations with our meticulous data labeling solutions. Contact us today to elevate your flight data analysis to new heights.

#objectdetection #datalabeling #aviation #flights #airportdata #airlines #annotationservices #machinelearning #computervision #wisepl #ai


Text

AI-Powered People Tracking Solutions.

This blog will give you a clear vision of the areas where AI can be used, the process of tracking and characterization, and how its characteristics are changing the world.
