
Replacing physical buttons and controls with touchscreens also means removing accessibility features. Physical buttons can be textured or labeled in Braille, can be located by touch, and don't need to be pressed with a bare finger. Touchscreens usually demand precise taps and hand-eye coordination for the same task.

Many point-of-sale machines are now essentially a smartphone with a card reader attached, with the entire interface on the touchscreen. The control layout can change at a moment's notice, and there are no physical boundaries between buttons. On a keypad-style machine, the buttons are always in the same place and can be located by touch, especially since the middle button has a raised ridge on it.

Physical buttons can also be located by touch without activating them, which enables a "locate, then press" style of interaction. That isn't possible on touchscreens, where even a light touch registers as a press and buttons must be found visually rather than by touch.

When elevator or door controls are replaced by touch screens, will existing accessibility features be preserved, or will some people no longer be able to use those controls?

Who is allowed to control the physical world, and who is making that decision?

#i get why this is happening; it's way cheaper to buy an off-the-shelf touch kiosk or tablet and run your ui on a web server#rather than integrating with custom hardware and physical inputs#but that should not mean just removing accessibility features#and I know that digital devices can help a lot with accessibility: e.g. screen readers#but I wouldn't rely on any of those being installed on someone else's device


How Does a Retail Cash Register Help Your Restaurant?

A retail cash register keeps cash in a drawer at its base. After you enter the price of each item purchased, it automatically prints a receipt and records each cash transaction entered at the point of sale.

It is a powerful tool that supports the sales process in any organization. The receipt it gives the customer lists the details of the purchase, and because it has a drawer, it is well suited to keeping cash secure. Cash registers come in many varieties, but they all generally serve the same function.

What is a POS system?

POS software can perform all the functions of a restaurant cash register and much more. Its upgrades include the ability to run on a range of devices and form factors, advanced data management, and integrations. A POS system sits at the heart of the modern restaurant's technical requirements, and standalone cash registers are no longer adequate.

What mainly distinguishes a POS system from a restaurant cash register is its ability to gather large data sets and integrate the technologies used across restaurant management operations. Restaurant POS systems fall into one of two hardware categories: stationary or mobile. Most restaurants use stationary POS systems, which typically combine the following hardware:

A touchscreen order-entry terminal

A computer that runs the POS software

A physical server that stores all the data

Credit and debit card readers and other payment equipment

Benefits of POS Systems

Creating detailed consumer profiles

You should be able to gather, manage, and track client information with the aid of a POS register system. With access to this information, store employees can better understand the clients they serve, which can encourage repeat business and improve your loyalty and retention marketing campaigns.

Your POS system figures out the whole cost

Your POS system computes the final price once each item is added to the customer's cart, including any applicable sales tax, and then changes your inventory count to reflect the products that have been sold. Store employees can now also apply discounts or promotional codes.
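To make the checkout steps above concrete, here is a minimal Python sketch of what a POS does when a sale is rung up: total the cart, apply a discount and sales tax, then decrement inventory. The function and item names are hypothetical rather than any vendor's API, and the sketch assumes a percentage discount applied before tax; real tax rules vary by jurisdiction.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def checkout(cart, prices, inventory, tax_rate, discount=Decimal("0")):
    """Price a cart, apply a percentage discount and sales tax, then update stock.

    cart: {sku: quantity}; prices: {sku: unit price}; inventory: {sku: units on hand}.
    tax_rate and discount are fractions, e.g. Decimal("0.08") for 8%.
    """
    # Check stock before changing anything, so a failed sale leaves inventory untouched.
    for sku, qty in cart.items():
        if inventory.get(sku, 0) < qty:
            raise ValueError(f"not enough stock for {sku}")

    subtotal = sum((prices[sku] * qty for sku, qty in cart.items()), Decimal("0"))
    # Assumption: the discount is applied before tax; rules vary by jurisdiction.
    discounted = subtotal * (1 - discount)
    total = (discounted * (1 + tax_rate)).quantize(CENT, rounding=ROUND_HALF_UP)

    # Record the sale against inventory.
    for sku, qty in cart.items():
        inventory[sku] -= qty
    return total


prices = {"burger": Decimal("8.50"), "fries": Decimal("3.00")}
inventory = {"burger": 10, "fries": 5}
total = checkout({"burger": 2, "fries": 1}, prices, inventory,
                 tax_rate=Decimal("0.08"), discount=Decimal("0.10"))
print(total, inventory)  # 19.44 {'burger': 8, 'fries': 4}
```

Using `Decimal` instead of `float` keeps currency arithmetic free of binary rounding surprises, and rounding happens once, on the final total.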

Gathering and real-time visualization of sales data

Your reporting and analytics tools should receive data from every transaction that passes through your POS system, and your POS system should make metrics easy to access. You should be able to get a complete view of your brand's sales and filter it by sales channel, rather than viewing ecommerce and in-store sales data in separate platforms.
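As a rough illustration of "one view, filterable by channel", the sketch below keeps every sale in a single list tagged with its channel and totals it per channel on demand. The `Sale` record and the channel labels are hypothetical; a real POS would pull these from its own database or reporting API.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Sale:
    channel: str      # e.g. "store" or "online" (illustrative labels)
    amount: Decimal


def sales_by_channel(sales):
    """Total every transaction per channel from one combined list of sales."""
    totals = defaultdict(Decimal)  # Decimal() starts each channel at 0
    for sale in sales:
        totals[sale.channel] += sale.amount
    return dict(totals)


def total_sales(sales, channel=None):
    """Overall total, or the total for a single channel if one is given."""
    return sum((s.amount for s in sales if channel is None or s.channel == channel),
               Decimal("0"))


sales = [Sale("store", Decimal("12.50")), Sale("online", Decimal("30.00")),
         Sale("store", Decimal("7.50"))]
print(sales_by_channel(sales))   # per-channel totals
print(total_sales(sales))        # all channels together
```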

Conclusion

Today's customers are starting to demand a variety of services from eateries. They expect to be able to order from your restaurant online, reserve a table online, receive customized offers and invitations, and get faster service than ever. A POS lets you keep up with rising customer demands in ways a retail cash register never can, especially a modern subscription-based POS, whose software receives constant back-end updates.


3DS capture cards are a thing but they're still very underground. Why?

I love my Nintendo 3DS. I am not a corporate shill. I promise.

Seriously though, my 3DS has some really cool games, and it's a shame that streaming/recording footage is such a hassle and limited by so many things. I'd prefer to stream from real hardware rather than an emulator for a variety of reasons, including but not limited to the (current) number of games that Citra can't run without major issues of some kind. So with emulation off the table, what are my options?

Well, hardware capture is expensive right now. Even setting aside *gestures vaguely at everything involving the supply chain from 2020 to 2022*, most places local to the US and UK make you pay out of pocket either for a new system with a capture device already installed or to send yours in and have one built in. That's great for anyone who wants to build them themselves, whether for budgetary reasons or just to start their own business in this landscape. It makes pretty good money! I'm currently eyeing Delfino Customs for some systems, and they make bank!

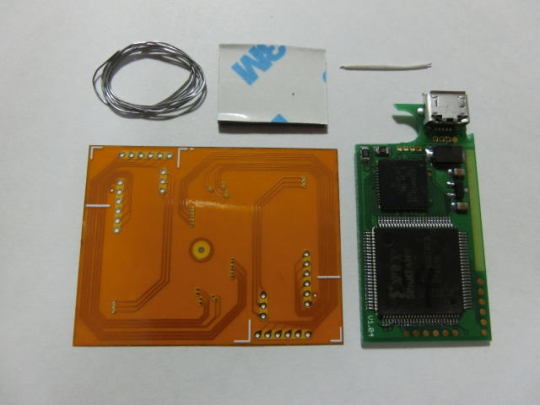

Turns out, the reason they do this is that someone in Japan makes the boards and ships them overseas for up to a whopping $130! The seller goes by Optimize, and their site has an English version. I'm going to be looking specifically at the New 3DS XL capture kit, called New-SPA3.

For the sake of documentation, the big chip on the bottom is a Xilinx Spartan-3A FPGA (XC3S50A) in a VQG100 package (very thin quad flat pack), speed grade -4, with a temperature range of 0 to 85 degrees C. On top is a Cypress EZ-USB FX2LP (CY7C68013A) microcontroller; its package code appears to be -56LTXC.

The rest of the parts on the green board are a standard Micro-USB-B connector and small components like diodes, capacitors, resistors, and maybe a power regulator or two. The orange paper-like sheet is also a circuit board: you cut it in a specific way to connect the board to specific electrical pads on the 3DS's circuit board, and because the orange material is flexible, it can be bent into unusual positions to reach those pads.

It's definitely a complex piece of tech! The FPGA takes in the video signals from the 3DS, processes them a bit, spits them out to the USB chip which sends them to a PC to be displayed. That's oversimplifying it just a teensy bit.

Unfortunately, because an FPGA is used instead of some kind of dedicated circuitry, we can't actually see how the data is taken in and processed. FPGAs (field-programmable gate arrays) are chips whose internal logic is set up by a configuration file called a bitstream, which determines how their logic blocks and wiring are connected, rather than having a fixed circuit. All of this happens inside the chip, instead of in a dedicated chip with a known function or a section of circuit board with known parts and gates. Unless we have a programmed FPGA on hand and can test every single input and record its output (or, even better, get the FPGA source code from Optimize), we cannot know for sure how the FPGA turns its input into output.
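To make the "configured, not wired" idea concrete, here is a small Python model of the basic building block inside Spartan-3-class FPGAs: a 4-input lookup table (LUT), whose behavior is nothing more than 16 configuration bits. The configuration value and function names are hypothetical and have nothing to do with Optimize's actual design. The sketch also shows why "test every input" works for a single LUT (only 16 patterns to try); a full design with many LUTs plus internal state such as flip-flops is much harder to pin down this way, because its output also depends on what it has seen before.

```python
from itertools import product


def inputs_to_index(inputs):
    """Read a tuple of 0/1 inputs as a binary number (first input = most significant bit)."""
    index = 0
    for bit in inputs:
        index = (index << 1) | (bit & 1)
    return index


def make_lut(config_bits, n_inputs=4):
    """Model an n-input lookup table: bit i of config_bits is the output for input pattern i."""
    def lut(*inputs):
        if len(inputs) != n_inputs:
            raise ValueError(f"expected {n_inputs} inputs")
        return (config_bits >> inputs_to_index(inputs)) & 1
    return lut


def probe(lut, n_inputs=4):
    """Rebuild the hidden configuration by trying every input combination."""
    recovered = 0
    for inputs in product((0, 1), repeat=n_inputs):
        recovered |= lut(*inputs) << inputs_to_index(inputs)
    return recovered


secret = 0x6996               # hypothetical configuration: 4-input XOR (parity)
black_box = make_lut(secret)
print(hex(probe(black_box)))  # 0x6996: exhaustive testing recovers the configuration
```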

Luckily, we can make some educated guesses without ever actually owning a board, but rather looking at the software needed to use it!

Enter non-standard, a Japanese maker of stuff. They created the program that interfaces with the 3DS capture cards installed by almost all services. The program in question is called `nonstd 3line Differential Signal viewer`, or just n3DSview, a name that references the creator, the n3DS, and the method used to carry color data from a circuit board to a screen. Basically, differential signaling uses two wires for each bit of data, driving one high and the other low to represent a one, then the reverse for a zero. This is done for signal integrity, and the 3 lines in the name would be pairs carrying red, green, and blue. (Note: this may not be how the 3DS actually transmits video; it's just what the name implies.)
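Here is a minimal Python sketch of why differential signaling helps signal integrity. It uses idealized 0/1 wire levels (real differential links use small voltage swings around a common-mode voltage), and whether the 3DS works this way remains the open question from the note above. Noise picked up along the cable tends to hit both wires of a pair equally, so a receiver that compares the two wires ignores it, while a receiver reading one wire against a fixed threshold does not.

```python
def encode(bits):
    """Drive each bit onto a (plus, minus) wire pair: 1 -> (1, 0), 0 -> (0, 1)."""
    return [(1.0, 0.0) if bit else (0.0, 1.0) for bit in bits]


def add_common_noise(pairs, noise):
    """Interference picked up along the cable hits both wires of a pair equally."""
    return [(plus + n, minus + n) for (plus, minus), n in zip(pairs, noise)]


def decode_differential(pairs):
    """The receiver only looks at which wire is higher, so shared noise cancels out."""
    return [1 if plus > minus else 0 for plus, minus in pairs]


def decode_single_wire(pairs, threshold=0.5):
    """For contrast: read only the plus wire against a fixed threshold."""
    return [1 if plus > threshold else 0 for plus, _ in pairs]


bits = [1, 0, 1, 1, 0]
noisy = add_common_noise(encode(bits), [0.7, -0.4, 2.0, -1.5, 0.9])
print(decode_differential(noisy))  # [1, 0, 1, 1, 0]: data survives
print(decode_single_wire(noisy))   # [1, 0, 1, 0, 1]: noise corrupts two bits
```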

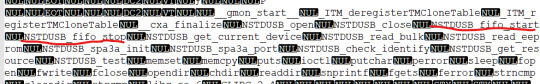

Now, USB is a complicated thing. Keyboards, mice, MIDI devices, and storage devices all follow standard USB device classes, so the operating system already knows how to talk to them. When something needs to be transmitted in a unique way that isn't defined by any standard (so it's non-standard, hah!), someone has to create and define a device driver for it. Luckily the software comes with the necessary driver, so let's take a look.

Yep, just as expected, this software relies on the onboard Cypress USB chip to handle communications. The driver simply gives Windows a name to put to a face-- er, USB connection. The other files that come with the driver are the actual communication binaries Windows uses to understand incoming and outgoing signals. Unfortunately, since those files are bytes and bytes of unintelligible compiled code, we're a bit stuck: we don't actually know how the device communicates. Lucky for us, the Raspberry Pi might have the answer.

Linux, like Windows, may need drivers. A Raspberry Pi (usually) runs Linux and needs to communicate with devices just like Windows does. So the Raspberry Pi version of n3DSview ships with a shared object file (the Linux counterpart of a Windows DLL). It contains code that any program linked against it can use to interface with the USB device, and it gives us great insight into how the USB chip communicates: through its dedicated FIFO hardware!

The image above is from the reference manual for the USB chip (which also describes the FIFO specs), covering all of the chip's functions and features. FIFO is short for "first in, first out": the data that goes into the FIFO first is the first to come out. Think of it like a line at an amusement park. The first to arrive get on the ride first, and a line builds up behind them. Data works the same way, which ensures it gets sent and processed in the correct order.
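To make the amusement-park analogy concrete, here is a small Python model of a fixed-size FIFO with "full" and "empty" flags, the signals a writer (here, presumably the FPGA) and a reader (the USB side) would use to know when to wait. This is a sketch of the general idea under those assumptions, not the FX2LP's actual implementation, and the "row" labels are hypothetical stand-ins for chunks of video data.

```python
class Fifo:
    """Fixed-capacity first-in, first-out buffer with full/empty flags.

    A software model of the general idea only; not the FX2LP's actual design.
    """

    def __init__(self, capacity):
        self._slots = [None] * capacity
        self._head = 0   # index of the oldest item (next to be read)
        self._count = 0  # number of items currently stored

    @property
    def empty(self):
        return self._count == 0

    @property
    def full(self):
        return self._count == len(self._slots)

    def push(self, item):
        """Writer side: add an item at the back of the line."""
        if self.full:
            raise OverflowError("FIFO full: the writer has to wait")
        tail = (self._head + self._count) % len(self._slots)
        self._slots[tail] = item
        self._count += 1

    def pop(self):
        """Reader side: take the item that has waited longest."""
        if self.empty:
            raise IndexError("FIFO empty: the reader has to wait")
        item = self._slots[self._head]
        self._slots[self._head] = None
        self._head = (self._head + 1) % len(self._slots)
        self._count -= 1
        return item


fifo = Fifo(3)
for chunk in ["row 0", "row 1", "row 2"]:
    fifo.push(chunk)
print(fifo.full)               # True
print(fifo.pop(), fifo.pop())  # row 0 row 1
```

The buffer is a ring: the indices wrap around, so slots freed by the reader are reused by the writer without moving any data.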

So we know that the chip is sending processed data in a FIFO manner, but we don't actually know how the signals from the 3DS are processed by the FPGA before they get sent to the USB chip. So we're a bit stuck for now, but we have a lot of great info from just looking at pictures and text. The next step would be to potentially decompile the RPi binary and see how it processes the input from USB, but that'll be for another time.

#nintendo#3ds#capture card#hardware#technology#this is what happens when i have too much time and the ability to google too well#apex barks


What is a combiner box?

The role of the combiner box is to bring the output of several solar strings together. Daniel Sherwood, director of product management at SolarBOS, explained that each string conductor lands on a fuse terminal, and the output of the fused inputs is combined onto a single conductor that connects the box to the inverter. “This is a combiner box at its most basic, but once you have one in your solar project, there are additional features typically integrated into the box,” he said. Disconnect switches, monitoring equipment and remote rapid shutdown devices are examples of additional equipment.
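As a rough illustration of what "fused inputs combined onto a single conductor" means electrically, the sketch below sums parallel string currents into the combined output and picks a string fuse size. The 1.25 × 1.25 multiplier reflects commonly cited NEC 690 practice, and the list of fuse ratings is illustrative; both are assumptions to verify against the applicable code edition and the module and fuse manufacturers' data for a real project.

```python
# Assumption: commonly cited NEC 690 practice takes 125% of module short-circuit
# current as the maximum circuit current, then another 125% for the fuse rating.
FUSE_FACTOR = 1.25 * 1.25
# Illustrative subset of string fuse ratings in amps; check real product data.
FUSE_SIZES_A = [10, 12, 15, 20, 25, 30]


def string_fuse_rating(isc_a, sizes=FUSE_SIZES_A):
    """Smallest listed fuse at or above FUSE_FACTOR times the string's short-circuit current."""
    minimum = isc_a * FUSE_FACTOR
    for size in sorted(sizes):
        if size >= minimum:
            return size
    raise ValueError(f"no listed fuse is rated for {minimum:.1f} A")


def combined_output(string_currents_a, voltage_v):
    """Parallel strings share one voltage; their currents add on the output conductor."""
    current_a = sum(string_currents_a)
    return current_a, current_a * voltage_v


# Hypothetical array: four strings, each about 9 A at 600 V, with Isc of 9.5 A.
print(string_fuse_rating(9.5))                       # 15
print(combined_output([9.0, 9.0, 9.0, 9.0], 600.0))  # (36.0, 21600.0)
```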

Solar combiner boxes also consolidate incoming power into one main feed that distributes to a solar inverter, added Patrick Kane, product manager at Eaton. This saves labor and material costs through wire reductions. “Solar combiner boxes are engineered to provide overcurrent and overvoltage protection to enhance inverter protection and reliability,” he said.

“If a project only has two or three strings, like a typical home, a solar combiner box isn’t required. Rather, you’ll attach the string directly to an inverter,” Sherwood said. “It is only for larger projects, anywhere from four to 4,000 strings that combiner boxes become necessary.” However, combiner boxes can have advantages in projects of all sizes. In residential applications, combiner boxes can bring a small number of strings to a central location for easy installation, disconnect and maintenance. In commercial applications, differently sized combiner boxes are often used to capture power from unorthodox layouts of varying building types. For utility-scale projects, combiner boxes allow site designers to maximize power and reduce material and labor costs by distributing the combined connections.

The combiner box should reside between the solar modules and inverter. When optimally positioned in the array, it can limit power loss. Position can also be important to price. “Location is highly important because a combiner in a non-optimal location may potentially increase DC BOS costs from losses in voltage and power,” Kane explained. “It only constitutes a few cents per watt, but it’s important to get right,” Sherwood agreed.
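Why location matters can be shown with Ohm's law: the combined DC output loses voltage (and therefore power) in proportion to the length of cable it travels through. The sketch below compares two hypothetical run lengths for the same combined output; the 0.5 ohm/km conductor resistance is an assumed round number, and real values come from conductor resistance tables for the chosen wire size and temperature.

```python
def dc_voltage_drop(current_a, one_way_length_m, ohms_per_km):
    """Voltage lost along a two-wire DC run (out and back), from V = I * R."""
    resistance_ohm = 2 * (one_way_length_m / 1000) * ohms_per_km
    return current_a * resistance_ohm


def dc_power_loss(current_a, one_way_length_m, ohms_per_km):
    """Power turned into heat in the same run, from P = I * V_drop = I^2 * R."""
    return current_a * dc_voltage_drop(current_a, one_way_length_m, ohms_per_km)


# Hypothetical combined output: 36 A at 600 V over cable assumed to be 0.5 ohm/km.
for length_m in (50, 150):
    drop = dc_voltage_drop(36, length_m, 0.5)
    loss = dc_power_loss(36, length_m, 0.5)
    print(f"{length_m} m: {drop:.1f} V drop ({drop / 600:.2%}), {loss:.0f} W lost")
```

Tripling the run length triples both the drop and the loss, which is the "few cents per watt" the quotes above refer to once it is multiplied across an entire array.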

Little maintenance is required for combiner boxes. “The environment and frequency of use should determine the levels of maintenance,” Kane explained. “It is a good idea to inspect them periodically for leaks or loose connections, but if a combiner box is installed properly it should continue to function for the lifetime of the solar project,” Sherwood added.

The quality of the combiner box is the most important consideration when selecting one, especially since it’s the first piece of equipment connected to the output of the solar modules. “Combiner boxes are not expensive compared to other equipment in a solar project, but a faulty AC combiner box can fail in a dramatic way, involving shooting flames and smoke,” Sherwood warned. “All should be third-party certified to conform to UL1741, the relevant standard for this type of equipment,” Sherwood said. Also be sure to pick a combiner box that meets the technical requirements for your project.

A new trend is the incorporation of a whip: a length of wire with a solar connector on the end. “Rather than a contractor drilling holes in the combiner box and installing fittings in the field, we install whips at the factory that allow the installer to simply connect the output conductors to the box using a mating solar connector,” Sherwood explained. “It’s as easy as plugging in a toaster.”

This year arc-fault protection and remote rapid shutdown devices are more popular than ever, due to recent changes in the National Electrical Code that require them in many solar applications. “New technologies and components are driven by the NEC changes, as well as the desire for enhanced energy efficiency and reduction of labor costs,” Kane said. Some of these new components include: higher voltage components, integral mounting hardware and custom grounding options.

A photovoltaic power plant is a power generation system that uses the sun's light energy and electronic components such as crystalline silicon panels and inverters to generate electricity, which it delivers to the power grid.

A PV power generation system can include solar arrays, battery packs, charge/discharge controllers, inverters, AC power distribution cabinets, solar tracking control systems, and other equipment. A typical grid-connected system consists of PV modules, inverters, PV distribution boxes, meters, and the grid connection. Although the distribution box and its surge protective device account for only a small share of total system cost, they play an important role in the PV power generation system.


Photovoltaic Applications

Solar energy is an inexhaustible source of renewable energy that is clean, safe, widely available, long-lived, low-maintenance, and potentially economical, and it plays an important role in long-term energy strategies. Photovoltaic power plants are currently among the most strongly encouraged green power projects, aiming to use limited resources to deliver the maximum amount of energy for human use.

Power Distribution Boxes in Photovoltaic Power Plants

Photovoltaic power generation is developing rapidly in China, and PV is now applied in many areas of life: household PV power stations, large ground-mounted PV power stations, PV buildings, PV street lights, PV traffic lights, PV caravans, PV electric vehicles, PV carports, and more. In all of these settings, the PV distribution box plays a vital role in the overall system. Its main functions are as follows:


1. Power isolation function

Switchboards require a physical isolation device that gives the circuit a clear break point, for the safety of personnel during service and maintenance. This device is called an isolation switch (disconnector). Opening it visibly separates the downstream equipment from the supply so that work can be done safely; interrupting a short-circuit current is the job of the circuit breaker described in the next section.

2. Short-circuit protection function

When a short circuit occurs, the current rises suddenly, which can burn out appliances and generate a lot of heat, creating a fire risk with serious consequences. A device is therefore needed to cut off the power when a short circuit occurs. That device is the circuit breaker (often called an "air switch"), which plays an important role in the PV box. When choosing a circuit breaker, use reliable products from reputable manufacturers to ensure safety.

3. Energy measurement

Generally, the PV energy meter is installed in the distribution box together with the surge protective device. In some places, the meter is installed separately from the distribution box; follow the requirements of the local power supply department.

4. Over-voltage protection

Most distributed PV generation is connected to the rural power grid. Rural grids are often unstable: outages are common and the voltage fluctuates widely. For this reason, an over/under-voltage protector is an indispensable device in the PV distribution box. When the voltage goes too high or too low, it acts as a circuit breaker to protect the other components. It is also one of the components in the box most prone to failure, so it may need maintenance or replacement.

5. Lightning protection

Lightning is a common natural phenomenon, especially in summer, when thunderstorms are frequent and cause many accidents, and lightning protectors are installed in many places. The distribution box also contains an important lightning protection device: the surge protector. A surge protector, also called a lightning protector, is an electronic device that provides safety protection for electronic equipment, instruments, and communication lines. When external interference causes a sudden surge of current or voltage in an electrical circuit or communication line, the surge protector conducts within a very short time and diverts the surge, reducing damage to the other equipment on the circuit. It is an indispensable component.

6. About the cabinet

Generally speaking, a photovoltaic power generation system is designed for a service life of 25 years, so the distribution box should also last 25 years, which requires it to be waterproof and dustproof. As standards have risen, distribution boxes made of cheap tinplate no longer meet the requirements. Power supply bureaus now generally require distribution boxes made of powder-coated galvanized steel, powder-coated iron plate, stainless steel, plastic steel, or similar materials. Otherwise, you will not be allowed to connect to the grid, so for your own safety, choosing the right box is very important.


Loupedeck Live is a compelling alternative to Elgato's Stream Deck

Life’s too short to drag a mouse more than three inches or remember elaborate keyboard combinations to get things done. This is 2021 and you can have a pretty, dedicated button for almost any task if you want. And if you partake in anything creative, or like to stream, there’s a very good chance that you do. Loupedeck makes control surfaces with many such buttons with a particular focus on creatives. Its latest model is the “Live” ($245) and it’s pitched almost squarely against Elgato’s popular Stream Deck ($150). Both have their own strengths, and I’ve been using them side by side for some time now. But which one have I been reaching for the most? And does the Loupedeck Live do enough to command almost a hundred more dollars?

First, we should go into what the Loupedeck Live actually is and why it might be useful. In short, it’s a PC or Mac control surface covered in configurable buttons and dials. The buttons have mini LCD displays on them so you can easily see what each does with either text, an icon or even a photo. Behind the scenes is a companion app, which is where you’ll customize what each button or dial does. Many popular applications are natively supported (Windows, MacOS, Photoshop, OBS and many more). But if the software you use supports keyboard shortcuts, you can control it with the Live.

So far, so Stream Deck? Well, kinda. The two are undeniably very similar, but there are some important differences. For one, the Stream Deck’s only input type is a button; Live has rotary dials too. This makes Loupedeck’s offering much more appealing for tasks like controlling volume, scrolling through a list or scrubbing a video and so on. But there are also some UI differences that give them both a very different workflow, too.

Hardware

James Trew / Engadget

Like Elgato, Loupedeck currently offers three different models. With the Stream Deck, the difference between versions is all about how many buttons there are (6, 15 and 32). The different Loupedecks are physically distinct and lend themselves to certain tasks. The Loupedeck CT, for example, has a girthy dial in the middle for those that work with video. The Loupedeck+ offers faders and transport controls and the Live is the smallest of the family with a focus on streaming and general creativity.

At a more superficial level, both the Stream Deck and the Live look pretty cool on your desk, which clearly is vitally important. Elgato decided to make its hardware with a fixed cable, whereas Loupedecks have a removable USB-C connection. I wouldn’t normally bother to mention this, but it’s worth noting as that means you can use your own (longer/shorter) lead to avoid cable spaghetti. You can also unplug it and use it to charge something else if needed. Minor, but helpful functionality if your workspace is littered with things that need topping off on the reg like mine is.

Clearly, one of the main advantages with the Live will be those rotary dials. If you work with audio or image editing at all, they are going to be much more useful than a plain ol’ button for many tasks. For example, I wanted to set up some controls for stereo panning in Ableton Live. On the Stream Deck I need to employ two buttons to get the setup I wanted: pan left one step / pan right one step and it takes a lot of presses to move from one extreme to the other. With the Live, I can simply assign it to one of the rotaries (clicking it will reset to center). From there, I can dial in the exact amount of panning I want in one deft movement.

That’s a very simple example, but if you imagine using the Live with something like Photoshop for adjusting Levels, you can see how having several rotaries might suddenly become incredibly useful.

Another practical difference between these two devices is the action on the buttons. On the Stream Deck, each one is like a clear Jolly Rancher with a bright display behind it. The buttons have a satisfying “click” to them and are easy to find without really looking. The Live, on the other hand, feels more like someone placed a divider over a touchscreen. That’s to say, the buttons don’t have any action/movement at all, instead delivering somewhat less satisfying vibrations to let you know you’ve pressed them.

Software


The real difference between these two, though, is the workflow. I had been using the Stream Deck for a couple of months before the Loupedeck Live. The Stream Deck is, at its core, a “launcher.” Assign a button to a task and it’ll do that task on demand. You can nest multiple tasks under folders to expand your options nearly endlessly, but the general interface remains fixed. So, if you wanted to control Ableton and Photoshop, for example, you might have a top-level button for each. That button would then link through to a subfolder of actions and/or more subfolders (one for editing, one for exporting actions and so on). These buttons remain fixed no matter what application you are using at a given moment.

With Loupedeck, it’s all about dynamic profiles. That’s to say, if I am working in Ableton, the Loupedeck will automatically switch to that profile and all the buttons and rotaries will change to whatever I have assigned them to for Ableton. If I then jump into Photoshop, all the controls will change to match that software, too. Or put another way, the Stream Deck is very “trigger” based (launch this, do this key command). The Loupedeck is more task-related, with pages, profiles and workspaces for whatever app is active. The net result is, once you have things customized to just how you want them, the Loupedeck Live is much more adaptive to your workflow as it “follows” you around and has more breadth of actions available at any one time. But at first, I was trying to make it simply launch things and found that harder than it was on a Stream Deck until I figured out how to work with it.
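The difference between the two workflows can be sketched as a lookup problem. A launcher-style layout ignores which app is in front, while an app-following controller picks its layout from the active app, falls back to a default, and can be overridden by a pinned workspace. This is a toy Python model with hypothetical names, not how Loupedeck's software is actually built.

```python
from dataclasses import dataclass
from typing import Dict, Optional

Layout = Dict[str, str]  # control name -> action, e.g. {"dial1": "pan"}


@dataclass
class Controller:
    """Toy model of app-following profiles (all names here are hypothetical)."""
    profiles: Dict[str, Layout]          # active app name -> layout for that app
    default: Layout                      # fallback when no profile matches
    dynamic: bool = True                 # False keeps one layout for every app
    workspace: Optional[Layout] = None   # a manually pinned layout wins over both

    def layout_for(self, active_app: str) -> Layout:
        if self.workspace is not None:
            return self.workspace
        if self.dynamic:
            return self.profiles.get(active_app, self.default)
        return self.default


deck = Controller(
    profiles={"Ableton Live": {"dial1": "pan"}, "Photoshop": {"dial1": "levels"}},
    default={"dial1": "system volume"},
)
for app in ["Ableton Live", "Photoshop", "Notepad"]:
    print(app, "->", deck.layout_for(app)["dial1"])
```

Setting `dynamic=False` turns the model into the fixed, launcher-style layout, which mirrors the option the review describes for turning dynamic mode off.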

This “dynamic” mode can also be turned off if you prefer to keep the same controls available at all times. Alternatively, you can assign custom “workspaces” to any of the seven circular buttons along the bottom, so if you want your Photoshop profile to open with the app but also keep some basic system/trigger controls available, they can be just one button push away.

This approach definitely makes the Loupedeck feel more tightly integrated with whatever you’re doing “right now” rather than a nifty launcher, but it also takes a while to get your head around how it wants to do things. At least in my experience. Whereas I got under the Stream Deck’s skin in a day, I am still reading up on what the Live can do after some weeks, and I need to keep reminding myself how to make certain changes. As a reverse example, launching an app is something the Stream Deck was born to do. With a Loupedeck, you have to create a custom action and then assign it to a profile you can access at any time (i.e. a custom workspace) or add that action to each of the profiles where you want it to be available.

Both do offer the option for macros/multi-actions and work in very similar ways in that regard. If, say, you want to create a shortcut to resize and then save an image, you can do so with either by creating a list of actions to be carried out in order. You can add a delay between each step and include text entry, keyboard shortcuts and running apps — all of which allows you to cook up some pretty clever “recipes.” Sometimes it takes a bit of trial and error to get things right, but once you do it can simplify otherwise fairly lengthy/mundane tasks.
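Under the hood, a macro like the one described is just an ordered list of steps, each an action followed by an optional pause. The sketch below shows that structure in Python; the stand-in actions only append to a list, whereas real ones would send keystrokes or launch apps, and none of the names come from either product's software.

```python
import time
from typing import Callable, List, Tuple

# One macro step: an action to run, then how long to wait (seconds) before the next.
Step = Tuple[Callable[[], None], float]


def run_macro(steps: List[Step]) -> None:
    """Run each action in order, pausing after it for its configured delay."""
    for action, delay_s in steps:
        action()
        if delay_s > 0:
            time.sleep(delay_s)


log = []
resize_then_save = [
    (lambda: log.append("resize image"), 0.05),  # hypothetical stand-in actions
    (lambda: log.append("save image"), 0.0),
]
run_macro(resize_then_save)
print(log)  # ['resize image', 'save image']
```

The per-step delay is what the trial and error is usually about: it gives the target app time to finish one step (say, opening a resize dialog) before the next keystroke arrives.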


Where the Stream Deck takes things a little further is with third-party plug-ins. These are usually more complex than tasks you create yourself (and require some programming to create). But thanks to Elgato’s active community, there are already quite a few on offer and the number is growing every day. Some of them are simple: I can have a dynamic weather widget displayed on one of the keys, others are more practical — I use one that switches my audio output between my headphones and my PC’s built-in speakers. Some of my colleagues speak highly of a Spotify controller and the Hue lights integration — both of which came from the Stream Deck community.

Loupedeck offers a way to export (and thus share) profiles, but as far as I can tell right now, there’s no way to do anything more complex than what you can do with custom controls — if that were to change in the future that could really enhance the functionality considerably.

Beyond the hardware controls and the user interface, it’s worth mentioning that both the Live and the Stream Deck have native support for specific apps. “Native” means that the companion software already has a list of drag-and-drop controls for select apps. Elgato’s controller, unsurprisingly, has a strong focus on things like OBS/Streamlabs, Twitch and, of course, the company’s own game capture software and lights, along with some social tools and audio/soundboard features (for intro music or effects).

The Loupedeck Live also offers native controls for OBS/Streamlabs (but not Twitch) but tends to skew toward apps like After Effects, Audition, Premiere Pro and so on. The list of natively supported apps is actually quite extensive, and many more (like DaVinci or iZotope RX) are available to download. If streaming is your main thing, Elgato’s solution is affordable and definitely more streamlined for that. The Loupedeck, however, is going to be more useful for a lot of other things: it’ll help with streaming, but also help you design the logo for your channel.

So which?

At this point, you can probably guess what the wrap-up is. Elgato’s Stream Deck offers less functionality overall but that can be greatly expanded as the number of plugins continues to grow. But likewise, it’ll always be somewhat limited by its singular input method (buttons). The Loupedeck Live is much more ambitious, but with that, trades off some of the simplicity. If you were looking for something that can take care of simple tasks and skews toward gaming or podcasting, save yourself the $100 and go with a Stream Deck, but if you want something that can pick up the slack for multiple desktop apps and tools, you probably want to pat your pockets a little more for the Loupedeck Live.

from Mike Granich https://www.engadget.com/loupedeck-live-versus-the-stream-deck-170020781.html?src=rss


Facility Management Technology: A Key Enabler for the Fourth Industrial Revolution

We are in the middle of the fourth industrial revolution which is changing the way we work and live. This potential productivity revolution brings up the convergence of the right tools and technology that empower transformative areas and comes hot on its heels with spawned automation, cyber-physical systems and big data.

The recent technological leaps and intrusion of smart technologies have aspired a growing age of millennials. With the evolving workforce, thriving in a ‘real-time, anytime and anywhere’ economy, business culture is transforming under their influence.

Growing digital maturity in existing business processes, the penetration of technology into traditional businesses and the increasing number of millennials in the workforce are together creating a competitive environment. They are also creating a need to build technology into business processes to enhance productivity and improve user experiences.

Keeping up with advances across the multiple sites in a portfolio requires additional tools, control and intelligence, as well as strategic use of the resources at hand.

Thus, to thrive in a fiercely competitive and growing global market, organisations will continue to outsource facility management services on a large scale. This will transform the way they use their resources and professionals, creating a win-win for employees and businesses alike. Both in-house and outsourced facility management are growing, with the total facilities management market forecast to grow from USD 1.24 trillion in 2019 to USD 1.62 trillion by 2027 (roughly 3.4% a year).

The facilities management industry has risen from an under-optimised resource to a fundamental support function that enriches people's interaction with the facility, delights stakeholders and intelligently empowers businesses.

What is digital transformation, and why is it necessary for facility management?

Digital Transformation is the application of digital capabilities to processes, products, and assets to improve efficiency, enhance customer value, manage risk, and uncover new monetization opportunities. — Bill Schmarzo, CTO of Dell EMC Services

In the business context, data-driven digital transformation refers to using real-time data collection, analysis and issue prediction to continually add value to a company's offering and stay competitive. This ultimately optimises performance within the organisation, makes the most of resources, increases flexibility and helps companies make timely, informed decisions.

With technological disruption in almost every business field, digital transformation in the FM industry has become equally essential for two reasons. First, it involves a conscious change in perspective that draws every stakeholder into the effort to add value and innovation to the ecosystem. Second, it lets FM handle the broader needs of the business.

Current legacy FM operating models face considerable budget constraints, which limit innovation and efficiency. However, by using business automation techniques, we can explore untapped data to obtain more predictive and contextual insights and make more of the assets already available. Technologies such as IoT, ML and AI make existing systems smarter rather than replacing them with a new generation of equipment, which ultimately contributes to effective cost-cutting.

“People actually remember your service a lot longer than the price.” Technology-driven efficiency results in holistic, strategic FM operations. It also improves asset performance, commercial gains, customer service and customer satisfaction.

Having looked at how the FM industry is undergoing paradigm shifts in its operations and value, let us consider the benefits that, together, are upgrading FM from a passive cost centre to a value-driven, essential investment.

Procure operational leads

Traditional reliance on hardware and manual work is now proving inflexible and rigid, because it is hard to optimise and difficult to manage. The idea is to set up smart workflows that track asset performance, predict and prevent irregularities, and proactively manage facilities.

With big data analytics, artificial intelligence and machine learning, a new layer of functionality is added to business operations, enabling predictive intervention and intelligent insights. These help organisations make informed purchase decisions and continuously evaluate their investments.

Continuous optimisation

FM operations can achieve solid productivity gains and speed up ongoing tasks by eliminating inefficient paperwork and manual work. Machine learning-driven performance analytics and custom KPIs enable data-led decision making, drive predictive models of operations and pinpoint asset anomalies. This enables a quicker, more responsive approach to problem-solving.

Data-driven decision making and predictive analysis

Being confronted with data and operating through technology is taking facility management into new waters. Actionable insights derived from IoT devices and other software, when combined and processed, can help optimise assets, workforce and sustainability. Time-series ML models can derive new streams of information about potential cost optimisation, inventory management and consumption patterns. Outcomes include lower overall operational costs and minimised asset downtime.

Stay on top of your portfolio

IoT-enabled smart buildings and offices, optimised workplaces and computer vision technologies keep operations coherent across distributed sites. They also make it easier to track assets, equipment, workflow systems and buildings, which was conventionally complicated and fault-prone. A centralised system that responds effectively to energy, security and operational data on a single platform requires a less dispersed workforce. Such a unified central source provides a bird's-eye view of the entire organisation and makes monitoring easy.

With proper exploratory analysis of asset and budget data, decisions to repair or upgrade equipment can be made quickly and in line with the budget and maintenance history. This helps monitor machinery uptime and downtime and extends the equipment's life cycle.

Blockchain Improvements

“The economy will undergo a radical shift as new, blockchain-based, sources of influence and control emerge. Blockchain has the potential to change the way facilities are managed, ranging from work order tracking to preventive maintenance to life cycle assessments”. ~ IFMA Facility Management Journal

Blockchain offers a streamlined way to store and access secure data. It functions as a distributed, permanent, digitally secured ledger shared among the parties, and it can reduce the complexity of contract management, work order processing and payment processing. All contractual steps are recorded in real time, and the ledger enables automation through self-executing workflows.
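To illustrate the "permanent, digitally secure ledger" idea, here is a minimal Python sketch of a hash-chained log of work orders. It is a toy under stated assumptions. There is no network, consensus protocol or smart-contract engine. It shows only how chaining SHA-256 hashes makes earlier entries tamper-evident. The `Ledger` class and the record fields (`work_order`, `status`) are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class Ledger:
    """Append-only log (hypothetical): each entry stores the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _hash(record: dict, prev_hash: str) -> str:
        # Canonical JSON so the same record always produces the same hash.
        payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._hash(record, prev_hash)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every hash; editing any earlier record breaks the chain.
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._hash(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


ledger = Ledger()
ledger.append({"work_order": "WO-1", "status": "opened"})
ledger.append({"work_order": "WO-1", "status": "closed"})
print(ledger.verify())  # True
ledger.entries[0]["record"]["status"] = "cancelled"  # tamper with history
print(ledger.verify())  # False
```

A production blockchain replicates this chain across many parties. That replication, not the hashing alone, is what keeps any single party from rewriting it.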

Co-exist with your problems and ensure predictive maintenance

Where everything is dynamic and real-time operations are now a necessity, how can the solutions employed be static?

Early fault detection is critical for a smooth customer experience and business functioning. Previously, faults were reported only after the harm was done, and engineers had to react on the spot, leading to haphazard and sometimes inconvenient situations. Now, building equipment increasingly comes with pre-installed sensors, and IoT-based services come to the rescue. The data collected can show whether a device is working as expected. When an anomaly appears, early fault detection or replacement reminders simplify the whole process.
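As a rough illustration of checking "whether the device is working as expected", the Python sketch below flags sensor readings that deviate strongly from the recent baseline, using a rolling z-score. The window size, the threshold and the sample chiller temperatures are hypothetical. Real predictive-maintenance systems typically use richer, model-based approaches.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the previous `window` readings."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip the check when the baseline is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical chiller temperatures (deg C) with one sudden spike.
temps = [7.1, 7.0, 7.2, 7.1, 7.0, 7.1, 7.2, 12.5, 7.1, 7.0]
print(detect_anomalies(temps))  # [7]
```

The spike itself enters the baseline window afterwards, which widens the tolerance for the next few readings. That is one simple trade-off a real system would tune.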

Because of the COVID-19 pandemic, upholding safety standards and employing mitigation techniques in the workplace have become essential. Organisations have used IoT and AI to adopt measures that minimise human contact and intervention. These include enforcing social distancing among employees, regulating planned access to different assets within a building, organising meetings with health standards in mind, tracking attendance with computer vision and generating digital passes automatically.

Also, thermal cameras for temperature screening, built-in room sensors to track human activity, face-mask detection and optimised parking spaces are widely used to enhance employee safety and experience in the office.

The fourth industrial revolution is advancing faster and is more revolutionary than any before it. The FM industry now has access to previously unimaginable amounts of data, uses technology in every chore and has adopted value-driven approaches. Together, these are increasing control over operational tasks, providing sustainable solutions and saving money.

The technologically disruptive paradigm of facility management has transformed the conventional use of professionals, assets and money. FM has now taken centre stage and produced unprecedented outcomes. It has always been the industry that identifies bottlenecks in business processes and creates solutions in response.

The emerging consensus on technology predicts bigger shifts. These include increased support for research and development, regulation of emerging technologies and devices, value-driven approaches to FM, and the accessibility of those approaches to businesses of all sizes.

The IoT- and AI-driven FM industry can’t blaze forward without a skilled workforce with a strong background in STEM areas like programming, data analysis, cybersecurity, AI and ML. Big changes are required to keep innovation alive, and like many other fields, the FM industry is calling for the adoption of new technologies and an IT-skilled workforce.

Integrated Facility Management is expected to increase its share of the total addressable FM market from 10.3% in 2018 to 13.9% by 2025, while the total market is forecast to grow from $819.53 billion to $945.11 billion during the same period at a compound annual growth rate (CAGR) of 2.1%. — Frost and Sullivan
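The growth rates quoted in this post can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The short Python check below uses the figures exactly as given in the post: the 2018 to 2025 Frost & Sullivan forecast, and the 2019 to 2027 total-market projection quoted earlier.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# Frost & Sullivan: $819.53B (2018) -> $945.11B (2025), 7 years.
print(f"{cagr(819.53, 945.11, 7):.1%}")  # 2.1%
# Total FM market: $1.24T (2019) -> $1.62T (2027), 8 years.
print(f"{cagr(1.24, 1.62, 8):.1%}")  # 3.4%
```

The first result reproduces the quoted 2.1%. The second shows the earlier projection implies about 3.4% a year. The two forecasts use different baselines and sources, so their absolute market sizes are not directly comparable.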

FM is the main driver of the commercial value of properties, providing faster responses, smarter solutions and better management strategies. Every stakeholder in the industry stands to benefit: customers will get amazing services and experiences, owners will achieve greater profits and, above all, facility managers are the key enablers.

For more information, check out www.evbex.com.


Understanding MIMO

I don't know about you, but sometimes technology makes me feel stupid. Usually you'll find me in a cheerful mood, happily trotting along somewhere in a grass field. But every once in a while, a heavy feeling takes hold and makes me sit and reflect on the choices that have led me to the present point in my life. Anyone who knows me can testify that I'm not one to waste a bright sunny day in useless contemplation. You can imagine, then, the force of this indescribable feeling. It made a free-spirited creature like myself, who would rather spend its time chasing the wind, chain itself down and think. Imagine that. Sit and think on a bright sunny day! But one must make the best of one's circumstances.

The other day I was reading about MIMO, just scrolling through a Wikipedia article. I can't say how I got onto it. I don't remember what prompted me to open the page. All I remember is that it was there. It was an interesting read. Multi this. Many that. Etc., etc.

I'm not all that into reading, as you have probably guessed. It does not fit my character, you see. I'm someone who prefers activity, owing to my naturally outgoing, adventurous nature. I've learnt through my own experience, and through the experience of my ancestors, that it's no use fighting your own nature. It is a battle that you can't win.

As you can understand, therefore, I rarely read, unless it is to pass time at work. Even then, I prefer to study the habits and inclinations of cats in various online encyclopedias. I especially like vivid portraits on Imgur and short documentaries on YouTube, which I find aid understanding far better than big walls of text.

But something drew me to that particular subject that day. I don't know what it was. I'm not a painter who can describe his feelings through his art. Nor am I a poet who can write a song about it. I'm unable to find the reason behind my decision to read about that particular subject of which I had no particular knowledge. Maybe it was fate. Maybe something else. All that matters now is that I read about MIMO. And today a similar feeling compels me to talk about it.

MheeeeeeMhawwwww

MIMO stands for Multiple Input, Multiple Output. MIMO makes a radio link more robust by increasing the number of transmitting and receiving antennas. The goal of MIMO is to increase network robustness and capacity. MIMO takes several forms.

In one kind of MIMO, multiple antennas transmit the same signal over different paths, and the receiver picks up all of those copies. This is the basis of beamforming. The copies won't automatically add up to the best signal at the receiver, so the transmitter must adjust what each antenna sends so that the copies combine well. This is done with the help of precoding. For the purpose of this discussion, we don't need to understand precoding.
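For readers who do want a peek at precoding, here is a toy Python model. It is not how any real radio implements it. Each of N antennas sends the same unit-amplitude signal, and each copy reaches the receiver with some phase shift. If the transmitter pre-rotates each antenna's signal to cancel its path's phase shift (a very simple form of precoding), the copies add up coherently and the received amplitude equals N. Without precoding, they partly cancel. The phase values are made up, and noise, path loss and channel estimation are all ignored.

```python
import cmath


def received_amplitude(path_phases, precode=True):
    """Sum unit-amplitude copies arriving with the given phase shifts (radians).
    With precoding, each antenna pre-rotates its signal by -phase so all copies align."""
    total = 0j
    for phase in path_phases:
        weight = cmath.exp(-1j * phase) if precode else 1
        total += weight * cmath.exp(1j * phase)
    return abs(total)


phases = [0.0, 2.1, 4.0, 5.5]  # hypothetical per-path phase shifts
print(round(received_amplitude(phases, precode=True), 3))  # 4.0
print(round(received_amplitude(phases, precode=False), 3))
```

The second call shows the same four copies adding up to much less than 4 when nobody compensates for the path differences. Beamforming exists to avoid that outcome.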

In another type of MIMO, instead of sending multiple copies of a signal, you send one signal but split it into multiple streams. Each of those streams is transmitted over a different antenna. To the receiver, it looks as if each stream arrived on a different channel, and thus network capacity is increased... at least in theory.
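The split-into-streams idea can be sketched at the byte level. This minimal Python model is a deliberate simplification. It shows only the bookkeeping: bytes are dealt round-robin across antennas and then interleaved back. It ignores the radio channel entirely, whereas a real receiver must also separate signals that overlap in the air, which this sketch does not attempt.

```python
def demultiplex(data: bytes, n_antennas: int) -> list[bytes]:
    """Deal bytes round-robin: antenna k gets bytes k, k+n, k+2n, ..."""
    return [data[k::n_antennas] for k in range(n_antennas)]


def remultiplex(streams: list[bytes]) -> bytes:
    """Interleave the per-antenna streams back into the original order."""
    out = bytearray()
    longest = max(len(s) for s in streams)
    for i in range(longest):
        for s in streams:
            if i < len(s):  # later streams may be one byte shorter
                out.append(s[i])
    return bytes(out)


streams = demultiplex(b"HELLO MIMO", 3)
print(streams)               # [b'HLMO', b'EOI', b'L M']
print(remultiplex(streams))  # b'HELLO MIMO'
```

Every stream must arrive for `remultiplex` to rebuild the original order. If one is missing, the interleaving comes out scrambled.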

Finally, there is diversity coding, which is best understood as a spray-and-pray technique. In this type of MIMO, a single stream is transmitted many times by multiple antennas, in the hope that at least one copy arrives at its destination unharmed. Desperation is palpable in this one.
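The spray-and-pray intuition can be quantified under one simplifying assumption: each copy is lost or corrupted independently, with probability p. The chance that at least one of N copies arrives intact is then 1 − p^N. Real radio paths are often correlated, so treat this as a best case rather than a prediction. The failure probability of 0.3 below is made up.

```python
def p_at_least_one_intact(p_fail: float, copies: int) -> float:
    """Probability that at least one of `copies` independent transmissions
    survives, when each one fails with probability `p_fail`."""
    return 1 - p_fail ** copies


for n in (1, 2, 4):
    print(n, round(p_at_least_one_intact(0.3, n), 4))
# 1 0.7
# 2 0.91
# 4 0.9919
```

The returns diminish quickly: going from one copy to two gains 21 percentage points, while going from two to four gains only about 8.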

MU-MIMO is MIMO with multi-user capabilities. In other words, you can use MIMO with several users at once.

Reading this article left me with several questions. After collecting my thoughts, I shared them with one of my smarter friends to hear what he had to say about the subject. My mom used to tell me that it is always good to take advice from someone who is brainier and more knowledgeable than you are. Although you must trust in your own ability, it's never a bad idea to seek some guidance. Those who know me can tell you that I have always been an obedient child. It should come as no surprise, then, that I'd listen to her advice.

I took my queries to my friend and this is what he had to say. I paraphrase because I forgot to carry my notepad with me to jot down his wise words.

THUS HE BEGAN

Before we can understand MIMO we must understand wireless networks. A wireless network is a physical network with no wires. When a wireless communication channel is established between two devices it is equivalent to connecting the two devices with a physical wire.

Of course the appeal of wireless networks is that you don't have to invest in huge infrastructural projects to increase the connectivity in a region.

The downside is that, since wireless signals can't be directed as well as wired signals, there is a lot of signal loss. That leads to degraded quality of service, which leads to unsatisfied customers.

Therefore, effort has to be spent to bring wireless signal quality as close to wired quality as possible. If one has the inclination to study, it can take an entire lifetime to understand the clever techniques invented to make this possible. Life is short. What we need to know is the gist of the matter. Here it is:

We want to efficiently utilize the communication channel. We do this by multiplexing.

We want to make the networks more robust. We do this by adding redundant network stations.

MIMO is one such technique. However, MIMO has several shortcomings.

It requires dedicated hardware in both the base station and the client machine. Existing machines will not work.

It requires complex receiver hardware to assemble the signals.

It does not guarantee better QoS or a better signal-to-noise ratio. MIMO is, in essence, simply a way of increasing the chance of getting a good-quality signal by adopting a brute-force approach.

The goal of MIMO is to increase total network throughput. (Individual speeds will not increase; however, more users will have access to a uniform speed.) But then why do we need MIMO at all? There are alternatives that achieve these goals cheaply.

Most of the MIMO technology can be easily replicated using cheap inter-operable components available in the market right now. Especially in the home networking context.

In fact, the principle behind MIMO is best realized when you set up multiple cheap access points rather than highly optimized individual devices. Quantity beats quality in network coverage. All the time.

Consider beamforming. By definition, beamforming is the technique of emitting the same signal from different antennas in such a way that the copies all add up to the best possible signal at the receiver.

But this can be easily replicated by having multiple base stations, each of them emitting a signal. All the client device has to do is choose the best one.
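The friend's alternative, where the client simply picks the strongest of several single-antenna base stations, amounts to selecting by signal quality. Here is a minimal Python sketch. It assumes the client can measure a signal-to-noise ratio in dB for each station in range. The station names and values are hypothetical.

```python
def pick_best_station(snr_db: dict[str, float]) -> str:
    """Return the station with the highest measured SNR (dB)."""
    if not snr_db:
        raise ValueError("no stations in range")
    return max(snr_db, key=snr_db.get)


measurements = {"ap-kitchen": 18.5, "ap-hall": 24.0, "ap-garage": 9.2}
print(pick_best_station(measurements))  # ap-hall
```

Redundancy falls out naturally. If `ap-hall` goes down, dropping it from the dictionary and calling the function again selects `ap-kitchen`.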

"But it's costly to set up multiple base stations, you say?"

I ask different questions.

What is the cost of having multiple antennas on a single station and on the receiver? Exactly how is a single station with multiple antennas cheaper than multiple stations with a single antenna each? What happens when the receiver is in motion?

In the last case, we discover that beamforming actually reduces to the same old radio signals that we're used to. And we have spent all this money on equipment that only works when the positions of the client and the base station are well defined? Static, in other words. Really? Why not go with a point-to-point connection, then? Nothing can beat that in terms of quality.

There is one very big advantage of multiple stations. It is redundancy. No single point of failure. That is something that is well worth the investment.

Next, consider spatial multiplexing. Here you divide a single data channel into multiple streams to trick the receiver into believing that it's getting data from multiple channels at a higher speed. It does increase network capacity. But how many client devices can actually use this technology?

Let us assume that we actually get client devices to work with this technology. What then? How should the application layer adapt to this change? How should we design our YouTube app, for example, when the last 30 minutes of a 90-minute movie have arrived successfully but the stream carrying the first 60 minutes is lost?

Whatever you gain in base station efficiency, you lose at client reception. As a user, I feel no better, or even worse, compared to the old network.

Data integrity is just as valuable as data speed. I can understand a congested network giving me slower speeds. But I can't tolerate a fast network giving me data out of place. It may work slowly but it should always be right.

A well designed redundant network will always outperform an "intelligent" auto adjusting technology. Interference exists and we have means to work around it.

As with any breakthrough in communication technology, to gain the full advantages of MIMO we need compatible client and base station hardware. This means that we need to invest in new infrastructure. Do the gains provided by MIMO justify the investment? I'll leave that to you to decide.

With these parting notes, my friend signed off. Having nothing further to add to the discussion, I must take my leave as well. I hope that my ramblings have been of use to a few people who, like me, often find themselves at a loss in the ever-changing, ever-expanding, progressive world of communication technology. I'm lucky to have a friend who has a certain interest in these things. Though I can't for the life of me understand how anyone can be bothered to read about electronics and radios when you can study cats instead. But no one can fight their nature, I suppose.

If you'd like answers to your Wi-Fi problems, don't hesitate to get in touch:

Write to us on our Tumblr page: https://workrockin.tumblr.com/ask

Tweet us: https://twitter.com/workrockin

Connect with us on LinkedIn: https://www.linkedin.com/in/workrock-careers-21b3a2186/


If you’re running a call center, you know how crucial call center technology is to improving customer experience. Without the right tools and technology foundation to support the call center infrastructure, your call center cannot operate effectively. Let’s discuss call center technology in contemporary call centers in a little more detail.

What Is Call Center Technology?

Every call center requires the right technology to power its principal operations, boost customer experience and cut expenses. That is what call center technology encompasses: everything that makes up and complements the underlying infrastructure.

Difference Between Call Center Technology and Call Center Infrastructure

Most people can’t tell call center technology and call center infrastructure apart: the two are very similar in nature and are often used interchangeably. In this article, we define both terms and point out how they differ. Call center infrastructure refers to the set of software, hardware and network components that enable a call center to operate effectively, such as the LAN and VoIP telephony. Call center technology, in contrast, typically refers to the many technologies employed to enhance customer experience and operations in a call center, such as automatic call distributors, intelligent contact center routing and so on.

Technology Essentials in a Call Center

When it comes to call center technologies, the following are some important ones you should have in your call center.

Customer Relationship Management (CRM)

In a call center, your staff will come into contact with a wide variety of callers. Managing them effectively with a CRM tool is one of the best ways to engage with customers on a regular basis. Why? Because your marketing and operations teams need access to contact data every day.
If you’re searching for the most effective CRM to manage your customer experience, choose one with a 360-degree customer view that gives you a complete picture of all customer engagements.

Automatic Call Distributor (ACD)

An automatic call distributor, or ACD, handles all the incoming calls a call center receives and applies pre-set rules to forward each one to the most suitable agent. The most advanced form of modern contact center routing is intelligent skills-based routing. Call center routing can significantly improve first call resolution, the rate at which questions are resolved on the first call. According to SQM Group, a 1% improvement in first call resolution translates to $276,000 in annual operational savings for the typical call center.

Predictive Dialer

A predictive dialer automates outbound calls. The system automatically dials a set of numbers while calculating which agent will be available to take each call when it connects. This is one aspect of call center dialer technology.

Computer Telephony Integration (CTI)

Computer telephony integration, or CTI, enables call center staff to manage their call dialing without having to handle physical telephones. There are many benefits to using CTI in a call center.

Self Service

Nowadays, many customers would rather find solutions to their problems themselves than wait for assistance. With intelligent self-service options such as AI-powered IVR or chatbots, customers can solve their problems easily and with little effort. According to The Harris Poll, almost 50% of customers with texting capabilities would prefer to press a button and start a text conversation instantly rather than wait on hold to talk with an agent.
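To make the idea of "pre-set rules to forward each call to the most suitable agent" concrete, here is a minimal Python sketch of skills-based routing. Among the available agents who have the skill a call needs, it picks the one who has been idle the longest. The `Agent` fields, the `route_call` helper and the longest-idle tie-break are hypothetical choices for illustration, not how any particular ACD product works.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    name: str
    skills: set = field(default_factory=set)
    available: bool = True
    idle_seconds: int = 0


def route_call(required_skill: str, agents: list) -> Optional[Agent]:
    """Pick the longest-idle available agent with the required skill.
    Returns None when nobody qualifies (the call would wait in a queue)."""
    candidates = [a for a in agents if a.available and required_skill in a.skills]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda a: a.idle_seconds)
    chosen.available = False  # the agent is now busy with this call
    return chosen


agents = [
    Agent("Ana", {"billing", "spanish"}, idle_seconds=40),
    Agent("Ben", {"billing"}, idle_seconds=95),
    Agent("Cy", {"tech"}, idle_seconds=300),
]
print(route_call("billing", agents).name)  # Ben
print(route_call("billing", agents).name)  # Ana
print(route_call("spanish", agents))       # None
```

The third call returns None because Ana, the only Spanish speaker, is already busy. Real ACDs add queues, priorities and overflow rules to handle that case.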
Case Management

A case management system handles customer inquiries professionally through a ticketing system that logs the problem at each stage of its progress in real time. A customer experience platform with an integrated case management system can greatly enhance customer satisfaction in a call center.

Artificial Intelligence

Artificial intelligence, machine learning and big data are all hot topics in the call center industry, and AI can also be used to enhance customer experience. The ability to extract useful business intelligence from large data sets instantly can offer crucial insights for perfecting customer experience. Social media sentiment analysis can be integrated to gauge a visitor’s emotions at each stage of the customer journey. According to Gartner, in less than a year consumers will manage 85% of their relationship with a business without engaging with a live agent.

Social Media

Instead of calling a representative with a question, consumers would rather go to social media channels such as Facebook or Twitter to get real-time information about their issues. It is much simpler to confirm whether a website is down by checking social media than by calling a representative. In tougher customer service situations, customers can use social media to notify the company about their query by leaving their order ID. There is no doubt that social media plays a growing role in business. If you want ideal customer-agent engagement in your call center, an effective customer relationship management (CRM) system can go a long way toward making this a reality.

Real-Time Analytics & Reporting

Without real-time input from a reliable reporting tool, you’re left simply hoping your hard work is paying off.
With data coming in from every touch point, agent and team, your staff is in a much better position to serve consumers effectively by examining and interpreting incoming data. Most customer experience software includes real-time analytics for understanding and improving customer experience.

Call Center Technology: Where Does Your Business Stand?

Because call center technology is still evolving and improving every day, it is difficult to pinpoint exactly where a business stands. Do you need more advanced call center technology to take your business to greater heights, or just a minor system upgrade to address a departmental problem? In either case, start by performing a customer experience assessment. This ensures that the call center software you choose is effective and falls within your budget.


SimpliSafe Home Security System Review: Beautiful -- and Brains, Too!

New Post has been published on https://bestedevices.com/simplisafe-home-security-system-review-beautiful-and-brains-too.html


"Stylish hardware, a range of sensors and home security monitoring make SimpliSafe a top choice."

Beautifully designed base station fits seamlessly into your home

Set-and-forget installation means that you'll only be interrupted if there's a problem

Affordable professional monitoring service offers comprehensive coverage

No contracts

Limited smartphone integration out of the box

Home Monitoring Service subscription required

If you're concerned about home security but don't have the time (or inclination) to explore the myriad of products available, a one-box solution like SimpliSafe Protect can calm your fears. This starter kit is part of a wider range of smart home security products that includes alarms, sensors, cameras and more, all backed by 24/7 professional monitoring.

Smart home security is booming, even though only 20 percent of Americans are currently protected. Amazon is pushing for 360-degree smart home security following its recent acquisition of smart doorbell maker Ring, and it competes with Google's Nest Secure and newcomers such as Abode. SimpliSafe is not (yet) a household name, but the company has been active in home security for more than ten years. It is valued at over $1 billion after a recent capital injection.

With the company's third-generation system on the shelves of your local big-box store, there's no better time to try SimpliSafe.

Home security reinvented with beautiful hardware

In 2018, "secure," "smart" and "simple" are no longer the differentiators for smart home devices that they used to be. Consumers now also demand style. Inexpensive Kickstarter kits may have been good enough in 2014, but polished systems like Nest Secure have raised the game with beautifully designed sensors and curved keypads.

Terry Walsh / Digital Trends

In response, SimpliSafe worked with design gurus IDEO to reinvent smart home security hardware, with elegant results. The SimpliSafe base station is the star of the show and resembles the fruit of a one-night stand between an Amazon Echo and a Google Home. Equipped with a high-quality speaker, a 95 dB siren and built-in cellular and Wi-Fi connectivity, the powerful hub blends wonderfully into the background. When called into action, however, both you and any intruders will know it's there. An integrated backup power supply provides continuous protection in the event of a power failure.

While the other components lack the same visual impact, unpacking the beautifully presented starter kit reveals a generous selection of smart home sensors and controls. You can customize the kit contents when ordering your system, so prices vary depending on your requirements. Otherwise, choose a preconfigured bento box.


For comparison, a custom SimpliSafe kit that exactly matches the $199 Ring Protect system (base station, keypad, one entry sensor and one motion detector) costs $229. That is a little more expensive, but as we'll discuss in a moment, SimpliSafe's hardware is more advanced.

The base station is accompanied by a large, wall-mounted keypad that can be used to arm and disarm the system. In contrast to Nest and Ring, the SimpliSafe keypad has a monochrome display and is used both for system configuration and for arming. This new device is more compact than the previous generation and has a larger, brighter screen, illuminated buttons and a longer signal range. Soft-touch plastics and sleek lines make it a good match for the base station. However, the clicky controls (you press the sides of the screen to navigate) and the annoying beeps that accompany each command make the experience feel cheaper. An oversized key fob can also be included for arming and disarming.

Terry Walsh / Digital Trends

Four entry sensors for windows and doors are neat enough, but they lack the finesse and first-class finish of Nest Secure. A motion sensor fits neatly into a corner between wall and ceiling (or can sit on a desktop). A standalone panic button, a water leak sensor and a freeze sensor are also included in the box. For simplicity, most sensors have a self-adhesive back, but screws are also included for permanent installation.

To ward off potential intruders, a large yard sign and window stickers advertising SimpliSafe's 24-hour monitoring are also included. Add an expanded range that includes smoke and CO alarms, security cameras and an upcoming smart doorbell and lock, and SimpliSafe becomes one of the most comprehensive security systems on the market today.

Voice-guided setup is easy once you get used to a physical keypad

Given the number of components included, expect installation to take some time, although the starter kit arrives partially preconfigured. Of course, you need to invest some time in figuring out the best placement for the various sensors and other triggers in your kit. After the physical installation, simply press a button on each component to register it. You will then be asked to enter a personalized name for that component on the keypad. An included test mode lets you walk through the house again, pressing each button to check that all devices are working.


SimpliSafe appears to connect to the cellular network automatically, but in the past, weak signal strength has left some users in the dark. This latest generation adds Wi-Fi support, and if you remember to set it up, connecting the system to your home network is easy enough.

The process is easy once you get used to using a physical keypad instead of a smartphone app. Voice prompts from the base station's high-quality speaker ensure that you never get lost.

It blends beautifully into the background, but you have to subscribe to unlock every feature

Once you are up and running, you will find that SimpliSafe stays largely invisible unless there is a problem. This is a big plus for us. Large "Home" and "Away" buttons on the keypad and key fob make it easy to arm and disarm the system. We found the sensors to be robust and very responsive. Triggering the base station alarm and keypad notifications took less than a second.

With a range of 1,000 feet, SimpliSafe's sensors outreach those of Nest and Ring, and it also beats the competition on battery life at five to seven years. However, the lack of ready-to-use smartphone integration is a big disappointment. SimpliSafe offers both a web app and a mobile app, but without the company's $15-a-month subscription, you get only basic features such as activating home monitoring, camera monitoring, and account management.

Once the subscription is active, it unlocks remote notifications and app control as well as round-the-clock professional monitoring. When your alarm goes off, the base station notifies the monitoring center, which will contact you (and any other designated family members or friends). False alarms can easily be canceled with a safe word; otherwise, the police or fire department will be dispatched to your home.

As another sweetener, subscribing enables a growing number of third-party device integrations, including August Smart Locks, Amazon Alexa, Google Assistant, the Nest Learning Thermostat, and more.

We love SimpliSafe's low price and comprehensive home security, but we would rather have remote notifications and system access available without having to pay for full professional monitoring.

Warranty information

SimpliSafe is covered by a three-year limited warranty, well ahead of Nest Secure (two years) and Ring Alarm (one year).

Our opinion

If you want to protect your home with a remote monitoring service, choosing SimpliSafe makes a lot of sense. An affordable service subscription with 24-hour monitoring, remote access, powerful sensors and elegant security hardware is a convincing combination.

SimpliSafe certainly offers real value and performance even without a monitoring subscription, but the lack of remote app access and notifications weakens the offering. Still, such a comprehensive selection of sensors and supporting hardware makes SimpliSafe a fantastic choice for 24-hour house protection.

Is there a better alternative?

At $499, Nest Secure offers great hardware, but it can't compete on price and doesn't have the range of security sensors offered by SimpliSafe. Ring Alarm is a more compelling competitor at $199. It, too, lacks SimpliSafe's breadth, but Ring is rapidly expanding its security hardware ecosystem, making it one to watch. Overall, a comprehensive selection of sensors, supporting hardware, and monitoring services makes SimpliSafe a fantastic choice for 24-hour house protection.

How long will it last?

SimpliSafe is one of the original pioneers of smart home security, and a substantial capital injection means it should be around for some time. Buy with confidence.

Should you buy it?

Good-looking hardware, a comprehensive selection of sensors and supportive monitoring services make SimpliSafe a fantastic choice for 24-hour house protection. Put simply, SimpliSafe is one of the best home security systems on the market.

Updated September 19, 2018, to note that SimpliSafe now works with Google Assistant.


Original Post from Rapid7

Author: Aaron Sawitsky

In a recent webcast, our panel of cybersecurity experts discussed all things cloud security, including cloud security best practices, how to avoid common security pitfalls in cloud environments, and how to work with DevOps to get the most out of your organization’s cloud investment.

In this blog post, we’ll share some of our experts’ insights into protecting your cloud environment:

Cloud security requires a new mindset

Our security panelists—Rapid7’s Aaron Sawitsky, Bulut Ersavas, Josh Frantz, and Tyler Schmidtke and Scott Ward of AWS—said that moving to the cloud requires security teams to develop some new ways of thinking. For security professionals accustomed to seeing and touching physical hardware in a data center, working with cloud environments can be a big adjustment. In order to take full advantage of the benefits of cloud, you’ll have to adapt your organization and your team’s skill sets to fit into your new reality.

There are some special considerations when it comes to the cloud. One difference is that in a cloud environment, responsibility for security is shared between the cloud customer and the cloud provider. Although the details vary by provider, the provider is generally responsible for securing the underlying cloud infrastructure, while the customer is responsible for securing anything they put in that cloud environment.

This arrangement can be highly beneficial, as it gives your organization the opportunity to let security team members who would normally be tasked with infrastructure security focus on new projects. However, it’s also important that everyone at your organization is familiar with exactly what the cloud provider is responsible for keeping secure and what responsibilities still rest on your shoulders. More than a few incidents have occurred because someone incorrectly assumed that the cloud provider was taking care of all security considerations.

Another unique aspect of the cloud is the ease with which new assets can be deployed. In a cloud environment, a developer can deploy new infrastructure with the click of a mouse. As a result, the security team has far less oversight of cloud assets and less input into how they are configured. This can lead to misconfigurations, which are a leading cause of security incidents in cloud environments. At the same time, ease of deployment is a key benefit of the cloud, so security teams need to find a way to minimize the risk of misconfigurations, while still supporting easy deployments.


When moving to the cloud, you also have to think about the lifespan of assets. The cloud lets you spin up short-lived virtual instances, which can present challenges if your security team isn’t used to monitoring those assets in real-time. Keep in mind that if you only scan for vulnerabilities every week or every month, you might completely miss an instance that your DevOps team spins up for just a few days. Therefore, if you want to maintain an up-to-date picture of your cloud environment, you will need to use new tools and techniques.
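To make the scanning gap concrete, here is a minimal Python sketch. It assumes you know each instance's launch and termination times (the instance IDs and dates below are made up) and treats each scan as an instantaneous snapshot, so an instance is only seen if a scan falls inside its lifetime. With weekly scans, a three-day instance can slip through entirely, while daily scans catch it.

```python
from datetime import datetime, timedelta

def instances_missed_by_scans(instances, first_scan, interval, horizon):
    """Return the IDs of instances that no scheduled scan ever sees.

    instances: list of (instance_id, launched_at, terminated_at) tuples.
    Scans run at first_scan, first_scan + interval, ... up to horizon.
    """
    scan_times = []
    t = first_scan
    while t <= horizon:
        scan_times.append(t)
        t += interval

    missed = []
    for instance_id, launched, terminated in instances:
        # An instance is visible only if some scan falls inside [launched, terminated).
        if not any(launched <= s < terminated for s in scan_times):
            missed.append(instance_id)
    return missed

# Hypothetical instances: one long-lived, one spun up for just three days.
instances = [
    ("i-long", datetime(2023, 12, 20), datetime(2024, 2, 1)),
    ("i-short", datetime(2024, 1, 3), datetime(2024, 1, 6)),
]
start, end = datetime(2024, 1, 1), datetime(2024, 1, 29)
print(instances_missed_by_scans(instances, start, timedelta(weeks=1), end))  # → ['i-short']
print(instances_missed_by_scans(instances, start, timedelta(days=1), end))   # → []
```

Real environments would feed this from provider event logs or an asset inventory rather than a hand-written list, but the arithmetic is the same: the scan interval has to be shorter than the lifetime of the shortest-lived assets you care about.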

Cloud security strategies and pitfalls

So, how do security teams evolve to better rise to cloud challenges? First, our experts discussed threats to cloud environments and the areas where security teams often go wrong. One of the largest factors in many data breaches is configuration vulnerabilities. Your cloud provider probably offers a variety of controls for your environment. Make sure you take the time to assess these controls and identify the ones that will provide the biggest security benefits. Guidelines such as the CIS Benchmarks for AWS, Azure, and GCP can be a great help when it comes to learning about best practices for configuring the controls in your platform(s).

All the experts on our panel agreed that defining baselines is crucial. Identify what measures should always be in place to effectively minimize risk. Once you’ve defined a baseline, our experts recommended implementing guardrails that ensure all new cloud assets conform to your baseline. This can be done using a tool from your cloud provider, such as AWS Config. You can also give developers templates for properly configured infrastructure using tools like Terraform or AWS CloudFormation. You can even go one step further and automate deployment of new cloud assets with all appropriate configurations applied using tools like Chef or Puppet. This will allow you to easily scale your cloud environment in a secure manner. Another benefit of automating the process is that you minimize the chance of human error.
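As an illustration of a baseline plus a guardrail, the sketch below checks proposed resource settings against a baseline before deployment and reports every deviation. The setting names and `BASELINE` values are hypothetical placeholders, not actual CIS Benchmark controls or the AWS Config API; in practice, the rules would live in your provider's tooling or in your infrastructure templates.

```python
# Hypothetical baseline: setting name -> value every resource must have.
# Real baselines would come from your own standards (e.g. informed by CIS Benchmarks).
BASELINE = {
    "encryption_at_rest": True,
    "public_access": False,
    "logging_enabled": True,
}

def baseline_violations(settings, baseline=BASELINE):
    """Return the sorted setting names where `settings` deviates from the baseline.
    A setting that is missing entirely also counts as a violation."""
    return sorted(
        name for name, required in baseline.items()
        if settings.get(name) != required
    )

def check_deployment(resources, baseline=BASELINE):
    """Guardrail: map each non-conforming resource to its violations.
    An empty result means the deployment may proceed."""
    problems = {}
    for resource_name, settings in resources.items():
        violations = baseline_violations(settings, baseline)
        if violations:
            problems[resource_name] = violations
    return problems

proposed = {
    "app-bucket": {"encryption_at_rest": True, "public_access": False, "logging_enabled": True},
    "tmp-bucket": {"encryption_at_rest": False, "public_access": True},
}
print(check_deployment(proposed))
# → {'tmp-bucket': ['encryption_at_rest', 'logging_enabled', 'public_access']}
```

Treating a missing setting as a violation is a deliberate choice here: a template that simply forgets to enable logging fails the check instead of silently inheriting a default.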

Visibility is essential to protecting your cloud environment. People in your organization may spin up new instances in different regions, create new networks, launch new services, or even create brand-new AWS accounts. Whatever tools you’re using for visibility and vulnerability assessment need to have a broad-enough scope to take in this entire landscape. They should also have the flexibility to assess asset types beyond traditional VMs. Perhaps most importantly, the tools you’re using for visibility must also have the ability to detect assets that are misconfigured. Even if you define and enforce baseline configurations, misconfigurations can be introduced after deployment. Your security team needs the ability to know when this happens so that they can fix the issue and educate the appropriate employees on what risks they unintentionally introduced with their configuration settings.
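The post-deployment side can be sketched in a similar way: take an inventory of everything discovered across accounts, regions, and asset types, then compare it with the configurations approved at deployment time. This minimal Python sketch assumes a hypothetical inventory feed (the `Asset` records) and a record of approved settings. It flags assets that nobody approved and assets whose settings drifted after deployment.

```python
from dataclasses import dataclass, field

@dataclass
class Asset:
    asset_id: str
    account: str
    region: str
    asset_type: str  # not just VMs: e.g. "vm", "bucket", "function"
    settings: dict = field(default_factory=dict)

def audit(discovered, approved):
    """Compare discovered assets with the settings approved at deployment.

    discovered: Asset records from a (hypothetical) inventory of every account/region.
    approved: dict mapping asset_id -> settings approved at deployment time.
    Returns (unknown_ids, drift), where drift maps asset_id -> changed setting names.
    """
    unknown, drift = [], {}
    for asset in discovered:
        expected = approved.get(asset.asset_id)
        if expected is None:
            # Never went through the approved deployment path.
            unknown.append(asset.asset_id)
            continue
        changed = sorted(
            key for key in expected.keys() | asset.settings.keys()
            if expected.get(key) != asset.settings.get(key)
        )
        if changed:
            drift[asset.asset_id] = changed
    return unknown, drift

approved = {"vm-1": {"public_ip": False}, "bucket-1": {"public_access": False}}
discovered = [
    Asset("vm-1", "prod", "us-east-1", "vm", {"public_ip": False}),
    Asset("bucket-1", "prod", "eu-west-1", "bucket", {"public_access": True}),
    Asset("fn-9", "sandbox", "ap-south-1", "function"),
]
print(audit(discovered, approved))  # → (['fn-9'], {'bucket-1': ['public_access']})
```

Each flagged item is a starting point for the follow-up the panel describes: fix the setting, then talk with whoever changed it about the risk it introduced.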

DevOps and security culture

In cloud environments, security teams run the risk of stifling innovation if they try to replicate the processes used for on-premises networks and directly control the deployment of new infrastructure or software. By delaying deployments to conduct manual security assessments, your security team can defeat some of the core purposes of using cloud resources: speed, efficiency, and agility. The panelists suggested that moving to a cloud environment provides a great opportunity for security professionals to instead integrate themselves into the DevOps process, transforming it into DevSecOps. This means that security becomes a part of the testing process that occurs before any deployment. Rather than security being a standalone assessment that occurs outside the regular workflow that developers use, security issues are caught during pre-deployment testing and addressed like any other bug.

As our experts pointed out, everyone in the organization wants to do what’s best for the business. It’s important for each team to empathize with each other’s viewpoint and learn together. Security shouldn’t be trying to punish development for unsafe practices. Instead, try sitting down with developers to go through an audit log together. Paint them a picture of what could happen to the entire enterprise if best practices aren’t followed.

Cloud migration and hybrid environments

Most organizations don’t move all of their assets from on-premises to the cloud at once, and in fact, our experts recommended a crawl, walk, run approach when it comes to cloud migrations. That means you’ll end up running both types of environments simultaneously (maybe temporarily or maybe permanently).

Some businesses have completely separate security teams for on-premises and cloud—a solution that our experts don’t recommend. There are many best practices that are similar for both environments, and the teams will need to communicate often regarding emerging threats that need to be addressed across both environments.

When migrating, it’s important to make sure you have a holistic view and don’t lose sight of securing legacy systems as you move to new platforms. And for monitoring and threat assessment, consider solutions that are capable of bridging the divide. Learn more about how Rapid7 InsightVM and InsightIDR allow you to manage risk for both on-premises and cloud environments, all in one place.


Original title: Cloud Security Fundamentals: Strategies to Secure Cloud Environments


Tute 8

PERSISTENT DATA

The opposite of dynamic data: it doesn't change and is not accessed very frequently.

Core information, also known as dimensional information in data warehousing: demographics of entities such as customers, suppliers, and orders.

Master data that’s stable.

Data that exists from one instance to another. Data that exists across time independent of the systems that created it. Now there’s always a secondary use for data, so there’s more persistent data. A persistent copy may be made or it may be aggregated. The idea of persistence is becoming more fluid.

Stored in its actual format and stays there, unlike in-memory data, which you have only once: close the file and it's gone. You can retrieve persistent data again and again. Persistent data is written to disk; however, disk speed is a bottleneck for the database, which is why databases are trying to move to memory, since it's 16x faster.
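Python's built-in `sqlite3` module offers a quick way to see the persistent versus in-memory distinction from these notes. The sketch writes a row, closes the connection, and reopens it: a file-backed database still has the row, while a `:memory:` database disappears as soon as its connection closes. The table and file names are made up for illustration, and the sketch does not measure the speed difference.

```python
import os
import sqlite3
import tempfile

def store_and_reopen(db_path):
    """Write one row, close the connection, reopen, and count the rows.
    Returns the row count seen after reopening, or None if the table is gone."""
    conn = sqlite3.connect(db_path)
    conn.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO customers (name) VALUES (?)", ("Acme Ltd",))
    conn.commit()
    conn.close()  # for ":memory:", closing discards the whole database

    conn = sqlite3.connect(db_path)  # ":memory:" gives a brand-new, empty database
    try:
        return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    except sqlite3.OperationalError:  # "no such table": nothing survived
        return None
    finally:
        conn.close()

with tempfile.TemporaryDirectory() as tmp:
    on_disk = store_and_reopen(os.path.join(tmp, "tute8.db"))
in_memory = store_and_reopen(":memory:")
print(on_disk, in_memory)  # → 1 None
```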