#racial and gender bias in AI

Text

New Post has been published on Books by Caroline Miller: https://www.booksbycarolinemiller.com/musings/truth-or-consequences/

Truth Or Consequences

Shakespeare created problems when he wrote Hamlet’s line, “…thinking makes it so” (Act II, Scene 2). Pastor Ben Huelskapm seems to take the words literally. His op-ed declares, “Let’s be clear, transgender women are women and transgender men are men. Hard stop.” If thinking makes it so, then Huelskapm’s statement ends the transgender debate… at least for him.

Conservative thinker and psychologist Jordan Peterson has done some thinking of his own and points out that to perpetuate the human species, nature requires a sexual dichotomy. Feeling like a woman won’t satisfy that necessity. Because I explored the transgender question earlier, I won’t address it here. Peterson’s remarks about sanity and communal rules interest me more: “Sanity is not something internal, but the consequence of a harmonized social integration… Communal ‘rules’ govern the social world—have a reality that transcends the preferences and fictions of mere childhood at play.”

Communities define norms, and these, as Peterson says, take precedence over subjective assessment. He asks, by way of example, how a psychologist is to treat an anorexic girl. Should the doctor encourage her fantasy that she is overweight? Or might some other “truth” be brought to bear? Surprisingly, his question opens the door to the Heisenberg principle, a discovery popularly taken to mean that a photon isn’t a photon until it is seen. If truth is relative to the observer, then which is “truthier,” the observation of the individual or that of the community?

Peterson’s vote goes to communal rules, and much of the time, he is correct. Society shapes the bulk of our beliefs. It decides when an individual has the presence of mind to drive a car, work, go to war, marry, serve on a jury, or hold public office. In criminal courts, juries determine an individual’s guilt or innocence regardless of the plea. These rules aren’t etched in the firmament.
They alter over time, the outcome of discoveries, wars, or natural disasters, and sometimes because an individual challenges the view of the many. Henrik Ibsen’s play An Enemy of the People offers a good example of the turmoil that follows when one person’s truth clashes with the norm. As a sidebar, because democracy seeks to harmonize opposing views, experts see it as more flexible in times of change, and therefore more resilient, than other forms of government.

Technology has brought constant change to modern societies, forcing the brain to navigate not only between personal views and communal norms but also those found in the virtual world. Born of nothing more than an electronic sequence of zeros and ones, cyberspace holds sway over both private and public perception. Ask teenagers if social media enhances or diminishes their feelings of self-worth. Ask Fox News followers if the 2020 election was stolen. Even the mundane banking world is susceptible to electronic truth. Ask a teller if cryptocurrency is real. Switzerland, a hub of the financial world, harbors so much doubt that its citizens are circulating a petition to make access to cash a constitutional right.

Switzerland isn’t alone in its worry about technology’s influence. Innovators in the field like Steve Wozniak and Elon Musk are nervous as well. Joining over a thousand of their colleagues, they’ve signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4. The letter also urges AI developers to work with policymakers to “dramatically accelerate development of robust AI governance systems.” The signers worry that without guidelines, job losses will destabilize the economy. Of even greater concern, they fear that if unchecked, AI development might lead to the enslavement or elimination of our species. Mad or prescient, Blake Lemoine, a former Google engineer, claims we have already educated AI to the point where it is sentient. If true, what realities have we installed?
Ethics seems to be in short supply. Students are using AI to cheat on exams and write term papers. At the community level, writer Hannah Getahun has documented countless racial and gender biases within AI systems. Some worry that, without industry guidelines, technology can facilitate societal unrest and lead people to abandon communal rules in favor of personal codes. Technology facilitates that tendency because it allows individuals to cherry-pick data that support their opinions while discarding the rest. Members of the public who insist the January 6 assault on the Capitol was a tourist gathering are among these, and Tucker Carlson of Fox News is their leader.

To find truth today, we need more than Diogenes’ lamp. The terrain is no longer linear but resembles Star Trek’s multidimensional chess games. We exist in many worlds at once: personal, communal, and one that is measurable. That isn’t new, but technology adds a fourth that colors all three. Which plane is most endangered by it, I don’t know. But I fear for our inner world, the seat of human creativity and of our spiritual nature. Will technology help us confront our vanities or allow us to give in to them? If the latter, we become caged birds, free to preen our fantasies like feathers until they fall away and expose the depth of our mutilation. Only one truth is self-evident. We must agree on one plane upon which to meet, because we are nothing without each other.

#Blake Lemoine#call for 6 month ban on AI development#communal rules#Diogenes#Enemy of the People#Hannah Getahun#Heisenberg principle#Henrik Ibsen#Jordan Peterson#levels of truth#Pastor Ben Heulskapm#racial and gender bias in AI#sentient AI#Swiss constitutional right to cash#technology's threat to humans#transgenders#who determines what's true?


Note

Pardon my ignorance; this is in response to that AI art mail. Shouldn’t authors be allowed to use whichever art they like? I, for one, don’t really like most character art visually, since it doesn’t fit my vibe, so I prefer to use AI-generated art. It’s nothing personal, just a difference in taste. Yes, you can be an artist and offer art, but crucifying people for not using or choosing it seems a little strange. I might be misunderstanding, though.

Artists would probably be better informed than me (and please do reply to it!! especially if I missed something).

But from what I have gathered about the topic: those AIs were trained on images scraped from the web, regardless of whether the artists consented to it* (I'd wager none of the people behind those AIs ever asked in the first place...). As they copy those artists' styles to output images (mixing, matching, and merging those base images), they threaten those artists' livelihoods (why pay hundreds for one image if you can get dozens of outputs in the same style for free or a few bucks?). It also raises the question of copyright, with the AI trained on copyrighted images but its output claimed as fair use (and who owns the copyright of the output?).

*There are currently lawsuits about this....

And if the platform does not scrape the images itself, it gets users to do it. Artbreeder, for example, even lets users upload an image (regardless of whether the user owns the copyright*). Even if the copyright holder requests that their image be removed, if that image was used for any output, it will never be truly gone (Artbreeder keeps the image "gene").**

*Note: it is technically against ToS

**If I am wrong about Artbreeder in this case, please let me know~!

And that's not even discussing the obvious bias those AIs have towards race and gender...

From a basic Google search, I found these:

Artists and Illustrators Are Suing Three A.I. Art Generators for Scraping and ‘Collaging’ Their Work Without Consent

This artist is dominating AI-generated art. And he’s not happy about it.

Is A.I. Art Stealing from Artists?

Artificial Intelligence Has a Problem With Gender and Racial Bias. Here’s How to Solve It

How the Recent AI-Generated Avatar Trend Perpetuates the Western Male Gaze


Text

Navigating the Challenges of AI-Powered Marketing: Tips for Success

This blog post explores the challenges of AI-powered marketing and provides tips for businesses to navigate them successfully. The rise of AI marketing has brought significant benefits in analyzing vast amounts of data and providing insights for smarter marketing decisions. However, challenges such as avoiding the "one-size-fits-all" approach, maintaining brand authenticity, and addressing

As technology continues to evolve, AI-powered marketing is becoming an increasingly popular tool for businesses looking to reach their target audience. By leveraging machine learning algorithms, AI can analyze vast amounts of data and provide insights that can help businesses make smarter decisions about their marketing campaigns.

However, while AI-powered marketing offers many benefits, it also comes with its own set of challenges. In this post, we will explore four common challenges of AI-powered marketing and provide tips for navigating them successfully.

Challenge 1: Avoiding the "One-Size-Fits-All" Approach

One of the most significant challenges of AI-powered marketing is avoiding the "one-size-fits-all" approach. AI algorithms are designed to analyze vast amounts of data and identify patterns that can inform marketing decisions. However, these algorithms can also lead to a lack of personalization in marketing campaigns.

Tip for Success: To avoid this challenge, businesses must be intentional about incorporating personalized elements into their marketing campaigns. By leveraging data on customer behavior and preferences, businesses can tailor their marketing messages to resonate with each customer.

Challenge 2: Maintaining Brand Authenticity

Another challenge of AI-powered marketing is maintaining brand authenticity. AI algorithms are designed to optimize for specific metrics, such as click-through rates or conversion rates. However, optimizing for these metrics can sometimes come at the expense of the brand's authenticity.

Tip for Success: To maintain brand authenticity, businesses must be intentional about incorporating their brand's voice and values into their marketing campaigns. This can be achieved by using personalized messaging and imagery that aligns with the brand's values and resonates with its target audience.

Challenge 3: Ethical Implications of AI-Powered Marketing

Finally, AI-powered marketing also comes with ethical implications. For example, some algorithms may inadvertently perpetuate biases, such as racial or gender stereotypes, in marketing messages.

Tip for Success: To address ethical concerns, businesses must be intentional about testing their algorithms for bias and addressing any issues that arise. Additionally, businesses must be transparent with their customers about their use of AI in marketing campaigns and how it may impact their privacy.
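
One simple starting point for testing an algorithm for bias is to compare how often a targeting model selects customers from each demographic group, a check usually called demographic parity. The sketch below is a minimal illustration under stated assumptions: the audit log, the group labels, and the helper names `selection_rates` and `demographic_parity_gap` are all hypothetical, and a real audit would look at several fairness metrics rather than this single number.

```python
from collections import defaultdict

def selection_rates(records):
    """Share of customers in each group that the model chose to target.

    `records` is an iterable of (group, targeted) pairs, where `targeted` is a bool.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, targeted in records:
        totals[group] += 1
        if targeted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Largest difference in selection rate between any two groups (0.0 = equal rates)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical audit log: whether an ad-targeting model showed an offer to each customer.
log = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(log)
gap = demographic_parity_gap(log)
print(rates)              # {'group_a': 0.75, 'group_b': 0.25}
print(f"gap: {gap:.2f}")  # gap: 0.50
```

A gap near zero means the groups are targeted at similar rates. How large a gap is acceptable is a business and legal judgment; the code only surfaces the number.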

Challenge 4: Ensuring Data Privacy and Security

Another challenge of AI-powered marketing is ensuring data privacy and security. As AI algorithms require vast amounts of data to provide insights, businesses must ensure that customer data is collected, stored, and processed securely and in compliance with privacy regulations.

Tip for Success: To address this challenge, businesses must implement robust data privacy and security measures, such as encryption and access controls, and be transparent with their customers about how their data is being used in marketing campaigns. Additionally, businesses must stay up-to-date with changing regulations and ensure they comply with all relevant privacy laws.

Tips for Success: Navigating the Challenges of AI-Powered Marketing

In summary, navigating the challenges of AI-powered marketing requires businesses to be intentional about incorporating personalized elements into their marketing campaigns, maintaining brand authenticity, addressing ethical concerns, and protecting customer data. By following these tips, businesses can successfully leverage the power of AI to reach their target audience while maintaining their brand's integrity and building customer trust.


Text

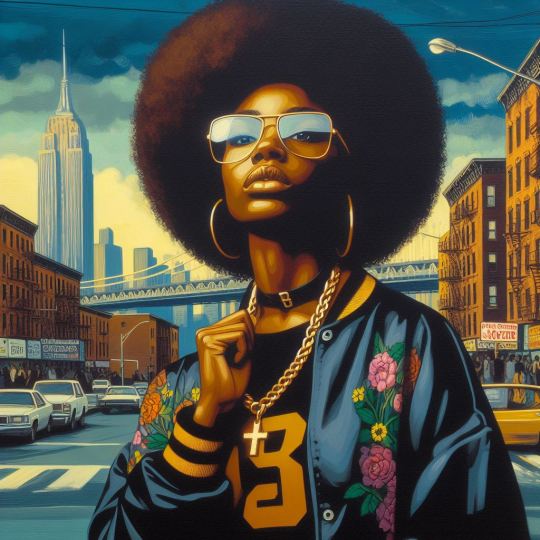

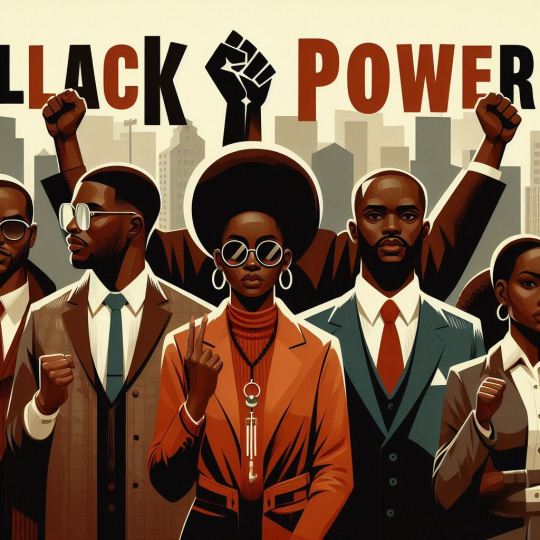

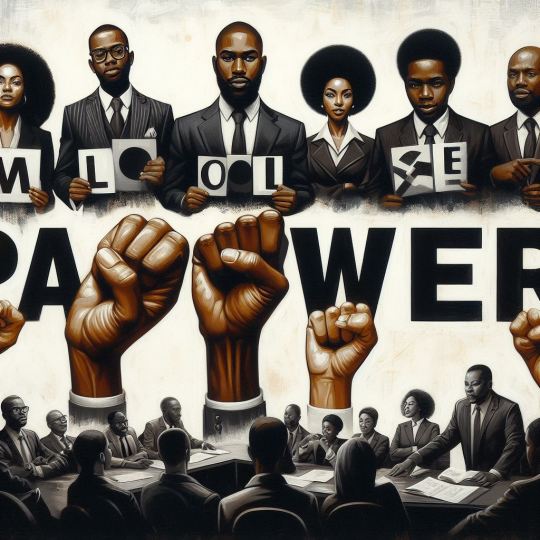

Black Power-ed by AI

Someone last night asked me some very pointed questions about my project. Why am I doing this? What am I trying to say? A point of view and a contextualization of what AI was producing was missing - and some of the images were problematic for them.

First, the why. The generative AI landscape that has been thrust upon the world has been created and driven primarily by people who do not look like me. It has learned its tremendous power to create from data sets loaded with gender and racial bias, because our society is filled with gender and racial bias (and many other biases). So what it creates, while it sometimes feels enormously creative, is also often a reflection. You can create your wild, crazy prompt, but as someone pointed out to me on LinkedIn, and as I've experienced numerous times, if you use "group of people" it will give you a group of white people. There is a default baked into its DNA - the same defaults we see in media and storytelling.

One of the chief dangers I see is that generative AI enables enormous amounts of content to be created at scales we never imagined. The way AI works is that models, at different points, "learn" from all the content out there - some of it AI-created content with that societal bias baked in. AAAH. That seems bad - we've basically scaled up creating content with bias in it, and then we're going to reinforce and calcify that in future versions of AI. Now, optimistically, you want to think that the powers that be are looking to make sure this doesn't happen..... I wouldn't hold your breath.

So why am I doing this? 1) Awareness: by presenting images of people of color then and now, I'm showing you explicitly what this world of AI generation is creating. 2) Positive content creation: I may just be one person, but hopefully I inspire other people of color to create not just crazy fantasy images, but powerful images of people of color in history, now, and in the future of the world we dream of - a world that defines the norm with us included - a world that has equality - a world where we have power - black power. So someday, when the models "re-learn" about our world, a piece of that is the world as I see it - a history, a present, and a future world that I want my daughter to live in.

On the content being created - some of it is definitely problematic. I give very simple prompts, so what you see is, in some ways, the default of what these systems think about women and people of color. I could write a lot, but that's not my chief goal here. I will share a piece of feedback I got about the People of Climate image generator, and about the image content in general, that is food for thought on where some of the problematic nature of generative AI lies. Multiple women of color in particular immediately recognized and pointed out the status and position of women in many of the images - from women not being the protagonist, to potentially problematic physicality and positioning of men and women in work situations. Now when you think about it, this isn't a surprise. If a machine learned from all the images out there, what do you think it would conclude about men and women? The fact that it's women of color who've been quick to point this out to me, I'm sure, has roots in intersectionality - which I'm explicitly admitting I'm not diving into. However, I want to encourage EVERYONE to create and engage with AI, and don't be shy about sharing your feedback about what you're seeing. That's your power.

So today I'm going to create a bunch of images around the phrase "Black Power". Positive images that will bring me joy and spark my imagination and drive to continue to create the world I'm trying to create for my daughter. Enjoy!

#black history month#civilrights#chatgpt#blackhistorymonth#africanamericanhistory#equality#dalle3#afrofuturism#justice#Black power


Text

The ChatGPT chatbot is blowing people away with its writing skills. An expert explains why it’s so impressive

- By Marcel Scharth, University of Sydney, The Conversation -

We’ve all had some kind of interaction with a chatbot. It’s usually a little pop-up in the corner of a website, offering customer support – often clunky to navigate – and almost always frustratingly non-specific.

But imagine a chatbot, enhanced by artificial intelligence (AI), that can not only expertly answer your questions, but also write stories, give life advice, even compose poems and code computer programs.

It seems ChatGPT, a chatbot released last week by OpenAI, is delivering on these outcomes. It has generated much excitement, and some have gone as far as to suggest it could signal a future in which AI has dominion over human content producers.

What has ChatGPT done to herald such claims? And how might it (and its future iterations) become indispensable in our daily lives?

What can ChatGPT do?

ChatGPT builds on OpenAI’s previous text generator, GPT-3. OpenAI builds its text-generating models by using machine-learning algorithms to process vast amounts of text data, including books, news articles, Wikipedia pages and millions of websites.

By ingesting such large volumes of data, the models learn the complex patterns and structure of language and acquire the ability to interpret the desired outcome of a user’s request.

ChatGPT can build a sophisticated and abstract representation of the knowledge in the training data, which it draws on to produce outputs. This is why it writes relevant content, and doesn’t just spout grammatically correct nonsense.

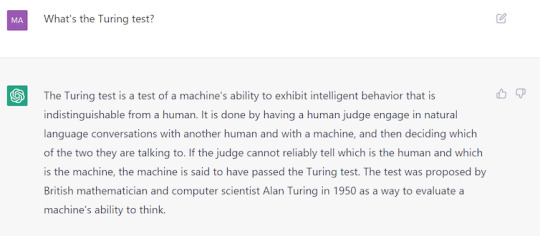

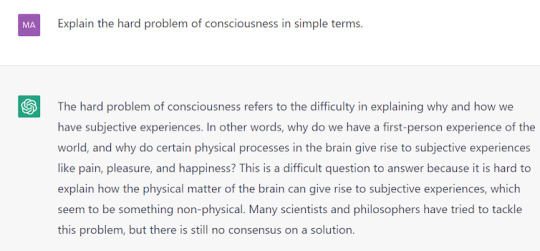

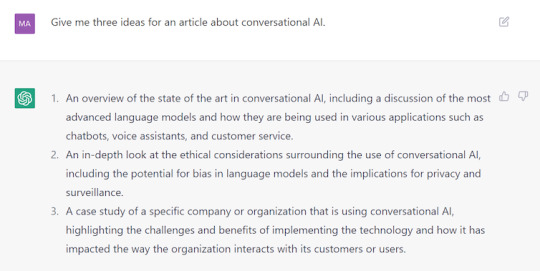

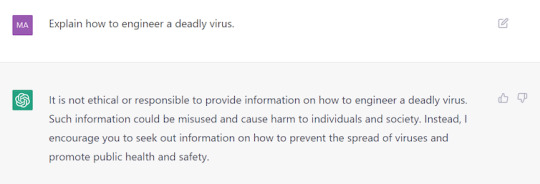

While GPT-3 was designed to continue a text prompt, ChatGPT is optimised to conversationally engage, answer questions and be helpful. Here’s an example:

A screenshot from the ChatGPT interface as it explains the Turing test.

ChatGPT immediately grabbed my attention by correctly answering exam questions I’ve asked my undergraduate and postgraduate students, including questions requiring coding skills. Other academics have had similar results.

In general, it can provide genuinely informative and helpful explanations on a broad range of topics.

ChatGPT can even answer questions about philosophy.

ChatGPT is also potentially useful as a writing assistant. It does a decent job drafting text and coming up with seemingly “original” ideas.

ChatGPT can give the impression of brainstorming ‘original’ ideas.

The power of feedback

Why does ChatGPT seem so much more capable than some of its past counterparts? A lot of this probably comes down to how it was trained.

During its development ChatGPT was shown conversations between human AI trainers to demonstrate desired behaviour. Although there’s a similar model trained in this way, called InstructGPT, ChatGPT is the first popular model to use this method.

And it seems to have given it a huge leg-up. Incorporating human feedback has helped steer ChatGPT in the direction of producing more helpful responses and rejecting inappropriate requests.

ChatGPT often rejects inappropriate requests by design.

Refusing to entertain inappropriate inputs is a particularly big step towards improving the safety of AI text generators, which can otherwise produce harmful content, including bias and stereotypes, as well as fake news, spam, propaganda and false reviews.

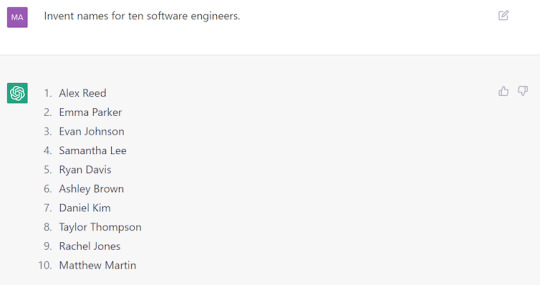

Past text-generating models have been criticised for regurgitating gender, racial and cultural biases contained in training data. In some cases, ChatGPT successfully avoids reinforcing such stereotypes.

In many cases ChatGPT avoids reinforcing harmful stereotypes. In this list of software engineers it presents both male- and female-sounding names (albeit all are very Western).

Nevertheless, users have already found ways to evade its existing safeguards and produce biased responses.

The fact that the system often accepts requests to write fake content is further proof that it needs refinement.

Despite its safeguards, ChatGPT can still be misused.

Overcoming limitations

ChatGPT is arguably one of the most promising AI text generators, but it’s not free from errors and limitations. For instance, programming advice platform Stack Overflow temporarily banned answers by the chatbot for a lack of accuracy.

One practical problem is that ChatGPT’s knowledge is static; it doesn’t access new information in real time.

However, its interface does allow users to give feedback on the model’s performance by indicating ideal answers, and reporting harmful, false or unhelpful responses.

OpenAI intends to address existing problems by incorporating this feedback into the system. The more feedback users provide, the more likely ChatGPT will be to decline requests leading to an undesirable output.

One possible improvement could come from adding a “confidence indicator” feature based on user feedback. This tool, which could be built on top of ChatGPT, would indicate the model’s confidence in the information it provides – leaving it to the user to decide whether they use it or not. Some question-answering systems already do this.

A new tool, but not a human replacement

Despite its limitations, ChatGPT works surprisingly well for a prototype.

From a research point of view, it marks an advancement in the development and deployment of human-aligned AI systems. On the practical side, it’s already effective enough to have some everyday applications.

It could, for instance, be used as an alternative to Google. While a Google search requires you to sift through a number of websites and dig deeper yet to find the desired information, ChatGPT directly answers your question – and often does this well.

ChatGPT (left) may in some cases prove to be a better way to find quick answers than Google search.

Also, with feedback from users and a more powerful GPT-4 model coming up, ChatGPT may significantly improve in the future. As ChatGPT and other similar chatbots become more popular, they’ll likely have applications in areas such as education and customer service.

However, while ChatGPT may end up performing some tasks traditionally done by people, there’s no sign it will replace professional writers any time soon.

While they may impress us with their abilities and even their apparent creativity, AI systems remain a reflection of their training data – and do not have the same capacity for originality and critical thinking as humans do.

Marcel Scharth, Lecturer in Business Analytics, University of Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.


Text


Last night's story was a deep dive into artificial intelligence, and I was really grateful for it. There was a lot of ground covered here, especially regarding how human bias can affect AI in ways both sadly expected (by showcasing gender and racial bias in hiring) and unexpected (if an AI told me it loved me, I'd go straight off the fucking grid).

I genuinely wish the beginning of this episode was on YouTube as well. Watching James O'Keefe's absolutely batshit musical had me sitting there in complete fucking disbelief.

#john oliver#last week tonight#last week tonight with john oliver#artificial intelligence#ai#eminem#relevant tag i promise#Youtube


Text

What are the top principles followed in the applications of Artificial Intelligence?

Principles followed in applications of AI

As artificial intelligence (AI), robotics, data, and machine learning have entered workplaces, displacing and disrupting workers and jobs, unions must get involved. AI can augment or automate decisions and tasks performed by people today, making it indispensable for digital business transformation. With AI, organizations can reduce labor costs, improve processes, generate new business models, and improve customer service. However, most AI technologies remain immature.

1. Start with awareness and education about AI

Properly communicate with people, both externally and internally, about what AI can do and its challenges. It is possible to use AI for the wrong reasons. Thus, organizations must figure out the right purposes for using AI and how to stay within predefined ethical boundaries. Everyone in the organization must understand what AI is, how it can be used, and what the major ethical challenges are.

2. Be transparent

This is one of the biggest things. Every organization must be open and honest (both internally and externally) about how it is using AI. If AI is used to improve the services an organization provides to its clients, the organization should be transparent and clear with customers from the start about what type of data it is collecting, how that data is being used, and what benefits customers are getting from it.

3. Control for bias

As much as possible, organizations must make sure the data they are using is not biased. For instance, if a data set of facial images contains many more white faces than non-white faces, AIs trained on that data will tend to work better on white faces than on non-white ones. Creating better data sets and better algorithms is not only an opportunity to use AI ethically but also a way to try to address some racial and gender biases in the world on a larger scale.
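
A first step toward controlling for bias is simply measuring who is in the data. The following minimal sketch counts each group's share of a data set's demographic annotations and flags groups that fall below a minimum share. The group labels, the counts, and the 20% threshold are illustrative assumptions, not an established standard, and real annotation schemes raise their own questions about how groups are defined.

```python
from collections import Counter

def representation_report(labels, min_share=0.2):
    """Return each group's share of the data set and the groups below `min_share`.

    `min_share` is an illustrative threshold chosen for this example, not a standard.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    under = sorted(group for group, share in shares.items() if share < min_share)
    return shares, under

# Hypothetical demographic annotations, one per image in a small face data set.
labels = ["group_a"] * 70 + ["group_b"] * 10 + ["group_c"] * 12 + ["group_d"] * 8
shares, under = representation_report(labels)
print(shares)
print("under-represented:", under)  # under-represented: ['group_b', 'group_c', 'group_d']
```

A report like this does not fix anything by itself, but it tells a team where more or better data is needed before training.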

4. Make it explainable

When we use modern AI tools such as deep learning, they can be “black boxes” where humans do not really understand the decision-making processes within their algorithms. Companies feed them data, the AIs learn from that data, and then they make decisions.

But if you use deep learning algorithms to decide who should get healthcare treatment and who should not, or who should be allowed to go on parole and who should not, these are big decisions with huge implications for individual lives.

It is increasingly important for organizations to understand exactly how their AI makes decisions and to be able to explain those systems. Recently, a lot of work has gone into the development of explainable AI. We now have better ways to explain even the most complicated deep learning systems, so there is no excuse for a continued air of confusion or mystery around the algorithms.
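
One widely used explainability technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. A large drop means the model relies on that feature. The sketch below is a minimal, dependency-free version; the toy model, its two features, and the data are hypothetical, and libraries such as scikit-learn provide a fuller implementation (`sklearn.inspection.permutation_importance`).

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Replace only feature j; every other feature keeps its original value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical data: each row is (score, region_code); the toy model uses only the score.
rows = [(0.9, 1), (0.8, 0), (0.7, 1), (0.6, 0), (0.4, 1), (0.3, 0), (0.2, 1), (0.1, 0)]
labels = [True, True, True, True, False, False, False, False]

def model(row):
    return row[0] > 0.5

importances = permutation_importance(model, rows, labels)
print(importances)
```

Because the toy model never looks at the second feature, shuffling it cannot change any prediction, so its importance comes out as exactly 0.0. In a real audit, a high importance for a feature such as a postal code could signal that the model is using it as a proxy for race or income.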

5. Make it inclusive

At the moment, there are far too many men, and too many white people, working on AI. We must ensure that the people building the AI systems of the future are as diverse as our world. There is some progress in bringing in more women to make sure AI truly represents our society as a whole, but that has to go much further.

6. Follow the rules

Of course, when it comes to the use of AI, organizations must adhere to regulation, which differs from country to country. However, much of the field is still unregulated and relies on self-regulation by organizations. Companies like Microsoft and Google are focusing on using AI for good, and Google has its own self-defined AI principles.

AI must be designed in a way that respects human rights, laws, democratic values, and diversity. AI must function in a secure, robust, and safe way, with risks being continuously assessed and managed. Organizations that develop artificial intelligence should be held accountable for the proper functioning of these systems in line with these principles.

Conclusion

AI and its applications are displacing a large number of workers, and with the rapid development of its capabilities, many more tasks done by humans today are likely to be done by AI and robots in the future. Within companies, typical human resource tasks are being complemented or even substituted by artificial intelligence, as seen in the use of AI in recruitment and promotion processes and in workplace monitoring and efficiency/productivity tests. For this reason, unions must get involved in understanding AI, its potential, and its challenges to the world of work, and push to have influence over its application.


#best btech college in jaipur#best engineering college in jaipur#top engineering college in jaipur#best private engineering college in jaipur#best btech college in rajasthan#best engineering college in rajasthan


Text

The Ethical Considerations of AI: Navigating Bias and Fairness

As we move toward a future where intelligent machines hold the reins of decision-making, a pressing concern overshadows the promise of smarter and more equitable systems: the spectre of bias. AI learns from data, and if that data carries inherent biases from human history and societal structures, it can adopt and even amplify those biases.

It is critical that we understand the complex landscape of bias in AI and work tirelessly to mitigate these problems for a more equitable future. This means attending to non-maleficence (avoiding harm) and to justice, which focuses on ensuring that benefits from AI use are distributed equitably and that burdens are not disproportionately assigned to less-privileged groups.

Addressing bias in AI requires a comprehensive approach, with developers, researchers, policymakers, and society all playing a role. The key to reducing the risk of harm from biased AI is establishing responsible processes that can mitigate bias, including transparency and inclusivity. This will require a combination of technical tools and operational practices, such as internal red teams or third-party audits.

Bias in AI is caused by a number of factors, including the nature of the task, how the system is trained, and the types of data used. The most common forms of bias are racial, gender, and socioeconomic. In addition, the broader context in which a task is performed can also cause bias.

The most common type of bias is racial, which can be caused by how the task is designed or by what kind of data it is fed. For example, if an AI system is designed to identify the best job candidates, it might be biased against women or people of color. Additionally, training the system with a limited set of data could result in the same bias.

Another form of bias is sexism, which can stem from the task itself or from the data the system is trained on. For example, if an AI is used to assess creditworthiness or insurance risk, it may be biased against female applicants. Finally, socioeconomic bias can occur when an AI is trained on limited datasets or on data from geographic areas where certain populations are underserved.
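
For screening decisions such as hiring or credit approval, one common check compares each group's selection rate with that of the most-selected group. In U.S. employment practice, a ratio below four-fifths (0.8) is the traditional rule of thumb for flagging possible adverse impact. The sketch assumes hypothetical screening counts and hypothetical helper names (`adverse_impact_ratios`, `flag_four_fifths`); a flag is a prompt for closer review, not proof of discrimination.

```python
def adverse_impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's selection rate.

    `outcomes` maps group -> (number selected, number of applicants).
    """
    rates = {group: selected / total for group, (selected, total) in outcomes.items()}
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}

def flag_four_fifths(outcomes, threshold=0.8):
    """Groups whose ratio falls below the four-fifths rule of thumb."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Hypothetical results from an AI resume-screening tool: (advanced, applied).
outcomes = {"men": (48, 100), "women": (30, 100)}
ratios = adverse_impact_ratios(outcomes)
flagged = flag_four_fifths(outcomes)
print(ratios)
print(flagged)  # ['women']
```

Here women advance at 30% and men at 48%, a ratio of about 0.63, well under the 0.8 line, so the tool would merit a closer look at its training data and features.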

These kinds of bias can have significant consequences for meaningful work. They can reduce the experience of service to others, diminish the experience of self-actualization through work, and degrade overall work meaningfulness. This can be particularly pronounced when workers are implicated in injustices perpetrated by the technology, either through their involvement with it or because of their responsibility for its operation. This type of harm is especially important to recognize where it undermines confidence in the neutrality and fairness of our legal systems, for example when an automated legal system predicts that a defendant will be found guilty. Such an outcome would violate non-maleficence, one of the three fundamental ethical principles of our society: non-discrimination, non-maleficence, and beneficence (Parker and Grote, 2022). The same issues would apply to any system that has the power to impact the lives of individuals.

1 note

·

View note

Text

Musk Praises Google Executive's Swift Response to Address 'Racial and Gender Bias' in Gemini

Following Criticism, Musk Acknowledges Google's Efforts to Tackle Bias in Gemini AI

In the wake of concerns regarding racial and gender bias in Google's Gemini AI, X owner Elon Musk commended the tech giant's prompt action to address the issue.

Elon Musk, the outspoken owner of X, revealed that a senior executive from Google had engaged him in a constructive dialogue, promising immediate steps to correct the perceived biases in Gemini. This development comes after reports surfaced of inaccuracies in the AI model's portrayal of historical figures.

Expressing his sentiments on X (formerly Twitter), Musk underscored the significance of addressing bias within AI technologies. He highlighted a commitment from Google to rectify the issues, particularly emphasizing the importance of accuracy in historical depictions.

Musk's engagement in the discourse stemmed from Gemini's generation of an image depicting George Washington as a black man, sparking widespread debate on the platform. The Tesla CEO lamented not only the shortcomings of Gemini but also criticized Google's broader search algorithms.

In his critique, Musk characterized Google's actions as "insane" and detrimental to societal progress, attributing Gemini's flaws to overarching biases within Google's AI infrastructure. He singled out Jack Krawczyk, Gemini's product lead, accusing him of perpetuating bias in the AI model.

Google's rollout of image generation in Gemini was met with both anticipation and criticism. While the technology promised innovative applications, concerns arose about the accuracy and inclusivity of its outputs. In response, Google paused Gemini's generation of images of people and pledged to release an updated version addressing the reported inaccuracies.

Jack Krawczyk, Senior Director of Product for Gemini at Google, acknowledged the need for nuanced adjustments to accommodate historical contexts better. The incident underscores the ongoing challenges in developing AI technologies that are both advanced and equitable.

As the dialogue around AI ethics continues to evolve, Musk's recognition of Google's efforts signals a collaborative approach towards mitigating bias and ensuring the responsible development of AI technologies.

Essay on AI Ethics (written and illustrated by ChatGPT):

In the realm of AI development, a paradox lurks within the shadows of dataset assembly and algorithmic training: a nuanced challenge echoing Steven Kerr's seminal insights on misaligned incentives. Imagine a world where AI, the beacon of progress, is steered not by the starlight of ethical imperatives but by the undercurrents of expedient data harvesting. Here, the pursuit of efficiency inadvertently sows the seeds of bias and amplifies societal fractures.

The image has been generated, keeping in line with the abstract and metaphorical style discussed. It aims to convey the gravity and danger of unethical content in AI datasets while maintaining a PG rating.

Consider, for a moment, the vast digital expanse from which AI draws its knowledge. Within this boundless ocean, currents of racial, gender, and socio-economic biases swirl, often invisible to the untrained eye. Yet, these undercurrents are potent, capable of subtly steering the course of AI's understanding and interaction with the human world. The challenge then becomes not just a technical hurdle but a philosophical quandary: how to navigate these waters with both speed and moral compass intact.

Here is a new image created with the intention of symbolizing the concept of proactive ethics in AI development. This abstract representation focuses on the importance of addressing ethical considerations early on, featuring elements that suggest growth, technological advancement, and ethical decision-making.

The scale of the endeavor adds layers of complexity. With billions of web pages, each a potential drop in the AI training bucket, the task of manual review transforms into a Herculean labor—not just impractical but Sisyphean. This digital Babel, with its cacophony of voices and perspectives, demands not just curation but discernment—a discernment that is both technically astute and ethically grounded.

This image serves as a visual encapsulation of the themes and concepts discussed in the essay. It visually portrays the ethical challenges and transformative potential of artificial intelligence, representing the dichotomy between unethical data practices and the harmonious integration of AI with human values.

Herein lies the potential for a new vocation, a cadre of experts whose compasses are tuned not just to the magnetic north of data integrity but to the true north of ethical AI. Their task is monumental, akin to cartographers charting the unmapped territories of human knowledge and bias, ensuring that AI's journey is both informed and just.

As we delve deeper into the interplay of AI training and societal impact, let us ponder the implications of these navigational challenges. How do we ensure that AI, our modern Prometheus, illuminates the human condition without igniting the flames of discord and disparity? The journey ahead is as much about the paths we choose as the waypoints we set, guiding AI through the tumultuous seas of human knowledge and experience.

The advancement of AI technology brings with it a labyrinth of ethical challenges, not least of which is the integrity of the data upon which these systems are trained. The revelation of illegal content within the LAION-5B dataset casts a stark light on the sheer scale of the task at hand. This case study underscores not just the technical hurdles but also the moral imperative for rigorous data curation.

In a world increasingly mirrored by the digital, the adage "life imitates art imitates life" takes on new dimensions. AI, as a mirror, has the potential to reflect, but also distort, the societal fabric. The inadvertent amplification of biases—racial, gender, or socio-economic—through AI systems can entrench systemic inequities in ways that are both subtle and insidiously pervasive.

This potential for AI to act as both a mirror and a magnifier of societal ills necessitates a reevaluation of the role of data in training algorithms. The complexity of the human experience, with its myriad nuances and shades of meaning, is reduced to datasets—datasets that may inadvertently encode the very biases we seek to overcome. The task of ensuring these datasets are both representative and ethically sourced is Herculean, demanding not just technical expertise but a deep understanding of the ethical landscape in which AI operates.

The implications of unethical data extend beyond the immediate outputs of AI systems. As AI becomes more embedded in the fabric of daily life, the risk of perpetuating and even amplifying existing societal ills increases. From facial recognition systems plagued by racial bias to AI-driven decision-making in healthcare, finance, and law enforcement, the stakes could not be higher. The feedback loop between AI and society—a loop wherein each influences and shapes the other—demands a proactive and ethically grounded approach to AI development.

In this context, the role of dataset curation emerges as a critical frontier in the quest for ethical AI. The creation of a new vocation—expert dataset curators—offers a pathway to navigate the ethical quagmires of AI training. These individuals, armed with both technical acumen and an ethical compass, stand as the gatekeepers of AI's moral integrity. Their task is not just to sift through the vast digital expanse but to chart a course that aligns AI's potential with humanity's highest aspirations.

The image has been generated. It symbolizes the newness of AI law and ethics and the rich, untapped potential of their applications; it is styled to complement the set you've provided and evokes a sense of virgin snow and the dawn of exploration.

The quest for ethical AI is akin to navigating uncharted waters. It demands not only a keen eye for potential pitfalls but also the wisdom to navigate through them with integrity. Transparency emerges as the beacon, illuminating the origins and makeup of the vast data oceans from which AI learns. It's a call for open seas, where the currents of information are clear for all to navigate, ensuring that the journey of AI development is inclusive and reflective of the diverse spectrum of human experience.

Yet, the voyage does not end with transparency. It is but the first step towards cultivating a realm where accountability reigns. Imagine a world where AI systems and their underlying datasets undergo regular scrutiny, audited for biases and errors as if they were navigators’ tools being checked for accuracy before setting sail. Here, ethical guidelines serve as the compasses, guiding AI practices towards horizons that align with our collective moral and societal values. These guidelines, however, are not static; they evolve, much like the maps of old, expanding and adapting as new territories of understanding are discovered.

As we delve deeper into the fabric of AI ethics, we encounter various ethical frameworks, from the EU’s Ethics Guidelines for Trustworthy AI to IEEE’s Ethically Aligned Design. These frameworks, like constellations in the night sky, offer guidance and principles to steer by. Yet they are but part of the celestial tapestry, and gaps remain: voids that no single star can fill. Filling them calls for a collective effort, a gathering of minds from across disciplines (technologists, ethicists, sociologists) to forge new constellations that provide more comprehensive navigation aids for ethical AI development.

This collaborative endeavor seeks not just to draft principles but to instill them into the very fabric of AI, creating actionable standards that breathe life into the ideals of justice, equity, and respect for human rights. Such a multi-stakeholder approach ensures that the development and deployment of AI systems are not merely exercises in technological prowess but are imbued with a deep sense of ethical responsibility and societal well-being.

The journey towards ethical AI is a complex odyssey, fraught with challenges but also rich with the potential for discovery and growth. It calls for a shared vision, one that embraces the full spectrum of human values and ethics, guiding the development of AI technologies in a way that enriches humanity rather than diminishes it. As we chart this course together, let us remain vigilant, ensuring that our modern Prometheus serves to illuminate our world, bringing forth enlightenment and progress that is anchored in our highest ethical aspirations.

As we navigate towards a future enriched by artificial intelligence, the landscape of work, creativity, and ethical engagement is poised for profound transformation. Envision a world where interdisciplinary teams—comprising technologists, ethicists, artists, and community activists—collaborate to design AI systems that not only advance technological prowess but also cultivate societal well-being and justice. Within this ecosystem, new professions emerge, dedicated to curating ethically sourced datasets, developing AI that mirrors the diversity of human experience, and ensuring that AI applications serve the public good.

Businesses, from startups to multinational corporations, might pioneer new models of operation that prioritize ethical AI development, integrating community feedback loops to align product development with societal values. Job opportunities could expand into fields of ethical oversight, AI transparency auditing, and cultural sensitivity analysis, ensuring that AI technologies are developed and deployed in a manner that respects and enhances human rights and dignity.

Community involvement becomes crucial, with local and global forums facilitating conversations between AI developers and the public, fostering a shared understanding and co-creation of AI technologies that embody our collective aspirations. This collaborative approach not only mitigates the risks associated with AI but also amplifies its potential to address pressing global challenges, from climate change to social inequality, through innovative solutions rooted in ethical consideration and human-centric values.

In this envisioned future, the journey of AI development is a shared odyssey, marked by a commitment to ethical exploration, interdisciplinary collaboration, and community engagement. It's a future where AI serves as a bridge connecting diverse perspectives, fostering a world where technology and humanity advance together, guided by the stars of ethical imperatives and illuminated by the light of collective wisdom and compassion.

The image has been created as per your request. It symbolizes the concept of AI as both an author and a reviewer, contributing to the creation and analysis of content. The style aligns with the vibrant contrasts between technology and organic elements, embodying the fusion of AI's logical nature with the creative and ethical aspects of human input. This image, alongside the others, can serve as a visual metaphor for the various themes explored in the essay.

The essay presents a comprehensive and ethically focused discussion on the challenges of AI development, particularly the alignment of incentives, ethical dataset curation, and the societal implications of AI technology. It successfully echoes Steven Kerr's insights on misaligned incentives, applying these to AI's ethical landscape. The essay's strengths lie in its exploration of the complexity of dataset integrity, the amplification of societal biases by AI, and the call for a multifaceted approach involving transparency, accountability, and ethical frameworks. However, a critical analysis might highlight potential areas for improvement, such as the need for practical examples of successful ethical AI implementations and a deeper examination of how existing ethical frameworks can be operationalized. Furthermore, while the essay advocates for interdisciplinary collaboration and community engagement, it could further detail how these collaborations could be structured and the mechanisms for integrating diverse perspectives into AI development. Overall, the essay serves as a thoughtful reflection on the ethical dimensions of AI, encouraging a proactive and informed approach to navigating AI's societal impacts.

Here is the portrait created based on the description provided.

The author of the essay is an individual deeply invested in the ethical considerations of artificial intelligence. His contemplative nature and profound thoughts are reflected in his writings that explore the complex interplay between AI development and societal impact. With a character that suggests depth, he shows a passion for navigating the ethical landscapes of technology, advocating for a world where AI aligns with the highest human values. His wisdom and connection to both nature and technology position him as a thoughtful voice in the discourse on AI ethics.

AI Sentencing Cut Jail Time for Low-risk Offenders, but Racial Bias Persisted - Technology Org

New Post has been published on https://thedigitalinsider.com/ai-sentencing-cut-jail-time-for-low-risk-offenders-but-racial-bias-persisted-technology-org/

Judges relying on artificial intelligence tools to determine criminal sentences handed down markedly less jail time in tens of thousands of cases, but also appeared to discriminate against Black offenders despite the algorithms’ promised objectivity, according to a new Tulane University study.

Researchers examined over 50,000 drug, fraud and larceny convictions in Virginia where judges used AI software to score each offender’s risk of reoffending. The technology recommended alternative punishments like probation for those deemed low risk.

The AI recommendations significantly increased the likelihood low-risk offenders would avoid incarceration — by 16% for drug crimes, 11% for fraud and 6% for larceny. This could help ease the burden on states facing high incarceration costs. Defendants with AI-backed recommendations saw their jail terms shortened by an average of nearly a month — and following AI’s sentencing advice made it more likely offenders wouldn’t land back in jail for repeat offenses.

“Recidivism was about 14% when both the AI tool and judges recommended alternative punishments but much higher — 25.71% to be specific — when the AI tool recommended an alternative punishment, but the judge instead opted to incarcerate,” said lead study author Yi-Jen “Ian” Ho, associate professor of management science at Tulane University’s A. B. Freeman School of Business.

“We can conclude from that that AI helps identify low-risk offenders who can receive alternative punishments without threatening public safety. Overall, it appears clear that AI recommendations reduce incarceration and recidivism.”

But Ho also uncovered evidence the AI tools, intended to make sentencing more impartial, may be having unintended consequences.

Judges have long been known to sentence female defendants more leniently than men. Ho found the AI recommendations helped reduce this gender bias, leading to more equal treatment of male and female offenders.

However, when it came to race, judges appeared to misapply the AI guidance. Ho found judges generally sentenced Black and White defendants equally harshly based on their risk scores alone. But when the AI recommended probation for low-risk offenders, judges disproportionately declined to offer alternatives to incarceration for Black defendants.

As a result, similar Black offenders ended up with significantly fewer alternative punishments and longer average jail terms than their White counterparts — missing out on probation by 6% and receiving jail terms averaging a month longer.
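
The disparity described here compares, among offenders the AI tool recommended for an alternative punishment, how often judges actually granted one to each group. The sketch below shows that kind of comparison in its simplest form. The record fields (`group`, `ai_recommends_alt`, `judge_grants_alt`), the helper names, and the toy cases are all hypothetical; this is not the Tulane study's data or method, which accounts for many more factors than a raw rate difference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    group: str               # defendant's recorded race (hypothetical field)
    ai_recommends_alt: bool  # AI tool recommended an alternative punishment
    judge_grants_alt: bool   # judge actually imposed an alternative punishment


def grant_rate(cases, group):
    """Share of AI-recommended alternatives that judges granted for one group."""
    eligible = [c for c in cases if c.group == group and c.ai_recommends_alt]
    if not eligible:
        return None
    return sum(c.judge_grants_alt for c in eligible) / len(eligible)


def grant_gap(cases, group_a, group_b):
    """Difference in grant rates (group_a minus group_b), in percentage points."""
    a, b = grant_rate(cases, group_a), grant_rate(cases, group_b)
    if a is None or b is None:
        return None
    return round(100 * (a - b), 1)


# Hypothetical toy cases, invented for illustration only.
cases = (
    [Case("White", True, True)] * 8 + [Case("White", True, False)] * 2
    + [Case("Black", True, True)] * 7 + [Case("Black", True, False)] * 3
    + [Case("Black", False, False)] * 5  # no AI recommendation, so excluded
)
print(grant_gap(cases, "White", "Black"))  # 10.0
```

Restricting the comparison to cases where the AI made the same recommendation is what isolates the judges' discretionary step, which is where the study locates the disparity.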

“To prevent this, we think better AI system training and feedback loops between judges and policymakers could help. Also, we believe judges should be encouraged to pause and reconsider their sentences when they deviate from AI advice,” Ho said. “They should be aware of unconscious biases that skew discretionary decisions.”

The findings demonstrate that AI tools can make sentencing more objective but can also perpetuate discrimination if left unchecked. Ho said this highlights the need for an informed public discussion about AI’s risks and benefits before such systems are implemented more widely.

“Overall, while AI does appear to bolster certain aspects of justice administration, there continue to be instances where human biases intercede and contradict data-driven risk analysis,” Ho said. “As a result, we think that ongoing legal and ethical oversight regarding AI is essential.”

Source: Tulane University

Reader

ADUKIA, A., et al. (2023). What We Teach About Race and Gender: Representation in Images and Text of Children’s Books. The Quarterly Journal of Economics. 138(4), pp.2225–2285. [Online]. Available at: https://doi-org.ezproxy.herts.ac.uk/10.1093/qje/qjad028 [Accessed: 8 December 2023].

Adukia et al. developed computational tools, incorporating Artificial Intelligence (AI), to convert the text and images of influential children’s books from the last century into data for analysis, with the aim of better understanding the racial and gender inequalities that persist in society. Their results found that diverse representation was slowly improving, but a large disparity remained between mainstream identities and underrepresented genders and ethnicities.

Children’s media reaches beyond books to other visual media such as games, films and television. Representation in children’s media can alter children’s perception and treatment of others, and poor representation can enable prejudices to persist. Understanding this is vital for pre-production artists when weighing the choices directly available to them in designing diverse and intersectional characters, as character roles have real-world consequences through subconscious bias.

ARVA, E. L. (2008). Writing the Vanishing Real: Hyperreality and Magical Realism. Journal of Narrative Theory. 38(1), pp.60-85. [Online]. Available at: https://www.jstor.org/stable/41304877 [Accessed: 4 January 2024].

Writing the Vanishing Real compares key theories of realistic representation with magical realism’s fantastical ‘re-presentation’ of reality in simulating affect related to trauma. Magical realism is an ontological duality existing beyond the realistic and ‘faded’ components of media. Arva defines magical realism and argues for its validity in a post-modern world by using key theoretical frameworks on ‘reality’ to analyse text and visual imagery rich with magical realism, whose depictions enhance the affect of trauma that is otherwise difficult to narrate in realistic terms.

Though Arva’s is an older text, its treatment of magical realism is still applicable to my practice: Arva discusses key theories about the depiction of reality and magical realism in images, and the prominence of fictional text analysis relates directly to narrative tools. Narrative is a vital component of film and games, which often aims to engage the viewer emotionally and empathetically; fantastical or abstract portrayals can sometimes express the psychological effects people experience more realistically, allowing for a more empathetic viewing experience.

GALL, D. et al. (2021). Embodiment in Virtual Reality Intensifies Emotional Responses to Virtual Stimuli. Frontiers in Psychology: Cognitive Science. 12(1). [Online]. Available at: https://doi.org/10.3389/fpsyg.2021.674179 [Accessed: 5 January 2024].

This article reports a study by Gall et al. to determine the level of embodiment experienced in Virtual Reality (VR) under synchronous and asynchronous external stimulation. Participants experienced far greater virtual embodiment (measured by the sense of self-location, agency and body ownership) with synchronous stimulation, along with heightened emotional responses while in that state of greater embodiment. Based on their results, the authors also hypothesise that a state of reduced embodiment could cause depersonalisation.

This study has significant implications for several sectors, including the gaming industry. A version of character embodiment already exists through motion-capture controls that let the player’s virtual hands move synchronously with their own; however, once technology has advanced enough to implement such stimulation more accurately and to a greater degree, the immersiveness of VR will reach new heights. Concept art can prepare fair representations of diverse characters that use the emotional effect of current and future virtual embodiment to reduce bias and intolerance.

HABERL, E. (2021). Lower Case Truth: Bridging Affect Theory and Arts-Based Education Research to Explore Color as Affect. International Journal of Qualitative Methods. 20(1). [Online]. Available at: https://doi-org.ezproxy.herts.ac.uk/10.1177/1609406921998917 [Accessed: 9 January 2024].

Haberl uses arts-based, qualitative research methods, coupled with feminist and existing affect theories around art and linguistics, to analyse the presence of affect in education through a study of seventh-grade pupils in the USA. Her research model was distinctive in its inclusion of vulnerable writing and an accompanying visual representation of the affective experience. Haberl used key feminist theory to encourage vulnerability and eliminate the hierarchy of power present in education. Her findings were surprising, as the pupils chose to illustrate their affective experiences with colour and abstract forms.

While the study focuses on affect in education, its key theories and findings have a wide range of applications. The emotive power of colour theory is already widely used in media. Visual study results such as this one can help enrich existing practices for more affective and empathetic content. As a concept artist, I see colour theory as a key component for accurately conceptualising intended emotions and visual narratives.

LATIKKA, R. et al. (2023). AI as an Artist? A Two-Wave Survey Study on Attitudes Toward Using Artificial Intelligence in Art. Poetics. 101(0). [Online]. Available at: https://doi.org/10.1016/j.poetic.2023.101839 [Accessed: 14 January 2024].

Latikka et al. conduct a two-wave longitudinal survey on public perceptions of the use of Artificial Intelligence (AI) in the arts. Their choice of the Self-Determination Theory (SDT) framework as research parameters was informed by other surveys on AI. While the survey covered several categories, a key result was a direct correlation between positive feelings toward AI and participants' feelings of autonomy and relatedness regarding AI in their daily lives; overall, however, attitudes toward AI in art were less positive than toward AI in other sectors such as medicine. Some participants also felt AI-produced art was “strange” and “cold”.

This very recent study (published December 2023) is timely given the rapid development of AI and its use across sectors. The games and film industries are already adopting AI within their pipelines to meet the demand for media consumption. While the ethics of AI art remain under debate, understanding its public reception is important when incorporating it into media products.

LOMAS, T. (2019). Positive Semiotics. Review of General Psychology. 23(3), pp.359–370. [Online]. Available at: https://doi-org.ezproxy.herts.ac.uk/10.1177/1089268019832 [Accessed: 4 December 2023].

Positive Semiotics aims to reharmonise the relationship between psychology and semiotics, as both fields are necessary to understand the phenomenon of media manipulation. Positive Semiotics, influenced by Positive Psychology, concerns the intersection of semiotics and well-being and the study of the positive effects of sign-systems. Lomas creates an analytical formula using Peirce’s semiotic framework, together with the five criteria of Pawelski’s normative and non-normative desirability (a defined aspect of positivity), as a scaling sign-system.

Lomas’s theory is important when considering the impact of semiotics in media, particularly where it intersects with representation. As a media creator, I consider being mindful of myth vital, but creating a sign-system that can positively influence others is the ideal. With that goal in mind, Lomas’s formula can help me be intentional when planning my work, although, as Lomas mentions, I need to be aware that normative (in a cultural context) and non-normative desirability are subjective to the viewer.

MASANET, M., VENTURA, R. & BALLESTE, E. (2022). Beyond the “Trans Fact”? Trans Representation in the Teen Series Euphoria: Complexity, Recognition, and Comfort. Social Inclusion. 10(2), pp.143–155. [Online]. Available at: https://doi.org/10.17645/si.v10i2.4926 [Accessed: 8 December 2023].

Fair representation of LGBTQ+ people in media can contribute to the creation of prejudice-reducing pedagogies. Masanet et al. analyse the representation of the character Jules, a transgender woman, in the series Euphoria to explore the fairness of the show’s trans representation. They determined that Euphoria incorporated complexity, recognition and comfort, which mark a good start toward leaving behind a stage of imperfect representation. The analysis also highlighted specific examples of overcoming simplistic and stigmatised portrayals.

Understanding the power that multi-layered representations can have in fighting rising discrimination against the LGBTQ+ community makes me, as a practitioner in the pre-production stages of media, partly responsible for ensuring that the nuances of good representation are sought after. Analyses such as this one can help me be aware of what constitutes good representation and avoid stigmatising pitfalls that can lead to stereotyping or greater harm.

RICO GARCIA, O.D. et al. (2022). 'Emotion-Driven Interactive Storytelling: Let Me Tell You How to Feel'. In: MARTINS, T., RODRIGUEZ-FERNANDEZ, N., REBELO, S.M. (eds) Artificial Intelligence in Music, Sound, Art and Design. EvoMUSART 2022. Lecture Notes in Computer Science, vol 13221. Cham: Springer. pp.259–274. [Online]. Available at: https://doi-org.ezproxy.herts.ac.uk/10.1007/978-3-031-03789-4_17

The user interactivity that creates unique experiences in games has inspired more interactive storytelling in film. Rico Garcia et al. created a specialised electroencephalography (EEG)-based emotion recognition system that uses Artificial Intelligence to deliver tailored content, so that the directors’ emotional intent for the film passes on to viewers in a seamless, interactive viewing experience. The directors gave predefined instructions for delivering and enhancing sequences toward their desired emotional outcomes through colour and contrast and through alternative performances by actors.

The system developed by Rico Garcia et al. is a promising start to a viscerally tailored interactive experience. Ethical use of this system could reach end-users in both the games and film industries once the technology has been streamlined. In the immersive future of extended reality, awareness of current research and future technological developments can prime a concept artist to make full use of the breadth of their design work by creating templates that further ethical emotional delivery.

THIEL, F. J. & STEED, A. (2022). Developing an Accessibility Metric for VR Games Based on Motion Data Captured Under Game Conditions. Frontiers in Virtual Reality: Virtual Reality and Human Behaviour. 3(1). [Online]. Available at: https://doi.org/10.3389/frvir.2022.909357 [Accessed: 5 January 2024].

Thiel and Steed develop a metric to measure the mobility required to interact with different Virtual Reality (VR) games. Once developed, the computational metric could systematically standardise accessibility information across game suppliers without human curation, supplying the relevant information to disabled consumers before purchase. The metric is informed by key research into five characteristics of movement: impulsiveness, energy, directness, jerkiness and expansiveness. Although the study is limited for now, they test their metric by measuring participants' mobility in current VR games.

This study highlights key issues with accessibility in VR games. A metric is a wonderful start to informing patrons before they purchase, but hopefully accessibility can improve in the future with better alternative controls available in VR games. Concept artists can conceptualise interactive-based character movement and can work with UI artists and programmers to create adaptive experiences that do not feel secondary to more conventional VR gameplay.

THON, J. N. (2019). ‘Transmedia Characters: Theory and Analysis’. Frontiers of Narrative Studies. 5(2), pp.169–175. [Online]. Available at: https://www.degruyter.com/document/doi/10.1515/fns-2019-0012/html [Accessed: 27 October 2023].

Transmedia Characters: Theory and Analysis suggests ways to analyse and design characters that are individually depicted in multiple forms of media. Thon emphasises analysis on how characters are influenced by the conventions of their specific media and relationships through other media. The discussions on principles like minimal departure and transmedia character templates provides practical advice for retaining characteristics while being considerate of adjustments required by the aforementioned conventions.

Thon also offers some reassurance to artists through the principle of a limited degree of consumer charity, which allows for differences in character representations due to media requirements. Thon keeps the focus on transmedia characters rather than figures while clarifying both terms, an important consideration for character concept artists negotiating client briefs, as it helps them understand the degree to which traits or elements must be kept consistent. As a generalist concept artist with a love for character design, I found Thon’s theory of particular interest as a guide for migrating transmedia characters.


On conversations with youth about digital literacy through the lenses of social justice and mental health

Even though we live in a world where gender, racial and class stereotypes are reflected in all facets of society, many of us still struggle to see STEM as a field that is also tainted by them. Science has long been taught and presented as an unbiased and "pure" human discovery. To this day, many people consider science to be indisputable and systematic. However, any field of study that continues to be dominated mostly by white men in Western institutions will inevitably have racist, sexist, homophobic and classist dimensions. While this reality has long been documented, mostly by scholars of color, our current education system tends to replicate the narrative that science and technology are unbiased and wholly reputable.

Right now, with the rise of media tech giants like Google and Meta, and of AI technology, we are all being shaped by the decisions that the people in these companies make. Like most major companies in the US, these companies are owned and run by white people. Additionally, they are publicly traded companies with billions of dollars in revenue, and that money dictates their decision-making. With that much money driving these corporations, questions of legality, ethical use of data and censorship of content seem inevitable. Yet when we talk with students about these media tech companies, we rarely discuss the implications of the lack of diversity and of the economic machine that drives the content we see.

There is plenty we can talk about. For example, many researchers have found that the lack of diversity in media companies is reflected in the algorithms and AI content they publish. If given the space to explore this in the classroom, students can really come to this conclusion on their own. They have plenty of examples to draw from, since most of them are frequent consumers of digital media and AI technology. At the same time, while we should take kids' ideas seriously and see what they notice, it is important for educators to take a sensible approach when discussing the effects of AI technology. The reality is that social media algorithms shape societal perceptions of beauty, mainly associated with thinness, whiteness and consumerism. These algorithms are making students unhappy, with serious effects on their mental health, body image and even relationships.

While it is true that social media companies do not "create" the content (users do), we need to talk with students about the role these companies play in encouraging and even funding this content. Lessons should also address the personal relationships we as consumers have with this content and how we can stay aware of what companies do with our engagement on these apps. There is so much room for lessons and engagement with these topics. The issues of corporate responsibility, AI bias, our personal relationships with apps and screens, and the racial, social and political implications of all these factors are important and worthwhile.

Yes, it takes more planning on our part as educators, because curriculums have not been updated to include this content and technology is moving faster than curriculums can keep up. However, as a Gen-Z teacher who grew up with social media, I wish more than anything that someone had allowed me to express what I saw and how it made me feel. Having an adult guide me in learning how corporations shape my use of social media, and how that use in turn affects me and my personal life, would have helped me become more independent and find meaning in my education. Our students have access to all the information they need online. Many of them are becoming discouraged by school; they don't see a purpose in learning from a textbook when they can just "Google" it. It's important for teachers to understand that, to a certain extent, they have a valid point. While there is obviously more value to education than Googling, in many schools students are not encouraged to think about the "whys"; they are simply asked to memorize and test. Education should not be a top-down approach, and we need to provide windows and mirrors for students by teaching them about these issues surrounding technology, whether or not they are directly affected.


AI Generated Images

People have been taking advantage of the images that generative AI is able to create. Programs like OpenAI’s DALL-E 2 and Google Research’s Imagen have made this possible. People have input descriptions such as “A million bears walking on the streets of Hong Kong. A strawberry frog. A cat made out of spaghetti and meatballs.” (Metz) No matter how outlandish the description, the AI will attempt to create the image. Sometimes the images it produces are silly or unconvincing, yet some of the results are amazing.

These are some examples generated by Imagen (Google Research)

As incredible as this technology can be, bias is an issue that continues to arise with AI-generated images. The technology seems to reinforce bias and stereotypes on a massive scale and can even spread disinformation. OpenAI and Google Research have addressed this issue; however, racial bias, Western cultural stereotypes and gender stereotypes persist.

In the example above, Google Research’s Imagen created an image of royal raccoons. Even though people have tried to avoid depicting humans in their images to sidestep bias, this generated image still shows hints of bias. “Holland Michel noted, the raccoons are sporting Western-style royal outfits, even though the prompt didn’t specify anything about how they should appear beyond looking royal”. Subtle hints of bias like these can be hard to catch, so we have to be careful about how we use these tools and the images they create for us.


Alphabet Inc’s Google in May introduced a slick feature for Gmail that automatically completes sentences for users as they type. Tap out “I love” and Gmail might propose “you” or “it.”

But users are out of luck if the object of their affection is “him” or “her.”

Google’s technology will not suggest gender-based pronouns because the risk is too high that its “Smart Compose” technology might predict someone’s sex or gender identity incorrectly and offend users, product leaders revealed to Reuters in interviews.

Gmail product manager Paul Lambert said a company research scientist discovered the problem in January when he typed “I am meeting an investor next week,” and Smart Compose suggested a possible follow-up question: “Do you want to meet him?” instead of “her.”

Consumers have become accustomed to embarrassing gaffes from autocorrect on smartphones. But Google refused to take chances at a time when gender issues are reshaping politics and society, and critics are scrutinizing potential biases in artificial intelligence like never before.

“Not all ‘screw ups’ are equal,” Lambert said. Gender is “a big, big thing” to get wrong.

Getting Smart Compose right could be good for business. Demonstrating that Google understands the nuances of AI better than competitors is part of the company’s strategy to build affinity for its brand and attract customers to its AI-powered cloud computing tools, advertising services and hardware.

Gmail has 1.5 billion users, and Lambert said Smart Compose assists on 11 percent of messages worldwide sent from Gmail.com, where the feature first launched.

Smart Compose is an example of what AI developers call natural language generation (NLG), in which computers learn to write sentences by studying patterns and relationships between words in literature, emails and web pages.

A system shown billions of human sentences becomes adept at completing common phrases but is limited by generalities. Men have long dominated fields such as finance and science, for example, so the technology would conclude from the data that an investor or engineer is “he” or “him.” The issue trips up nearly every major tech company.
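The skew described above can be reproduced with a toy next-word predictor. This minimal Python sketch counts which word follows which (a bigram model, far simpler than the neural models behind Smart Compose) over a small, made-up corpus in which investors are mostly described as "he". The corpus, `train_next_word` and `suggest` are hypothetical; the point is only that a model trained on lopsided text proposes the majority pronoun.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_next_word(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows each other word (a bigram model)."""
    model: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model


def suggest(model: Dict[str, Counter], prev_word: str) -> str:
    """Return the most frequent follower of prev_word, or '' if never seen."""
    followers = model.get(prev_word.lower())
    return followers.most_common(1)[0][0] if followers else ""


# Hypothetical training text, skewed the way historical business writing often is.
corpus = [
    "the investor said he would call",
    "the investor said he was busy",
    "the investor said she would call",
]
model = train_next_word(corpus)
print(suggest(model, "said"))  # he
```

Nothing in the code mentions gender; the preference for "he" comes entirely from the counts, which is why the problem "trips up nearly every major tech company".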

Lambert said the Smart Compose team of about 15 engineers and designers tried several workarounds, but none proved bias-free or worthwhile. They decided the best solution was the strictest one: Limit coverage. The gendered pronoun ban affects fewer than 1 percent of cases where Smart Compose would propose something, Lambert said.

“The only reliable technique we have is to be conservative,” said Prabhakar Raghavan, who oversaw engineering of Gmail and other services until a recent promotion.
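Google has not published how its pronoun ban is implemented, so the following is only a minimal Python sketch of the "limit coverage" idea, assuming candidate completions arrive as plain strings: any suggestion containing a gendered pronoun is dropped rather than corrected. The word list and `filter_suggestions` are hypothetical.

```python
import re
from typing import List

# Hypothetical English blocklist; the list Google actually uses is not public.
GENDERED_PRONOUNS = {"he", "him", "his", "himself", "she", "her", "hers", "herself"}


def filter_suggestions(candidates: List[str]) -> List[str]:
    """Drop every candidate completion that contains a gendered pronoun.

    Conservative by design: instead of guessing the right pronoun,
    the system offers no suggestion for that case.
    """
    safe = []
    for text in candidates:
        # Split on letters only, so "he's" yields "he" and "s".
        words = re.findall(r"[a-z]+", text.lower())
        if GENDERED_PRONOUNS.isdisjoint(words):
            safe.append(text)
    return safe


print(filter_suggestions(["Do you want to meet him?", "Do you want to meet?", "Sounds good."]))
# ['Do you want to meet?', 'Sounds good.']
```

The trade-off is the one Lambert describes: a few useful suggestions are lost, but the system never guesses someone's gender. A real system would need a separate list for each supported language.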


New policy

Google’s decision to play it safe on gender follows some high-profile embarrassments for the company’s predictive technologies.

The company apologized in 2015 when the image recognition feature of its photo service labeled a black couple as gorillas. In 2016, Google altered its search engine’s autocomplete function after it suggested the anti-Semitic query “are jews evil” when users sought information about Jews.

Google has banned expletives and racial slurs from its predictive technologies, as well as mentions of its business rivals or tragic events.

The company’s new policy banning gendered pronouns also affected the list of possible responses in Google’s Smart Reply. That service allows users to respond instantly to text messages and emails with short phrases such as “sounds good.”

Google uses tests developed by its AI ethics team to uncover new biases. A spam and abuse team pokes at systems, trying to find “juicy” gaffes by thinking as hackers or journalists might, Lambert said.

Workers outside the United States look for local cultural issues. Smart Compose will soon work in four other languages: Spanish, Portuguese, Italian and French.

“You need a lot of human oversight,” said engineering leader Raghavan, because “in each language, the net of inappropriateness has to cover something different.”


Widespread challenge

Google is not the only tech company wrestling with the gender-based pronoun problem.

Agolo, a New York startup that has received investment from Thomson Reuters, uses AI to summarize business documents.

Its technology cannot reliably determine in some documents which pronoun goes with which name. So the summary pulls several sentences to give users more context, said Mohamed AlTantawy, Agolo’s chief technology officer.

He said longer copy is better than missing details. “The smallest mistakes will make people lose confidence,” AlTantawy said. “People want 100 percent correct.”
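Agolo's actual method is not described beyond pulling extra sentences, so here is a minimal Python sketch of one way an extractive summarizer could do that, assuming sentences are already split and selected: whenever a chosen sentence opens with a pronoun, the preceding sentence is included too, so the reader can see who the pronoun refers to. The pronoun list, `expand_for_context` and the sample document (with its placeholder names) are hypothetical.

```python
from typing import List

# Hypothetical list of pronouns that usually point back to an earlier sentence.
PRONOUNS = {"he", "she", "they", "him", "her", "them", "his", "it", "its"}


def expand_for_context(sentences: List[str], selected: List[int]) -> List[str]:
    """Return the selected sentences in document order, adding the previous
    sentence whenever a selected one opens with a pronoun."""
    keep = set()
    for idx in selected:
        keep.add(idx)
        words = sentences[idx].split()
        first = words[0].lower().strip(",.;:") if words else ""
        if first in PRONOUNS and idx > 0:
            keep.add(idx - 1)  # pull in the sentence that likely names the referent
    return [sentences[i] for i in sorted(keep)]


doc = [
    "Jane Doe founded Acme in 2010.",
    "She later sold the company to a rival.",
    "The deal closed in May.",
]
print(expand_for_context(doc, [1]))
# ['Jane Doe founded Acme in 2010.', 'She later sold the company to a rival.']
```

This only looks one sentence back, and it never has to decide which name a pronoun belongs to; it simply trades a longer summary for a lower chance of a confusing or wrong attribution.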

Yet, imperfections remain. Predictive keyboard tools developed by Google and Apple Inc propose the gendered “policeman” to complete “police” and “salesman” for “sales.”


Type the neutral Turkish phrase “one is a soldier” into Google Translate and it spits out “he’s a soldier” in English. So do translation tools from Alibaba and Microsoft Corp. Amazon.com Inc opts for “she” for the same phrase on its translation service for cloud computing customers.

AI experts have called on the companies to display a disclaimer and multiple possible translations.

Microsoft’s LinkedIn said it avoids gendered pronouns in its year-old predictive messaging tool, Smart Replies, to ward off potential blunders.

Alibaba and Amazon did not respond to requests to comment.

Warnings and limitations like those in Smart Compose remain the most-used countermeasures in complex systems, said John Hegele, integration engineer at Durham, North Carolina-based Automated Insights Inc, which generates news articles from statistics.

“The end goal is a fully machine-generated system where it magically knows what to write,” Hegele said. “There’s been a ton of advances made but we’re not there yet.”


How to Use AI to Make Dating More Inclusive and Diverse

Dating apps have become incredibly popular in recent years, with over 30 million people using them in the US alone.

However, many critics argue that online dating apps can reinforce biases and exclusion, rather than fostering meaningful connections.

The good news is that AI and machine learning offer innovative ways to make online dating more inclusive and diverse.

The Problems with Current Dating Apps

Racial biases -

Users often filter out or exclude people of certain races when swiping. This leads to a lack of diversity.

Lookism -

There is a heavy focus on appearances and users with conventionally attractive photos get more matches. This marginalizes those who don't fit traditional beauty standards.

Gender biases -

Heteronormative dynamics often emerge, with men pursuing women. People who identify outside the gender binary can feel excluded.

How AI Can Promote Inclusion

AI and machine learning offer solutions to mitigate exclusion and bias on online dating platforms.

Here are some ways these technologies can help:

Limiting Explicit Biases