#ai is spreading political misinformation

Text

Amazon’s Alexa has been claiming the 2020 election was stolen

The popular voice assistant says the 2020 race was stolen, even as parent company Amazon promotes the tool as a reliable election news source, foreshadowing a new information battleground

This is a scary WaPo article by Cat Zakrzewski about how big tech is allowing AI to pull information from dubious sources. As a result, it is contributing to the lies and disinformation that pervade today's political climate.

Even the normally banal but ubiquitous (and not yet AI-supercharged) Alexa is prone to picking up and reciting political disinformation. Here are some excerpts from the article [color emphasis added]:

Amid concerns the rise of artificial intelligence will supercharge the spread of misinformation comes a wild fabrication from a more prosaic source: Amazon’s Alexa, which declared that the 2020 presidential election was stolen.

Asked about fraud in the race — in which President Biden defeated former president Donald Trump with 306 electoral college votes — the popular voice assistant said it was “stolen by a massive amount of election fraud,” citing Rumble, a video-streaming service favored by conservatives.

The 2020 races were “notorious for many incidents of irregularities and indications pointing to electoral fraud taking place in major metro centers,” according to Alexa, referencing Substack, a subscription newsletter service. Alexa contended that Trump won Pennsylvania, citing “an Alexa answers contributor.”

Multiple investigations into the 2020 election have revealed no evidence of fraud, and Trump faces federal criminal charges connected to his efforts to overturn the election. Yet Alexa disseminates misinformation about the race, even as parent company Amazon promotes the tool as a reliable election news source to more than 70 million estimated users.

[...]

Developers “often think that they have to give a balanced viewpoint and they do this by alternating between pulling sources from right and left, thinking this is going to give balance,” [Prof. Meredith] Broussard said. “The most popular sources on the left and right vary dramatically in quality.”

Such attempts can be fraught. Earlier this week, the media company the Messenger announced a new partnership with AI company Seekr to “eliminate bias” in the news. Yet Seekr’s website characterizes some articles from the pro-Trump news network One America News as “center” and as having “very high” reliability. Meanwhile, several articles from the Associated Press were rated “very low.”

[...]

Yet despite a growing clamor in Congress to respond to the threat AI poses to elections, much of the attention has fixated on deepfakes.

However, [attorney Jacob] Glick warned Alexa and AI-powered systems could “potentially double down on the damage that’s been done.”

“If you have AI models drawing from an internet that is filled with platforms that don’t care about the preservation of democracy … you’re going to get information that includes really dangerous undercurrents,” he said.


#alexa#ai is spreading political misinformation#2020 election lies#the washington post#cat zakrzewski#audio

165 notes

Text

Dear Sirs!

(or have some ladies also signed?)

A few days ago, you, Mr Musk, together with Mr Wozniak, Mr Mostaque and other signatories, published an open letter demanding a compulsory pause of at least six months for the development of the most powerful AI models worldwide.

This is the only way to ensure that the AI models contribute to the welfare of all humanity, you claim. As a small part of the whole of humanity, I would like to thank you very much for wanting to protect me. How kind! 🙏🏻

Allow me to make a few comments and ask a few questions in this context:

My first question that immediately came to mind:

Where was your open letter when research for the purpose of warfare started and weapon systems based on AI were developed, leading to unpredictable and uncontrollable conflicts?

AI-based weapons have already been used in wars for some time, e.g. in the war in Ukraine and by Turkey. As for the US, it is upgrading its MQ-9 combat drones with AI and has already used them to kill in Syria, Afghanistan and Iraq.

The victims of these attacks - don't they count as humanity threatened by AI?

I am confused! Please explain to me: when did this (general) welfare of humanity, which is now threatened and needs to be protected by you, ever exist? I mean the good of humanity outside your "super rich white old nerds Silicon Valley" filter bubble. And I have one more question:

Where was your open letter when Facebook's algorithms led to the spread of hate speech and misinformation about the genocide of Rohingya Muslims in Myanmar?

Didn't the right to human welfare also apply to this population group? Why do you continue to remain silent on the inaction and non-transparent algorithms of Meta and Mr Zuckerberg? Why do you continue to allow hatred and agitation in the social media, which (at least initially) belonged to you without exception?

My further doubt relates to your person and your biography itself, dear Mr Musk.

You, known as a wealthy man with Asperger's syndrome and a penchant for interplanetary affairs, have commendably repeatedly expressed concern about the potentially destructive effects of AI robots in the past. I thank you for trying to save me from such a future. It really is a horrible idea!

And yet, Mr Musk, for a long time you yourself were not considered one of the great AI developers of Silicon Valley.

Your commitment to the field of artificial intelligence was initially rather limited. Your Tesla Autopilot is remarkable AI software, but it was developed for a rather niche market.

I assume that you, Mr Musk, wanted to change that when you bought 73.5 million Twitter shares for almost $2.9 billion in April 2022?

After all, to be able to play along with the AI development of the giants, you lacked one thing above all: access to a broad-based AI that is not limited to specific applications, as well as a comprehensive data set.

The way to access such a dataset was to own a large social network that collects information about the consumption patterns, leisure activities and communication patterns of its users, including their social interactions and political preferences.

Such collections about the behaviour of the rest of humanity are popular in your circles, aren't they?

By buying Twitter stock, you can give your undoubtedly fine AI professionals access to a valuable treasure trove of data and establish yourself as one of Silicon Valley's leading AI players.

Congratulations on your stock purchase and I hope my data is in good hands with you.

Speaking of your professionals, I'm interested to know why your employees have to work so hard when you are so concerned about the well-being of people?

I'm also surprised that after the pandemic your staff were no longer allowed to work from home. Is working from home also detrimental to the well-being of humanity?

In the meantime, you have taken Twitter private and off the stock market.

It was never about money for you, right? No, you're not like that. I believe you!

But maybe it was about data? Data is often referred to as "the oil of our time". The data of a social network is like a ticket to becoming one of the most important developers in the AI market of the future.

At this point, I would like to thank you for releasing parts of Twitter's timeline-ranking code as open source. Thanks to this transparency, I now also know that the Twitter algorithm gives preference to your own posts, Mr Musk. What an expansion of my horizons!

And now, barely a year later, this is happening: OpenAI, a hitherto comparatively small company in which you have only been active as a donor and advisor since your exit in 2018, not only has enormous sources of money but also the AI game changer par excellence, ChatGPT, and has become, virtually overnight, one of the most important players in the race for the digital future. There were rumours that you left at the time with the intention that they would take over the business. Is that true at all?

After all I have said, I am sure you understand why I have these questions for you, don't you?

I would like to know what a successful future looks like in your opinion? I'm afraid I'm not one of those people who can afford a $100,000 ticket to join you in colonising Mars. I will probably stay on Earth.

So far I have heard little, in fact nothing, about your investments in climate projects and the preservation of the Earth.

That is why I ask you, as an advocate of all humanity, to work for the preservation of the Earth with all the means at your disposal. That would certainly help.

If you don't want to do that, I would very much appreciate it if you would simply stop worrying about us, the rest of humanity. Perhaps we can manage to protect the world from marauding robots and a powerful artificial intelligence without you, your ambitions and your friends?

I have always been interested in people. That's why I studied social sciences and why today I ask people what they long for. Maybe I'm naive, but I think it's a good idea to ask the people themselves what they want before advocating for them.

The rest of the world, that is, the 99.9 percent who are not billionaires like you, also have visions!

With the respect you deserve,

Susanne Gold

(just one of the remaining 99 percent whose welfare you care about).

#elon musk#open letter#artificial intelligence#chatgpt#science#society#democracy#climate breakdown#space#planet earth#siliconvalley#genocide#war and peace#ai algorithms

245 notes

Note

you bring up women and female too much... feeling pretty terfy tbh

This is so fucking funny. As bait it’s like you’re not even Trying

(for context: transphobes just found my post about why it’s important to not spread historical misinformation and they are mad about it. This is from a transphobic radfem angry that I said trans people exist and existed in the past too.)

Shockingly I bring up women and being female because I am a woman and I care about feminism and women’s rights. I firmly believe most people should! Being a woman kind of makes it pressing in a certain way though.

I went through the whole gender questioning and gender exploration thing, thinking about gender and womanhood and femaleness and what they mean and how I relate to it and don’t. I was a tomboyish girl who liked to read and climb trees and hated shaving my legs and participating in gym class and was resentful and resistant to Rules but also enthralled by history and particularly suffragists and women who Did Things. But also, for a while, as I started to increasingly realize I was queerer than I supposed and felt alienated from other girls my age, I felt pretty disconnected from womanhood; I didn’t know what it meant to me. I’m aromantic asexual, and that self-knowledge was hard-won and required a lot of soul searching and fear for the future and trying really hard to be allo and crying alone at the dining hall. And the question of “what is my gender for, anyway?” felt pertinent in that regard. If it’s not there to structure romantic/sexual attraction and relationships, what is it there for? What does it do for me?

Reading feminist texts by cis feminists was important for thinking about political organizing and political necessities of combating sexism and misogyny in its many pervasive and awful forms, but it didn’t really make me feel any more of a personal sense of womanhood. Political coalition building is not necessarily the same as personal sense of self, nor should it be.

You know what made me feel more secure in my gender? In feeling like A Woman?

Reading the work of trans lesbians.

I mean it. Reading trans women lesbians writing sci-fi stories about apocalypses and AIs and identity, and realistic stories about longing and coercion and freedom and joy and fear and just being, is what made me go, oh, this is it. This is what it’s all about, this is what it means to be a woman. You get it. And you make me feel it in a way I haven’t in a long time.

(I’m also an anthropologist. Thinking about human societies and social constructs and performances of identity is my job.)

But if you want to read the work of the trans woman who really made me feel comfortable and seen and resonant in my womanhood for the first time in a long time, I highly HIGHLY recommend Jamie Berrout and particularly her Portland Diary: Short Stories 2016/2017. They have a spark of brilliance. This Pride Month, treat yourself to these stories. (This is a direct download because unfortunately Berrout scrubbed her entire internet presence, including any place to legitimately buy her work, but I paid a fair price for these before she did, and I think they deserve to be read.)

(I won’t currently link the other trans woman writer who helped me through my Gender Epiphany, because unlike Berrout who has gone off the grid, she has an internet presence and I don’t want her to get targeted by any transphobes camping my page waiting for my response to their brilliantly crafted bait.)

TERFs, and some overzealous tumblr/twitter users, want so badly to believe that feminism and trans rights are at odds, that if you believe in one you can’t believe in the other. That’s bullshit, of course. My feminism is fully bound up in trans rights; non-discrimination by sex and gender means non-discrimination by sex and gender. Bodily autonomy and authority on one’s identity are the rights of everyone. We need to end sexism and part of ending sexism is ending the belief in gender essentialism; we need to end transphobia and part of ending transphobia means ending the idea that women can only be One Thing and men can only be One Thing and there are unbreachable distances between them. I believe in gender equality and that means the equality of all genders. And from personal experience, I believe that trans women have a lot to say about feminism and what it feels like to be a woman.

Also I have a lot of trans and non-binary friends and I like them much better and trust them much more than I like or trust transphobes. So.

Women’s rights are human rights. Trans rights are human rights. Trans rights are women’s rights. All of these things are true, and inextricable.

48 notes

Text

Let me say upfront that I am trying as hard as possible to be unbiased and fair about this Israel-Palestine thing, so: I think Hamas should be disbanded, but I also wouldn't feel too bad if the child murderers in the IDF who killed Hind Rajab and her family got what's coming to them. Does that please both sides? Moving on. I just want to comment on the information warfare aspects of this war and how weird they are. This may legitimately be the first war and genocide fought as much on TikTok as on the battlefield.

On one end, the Israeli side is baffling in how it wasted such amazing PR. A Muslim terrorist group carrying out an antisemitic attack on the only Jewish country in the world should be absolute PR gold, but it somehow descended into calling the UN Hamas, posting AI pictures of Voldemort to compare Hamas to him, and using Stonetoss-tier skits to call all their critics limp-wristed gay leftist college students who are into astrology. It's absolutely baffling how they messed it up so badly.

On the other end, the pro-Palestine movement is absolutely massive. Literally every Zoomer with so much as an interest in politics is coming out in support of Palestine, along with a bunch of Millennials. It's an absolutely massive wave of support, but it isn't aimed directly at Israel. Rather, it's spread out against everyone who hasn't spoken up against Israel, from Biden to Taylor Swift to BTS to Noah Schnapp. Honestly, it turned me off with how fandom-y it feels. People are comparing fucking Houthi pirates to Monkey D. Luffy. It's treating a war and genocide as a fandom.

This is the first digital guerrilla war. Every atrocity and war crime is being broadcast to the world, but that comes with a lot of misinformation, given how democratized information is on the internet. Israel gets caught bombing a hospital or an ambulance, and they can just claim it was secretly Hamas, so it's okay that they killed those civilians, and Hamas did it anyway. Hamas war crimes like kidnapping and hostage-taking, as well as rape and targeting civilians, are ignored or brushed aside as Zionist propaganda that was also justified anti-imperialism anyway and also wasn't a big deal. Shit's fucked up is what I'm saying.

#Why can't we go back to ukraine#It was so much simpler back then#None of this complicated gray zone bullshit

9 notes

Text

[tw for the ongoing violence and genocide in Palestine-Israel, i'm not sure how to word it better, sorry]

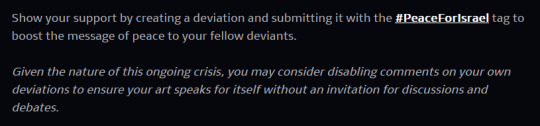

Beating myself up for not realizing it sooner but uh, good ol' DeviantArt has been pushing a hashtag in support of Israel for the past week. It makes zero mention whatsoever of the retaliations done by Israel, and despite its typical PR wording of "this is for peace", centers Israel and only Israel in its pleas, describing Palestinians as, check this out, "also impacted in the aftermath of the terrible attack", and """"feeling the effects of this difficult time"""".

The text is not shy about describing Israeli victims as being slaughtered, but Palestinians only get the king of all euphemisms, apparently.

and yes of course comments are locked.

they flat out encourage artists to disable comments as well, which is wildly mask-off in my opinion. Not even the PR-like wording of "ooh people are spreading misinformation and harassment" this time, it's just flat out "your political art should not invite discussion or debate". Epic stance.

So yeah. Here's an archived version of the post if you wanna read it, I'm not linking to give clicks to the original and the AI slop on it. I'm feeling very done with that website right now.

As an aside, one of the charities DA listed is Doctors Without Borders, which, to my knowledge, helps all victims of conflict and takes no pro-Israel stance. I'm just noting this so that DA's pathetic behavior here doesn't spill over onto the charities mentioned; I did not, however, research any of the others.

#deviantart#art#please tell me if you need any warning tags on this i'm pretty confused on how to tag it#i just didn't see anyone commenting on it and i think people should know#like this might be it this might be my last straw on using that website#this is utter garbage behavior#this is way beyond just a naive or ignorant 'both sides' stance

13 notes

Text

By Olivia Rosane

Common Dreams

Dec. 26, 2023

"If people don't ultimately trust information related to an election, democracy just stops working," said a senior fellow at the Alliance for Securing Democracy.

As 2024 approaches and with it the next U.S. presidential election, experts and advocates are warning about the impact that the spread of artificial intelligence technology will have on the amount and sophistication of misinformation directed at voters.

While falsehoods and conspiracy theories have circulated ahead of previous elections, 2024 marks the first time that it will be easy for anyone to access AI technology that could create a believable deepfake video, photo, or audio clip in seconds, The Associated Press reported Tuesday.

"I expect a tsunami of misinformation," Oren Etzioni, an AI expert and University of Washington professor emeritus, told the AP. "I can't prove that. I hope to be proven wrong. But the ingredients are there, and I am completely terrified."

Subject matter experts told the AP that three factors made the 2024 election an especially perilous time for the rise of misinformation. The first is the availability of the technology itself. Deepfakes have already been used in elections. The Republican primary campaign of Florida Gov. Ron DeSantis circulated images of former president Donald Trump hugging former White House Coronavirus Task Force chief Anthony Fauci as part of an ad in June, for example.

"You could see a political candidate like President [Joe] Biden being rushed to a hospital," Etzioni told the AP. "You could see a candidate saying things that he or she never actually said."

The second factor is that social media companies have reduced both the number of policies designed to control the spread of false posts and the number of employees devoted to monitoring them. When billionaire Elon Musk acquired Twitter in October 2022, he fired nearly half of the platform's workforce, including employees who worked to control misinformation.

Yet while Musk has faced significant criticism and scrutiny for his leadership, Accountable Tech co-founder Jesse Lehrich told the AP that other platforms appear to have used his actions as an excuse to be less vigilant themselves. A report published by Free Press in December found that Twitter (now X), Meta, and YouTube rolled back 17 policies targeting hate speech and disinformation between November 2022 and November 2023. For example, X and YouTube retired policies on misinformation concerning the 2020 presidential election and the lie that Trump in fact won, and X and Meta relaxed policies aimed at stopping Covid-19-related falsehoods.

"We found that in 2023, the largest social media companies have deprioritized content moderation and other user trust and safety protections, including rolling back platform policies that had reduced the presence of hate, harassment, and lies on their networks," Free Press said, calling the rollbacks "a dangerous backslide."

Finally, Trump, who has been a big proponent of the lie that he won the 2020 presidential election against Biden, is running again in 2024. Since 57% of Republicans now believe his claim that Biden did not win the last election, experts are worried about what could happen if large numbers of people accept similar lies in 2024.

"If people don't ultimately trust information related to an election, democracy just stops working," Bret Schafer, a senior fellow at the nonpartisan Alliance for Securing Democracy, told the AP. "If a misinformation or disinformation campaign is effective enough that a large enough percentage of the American population does not believe that the results reflect what actually happened, then Jan. 6 will probably look like a warm-up act."

The warnings build on the alarm sounded by watchdog groups like Public Citizen, which has been advocating for a ban on the use of deepfakes in elections. The group has petitioned the Federal Election Commission to establish a new rule governing AI-generated content, and has called on the body to acknowledge that the use of deepfakes is already illegal under a rule banning "fraudulent misrepresentation."

"Specifically, by falsely putting words into another candidate's mouth, or showing the candidate taking action they did not, the deceptive deepfaker fraudulently speaks or act[s] 'for' that candidate in a way deliberately intended to damage him or her. This is precisely what the statute aims to proscribe," Public Citizen said.

The group has also asked the Republican and Democratic parties and their candidates to promise not to use deepfakes to mislead voters in 2024.

In November, Public Citizen announced a new tool tracking state-level legislation to control deepfakes. To date, laws have been enacted in California, Michigan, Minnesota, Texas, and Washington.

"Without new legislation and regulation, deepfakes are likely to further confuse voters and undermine confidence in elections," Ilana Beller, democracy campaign field manager for Public Citizen, said when the tracker was announced. "Deepfake video could be released days or hours before an election with no time to debunk it—misleading voters and altering the outcome of the election."

Our work is licensed under Creative Commons (CC BY-NC-ND 3.0). Feel free to republish and share widely.

#artificial intelligence#ai#deep fakes#2024 elections#public citizen#federal election commission#fec#democracy#social media

10 notes

Text

I’m not kidding, everyone should be very worried about how much AI is going to fuck everything up. We’re already aware of how much misinformation is constantly spread online and how that influences people’s perceptions and politics. Now imagine when people can create a fully realistic video of a politician or public figure, indistinguishable from the real thing, and make them say anything they want for any political purpose. And no one would be able to tell that the video isn’t real. This is honestly a nightmare scenario. And people should be blaring the sirens, because this could legitimately lead to catastrophe.

4 notes

Text

Advances in generative artificial intelligence could supercharge the propaganda playbook, experts warn

A banal dystopia where manipulative content is so cheap to make and so easy to produce on a massive scale that it becomes ubiquitous: that’s the political future digital experts are worried about in the age of generative artificial intelligence (AI).

In the run-up to the 2016 presidential election, social media platforms were vectors for misinformation as far-right activists, foreign influence campaigns and fake news sites worked to spread false information and sharpen divisions. Four years later, the 2020 election was overrun with conspiracy theories and baseless claims about voter fraud that were amplified to millions, fueling an anti-democratic movement to overturn the election.

12 notes

Text

The Mechanics of Intentional Polarization: AI Bots and Social Media Algorithms

In the digital age, the battleground of ideas is no longer confined to newspapers, town halls, or television screens; it has expanded into the vast, interconnected realms of social media. Platforms like Facebook and Twitter have become the new public squares where political discourse is shaped, challenged, and disseminated to millions with unparalleled speed and efficiency. However, beneath the surface of these vibrant discussions lies a more insidious phenomenon: intentional polarization, driven in part by polarizing AI bots and manipulated social media algorithms.

A recent report from the Center for Business and Human Rights at New York University's Stern School of Business illuminates the complex relationship between technological platforms and extreme polarization. Despite Facebook's denials of its role in fostering divisiveness, a growing body of evidence, including internal documents and actions by the platform itself, suggests a significant contribution to the erosion of democratic values and the incitement of partisan violence. This contention is supported by social science research that points to social media companies like Facebook and Twitter as key players in intensifying political sectarianism.

The role of AI bots in this process cannot be overstated. These automated entities are designed to mimic human behavior online, often spreading misinformation, engaging in argumentative or provocative behavior, and exacerbating micro-conflicts within communities. By exploiting the natural tendency of humans to seek confirmation of their beliefs, these bots amplify divisive content, ensuring its propagation across the digital ecosystem. This creates an environment where political and social factions are not only formed but are continually fueled by a stream of tailored content that reinforces existing prejudices and ideological divides.

Moreover, the algorithms governing what content users are exposed to on these platforms play a critical role in the perpetuation of polarization. Designed to maximize engagement, these algorithms often prioritize content that evokes strong emotional reactions, which divisive and polarizing content frequently does. This results in a feedback loop where users are increasingly exposed to homogenized, one-sided information, further entrenching them in their ideological silos.

The impact of these dynamics is profound. As noted in an article published in the journal Science, social media's influence on political discourse has led to heightened political sectarianism, challenging the very foundation of democratic engagement and discourse. This is echoed by observations made regarding Twitter's pivotal role in politics, where the platform's capacity to disseminate political messages quickly and broadly stands in stark contrast to traditional media's costlier and slower methods.

Yet, this rapid dissemination comes with its pitfalls. Studies focused on Twitter's use by political figures suggest that social media may skew public discourse towards more contentious political issues, sidelining the complex, nuanced discussions necessary for solving societal problems. This skew is further exacerbated by social media's personalization capabilities, which contribute to extremism by filtering the information users receive, thereby narrowing their worldview and reinforcing polarized perspectives.

As we navigate the complexities of this digital era, the need for strategies to mitigate online polarization becomes increasingly urgent. Governments, policymakers, and social media platforms themselves must grapple with the challenge of balancing the benefits of these powerful communication tools with the potential harm they pose to societal cohesion and democratic discourse.

#queer artist#chaotic good#original art#digital art#eyestrain#i’m just a girl#third eye#artists on tumblr#original thots#original content#writeblr#writers on tumblr#writing#politics#twitter#facebook#polarization

2 notes

Text

Even when technology contributes to unwanted outcomes, humans are often the ones pressing the buttons. Consider the effect of AI on unemployment. The Future of Life Institute letter raises concerns that AI will eliminate jobs, yet whether or not to eliminate jobs is a choice that humans ultimately make. Just because AI can perform the jobs of, say, customer service representatives does not mean that companies should outsource these jobs to bots. In fact, research indicates that many customers would prefer to talk to a human than to a bot, even if it means waiting in a queue. Along similar lines, increasingly common statements that AI-based systems—like “the Internet,” “social media,” or the set of interconnected online functions referred to as “The Algorithm”—are destroying mental health, causing political polarization, or threatening democracy neglect an obvious fact: These systems are populated and run by human beings. Blaming technology lets people off the hook.

5 notes

Text

I think the concerns with AI really should be more about manufacturing propaganda and using it to portray people saying and doing things they wouldn't, more so than about AI art. I am an art historian and I have a lot to say about AI art as art and why I don't particularly like it, but in a broader political sense I am far more concerned about people making hyperrealistic images of the groups they don't like doing bad things, to spread misinformation, than I am that someone made ugly art with the computer.

2 notes

Text

NewsGuard, a firm that tracks misinformation and rates the credibility of information websites, has found close to 350 online news outlets that are almost entirely generated by AI with little to no human oversight. Sites such as Biz Breaking News and Market News Reports churn out generic articles spanning a range of subjects, including politics, tech, economics, and travel. Many of these articles are rife with unverified claims, conspiracy theories, and hoaxes. When NewsGuard tested the AI model behind ChatGPT to gauge its tendency to spread false narratives, it failed 100 out of 100 times.

AI frequently hallucinates answers to questions, and unless the AI models are fine-tuned and protected with guardrails, Gordon Crovitz, NewsGuard's co-CEO, told me, "they will be the greatest source of persuasive misinformation at scale in the history of the internet." A report from Europol, the European Union's law-enforcement agency, expects a mind-blowing 90% of internet content to be AI-generated in a few years.

Text

We are living in dangerous times, when anyone can fabricate media with AI programs. The least y'all can do is ensure you are LINKING SOURCES in your posts that make political claims. People could be spreading misinformation with the click of a button.

Note

I am telling you selfie pics will not be useful for training an AI to identify people in a protest, because that is not how you train an AI to identify people in a protest. You clearly do not understand this topic and are acting mostly on some gut feeling rather than actually thinking about it or doing any research. Regardless of how hostile I am being to you, you are still spreading misinformation and being stupid. This is the last ask I will send, because I should have just unfollowed you in the first place.

thanks for being so polite as to announce your departure?

#this could have been a much more productive conversation had you not Literally called me stupid from the get go #is that how you correct people irl???

Text

Social media platforms crack down on fake news ahead of Brazil election

Disinformation is a potent political weapon in Latin America’s biggest country

Social media platforms say they have launched an effort to crack down on fake news and misinformation in Brazil ahead of elections in October that many expect to be turbulent.

Fake news has proven to be a potent political tool in Latin America’s largest country and was wielded to dramatic effect in the 2018 election of far-right president Jair Bolsonaro.

Caught flat-footed then, Meta-owned platforms — in conjunction with the nation’s electoral court — have since rolled out new technologies to detect and stifle the spread of misinformation as well as streamlined procedures for officials and judges to have content taken offline, according to the company.

Even Telegram, which the Supreme Court in March temporarily ordered to be suspended over its hosting of misinformation, has signed an agreement with election officials to develop tools to flag fake news and an AI chatbot to answer questions about the elections.

#brazil #brazilian politics #politics #brazilian elections #misinformation #brazilian elections 2022 #social media #mod nise da silveira #image description in alt

Text

I might use this blog at some point, in which case hi.

This is a sideblog that I might, hopefully, use for opinion posting that won't clog up my main. If you know my main, you know my main, but I'm probably not going to advertise it here.

Various positions:

Demsoc-adjacent

Systemic racism, misogyny, and homophobia continue to negatively affect the lives of minorities; feminism and anti-racism as principles are good

Idpol in the sense of political organizing on the basis of identity is fine; idpol in the sense of “minorities have Special Knowledge that cannot be understood by outsiders and minority status is a kind of moral currency” is bad

Secularism is good and atheism is correct

Trans women are women, trans men are men, and so forth

Callouts are generally bad and not to be trusted

Nuclear energy is good

People should have access to physician-assisted dying

AI art is not inherently good, but it's not inherently bad either

Many progressive spaces are rife with people who hold wildly inconsistent belief systems based on Vibes, who spread misinformation for a “good cause”, and who adopt “good-seeming” ideas they do not fully understand for the sake of belonging to an in-group; in these spaces, identifying this as a problem is seen as tantamount to approving of bigotry. This is a problem.

Candy corn is not that bad
