#ai writing vs human writing

Text

#ai to human writing#ai writing#ai writing vs human writing#difference between ai writing and human writing#how to learn machine learning#is ai writing better than humans

0 notes

Text

Oh yeah Rev was in the episodes I showed to my friend today too and he was decently well behaved. Calling Ai a he for once:

And I know what the dub did with his response to Akira saying he's going to die if they don't stop Ai was funny and all but... I like this better:

Is it just the Rev simp in me thinking this part is cute? He's basically saying he'll protect him and says it so softly and... well, it's just cute. Code me to safety, you lil softie.

Oh yeah I also don't hate the Pandor thing anymore. AIs have gotten so much scarier/worse irl lately that Rev programming Pandor to not have thoughts against humanity just doesn't bother me whatsoever anymore. I 100% get it. I think it was more so the phrasing of that scene that bothered me when I first saw it.

#yugioh#ygo#yugioh vrains#ygo vrains#vrains#revolver#revolver vrains#ryoken kogami#I still think season 3 misused him#or at least the first episode did#I just#ugh#he should be angry after Ai attacks humanity not before#this scene with Akira should've been Rev's first S3 scene#it would've shrouded him in mystery#also he shoulda blew up at Ai at some point#just completely lose all his composure and snap at him#something along the lines of: HOW DARE YOU BETRAY PLAYMAKER AND SOULBURNER THEY TRUSTED YOU#in fact maybe I'll write that when I get to Aoi and Akira vs Ai

6 notes

Text

So do y'all use AI to learn to code and write important emails you don't have the time to write and basically gain knowledge from it, or do you use it to write the particular fanfiction you want to read but are too lazy/unable to write yourself?

#chat gbt#ai#fanfiction#note this is not me getting in on the whole ai vs human war#because tbh ai stories will never be as good as ones written by humans#it's just a cheap easy way for me to generate stories i want but am too lazy to write for myself#very self indulgent#may be self inserting too XD

4 notes

Text

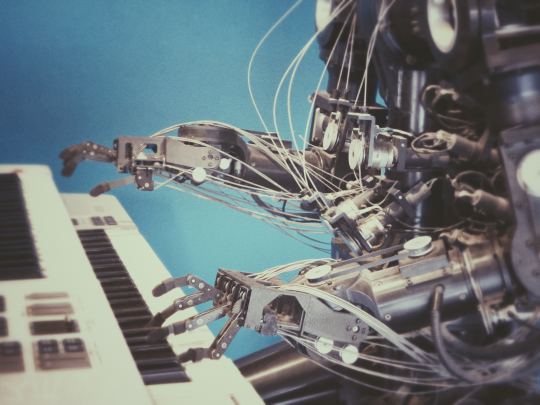

a weird little thing abt me is i will definitely mock shitty ai art but it never feels right doing it about the hands simply by virtue of the fact that a lot of them look indistinguishable from the hands i was doing years ago when i first got a drawing tablet

#like id have the right number of fingers obv but like. putting the thumb on the wrong side#fingers bending weird directions or connecting in weird places#weird anatomy at joints‚ freaky nails‚ bad proportions‚ bad perspective‚ etc etc etc#people say 'this isnt ai like in sci-fi its just machine learning' but to me its a lot more interesting to look at it as#'this isnt ai like in scifi /yet/'#like yeah the stuff ai does in fiction isnt possible at this point but like. i find it difficult not to wonder if this#is the ai version of infancy stages yknow? like.#ppl go 'it cant write its own stuff its just recycling stuff its been fed' as if thats not kinda how people . learn to talk?#idk i just find it hard to agree with arguments that act like where we currently are at is the furthest these technologies could possibly#evolve in our lifetimes#'it just makes things up' you mean like toddlers going on long winding rambles about unicorns and monsters or w/e#'it cant do art good' you mean like a child? or even just literally Anyone who doesnt know how to draw yet?#like. idk. i feel like people are trying very very hard to insist the ai of today is still the same as it was in the cleverbot days#and that its impossible to evolve any further#people want to cling to the old days when ai stuff didnt pass the turing test by a much wider margin than it tends to now#dont want to admit that it does indeed sometimes surpass the turing test and likely would be able to even moreso were it#not for restraints#(see: that one stock trading ai that did insider trading vs various chatbots not being allowed to write disparaging things#about copyrighted people or w/e)#if ai stuff was still truly distinguishable from human works then we wouldnt need to spend so much time#hashtag exposing things as being ai generated#and i just think its bad to‚ in pursuit of that‚ mock things that are like. just stuff all beginner artists struggle with#i guarantee you there is not a single artist out there who hasnt drawn a hand that made them want to curl up and die at least once.#i got very off-topic there but swung it back around at the end there so. hashtag win#origibberish

1 note

Text

Artificial intelligence produces text that resembles human writing, but because its understanding is limited, it gives similar responses to everyone who gives it a similar command. That means AI content lacks uniqueness. A skilled writer, on the other hand, can maintain the authenticity of the work. That’s one of the primary differences between AI and human writing. As you look further, you will notice more differences in areas like vocabulary, language flow, and relatability.

#AI and human writing#differences between AI and human writing#pros and cons of AI writing vs human writing

0 notes

Text

who would win

My sister who fucking uses AI

vs

Me who can't create stories unless it's on paper

0 notes

Text

This is probably a lot more just me watching way more movie video essays than movies, but I think we can come out of this era of devalued storytelling understanding art better than ever. Like, I guess by nature you can only get so much depth from anything made for super broad mainstream appeal, but as someone who was into cinemasins and tvtropes type stuff in high school, I think it's not for nothing that, as a society, people started asking pedantic questions about things that happen in a movie, then saw the movie industry react as if it was trying not to have those questions asked, and went "ohhh, maybe all that stuff isn't the point of the story that we're really here for. What are we really here for?" In a way we thought the answer to that was "we want character complexity," and the movie/TV industry answered that with characters who are a little too self-aware of their problems, which is also kind of what we "asked" for but not what we really need.

Like, this is simultaneously a failure of how media literacy is taught and of art under capitalism blindly seeking what audiences want through trial and error, but I think it's valuable to see for yourself why something is needed by seeing what happens when you strip away what you thought was unnecessary.

#tayne newsletter#and like not to get into ai art discourse but i think ai writing vs human writers could be insightful#about what art you want that is more dependent on all that has come before#or if you played around with it finding out how much of art is curating#similar to the pedantic thing i think its a way to learn for yourself that a huge part of art is deciding what you don't need to include#the mona lisa does not continue out of frame

0 notes

Text

once again. STILL thinking about my slasher rpg. might be puttin ghosts in there

#also shout out to dave the harbinger because his lore is gonna be EXTENSIVE#shout out to James for suggesting that how the players treat him determines what information they get#also i have the location for it now#i just have to determine what type of slasher it is#once again. fear street multiple slashers vs one guy with Lore (supernatural) vs a few guys with Lore (supernatural)#vs one guy with lore (human and mantle passed down)#and if supernatural what makes him show up. or if there’s multiple what makes them show up#i also have to find a place to write it since Google docs is starting to use ppls stuff for ai#talk to me about my slasher rpg

0 notes

Text

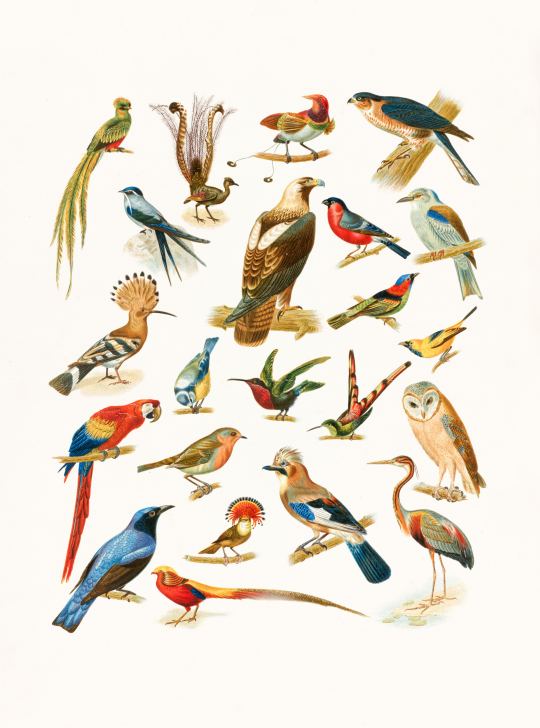

Artificial Intelligence (An alarming situation).

I remember that when I was a kid, I used to see so many birds (sparrows, to be more specific), and hearing them chirp every morning always made me happy. I used to witness their complete ecosystem around trees, gardens, etc.

Similarly, I used to do almost all of my work independently, without tech gadgets or any expert system running an AI-based algorithm. In our youth we did not have VR gear, smartphones, or smartwatches, but we had pure joy and healthy lives.

It seems like everything has changed in the blink of an eye. Now I see gadgets everywhere. We don't have to move physically to perform any task; we barely move a finger to get things done. Is it a blessing or a curse?

Living in the AI era, we have become, perhaps unknowingly, completely dependent on technology. We now live alongside robots and expert systems, and we rely on these technologies more and more for our decisions. AI was initially developed to assist humans, but this pleasant dream may soon turn into a nightmare.

HOW? How is AI defeating us rather than helping us?

1. UNEMPLOYMENT:

In theory, the idea of AI was to create jobs for people and to assist us in carrying out complex tasks. But AI is all about automation, which means millions of people who do administrative work are going to lose their jobs.

One example: robots have started performing surgery on patients to diagnose and treat disease, creating trouble for doctors and paramedics. The use of AI in the medical field is still in an experimental phase, but it could soon replace most doctors and surgeons, especially in the developed world, pushing doctors toward unemployment.

Similarly, AI-based robots are being used experimentally in construction and infrastructure, designing and building homes and other buildings with complex modeling algorithms. This could also leave architects and home designers idle.

2. HEALTH ISSUES:

AI was developed to help us live healthier, depression-free lives, but we have ended up with health issues and lazy lifestyles instead. Today, everything is just one click or tap away. Thanks to the latest technology, we barely move. Health issues arise when our dependency leads to laziness and slowly to depression, which can gradually turn into heart disease and obesity.

3. THREAT TO ENVIRONMENT:

Relying completely on AI can be a serious threat to our environment. We are all connected to the natural ecosystem, and the excessive use of technology requires heavy infrastructure (e.g., there are more cell phone towers than ever before to give subscribers fast internet and 5G).

4. THREAT TO ANIMALS AND BIRDS:

Animals and birds are a necessary part of our lives. They help us indirectly by balancing the natural ecosystem, but unlimited experiments with and uses of AI are leading toward the destruction and even extinction of several species. Several types of animals and birds are close to extinction.

There could be other threats as well, arising from the excessive use of AI and the experiments to implement it among us.

Consider: if an AI-based robot, due to some malfunction in its complex infrastructure or algorithms, tried to kill you rather than save you, how would you react?

IN CONCLUSION:

Opposing the excessive and limitless implementation of AI around us doesn't mean that I am against new, improved technology. We simply must keep watch over its interference in decisions that should be ours alone.

In fact, I myself am a great fan of artificial intelligence and have done some practical work using AI (an educational project during COVID-19).

All I want to say is that AI has truly helped us in a lot of ways, but it should have some constraints and regulations so that it works alongside us and we can move along together till the end of the line.

0 notes

Text

How is AI content writing better than human content writing as per Google SERP ranking?

Introduction

In the dynamic world of digital marketing, staying ahead of the competition requires a deep understanding of search engine optimization (SEO) and the factors that influence Google SERP rankings. One emerging trend is the utilization of AI content writing to enhance search engine visibility. In this blog, we will explore how AI content writing surpasses human content writing in terms of improving Google SERP rankings.

1. Unleashing the Power of Keyword Optimization

Keywords play a vital role in SEO, as they help search engines understand the context and relevance of content. While humans can conduct keyword research, AI content writing takes it to a whole new level. AI algorithms analyze extensive data sets, identify commonly searched keywords, and effectively incorporate them into the content. This optimized approach enhances the chances of ranking higher on Google SERPs.
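The post doesn't say how AI tools "incorporate" keywords. As a toy illustration only (not how any particular AI writing tool or Google's ranking works), here is a keyword-density check that reports what share of a text's words are a given single-word keyword. The function name and sample text are hypothetical.

```python
import re
from collections import Counter


def keyword_density(text: str, keywords: list[str]) -> dict[str, float]:
    """Return each keyword's share of the total word count (0.0 to 1.0).

    Toy measure: single-word keywords only, matched case-insensitively.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())  # crude tokenizer
    if not words:
        return {kw: 0.0 for kw in keywords}
    counts = Counter(words)
    return {kw: counts[kw.lower()] / len(words) for kw in keywords}


sample = "AI writing tools suggest keywords. Good writing uses keywords naturally."
print(keyword_density(sample, ["writing", "keywords", "seo"]))
```

A raw density number says nothing about whether the text actually answers the reader's question, which is why this is only a sketch of the "incorporate keywords" step, not of ranking.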

2. Crafting Highly Relevant and Engaging Content

Meeting user intent is paramount when it comes to climbing the SERP ladder. AI writing tools can analyze user behavior, search trends, and preferences, and use them to generate highly relevant, tailored content. By understanding user queries and providing comprehensive answers, AI content writing ensures that the content resonates with the audience, ultimately leading to improved rankings.

3. Elevating Readability and Structural Excellence

Content that is easy to read, well-structured, and formatted appropriately tends to rank higher on SERPs. While humans strive for these qualities, AI content writing has the advantage of learning from patterns in successful content. This results in AI-generated content that excels in readability, adheres to the optimal structure, and boosts the overall user experience. Search engines, including Google, reward such content with better rankings.

4. Achieving Consistency and Scalability

Consistency is crucial in establishing a brand identity and delivering a unified experience to users. AI content writing maintains a consistent writing style, tone, and brand voice across a large volume of content. Moreover, AI algorithms can generate content at an impressive speed, enabling scalability in content creation. The ability to produce vast amounts of optimized content in a short span gives AI an edge over human writers, benefiting SERP rankings.

5. Embracing Data-Driven Optimization

AI content writing relies on data-driven insights to continuously refine its strategies. By analyzing user behavior, search patterns, and emerging trends, AI algorithms adapt the content creation process to maximize SEO performance. This data-driven approach enables AI-generated content to bridge content gaps, target new opportunities, and align with evolving search engine algorithms. Consequently, the content is more likely to rank higher on SERPs.

Conclusion

AI content writing represents a paradigm shift in the world of SEO and SERP ranking. Through its ability to optimize keywords, craft highly relevant content, improve readability, ensure consistency, and embrace data-driven insights, AI content writing offers advantages that surpass human content writing in terms of boosting Google SERP rankings. However, it is important to recognize that human involvement still plays a significant role, as human writers can bring unique perspectives, creativity, and emotional intelligence to the table. The most effective approach combines the strengths of AI and human content writing to achieve optimal results in terms of SERP ranking and user engagement.

Source: https://www.transcurators.com/content-service/

"TransCurators- Quality Content Writing Company"

#why Ai content writing is better than human content writing#ai content writing amazing facts#content writer vs ai writing process#which is better ai writing or a human content writer#content writing#TransCurators: A high quality content writing company#Ai content writing blogs

0 notes

Text

uhhh bitch im worried ab companies using AI for free labour and making even more ppl unemployed/underpaid or selling their likeness without consent… not about their poems being good. why is ur humanity so fragile? could really use you on some picket lines, not staring at a sonnet saying ‘you can barely tell…’

#AI#two types of people#AI is welcome to write poems as long as it’s not selling my voice#as a writer#I’m not threatened by how good AI can write… more that they’ll be used instead of human writers to save companies MONEY#like I will take on AI any day I back myself#but me vs a machine that has no rights???? pls they will get hired every time

1 note

Text

Can Artificial Intelligence Replace Human Writers? Unveiling the True Potential of ChatGPT and Google Bard

#AI-generated content#ML-generated text#GPT-3 vs. Google Bard#impact of AI on writing#NLP-based writing#language processing in AI writing#“How can AI-driven writing enhance content creation?”#“Exploring the true potential of ChatGPT and Google Bard”#“Can artificial intelligence completely replace human writers?”#“How does ChatGPT and Google Bard differ in their writing capabilities?”#“The impact of AI-generated content on the writing industry”#“The role of AI in transforming the writing landscape”

1 note

Text

What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Superbowl Ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, perl and python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Superbowl Ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning Tensorflow and Pytorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, they could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.
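The 2X2 grid of "value" versus "risk tolerance" can be written down as a tiny data structure. This is an illustrative sketch, not anything from the column: the yes/no flags are a simplification of the essay's own judgments (it also describes a "moderately" risk-tolerant app, which two boolean flags can't capture), and the class and function names are hypothetical. The filter at the end makes the argument explicit: among the examples given, nothing lands in the high-value, risk-tolerant cell.

```python
from dataclasses import dataclass


@dataclass
class AIApplication:
    name: str
    high_value: bool     # would customers pay a lot for it?
    risk_tolerant: bool  # can it shrug off frequent mistakes?


def quadrant(app: AIApplication) -> str:
    """Name the cell of the value / risk-tolerance grid an application falls in."""
    value = "high-value" if app.high_value else "low-value"
    risk = "risk-tolerant" if app.risk_tolerant else "risk-intolerant"
    return f"{value}, {risk}"


def high_value_and_risk_tolerant(apps: list[AIApplication]) -> list[str]:
    """Applications that are both valuable and tolerant of errors:
    the combination the essay argues is (nearly) missing."""
    return [a.name for a in apps if a.high_value and a.risk_tolerant]


# Examples taken from the essay; the True/False judgments follow its argument.
apps = [
    AIApplication("D&D character art generator", high_value=False, risk_tolerant=True),
    AIApplication("SEO spam text generator", high_value=False, risk_tolerant=True),
    AIApplication("self-driving cars", high_value=True, risk_tolerant=False),
    AIApplication("radiology bot", high_value=True, risk_tolerant=False),
]

for app in apps:
    print(f"{app.name}: {quadrant(app)}")
print("high-value and risk-tolerant:", high_value_and_risk_tolerant(apps))
```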

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in the hope of eventually becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).
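Federated learning only gets a parenthetical here, but its core idea is easy to show. In federated averaging (FedAvg), many participants train copies of a model on their own data and a coordinator combines the results, weighting each participant by how much data it trained on. The toy function below, with hypothetical numbers, shows only that aggregation step; real systems repeat train-and-average rounds many times.

```python
def federated_average(client_weights: list[list[float]],
                      client_sizes: list[int]) -> list[float]:
    """FedAvg-style aggregation: average each parameter across clients,
    weighting each client by how many training examples it used."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("client sizes must add up to a positive number")
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Three hypothetical clients, each holding a locally trained 2-parameter model.
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]  # the third client trained on twice as much data
print(federated_average(weights, sizes))
```

Weighting by data size means a participant with more examples pulls the shared model further toward its own update, which is the design choice that distinguishes this from a plain mean.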

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in Pytorch and Tensorflow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified)

https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

4K notes

Text

ChatGPT Bot Block

Hey Pillowfolks!

We know many of you are still waiting on our official stance regarding AI-Generated Images (also referred to as “AI Art”) being posted to Pillowfort. We are still deliberating internally on the best approach for our community as well as how to properly moderate AI-Generated Images based on the stance we ultimately decide on. We’re also in contact with our Legal Team for guidance regarding additions to the Terms of Service we will need to include regarding AI-Generated Images. This is a highly divisive issue that continues to evolve at a rapid pace, so while we know many of you are anxious to receive a decision, we want to make sure we carefully consider the options before deciding. Thank you for your patience as we work on this.

As of today, 9/5/2023, we have blocked the ChatGPT bot from scraping Pillowfort. This means any writings you post to Pillowfort cannot be retrieved for use in ChatGPT’s Dataset.
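The announcement doesn't say how the block was done. One common approach, sketched here as an assumption, is a robots.txt rule for `GPTBot`, the user-agent OpenAI published for its web crawler in 2023. The snippet uses Python's standard `urllib.robotparser` to check a hypothetical robots.txt against a hypothetical post URL. Keep in mind that robots.txt is a voluntary convention: it only keeps out crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one rule group for OpenAI's GPTBot crawler,
# and a catch-all group that leaves every other crawler alone.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from text instead of fetching a URL

post_url = "https://example.com/posts/12345"  # hypothetical post URL
print("GPTBot may fetch:", parser.can_fetch("GPTBot", post_url))
print("Other crawler may fetch:", parser.can_fetch("ExampleBot", post_url))
```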

Our team is still looking for ways to provide the same protection for images uploaded to the site, but keeping scrapers from accessing images seems to be less straightforward than for text content. The biggest AI generators such as Stable Diffusion use datasets such as LAION, and as far as our team has been able to discern, it is not known what means those datasets use to scrape images or how to prevent them from doing so. Some sources say that websites can add metadata tags to images to prevent the img2dataset bot (which is apparently used by many generative image tools) from scraping images, but it is unclear which AI image generators use this bot vs. a different bot or technology. The bot can also be configured to simply disregard these directives, so it is unknown which scrapers would obey the restriction if it were added.
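For context, img2dataset's documentation appears to describe checking the `X-Robots-Tag` HTTP response header for directives such as `noai` and `noimageai`, with an option to turn that check off, which matches the uncertainty above. The sketch below is a hypothetical illustration of what honoring such a header could look like; it is not img2dataset's code, and the directive set is an assumption to verify against current documentation.

```python
from typing import Iterable, Optional

# Assumption: directive names a compliant image scraper might refuse.
# "noai" and "noimageai" are the AI-specific ones; verify against current docs.
DEFAULT_DISALLOWED = frozenset({"noai", "noimageai", "noindex", "noimageindex"})


def should_skip_image(x_robots_tag: Optional[str],
                      disallowed: Iterable[str] = DEFAULT_DISALLOWED) -> bool:
    """Return True if an image's X-Robots-Tag header contains a disallowed directive.

    Simplifications: the header is treated as one comma-separated list, and a
    bot-scoped entry such as "somebot: noindex" is treated as applying to every bot.
    """
    if not x_robots_tag:
        return False  # no header, nothing to honor
    directives = set()
    for part in x_robots_tag.split(","):
        part = part.strip().lower()
        if ":" in part:  # drop a "botname:" prefix, keep the directive
            part = part.split(":", 1)[1].strip()
        directives.add(part)
    return not directives.isdisjoint(d.lower() for d in disallowed)


print(should_skip_image("noai, noimageai"))     # header asks AI scrapers to stay away
print(should_skip_image("nofollow"))            # not a directive this check looks for
print(should_skip_image("noai", disallowed=[])) # scraper configured to ignore the header
```

The last call mirrors the point above: the header only works if the scraper's operator leaves the check switched on.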

Artists looking to protect their art from AI image scrapers may want to look into Glaze, a tool developed at the University of Chicago to protect human artworks from being processed by generative AI.

We are continuing to monitor this topic and encourage our users to let us know if you have any information that can help our team decide the best approach to managing AI-Generated Images and Generative AI going forward. Again, we appreciate your patience, and we are working to have a decision on the issue of moderating AI-Generated Images soon.

Best,

Pillowfort.social Staff

873 notes

Text

y'know what, I think it's kind of interesting to bring up Data from Star Trek in the context of the current debates about AI. like especially if you actually are familiar with the subplot about Data investigating art and creativity.

see, Data can definitely do what the AI programs going around these days can. better, even, but that's beside the point, obviously; he's a sci-fi/fantasy android. anyway, in the story, Data can perfectly replicate any painting, stitch a beautiful quilt, or write a poem. he can write programs for himself that introduce variables that make things more "flawed" or that imitate the particular style of an artist, and he can choose either to perfectly replicate a particular sort of music or to try and create a more "human"-sounding imitation that has irregular errors and mimics effort or strain. the latter is harder for him than just copying, the same way it's more complicated to build an algorithm that creates believable "original" art than one that just duplicates whatever you give it.

but this is not the issue with Data. when Data imitates art, he himself knows that he's not really creating, he's just using his computer brain to copy things that humans have done. it's actually a source of deep personal introspection for the character, that he believes being able to create art would bring him closer to humanity, but he's not sure if he actually can.

of course, Data is a person. he's a person who is not biological, but he's still a person, and this is really obvious from the get-go. there's no one thing that can be pointed to as the smoking gun for Data's personhood, but that's normal and also true of everyone else. Data's the culmination of a multitude of elements required to make a guy. asking if this or that one thing is what makes Data a person is like asking if it's the flour or the eggs that make a cake.

the question of whether or not Data can create art is intrinsically tied to the question of whether or not Data can qualify as an artist. can he, like a human, take on inspiration and cultivate desirable influences in order to produce something that reflects his view on the world?

yes, he can. because he has a view on the world.

but that's the thing about the generative AI we are dealing with in the real world: it's not like Data. despite being referred to as "AI", these are algorithms that have been trained to recognize and imitate patterns. they have no perspective. the people who DO have a perspective, the humans inputting prompts, are trying to circumvent the whole part of the artistic process where they actually develop skills and create things themselves. they're not doing what Data did; in fact they're doing the opposite -- instead of exploring their own ability to create art despite their personal limitations, they are abandoning it. the data sets aren't like someone looking at a painting and taking inspiration from it, because the machine can't be inspired and the prompter isn't filtering inspiration through the necessary medium of their perspective.

Data would be very confused as to the motives and desires involved, especially since most people are not inhibited from developing at least SOME sort of artistic skill for the sake of self-expression. he'd probably start researching the history of plagiarism and different cultural, historical, and legal standards for differentiating it from acceptable levels of artistic imitation, and how the use of various tools factored into it. he would cite examples of cultures where computer programming itself was considered a form of art, court cases where rulings were made for or against examples of generative plagiarism, and cases of forgeries and imitations which required skill as good as, if not better than, that of the artists who created the originals. then Geordi would suggest that maybe Data was a little bit annoyed that people who could make art in a way he can't would discount that ability. Data would be like "as a machine I do not experience annoyance", but he would allow that he was perplexed or struggling to gain internal consensus on the matter. so Geordi would sum it up with "sometimes people want to make things easy, and they aren't always good at recognizing when doing that defeats the whole idea", and Data would quirk his head thoughtfully and agree.

then they'd get back to modifying the warp core so they could escape some sentient space anomaly that had sucked the ship into intermediate space and was slowly destabilizing the hull, or whatever.

anyways, point is -- I don't think Data from Star Trek would be a big fan of AI art.

303 notes

Note

Your discussions on AI art have been really interesting and changed my mind on it quite a bit, so thank you for that! I don’t think I’m interested in using it, but I feel much less threatened by it in the same way. That being said, I was wondering how you felt about AI-generated creative writing: not, like, AI writing in the context of garbage listicles or academic essays, but people who generate short stories and then submit them to contests. Do you think it’s the same sort of situation as AI art? Do you think there’s a difference between ChatGPT and Midjourney? Legitimate curiosity here! I don’t quite have an opinion on this in the same way, and I’ve seen v little from folks about creative writing in particular vs generated academic essays/articles

i think that ai generated writing is also indisputably writing but it is mostly really really fucking awful writing for the same reason that most ai art is not good art -- that the large training sets and low 'temperature' of commercially available/mass market models mean that anything produced will be the most generic version of itself. i also think that narrative writing is very very poorly suited to LLM generation because it generally requires very basic internal logic which LLMs are famously bad at (i imagine you'd have similar problems trying to create something visual like a comic that requires consistent character or location design rather than the singular images that AI art is mostly used for). i think it's going to be a very long time before we see anything good long-form from an LLM, especially because it's just not a priority for the people making them.
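For readers unfamiliar with the 'temperature' mentioned above: in common sampling setups, a model's raw scores (logits) for each candidate next token are divided by the temperature before a softmax turns them into probabilities. A low temperature sharpens the distribution toward the single most likely token; a higher one flattens it. The sketch below illustrates only that general technique with made-up scores. It is not taken from any particular product, and whether a given commercial model actually runs at a low temperature is the post author's observation, not something this code shows.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert raw next-token scores into probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical scores for three candidate next words.
    logits = [2.0, 1.0, 0.5]
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

With these example scores, the top candidate's share falls as the temperature rises: about 0.99 at 0.2, 0.63 at 1.0 and 0.48 at 2.0. That concentration on the most likely choice is the sense in which low-temperature output drifts toward the "most generic version of itself".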

ultimately though i think you could absolutely do some really cool stuff with AI generated text if you had a tighter training set and let it get a bit wild with it. i've really enjoyed a lot of AI writing for being funny, especially when it was being done with tools like Botnik that involve more human curation but still have the ability to completely blindside you with choices -- i unironically think the Botnik CollegeHumor sketch is funnier than anything human-written on the channel. & i think that means it could reliably be used, with similar levels of curation, to make some stuff that feels alien, or unsettling, or ethereal, or horrifying, because those are somewhat adjacent to the surreal humour i think it excels at. i could absolutely see it being used in workflows -- one of my friends told me recently, essentially, "if i'm stuck with writer's block, i ask chatgpt what should happen next, it gives me a horrible idea, and i immediately think 'that's shit, and i can do much better' and start writing again" -- which is both very funny and, i think, a great use case as a 'rubber duck'.

but yea i think that if there's anything good to be found in AI-written fiction or poetry it's not going to come from ChatGPT specifically, it's going to come from some locally hosted GPT model trained on a curated set of influences -- and will have to either be kind of incoherent or heavily curated into coherence.

that said, the submission of AI-written stories to short story mags & such fucking blows -- not because it's "not writing" but because it's just bad writing that's very very easy to produce (as in, just-tell-ChatGPT-"write a short story" easy). which ofc isn't bad in and of itself, but it means that the already existing phenomenon of people cynically submitting awful garbage to literary mags, stuff that doesn't even meet the submission guidelines, has been magnified immensely, and editors are finding it hard to keep up. i think part of believing that generative writing and art are legitimate mediums is also believing they are, and should be treated as, separate mediums -- i don't think that there's no skill in these disciplines (like, if someone managed to make writing with ChatGPT that wasn't unreadably bad, i would be very fucking impressed!) but they're deeply different skills from the traditional artforms and so imo should in general be judged, presented, published etc. separately.

211 notes
