#convolutional network

Text

literally the biggest shoutout to all the upload to google drive fandom people- you guys are fucking amazing, genuinely

#aw man nothing feels better than pulling out a google drive like a rabbit from a hat#and amazing all your friends and family with your increasingly convoluted network of pirated shows

6 notes


Text

Unfortunately all chatgpt is good for is interview/job application stuff which I think says a lot about the hiring process as a whole

#wrenfea.exe#as an actual artificial intelligence? no its horrible bc it really ISNT one#its a writing synthesizer it generates writing based on data searches and boundaries from training#thats what a neural network is its a very convoluted input-output sequence#it has no capacity to understand the meaning behind what it generates#it is simply generating the specific things that the user is looking for#the job interview process has become so robotic and automated that ai fits in perfectly#but employers HATE that people are turning to chatgpt for cover letters and interview answers#so it was fair for them to use filtering programs to accept/deny applications before it got in front of an actual human being#and its ok for them to use ai and pre-written formats to make job announcements descriptions and interview questions#but god forbid we are forced to use those exact same tools to get a humans attention so we can get a job and not starve#pushing aside the whole copyright debate on chatgpt and the environmental impact of its power usage btw#im solely analyzing how its become commonly utilized on both sides#by interviewer and interviewed#the mechanization of the whole process is now on both sides#it just seems very inhuman..#its also how some people have figured out how to somehow become employed multiple times by the same company due to lack of human oversight#and how automated theyve made their hiring process#probably should have made these tags into a separate reblog oops#also disclaimer do not cut and paste right into your application materials bc chatgpt often just lies#also many places now can tell you used chatgpt due to how similar its answers are#i only use it to make a template and see how things can be phrased to be more professional and buzzwordy#id never use it for something actually creative#and dear god do not write academic essays with it#i tried using it to supplement my own cover letter template but it was too robotic even for a cover letter#it is very good at accessing and summarizing publicly available information#thats all it does. it does not make sure the information is true or good

5 notes


Text

putting a masquerade ball in wicked turns, because how could i NOT put a masquerade ball in wicked turns, and i had planned on making it part of the szarr manor dungeon crawl but ooooooooooooooo girl....... what if it was the pre-coronation gortash encounter

#bg3 posting#on paper it's an anonymous democratic seminar where people can voice concerns about lord gortash... in reality it's just networking lol.#and if you say anything bad about gortash you get escorted to the dungeons. who's gonna suspect anything untoward?#you were anonymous to begin with. nobody knew you were here.#sure it's a bit convoluted for a banite but maybe it's his stag party. maybe the man wants to have fun before assuming all the#responsibility of tyrannically dominating the sword coast

2 notes


Text

HyperTransformer: G Additional Tables and Figures


#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes

Text


Hi,

This is a TensorFlow tutorial that shows you how to classify world landmarks using a pre-trained model from TensorFlow Hub.

We will see how to install the relevant Python libraries, find the right pre-trained model, and learn how to use it to classify landmark images in Europe.

The link for the video tutorial is here: https://youtu.be/IJ5Z9Awzxr4

I also shared the link for Python code in the video description.

Enjoy

Eran

#Python #Cnn #TensorFlow #AI #Deeplearning #ImageClassification #TransferLearning #ArtificialIntelligence #PretrainedModels #ImageRecognition #OpenCV #ComputerVision

#artificial intelligence#convolutional neural network#deep learning#python#tensorflow#machine learning#code#Youtube

0 notes

Text

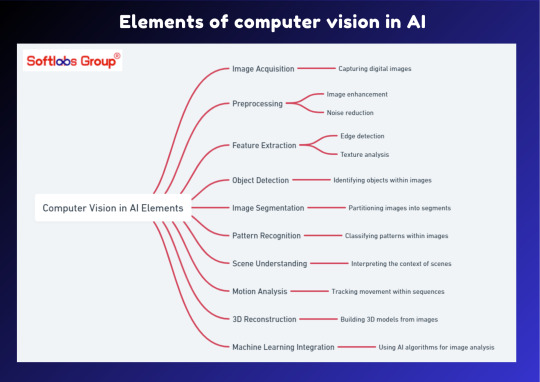

Explore the fundamental elements of computer vision in AI with our insightful guide. This simplified overview outlines the key components that enable machines to interpret and understand visual information, powering applications like image recognition and object detection. Perfect for those interested in unlocking the capabilities of artificial intelligence. Stay informed with Softlabs Group for more insightful content on cutting-edge technologies.

0 notes

Text

What Is a Convolutional Neural Network (CNN)?

Rakesh Jatav January 05, 2024


Introduction

Deep learning, a subset of artificial intelligence (AI), has spread across many domains, transforming tasks through its ability to learn from data. One of its most visible successes is image classification, a central task in computer vision and object recognition. Image classification calls for specialized models, and Convolutional Neural Networks (CNNs) have long been the leading choice.

What to Expect

In this article, we will delve into the intricate workings of CNNs, understanding their components and exploring their applications in image-related domains.

Readers will gain insights into:

The fundamental components of CNNs, including convolutional layers, pooling layers, and fully-connected layers

The training processes and advancements that have propelled CNNs to the forefront of deep learning

The diverse applications of CNNs in computer vision and image recognition

The profound impact of CNNs on the evolution of image recognition models

Through an in-depth exploration of CNNs, readers will uncover the underlying mechanisms that power modern image classification systems, comprehending the significance of these networks in shaping the digital landscape.

Understanding the Components of a Convolutional Neural Network

Convolutional neural networks (CNNs) are composed of various components that work together to extract features from images and perform image classification tasks. In this section, we will delve into the three main components of CNNs: convolutional layers, pooling layers, and fully-connected layers.

1. Convolutional Layers

Convolutional layers are the building blocks of CNNs and play a crucial role in feature extraction. They apply filters or kernels to input images in order to detect specific patterns or features. Here's a detailed exploration of the key concepts related to convolutional layers:

Feature Maps

A feature map is the output of a single filter applied to an input image. Each filter is responsible for detecting a specific feature, such as edges, textures, or corners. By convolving the filters over the input image, multiple feature maps are generated, each capturing different aspects of the image.
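To make the idea of a feature map concrete, here is a minimal sketch in plain Python, assuming a single-channel image, a 3x3 filter, stride 1, and no padding. The vertical-edge filter values are hand-picked for illustration; in a real CNN they would be learned during training.

```python
# Minimal single-channel 2D convolution as used in CNN layers.
# (Strictly this is cross-correlation: the kernel is not flipped, which is
# what deep learning libraries compute.) Valid padding, stride 1.
def conv2d(image, kernel):
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch currently under the kernel
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# Toy 5x6 image: dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked vertical-edge filter; a trained CNN would learn its own values
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in conv2d(image, kernel):
    print(row)  # each row: [0, 3, 3, 0]
```

The feature map responds only where the dark and bright halves meet and is zero over the flat regions, which is exactly what "detecting an edge" means for a filter.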

Receptive Fields

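The receptive field is the region of the input image that affects the value of a particular neuron. Each neuron in a convolutional layer is connected only to a small region of the previous layer, and the same connection pattern is repeated as the filter slides across the whole image. Because each layer builds on the one before it, neurons in deeper layers have larger receptive fields in the original image: early layers capture local detail, while deeper layers combine it into information about larger regions, up to the whole image.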
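How quickly the receptive field grows can be computed layer by layer. The sketch below uses the usual recurrence for stacked layers, assuming no dilation: each layer with kernel size k widens the field by (k - 1) times the current "jump" (the input-pixel distance between neighbouring units), and a stride multiplies that jump.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) tuples, ordered from input to output.
    Returns the receptive field, in input pixels, of one unit in the last layer."""
    rf = 1    # a single input pixel "sees" only itself
    jump = 1  # input-pixel distance between adjacent units of the current layer
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf

print(receptive_field([(3, 1)]))                          # one 3x3 conv -> 3
print(receptive_field([(3, 1), (3, 1)]))                  # two stacked 3x3 convs -> 5
print(receptive_field([(3, 1), (3, 1), (2, 2), (3, 1)]))  # + 2x2 pool (stride 2) + 3x3 conv -> 10
```

Note how the pooling layer's stride doubles the growth rate of every layer after it; this is one reason deep CNNs reach whole-image context with small kernels.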

Weight Sharing

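Weight sharing is a fundamental concept in convolutional layers. Instead of learning separate parameters for each location in an image, a convolutional layer applies the same filter weights at every spatial location. As a result, the layer is translation-equivariant: if a pattern shifts in the input, its response in the feature map shifts by the same amount, so a feature learned in one part of the image can be detected anywhere. Weight sharing also greatly reduces the number of parameters, which helps CNNs generalize well to new images.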
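The parameter savings from weight sharing are easy to quantify. This sketch assumes a hypothetical 32x32 RGB input and a layer producing 16 output channels at the same 32x32 resolution, and compares a 3x3 convolutional layer with a fully-connected layer of the same input and output size (both counted with biases).

```python
def conv_params(in_channels, out_channels, kernel_size):
    # Each filter has kernel_size x kernel_size x in_channels weights plus one bias,
    # and the same filter is reused at every spatial position (weight sharing)
    return (kernel_size * kernel_size * in_channels + 1) * out_channels

def dense_params(in_features, out_features):
    # One weight per input-output pair plus one bias per output
    return (in_features + 1) * out_features

# 32x32x3 input -> 32x32x16 output ("same" padding keeps the spatial size)
conv = conv_params(3, 16, 3)
dense = dense_params(32 * 32 * 3, 32 * 32 * 16)
print(conv)   # 448
print(dense)  # 50348032
```

The convolutional layer needs 448 parameters; a fully-connected layer producing an output of the same shape would need over 50 million.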

To illustrate these concepts, let's consider an example where we want to train a CNN for object recognition. In the first convolutional layer, filters typically learn to respond to low-level features like edges or textures. In deeper convolutional layers, filters respond to more complex, higher-level features, such as shapes or object parts. Combining these features in the deepest layers makes it possible to classify specific objects.

Convolutional layers are the backbone of CNNs and play a crucial role in capturing hierarchical representations of images. They enable the network to learn meaningful and discriminative features directly from the raw pixel values.

2. Pooling Layers and Dimensionality Reduction

Pooling layers are responsible for reducing the spatial dimensions of feature maps while preserving important features. They help reduce the computational complexity of CNNs and provide a form of translation invariance. Let's explore some key aspects related to pooling layers:

Spatial Dimensions Reduction

Pooling layers divide each feature map into small regions or windows, which are typically non-overlapping (for example, 2x2 windows with a stride of 2), and aggregate the values within each region. The most common pooling technique is max pooling, which takes the maximum value within each window. Average pooling is another popular method, which computes the mean value within each window. Both operations downsample the feature maps, reducing their spatial size.
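Here is a minimal sketch of both pooling operations on a single 4x4 feature map, assuming 2x2 windows with stride 2 (the common non-overlapping setup). The feature-map values are made up for illustration.

```python
def pool2d(feature_map, size=2, stride=2, mode="max"):
    if mode not in ("max", "avg"):
        raise ValueError("mode must be 'max' or 'avg'")
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            # Collect the size x size window starting at (i, j)
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        pooled.append(row)
    return pooled

fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 2],
    [2, 2, 3, 6],
]
print(pool2d(fm, mode="max"))  # [[4, 2], [2, 6]]
print(pool2d(fm, mode="avg"))  # [[2.5, 1.0], [1.25, 4.0]]
```

Both variants halve the height and width (4x4 to 2x2); max pooling keeps the strongest activation in each window, while average pooling blends all four values.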

Preserving Important Features


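Although pooling layers reduce the spatial dimensions, they retain important features. Max pooling, in particular, keeps the strongest activation within each window, so a feature that shifts by a pixel or two inside the window still produces the same output. This gives some robustness to small translations, though only limited robustness to changes in scale or rotation.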

Pooling layers effectively summarize local information and provide an abstract representation of important features, allowing subsequent layers to focus on higher-level representations.

3. Fully-Connected Layers for Classification Tasks

Fully-connected layers are responsible for classifying images based on the extracted features from convolutional and pooling layers. These layers connect every neuron from one layer to every neuron in the next layer, similar to traditional neural networks. Here's an in-depth look at fully-connected layers:

Class Predictions

The last fully-connected layer produces one raw score (logit) per class. A softmax activation then converts these scores into probabilities that are all positive and sum to 1, so the CNN assigns a probability to each possible class based on the extracted features.
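The softmax step can be written in a few lines. This is a minimal sketch; the three class scores are hypothetical values standing in for the output of a final fully-connected layer.

```python
import math

def softmax(logits):
    # Subtracting the max score avoids overflow in exp() and leaves the result unchanged
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]  # hypothetical raw scores for 3 classes
probs = softmax(scores)
print([round(p, 3) for p in probs])
```

The probabilities sum to 1 and preserve the ordering of the scores, so the class with the highest raw score also gets the highest probability.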

Backpropagation and Training

Like the rest of the network, fully-connected layers are trained with the backpropagation algorithm, which computes the gradient of the loss with respect to every weight so that the weights can be adjusted iteratively. This process allows the network to learn discriminative features and optimize its performance for a specific classification task.

Fully-connected layers at the end of CNNs leverage the extracted features to make accurate predictions and classify images into different classes or categories.

By understanding the components of CNNs, we gain insights into how these neural networks process images and extract meaningful representations. The convolutional layers capture local patterns and features, pooling layers reduce spatial dimensions while preserving important information, and fully-connected layers classify images based on the extracted features. These components work together harmoniously to perform image classification tasks effectively.

A Closer Look at Pooling Layers

Pooling layers are an essential component of convolutional neural networks (CNNs) that play a crucial role in reducing spatial dimensions while preserving important features. They work in conjunction with convolutional layers and fully-connected layers to create a powerful architecture for image classification tasks.

Comprehensive Guide to Pooling Layers in CNNs

Pooling layers are responsible for downsampling the output of convolutional layers, which helps reduce the computational complexity of the network and makes it more robust to variations in input images. Here's a breakdown of the key aspects of pooling layers:

1. Spatial Dimension Reduction

One of the main purposes of pooling layers is to reduce the spatial dimensions of the feature maps produced by the preceding convolutional layer. Smaller feature maps mean less computation in subsequent layers and fewer parameters in any fully-connected layer that follows, making the network more efficient.

2. Preserving Important Features

Despite reducing the spatial dimensions, pooling layers aim to preserve important features learned by convolutional layers. This is achieved by considering local neighborhoods of pixels and summarizing them into a single value or feature. By doing so, pooling layers retain relevant information while discarding less significant details.

Overview of Popular Pooling Techniques

There are several commonly used pooling techniques in CNNs, each with its own characteristics and advantages:

Max Pooling: Max pooling is perhaps the most widely used pooling technique in CNNs. It partitions the input feature map into non-overlapping rectangular regions and selects the maximum value within each region as the representative value for that region. Max pooling is effective at capturing dominant features and provides a degree of invariance to small translations.

Average Pooling: Unlike max pooling, average pooling computes the average value within each region instead of selecting the maximum. Because every value in the window contributes, it gives a smoother summary of the input, which can be useful when weaker activations also carry information.

Global Pooling: Global pooling is a pooling technique that aggregates information from the entire feature map into a single value or feature vector. This is achieved by applying a pooling operation (e.g., max pooling or average pooling) over the entire spatial dimensions of the feature map. Global pooling helps capture high-level semantic information and is commonly used in the final layers of a CNN for classification tasks.
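Global average pooling, the most common form of global pooling, collapses each channel to a single number. A minimal sketch, assuming the feature maps are stored as a list of channels, each an H x W grid (the values are made up):

```python
def global_average_pool(feature_maps):
    """feature_maps: list of C channels, each an H x W list of lists.
    Returns a length-C vector with one average per channel."""
    vector = []
    for channel in feature_maps:
        values = [v for row in channel for v in row]
        vector.append(sum(values) / len(values))
    return vector

maps = [
    [[1, 2], [3, 4]],  # channel 0 -> 2.5
    [[0, 0], [0, 8]],  # channel 1 -> 2.0
    [[5, 5], [5, 5]],  # channel 2 -> 5.0
]
print(global_average_pool(maps))  # [2.5, 2.0, 5.0]
```

Whatever the spatial size of the input, the result is always a C-length vector, which is why global pooling can feed a classifier directly without a large flattened input.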

Example: Pooling Layers in Action

To illustrate the functionality of pooling layers, let's consider an example with a simple CNN architecture for image classification:

Convolutional layers extract local features and generate feature maps.

Pooling layers then downsample the feature maps, reducing their spatial dimensions while retaining essential information.

Fully-connected layers process the pooled features and make class predictions based on learned representations.
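The three steps above can be followed through in terms of tensor shapes. This sketch assumes a hypothetical small CNN for 28x28 grayscale images (two 3x3 convolutions with padding 1, each followed by 2x2 max pooling) and applies the standard output-size formula floor((W - K + 2P) / S) + 1 at each layer.

```python
def output_size(size, kernel, stride=1, padding=0):
    # Standard formula: floor((W - K + 2P) / S) + 1
    return (size - kernel + 2 * padding) // stride + 1

# Hypothetical small CNN for 28x28 grayscale images:
# (description, kernel, stride, padding, output channels or None to keep them)
layers = [
    ("conv 3x3, 8 filters, pad 1", 3, 1, 1, 8),
    ("max pool 2x2, stride 2", 2, 2, 0, None),
    ("conv 3x3, 16 filters, pad 1", 3, 1, 1, 16),
    ("max pool 2x2, stride 2", 2, 2, 0, None),
]

size, channels = 28, 1
for name, k, s, p, out_ch in layers:
    size = output_size(size, k, s, p)
    if out_ch is not None:  # pooling keeps the channel count unchanged
        channels = out_ch
    print(f"{name:28s} -> {size}x{size}x{channels}")

flat = size * size * channels
print("flattened input to the fully-connected layers:", flat)  # 784
```

The padded convolutions keep the spatial size, each pooling layer halves it (28 to 14 to 7), and the final 7x7x16 volume is flattened into 784 values for the fully-connected layers.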

By incorporating pooling layers in between convolutional layers, CNNs are able to hierarchically learn features at different levels of abstraction. The combination of convolutional layers, pooling layers, and fully-connected layers enables CNNs to effectively classify images and perform complex computer vision tasks.


In summary, pooling layers are an integral part of CNNs that contribute to dimensionality reduction while preserving important features. Techniques like max pooling, average pooling, and global pooling allow CNNs to downsample feature maps and capture relevant information for subsequent processing. Understanding how these building blocks work together provides insights into the functionality of convolutional neural networks and their effectiveness in image classification tasks.

A Closer Look at Fully-Connected Layers

In a convolutional neural network (CNN), fully-connected layers play a crucial role in making class predictions based on the extracted features from previous layers. These layers are responsible for learning and mapping high-level features to specific classes or categories.

The Role of Fully-Connected Layers

After the convolutional and pooling layers extract important spatial features from the input image, fully-connected layers perform the classification. These layers work like a traditional neural network: every neuron in one layer is connected to every neuron in the next. The output of the last pooling layer is flattened into a one-dimensional vector, which serves as the input to the first fully-connected layer.
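A minimal sketch of the flatten-then-fully-connected step, assuming a tiny pooled output of 2 channels x 2 x 2 and a 2-class output layer. The weights and biases are hypothetical, hand-picked values; a trained network would learn them.

```python
def flatten(feature_maps):
    # C x H x W nested lists -> one flat list (channel by channel, row by row)
    return [v for channel in feature_maps for row in channel for v in row]

def dense(inputs, weights, biases):
    # weights[k] holds one weight per input for output neuron k:
    # every input is connected to every output neuron
    return [sum(w * x for w, x in zip(w_row, inputs)) + b
            for w_row, b in zip(weights, biases)]

pooled = [
    [[1.0, 0.0], [0.0, 2.0]],  # channel 0
    [[0.5, 0.5], [1.0, 0.0]],  # channel 1
]
x = flatten(pooled)  # 2 * 2 * 2 = 8 values

# Hypothetical weights for a 2-class output layer (8 inputs -> 2 scores)
weights = [
    [1, 0, 0, 1, 0, 0, 0, 0],  # class 0 looks at channel 0's diagonal
    [0, 0, 0, 0, 1, 1, 1, 0],  # class 1 sums part of channel 1
]
biases = [0.0, 0.1]
scores = dense(x, weights, biases)
print(scores)
```

The resulting scores are the raw per-class logits that a softmax would turn into probabilities. Flattening is also where spatial layout is lost: after this step, the layer only sees positions in a vector.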

The purpose of these fully-connected layers is to learn complex relationships between extracted features and their corresponding classes. By connecting every neuron in one layer to every neuron in the next layer, fully-connected layers can capture intricate patterns and dependencies within the data.

Training Fully-Connected Layers with Backpropagation

To train fully-connected layers, CNNs utilize backpropagation, an algorithm that adjusts the weights of each connection based on the error calculated during training. The process involves iteratively propagating the error gradient backward through the network and updating the weights accordingly.

During training, an input image is fed forward through the network, producing class predictions at the output layer. The predicted class probabilities are then compared with the true label using a loss function such as cross-entropy. For a single correct class, cross-entropy is the negative logarithm of the probability the network assigned to that class, so the loss is small when the network is confident and correct, and large when it is confident and wrong.

Backpropagation then applies the chain rule to compute how much each weight contributes to the loss, propagating error gradients backward from the output layer toward the input layer. An optimizer such as gradient descent uses these gradients to update each weight in the direction that reduces the loss. Repeating this over many examples, the fully-connected layers gradually learn to make accurate class predictions.
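The forward pass, cross-entropy loss, backward pass, and gradient-descent update can be seen end to end on a single fully-connected softmax layer. This is a simplified sketch, not a full CNN: it assumes a hypothetical 2-feature, 2-class toy dataset standing in for flattened CNN features, and it uses the standard result that, for softmax followed by cross-entropy, the gradient of the loss with respect to each logit is (predicted probability - 1 for the true class, else 0).

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def cross_entropy(probs, label):
    # Negative log of the probability assigned to the correct class
    return -math.log(probs[label])

# Hypothetical toy data: class 1 when the second feature is larger
data = [([1.0, 0.0], 0), ([0.9, 0.2], 0), ([0.1, 1.0], 1), ([0.2, 0.8], 1)]
n_features, n_classes = 2, 2
W = [[0.0] * n_features for _ in range(n_classes)]
b = [0.0] * n_classes
lr = 0.5  # learning rate

def forward(x):
    logits = [sum(w * xi for w, xi in zip(W[k], x)) + b[k] for k in range(n_classes)]
    return softmax(logits)

def mean_loss():
    return sum(cross_entropy(forward(x), y) for x, y in data) / len(data)

initial_loss = mean_loss()
for epoch in range(100):
    for x, y in data:
        p = forward(x)  # forward pass
        for k in range(n_classes):
            # dLoss/dlogit_k for softmax + cross-entropy
            grad_logit = p[k] - (1.0 if k == y else 0.0)
            for i in range(n_features):
                # Chain rule: dlogit_k/dW[k][i] = x[i]; gradient-descent step
                W[k][i] -= lr * grad_logit * x[i]
            b[k] -= lr * grad_logit
final_loss = mean_loss()
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
```

In a real CNN the same gradients would keep flowing backward into the convolutional filters; frameworks such as TensorFlow or PyTorch compute them automatically.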

Limitations and Challenges

Fully-connected layers have been effective in many image classification tasks. However, they have a few limitations:

Computational Cost: Fully-connected layers require a large number of parameters due to the connections between every neuron, making them computationally expensive, especially for high-resolution images.

Loss of Spatial Information: As fully-connected layers flatten the extracted feature maps into a one-dimensional vector, they discard the spatial information present in the original image. This loss of spatial information can be detrimental in tasks where fine-grained details are important.

Limited Translation Invariance: Unlike convolutional layers that use shared weights to detect features across different regions of an image, fully-connected layers treat each neuron independently. This lack of translation invariance can make CNNs sensitive to small changes in input position.

Example Architecture: LeNet-5

One of the early successful CNN architectures that used fully-connected layers is LeNet-5, developed by Yann LeCun and colleagues for handwritten digit recognition and published in 1998. LeNet-5 has two stages of convolution followed by subsampling (pooling), then a third convolutional layer (C5) and a fully-connected layer (F6).

C5 has 120 units; because its 5x5 kernels cover the entire 5x5 input at that depth, it effectively acts as a fully-connected layer. F6 has 84 neurons and feeds an output layer of 10 units representing the digits 0 to 9. The original paper used Euclidean radial basis function (RBF) output units; modern reimplementations usually replace them with a softmax layer that produces class probabilities.
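The LeNet-5 layer sizes can be checked with a short shape trace. This sketch follows the layer layout of the original paper (32x32 grayscale input, C1 through F6) and treats each subsampling layer as a 2x2 window with stride 2; it computes only shapes and a few parameter counts, and skips C3 because the original used a sparse connection table between S2 and C3.

```python
def conv_out(size, kernel, stride=1):
    # Valid convolution or pooling window, no padding
    return (size - kernel) // stride + 1

def conv_params(in_ch, out_ch, k):
    # k x k x in_ch weights plus one bias per output map (full connectivity)
    return (k * k * in_ch + 1) * out_ch

# (layer name, kernel, stride, output maps)
stages = [
    ("C1 conv", 5, 1, 6),
    ("S2 subsample", 2, 2, 6),
    ("C3 conv", 5, 1, 16),
    ("S4 subsample", 2, 2, 16),
    ("C5 conv", 5, 1, 120),
]

size = 32  # LeNet-5 input: 32x32 grayscale
for name, k, s, ch in stages:
    size = conv_out(size, k, s)
    print(f"{name:13s} -> {ch} x {size} x {size}")

print("C1 params:", conv_params(1, 6, 5))     # 156
print("C5 params:", conv_params(16, 120, 5))  # 48120
print("F6 params:", (120 + 1) * 84)           # 10164
```

The trace (32, 28, 14, 10, 5, 1) shows why C5 behaves like a fully-connected layer: its output maps are 1x1, so each of its 120 units sees the entire 16x5x5 input.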

LeNet-5 showcased the effectiveness of fully-connected layers in learning complex relationships and achieving high accuracy on digit recognition tasks. Since then, numerous advancements and variations of CNN architectures have emerged, emphasizing more intricate network designs and deeper hierarchies.

Summary

Fully-connected layers serve as the final stages of a CNN, responsible for learning and mapping high-level features to specific classes or categories. By utilizing backpropagation during training, these layers gradually learn to make accurate class predictions based on extracted features from earlier layers. However, they come with limitations such as computational cost, loss of spatial information, and limited translation invariance. Despite these challenges, fully-connected layers have played a crucial role in achieving state-of-the-art performance in various image classification tasks.

Training and Advancements in Convolutional Neural Networks

Neural network training involves optimizing model parameters to minimize the difference between predicted outputs and actual targets. In the case of CNNs, this training process is crucial for learning and extracting features from input images. Here are some key points to consider when discussing the training of CNNs and advancements in the field:

Training Process


The training of a CNN typically involves:

Feeding annotated data through the network

Performing a forward pass to generate predictions

Calculating the loss (difference between predictions and actual targets)

Updating the network's weights through backpropagation to minimize this loss

This iterative process helps the CNN learn to recognize patterns and features within the input images.

Advanced Architectures

Several advanced CNN architectures have significantly contributed to pushing the boundaries of performance in image recognition tasks:

LeNet-5

AlexNet

VGGNet

GoogLeNet

ResNet

ZFNet

Each of these models introduced novel concepts and architectural designs that improved the accuracy and efficiency of CNNs for various tasks.

LeNet-5

LeNet-5 was one of the pioneering CNN architectures developed by Yann LeCun for handwritten digit recognition. It consisted of several convolutional and subsampling layers followed by fully connected layers. LeNet-5 demonstrated the potential of CNNs in practical applications.

AlexNet

AlexNet gained widespread attention after winning the ImageNet Large Scale Visual Recognition Challenge in 2012. It used a deeper network with multiple convolutional layers and popularized ReLU (Rectified Linear Unit) activation functions, which made training converge faster than saturating activations such as tanh.

VGGNet

VGGNet is known for its simple yet effective architecture with small 3x3 convolutional filters stacked together to form deeper networks. This approach led to improved feature learning capabilities and better generalization on various datasets.
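The benefit of stacking small filters is easy to check with arithmetic. Two stacked 3x3 layers cover the same 5x5 receptive field as one 5x5 layer, and three cover 7x7, but with fewer weights and an extra nonlinearity between layers. This sketch counts weights only (biases ignored) for layers with C input and C output channels; C = 256 is a hypothetical value.

```python
def conv_weights(kernel, channels):
    # Weights of one conv layer with `channels` inputs and `channels` outputs (no biases)
    return kernel * kernel * channels * channels

C = 256  # hypothetical channel count
two_3x3 = 2 * conv_weights(3, C)    # 18 * C^2, receptive field 5x5
one_5x5 = conv_weights(5, C)        # 25 * C^2, receptive field 5x5
three_3x3 = 3 * conv_weights(3, C)  # 27 * C^2, receptive field 7x7
one_7x7 = conv_weights(7, C)        # 49 * C^2, receptive field 7x7

print(two_3x3, one_5x5)
print(three_3x3, one_7x7)
```

For the 7x7 case the stacked version uses roughly 45% fewer weights, independent of C.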

GoogLeNet

GoogLeNet introduced the concept of inception modules, which allowed for more efficient use of computational resources by incorporating parallel convolutional operations within the network.

ResNet

ResNet (Residual Network) addressed the challenge of training very deep neural networks by introducing skip connections that enabled better gradient flow during backpropagation. This architectural innovation facilitated training of networks with hundreds of layers while mitigating issues related to vanishing gradients.
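A skip connection simply adds a block's input to the output of its layers. The sketch below is a simplified, hypothetical residual block using small fully-connected "layers" on a 2-element vector instead of the convolutions and batch normalization that real ResNet blocks use. It shows two things: a block whose residual branch outputs zero passes its input through unchanged, and, in a toy scalar model, gradients through many residual layers do not shrink toward zero the way they do through plain layers with small weights.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def linear(v, weights):
    # weights[k] holds the input weights of output unit k (biases omitted for brevity)
    return [sum(w * a for w, a in zip(row, v)) for row in weights]

def residual_block(x, w1, w2):
    # Residual branch F(x) = W2 . relu(W1 . x); the skip connection adds x back
    f = linear(relu(linear(x, w1)), w2)
    return relu([fi + xi for fi, xi in zip(f, x)])

x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
# With an all-zero residual branch the block simply passes x through
print(residual_block(x, zeros, zeros))  # [1.0, 2.0]

# Toy scalar view of gradient flow through `depth` layers with a small weight w
w, depth = 0.01, 50
plain = w ** depth           # plain layers y = w*x: gradient is a product of the w's
residual = (1 + w) ** depth  # residual layers y = x + w*x: each contributes a factor (1 + w)
print(f"plain: {plain:.1e}, residual: {residual:.2f}")
```

The identity behaviour is the key design choice: adding more residual blocks can, at worst, leave the signal unchanged, which makes very deep networks easier to optimize.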

ZFNet

ZFNet (Zeiler & Fergus Network) improved understanding of what CNNs learn by using a deconvolutional network (deconvnet) to project the activations of learned features back into pixel space for visualization. These visualizations guided adjustments to the AlexNet-style architecture.

These advancements in CNN architectures have not only improved performance but also paved the way for exploring more complex tasks in computer vision and image recognition.

Applications of CNNs in Computer Vision

Highlighting the Wide Range of Computer Vision Tasks

Object Detection: CNNs have proven highly effective at detecting and localizing objects within images. Detection models identify each object and mark its position, typically with a bounding box, even in complex scenes with multiple overlapping elements.

Semantic Segmentation: CNNs can assign a class label to every pixel in an image, so the system knows which regions belong to, for example, the road, a person, or the sky. This enables precise, pixel-level delineation of objects and regions. (Distinguishing separate objects of the same class is the related task of instance segmentation.)

Style Transfer: CNNs have been utilized to transfer artistic styles from one image to another, offering a creative application of computer vision. This technology enables the transformation of photographs into artworks reflecting the styles of famous painters or artistic movements.

By excelling in these computer vision tasks, CNNs have significantly advanced the capabilities of machine vision systems, leading to breakthroughs in fields such as autonomous vehicles, medical imaging, and augmented reality.

The Impact of CNNs on Image Recognition Models

CNNs have significantly impacted the realm of image recognition models, bringing about a paradigm shift and enabling the development of cutting-edge approaches. Here's a closer look at their profound influence:

Revolutionizing Image Recognition


The introduction of CNNs has transformed the landscape of image recognition, pushing the boundaries of what was previously achievable. By leveraging convolutional layers for feature extraction and hierarchical learning, CNNs have enabled the creation of sophisticated models capable of accurately classifying and identifying visual content.

Recent Advancements in Image Recognition

In recent years, significant advancements in image recognition have been realized through the application of CNN-based methodologies. Two notable approaches that have garnered attention are:

Transfer Learning: This approach involves utilizing pre-trained CNN models as a starting point for new image recognition tasks. By leveraging knowledge gained from large-scale labeled datasets, transfer learning enables the adaptation and fine-tuning of existing CNN architectures to suit specific recognition objectives. This method has proven particularly valuable in scenarios where labeled training data is limited, as it allows for the efficient reutilization of learned features.

Attention Mechanisms: The integration of attention mechanisms within CNN architectures has emerged as a powerful technique for enhancing image recognition capabilities. By dynamically focusing on relevant regions within an image, these mechanisms enable CNNs to selectively attend to crucial visual elements, thereby improving their capacity to discern intricate details and patterns. This targeted approach contributes to heightened accuracy and robustness in image recognition tasks.
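One simple form of spatial attention can be sketched as "score every location, softmax the scores, take a weighted average". This is an illustrative assumption, not a specific published architecture: the feature vectors (one per image location) and the query vector, which a real model would learn, are hypothetical values.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def attention_pool(features, query):
    """features: one feature vector per spatial location (an H*W grid, flattened).
    query: a vector a model would normally learn (hypothetical here).
    Returns the attention weights and the attention-weighted summary vector."""
    scores = [sum(f * q for f, q in zip(vec, query)) for vec in features]
    weights = softmax(scores)  # one weight per location, summing to 1
    dim = len(features[0])
    summary = [sum(w * vec[d] for w, vec in zip(weights, features)) for d in range(dim)]
    return weights, summary

features = [
    [0.1, 0.0],  # background
    [0.0, 0.2],  # background
    [3.0, 2.5],  # strongly activated region, e.g. the object
    [0.2, 0.1],  # background
]
query = [1.0, 1.0]
weights, summary = attention_pool(features, query)
print([round(w, 3) for w in weights])
print([round(s, 3) for s in summary])
```

Unlike average pooling, which would give every location a weight of 0.25, the attention weights concentrate on the strongly activated region, so the summary vector is dominated by the object rather than the background.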

The utilization of transfer learning and attention mechanisms underscores the ongoing evolution and refinement of image recognition models, demonstrating the adaptability and versatility inherent in CNN-based strategies.

As we continue to witness strides in image recognition propelled by CNN innovations, it becomes evident that these developments are instrumental in shaping the future trajectory of visual analysis and classification.

FAQ (Frequently Asked Questions):

1. How does transfer learning facilitate the reutilization of learned features in CNN architectures?

Transfer learning allows for the transfer of knowledge gained from pre-training on a large dataset to a target task with limited labeled data. By freezing and utilizing the lower layers of a pre-trained CNN, important low-level features can be leveraged for the new task, while only fine-tuning higher layers to adapt to the specific recognition objectives.

2. How do attention mechanisms enhance image recognition capabilities?

Attention mechanisms enable CNNs to focus on relevant regions within an image, improving their ability to discern intricate details and patterns. This selective attention helps CNNs prioritize crucial visual elements, contributing to heightened accuracy and robustness in image recognition tasks.

3. What role do these advancements play in the future of visual analysis and classification?

The integration of transfer learning and attention mechanisms highlights the adaptability and versatility of CNN-based strategies. These advancements continue to shape the trajectory of image recognition, offering promising avenues for improving accuracy, efficiency, and interpretability in complex visual analysis tasks.

Conclusion

In conclusion, convolutional neural networks (CNNs) have revolutionized the field of image recognition and opened up new possibilities for state-of-the-art models. Through their introduction, image-related tasks have seen significant advancements and improved performance.

Readers are encouraged to explore and experiment with CNNs in their own deep learning projects or research. The potential for innovation in image-related domains is vast, and CNNs provide a powerful tool to tackle complex problems.

To get started with CNNs, consider the following steps:

Learn the fundamentals: Familiarize yourself with the key components of CNNs, including convolutional layers, pooling layers, and fully-connected layers. Understand their roles in feature extraction, dimensionality reduction, and classification.

Gain practical experience: Implement CNN architectures using popular deep learning frameworks such as TensorFlow or PyTorch. Work on image classification tasks and experiment with different network architectures to understand their strengths and weaknesses.

Stay updated: Keep up with the latest advancements in CNN research and explore new approaches like transfer learning and attention mechanisms. These techniques have shown promising results in improving image recognition accuracy.

Remember, CNNs are not limited to image classification. They can also be applied to other tasks such as object detection, semantic segmentation, and style transfer.

By harnessing the power of convolutional neural networks, you can contribute to the ongoing progress in computer vision and make significant strides in solving real-world challenges related to image analysis.

So go ahead and dive into the world of CNNs - unlock the potential of deep learning for image-related tasks and drive innovation forward!

#artificial intelligence#convolutional neural network#machine learning#Deep Learning#CNNs#Neural Networks

0 notes

Text

Lock me up and throw away the key

1 note


Text

Many different ways AI can help to detect counterfeit products

Merchandise which might be counterfeit have grown to be a severe problem for organizations at some point of, ensuing in financial losses, putting customer safety at danger, and undermining emblem believe. artificial intelligence (AI) is growing as a strong weapon inside the armoury towards counterfeit merchandise to battle this increasing danger. AI is becoming useful in several fields for recognizing faux items because to its ability to analyse substantial volumes of information and recognize complex styles.

In this newsletter examines the diverse ways AI is getting used to deal with this trouble and safeguard both companies and customers.

reputation and Authentication of pics

Verifying the legitimacy of things visually may be time-eating and error prone. via evaluating photos of true and suspect items, AI-powered photo reputation technology can be used to perceive counterfeits quickly and exactly. Convolutional Neural Networks (CNNs), a class of AI version, thrive on this area by recognizing traits of genuine gadgets and identifying differences in replicas that are fakes.

Data Analysis for Supply Chain Transparency

AI algorithms can analyze in-depth supply chain data to track a product's path from manufacturer to consumer. Any deviations, delays, or undocumented movements that suggest counterfeit goods may be entering the market can raise red flags. Combining blockchain technology with AI helps ensure transparent, tamper-proof records, which increases trust and accountability across the supply chain.
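
Tamper-proof records rest on hash chaining. Each record's hash also covers the previous record's hash, so editing any earlier entry breaks every link after it. The sketch below demonstrates only that property, using Python's standard library. It is not a blockchain, since there is no distributed ledger and no consensus. The event fields and function names are hypothetical.

```python
from __future__ import annotations

import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(record: dict, prev_hash: str) -> str:
    """Hash a record together with the previous hash, linking it to all earlier entries."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[dict]) -> list[dict]:
    chain, prev = [], GENESIS
    for rec in records:
        h = record_hash(rec, prev)
        chain.append({"record": dict(rec), "prev": prev, "hash": h})  # copy the record
        prev = h
    return chain


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash; any edited record or broken link makes this False."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or record_hash(entry["record"], prev) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


# Hypothetical custody events for one product.
events = [
    {"sku": "A-100", "step": "manufactured", "site": "factory"},
    {"sku": "A-100", "step": "shipped", "site": "warehouse"},
    {"sku": "A-100", "step": "received", "site": "retailer"},
]
chain = build_chain(events)
print(verify_chain(chain))               # True
chain[1]["record"]["site"] = "unknown"   # tamper with the middle entry
print(verify_chain(chain))               # False
```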

Natural Language Processing (NLP) for Review Analysis

NLP, a subfield of AI, can scan user reviews, forum conversations, and social media posts for mentions of fake products. This gives companies real-time insight into emerging counterfeiting patterns, allowing them to take proactive steps to address these issues quickly.
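
Production NLP systems typically rely on trained classifiers. A keyword baseline still shows the basic flow: scan posts and surface the ones that mention fakes. The term list and sample posts below are hypothetical examples, not taken from the article.

```python
import re

# Hypothetical watch-list of phrases; a production system would use a trained classifier.
COUNTERFEIT_TERMS = ["fake", "counterfeit", "knockoff", "replica", "not genuine", "not authentic"]
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in COUNTERFEIT_TERMS) + r")\b",
    re.IGNORECASE,
)


def flag_posts(posts):
    """Return (post, matched_terms) for every post that mentions a counterfeit-related term."""
    flagged = []
    for post in posts:
        hits = sorted({match.lower() for match in PATTERN.findall(post)})
        if hits:
            flagged.append((post, hits))
    return flagged


posts = [
    "Arrived fast, great quality",
    "Pretty sure this is a KNOCKOFF, the logo is wrong",
    "Seller admitted it's a replica, not authentic",
]
for post, terms in flag_posts(posts):
    print(terms, "->", post)
```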

Verification of Documents Using Machine Learning

To make their items look genuine, counterfeiters often create false documentation. AI-powered machine learning models can examine documents such as warranties, invoices, and certificates of authenticity, looking for irregularities that could point to fraud.

Integration of IoT and RFID

Internet of Things (IoT) devices and RFID tags can be built into products to enable real-time tracking and authentication. AI can process the data from these various sources to detect inconsistencies or unauthorized access, which strengthens an anti-counterfeiting strategy.
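
For RFID data, one common heuristic is an "impossible travel" check. This is an illustrative assumption, not the article's method. If the same tag is read in two places faster than any real shipment could move, the tag may have been cloned. The tag reads, coordinates, and the 900 km/h speed limit below are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class TagRead:
    tag_id: str
    hour: float  # time of the read, in hours from an arbitrary start
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def suspicious_reads(reads: list[TagRead], max_speed_kmh: float = 900.0):
    """Flag consecutive reads of one tag that imply implausibly fast travel."""
    by_tag: dict[str, list[TagRead]] = {}
    for read in sorted(reads, key=lambda r: (r.tag_id, r.hour)):
        by_tag.setdefault(read.tag_id, []).append(read)

    flagged = []
    for tag_id, seq in by_tag.items():
        for prev, curr in zip(seq, seq[1:]):
            distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
            elapsed = curr.hour - prev.hour
            too_fast = (elapsed <= 0 and distance > 0) or (
                elapsed > 0 and distance / elapsed > max_speed_kmh
            )
            if too_fast:
                flagged.append((tag_id, prev, curr))
    return flagged


reads = [
    TagRead("T1", 0.0, 48.8566, 2.3522),    # Paris
    TagRead("T1", 1.0, 35.6762, 139.6503),  # Tokyo, one hour later
    TagRead("T2", 0.0, 48.8566, 2.3522),    # Paris
    TagRead("T2", 5.0, 45.7640, 4.8357),    # Lyon, five hours later
]
for tag_id, prev, curr in suspicious_reads(reads):
    print(f"{tag_id}: reads at hour {prev.hour} and {curr.hour} are too far apart")
```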

Read the complete article at https://www.ajath.com/the-many-different-ways-ai-can-help-to-detect-counterfeit-products/

#artificial intelligence#AI-based Authentication#Image Recognition#Pattern Analysis#Deep Learning#Data Analysis#Blockchain Integration#Convolutional Neural Networks (CNN)#Predictive Analytics

1 note

Text

DATA SCIENCE WITH PYTHON

Data science is a multidisciplinary field that uses scientific methods, algorithms, and statistical models to analyze structured and unstructured data, uncover patterns, make predictions, and support decision-making processes.

Data science leverages various techniques and tools from mathematics, statistics, computer science, and domain-specific knowledge to extract value from data. These techniques include regression analysis, classification, clustering, natural language processing (NLP), deep learning, data visualization, and more.

Data scientists often use programming languages such as Python, along with its associated libraries and tools, to perform data analysis, extract insights, and build predictive models.

Python has gained significant popularity in the data science community thanks to its simplicity, its versatility, and its extensive ecosystem of libraries designed specifically for data manipulation, visualization, and machine learning. Libraries and frameworks such as pandas, NumPy, scikit-learn, TensorFlow, and PyTorch help data scientists implement their workflows effectively.

Data science finds applications in various industries, including finance, healthcare, marketing, e-commerce, social media, manufacturing, and more. It plays a crucial role in solving complex problems, driving innovation, making data-driven decisions, and improving business performance.

Data science is already helping the airline industry. It optimizes flight routes, helps decide whether to schedule direct or connecting flights, forecasts flight delays, and tailors promotional offers to individual customers.
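
Forecasting flight delays can start from something as simple as fitting a trend to past data. The sketch below fits an ordinary least-squares line to a hypothetical series of average daily delays and extends it one step ahead. Real airline systems use far richer models and inputs, so treat this only as an illustration of the idea. The function names are hypothetical.

```python
from __future__ import annotations


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept (needs 2+ distinct xs)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast_next(history: list[float], steps_ahead: int = 1) -> float:
    """Extend the linear trend of an evenly spaced series `steps_ahead` steps past its end."""
    xs = [float(i) for i in range(len(history))]
    slope, intercept = fit_line(xs, history)
    return intercept + slope * (len(history) - 1 + steps_ahead)


# Hypothetical average departure delay (minutes) on one route over four days.
delays = [10.0, 12.0, 14.0, 16.0]
print(forecast_next(delays))  # 18.0
```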

APPLICATIONS OF DATA SCIENCE

Product recommendations: insights are drawn from customers' browsing history and purchase history.

Forecasting: data science makes predictions from data. Weather forecasting is one example.

Decision-making: data science also aids effective decision-making. Self-driving cars are a prime example.

Fraud detection: data science helps detect fraud. COVID-19 vaccination is one example.
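
Item co-occurrence counting is one simple way to illustrate the first application in the list, recommendations drawn from browsing and purchase history. The idea is to suggest the items that most often appear together in other customers' histories. This is one basic technique among many, and the product names below are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from itertools import combinations


def co_occurrence(histories: list[list[str]]) -> dict[str, Counter]:
    """Count how often each pair of items shows up in the same customer's history."""
    counts: dict[str, Counter] = {}
    for items in histories:
        # set() ignores repeat views/purchases; sorted() makes pair order deterministic
        for a, b in combinations(sorted(set(items)), 2):
            counts.setdefault(a, Counter())[b] += 1
            counts.setdefault(b, Counter())[a] += 1
    return counts


def recommend(item: str, counts: dict[str, Counter], k: int = 2) -> list[str]:
    """Return up to k items most often seen alongside `item`."""
    return [other for other, _ in counts.get(item, Counter()).most_common(k)]


histories = [
    ["laptop", "mouse", "bag"],
    ["laptop", "mouse"],
    ["laptop", "charger"],
    ["mouse", "mouse pad"],
]
counts = co_occurrence(histories)
print(recommend("laptop", counts, k=1))  # ['mouse']
```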

Several Benefits Of Taking Data Science Training:

Skill Development: A data science course provides structured learning and hands-on experience in various data science techniques, tools, and methodologies.

Career Opportunities: Data science is a rapidly growing field with a high demand for skilled professionals. By completing a data science course, you enhance your employability and open up a wide range of career opportunities.

In-Demand Skills: Data science skills are in high demand across industries. By acquiring proficiency in data analysis, machine learning, and data visualization, you position yourself as a valuable asset to organizations seeking to extract insights from large volumes of data to make data-driven decisions.

Problem-Solving Abilities: Data science courses equip you with a problem-solving mindset. You learn how to approach complex business problems, identify relevant data, analyze it, and derive actionable insights.

Hands-on Experience: Many data science courses offer hands-on projects and case studies that allow you to apply the concepts you learn. Working on real-world datasets and solving practical problems helps you gain practical experience and build a portfolio, which can be valuable when applying for data science positions.

Networking Opportunities: Joining a data science course gives you access to a community of like-minded individuals, including instructors, industry experts, and fellow students. Networking with professionals in the field can lead to valuable connections, mentorship, and potential job opportunities.

Continuous Learning: Data science is a rapidly evolving field, with new techniques, algorithms, and tools emerging regularly, so structured training helps you keep your skills current.

Learn Data Science with Python with Nucot to build a good and promising career.

#data science#python#datasciencewithpython#data#programmer#ML#computer science#artificial intelligence#big data#machine learning#learn data science#data science training#data analytics#entrepreneur#data scientists#deep learning#big data analytics#neural networks#natural language processing#convolutional neural network#software engineer#computer vision

0 notes

Text

A Convolutional Neural Network (CNN) is a type of deep learning architecture that uses multiple layers of Convolution and Pooling to analyze and learn complex relationships in images and other grid-structured data. The Convolution layer applies filters to small sections of the input, while the Pooling layer reduces the spatial size of the feature maps generated by Convolution. The final layers of a CNN typically include fully connected layers that make predictions based on the learned features. CNNs are widely used in image classification, object detection, and other computer vision tasks.
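
Here is a minimal pure-Python sketch of the convolution and pooling steps, run on a tiny hypothetical image. Like most CNN libraries, it computes a cross-correlation, meaning the kernel is not flipped. It uses no padding and a stride of 1, then applies ReLU before 2 x 2 max pooling. The fully connected layers that would follow are omitted, and the function names are illustrative.

```python
from __future__ import annotations


def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: list[list[float]]) -> list[list[float]]:
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap: list[list[float]], size: int = 2) -> list[list[float]]:
    """Non-overlapping max pooling; rows/columns that don't fill a whole window are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# 5x5 image: dark on the left, bright from column 2 onward (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # responds where brightness rises left-to-right

feature_map = relu(conv2d(image, vertical_edge))  # 4x4, each row [0, 2, 0, 0]
pooled = max_pool(feature_map)                    # 2x2 summary of the feature map
print(pooled)  # [[2, 0], [2, 0]]
```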

0 notes

Text

Creating a number predictor with SpriteKit and CoreML

I started playing around with a proof-of-concept for a puzzle system for Indexing Your Heart over the past day or so. I wanted a validation system lenient enough to recognize what the player drew without penalizing them for being a pixel off. That led me to conclude that a machine learning-based solution might be the answer.

So, I played with the Core ML model for the MNIST digit dataset and created this proof-of-concept that predicts the number a player draws in the box. Surprisingly, the system works pretty well and efficiently. Results come back almost instantaneously, and it was easy to create a path and take a screenshot of the game scene.
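
MNIST digits are 28 x 28 grayscale images with light strokes on a dark background. A larger drawing therefore generally has to be shrunk before a model trained on MNIST can classify it. The post does not say how the screenshot is resized. This framework-free Python sketch shows one option, block averaging. The 280 x 280 canvas and the function name are assumptions, not the author's code.

```python
from __future__ import annotations


def downsample(canvas: list[list[float]], out_size: int = 28) -> list[list[float]]:
    """Average-pool a square grayscale canvas (0.0 = background, 1.0 = ink) to out_size x out_size."""
    side = len(canvas)
    if side % out_size != 0:
        raise ValueError("canvas side must be a multiple of out_size")
    block = side // out_size
    area = block * block
    return [
        [
            sum(
                canvas[i * block + di][j * block + dj]
                for di in range(block)
                for dj in range(block)
            ) / area
            for j in range(out_size)
        ]
        for i in range(out_size)
    ]


# Hypothetical 280 x 280 drawing: a vertical stroke in columns 130-149.
canvas = [[1.0 if 130 <= x < 150 else 0.0 for x in range(280)] for _ in range(280)]
grid = downsample(canvas)
print(len(grid), len(grid[0]), grid[0][13], grid[0][12])  # 28 28 1.0 0.0
```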

If any of this fancies you, I encourage you to take a look at the source code on GitHub. I think there's some value here that I can explore in Indexing Your Heart.

#swift#rambles#game development#spritekit#machinelearning#convolutional neural networks#microblog#development#ios 16#macos ventura

1 note

Text

the thing about resi is that i think the ppl who do like it just fine are probably more than the ppl who dislike it -- there's just a viciously vocal minority of shitty video game bros completely drowning out any positivity or even neutrality.

and the absolute fucking kicker is that this goes all the way to newspaper reviewers who also didn't like it bc it was nothing like the resi games but are professional enough not to say outright that they don't like when things are not about them.

#my blood boils when i think about how we may not get a s2 bc of whiny entitled assholes who can't deal with things being different#you know what a 20+ year old franchise needs? an influx of new fans. like that's just a standard thing that aging franchises need#it's still really popular but ppl have dropped off as things have gotten more convoluted with the plot and there's a high barrier to entry#things that andrew dabb is uniquely qualified to do: making big change-ups to old media properties#not that we got the size ending that he teased thanks to network interference but that possibility was a p big change#resi

0 notes

Text

Basic Convolutional Neural Network Architectures

There are many convolutional neural network (CNN) architectures. They differ in how the layers are structured, which elements each layer uses, and how the network as a whole is designed. These choices affect the model's speed and accuracy across the tasks it performs.
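
Layer structure translates directly into model size and cost. Take a square k x k kernel with stride s and padding p on an n x n input. The conv layer's output side is floor((n + 2p - k) / s) + 1. Its parameter count is (k * k * c_in + 1) * c_out, where the +1 is the bias for each output channel. The sketch below applies those formulas. As an example, it compares one 5 x 5 layer with two stacked 3 x 3 layers. Both cover the same 5 x 5 receptive field, a trade-off that VGG-style architectures exploit. The function names are illustrative.

```python
def conv_output_size(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial size of a conv layer's output along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1


def conv_params(kernel: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Number of learnable weights in a square-kernel conv layer."""
    return (kernel * kernel * c_in + (1 if bias else 0)) * c_out


# One 5x5 layer vs. two stacked 3x3 layers, 64 channels in and out.
single_5x5 = conv_params(5, 64, 64)
stacked_3x3 = 2 * conv_params(3, 64, 64)
print(single_5x5, stacked_3x3)  # 102464 73856

print(conv_output_size(224, kernel=3, padding=1))            # 224
print(conv_output_size(224, kernel=3, stride=2, padding=1))  # 112
```

Fewer parameters usually means less computation and memory. Whether accuracy holds up depends on the rest of the architecture and on training.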

The following are some of the common CNN architectures. Here, “common” refers to pre-trained weights which…

0 notes

Text

HyperTransformer: An Example of a Self-Attention Mechanism For Supervised Learning

#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes

Text

In this video, we'll show you how to use TensorFlow and Mobilenet to train an image classification model through transfer learning.

We'll guide you through the process of preprocessing image data, fine-tuning a pre-trained Mobilenet model, and evaluating its performance using validation data.

The link to the video tutorial is here: https://youtu.be/xsBm_DTSbB0

I also shared the Python code in the video description.

Enjoy,

Eran

#TensorFlow #Mobilenet #ImageClassification #TransferLearning #Python #DeepLearning #MachineLearning #ArtificialIntelligence #PretrainedModels #ImageRecognition #OpenCV #ComputerVision #Cnn

1 note