#uc Deb

Text

Constantly consistently rotating the unprepared casters arc 1 and 7 characters in my mind. Except it’s not like a microwave, it’s like one of those carnival rides that goes a mile a minute. They all have whiplash now.

#unprepared casters#uc arc 1#uc arc 7#wizards off the coast#dragons in dungeons#thavius#sir mister person#uc deb#sk-73#hope lovejoy#loquacious lily

37 notes


Text

Spoilers for future writing, but I am writing an unprepared casters (dragons in dungeons crew) high school au. Would anyone be overly upset if I made it a polycule fic? I'm thinking Deb/Skeventy/Hope/Thavius, but I won't do it if I get a lot of backlash for it.

17 notes


Text

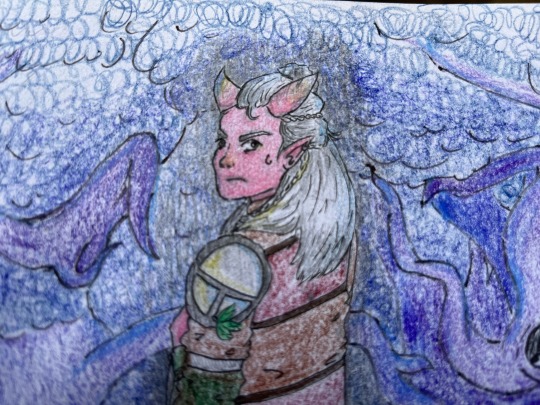

Deb in the feywild:

Yep you guessed it: this is fanart of some of the backstory Deb/Darreb’soloth had in Arc 7 of unprepared casters (as in what they did between arc 1 and arc 7 of the podcast). I used Grace’s art for Deb/Darreb’soloth and Julia’s art for the background to arc 9, which takes place in the feywild.

Closeups:

#unprepared casters#my art#uc#unprepared casters fanart#uc arc 7#Deb#uc Deb#darreb’soloth#fey wild#Deb unprepared casters#unprepared casters Deb#wizards off the coast#dnd#d&d#dungeons and dragons#uc-beepboop

29 notes


Text

Good morning to delusional "knights", tall rambly southern horse girls, soul-throwers, decrepit old men library robots and tiny midwestern tiefling bakers

#unprepared casters#dragons in dungeons#uc arc 1#wizards off the coast#uc arc 7#ah yes the crew that started it all!!#sir mister person#hope lovejoy#thavius#sk 73#skeventy#deb#deb unprepared casters#darrebsoloth#i can never seem to spell it right#i think i'm only missing arc 2 and 4?#i love how 'tall rambly horse girl' describes BOTH of jenny's uc characters lmao#the southern part only applies to hope tho

30 notes


Text

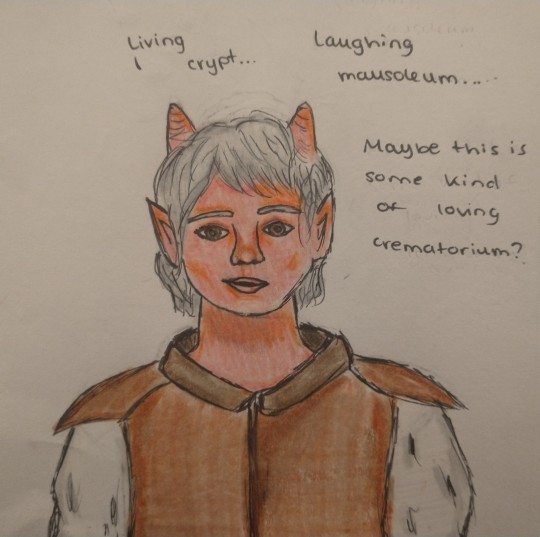

I guess i never finished my project of drawing funny lines from each arc

I've already done arc 1 but fuck it here's another one

#unprepared casters#uc fanart#unprepared casters fanart#deb#unprepared casters deb#uc arc 1#dragons in dungeons#art#my art#traditional art#this might not be the exact quote but it's been a while since i listened to it okayyyyy#ignore the fact that i forgot to give them eyelashes on their right eye

15 notes


Text

Info was taken from “Relationships” within the Unprepared Casters (Web Series) tag on AO3.

Happy Valentine's Day! (Is it a bad idea to initiate ship wars? Maybe. So if things get rough, I WILL CANCEL. THIS IS YOUR WARNING)

If your ship hasn’t/won’t be in the polls, consider messaging me in my askbox for it OR even better, filling out this google form to have your ship be in the WILDCARD round(s). It’s appreciated!

#unprepared casters poll#poll#tumblr poll#uc tumblr poll#uc tumblr poll 2023#uc valentines tumblr poll#uc valentines poll#poll 1#valentines poll 1#uc valentines shipping poll#unprepared casters

37 notes


Text

The US government should create a new body to regulate artificial intelligence—and restrict work on language models like OpenAI’s GPT-4 to companies granted licenses to do so. That’s the recommendation of a bipartisan duo of senators, Democrat Richard Blumenthal and Republican Josh Hawley, who launched a legislative framework yesterday to serve as a blueprint for future laws and influence other bills before Congress.

Under the proposal, developing face recognition and other “high risk” applications of AI would also require a government license. To obtain one, companies would have to test AI models for potential harm before deployment, disclose instances when things go wrong after launch, and allow audits of AI models by an independent third party.

The framework also proposes that companies should publicly disclose details of the training data used to create an AI model and that people harmed by AI get a right to bring the company that created it to court.

The senators’ suggestions could be influential in the days and weeks ahead as debates intensify in Washington over how to regulate AI. Early next week, Blumenthal and Hawley will oversee a Senate subcommittee hearing about how to meaningfully hold businesses and governments accountable when they deploy AI systems that cause people harm or violate their rights. Microsoft president Brad Smith and the chief scientist of chipmaker Nvidia, William Dally, are due to testify.

A day later, senator Chuck Schumer will host the first in a series of meetings to discuss how to regulate AI, a challenge Schumer has referred to as “one of the most difficult things we’ve ever undertaken.” Tech executives with an interest in AI, including Mark Zuckerberg, Elon Musk, and the CEOs of Google, Microsoft, and Nvidia, make up about half the almost-two-dozen-strong guest list. Other attendees represent those likely to be subjected to AI algorithms and include trade union presidents from the Writers Guild and union federation AFL-CIO, and researchers who work on preventing AI from trampling human rights, including UC Berkeley’s Deb Raji and Humane Intelligence CEO and Twitter’s former ethical AI lead Rumman Chowdhury.

Anna Lenhart, who previously led an AI ethics initiative at IBM and is now a PhD candidate at the University of Maryland, says the senators’ legislative framework is a welcome sight after years of AI experts appearing in Congress to explain how and why AI should be regulated.

“It's really refreshing to see them take this on and not wait for a series of insight forums or a commission that's going to spend two years and talk to a bunch of experts to essentially create this same list,” Lenhart says.

But she’s unsure how any new AI oversight body could host the broad range of technical and legal knowledge required to oversee technology used in many areas from self-driving cars to health care to housing. “That’s where I get a bit stuck on the licensing regime idea,” Lenhart says.

The idea of using licenses to restrict who can develop powerful AI systems has gained traction in both industry and Congress. OpenAI CEO Sam Altman suggested licensing for AI developers during testimony before the Senate in May, a regulatory solution that might arguably help his company maintain its leading position. A bill proposed last month by senators Lindsey Graham and Elizabeth Warren would also require tech companies to secure a government AI license but only covers digital platforms above a certain size.

Lenhart is not the only AI or policy expert skeptical of government licensing for AI development. In May the idea drew criticism from both libertarian-leaning political campaign group Americans for Prosperity, which fears it would stifle innovation, and from the digital rights nonprofit Electronic Frontier Foundation, which warns of industry capture by companies with money or influential connections. Perhaps in response, the framework unveiled yesterday recommends strong conflict of interest rules for staff at the AI oversight body.

Blumenthal and Hawley’s new framework for future AI regulation leaves some questions unanswered. It's not yet clear if oversight of AI would come from a newly-created federal agency or a group inside an existing federal agency. Nor have the senators specified what criteria would be used to determine if a certain use case is defined as high risk and requires a license to develop.

Michael Khoo, climate disinformation program director at environmental nonprofit Friends of the Earth, says the new proposal looks like a good first step but that more details are necessary to properly evaluate its ideas. His organization is part of a coalition of environmental and tech accountability organizations that, via a letter to Schumer and a mobile billboard due to drive circles around Congress next week, are calling on lawmakers to prevent energy-intensive AI projects from making climate change worse.

Khoo agrees with the legislative framework’s call for documentation and public disclosure of adverse impacts, but says lawmakers shouldn’t let industry define what’s deemed harmful. He also wants members of Congress to demand businesses disclose how much energy it takes to train and deploy AI systems and consider the risk of accelerating the spread of misinformation when weighing the impact of AI models.

The legislative framework shows Congress considering a stricter approach to AI regulation than taken so far by the federal government, which has launched a voluntary risk-management framework and nonbinding AI bill of rights. The White House struck a voluntary agreement in July with eight major AI companies, including Google, Microsoft, and OpenAI, but also promised that firmer rules are coming. At a briefing on the AI company compact, White House special adviser for AI Ben Buchanan said keeping society safe from AI harms will require legislation.

6 notes


Text

literally cannot stop thinking about how much i love gus rachels RPing arguments. sir mister person and deb? impeccable. laz and nephilla? heart-wrenching. isadora and sybilla from todays ep? a masterpiece.

i usually hate when characters argue in dnd podcasts but it’s always so good on UC

71 notes


Text

UC What If?-s I want to see

Arc 1 What if Deb told Sir Mister Person that he was not a knight

Arc 2 What if Sasha had not managed to finish the replica in time

Arc 2 What if they had accidentally disabled magic

Arc 2 What if Gem Lovejoy had been waiting for them on that roof, preferably together with Franklin by her side

Arc 3 What if Diarmad had died in that sewer (Diarmad I love you but that would be so interesting)

Arc 3 What if nobody was allowed to have Forcecage

Arc 4 What if Russ had died there

Arc 5 What if people had lost their memories: Lily, Phoenix, any party member (my apologies to James and Owen specifically but I love Angst a bit too much for my own good)

Arc 6 let Gus re-do the finale like he would do it now (I personally, from a storytelling perspective, love the finale, but I understand the issues he has with it and want to see what he would change and how that would work)

Arc 7 What if after Arc 1 they had stayed together

Arc 7 Episode 2 combat with Sir Mister poisoned (from the degunking alcohol) and Deb exhausted (from not going to sleep the night before)

Arc 7 Episode 2 combat where the dragon turtle is compelled to duel Sir Mister

Literally every possible scenario from Arc 8, including: what if Sybilla's arm had not been an issue? What if Helga had not come to her in time (when she was banished)? What if Annie had not left? What if Sir Up had stood by her side after all?

Arc 9 What if Julian could not mindlink

Arc 9 Episode 7 What if Render convinced Juniper

Arc 10 Episode 8 What if they read about what was happening

Arc 10 What if people actually liked Tiffany

20 notes


Text

Senators Call for Government Oversight and Licensing of ChatGPT-Level AI

A bipartisan pair of senators, Richard Blumenthal, a Democrat, and Josh Hawley, a Republican, have proposed that a new regulatory body be established by the US government to oversee artificial intelligence (AI). This body would also limit the development of language models, such as OpenAI’s GPT-4, to licensed companies. The senators’ proposal, which was unveiled as a legislative framework, is intended to guide future laws and influence pending legislation.

The proposed framework suggests that the development of facial recognition and other high-risk AI applications should require a government license. Companies seeking such a license would need to conduct pre-deployment tests on AI models for potential harm, report post-launch issues, and allow independent third-party audits of their AI models. The framework also calls for companies to publicly disclose the training data used to develop an AI model. Moreover, it proposes that individuals adversely affected by AI should have the legal right to sue the company responsible for its creation.

As discussions in Washington over AI regulation intensify, the senators’ proposal could have significant impact. In the coming week, Blumenthal and Hawley will preside over a Senate subcommittee hearing focused on holding corporations and governments accountable for the deployment of harmful or rights-violating AI systems. Expected to testify at the hearing are Microsoft President Brad Smith and Nvidia’s chief scientist, William Dally.

The following day, Senator Chuck Schumer will convene the first in a series of meetings to explore AI regulation, a task Schumer has described as “one of the most difficult things we’ve ever undertaken.” Tech executives with a vested interest in AI, such as Mark Zuckerberg, Elon Musk, and the CEOs of Google, Microsoft, and Nvidia, comprise about half of the nearly 24-person guest list. Other attendees include trade union presidents from the Writers Guild and AFL-CIO federation, as well as researchers dedicated to preventing AI from infringing on human rights, such as Deb Raji from UC Berkeley and Rumman Chowdhury, the CEO of Humane Intelligence and former ethical AI lead at Twitter.

Anna Lenhart, a former AI ethics initiative leader at IBM and current PhD candidate at the University of Maryland, views the senators’ legislative framework as a positive development after years of AI experts testifying before Congress on the need for AI regulation. However, Lenhart is uncertain about how a new AI oversight body could encompass the wide range of technical and legal expertise necessary to regulate technology used in sectors as diverse as autonomous vehicles, healthcare, and housing.

The concept of using licenses to limit who can develop powerful AI systems has gained popularity in both the industry and Congress. OpenAI CEO Sam Altman suggested AI developer licensing during his Senate testimony in May, a regulatory approach that could potentially benefit his company. A bill introduced last month by Senators Lindsey Graham and Elizabeth Warren also calls for tech companies to obtain a government AI license, but it only applies to digital platforms of a certain size.

However, not everyone in the AI or policy field supports government licensing for AI development. The proposal has been criticized by the libertarian-leaning political campaign group Americans for Prosperity, which worries it could hamper innovation, and by the digital rights nonprofit Electronic Frontier Foundation, which warns of potential industry capture by wealthy or influential companies. Perhaps in response to these concerns, the legislative framework proposed by Blumenthal and Hawley recommends robust conflict of interest rules for the new AI regulatory body.

The proposed AI regulatory framework by Senators Blumenthal and Hawley leaves a few questions unresolved. It is still undetermined whether AI supervision would be handled by a newly established federal agency or a department within an existing one. The senators have not yet defined the standards that would be used to identify high-risk use cases that require a development license.

Michael Khoo, the director of the climate disinformation program at the environmental nonprofit Friends of the Earth, suggests that the new proposal appears to be a promising initial move, but that additional details are required to evaluate its ideas adequately. His organization is part of a group of environmental and tech accountability organizations that are appealing to lawmakers, through a letter to Schumer and a mobile billboard scheduled to circle Congress in the upcoming week, to prevent energy-intensive AI projects from exacerbating climate change.

Khoo concurs with the legislative framework’s insistence on documentation and public disclosure of negative impacts, but argues that the industry should not be allowed to determine what is considered detrimental. He also encourages Congress members to require businesses to disclose the energy consumption involved in training and deploying AI systems, and to consider the risk of misinformation proliferation when assessing the impact of AI models.

The legislative framework indicates that Congress is contemplating a more stringent approach to AI regulation compared to the federal government’s previous efforts, which included a voluntary risk-management framework and a non-binding AI bill of rights. In July, the White House reached a voluntary agreement with eight major AI companies, including Google, Microsoft, and OpenAI, but also assured that stricter regulations are on the horizon. During a briefing on the AI company compact, Ben Buchanan, the White House special adviser for AI, stated that legislation is necessary to protect society from potential AI harms.

https://www.infradapt.com/news/senators-call-for-government-oversight-and-licensing-of-chatgpt/

1 note


Text

Fwd: Graduate position: NorthernArizonaU.PhylogenomicsBiogeography

Begin forwarded message:

> From: [email protected]

> Subject: Graduate position: NorthernArizonaU.PhylogenomicsBiogeography

> Date: 23 September 2022 at 09:47:38 BST

> To: [email protected]

>

>

> The Northern Arizona Insect Lab of Systematics (NAILS) at Northern

> Arizona University (NAU) (https://ift.tt/9sOfeyJ) is recruiting

> two Ph.D. students to begin Fall 2023. These positions come with three

> full years of Research Assistantship support. Funding for these positions

> comes from a recently awarded NSF grant (NSF DEB# 2208620) to study the

> phylogeny and biogeography of the tiger beetle tribe Manticorini. One

> of the positions will be focused on the genus Omus, the night-stalking

> tiger beetles, and the other on Amblycheila, the giant tiger beetles

> of North America. Both projects will involve phylogenomic analysis of

> ultraconserved elements (UCEs) to look at population-level differences

> for species delimitation and reconstructing historical biogeography. They

> will also both involve extensive fieldwork.

>

> If you are interested in investigating (1) a potential ring species

> around the Central Valley of California; (2) biogeography of the Coastal

> Ranges of California and the Cascades of Oregon and Washington; and (3)

> enjoy a major taxonomic challenge, the Omus position is for you!

>

> If you are interested in investigating (1) niche reconstruction and the

> role of climate change in driving species distributions historically

> and in the future; (2) biogeography of the Madrean Sky Islands of

> southeastern Arizona; and (3) enjoy collecting throughout the Southwest,

> the Amblycheila position is for you!

>

> --Required qualifications--

>

> 1. A Bachelor’s degree in biology or a subfield of biology

> 2. Experience conducting fieldwork

> 3. Some background knowledge in biological systematics (e.g.,

> classes or prior research)

>

> --Preferred qualifications--

>

> 1. A Master’s degree in biology, or a subfield of biology --

> particularly entomology

> 2. Experience with molecular lab work – particularly DNA

> extractions and preparing samples for high-throughput sequencing

> 3. Experience conducting fieldwork in the focal regions for

> the project

>

> Applications for admission to graduate school for Fall 2023 are due

> December 2nd 2022 for full priority and February 15th 2023 at the

> latest. NAU has a set stipend for all Ph.D. positions that is currently

> $20,000 on a 9 month basis. The positions advertised here come with

> an additional $8,000 of funding for three summer semesters bringing the

> total stipend to $28,000 per year for three years. Teaching Assistantships

> are routinely available to extend support.

>

> If you are interested in applying, contact Grey Gustafson via email

> at [email protected].

>

> Please provide in the initial email the subject of “Ph.D. student

> application” and

>

> 1. A one-page statement of interest that summarizes your academic

> background, qualifications (see above), and interest in either or

> both of the advertised positions.

>

> 2. Your current C.V.

>

> For information about the Dept. of Biological Sciences at NAU visit

> (https://ift.tt/naXQthr)

>

> Grey Gustafson, Ph.D.

> Asst. Prof. ∣ Dept. of Biological Sciences

> Curator ∣ NAU Arthropod Collection

> Principal Investigator ∣ NAILS

> Northern Arizona University

> 617 S Beaver St

> Flagstaff, AZ 86011

> website: https://ift.tt/9sOfeyJ

>

> Grey T Gustafson

0 notes

Text

Evil Hope AU

or the AU where Hope and Gem have a "good" relationship

#animatic#animation#animation practice#unprepared casters#unprepared casters arc 1#unprepared casters arc 7#uc arc 1#uc arc 7#wizards off the coast#dragons in dungeons#dnd#dnd podcast#dungeons and dragons#dungeons and dragons podcast#sir mister person#thavius#deb#hope lovejoy#art#digital art#my art

42 notes


Text

I'm crying over the library scene again. "You are one of few people that I can say in earnest that no matter what happened you were a good person. We are defined by our whole lives not just a moment. Don't waste what's left thinking about the past." "I'll try not to."

#ink rambles#unprepared casters#uc deb#uc thavius#sk 73#sk-73#Deb and Thavius sharing emotions is making me feel things#i love them#my heart

16 notes


Text

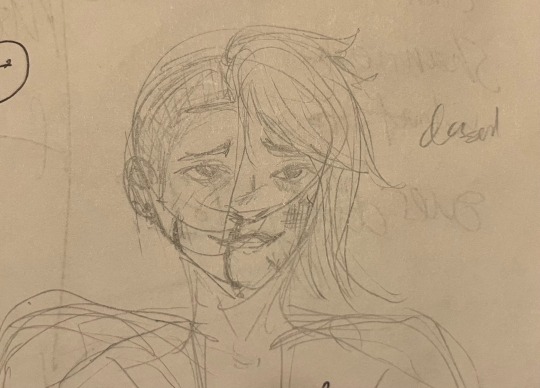

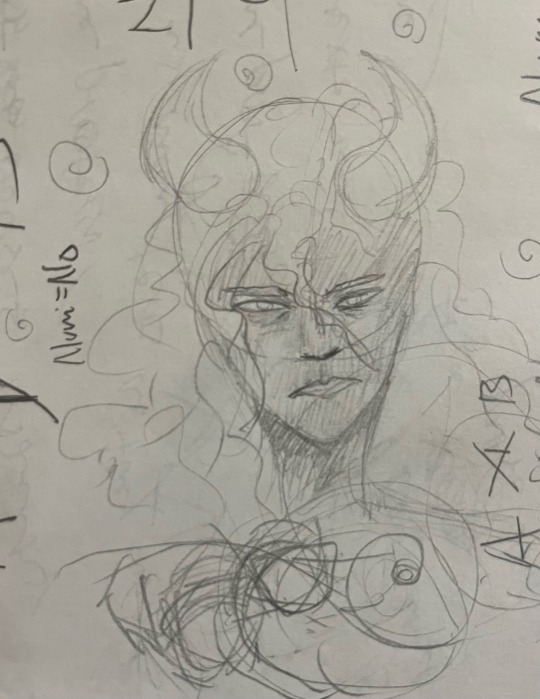

Some doodles of uc characters I found in the margins of my notes

#unprepared casters#unprepared casters sketches#my sketches#uc#nephila mori#in that one scene with laz of course#juniper Erydis#Deb#darreb’soloth#hope lovejoy#ok I have this issue where I draw two faces and they don’t look like each other at all#I swear I just drew Deb twice#it’s Deb#ok#that’s all#AH ALSO#Queen Luminessa#sketch dump#uc-beepboop

13 notes


Text

Hope: I came up with a new drinking game. Every time I'm sad, I take a drink.

Deb: Hope, that's just called alcoholism.

#unprepared casters#dungeons and dragons#dragons in dungeons#wizards off the coast#hope lovejoy#deb#darreb'soloth#how in the nine hells do you spell that name#uc incorrect quotes#incorrect quotes#i know the decanter of endless hope is more comparable to an energy drink but it's still funny#uc arc 1#uc arc 7

20 notes


Text

Happy Halloween! (Part 2)

Deb in a dragon onesie 🥰

#unprepared casters#dragons in dungeons#wizards off the coast#deb#unprepared casters deb#uc fanart#unprepared casters fanart#halloween#halloween costume

9 notes
