#AI MUST be regulated

Text

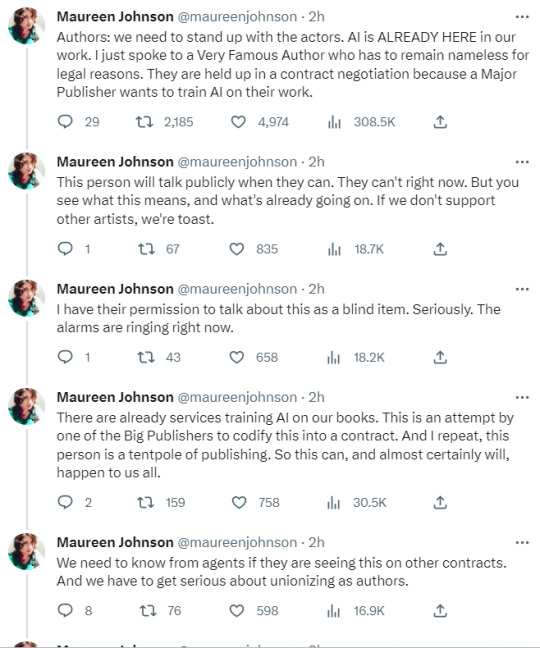

Alarm bells being rung by Maureen Johnson on AI and the Big Publishers

#I've already got academics wanting it put into their contracts that their work CAN'T be used to train AI#This is a scary time#ai MUST be regulated#on publishing#wga strike#sag aftra strike#sag-aftra strike#AUTHORS UNIONIZE

27K notes

Text

“A recent Goldman Sachs study found that generative AI tools could, in fact, impact 300 million full-time jobs worldwide, which could lead to a ‘significant disruption’ in the job market.”

“Insider talked to experts and conducted research to compile a list of jobs that are at highest-risk for replacement by AI.”

Tech jobs (Coders, computer programmers, software engineers, data analysts)

Media jobs (advertising, content creation, technical writing, journalism)

Legal industry jobs (paralegals, legal assistants)

Market research analysts

Teachers

Finance jobs (Financial analysts, personal financial advisors)

Traders (stock markets)

Graphic designers

Accountants

Customer service agents

“‘We have to think about these things as productivity enhancing tools, as opposed to complete replacements,’ Anu Madgavkar, a partner at the McKinsey Global Institute, said.”

What will be eliminated from all of these industries is the ENTRY LEVEL JOB. You know, the jobs where newcomers gain valuable real-world experience and build their resumes? The jobs where you’re supposed to get your 1-2 years of experience before moving up to the big leagues (which remain inaccessible to applicants without the necessary experience, which they can no longer get, because so-called “low level” tasks will be completed by AI).

There’s more...

Wendy’s to test AI chatbot that takes your drive-thru order

“Wendy’s is not entirely a pioneer in this arena. Last year, McDonald’s opened a fully automated restaurant in Fort Worth, Texas, and deployed more AI-operated drive-thrus around the country.”

BT to cut 55,000 jobs with up to a fifth replaced by AI

“Chief executive Philip Jansen said ‘generative AI’ tools such as ChatGPT - which can write essays, scripts, poems, and solve computer coding in a human-like way - ‘gives us confidence we can go even further’.”

Why promoting AI is actually hurting accounting

“Accounting firms have bought into the AI hype and slowed their investment in personnel, believing they can rely more on machines and less on people.”

Will AI Replace Software Engineers?

“The truth is that AI is unlikely to replace high-value software engineers who build complex and innovative software. However, it could replace some low-value developers who build simple and repetitive software.”

#fuck AI#regulate AI#AI must be regulated#because corporations can't be trusted#because they are driven by greed#because when they say 'increased productivity' what they actually mean is increased profits - for the execs and shareholders not the workers#because when they say that AI should be used as a tool to support workers - what they really mean is eliminate entry level jobs#WGA strike 2023#i stand with the WGA

74 notes

Text

AI generated 'nature photographs' get reblogged onto my dash every once in a while and it makes me kind of sad. Nature on its own is so pretty! It's a genuine marvel that some photographers can be in the right place at the right time to capture a unique photo of a weasel riding a woodpecker, or a crow among doves, or a field of flowers catching the sunlight just right and glowing like a sea of jewels.

It leaves me feeling hollow when I check the source on an interesting 'photo' and realize that it isn't capturing a rare phenomenon that can happen right on this very earth, it doesn't exist at all.

If the people I follow genuinely like it, then ok, nothing I can do about that, but I wonder if a good portion of them just don't know that they aren't real photographs. Just because there's a source on a post doesn't mean it links to a real photographer. Be aware.

#I genuinely firmly believe that there MUST be some regulation surrounding ai photos of nature#and I don't mean it in a hippy 'don't let nature and machines intersect maaan!' typa way#I mean it in a 'our perception and knowledge of this world will be greatly altered and outright incorrect if we continue this' typa way#think of the dude who used ai 'art' of a rodent for his scientific paper. The image itself directly spreads incorrect information#this is not the same as photoshopping a watermelon bright blue and trolling people into believing it#anyway. all ai images should have a watermark embedded into them by default that's just my opinion

8 notes

Text

I don't think AI images and art have any real value tbh. Like, do your thing if you wanna create Hot MILFs in your Area using ChatGPT or whatever, I don't care, but I genuinely don't think it should be grouped with traditional or digital art.

#Bc like. with specifically prompt based generators the process feels so vapid and hollow i get nothing out of it.#I've used generators to make pinups and that's it. and they all like suck but like i said they can do Hot MILFs in your Area#I'm neutral on AI art but lean towards I Don't Like It and don't care about it enough to really argue against it anymore.#I just think like. Some form of regulation would be. tactful?#like idk some restrictions requiring that you must give both the option to opt in or out of having stuff you've made used to train AI model#I'm not arguing for greater copyright do not get me wrong to not twist my words that will only end badly#Just. idk. it feels wrong? to have my art scraped to train ai. ik it isn't a direct one for one like oh it's scanning your shit#i know it's not literally copying and combining scraped art with other art to create stuff.#it just doesn't feel right if it isn't a consenting individuals work.

9 notes

Text

As we trickle on down the line with more people becoming more aware that there are some people out there in this world using AI language programs such as ChatGPT, OpenAI and CharacterAI to write positivity, come up with plotting ideas, or write thread replies for them, I'm going to add a new rule to my carrd which I'll be updating later today:

Please, for the love of God, do NOT use AI to write your replies to threads, come up with plots, or script your positivity messages for you. If I catch anyone doing this I will not interact with you.

#i'm an underachiever — out.#this is the bad place#we are just begging for Rehoboam or the Machine from Person of Interest to become our new reality at this rate#because there are practically no laws regulating AI learning yet#so it's like the wild west in a digital space#people taking incomplete fics from ao3 or wattpad or ff and asking AI to finish it for them AS IF IT'S NOT STRAIGHT UP PLAGIARISM#google docs being used to train the AI so i guess i'm gonna have to find a nice notebook and just write everything by hand#also#THIS HAS NOT PERSONALLY HAPPENED TO ME YET#i just saw a mutual who said they saw another mutual who saw a PSA which means that SOMEWHERE it happened to someone#i have also seen a few answered asks float across the for you page discussing the concerns about people posting AI generated fic on ao3#and arguing against or in defense of readers using OpenAI to finish writing incomplete fics that were abandoned by the author#which means that it must happen and at the rate things are going it is only a matter of time before it catches up to the rest of us#mel vs AI

9 notes

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaratized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

714 notes

Text

I do want to say, my views on AI “art” have changed somewhat. It was wrong of me to claim that it’s not wrong to use it in shitposts… there definitely is some degree of something problematic there.

Personally I feel like it’s one of those problems that’s best solved via lawmaking: specifically, AI generations shouldn’t be copyrightable, and AI companies should be fined for art theft and “plagiarism”… even though it’s not directly plagiarism in the current legal sense. We definitely need ethical philosophers and lawmakers to spend some time defining exactly what is going on here.

But for civilians, using AI art is bad in the same nebulous sense that buying clothes from H&M or ordering stuff on Amazon is bad… it’s a very spread out, far away kind of badness, which makes it hard to quantify. And there’s no denying that in certain contexts, when applied in certain ways (with actual editing and artistic skill), AI can be a really interesting tool for artists and writers. Which again runs into the copyrightability thing. How much distance must be placed between the artist and the AI-generated inspiration in order to allow the artist to say “this work is fully mine?”

I can’t claim to know the answers to these issues. But I will say two things:

Ignoring AI shit isn’t going to make it go away. Our tumblr philosophy is wildly unpopular in the real world and most other places on the internet, and those who do start using AI are unfortunately gonna have a big leg up on those who don’t, especially as it gets better and better at avoiding human detection.

Treating AI as a fundamental, ontological evil is going to prevent us from having these deep conversations which are necessary for us—as a part of society—to figure out the ways to censure AI that are actually helpful to artists. We need strong unions making permanent deals now, we need laws in place that regulate AI use and the replacement of humans, and we need to get this technology out of the hands of huge megacorporations who want nothing more than to profit off our suffering.

I’ve seen the research. I knew AI was going to be big years ago, and right now I know that it’s just going to get bigger. Nearly every job is in danger. We need to engage with this issue, sooner rather than later, or we risk losing all of our futures. And unfortunately, just as with many other things under capitalism, for the time being I think we have to allow some concessions. The issue is not 100% black or white. Certainly a dark, stormy grey of some sort.

But please don’t attack middle-aged cat-owners playing around with AI filters. Start a dialogue about the spectrum of morality present in every use of AI, from the good (recognizing cancer cells years in advance, finding awesome new metamaterials) to the bad (megacorporations replacing workers and stealing from artists) to the kinda ambiguous (shitposts, app filters that make your dog look like a 16th-century British royal for some reason).

And if you disagree with me, please don’t be hateful about it. I fully recognize that my current views might be wrong. I’m not a paragon of moral philosophy or anything. I’m just doing my best to live my life in a way that improves the world instead of detracting from it. That’s all any of us can do, in my opinion.

#the wizcourse#<- new tag for my pretentious preachy rants#this is—AGAIN—an issue where you should be calling your congressmen and protesting instead of making nasty posts at each other

847 notes

Text

The creation of sexually explicit "deepfake" images is to be made a criminal offence in England and Wales under a new law, the government says.

Under the legislation, anyone making explicit images of an adult without their consent will face a criminal record and unlimited fine.

It will apply regardless of whether the creator of an image intended to share it, the Ministry of Justice (MoJ) said.

And if the image is then shared more widely, they could face jail.

A deepfake is an image or video that has been digitally altered with the help of Artificial Intelligence (AI) to replace the face of one person with the face of another.

Recent years have seen the growing use of the technology to add the faces of celebrities or public figures - most often women - into pornographic films.

Channel 4 News presenter Cathy Newman, who discovered her own image used as part of a deepfake video, told BBC Radio 4's Today programme it was "incredibly invasive".

Ms Newman found she was a victim as part of a Channel 4 investigation into deepfakes.

"It was violating... it was kind of me and not me," she said, explaining the video displayed her face but not her hair.

Ms Newman said finding perpetrators is hard, adding: "This is a worldwide problem, so we can legislate in this jurisdiction, it might have no impact on whoever created my video or the millions of other videos that are out there."

She said the person who created the video is yet to be found.

Under the Online Safety Act, which was passed last year, the sharing of deepfakes was made illegal.

The new law will make it an offence for someone to create a sexually explicit deepfake - even if they have no intention to share it but "purely want to cause alarm, humiliation, or distress to the victim", the MoJ said.

Clare McGlynn, a law professor at Durham University who specialises in legal regulation of pornography and online abuse, told the Today programme the legislation has some limitations.

She said it "will only criminalise where you can prove a person created the image with the intention to cause distress", and this could create loopholes in the law.

It will apply to images of adults, because the law already covers this behaviour where the image is of a child, the MoJ said.

It will be introduced as an amendment to the Criminal Justice Bill, which is currently making its way through Parliament.

Minister for Victims and Safeguarding Laura Farris said the new law would send a "crystal clear message that making this material is immoral, often misogynistic, and a crime".

"The creation of deepfake sexual images is despicable and completely unacceptable irrespective of whether the image is shared," she said.

"It is another example of ways in which certain people seek to degrade and dehumanise others - especially women.

"And it has the capacity to cause catastrophic consequences if the material is shared more widely. This Government will not tolerate it."

Cally Jane Beech, a former Love Island contestant who earlier this year was the victim of deepfake images, said the law was a "huge step in further strengthening of the laws around deepfakes to better protect women".

"What I endured went beyond embarrassment or inconvenience," she said.

"Too many women continue to have their privacy, dignity, and identity compromised by malicious individuals in this way and it has to stop. People who do this need to be held accountable."

Shadow home secretary Yvette Cooper described the creation of the images as a "gross violation" of a person's autonomy and privacy and said it "must not be tolerated".

"Technology is increasingly being manipulated to manufacture misogynistic content and is emboldening perpetrators of Violence Against Women and Girls," she said.

"That's why it is vital for the government to get ahead of these fast-changing threats and not to be outpaced by them.

"It's essential that the police and prosecutors are equipped with the training and tools required to rigorously enforce these laws in order to stop perpetrators from acting with impunity."

279 notes

Text

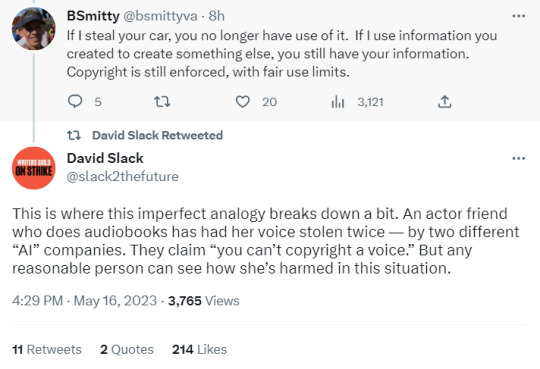

Thoughts from David Slack on 'AI' and copyright

(the voice theft in particular is really depressing)

#wga strike#wga strong#AI MUST be regulated#writer's guild of america strike#writer's guild of america#david slack#copyright#anyone familiar with me will know how I hate and love copyright#long post

16K notes

Text

The danger of AI isn’t necessarily AI in and of itself - the danger is what people will do with it.

How would a corporation, motivated solely by profit, use AI?

How about a politician, who only cares about winning?

Or a white supremacist, who only cares about spreading hate?

21 notes

Text

Be There For You

Pairing: Jaemin X Reader

Genre: Smut, PWP (let's be real for a second... just the 1st P) Doctor Jaemin, Public(ish), Good Ending (an ai good ending.) (i know nothing about medicine this is all fiction)

Warnings: Mind break (honestly, not that bad imo)

Word Count: 2k

“Come on, I know you need the money! Just think about it!” Your friend slammed the stack of papers in front of you as you sat at the dining table. “It’s just an experiment, how bad could it be?”

“That’s the problem! Those things are always too good to be true.” You looked at the first page, reading “Sleep Clinical Trial 6.4”. “Like, what if they do something weird to me?”

“They won’t! They’re a legit pharmaceutical company! They give you medication, you sleep for a bit, and then you get like, 500 dollars! It’s not that big of a deal.” She sighed, her hands on her hips. “I did the 6.2 experiment, trust me, I know these guys!”

“Ugh…” You groaned. “Is this a pyramid scheme or something? Why are you vouching so hard for them?”

“Listen, I don’t wanna be that person, but you haven’t paid rent in 2 months. I love you, but I can’t let you keep eating and sleeping here for free. If you’re not gonna get a job, you can either do this, or get out.”

You sighed. “I’ll go. I don’t promise anything, but I’ll at least hear them out.”

~

The next day, you made your way into the trial clinic on the address your friend gave you.

“Hello! How can I help you?” The receptionist, wearing a “Jeno” name tag, asked you.

“Um, hi, I’m here to participate in the sleep clinical trial.” You told him, already nervous and fidgeting.

“Oh, dear…” He clicked his tongue. “Honey, that was yesterday…”

“Fuck! I’m sorry…” You nearly bolted out the door.

“Wait! Miss!” Another man called after you. “If you’re willing to, I’m testing something else out right now, I’ll pay you $2000!”

You spun around. “What is it?”

He handed you a clipboard, with some papers attached. “Let me take you into my office.”

He dragged you into his office, the grandeur of it shocking you. Rows of bookshelves, giant velvet seats, and an oak wood desk that sat in the middle of the room, a leather chair in front of it. You focused your attention back to the doctor, who was wearing a suit with a lab coat over it, his hair an ash blue color, his glasses resting down his nose.

“Let me introduce myself, I’m Na Jaemin, MD. I’m testing a medicine right now, it’s a female hormone regulator.” You shook his hand as you sat on the leather seat, him sitting across from you.

You flipped through the papers. “What do I have to do for this, exactly?”

You read the first page. “Project E 1.0”

The subject will be given a shot containing an unlabelled test medication.

Effects may vary, but it will be used to treat PCOS and other hormone irregularities.

“You just have to take a shot, and I’ll do the rest. I’ll take your blood work, weight, physical changes… It’s supposed to be all good… hair growth, regulates your cholesterol, and makes your breasts bigger.”

“What are the possible side effects?” You asked.

“Hormones can cause a large amount of side effects, like birth control. Although, I must warn you that you are the first person to be administered this drug, which is why the pay is so good. There may be side effects we are unaware of.”

“...So basically you have no idea.” You rolled your eyes.

“Yes, but I’ll be here for you the whole time.”

You nodded. "I'll do it." After all, it seemed more good than bad, and $2000…

"You'll be taking a shot every 2 weeks, which I'll administer, then I'll have you report any changes in your mood, body, etcetera. Sign here, and then we can start!" Jaemin pointed to the last page of the stack he’d given you.

You quickly signed your sanity to Na Jaemin, MD.

~

You quickly realized the bad outweighed the good. Sure, your hair was healthier than it had ever been, your skin was glowing, your breasts grew…

But your back hurts from the weight gain and you’ve never been so horny in your life.

You were sweating, your vibrator overheating, and your cunt was drenched from the constant need of relief.

Your phone shined brightly in the dark of your bedroom. 2 weeks had passed. You needed to see your doctor again.

~

You were put together enough to make the average person think you were okay, but Jaemin could see through you, the pained look in your eyes familiar to him after working in the medical field for years. Mini skirt barely hiding the fact your juices were pouring down your legs, wishing you wore jeans, but you didn’t even have the strength to slide a pair up.

"So, I take it that the past two weeks haven't been the best?" Jaemin's pen clicked to the tempo of the clock ticking.

"No…" You rubbed your thighs together, your sweat sticking to the leather chair. You could practically feel yourself soaking the leather, still so wet, so needy. “I’ve… had a raised libido, I guess.” Your throat was dry, swallowing.

"Have you tried masturbating?" His words filled you with dread, not knowing if he fully understood.

"Everyday… Multiple times everyday. I haven't been able to sleep properly because of it…" You felt sticky, hot. You could practically smell Jaemin, the scent of his cologne, his musk, glancing at the way his hands moved as he wrote, the veins traveling up his arms. You nearly started drooling, noticing he didn’t have a ring on his finger, imagining his fingers inside you. You shook your head, knowing you couldn’t do this to yourself.

Jaemin kissed his teeth, the pop echoing in your ears. "Is a partner not an option?"

"No, I'm single… Can I go to the bathroom?" You were throbbing, practically able to feel the blood rushing to your clit, your panties rubbing against it too much to handle. You nearly toppled over as you stood up, dizzy, your legs too weak from needing to cum more than anything.

Jaemin stood up then helped you stand up. "Are you okay?" His arm on your waist, the scent of his cologne overwhelming you.

"Is… too much." You whimpered. "Need to cum right now."

Jaemin rushed you into a sterile, brightly lit patient room. He started laying you down onto the small, leather bed covered in a disposable sheet, then shutting and locking the door. "How long has this been going on?"

Tears ran down your face. "Since I took it…"

"Why hasn't it worn off?" He grumbled to himself, pacing around the room. "It's been 2 weeks, it should be out of your system…"

"It hurts…" You cried out.

"Oh, right… Fuck, you should've called me when it started! What should I do?" He touched your cheek, wiping your tears away, the chill of his hand shocking you.

"Make me cum." You cried out, your body burning up. "Doctor, make me cum…"

As he thought to himself, Jaemin thought about how he couldn't fuck a patient, how he was a professional, how he could probably give you a pain medication to make it stop. But then seeing you in agony made him reconsider the fact that he was the one who did this to you, that he was responsible… Then he realized how hard he was from listening to your cries and how much he wanted to help you cum.

Jaemin spread your legs open, sliding your panties off as they stuck to your cunt, soaked. "So wet, cute…" He muttered to himself. His hands grabbed your thighs, squeezing onto them to stabilize himself as he bent down to eat you out.

Licking up your wetness, Jaemin sucked on your clit, flicking at it with his tongue.

"Cumming!" You cried out, your back arching, hips grinding against Jaemin's tongue.

Your pretty, high pitched whines were enough to make Jaemin risk losing his job.

As Jaemin pulled away, he licked his lips and swallowed the taste of you. "Do you feel better?"

"A little…" You mumbled, sitting up, still dizzy, but less stressed.

Jaemin lowered the hospital bed using the remote on the end of the bed. "Bend over the bed."

“Doctor-” You stood up.

“Call me Jaemin, please.” Jaemin took your hand, spinning you around, then pressing his hand against your back, bending you over, his hand trailing up to the back of your head, pushing your cheek against the leather cushion. Your hands outstretched in front of you, gripping onto the paper-wrapped pillow.

“Jaemin…” You moaned, your voice only a little louder than a whisper, listening to the sounds of Jaemin removing his belt and unzipping his slacks.

His hand slid cupped your ass, watching you squirm from his touch. His tip rubbing your clit, covered in precum, getting even more wet from you. “I promise I’ll be gentle.”

“Please, hurry up.” You whimpered, crying into the pillow.

“Of course.” Jaemin plunged straight into you, grabbing onto your hips, pulling you towards him.

You never really got a good look at his cock, but it was safe to say that he was longer, thicker than your dildo, or any man you’ve ever been with before. Your back arched instinctively, not knowing how to handle a cock that big. Jaemin was only inside you for a little while, but you were already close, and after a few thrusts from Jaemin, you were at your limit. “Doctor, please!” You moaned out, biting onto the pillow as you came.

Jaemin didn’t know how to react, but he knew how he wanted to react. He grabbed you by your neck and shoved the rest of his length into you. His hand was pressed against your windpipe, making you unable to properly breathe, forcing you to arch your back so you could breathe properly. Once you did, Jaemin adjusted his hand, squeezing onto the sides of your throat.

“I told you to call me Jaemin.” He whispered into your ear.

“Sorry…” Jaemin’s pace began to quicken. “Sorry, I’m sorry!”

“You should’ve listened to me.” Jaemin started kissing your neck, nibbling, biting, trying to stop himself from pitifully moaning.

“Jaemin! Jaemin, I’m sorry!” His grip on your neck tightened, cutting off your jugular vein, making you feel euphoric.

“You’re so fucking nasty… It’s so beautiful.” He moaned into your ear while you whimpered, begging Jaemin for mercy.

You knew you were an overstimulated, noisy mess, left at the mercy of Jaemin, an overworked doctor who needed you to take his stress out into your pathetic hole.

Jaemin was certain Jeno could hear everything and prayed he would cover his ass. The way you screamed his name was worth it though. The way you shook when you came, the sweet squelching sounds you made, they were all beautiful.

“I’m gonna cum.” Jaemin bit down on your neck, already having left multiple bruises and bite marks on your pretty neck. Treating you how a dog bites down on his chew toy. Forcefully and mercilessly, like you couldn’t feel a thing.

And you basically couldn’t, afterall, all you could feel was how good Jaemin was fucking you. In that moment, Jaemin could’ve done anything he wanted to you and you would’ve nodded your head and taken it.

Which is why you didn’t even say anything when your insides were coated with a thick layer of Jaemin’s cum.

Jaemin left you for a few hours, letting you get the sleep you desperately needed.

~

When you woke up, you realized you were no longer in pain. Forcing yourself to get dressed, you made your way over to Jaemin’s office.

“You’re up?” He looked up at you over his glasses.

You nodded, sitting back down at the chair you were sitting in earlier, noticing the wet mark was still there.

“Are you still in pain?”

You shook your head, rubbing your arms.

“Shall we continue the trial then?” Jaemin stood up, removing his glasses and setting them on his desk.

“But I was in so much pain…” You looked up at Jaemin as he walked over to you.

“I think I have a solution to that.” Caressing your cheek and gently kissing your lips.

“Please fuck me again, Jaemin.”

“As my patient wishes.”

747 notes

Text

Announcing the Amare Games Festival 2024

Amare Games Festival 2024

Join us in April for the 2024 Amare Games Festival, a celebration of love and diversity in story-focused games!

For this festival we invite you to create or update a game that you believe falls under the Amare Genre tag!

What is Amare?

Why a festival?

Jams are an awesome way to get the creative juices flowing and challenge yourself to create a game in a short amount of time. However, there are already a lot of amazing projects in progress, so it's important to celebrate those and give people the opportunity to focus on setting and achieving goals with their existing projects as well.

Examples of Submissions

A new game.

A new demo or vertical slice.

A new chapter update to an existing game.

Adding new features or content to an existing game.

The festival has fairly relaxed rules, but in general, we would like all participants to keep these in mind:

Rules:

You must release or update your game during the festival period. Work can begin before the festival starts.

Please clearly label demos or first episodes as such. This helps us organise the entries.

Please clearly state the exact update or new content that was added during the festival.

Submissions can be Free or Commercial.

NSFW content must be properly labelled, but is fine as long as it adheres to itch.io's TOS.

No hate speech (i.e. speech that promotes hatred, violence or discrimination against groups of people based on ethnicity, religion, gender, age, disability or sexual orientation) or content that is patently offensive or intended to shock or disgust viewers.

Content Warnings are needed (Here is a link to help you with those if you need it!)

We currently do not support AI-generated games or games that make use of generative AI writing and art. Until legislation is put in place to ensure ethical training models that respect artist and creator copyright and regulate transparency on the use of AI in creative content, we will not allow the submission of games that utilise generative AI.

We kindly ask that you tag your game with the 'Amare' tag here on itch.io. This will ensure people who are watching the tag instead of just the jam page will see your game.

Join the Discord to find others who are looking for festival partners, and to talk with other Amare devs!

Sign up for the Festival on the Amare Games Festival Itch Page.

#Amare Games festival#Amare Game#Otome Game#Diverse Games#LGBTQA+ Games#Visual Novel#interactive fiction#text-based game#Romance Game#Game Jam#indie dev#Amare#Otome#indie game

138 notes

Text

These claims of an extinction-level threat come from the very same groups creating the technology, and their warning cries about future dangers are drowning out stories on the harms already occurring. There is an abundance of research documenting how AI systems are being used to steal art, control workers, expand private surveillance, and seek greater profits by replacing workforces with algorithms and underpaid workers in the Global South.

The sleight-of-hand trick shifting the debate to existential threats is a marketing strategy, as Los Angeles Times technology columnist Brian Merchant has pointed out. This is an attempt to generate interest in certain products, dictate the terms of regulation, and protect incumbents as they develop more products or further integrate AI into existing ones. After all, if AI is really so dangerous, then why did Altman threaten to pull OpenAI out of the European Union if it moved ahead with regulation? And why, in the same breath, did Altman propose a system that just so happens to protect incumbents: Only tech firms with enough resources to invest in AI safety should be allowed to develop AI.

[...]

First, the industry represents the culmination of various lines of thought that are deeply hostile to democracy. Silicon Valley owes its existence to state intervention and subsidy, at different times working to capture various institutions or wither their ability to interfere with private control of computation. Firms like Facebook, for example, have argued that they are not only too large or complex to break up but that their size must actually be protected and integrated into a geopolitical rivalry with China.

Second, that hostility to democracy, more than a singular product like AI, is amplified by profit-seeking behavior that constructs increasingly larger threats to humanity. It’s Silicon Valley and its emulators worldwide, not AI, that create and finance harmful technologies aimed at surveilling, controlling, exploiting, and killing human beings with little to no room for the public to object. The search for profits and excessive returns, with state subsidy and intervention clearing the way of competition, has and will create a litany of immoral business models and empower brutal regimes alongside “existential” threats. At home, this may look like the surveillance firm and government contractor Palantir creating a deportation machine that terrorizes migrants. Abroad, this may look like the Israeli apartheid state exporting spyware and weapons it has tested on Palestinians.

Third, this combination of a deeply antidemocratic ethos and a desire to seek profits while externalizing costs can’t simply be regulated out of Silicon Valley. These are fundamental attributes of the industry that trace back to the beginning of computation. These origins in optimizing plantations and crushing worker uprisings prefigure the obsession with surveillance and social control that shapes what we are told technological innovations are for.

Taken altogether, why should we worry about some far-flung threat of a superintelligent AI when its creators—an insular network of libertarians building digital plantations, surveillance platforms, and killing machines—exist here and now? Their Smaugian hoards, their fundamentalist beliefs about markets and states and democracy, and their track record should be impossible to ignore.

311 notes

Text

'The Hollywood strike can and must win – for all of us, not just writers and actors'

Excerpt:

"The thousands of workers engaged in this enormous, multi-union Hollywood strike – something America hasn’t seen since 1960 – represent the frontline of two battles that matter to every single American. You might not naturally pick “writers and actors” to be the backbone of your national defense force, but hey, we go to war with the army we have. In this case, they are well suited to the fight at hand.

The first battle is between humanity and artificial intelligence. Just a year ago, it seemed like a remote issue, a vague and futuristic possibility, still tinged with a touch of sci-fi. Now, AI has advanced so fast that everyone has grasped that it has the potential to be to white-collar and creative work what industrial automation was to factory work. It is the sort of technology that you either put in a box, or it puts you in a box. And who is going to build the guardrails that prevent the worst abuses of AI?

Look around. Do you believe that the divided US government is going to rouse itself to concerted action in time to regulate this technology, which grows more potent by the month? They will not. Do you know, then, the only institutions with the power to enact binding rules about AI that protect working people from being destroyed by a bunch of impenetrable algorithms that can produce stilted, error-filled simulacrums of their work at a fraction of the cost?

Unions. When it comes to regulating AI now, before it gets so widely entrenched that it’s impossible to roll back, union contracts are the only game in town. And the WGA and Sag-Aftra contracts, which cover entire industries, will go down in history as some of the first major efforts to write reasonable rules governing this technology that is so new that even knowing what to ask for involves a lot of speculation.

What we know for sure is this: if we leave AI wholly in the hands of tech companies and their investors, it is absolutely certain that AI will be used in a way that takes the maximum amount of money out of the pockets of labor and deposits it in the accounts of executives and investment firms. These strikes are happening, in large part, to set the precedent that AI must benefit everyone rather than being a terrifying inequality accelerator that throws millions out of work to enrich a lucky few. Even if you have never been to Hollywood, you have a stake in this fight. AI will come for your own industry soon enough.

And that brings us to the second underlying battle here: the class war itself. When you scrape away the relatively small surface layer of glitz and glamor and wealthy stars, entertainment is just another industry, full of regular people doing regular work. The vast majority of those who write scripts or act in shows (or do carpentry, or catering, or chauffeuring, or the zillion other jobs that Hollywood produces) are not rich and famous. The CEOs that the entertainment unions are negotiating with make hundreds of millions of dollars, while most Sag-Aftra members don’t make the $26,000 a year necessary to qualify for the union’s health insurance plan."

#sag-aftra strike#sag strike#actors strike#current events#union solidarity#fans4wga#union strong#wga strong#i stand with the wga#wga strike#writers strike

181 notes

Text

Imagine using climate change as a standard for governments taking something seriously

132 notes
