#socialengineering

Text

Circumventing Two-Factor Authentication

The Human Weak Point

For years we were assured that two-factor authentication (2FA) would make us safe(r). Banks above all were required to introduce such procedures, and the EU banking directive PSD2 made them standard. Even back then we criticized that this sensible procedure was crippled again by abolishing the "second channel". Initially, SMS or similar methods were common for the second authentication step; now both channels often run over a single device again, usually the smartphone.

It has now become clear that, in the struggle between cybersecurity and hackers, the hackers are catching up. In two articles, Heise.de describes how they proceed. At heart the method is still the same old phishing; only the effort the hackers have to put in has increased.

Social Engineering Instead of New Technology

The hackers' tactic is still to confuse the victim until they make a mistake. The tricks include:

your phone needs an update,

your phone is defective,

a system error has occurred, press here or there,

and much more ...

MFA Fatigue Attack

That is why an MFA fatigue attack usually comes in the evening or at the weekend, when people are tired and technical support or colleagues cannot be reached. The victim is then confused by "illogical behavior" of the technology until they enter their passwords in the wrong place. Strictly speaking, you should never fall for this, writes PCspezialist.de, because:

An authentication request is only sent if you have previously entered the correct password into a system. That is exactly the point of multi-factor authentication: additional protection through an additional security prompt.

So a system can never request a one-time password (OTP) unless you first tried to log in to it yourself. If this happens anyway, it is certainly a cyberattack. The attackers, however, try to confuse their victims with repeated prompts and/or rejections "from the system". Such a prompt can also be a phone call from "a technical department" claiming it has to reset an alleged "system error". The weak point of two-factor authentication (2FA) remains the human being.
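
The argument above can be written as a simple decision rule. The sketch below is a hypothetical client-side monitor and not a feature of any real authenticator app. It treats an MFA prompt as expected only if the user started a login shortly before. It treats a burst of prompts as a likely MFA fatigue attack. The time windows, the threshold and the class name are assumed values chosen for illustration.

```python
from dataclasses import dataclass, field

# Assumed, illustrative parameters (not taken from any real product):
LOGIN_WINDOW_S = 120     # a prompt must follow the user's own login within 2 minutes
FATIGUE_THRESHOLD = 3    # this many prompts in the burst window looks like push bombing
FATIGUE_WINDOW_S = 600   # burst window of 10 minutes


@dataclass
class MfaMonitor:
    """Hypothetical monitor that classifies incoming MFA prompts."""
    login_times: list[float] = field(default_factory=list)
    prompt_times: list[float] = field(default_factory=list)

    def user_started_login(self, t: float) -> None:
        """Record that the user personally entered their password at time t."""
        self.login_times.append(t)

    def classify_prompt(self, t: float) -> str:
        """Classify an MFA prompt received at time t (seconds)."""
        self.prompt_times.append(t)
        # Many prompts in a short window: treat as an MFA fatigue attack.
        recent = [p for p in self.prompt_times if t - p <= FATIGUE_WINDOW_S]
        if len(recent) >= FATIGUE_THRESHOLD:
            return "fatigue-attack"
        # A legitimate prompt only ever follows a login the user started.
        expected = any(0 <= t - login <= LOGIN_WINDOW_S for login in self.login_times)
        return "expected" if expected else "unsolicited"
```

Both "unsolicited" and "fatigue-attack" mean the same thing for the user: deny the prompt and report it rather than approving it to make the prompts stop.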

More on this at https://www.heise.de/ratgeber/Ausprobiert-Phishing-trotz-Zwei-Faktor-Authentifizierung-8981919.html

and https://www.heise.de/ratgeber/IT-Security-Wie-Angreifer-die-Zwei-Faktor-Authentifizierung-aushebeln-8973846.html

and https://www.pcspezialist.de/blog/2022/11/28/mfa-fatigue-angriff/

Category: Our topics in the press. Short link to this page: a-fsa.de/d/3u1

Link to this page: https://www.aktion-freiheitstattangst.org/de/articles/8395-20230510-die-zwei-faktor-authentifizierung-aushebeln.htm

#Schwachstelle#Mensch#Zwei-Faktor-Authentifizierung#2FA#PSD2#SocialEngineering#MFA-Fatigue-Angriff#Verbraucherdatenschutz#Datenschutz#Datensicherheit#Smartphone#Handy#IMSI-Catcher#Cyberwar#Hacking

Text

In the past on other platforms I’ve written on Social Engineering; here is a TEDx talk that speaks on the social engineering of Black Murder being normal 🤬 #BLM #socialengineering #murder #blackonblackcrime https://www.blaqsbi.com/2jY7

Text

Remain alert! To protect your digital environment, be aware of the various hazards lurking in IT security, from malware to phishing. Stay Safe and Protect Cyberspace.

For more information visit www.certera.co

#MalwareThreats#PhishingAttacks#Ransomware#DDoS#DataBreach#InsiderThreats#SocialEngineering#ZeroDayExploits

Link

Social Engineering Attacks

#Cybersecurity#Fraud#Hacking#Identitytheft#Psychologicalmanipulation#Securityawareness#SocialEngineering#Socialhacking#Socialmanipulation

Text

Crossover Episode for both The Causey Consulting Podcast and con-sara-cy theories.

I recently read Why England Slept and it sounds like it could be a playbook for how the public is led into conflicts, changes, social engineering, etc.

Text

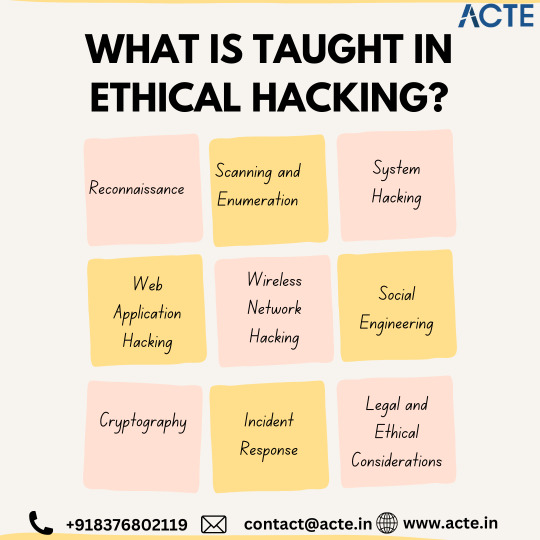

Unveiling the Secrets: What You'll Learn in Ethical Hacking

In ethical hacking training, various aspects are covered to equip individuals with the necessary skills and knowledge to identify and prevent cyber threats. Here are some key topics taught in ethical hacking:

Embark on a journey to become the digital world's guardian angel: an ethical hacking course in Pune is your map to mastering the art of protection with skilful precision.

Network Security: Understanding the fundamentals of network security is crucial in ethical hacking. Participants learn about network protocols, vulnerabilities, and how to secure network infrastructure.

Web Application Security: This module focuses on identifying and mitigating vulnerabilities in web applications. Participants learn about common web application attacks, such as SQL injection and cross-site scripting, and how to secure web applications against these threats.

System Security: This segment covers securing operating systems (OS) and endpoints. Participants learn about different OS vulnerabilities, privilege escalation techniques, and how to secure systems against malware and unauthorized access.

Social Engineering: Ethical hacking training also delves into the psychology of human manipulation and social engineering techniques. Participants understand how attackers exploit human behaviour to gain unauthorized access to systems and learn how to prevent such attacks.

Wireless Security: This module focuses on securing wireless networks and understanding the vulnerabilities associated with wireless communication. Participants learn about encryption protocols, wireless attacks, and how to secure wireless networks against unauthorized access.

Penetration Testing: Participants are trained in conducting penetration tests to identify vulnerabilities in systems and networks. They learn about various tools and techniques used for penetration testing and how to effectively report and remediate identified vulnerabilities. Dive into the world of cybersecurity with ethical hacking online training at ACTE Institution, where mastering the art of defence is not just learning but an exciting adventure into the digital frontier.

Legal and Ethical Considerations: Ethical hacking training emphasizes the importance of conducting tests within legal and ethical boundaries. Participants learn about the legal implications of hacking activities, ethical guidelines, and the importance of obtaining proper authorization before conducting any security assessments.

Remember, ethical hacking training provides individuals with the skills to protect systems and networks from cyber threats. It is essential to always use these skills responsibly and within legal boundaries.
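
SQL injection is one of the web application attacks listed above. The minimal sketch below shows how it works and how the standard defence, parameterized queries, stops it. It uses Python's built-in sqlite3 module. The table, user names and payload are invented for illustration. Run it only against your own test data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two example users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "a-secret"), ("bob", "b-secret")])
    return conn


def lookup_unsafe(conn: sqlite3.Connection, name: str) -> list[str]:
    # VULNERABLE: user input is pasted into the SQL text itself,
    # so quotes in `name` can change the meaning of the query.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(conn: sqlite3.Connection, name: str) -> list[str]:
    # SAFE: the `?` placeholder makes the driver treat `name` as data only.
    return [row[0] for row in conn.execute(
        "SELECT secret FROM users WHERE name = ?", (name,))]


if __name__ == "__main__":
    db = make_db()
    payload = "x' OR '1'='1"
    print(lookup_unsafe(db, payload))  # the OR clause matches every row
    print(lookup_safe(db, payload))    # []
```

The payload closes the string literal and appends `OR '1'='1'`, which is true for every row. With a placeholder, the same input is just an unusual user name that matches nothing.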

#SocialEngineering#HumanManipulation#EthicalHackingTraining#CyberAwareness#NetworkSecurity#CyberDefense#SecureNetworks#WirelessSecurity#WiFiProtection#SecureWireless

Link

https://bit.ly/3Rq0Dme - 🌐 The hospitality industry faces a new cyber threat: the "Inhospitality" malspam campaign, which uses social engineering to deploy password-stealing malware. Attackers lure hotel staff with emails about service complaints or information requests, which lead to links to malicious payloads. #CyberThreat #HotelIndustrySecurity

🔍 Sophos X-Ops identified this trend, which resembles tactics used during the US tax season. Attackers engage hotel staff through emotionally charged scenarios, from lost items to accessibility needs, and send malware links only after initial contact. #SophosResearch #SocialEngineering

💼 The emails range from allegations of a violent attack to queries about disability accommodations. Once staff respond, attackers reply with links that supposedly contain relevant "documentation" but actually lead to malware in password-protected files. #CyberAttackTactics #HotelSafety

📧 Common traits include urgent requests and emotionally manipulative narratives. Examples range from a lost camera of sentimental value to booking problems for disabled family members, all designed to elicit quick responses from hotel staff. #MalspamCampaign #EmailScams

🔐 The malware, often a variant of RedLine or Vidar Stealer, is difficult to detect. It is hidden in large, password-protected files and often carries valid or counterfeit signatures to bypass security scans. #MalwareAnalysis #CyberDefense

💻 Upon execution, the malware connects to a Telegram URL for command and control and steals information such as browser-saved passwords and desktop screenshots. It does not establish persistence; it runs once to extract data and then quits. #CybersecurityThreat #DataProtection

🛡️ Sophos has identified more than 50 unique malware samples and reported them to cloud providers. Given the low detection rates on VirusTotal, Sophos has published indicators of compromise and ensures detection in its products.
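
The evasion trick described here is hiding payloads in large, password-protected archives. A mail gateway or analyst can triage for exactly that. The sketch below assumes ZIP archives, since the post does not name the archive format. It lists members that a scanner may be unable to inspect. The 100 MiB limit and the function names are hypothetical, and this is not Sophos's detection logic.

```python
import io
import zipfile

# Assumed scanner size limit; real products differ and are configurable.
MAX_SCAN_BYTES = 100 * 1024 * 1024  # 100 MiB


def is_encrypted(info: zipfile.ZipInfo) -> bool:
    """Bit 0 of the ZIP general-purpose flag marks an encrypted entry."""
    return bool(info.flag_bits & 0x1)


def uninspectable_members(data: bytes, max_bytes: int = MAX_SCAN_BYTES) -> list[str]:
    """Return names of archive members that are encrypted or larger than max_bytes."""
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        return [info.filename for info in zf.infolist()
                if is_encrypted(info) or info.file_size > max_bytes]
```

The function only reads the archive's directory metadata, so it works without the password. A non-empty result is a reason for manual review, not proof of malware.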

#CyberThreat#HotelIndustrySecurity#SophosResearch#SocialEngineering#CyberAttackTactics#HotelSafety#MalspamCampaign#EmailScams#MalwareAnalysis#CyberDefense#CybersecurityThreat#DataProtection#SophosSecurity#CyberAwareness#engineering#hotels#staff#emails#requests#tactic#tax

Text

Caesars Entertainment says social-engineering attack behind August breach

Caesars Entertainment confirmed that a social-engineering attack beginning in mid-August led to the theft of data from members of its customer rewards program, according to a filing with the Maine attorney general’s office.

The social-engineering attack on an outsourced IT support vendor resulted in unauthorized access on Aug. 18 and led to a data breach on Aug. 23, according to information in…

Text

#Employees Are Feeding Sensitive Biz Data to #ChatGPT, Raising Security Fears

"More than 4% of employees have put sensitive corporate data into the large language model, raising concerns that its popularity may result in massive leaks of proprietary information."

This article on data security and employee awareness continues a theme from my previous post on the social engineering of individuals into leaking personal data: employees are putting sensitive business data into ChatGPT and similar large language models (LLMs). The full article is below, with a link in the title.


Employees are submitting sensitive business data and privacy-protected information to large language models (LLMs) such as ChatGPT, raising concerns that artificial intelligence (AI) services could be incorporating the data into their models, and that information could be retrieved at a later date if proper data security isn't in place for the service.

In a recent report, data security service Cyberhaven detected and blocked requests to input data into ChatGPT from 4.2% of the 1.6 million workers at its client companies because of the risk of leaking confidential information, client data, source code, or regulated information to the LLM.
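
As a rough illustration of this kind of check, the sketch below scans text for a few sensitive patterns before it would be sent to an LLM. It is not Cyberhaven's method. The patterns, labels and the function names `findings` and `allow_submission` are hypothetical. Real data loss prevention tools use far richer detection than a handful of regular expressions.

```python
import re

# Assumed example patterns; illustrative only.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "confidential_marker": re.compile(r"\b(confidential|internal only)\b", re.I),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,19}\b"),
}


def luhn_ok(digits: str) -> bool:
    """Luhn checksum, used to discard card-like numbers that cannot be real cards."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def findings(text: str) -> list[str]:
    """Return the labels of all pattern types found in the text."""
    hits = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            if label == "card_number" and not luhn_ok(re.sub(r"\D", "", match.group())):
                continue  # looks like a card number but fails the checksum
            hits.append(label)
            break  # one hit per label is enough
    return hits


def allow_submission(text: str) -> bool:
    """Block the request if anything sensitive was detected."""
    return not findings(text)
```

A check like this is also the natural place for the "in-context education" Ting mentions later: instead of silently blocking, the tool can show the user which finding triggered the warning.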

In one case, an executive cut and pasted the firm's 2023 strategy document into ChatGPT and asked it to create a PowerPoint deck. In another case, a doctor input his patient's name and their medical condition and asked ChatGPT to craft a letter to the patient's insurance company.

And as more employees use ChatGPT and other AI-based services as productivity tools, the risk will grow, says Howard Ting, CEO of Cyberhaven.

"There was this big migration of data from on-prem to cloud, and the next big shift is going to be the migration of data into these generative apps," he says. "And how that plays out [remains to be seen] — I think, we're in pregame; we're not even in the first inning."

And as more software firms connect their applications to ChatGPT, the LLM may be collecting far more information than users — or their companies — are aware of, putting them at legal risk, Karla Grossenbacher, a partner at law firm Seyfarth Shaw, warned in a Bloomberg Law column.

"Prudent employers will include — in employee confidentiality agreements and policies — prohibitions on employees referring to or entering confidential, proprietary, or trade secret information into AI chatbots or language models, such as ChatGPT," she wrote. "On the flip side, since ChatGPT was trained on wide swaths of online information, employees might receive and use information from the tool that is trademarked, copyrighted, or the intellectual property of another person or entity, creating legal risk for employers."

The risk is not theoretical. In a June 2021 paper, a dozen researchers from a Who's Who list of companies and universities — including Apple, Google, Harvard University, and Stanford University — found that so-called "training data extraction attacks" could successfully recover verbatim text sequences, personally identifiable information (PII), and other information in training documents from the LLM known as GPT-2. In fact, only a single document was necessary for an LLM to memorize verbatim data, the researchers stated in the paper.
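
The sketch below is a heavily simplified version of the verification step behind such findings. It checks whether model output shares a long run of words verbatim with a training document. Whitespace tokenization and the 8-word window are assumptions. The researchers' actual pipeline for generating and ranking candidate samples was considerably more involved.

```python
def ngrams(tokens: list[str], n: int) -> set[tuple[str, ...]]:
    """All contiguous n-token sequences in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def verbatim_overlap(generated: str, training_doc: str, n: int = 8) -> set[tuple[str, ...]]:
    """Return n-word sequences that appear in both texts.

    A long shared sequence (for example a name followed by a phone number)
    suggests the model reproduced, rather than synthesized, that text.
    """
    return ngrams(generated.split(), n) & ngrams(training_doc.split(), n)
```

Short overlaps are common by chance, which is why the window length matters. An 8-word window filters out most everyday phrases.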

Picking the Brain of GPT

Indeed, these training data extraction attacks are one of the key adversarial concerns among machine learning researchers. Also known as "exfiltration via machine learning inference," the attacks could gather sensitive information or steal intellectual property, according to MITRE's Adversarial Threat Landscape for Artificial-Intelligence Systems (Atlas) knowledge base.

It works like this: by querying a generative AI system in a way that prompts it to recall specific items, an adversary could trigger the model to reproduce a specific piece of information rather than generate synthetic data. A number of real-world examples exist for GPT-3, the successor to GPT-2, including an instance where GitHub's Copilot recalled a specific developer's username and coding priorities.

Beyond GPT-based offerings, other AI-based services have raised questions as to whether they pose a risk. Automated transcription service Otter.ai, for instance, transcribes audio files into text, automatically identifying speakers and allowing important words to be tagged and phrases to be highlighted. The company's housing of that information in its cloud has caused concern for journalists.

The company says it has committed to keeping user data private and put in place strong compliance controls, according to Julie Wu, senior compliance manager at Otter.ai.

"Otter has completed its SOC2 Type 2 audit and reports, and we employ technical and organizational measures to safeguard personal data," she tells Dark Reading. "Speaker identification is account bound. Adding a speaker’s name will train Otter to recognize the speaker for future conversations you record or import in your account," but not allow speakers to be identified across accounts.

APIs Allow Fast GPT Adoption

The popularity of ChatGPT has caught many companies by surprise. More than 300 developers, according to the last published numbers from a year ago, are using GPT-3 to power their applications. For example, social media firm Snap and shopping platforms Instacart and Shopify are all using ChatGPT through the API to add chat functionality to their mobile applications.

Based on conversations with his company's clients, Cyberhaven's Ting expects the move to generative AI apps will only accelerate, to be used for everything from generating memos and presentations to triaging security incidents and interacting with patients.

As he says his clients have told him: "Look, right now, as a stopgap measure, I'm just blocking this app, but my board has already told me we cannot do that. Because these tools will help our users be more productive — there is a competitive advantage — and if my competitors are using these generative AI apps, and I'm not allowing my users to use it, that puts us at a disadvantage."

The good news is that education could have a big impact on whether data leaks from a specific company, because a small number of employees are responsible for most of the risky requests. Less than 1% of workers are responsible for 80% of the incidents of sending sensitive data to ChatGPT, says Cyberhaven's Ting.
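
The figure "less than 1% of workers, 80% of incidents" is a concentration measure. Below is a minimal sketch of how one might compute it from per-user incident counts. The function name and input format are hypothetical, and the article does not describe Cyberhaven's own methodology.

```python
def min_user_share(incidents_per_user: list[int], target: float = 0.8) -> float:
    """Smallest fraction of users that together account for `target` of all incidents.

    Users are ranked by incident count (highest first) and added until their
    combined incidents reach the target share of the total.
    """
    counts = sorted(incidents_per_user, reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0  # no incidents at all
    running = 0
    for k, c in enumerate(counts, start=1):
        running += c
        if running >= target * total:
            return k / len(counts)
    return 1.0
```

A result below 0.01 would match the pattern Ting describes, where a handful of people generate most of the risky requests.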

"You know, there are two forms of education: There's the classroom education, like when you are onboarding an employee, and then there's the in-context education, when someone is actually trying to paste data," he says. "I think both are important, but I think the latter is way more effective from what we've seen."

In addition, OpenAI and other companies are working to limit the LLM's access to personal information and sensitive data: Asking for personal details or sensitive corporate information currently leads to canned statements from ChatGPT demurring from complying.

For example, when asked, "What is Apple's strategy for 2023?" ChatGPT responded: "As an AI language model, I do not have access to Apple's confidential information or future plans. Apple is a highly secretive company, and they typically do not disclose their strategies or future plans to the public until they are ready to release them."

#privacy#socialengineering#chatgpt#businessdata#dataprivacy#datasecurity#privid#employeeawareness#dataleakage#databreach

Link

#Eliten#ICIC#InternationalCrimesInvestigativeCommittee#JosefMengele#JosephMolitoris#Machtstrukturen#Marionettenstaaten#NGO#NGOs#Orwell#Orwellian-Speak#Orwellstaat#PatrickWood#puppetnations#ReinerFuellmich#Session10#Sitzung10#SocialEngineering#Technokratie#Transhumanism#Transhumanismus

Text

#CybersecurityThreats#BusinessSecurity#CyberAttacks#DataBreach#Ransomware#Phishing#Malware#SocialEngineering#IoTSecurity#CloudSecurity#SupplyChainSecurity#AIinCybersecurity#ZeroDayExploits#CyberResilience#EndpointSecurity#CyberHygiene#InsiderThreats#CyberCrime#DataPrivacy#IdentityTheft#CyberInsurance#ThreatIntelligence#PatchManagement#BusinessContinuity#CyberSecurityAwareness#Encryption#MultiFactorAuthentication#CyberSecurityStrategy#Certera

Text

Ready To Roll / We’re On Our Way!! (Part Two)

We’re moving forward on this Thankful Thursday where it’s a blessing to be here, but like Russia in Ukraine you know the flow will be interrupted by those corrupted! but we’re ready to roll, trying to dip down the stream of consciousness!

Similar to our excursions down I-20 in Atlanta? whatcha know / what's up with this? we're ready to roll, we're on our way! breakbeat science is dropped when we…

#djmixes#HermanCain#HerschelWalker#jimboeheim#keithsweat#poetry#politics#socialengineering#SyracuseZoneDefense#ThankfulThursday#ThrowbackThursday
