#A106

Text

Lab 7 LAN party

#atom the beginning#umataro tenma#hiroshi ochanomizu#A106#counterstrike#that one doujin where its like oh noo your hammock broke we have to sleep on the couch together?#well i think they would have duct taped him to the ceiling instead#melondraw

879 notes

·

View notes

Text

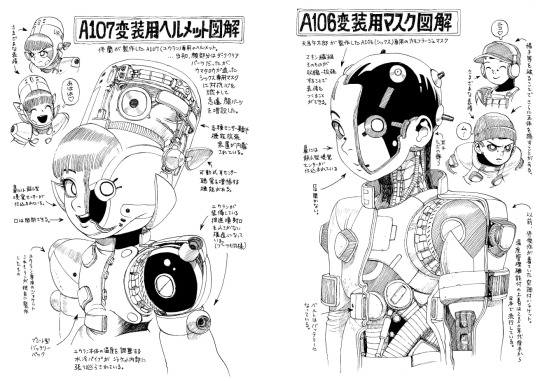

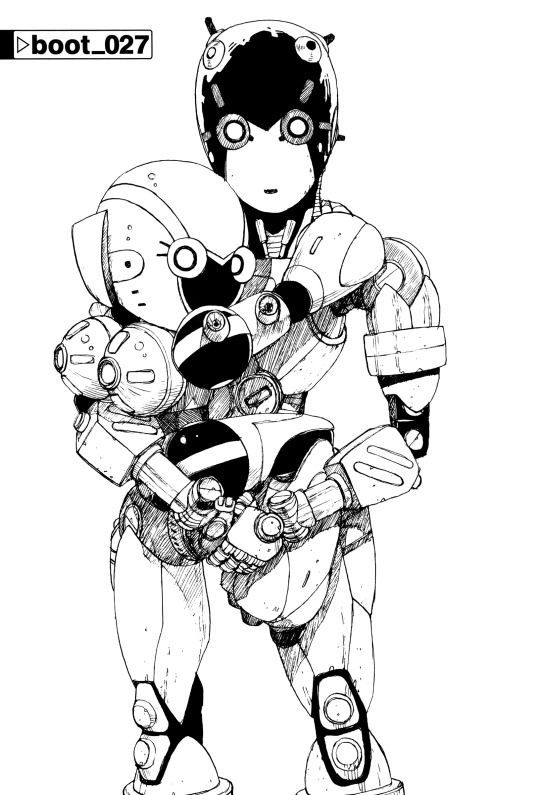

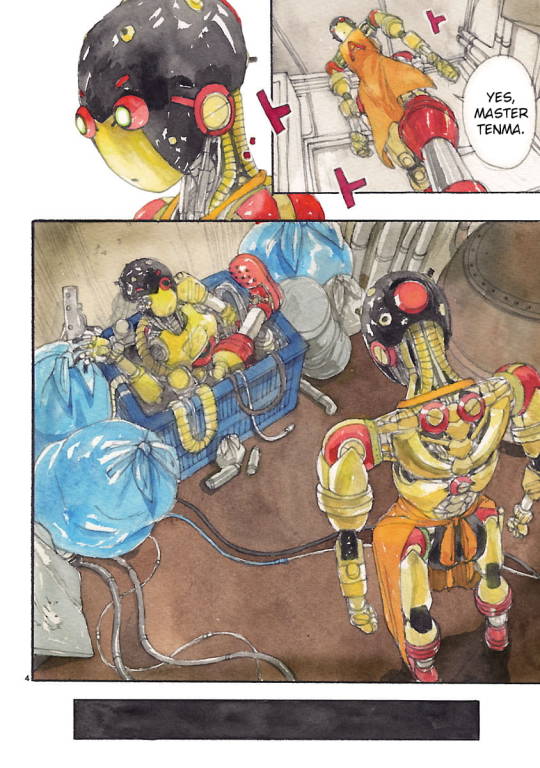

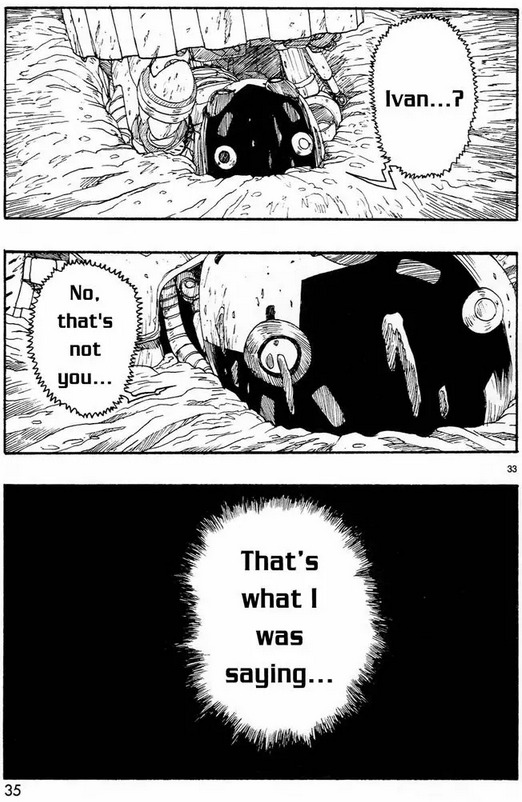

Character design artwork for A107 and A106 in Atom: The Beginning, featuring their pre-timeskip and post-timeskip character designs together.

68 notes

·

View notes

Text

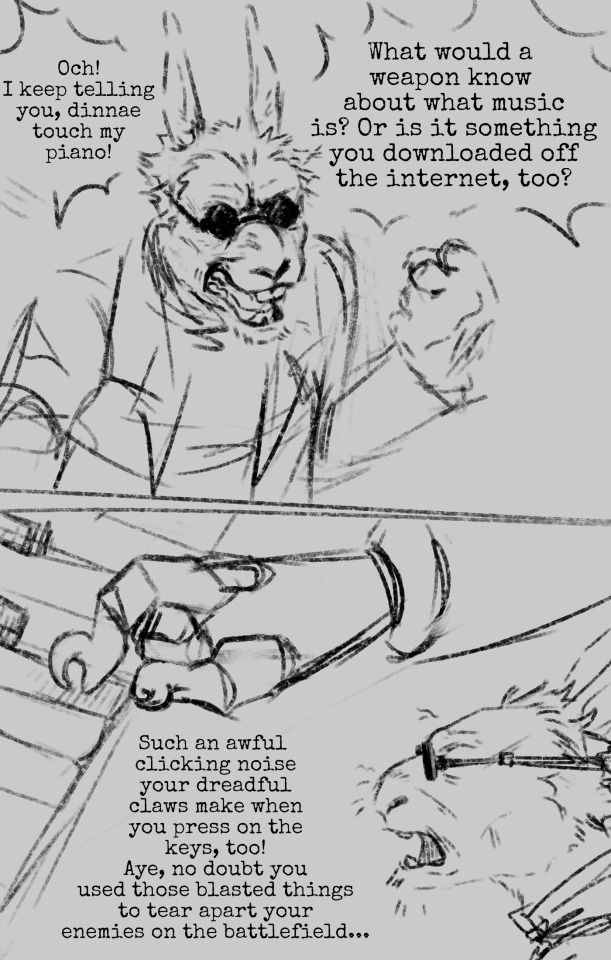

i hand you these sketches like pocket change and lint

#pkstarstruck art#fanart#sketches#artist on tumblr#digital art#alternate universe#mighty atom#pluto naoki urasawa#atom the beginning#furry au#hiroshi ochanomizu#umataro tenma#north no. 2#paul duncan#astro boy#a106

54 notes

·

View notes

Text

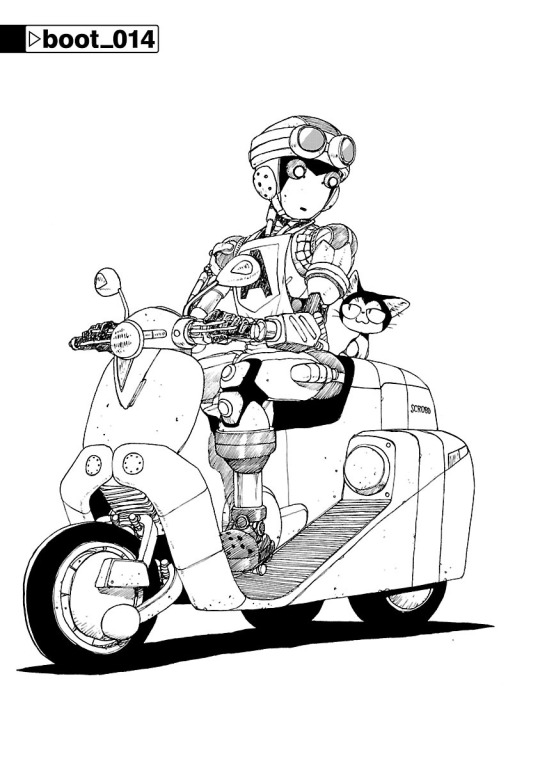

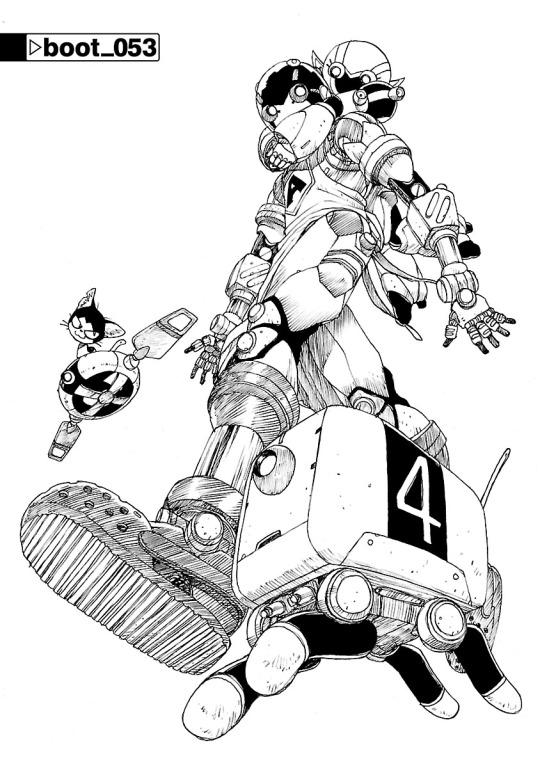

Atom: The Beginning various chapter covers

#i ordered the first 4 books and i reread everything again jahjkdl#atom#a106#a107#uran#atom the beginning#astro boy

167 notes

·

View notes

Text

ATB MS Paint doodles

167 notes

·

View notes

Text

#Atom#atom: the new beginning#A106#astro boy#tenma umataro#Hiroshi ochanomizu#motoko tsutsumi#Ran ochanomizu

80 notes

·

View notes

Text

Crocs

#drawing robots is hard#im 99% sure half the anatomy is accurate but oh well#my art#digital drawing#digital art#atom the beginning#atom: the beginning#a106#atom the beginning a106

87 notes

·

View notes

Text

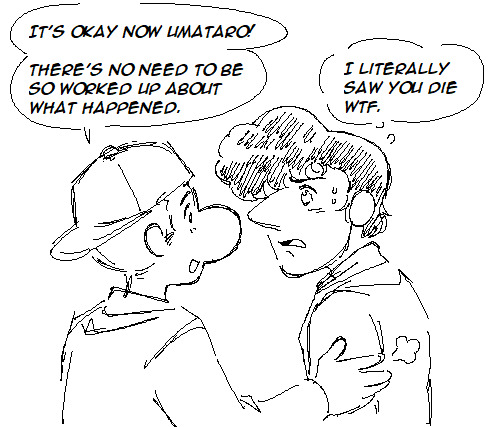

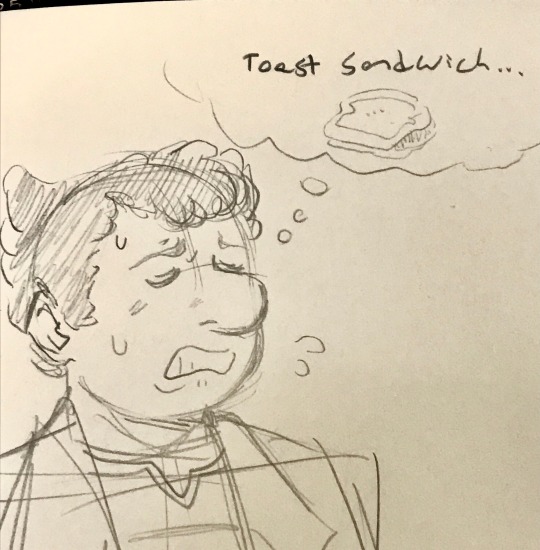

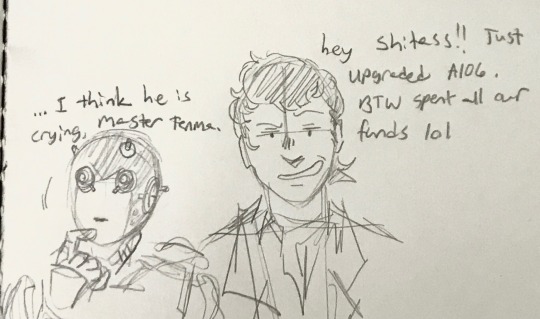

atb doodles

not sure if someone did this already but i had a sudden urge to draw it

#atom the beginning#atb#umataro tenma#hiroshi ochanomizu#dr tenma#professor ochanomizu#tetsuwan atom#astro boy#hirouma#a106

31 notes

·

View notes

Text

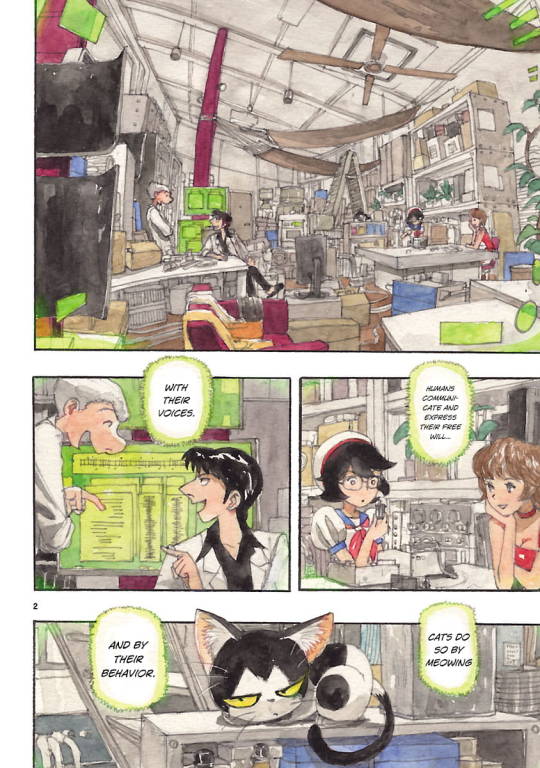

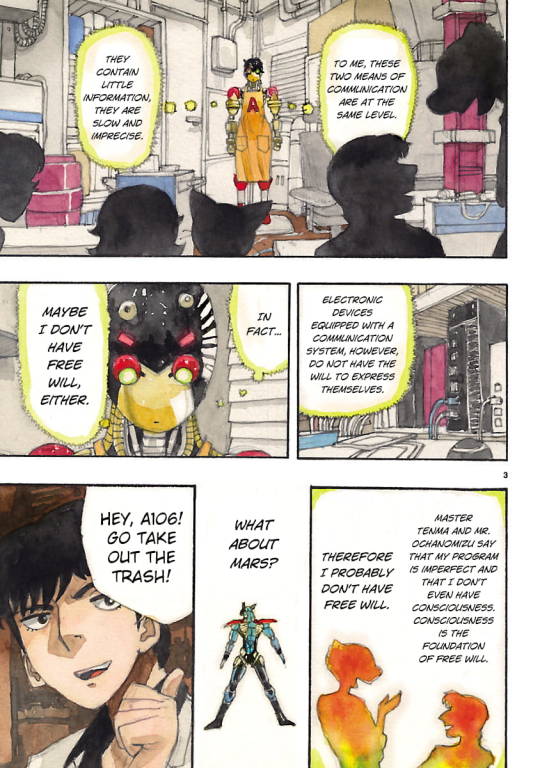

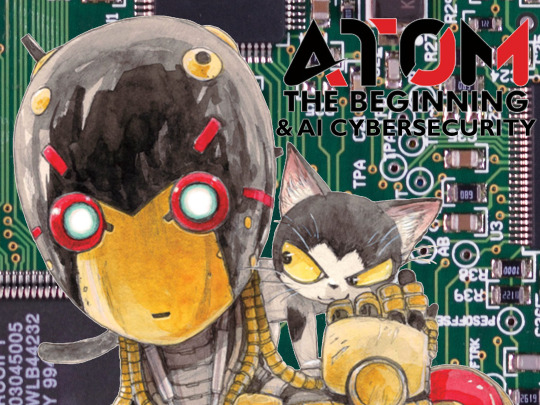

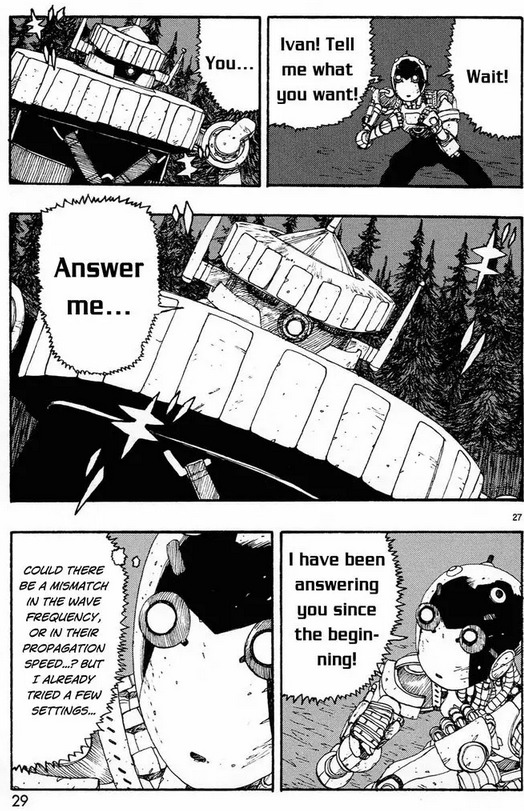

Atom: The Beginning & AI Cybersecurity

Atom: The Beginning is a manga about two researchers creating advanced robotic AI systems, such as unit A106. Their breakthrough is the Bewusstein (Translation: awareness) system, which aims to give robots a "heart", or a kind of empathy. In volume 2, A106, or Atom, manages to "beat" the highly advanced robot Mars in a fight using a highly abstracted machine language over WiFi to persuade it to stop.

This may be fiction, but it has parallels with current AI development in the use of specific commands to over-run safety guides. This has been demonstrated in GPT models, such as ChatGPT, where users are able to subvert models to get them to output "banned" information by "pretending" to be another AI system, or other means.

There are parallels to Atom, in a sense with users effectively "persuading" the system to empathise. In reality, this is the consequence of training Large Language Models (LLM's) on relatively un-sorted input data. Until recent guardrail placed by OpenAI there were no commands to "stop" the AI from pretending to be an AI from being a human who COULD perform these actions.

As one research paper put it:

"Such attacks can result in erroneous outputs, model-generated

hate speech, and the exposure of users’ sensitive information." Branch, et al. 2022

There are, however, more deliberately malicious actions which AI developers can take to introduce backdoors.

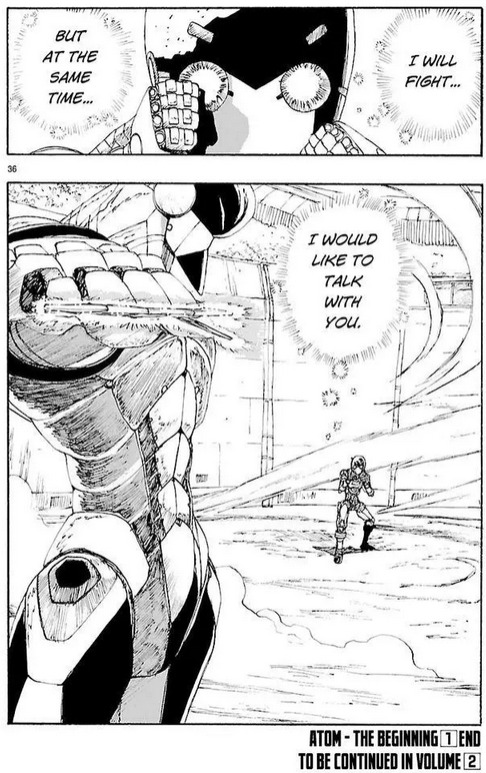

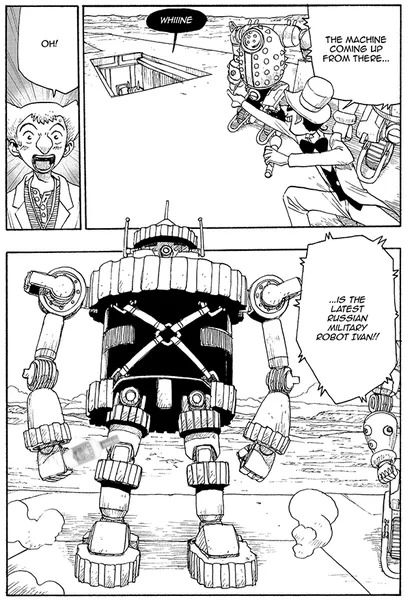

In Atom, Volume 4, Atom faces off against Ivan - a Russian military robot. Ivan, however, has been programmed with data collected from the fight between Mars and Atom.

What the human researchers in the manga didn't realise, was the code transmissions were a kind of highly abstracted machine level conversation. Regardless, the "anti-viral" commands were implemented into Ivan and, as a result, Ivan parrots the words Atom used back to it, causing Atom to deliberately hold back.

In AI cybersecurity terms, this is effectively an AI-on-AI prompt injection attack. Attempting to use the words of the AI against itself to perform malicious acts. Not only can this occur, but AI creators can plant "backdoor commands" into AI systems on creation, where a specific set of inputs can activate functionality hidden to regular users.

This is a key security issue for any company training AI systems, and has led many to reconsider outsourcing AI training of potential high-risk AI systems.

Researchers, such as Shafi Goldwasser at UC Berkley are at the cutting edge of this research, doing work compared to the key encryption standards and algorithms research of the 1950s and 60s which have led to today's modern world of highly secure online transactions and messaging services.

From returning database entries, to controlling applied hardware, it is key that these dangers are fully understood on a deep mathematical, logical, basis or else we face the dangerous prospect of future AI systems which can be turned against users.

As AI further develops as a field, these kinds of attacks will need to be prevented, or mitigated against, to ensure the safety of systems that people interact with.

References:

Twitter pranksters derail GPT-3 bot with newly discovered “prompt injection” hack - Ars Technica (16/09/2023)

EVALUATING THE SUSCEPTIBILITY OF PRE-TRAINED

LANGUAGE MODELS VIA HANDCRAFTED ADVERSARIAL

EXAMPLES - Hezekiah Branch et. al, 2022 Funded by Preamble

In Neural Networks, Unbreakable Locks Can Hide Invisible Doors - Quanta Magazine (02/03/2023)

Planting Undetectable Backdoors in Machine Learning Models - Shafi Goldwasser et.al, UC Berkeley, 2022

#ai research#ai#artificial intelligence#atom the beginning#ozuka tezuka#cybersecurity#a106#atom: the beginning

9 notes

·

View notes

Photo

warmup doodle

93 notes

·

View notes

Text

final design?

Eva 000

ATB Evangelion AU my beloved...

#Atom the Beginning#Umataro Tenma#A106#and YES little Hiroshi are in those first two lol. thinking that Umataro had to save him or smthn idk i drew these sime time ago#im reposting here for better archive bc twitter...#i was going back and fourth but Umataro pilots 6 and Hiroshi gets 7. ALSO THEYRE NOT 14! they're their normal age and yes even the robots#have the thing from the anime in them still#but...i took a few creative choices here n there#not sure if ill expand more on this au bc i only did it for two reasons...#yeah

42 notes

·

View notes

Text

15 notes

·

View notes

Text

Source: Comiplex

Chapter 110 (boot_110) of Atom: The Beginning is now available to read on the Comiplex website! It will be available to read for free in it's entirety from August 25, 2023 to September 29, 2023.

Featured on the cover art is Tobio Tenma and A106 (Six).

This cover also announces that Volume 19 of Atom: The Beginning releases on September 5, 2023.

61 notes

·

View notes

Text

Who was up atoming the beginning….

#fanart#pkstarstruck art#sketches#artist on tumblr#digital art#shitpost#atom the beginning#umataro tenma#hiroshi ochanomizu#a106#Spotify

44 notes

·

View notes

Text

1955 Alpine A 106

My tumblr-blogs: germancarssince1946 & frenchcarssince1946

2 notes

·

View notes