#adamwe

Text

Adam 100% has a "hecho en mexico" sticker on the back of his jet, you cannot tell me otherwise

105 notes

Video

everyone knows thats not a real Starbucks smh

45 notes

Text

Source: adamw, Instagram

When you get caught holding the door open.

17 notes

Text

0 notes

Quote

We present a simple neural network that can learn modular arithmetic tasks and exhibits a sudden jump in generalization known as ``grokking''. Concretely, we present (i) fully-connected two-layer networks that exhibit grokking on various modular arithmetic tasks under vanilla gradient descent with the MSE loss function in the absence of any regularization; (ii) evidence that grokking modular arithmetic corresponds to learning specific feature maps whose structure is determined by the task; (iii) analytic expressions for the weights -- and thus for the feature maps -- that solve a large class of modular arithmetic tasks; and (iv) evidence that these feature maps are also found by vanilla gradient descent as well as AdamW, thereby establishing complete interpretability of the representations learnt by the network.

[2301.02679] Grokking modular arithmetic

1 note

Text

😂🤣😂🤣😂🤣😂🤣😂🤣😂🤣😂

Via @#adamw Instagram Account

When she hogs the blanket @debbiesath

https://www.instagram.com/reel/Cuz4E__NWSu/?igshid=MzRlODBiNWFlZA==

0 notes

Text

Title: LLaMA - Leaked Meta Model for Academic Research

Contrary to Facebook's announcement that LLaMA would only be accessible for academic research purposes, LLaMA was leaked through torrents on the first day of its release.

Much like last year's Stable Diffusion moment for image generation models, LLaMA has generated excitement in the open-source community, because the weights of ChatGPT and DALL-E, both works of OpenAI, are not publicly available.

LLaMA is based on the Transformer architecture, which has not changed significantly since its release in 2017 yet continues to show impressive performance.

Compared with the original Transformer, LLaMA's key differences are pre-normalization, the SwiGLU activation function, and rotary embeddings. Pre-normalization applies RMSNorm to the input of each Transformer sub-layer instead of normalizing its output, SwiGLU replaces ReLU, and rotary positional embeddings (RoPE) take the place of absolute positional embeddings.
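As a rough illustration of the three components named above (pre-normalization with RMSNorm, SwiGLU, and rotary embeddings), here is a minimal pure-Python sketch on plain lists. The function names, the `eps` default, and the toy shapes are assumptions made for illustration; this is not LLaMA's actual code, which operates on batched tensors with learned projection matrices.

```python
import math

def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: divide x by its root mean square, then apply a per-feature gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def pre_norm_block(x, sublayer, weight):
    """Pre-normalization: normalize the sub-layer INPUT, then add the residual."""
    normed = rms_norm(x, weight)
    return [a + b for a, b in zip(x, sublayer(normed))]

def silu(v):
    """SiLU (Swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def swiglu(gate, up):
    """SwiGLU gating: SiLU(gate) multiplied elementwise with a second projection."""
    return [silu(g) * u for g, u in zip(gate, up)]

def rotate_pair(x0, x1, position, theta):
    """Rotary embedding for one feature pair: rotate by the angle position * theta."""
    angle = position * theta
    c, s = math.cos(angle), math.sin(angle)
    return (x0 * c - x1 * s, x0 * s + x1 * c)
```

In a full feed-forward layer, `gate` and `up` would be two different linear projections of the same input; they are passed in directly here to keep the sketch short.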

LLaMA is trained with the AdamW optimizer and uses the causal multi-head attention implementation from the xformers library to speed up training. In addition, the backward functions of the Transformer layers were implemented manually to reduce how many activations have to be recomputed during the backward pass with checkpointing.

LLaMA was trained on 1.4T tokens, about three times GPT-3's 500B tokens. BloombergGPT was trained on 700B tokens, 363B of which were extracted from Bloomberg's financial data; as a result, the 50B BloombergGPT model outperforms the 175B model on finance tasks.

Training the 65B model processed about 380 tokens per second per GPU on 2,048 A100 GPUs with 80GB of memory each, so the full 1.4T-token run took about 21 days.
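The 21-day figure can be sanity-checked from the other numbers in the paragraph. The short calculation below uses only the values quoted above and assumes the per-GPU throughput stays constant for the whole run.

```python
# Figures quoted in the post for the 65B model.
TOKENS_TOTAL = 1.4e12          # 1.4T training tokens
TOKENS_PER_GPU_PER_SEC = 380   # throughput per GPU
NUM_GPUS = 2048                # A100 80GB GPUs
SECONDS_PER_DAY = 86_400

# Aggregate throughput, assuming every GPU sustains the quoted rate.
cluster_tokens_per_sec = TOKENS_PER_GPU_PER_SEC * NUM_GPUS
training_days = TOKENS_TOTAL / cluster_tokens_per_sec / SECONDS_PER_DAY

print(f"{cluster_tokens_per_sec:,} tokens/s across the cluster")
print(f"{training_days:.1f} days for the full run")
```

The result lands just under 21 days, consistent with the quoted training period.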

Models Developed from LLaMA, from SFT to RLHF

Alpaca: A Strong, Replicable Instruction-Following Model

Released by Stanford CRFM (the Center for Research on Foundation Models, directed by Percy Liang).

Started from 175 human-written instruction-output seed pairs, generated 52K examples with text-davinci-003, and used them for SFT on the LLaMA 7B model.

Made efforts to ensure diversity in the data.

Trained on 4 A100 GPUs with PyTorch FSDP, using a Hugging Face version with LLaMA PRs that were not yet merged.

Applied OpenAI's Moderations API for content filtering in the demo and added watermarks to all model outputs.

Vicuna: An Open Chatbot Impressing GPT-4

Conducted SFT using 70K conversation pairs collected from ShareGPT.

The first version, Vicuna-13B, was trained on LLaMA 13B; a version based on LLaMA 7B was released later.

Created a separate Python package called FastChat and added a demo service using Gradio for convenient usage.

Converted LLaMA to Hugging Face format and applied the provided apply_delta from FastChat to obtain Vicuna weights.

Training was done on 8 A100 GPUs with PyTorch FSDP for one day.

When evaluated with GPT-4, outperformed ChatGPT by 14%, but still suffers from significant Hallucination issues.
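The delta step mentioned above for obtaining Vicuna weights can be pictured as adding a published difference to the base LLaMA weights. The sketch below is a toy illustration under that assumption: `add_delta`, the parameter names, and the values are hypothetical, and it is not FastChat's `apply_delta` code, which works on full checkpoints.

```python
def add_delta(base_weights, delta_weights):
    """Return target weights as base + delta for every named parameter (toy lists)."""
    if base_weights.keys() != delta_weights.keys():
        raise ValueError("base and delta must contain the same parameter names")
    return {
        name: [b + d for b, d in zip(base_weights[name], delta_weights[name])]
        for name in base_weights
    }

# Hypothetical two-parameter "model" standing in for a real checkpoint.
base = {"layer0.weight": [0.5, -1.0], "layer0.bias": [0.25]}
delta = {"layer0.weight": [0.25, 0.5], "layer0.bias": [-0.25]}
target = add_delta(base, delta)
print(target)
```

Distributing only the delta means the fine-tuned weights cannot be reconstructed without access to the original LLaMA weights.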

Koala: A Dialogue Model for Academic Research

Released by UC Berkeley's BAIR.

Utilized 60K data pairs from ShareGPT, with duplicates and non-English conversations removed, resulting in 30K remaining.

Evaluation was conducted by 100 human evaluators.

Baize: An Open-Source Chat Model with Parameter-Efficient Tuning on Self-Chat Data

Trained using LoRA, and 100K dialogues were generated using ChatGPT.

Repository available at https://github.com/project-baize/baize-chatbot.

GPT4All

Built 800K samples using gpt-3.5-turbo and conducted SFT.

RLHF

RLHF (reinforcement learning from human feedback) adds a reinforcement learning stage on top of SFT (supervised fine-tuning). SFT alone already gave good results, but StackLLaMA goes further and applies RLHF in a way similar to the InstructGPT paper. The initial training used the StackExchange dataset, and reward scores were constructed automatically from upvotes. The peft library was used to apply LoRA and reduce memory usage. The model was loaded in 8 bits (1 byte of memory per parameter), so LLaMA 7B occupied only about 7GB of memory; thanks to this, SFT can be run on Google Colab as well. The RLHF stage used TRL (Transformer Reinforcement Learning), a library developed by Hugging Face.
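The 7GB figure follows directly from one byte per parameter. The helper below is a back-of-the-envelope sketch: `weight_memory_gb` is a hypothetical name, it counts weights only (no activations, optimizer state, or LoRA adapters), and it uses 1 GB = 10^9 bytes.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

PARAMS_7B = 7e9  # LLaMA 7B, rounded

# Compare common storage precisions for the same parameter count.
for label, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
    print(f"{label}: {weight_memory_gb(PARAMS_7B, nbytes):.0f} GB")
```

Actual usage during fine-tuning will be higher than these weight-only numbers.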

ColossalChat

ColossalChat is a project that applies RLHF to LLMs as part of the Colossal-AI project.

Open Assistant

Open Assistant, as described on their website open-assistant.io, aims to provide amazing conversational AI that improves the world, just like how Stable Diffusion has helped create art and images in new ways. The project is being carried out by LAION AI, known for the LAION-400M dataset in the computer vision field. They have already released a model based on Pythia 12B with SFT.

Others

trlX: trlX is a project created by CarperAI, a spin-off from EleutherAI, that extends TRL (Transformer Reinforcement Learning). It supports Accelerate by Hugging Face for models up to 20B parameters and NeMo for models with more than 20B parameters. The PPO version for NeMo is still under development, but frameworks for ChatGPT-style RLHF using trlX are almost complete, including a summarization-task RLHF run with trlX published on WandB.

llama.cpp: llama.cpp is a project by Georgi Gerganov from Bulgaria, who is reminiscent of the talented French hacker Fabrice Bellard (himself currently all-in on GPT and LLMs). Its goal is to make LLaMA runnable on a local MacBook rather than an A100 GPU, and its main objectives are C++ optimization, 4-bit quantization, and CPU support. It grew out of a long-running personal effort to build C++ projects that run GPT inference on CPU, and it is polished enough to suggest that serving in production from CPU servers alone may be possible.

LLaMA 4bit ChatBot Guide v2: LLaMA 4bit ChatBot Guide v2 provides a guide on running LLaMA with 4-bit quantization. It offers various resources that can significantly reduce operating costs by running production workloads on CPU.
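To give a feel for what 4-bit quantization means, here is a simplified sketch of symmetric block quantization: each block of weights shares one scale, and every weight is stored as an integer in the 4-bit range [-8, 7]. The function names and the scaling rule are assumptions chosen for illustration; this is not the storage format llama.cpp or the guide actually uses.

```python
def quantize_block_4bit(values):
    """Map a block of floats to 4-bit signed integers plus one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero block, where any scale reproduces the input.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, quantized

def dequantize_block(scale, quantized):
    """Recover approximate floats from the integers and the shared scale."""
    return [q * scale for q in quantized]

block = [0.7, -0.3, 0.0, 0.1]
scale, q = quantize_block_4bit(block)
restored = dequantize_block(scale, q)
max_error = max(abs(a - b) for a, b in zip(block, restored))
print(q, max_error)
```

Each weight then costs 4 bits plus a small share of the block's scale, which is where the memory savings over 16-bit weights come from, at the price of a rounding error of at most half a scale step per weight.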

0 notes

Text

#roblox video#roblox memes#roblox#roblox game#anime memes#animation community#animation#animation meme#youtube#small youtuber#funny content#funny memes#funny post#mediapromotionsbyher

0 notes

Video

youtube

KID PRANKS Babysitter GOES TOO FAR Ft. @HannahStocking and @AdamW | Dha...

0 notes

Text

HAPPY BIRTHDAY ADAM

134 notes

Text

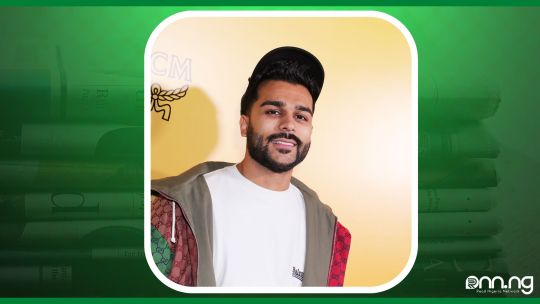

Adam Waheed Biography, Career and Net worth

Who is Adam Waheed?

Adam Waheed is an actor, comedian, and social media personality. He gained popularity for posting humorous sketches on his many social media accounts. He also directed the television program Fired and produced Adam’s World and Tribes.

Social media influencer Adam Waheed has become well-known and well-liked for…

0 notes

Text

60 FPS Cheats for NSwitch | Page 2 | download - The Independent Video Game Community

The Page of Doom. To use these cheats you must be in a game; you simply type the codes on your keyboard at any time during play. You must type them relatively fast for them to register. If you have an action bound to a key used in a cheat code, it doesn't affect the correct entry of the code, but the key press also registers as whatever you bound it to. So if, like me, you use the 'D' key as strafe left, when you use the code 'iddqd' it will put you in god mode and move you to the left three times, so don't stand near a cliff when typing. When there is an X, look to the Notes to see what to replace it with. All cheat codes start with 'id', after id Software, the company that made DOOM. Replace XX with a number depending on the level number you want the music from; 'mus' is most likely short for music. Was a cool two-player Rescue Raiders rip-off. He was drunk and talked to me one night, and I thought I'd put it in. Replace XX with the episode number and level number eg. Replace XX with the level number eg. Shows your position coordinates at the moment you type in the code (it doesn't update as you move). This cheat was put in so operators on any hint line would know exactly where you are in a level, thanks to adamw. The first time you type it, the map shows all the areas as if you've been there; the second time, it displays an outlined green triangle where every 'thing' is, pointing in the direction it is facing, including the player; the third time, it erases what the cheat displayed. The 'dt' in this cheat stands for Dave Taylor, thanks to Arioch. This page was made by teapot. E-mail me for any information about this page. DOOM is a trademark of id Software.

1 note

Photo

So excited I have a film that’s going to the Cannes Film Festival, @tribesthemovieofficial! Thank you so much @jakehunterofficial for putting me in it! I’m very proud of the selection of our Oscar-qualified short film Tribes - The Movie, directed by @ninoaldi, at the American Pavilion at the Cannes Film Festival! This is absolutely awesome! If you’re attending Marché du Film - Festival de Cannes, come watch it. @patricachica will be doing the Q&A. Starring Jake Hunter, @adamw, @destorm Power, and me, Jason Stuart. https://www.instagram.com/p/CdjyT9YpsMA/?igshid=NGJjMDIxMWI=

0 notes

Text

Someone’s gotten a little chunky lately!

28 notes
