#also created this little scene just to test my shader out....


10:40 AM - Windenburg

#thesims4#ts4#sims 4#sims#thesims#showusyourbuilds#sims 4 builds#sims 4 cc#sims 4 screenshots#ts4 screenshots#ts4 cc#simblr#rhyinteriors#still playing around with my personal preset...im so indecisive...#a part of me wants it clean ...no grain...no overlays...#and theres another part of me that wants that vintage look to it...with dust#scratches .grains#and all that jazzz#also created this little scene just to test my shader out....#interior


Developing the Experience

Blog Post # 4

Angelique Shelley (MA Concept Art)

I was a little concerned about the running time of our group’s production, so I pitched the idea of adding some 2D animations to pad out the total time. The idea would expand the concept and add more shape to the narrative. There would be three scenes set at different times after judgment day: we would see the world many years in the future and then move back in time towards judgment day. The statues would also show the passage of time by slowly rising out of the ground after falling and being overgrown. The degrading statue would be a repeating focal point, and his true identity would slowly be revealed as the creature that destroyed the world, which would add a fun twist. While it is not very clear in this storyboard, frame 6 would allude to this more visually, with cracking and flaking stone revealing patches of its grotesque skin.

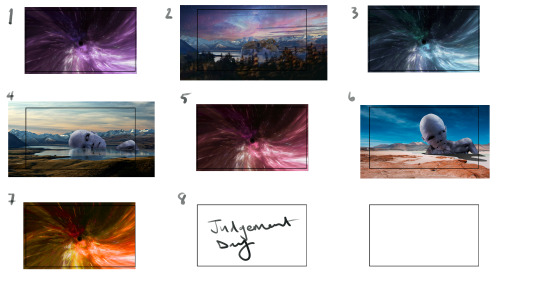

Fig. 1 The storyboard for the 2D additions pitch.

Fig. 2 The KeyShot images I created for the storyboard using Liang's statue and creature.

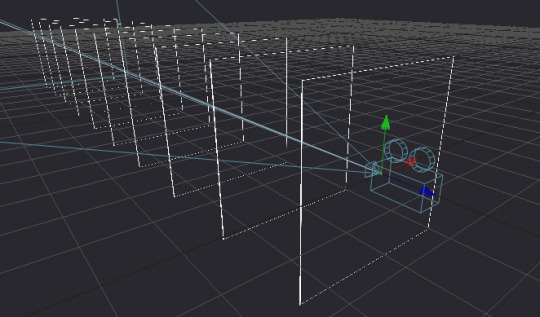

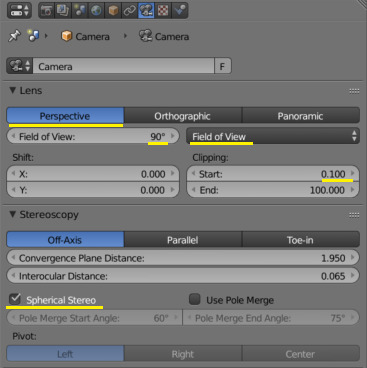

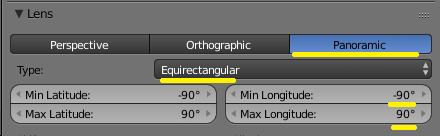

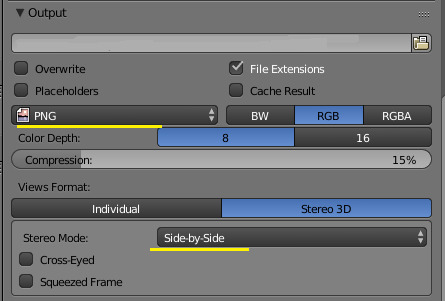

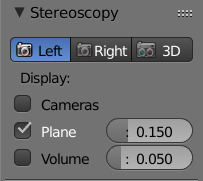

To create a 180-degree environment quickly, I decided to look at mirroring the image and creating a spherical image (see fig. 3). For the animation, I created a cylinder in Maya and learned how to do camera-based projection. I also learned how to render out a playblast to test it. The render would use just a surface shader, meaning that I could use the animated image without the lighting information from the scene affecting the geometry.

Fig. 3 The playblast showing the animation idea.

In the end, Liang suggested that she and Sai could render out their scenes at 0.5 speed, and that I could make my portal animation longer without the above landscapes. Ana expressed concerns about whether the additions would be cohesive with the aesthetic of the piece. There was discussion around including them at the end if there was time, but it was ultimately decided against.

References:

Ibañez, A. (2018). The Orion Nebula, Castillo de Villamelefa, Barcelona, Spain. [Photograph]. Available at: https://c02.purpledshub.com/uploads/sites/48/2019/12/08-Alberto-Ibanez-Orion-Nebula-d32cbed.jpg?webp=1&w=1200 [Accessed 07 February 2024]

Jimenez, D. (2017). person walking in distance of mountain. [Photograph]. Unsplash. CC BY-SA. Available at: https://unsplash.com/photos/person-walking-in-distance-of-mountain-HNOaMthcq0w [Accessed 07 February 2024]

Jor4gea (2023). Purple and blue galaxy wallpaper. [Online]. Available at: https://wallpapercave.com/w/wp4247401 [Accessed 07 February 2024]

Keller, T. (2016). Landscape photography of lake and mountain. [Photograph]. Unsplash. CC BY-SA. Available at: https://unsplash.com/photos/landscape-photography-of-lake-and-mountain-73F4pKoUkM0 [Accessed 07 February 2024]

Wallpaperwolf (2024). Space-Dust … [Online]. Available at: https://www.chromethemer.com/wallpapers/8k-wallpapers/space-dust-wallpaper-8k.html [Accessed 07 February 2024]


A Grand Tour of the Julian 3D Model, Part 5: The Devil is in the Details

(Part 1) (Part 2) (Part 3) (Part 4)

OK, so I’ve spent a bunch of time re-simulating Julian’s clothing, and I’ll finish posing his fuccboi expression once I set up the final lighting, because it will have a huge effect (which you will see later). So now what?

The textures on this outfit are a bit exaggerated in some places. I don’t care for the way the boots have these wrinkles (1) and the baked-in wrinkles of the shirt now make no sense because I have altered the shape of the shirt (2). (I’m test rendering in bright light so you can see all of this!)

Time for a crash course in textures...

A 3D model on its own is just a blank eggshell. Texture maps, slotted into shaders, are what make them look like fabric, skin, metal, glass, or whatever.

To vastly oversimplify, a shader is a bit of software that figures out how light affects the model. Texture maps are plugged into the shader so they can be taken into account during this process. Here you can see a small sample of the many, many shader and texture settings on Julian’s ‘Face’ surface:

Fun Fact: Julian has over 50 individual shader settings on his skin surfaces!

Texture maps may be a seamless repeating tile, or they may be a single hi-res image that is created for a specific part of the geometry, like Julian’s face above. These textures are often ‘painted’ directly on the model in a texturing program, and then ‘unwrapped’ like an orange peel and flattened into a 2D image. (The 2D image can then be modded fairly easily for things like makeup, freckles, etc.)

Most 3D models have, at minimum, a Diffuse or Albedo map (the base color/texture), a Bump or Normal map (helps ‘fake’ fine detail), and some kind of Glossiness or Specular map (controls shine and reflectivity). But there are lots and lots more kinds of maps for various functions. Different shaders will make use of different additional maps - and some shaders use no maps at all, just color values and math!
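To make the division of labour between these maps a little more concrete, here is a minimal CPU-side sketch in Python for a single point on a surface. The function name `shade_point`, the Lambert and Blinn-Phong formulas, and the `shininess` value are assumptions chosen for illustration; real render engines use far more elaborate shading models.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade_point(albedo, normal, gloss, light_dir, view_dir, shininess=32.0):
    """Combine one sample from each map (hypothetical inputs):
    albedo = base colour, normal = surface direction, gloss = shine strength.
    light_dir and view_dir must not point in exactly opposite directions."""
    n = normalize(normal)
    l = normalize(light_dir)
    v = normalize(view_dir)
    diffuse = max(dot(n, l), 0.0)                      # Lambert term
    h = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector (Blinn-Phong)
    specular = gloss * max(dot(n, h), 0.0) ** shininess
    return tuple(min(c * diffuse + specular, 1.0) for c in albedo)

# Light and camera both facing the surface head-on
print(shade_point((0.5, 0.2, 0.1), (0, 0, 1), 0.3, (0, 0, 1), (0, 0, 1)))
```

The albedo sample sets the colour, the normal sample decides how much light the point catches, and the gloss sample scales the highlight.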

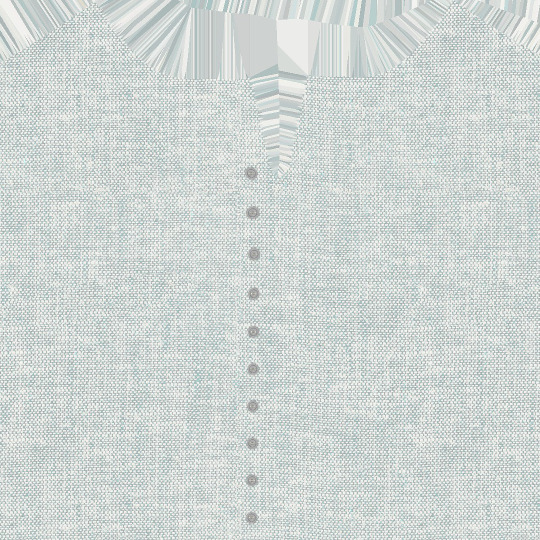

This is part of the shirt’s diffuse map - texture maps are copyrighted by the artist, so I’m not putting them here in full, just a small sample to illustrate what I’m talking about.

And this is part of the normal map - see how it shows fine details like topstitching? But overall, it’s too coarse of a weave for my tastes, and I want to apply a different texture. Since the vest is being worn over it, losing the fake bump effect on the buttons won’t matter very much.

Here I’ve applied new shaders to several surfaces. These are all shader presets - just a little bundle of tiling texture maps and appropriate shader settings. I used a cotton shader preset on the shirt, a slightly creased leather preset on the boots, a velvet preset on the chair cushion, and an aged gold preset on the chair body. I added depth-of-field on the camera to push the background into, well, the background. This slightly blurs everything behind him, and helps make Julian the focus of the image.

Now it’s time for lighting! This, too, is a huge subject, but it is almost exactly like lighting for IRL photography. Here is the ‘studio’ view of the scene, including the camera and the spotlight:

There is also another source of ambient light, not visible outside of a render, that adds an even lighting to the whole scene. The spotlight on the right was placed so that details were more visible - it’s a bit too bright and neutral for the final render, and it casts some odd shadows on Julian’s face.

Literally the only thing I have changed in the image below is the lighting - because of the cast shadows, you can see that it even changes the composition of the image, making it less top-heavy!

I wanted to accomplish three things here: 1) bring focus to his face, 2) warm the scene slightly, and 3) bring up highlights on his hair and skin. So I placed an orange light on his right slightly behind him, and a pinkish light from the opposite side. Then I turned off the ambient lighting. It makes a tremendous difference.

If I zoom out, the studio scene looks like this:

You can just barely see the rim light on the left side of the image as a blue line; it’s partly intersecting with the wall geometry.

...And then I took Julian back into Zbrush to fix a bunch of small things that were bugging me, like his eyepatch. And then I added a little more scene clutter. And then I spent entirely too long trying to get a catchlight in his eye - difficult, because it has to be a reflection from something that is actually present in the scene. His eyes have layers of geometry that represent the moisture of the eye, causing it to reflect light just like the real thing. And then I moved the lights some more to fix weird shadows...

This kind of fiddling often takes up the lion’s share of my time! Truly, the devil is in the details.

But at long last... we are (probably) ready to do the full render! Here’s the latest preview:

I’ve been rendering at about 1500px wide by 2000px high for most of my stuff - big enough to get some good detail, not so monstrous that it won’t fit on people’s monitors. When there’s a metallic prop like this, I will sometimes use a bloom filter to bring up those fun glowing highlights (I also use it for renders with light-emitting effects like magic sparkles, or renders with a setting/dawning sun to mimic the glare).

Not going to lie, I will usually stop and restart my ‘final’ render several times because I notice things that only show in the full-resolution render (not these little low-res Viewport snapshots). And even then, I may have to go into GIMP afterward to fix ‘fireflies’ (overly bright pixels that often appear on hair and shiny items), adjust contrast, and of course apply my watermark (I’ve experienced art theft in the past and it’s... not fun).

Next time: the finished render!


Péguy

Hi everybody!

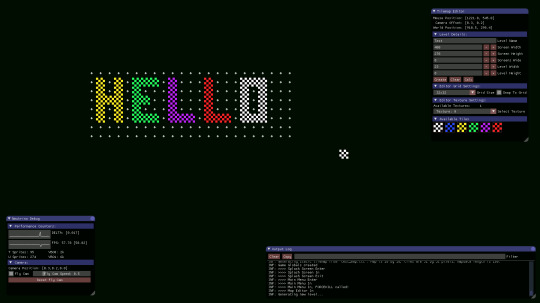

In this news feed I've told you a few times about a project I named Péguy. Today I'm dedicating a complete article to it, to present it in more detail and to show you the new features I added at the beginning of the winter.

It's not the priority project (right now that's TGCM Comics), but I needed a little break during the holidays, and coding vector graphics and 3D is a little bit addictive, like playing with Lego. x)

Let's go then!

Péguy, what is it?

It is a procedural generator of patterns, graphic effects and other scenery elements to speed up the realization of my drawings for my comics.

Basically, I enter a few parameters, click on a button, and my program generates a more or less regular pattern on its own.

The first lines of code were written in 2018, and since then this tool has been constantly enriched and has helped me work faster on my comics. :D

This project is coded with web languages and generates vector patterns in SVG format.

In the beginning it was just small scripts that had to be modified directly to change the parameters and run individually for each effect or pattern generated.

Not very user friendly, is it? :’D

This first version was used on episode 2 of Dragon Cat's Galaxia 1/2.

During 2019 I thought it would be more practical to gather all these scripts and integrate them into a graphical user interface. Since then, I have enriched it with new features and improved its ergonomics to save more and more time.

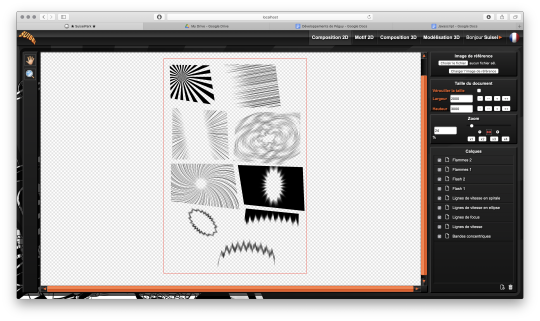

Here is a small sample of what can be produced with Péguy currently.

Graphic effects typical of manga and paving patterns in perspective or plated on a cylinder.

All these features were used on Tarkhan and Gonakin.

I plan to put this project online, but for it to be usable by people other than me, I still need to fix a few usability issues.

For the moment, to retrieve the rendering, you still need to open the browser debugger to find and copy the HTML node that contains the SVG.

In other words, if you don't know the HTML structure by heart, it's not practical. 8D

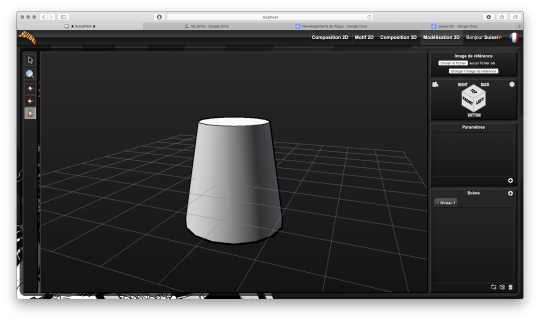

A 3D module!

The new feature for 2020 is that I have started to develop a 3D module. The idea, in the long run, is to be able to build my comics backgrounds, at least the architectural ones, a bit like playing with Lego.

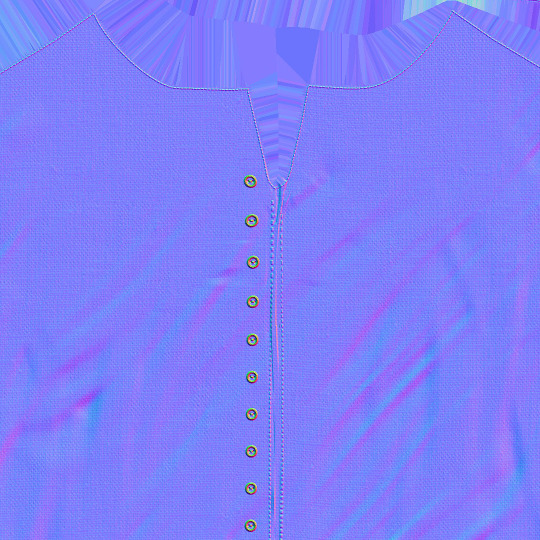

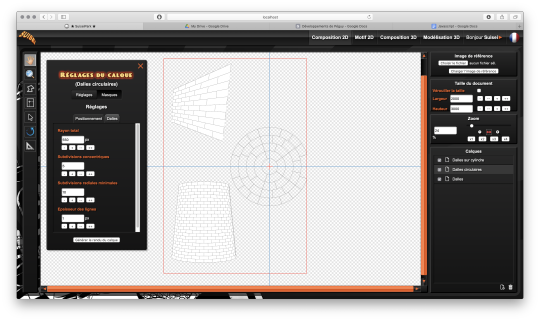

The interface is really still under development, a lot of things are missing, but basically it's going to look like this.

So there's no shortage of 3D modeling software, so why am I making one? What will make my project stand out from what already exists?

First, navigation around the 3D workspace. In short, the movement of the camera.

Well please excuse me, but in Blender, Maya, Sketchup and so on, to be able to frame according to your needs to get a rendering, it's just a pain in the ass!

So I developed a more practical camera navigation system depending on whether you're modeling an object or placing it in a map. The idea is to take inspiration from the map editors in some video games (like Age of Empires).

Secondly, I'm going to propose a small innovation. When you model an object in Blender or something else, it will always be frozen and if you use it several times in an environment, it will be strictly identical, which can be annoying for natural elements like trees for example. So I'm going to develop a kind of little "language" that will allow you to make an object customizable and incorporate random components. Thus, with a single definition for an object, we can obtain an infinite number of different instances, with random components for natural elements and variables such as the number of floors for a building.
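To give a feel for the idea, here is a small Python sketch of "one definition, many instances". It is not Péguy's language (which is not shown here); the function names `make_tree` and `make_building` and all the numeric ranges are hypothetical, chosen only to illustrate random components and variables.

```python
import random

def make_tree(seed, min_branches=3, max_branches=6):
    """Every seed gives a different tree, but the same seed always gives the same one."""
    rng = random.Random(seed)  # private generator so instances are reproducible
    trunk_height = rng.uniform(2.0, 4.0)
    branch_count = rng.randint(min_branches, max_branches)
    branches = [
        {"angle_deg": rng.uniform(0.0, 360.0), "length": rng.uniform(0.5, 1.5)}
        for _ in range(branch_count)
    ]
    return {"trunk_height": trunk_height, "branches": branches}

def make_building(floors, floor_height=3.0):
    """A variable (the number of floors) instead of a random component."""
    return {"floors": floors, "height": floors * floor_height}

forest = [make_tree(seed) for seed in range(5)]  # five different trees, one definition
tower = make_building(floors=12)
```

Seeding each instance keeps a scene stable between renders while still letting every tree differ from its neighbours.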

I had already developed a prototype of this system many years ago in Java. I'm going to retrieve it and adapt it to JavaScript.

And the last peculiarity will be in the proposed renderings. As this is about making comics (especially in black and white in my case), I'm developing a whole bunch of shaders to generate lines, screentones and other hatchings automatically with the possibility to use patterns generated in the existing vector module as textures! :D

What are shaders?

Well, you see the principle of post-production in cinema... (Editing, sound effects, various corrections, special effects... all the finishing work after shooting).

Well, shaders are about the same principle. They are programs executed just after the calculation of the 3D object as it should appear on the screen. They allow you to apply patches, deformations, effects, filters... As long as you are not on bad terms with mathematics, the only limit is your imagination! :D

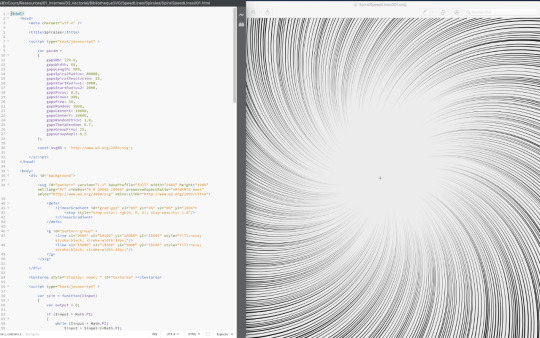

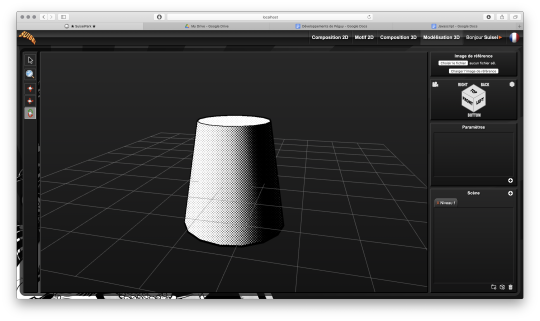

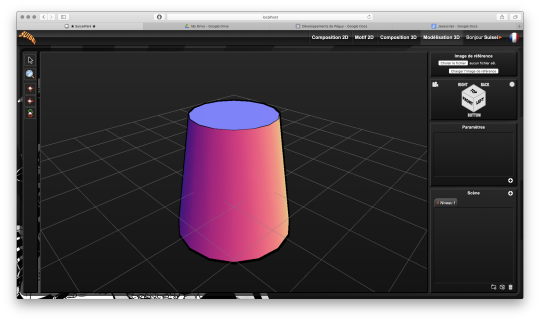

When you enter a normal vector in a color variable it gives funny results.
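One common way to do this (an assumption here, since the exact mapping used for these renders isn't stated) is to remap each component of the unit normal from the -1..1 range into the 0..1 colour range. A minimal Python sketch with a hypothetical `normal_to_rgb` helper:

```python
def normal_to_rgb(normal):
    """Map a unit normal (x, y, z each in -1..1) to 8-bit RGB."""
    return tuple(int((c * 0.5 + 0.5) * 255 + 0.5) for c in normal)

print(normal_to_rgb((0.0, 0.0, 1.0)))   # a surface facing the viewer
print(normal_to_rgb((1.0, 0.0, 0.0)))   # a surface facing right
```

X ends up in the red channel, Y in green and Z in blue, which is why surfaces facing the camera tend to come out bluish.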

Yes! It's really with math that you can display all these things. :D

Now when you hear a smart guy tell you that math is cold, that it's the opposite of art or incompatible with art, that it's dry toast... you'll know it's ignorance. :p

Math is a tool just like the brush, it's all about knowing how to use it. :D

In truth, science is a representation of reality in the same way as a painting. It is photorealistic in the extreme, but it is nevertheless a human construction used to describe nature.

It remains an approximation of reality that continually escapes us and we try to fill in the margins of error over the centuries... Just like classical painting did.

But by the way? Aren't there a bunch of great painters who were also scholars, mathematicians? Yes, there are! Look hard! The Renaissance is a good breeding ground. x)

In short! Physics is a painting and mathematics is its brush.

But in painting, we don't only do figurative, not only realism, we can give free rein to our inspiration to stylize our representation of the world or make it abstract.

Well like any good brush, mathematics allows the same fantasy! All it takes is a little imagination for that.

Take, for example, the good old Spirograph from our childhood. We all had one! Well, these pretty patterns drawn with a Bic pen are nothing else than... parametric equations that make the students of math sup/math spe suffer. 8D

Even the famous Celtic triskelion can be calculated from parametric equations.
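For the curious, the classic Spirograph curve is a hypotrochoid. Here is a small Python sketch of its parametric equations; the function name `spirograph_points` and the sample values are mine, and R, r are taken as whole numbers so the curve closes neatly.

```python
import math

def spirograph_points(R, r, d, samples=360):
    """Hypotrochoid: a pen at distance d from the centre of a wheel of radius r
    rolling inside a fixed ring of radius R (R and r are integers here)."""
    turns = r // math.gcd(R, r)  # trips around the ring needed to close the curve
    points = []
    for i in range(samples + 1):
        t = 2.0 * math.pi * turns * i / samples
        x = (R - r) * math.cos(t) + d * math.cos((R - r) / r * t)
        y = (R - r) * math.sin(t) - d * math.sin((R - r) / r * t)
        points.append((x, y))
    return points

curve = spirograph_points(R=5, r=3, d=5)
```

Joining the points with line segments (for instance as an SVG path) gives the familiar looping flower shape.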

Well, I digress, I digress, but let's get back to our shaders.

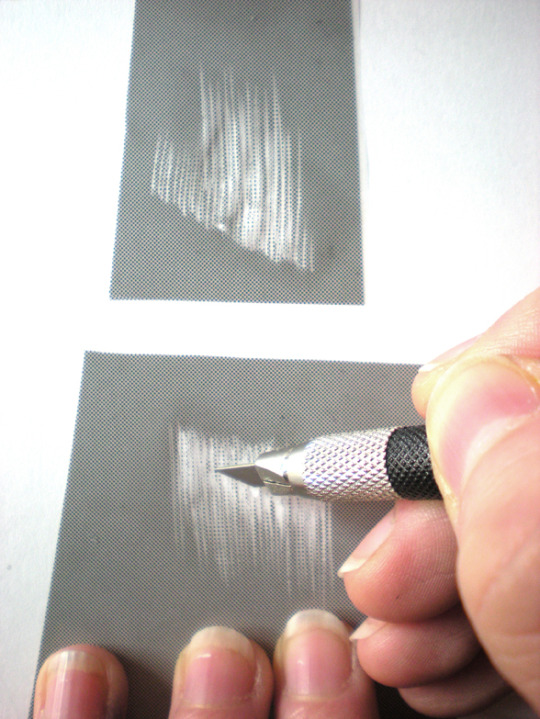

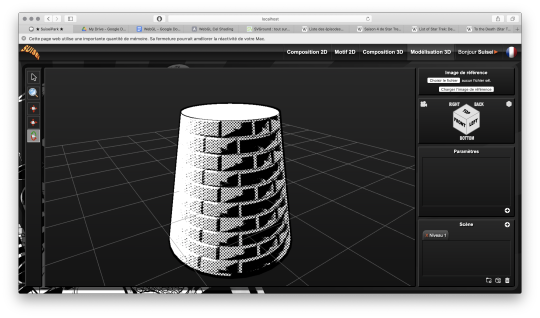

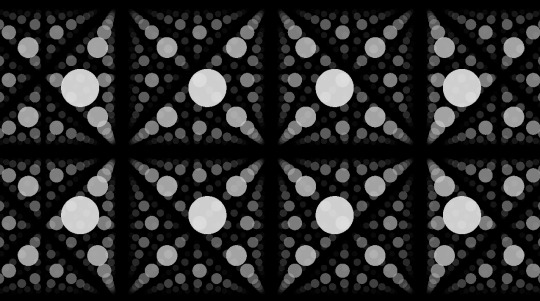

Since you can do whatever you want with them, I worked on typical manga effects. By combining the Dot Pattern Generator and the Hatch Generator, but displaying the hatching in white, I was able to simulate a scratch effect on screentones.

Traditionally, this effect is obtained by scraping the screentones with a cutter or a similar tool.

Péguy will therefore be able to calculate this effect on its own on a 3D scene. :D

I extended this effect with a pattern calculated in SVG. So it will be possible to use the patterns created in the vector module as textures for the 3D module!

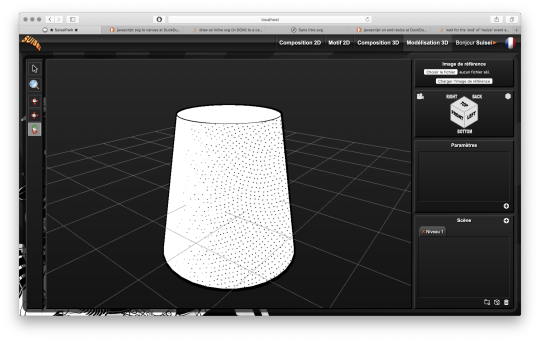

Here it is a pattern of dots distributed according to a Fibonacci spiral (I used a similar pattern in Tarkhan to make stone textures, very commonly used in manga).
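A dot pattern of this kind can be sketched in a few lines. The version below uses the usual golden-angle construction (an assumption, as Péguy's own code isn't shown); the name `fibonacci_spiral_dots` and the `spacing` parameter are hypothetical.

```python
import math

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))  # about 137.5 degrees

def fibonacci_spiral_dots(count, spacing=1.0):
    """Dot i sits at angle i * golden angle and radius spacing * sqrt(i),
    which spreads the dots evenly over a disc."""
    dots = []
    for i in range(count):
        radius = spacing * math.sqrt(i)
        angle = i * GOLDEN_ANGLE
        dots.append((radius * math.cos(angle), radius * math.sin(angle)))
    return dots

dots = fibonacci_spiral_dots(100)
```

Each (x, y) pair could then be written out as an SVG circle to build the pattern.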

Bump mapping

So this is where things get really interesting. We stay in the shaders but we're going to give an extra dimension to our rendering.

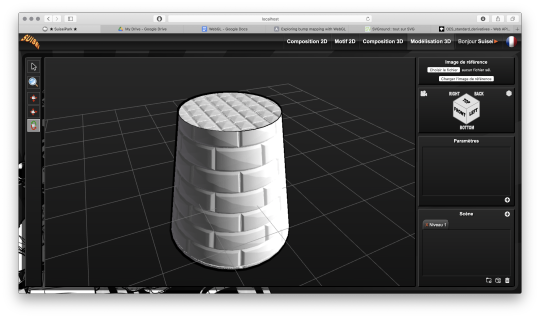

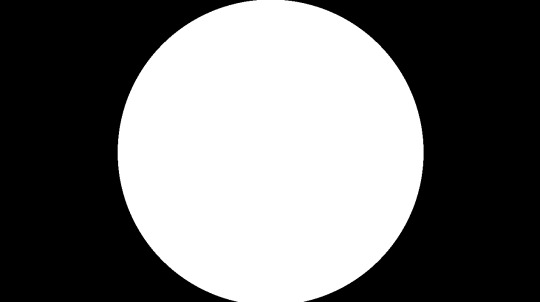

Basically, bump mapping consists of creating a bas-relief effect from a height map, and it gives this kind of result.

The defined object is still a simple cylinder (with 2 radii). It is the shaders that apply the pixel shift and recalculate the lighting using the height map, which looks like this.

This texture has also been calculated automatically in SVG. Thus we can dynamically set the number of bricks.
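The lighting half of this trick can be sketched on the CPU. The Python below is only an illustration, not Péguy's shader: it derives a normal from the slope of a height map with finite differences and relights it with a simple Lambert term (it leaves out the pixel shift). The names `height_to_normal`, `lambert` and `strength` are hypothetical.

```python
import math

def height_to_normal(height, x, y, strength=1.0):
    """Estimate the surface normal at (x, y) from neighbouring heights
    (central differences, clamped at the borders)."""
    rows, cols = len(height), len(height[0])
    left = height[y][max(x - 1, 0)]
    right = height[y][min(x + 1, cols - 1)]
    up = height[max(y - 1, 0)][x]
    down = height[min(y + 1, rows - 1)][x]
    dx = (right - left) * 0.5 * strength
    dy = (down - up) * 0.5 * strength
    length = math.sqrt(dx * dx + dy * dy + 1.0)
    return (-dx / length, -dy / length, 1.0 / length)

def lambert(normal, light_dir):
    """Diffuse brightness for a unit normal and a unit light direction."""
    return max(sum(n * l for n, l in zip(normal, light_dir)), 0.0)

flat = [[0.5, 0.5, 0.5] for _ in range(3)]
ramp = [[0.0, 1.0, 2.0] for _ in range(3)]
print(lambert(height_to_normal(flat, 1, 1), (0.0, 0.0, 1.0)))
print(lambert(height_to_normal(ramp, 1, 1), (0.0, 0.0, 1.0)))
```

A flat area keeps its full brightness, while a slope tilts the normal away from the light and darkens, which is what produces the bas-relief look on an otherwise smooth cylinder.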

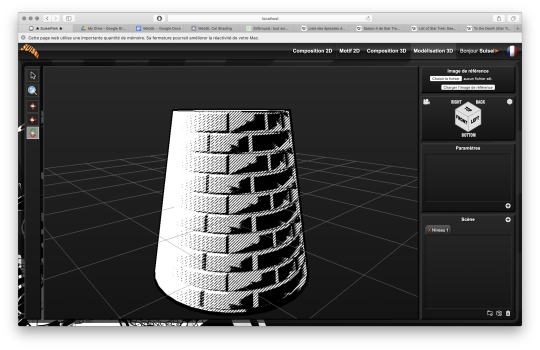

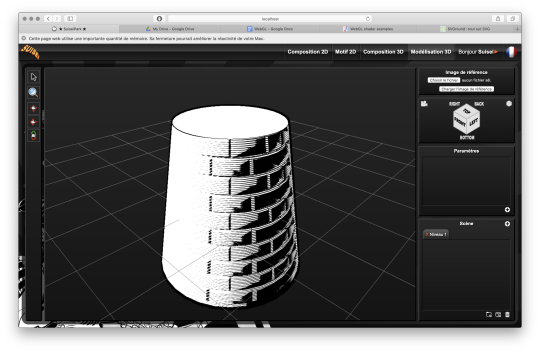

Well, this bas-relief story is very nice, but here we have a relatively realistic lighting, and we would like it to look like a drawing.

So by applying a threshold to have an area lit in white, a second threshold to have shadow areas in black, by applying the screentone pattern to the rest and by adding the hatching that simulates the scraped screentone, here is the result!

It's like a manga from the 80's! :D

I tested this rendering with other screentone patterns: Fibonacci spiral dots, parallel lines or lines that follow the shape of the object.
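The two-threshold idea can be sketched per pixel like this. It is a simplified Python illustration with hypothetical names and threshold values: it uses a plain grid of dots as the screentone and leaves out the white hatching.

```python
def manga_tone(brightness, x, y, white_at=0.8, black_at=0.2, dot_period=4):
    """Return 1 (white paper) or 0 (black ink) for a pixel at (x, y)."""
    if brightness >= white_at:
        return 1                      # lit area stays white
    if brightness <= black_at:
        return 0                      # deep shadow becomes solid black
    # everything in between gets the screentone: one ink dot per grid cell
    in_dot = (x % dot_period == 0) and (y % dot_period == 0)
    return 0 if in_dot else 1

row = [manga_tone(0.5, x, 0) for x in range(8)]
```

Swapping the `in_dot` test for another pattern (parallel lines, spiral dots) changes the screentone without touching the thresholds.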

Now we know what Péguy can do.

I think I can enrich this rendering a bit more with the shaders but the next time I work on this project the biggest part of the job will be to create what we call primitives, basic geometric objects.

After that I can start assembling them.

The concept of drawing while coding is so much fun that I'm starting to think about trying to make complete illustrations like this or making the backgrounds for some comic book projects only with Péguy just for the artistic process.

Finding tricks to generate organic objects, especially plants should be fun too.

That's all for today.

Next time we'll talk about drawing!

Have a nice week-end and see you soon! :D

Suisei

P.S. If you don't want to miss any news and you haven't already done so, you can subscribe to the newsletter here: https://www.suiseipark.com/User/SubscribeNewsletter/language/english/

Source : https://www.suiseipark.com/News/Entry/id/302/


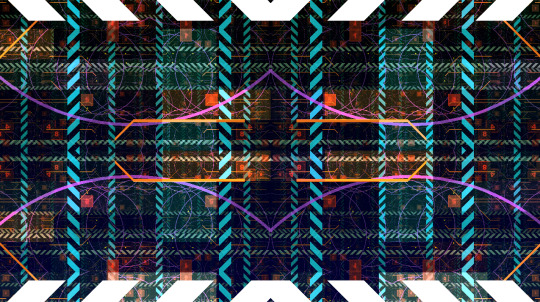

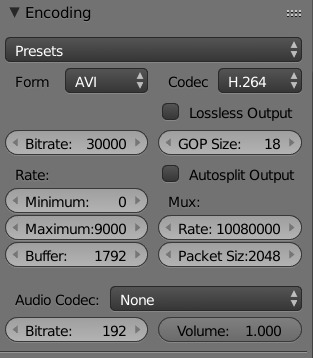

A breakdown of the Revision 2020 Threeway Battle shader

Those of you who have been following this year's edition of Revision probably remember the unexpected twist in Sunday's timeline, where I was pitted in a coding "battle" against two of the best shader-coders in the world to fend for myself. Admittedly the buzz it caused caught me by surprise, but not as much as the feedback on the final shader I produced, so I hope to shed some light on how the shader works, in a way that's hopefully understandable to beginners and at least entertaining to experts, as well as providing some glimpses into my thought process along the way.


Recorded video of the event

But before we dive into the math and code, I think it's important to get some context by recounting the story of how we got here.

A brief history of demoscene live-coding

Visual coding was massively opened up when graphics APIs began to introduce programmable fragment rendering, perhaps best known to most people as "pixel shaders"; this allowed programmers to run entire programmable functions on each pixel of a triangle, and none was more adamant to do that than a fellow named Iñigo Quilez (IQ), an understated genius who early on recognized the opportunity in covering the entire screen with a single polygon, and just doing the heavy lifting of creating geometry in the shader itself. His vision eventually spiraled into not only the modern 4k scene, but also the website ShaderToy, which almost every graphics programmer uses to test prototypes or just play around with algorithms. IQ, an old friend of mine since the mid-00s, eventually moved to the US, worked at Pixar and Oculus, and became something of a world-revered guru of computer graphics, but that (and life) has unfortunately caused him to shift away from the scene.

His vision of single-shader-single-quad-single-pass shader coding, in the meantime, created a very spectacular kind of live coding competition in the scene where two coders get only 25 minutes and the attention of an entire party hall, and they have to improvise their way out of the duel - this has been wildly successful at parties for the sheer showmanship and spectacle akin to rap battles, and none emerged from this little sport more remarkably than Flopine, a bubbly French girl who routinely shuffled up on stage wearing round spectacles and cat ears (actually they might be pony ears on second thought), and mopped the floor up with the competition. She and a handful of other live-coders regularly stream on Twitch as practice, and have honed their live-coding craft for a few years at this point, garnering a considerable following.


Just a sample of insanity these people can do.

My contribution to this little sub-scene was coming up with a fancy name for it ("Shader Showdown"), as well as providing a little tool I called Bonzomatic (named after Bonzaj / Plastic, a mutual friend of IQ and myself, and the first person to create a live coding environment for demoparties) that I still maintain, but even though I feel a degree of involvement through the architectural side, I myself haven't been interested in participating: I know I can do okay under time pressure, but I don't really enjoy it, and while there's a certain overlap in what they do and what I do, I was always more interested in things like visual detail and representative geometry aided by editing and direction rather than looping abstract, fractal-like things. It just wasn't my thing.

Mistakes were made

But if I'm not attracted to this type of competition, how did I end up in the crossfire anyway? What I can't say is that it wasn't, to a considerable degree, my fault: as Revision 2020 was entirely online, most of the scene took it upon themselves to sit in the demoscene Discord to get an experience closest to on-site socializing, given the somber circumstances of physical distancing. This also allowed a number of people who hadn't been around for a while to pop in to chat - like IQ, who, given his past, was mostly interested in the showdowns (during which Flopine crushed the competition) and the 4k compo.

As I haven't seen him around for a while, and as my mind is always looking for an angle, I somehow put two and two together, and asked him if he would consider taking part in a showdown at some point; he replied that he was up for it - this was around Saturday 10PM. I quickly pinged the rest of the showdown participants and organizers, as I spotted that Bullet was doing a DJ set the next day (which would've been in a relatively convenient timezone for IQ in California as well), and assumed that he didn't really have visuals for it - as there was already a "coding jam" over Ronny's set the day before, I figured there's a chance for squeezing an "extra round" of coding. Flopine was, of course, beyond excited by just the prospect of going against IQ, and by midnight we essentially got everything planned out (Bullet's consent notwithstanding, as he was completely out of the loop on this), and I was excited to watch...

...that is, until Havoc, the head honcho for the showdowns, off-handedly asked me about an at that point entirely hypothetical scenario: what would happen if IQ would, for some reason, challenge me instead of Flopine? Now, as said, I wasn't really into this, but being one to not let a good plan go to waste (especially if it was mine), I told Havoc I'd take one for the team and do it, although it probably wouldn't be very fun to watch. I then proceeded to quickly brief IQ in private and run him through the technicalities of the setup, the tool, the traditions and so on, and all is swell...

...that is, until IQ (this is at around 2AM) offhandedly mentions that "Havoc suggested we do a three-way with me, Flopine... and you." I quickly try to backpedal, but IQ seems to be into the idea, and worst of all, I've already essentially agreed to it, and to me, the only thing worse than being whipped in front of a few thousand people would be going back on your word. The only way out was through.

Weeks of coding can spare you hours of thinking

So now that I've got myself into this jar of pickles, I needed some ideas, and quick. (I didn't sleep much that night.) First off, I didn't want to do anything obviously 3D - both IQ and Flopine are masters of this, and I find it exhausting and frustrating, and it would've failed on every level possible. I'm awful at fractals, and while they do provide a decent amount of visual detail, they need a lot of practice and routine to get right. I also didn't want something very basic in 2D, like a byte-beat, because those have a very limited degree of variation available, and the end result always looks a bit crude.

Luckily, a few months ago I saw an article doing the rounds: a write-up by Sasha Martinsen on how to do "FUI"s, or Fictional User Interfaces; overly complicated and abstract user interfaces that are prominent in sci-fi, with Gmunk being the Michael Jordan of the genre.

Image courtesy of Sasha Martinsen.

Sasha's idea is simple: make a few basic decent looking elements, and then just pile them on top of each other until it looks nice, maybe choose some careful colors, move them around a bit, place them around tastefully in 3D, et voilà, you're hacking the Gibson. It's something I attempted before, if somewhat unsuccessfully, in "Reboot", but I came back to it a few more times in my little private motion graphics experiments with much better results, and my prediction was that it would be doable in the given timeframe - or at least I hoped that my hazy 3AM brain was on the right track.

A bit of math

How to make this whole thing work? First, let's think about our rendering: We have a single rectangle and a single-pass shader that runs on it: this means no meshes, no geometry, no custom textures, no postprocessing, no particle systems and no fonts, which isn't a good place to start from. However, looking at some of Sasha's 3D GIFs, some of them look like they're variations of the same render put on planes one after the other - and as long as we can do one, we can do multiple of that.

Rough sketch of what we want to do; the planes would obviously be infinite in size but this representation is good enough for now.

Can we render multiple planes via a single shader? Sure, but we want them to look nice, and that requires a bit of thinking: The most common technique to render a "2D" shader and get a "3D" look is raymarching, specifically with signed distance fields - starting on a ray, and continually testing distances until a hit is found. This is a good method for "solid-ish" looking objects and scenes, but the idea for us is to have many infinite planes that also have some sort of alpha channel, so we'd have a big problem with 1) inaccuracy, as we'd never find a hit, just something "reasonably close", and even that would take us a few dozen steps, which is costly even for a single plane and 2) the handling of an alpha map can be really annoying, since we'd only find out our alpha value after our initial march, after which if our alpha is transparent we'd need to march again.

But wait - it's just infinite planes and a ray, right? So why don't we just assume that our ray is always hitting the plane (which it is, since we're looking at it), and just calculate an intersection the analytical way?

Note: I would normally refer to this method as "raytracing", but after some consultation with people smarter than I am, we concluded that the terms are used somewhat ambiguously, so let's just stick to "analytical ray solving" or something equally pedantic.

We know the mathematical equation for a ray is position = origin + direction * t (where t is a scalar that represents the distance/progress from the ray origin), and we know that the formula for a plane is A * x + B * y + C * z + D = 0, where (A, B, C) is the normal vector of the plane, and D is the distance from the origin. First, since the intersection will be the point in space that satisfies both equations, we substitute the ray (the above o + d * t for each axis) into the plane:

A * (ox + dx * t) + B * (oy + dy * t) + C * (oz + dz * t) + D = 0

To find out where this point is in space, we need to solve this for t, but it's currently mighty complicated. Luckily, since we assume that our planes are parallel to the X-Y plane, we know our (A, B, C) normal is (0, 0, 1), so we can simplify it down to:

oz + dz * t + D = 0

Which we can easily solve for t:

t = (-D - oz) / dz

Since the plane itself sits at z = -D, we can call that Z position planeZ and write this as t = (planeZ - oz) / dz.

That's right: analytically finding a ray hit of a plane is literally a single subtraction and a division! Our frame rate (on this part) should be safe, and we're always guaranteed a hit as long as we're not looking exactly parallel to the planes (dz = 0); we should have everything to start setting up our code.
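As a quick sanity check of that formula outside the shader, here is a CPU-side Python sketch. The helper names `intersect_z_plane` and `hit_position` are made up for this example, and the plane is described directly by its Z position (`plane_z`).

```python
def intersect_z_plane(origin, direction, plane_z):
    """Distance t along the ray to the plane z = plane_z, or None when the
    ray runs parallel to the plane and can never hit it."""
    oz, dz = origin[2], direction[2]
    if dz == 0.0:
        return None
    return (plane_z - oz) / dz  # one subtraction, one division

def hit_position(origin, direction, t):
    """Plug t back into position = origin + direction * t."""
    return tuple(o + d * t for o, d in zip(origin, direction))

t = intersect_z_plane((0.0, 0.0, 0.0), (0.5, 0.25, -1.0), -5.0)
print(t, hit_position((0.0, 0.0, 0.0), (0.5, 0.25, -1.0), t))
```

A negative t would mean the plane lies behind the ray origin, which is worth checking before using the hit.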

Full disclosure: Given my (and in a way IQ's) lack of "live coding" experience, we agreed that there would be no voting for the round, and it'd be for glory only, but also that I'd be allowed to use a small cheat sheet of math like the equations for 2D rotation or e.g. the above final equation since I don't do this often enough to remember these things by heart, and I only had a few hours notice before the whole thing.

Setting up the rendering

Time to start coding then. First, let's calculate our texture coordinates in the 0..1 domain using the screen coordinates and the known backbuffer resolution (which is provided to us in Bonzomatic):

vec2 uv = vec2(gl_FragCoord.x / v2Resolution.x, gl_FragCoord.y / v2Resolution.y);

Then, let's create a ray from that:

vec3 rayDir = vec3( uv * 2 - 1, -1.0 );
rayDir.x *= v2Resolution.x / v2Resolution.y; // adjust for aspect ratio
vec3 rayOrigin = vec3( 0, 0, 0 );

This creates a 3D vector for our direction that is (-1,-1,-1) in the bottom left corner and (1,1,-1) in the top right (gl_FragCoord puts its origin at the bottom left by default), i.e. we're looking so that Z is decreasing into the screen. Then we adjust the X coordinate, since our screen isn't square but our coordinates currently are. There's no need to even bother with normalizing, it'll be fine. Our origin is currently just sitting in the center.
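The same ray setup can be mirrored on the CPU to check the numbers. This is a Python sketch with a hypothetical `pixel_ray` helper, where `width` and `height` stand in for the backbuffer resolution:

```python
def pixel_ray(px, py, width, height):
    """Unnormalized ray direction through pixel (px, py), looking down -Z."""
    u = px / width                           # 0..1 across the screen
    v = py / height                          # 0..1 up the screen
    dx = (u * 2.0 - 1.0) * (width / height)  # -1..1, stretched by the aspect ratio
    dy = v * 2.0 - 1.0                       # -1..1
    return (dx, dy, -1.0)

print(pixel_ray(960, 540, 1920, 1080))   # centre of a 1080p screen
print(pixel_ray(0, 0, 1920, 1080))       # first pixel
```

The centre pixel looks straight down the Z axis, and the X range grows to roughly ±1.78 on a 16:9 screen so that circles stay round.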

Then, let's define (loosely) our plane, which is parallel to the XY plane:

float planeDist = 1.0f; // distance between each plane
float planeZ = -5.0f; // Z position of the first plane

And solve our equation for t, as math'd out above:

float t = (planeZ - rayOrigin.z) / rayDir.z;

Then, calculate WHERE the hit is by inserting that t back into the original ray equation using our current direction and origin:

vec3 hitPos = rayOrigin + t * rayDir;

And now we have our intersection; since we already know the Z value, we can texture our plane by using the X and Y components to get a color value:

vec4 color = fui( hitPos.xy ); // XY plane
out_color = color;

Of course we're gonna need the actual FUI function, which will be our procedural animated FUI texture, but let's just put something dummy there now, like a simple circle:

vec4 fui ( vec2 uv ) { return length(uv - 0.5) < 0.5 ? vec4(1) : vec4(0); }

And here we go:

Very good, we have a single circle and if we animate the camera we can indeed tell that it is on a plane.

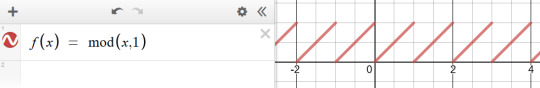

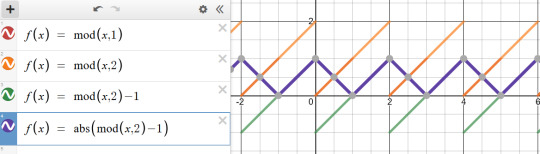

So first, let's tile it by using a modulo function; the modulo (or modulus) function simply wraps a number around another number (kinda like the remainder after a division, but for floating point numbers) and thus becomes extremely useful for tiling or repeating things:

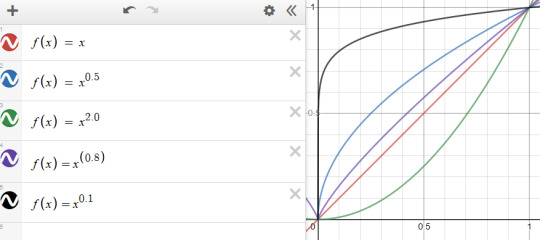

We'll be using the modulo function rather extensively in this little exercise, so strap in. (Illustration via the Desmos calculator.)

vec4 layer = fui( mod( hitPos.xy, 1.0 ) );

This will wrap the texture coordinates of -inf..inf between 0..1:
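For reference, GLSL defines `mod(x, y)` as `x - y * floor(x / y)`, which is why negative coordinates also wrap into 0..1 instead of mirroring. A small Python check of that definition (the name `glsl_mod` is mine):

```python
import math


def glsl_mod(x, y):
    """GLSL-style mod: x - y * floor(x / y)."""
    return x - y * math.floor(x / y)


for x in (-1.25, -0.25, 0.25, 1.75):
    print(x, "->", glsl_mod(x, 1.0))
# -1.25 -> 0.75
# -0.25 -> 0.75
# 0.25 -> 0.25
# 1.75 -> 0.75
```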

We also need multiple planes, but how do we combine them? We could just blend them additively, but with the amount of content we have, we'd just burn them in to white and it'd look like a mess (and not the good kind of mess). We could instead just use normal "crossfade" / "lerp" blending based on the alpha value; the only trick here is to make sure we're rendering them from back to front since the front renders will blend over the back renders:

int steps = 10;
float planeDist = 1.0f;
for (int i=steps; i>=0; i--)
{
  float planeZ = -1.0f * i * planeDist;
  float t = (planeZ - rayOrigin.z) / rayDir.z;
  if (t > 0.0f) // check if "t" is in front of us
  {
    vec3 hitPos = rayOrigin + t * rayDir;
    vec4 layer = fui( hitPos.xy, 2.0 );
    // blend layers based on alpha output
    colour = mix( colour, layer, layer.a );
  }
}

And here we go:

We decreased the circles a bit in size to see the effect more.

Not bad! First thing we can do is just fade off the back layers, as if they were in a fog:

layer *= (steps - i) / float(steps);
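Here is a rough Python model of the back-to-front blend with the fog fade applied, assuming each plane contributes a single RGBA value and `layers[0]` is the nearest plane. The names `mix` and `composite` are stand-ins for illustration only.

```python
def mix(a, b, w):
    """GLSL mix(): component-wise linear interpolation from a to b."""
    return tuple(x * (1.0 - w) + y * w for x, y in zip(a, b))


def composite(layers):
    """Blend RGBA layers back to front; layers[0] is the nearest plane."""
    steps = len(layers) - 1
    colour = (0.0, 0.0, 0.0, 0.0)
    for i in range(steps, -1, -1):                  # farthest plane first
        fade = (steps - i) / steps if steps else 1.0
        layer = tuple(c * fade for c in layers[i])  # scales alpha as well
        colour = mix(colour, layer, layer[3])
    return colour


# An opaque near layer fully hides whatever is behind it.
print(composite([(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 1.0, 1.0)]))
# (1.0, 0.0, 0.0, 1.0)
```

Note that the fade multiplies the alpha too, so distant layers become both darker and more transparent, and the very last plane contributes nothing.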

We have a problem though: we should probably increase the sci-fi effect by moving the camera continually forward, but since our planeZ is currently fixed relative to the 0.0 origin, the planes won't move with the camera. We could just add our camera Z to them, but then they would be fixed to the camera and wouldn't appear to move. What we instead want is to render them AS IF they were the closest 10 planes in front of the camera; if e.g. our planes' distance from each other is 5, then we round the camera Z down to the nearest multiple of 5 (e.g. if the Z is at 13, we round down to 10), and start drawing from there. Rounding up would be more accurate, but rounding down is easier, since we can just subtract the division remainder from Z like so:

float planeZ = (rayOrigin.z - mod(rayOrigin.z, planeDist)) - i * planeDist;

And now we have movement! Our basic rendering path is done.
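The snapping trick is easy to verify with numbers. This Python sketch reuses the example from above (plane distance 5, camera Z at 13) and also tries a negative Z, since the camera looks down -Z; the helper names are assumptions.

```python
import math


def glsl_mod(x, y):
    """GLSL-style mod: x - y * floor(x / y)."""
    return x - y * math.floor(x / y)


def plane_z(camera_z, i, plane_dist):
    """Z of the i-th plane in front of a camera looking down -Z."""
    snapped = camera_z - glsl_mod(camera_z, plane_dist)  # round down to a multiple
    return snapped - i * plane_dist


print([plane_z(13.0, i, 5.0) for i in range(3)])   # [10.0, 5.0, 0.0]
print([plane_z(-13.0, i, 5.0) for i in range(3)])  # [-15.0, -20.0, -25.0]
```

The planes stay at fixed world positions (multiples of the plane distance), and only the set being drawn changes as the camera passes each multiple.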

Our little fictional UI

So now that we have the basic pipeline in place, let's see which elements we can adapt from Sasha's design pieces.

The first one I decided to go with wasn't strictly speaking in the set, but it was something that I saw used as design elements over the last two decades, and that's a thick hatch pattern element; I think it's often used because it has a nice industrial feel with it. Doing it in 2D is easy: We just add X and Y together, which will result in a diagonal gradient, and then we just turn that into an alternating pattern using, again, the modulo. All we need to do is limit it between two strips, and we have a perfectly functional "Police Line Do Not Cross" simulation.

return mod( uv.x + uv.y, 1 ) < 0.5 ? vec4(1) : vec4(0);

So let's stop here for a few moments; this isn't bad, but we're gonna need a few things. First, the repetition doesn't give us the nice symmetric look that Sasha recommends, and secondly, we want them to look alive, to animate a bit.

Solving symmetry can be done just by modifying our repetition code a bit: instead of a straight up modulo with 1.0 that gives us a 0..1 range, let's use 2.0 to get a 0..2 range, then subtract 1.0 to get a -1..1 range, and then take the absolute value.

vec4 layer = fui( abs( mod( hitPos.xy, 2.0 ) - 1 ) );

This will give us a triangle-wave-like function, that goes from 0 to 1, then back to 0, then back to 1; in terms of texture coordinates, it will go back and forth between mirroring the texture in both directions, which, let's face it, looks Totally Sweet.
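A quick Python check of the mirrored repeat, with `mirror_tile` as a made-up name for the `abs(mod(x, 2.0) - 1.0)` expression:

```python
import math


def glsl_mod(x, y):
    """GLSL-style mod: x - y * floor(x / y)."""
    return x - y * math.floor(x / y)


def mirror_tile(x):
    """Triangle wave with period 2 that ping-pongs between 0 and 1."""
    return abs(glsl_mod(x, 2.0) - 1.0)


for x in (0.0, 0.5, 1.0, 1.5, 2.0, 2.5):
    print(x, "->", mirror_tile(x))
```

Coordinates that are equally far from a tile edge map to the same texture coordinate, which is exactly what produces the mirrored look.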

For animation, first I needed some sort of random value, but one that stayed deterministic based on a seed - in other words, I needed a function that took in a value, and returned a mangled version of it, but in a way that if I sent that value in twice, it would return the same mangled value twice. The most common way of doing it is taking the incoming "seed" value, and then driving it into some sort of function with a very large value that causes the function to alias, and then just returning the fraction portion of the number:

float rand(float x) { return fract(sin(x) * 430147.8193); }

Does it make any sense? No. Is it secure? No. Will it serve our purpose perfectly? Oh yes.
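The same hash can be poked at in Python to confirm the two properties we care about: it is deterministic, and it stays within 0..1. This is only a sketch; Python doubles will not produce the same values as 32-bit floats on a GPU.

```python
import math


def fract(x):
    """Fractional part, like GLSL fract()."""
    return x - math.floor(x)


def rand(x):
    """Deterministic pseudo-random value in 0..1 derived from a seed."""
    return fract(math.sin(x) * 430147.8193)


print(rand(3.0) == rand(3.0))            # same seed, same value
print([round(rand(float(s)), 3) for s in range(5)])
```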

So how do we animate our layers? The obvious choice is animating both the hatch "gradient" value to make it crawl, and the start and end of our hatch pattern which causes the hatched strip to move up and down: simply take a random - seeded by our time value - of somewhere sensible (like between 0.2 and 0.8 so that it doesn't touch the edges) and add another random to it, seasoned to taste - we can even take a binary random to pick between horizontal and vertical strips:

The problems here are, of course, that currently they're moving 1) way too fast and 2) in unison. The fast motion obviously happens because the time value changes every frame, so it seeds our random differently every frame - this is easy to solve by just rounding our time value down to the nearest integer: this will result in some lovely jittery "digital" motion. The unison is also easy to solve: simply take the number of the layer, and add it to our time, thus shifting the time value for each layer; I also chose to multiply the layer ID with a random-ish number so that the layers actually animate independently, and the stutter doesn't happen in unison either:

vec4 fui( vec2 uv, float t )
{
  t = int(t);
  float start = rand(t) * 0.8 + 0.1;
  float end = start + 0.1;
  [...]
}
vec4 layer = fui( abs(mod(hitPos.xy, 2.0)-1), fGlobalTime + i * 4.7 );

Lovely!

Note: In hindsight using the Z coordinate of the plane would've given a more consistent result, but the way it animates, it doesn't really matter.
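To see why the per-layer offset breaks up the unison, here is a small Python sketch of the quantized seed. The function name `layer_seed` is hypothetical; the 4.7 multiplier is the one from the shader call above.

```python
def layer_seed(global_time, layer_index, offset=4.7):
    """Whole-number seed for a layer: shifted per layer, then truncated."""
    return float(int(global_time + layer_index * offset))


for now in (10.2, 10.35):
    print(now, [layer_seed(now, i) for i in range(3)])
# 10.2 [10.0, 14.0, 19.0]
# 10.35 [10.0, 15.0, 19.0]
```

Between the two sample times only layer 1 ticks over to a new seed: the non-integer offset means each layer crosses its integer boundary at a different moment.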

So let's think of more elements: the one that seems to get the best mileage in Sasha's blog is what I can best describe as the "slant" or "hockey stick" - a simple line, with a 45-degree turn in it. What I love about it is that the symmetry allows it to create little tunnels, gates, corridors, which will work great for our motion.

Creating it is easy: We just take a thin horizontal rectangle, and attach another rectangle to the end, but shift the coordinate of the second rectangle vertically, so that it gives us the 45-degree angle:

float p1 = 0.2;
float p2 = 0.5;
float p3 = 0.7;
float y = 0.5;
float thicc = 0.0025;
if (p1 < uv.x && uv.x < p2 && y - thicc < uv.y && uv.y < y + thicc )
{
  return vec4(1);
}
if (p2 < uv.x && uv.x < p3 && y - thicc < uv.y - (uv.x - p2) && uv.y - (uv.x - p2) < y + thicc )
{
  return vec4(1);
}

Note: In the final code, I had a rect() call which I originally intended to use as baking glow around my rectangle using a little routine I prototyped out earlier that morning, but I was ultimately too stressed to properly pull that off. Also, it's amazing how juvenile your variable names turn when people are watching.
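The slant test boils down to two rectangle checks, the second one evaluated in a sheared coordinate. A Python version for trying out points by hand (the name `in_slant` is made up; the defaults are the constants from the shader snippet):

```python
def in_slant(u, v, p1=0.2, p2=0.5, p3=0.7, y=0.5, thick=0.0025):
    """True if (u, v) lies on the horizontal bar or on the 45-degree bar."""
    if p1 < u < p2 and y - thick < v < y + thick:
        return True                      # flat segment
    v_sheared = v - (u - p2)             # undo the 45-degree rise
    return p2 < u < p3 and y - thick < v_sheared < y + thick


print(in_slant(0.3, 0.5))   # True: on the flat part
print(in_slant(0.6, 0.6))   # True: 0.1 past the bend, 0.1 higher
print(in_slant(0.6, 0.5))   # False: the line has already risen here
```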

Looks nice, but since this is such a thin sparse element, let's just... add more of it!

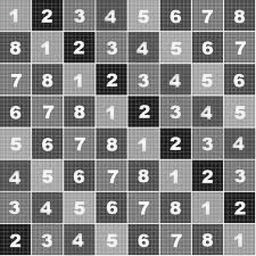

So what more can we add? Well, no sci-fi FUI is complete without random text and numbers, but we don't really have a font at hand. Or do we? For years, Bonzomatic has been "shipping" with this really gross checkerboard texture ostensibly for UV map testing:

What if we just desaturate and invert it?

We can then "slice" it up and render little sprites all over our texture: we already know how to draw a rectangle, so all we need is just 1) calculate which sprite we want to show 2) calculate the texture coordinate WITHIN that sprite and 3) sample the texture:

float sx = 0.3;
float sy = 0.3;
float size = 0.1;
if (sx < uv.x && uv.x < sx + size && sy < uv.y && uv.y < sy + size)
{
  float spx = 2.0 / 8.0; // we have 8 tiles in the texture
  float spy = 3.0 / 8.0;
  vec2 spriteUV = (uv - vec2(sx,sy)) / size;
  vec4 sam = texture( texChecker, vec2(spx,spy) + spriteUV / 8.0 );
  return vec4( dot( sam.rgb, vec3(0.33) ) ); // fui() returns a vec4
}

Note: In the final code, I was only using the red component instead of desaturation because I forgot the texture doesn't always have red content - I stared at it for waaaay too long during the round trying to figure out why some sprites weren't working.
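The sprite lookup is just a remap from the on-screen rectangle into one cell of an 8x8 atlas. A Python sketch of that coordinate math, where `sprite_uv` and its parameters are illustrative names and the tile (2, 3) matches the snippet above:

```python
def sprite_uv(u, v, sx=0.3, sy=0.3, size=0.1, tile=(2, 3), tiles=8):
    """Atlas coordinate for (u, v), or None when outside the sprite rectangle."""
    if not (sx < u < sx + size and sy < v < sy + size):
        return None
    local_u = (u - sx) / size            # 0..1 inside the sprite
    local_v = (v - sy) / size
    return ((tile[0] + local_u) / tiles, (tile[1] + local_v) / tiles)


print(sprite_uv(0.35, 0.35))  # roughly the centre of tile (2, 3)
print(sprite_uv(0.10, 0.10))  # None: outside the rectangle
```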

And again, let's just have more of it:

Getting there!

At this point the last thing I added was just circles and dots, because I was running out of ideas; but I also felt the amount of visual content was getting to where I wanted it to be, so it was time to make it look a bit prettier.

Post-production / compositing

So we have our layers, they move, they might even have colors, but I'm still not happy with the visual result, since they are too single-colored, there's not enough tone in the picture.

The first thing I try nowadays when I'm on a black background is to just add either a single color, or a gradient:

vec4 colour = renderPlanes(uv);
vec4 gradient = mix( vec4(0,0,0.2,1), vec4(0,0,0,1), uv.y);
vec4 finalRender = mix( gradient, vec4(colour.xyz,1), colour.a);

This added a good chunk of depth to the image, but I was still not happy with how much separation there was between the colors.

A very common method used in compositing in digital graphics is to just add bloom / glow; when used right, this helps us add more luminance content to areas that would otherwise be solid color, and it helps the colors blend a bit by providing some middle ground; unfortunately, if we only have a single pass, the only way to get blur (and by extension, bloom) is repeatedly rendering the picture, and that'd tank our frame rate quickly.

Instead, I went back to one of the classics: the Variform "pixelize" overlay:

This is almost the same as a bloom effect, except instead of blurring the image, all you do is turn it into a lower-resolution, nearest-point-sampled version of itself, and blend that over the original image - since this doesn't need more than one sample per pixel (as we can reproduce pixelation by just messing with the texture coordinates), we can get away with rendering the scene only twice:

vec4 colour = renderPlanes(uv);
colour += renderPlanes(uv - mod( uv, 0.1 ) ) * 0.4;

Much better tonal content!
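Subtracting `mod(uv, 0.1)` snaps every coordinate to the corner of its 0.1-wide cell, so the second render is constant per cell. A Python sketch with a stand-in `render` callable that returns a single brightness value (all names here are assumptions):

```python
import math


def glsl_mod(x, y):
    """GLSL-style mod: x - y * floor(x / y)."""
    return x - y * math.floor(x / y)


def pixelize(u, v, cell=0.1):
    """Snap a coordinate down to the corner of its cell."""
    return (u - glsl_mod(u, cell), v - glsl_mod(v, cell))


def with_overlay(render, u, v, cell=0.1, strength=0.4):
    """Full-resolution render plus a dimmed, pixelated copy on top."""
    su, sv = pixelize(u, v, cell)
    return render(u, v) + strength * render(su, sv)


print(pixelize(0.31, 0.39))   # both components land on (about) 0.3
print(with_overlay(lambda u, v: u, 0.35, 0.0))
```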

So what else can we do? Well, most of the colors I chose are in the blue/orange/red range, and we don't get a lot of green content; one of the things that I learned is that it can look quite pretty if one takes a two-tone picture, and uses color-grading to push the midrange of a third tone - that way, the dominant colors will stay in the highlights, and the third tone will cover the mid-tones. (Naturally you have to be careful with this.)

"Boosting" a color in the mids is easy: lucky for us, if we consider the 0..1 range, power functions suit our purpose perfectly, because they start at 0, end at 1, but we can change how they get there:

So let's just push the green channel a tiny bit:

finalRender.g = pow(finalRender.g, 0.7);
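A few sample values show what the 0.7 exponent does: the endpoints stay put while everything in between is lifted, most noticeably in the mid-tones. This is plain Python arithmetic, with `boost` as a hypothetical name:

```python
def boost(value, exponent=0.7):
    """Lift mid-tones of a 0..1 channel while keeping 0 and 1 fixed."""
    return value ** exponent


for g in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(g, "->", round(boost(g), 3))
```

An exponent above 1.0 would do the opposite and darken the mids.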

Now all we need is to roll our camera for maximum cyberspace effect and we're done!

Best laid plans of OBS

As you can see from the code I posted above, I wrote the final shader in GLSL; those who know me know that I'm a lot more comfortable with DirectX / HLSL, and may wonder why I switched, but of course there's another story here:

Given the remote nature of the event, all of the shader coding competition was performed online as well: since transmitting video from the coder's computer to a mixer, and then to another mixer, and then to a streaming provider, and then to the end user would've probably turned the image to mush, Alkama and Nusan came up with the idea of skipping a step and rigging up a version of Bonzo that ran on the coder's computer, but instead of streaming video, it sent the shader down to another instance of Bonzo, running on Diffty's computer, who then captured that instance and streamed it to the main Revision streaming hub. This, of course, meant that in a three-way, Diffty had to run three separate instances of Bonzo - but it worked fine with GLSL earlier, so why worry?

What we didn't necessarily realize at the time is that the DirectX 11 shader compiler takes no hostages, and as soon as the shader reached an un-unrollable level of complexity, it thoroughly locked down Diffty's machine, to the point that even the video of the DJ set he was playing started to drop out. I, on the other hand, didn't notice any of this, since my single local instance was doing fine, so I spent the first 15 minutes casually nuking Diffty's PC to shreds remotely, until I noticed Diffty and Havoc pleading on Discord to switch to GLSL because I was unknowingly setting things on fire.

This is fine.

I was reluctant to do so, simply because of the muscle memory, but I was also aware that I should keep the show going if I can because if I bow out without a result, that would be a colossal embarrassment to everyone involved, and I only can take one of those once every week, and I was already above my quota - so, I quickly closed the DX11 version of Bonzo, loaded the shader up in a text editor, replaced "floatX" with "vecX" (fun drinking game: take a shot every time I messed it up during the live event), commented the whole thing out, loaded it into a GLSL bonzo, and quickly fixed all the other syntax differences (of which there were luckily not many, stuff like "mix" instead of "lerp", constructors, etc.), and within a few minutes I was back up and running.

This, weirdly, helped my morale a bit, because it was the kind of clutch move that for some reason appealed to me, and made me quite happy - although at that point I locked in so hard that not only did I pay absolutely no attention to the stream to see what the other two were doing, but the drinks and snacks I prepared for the hour of battling went completely untouched.

In the end, when the hour clocked off, the shader itself turned out more or less how I wanted it, it worked really well with Bullet's techno-/psy-/hardtrance mix (not necessarily my jam, as everyone knows I'm more a broken beat guy, but pounding monotony can go well with coding focus), and I came away satisfied, although the perhaps saddest point of the adventure was yet to come: the lack of cathartic real-life ending that was taken from us due to the physical distance, when after all the excitement, all the cheers and hugs were merely lines of text on a screen - but you gotta deal with what you gotta deal with.

A small sampling of the Twitch reaction.

Conclusion

In the end, what was my takeaway from the experience?

First off, scoping is everything: Always aim to get an idea where you can maximize the outcome of the time invested with the highest amount of confidence of pulling it off. In this case, even though I was on short notice and in an environment I was unfamiliar with, I relied on something I knew, something I've done before, but no one else really has.

Secondly, broaden your influence: You never know when you can take something that seems initially unrelated, and bend it into something that you're doing with good results.

Thirdly, and perhaps most importantly, step out of your comfort zone every so often; you'll never know what you'll find.

(And don't agree to everything willy-nilly, you absolute moron.)


Star Citizen Monthly Report: February 2019

February saw Cloud Imperium devs around the world working hard to deliver the incredible content for the soon-to-be-released Alpha 3.5 patch. Progress was made everywhere, from locations like ArcCorp to the gameplay developments afforded by the New Flight Model. Read on for the full lowdown from February’s global workload.

AI – Character

February’s roundup starts with the AI Team, who made improvements to the existing character collision avoidance system. The changes began with adjustments to the smooth locomotion path, with the data now coming from the collision avoidance calculation to make sure the character has enough free space.

Time was spent generalizing the options a vendor can use so that designers no longer have to write them into the behaviors. Instead, the correct options are automatically selected based on the environment and (eventually) from the shop services.

They’re also restricting combat behavior to allow better scalability when adding new tactics and are investigating some of the bugs found in the Alpha 3.4 release.

AI – Ships

Throughout February, the AI Team improved various aspects of dogfighting gameplay, including evasive maneuvers. Now, when an AI pilot has an enemy on its tail, it will try to utilize different break-aways with increasing and varied angles. It will also try to keep momentum and chain together attack maneuvers. To achieve this, the team exposed new ‘SmoothTurning’ subsumption tasks to the behavior logic.

When detecting enemy fire, AI pilots will utilize evasive maneuvers to create a diversion.

They also implemented automatic incoming/outgoing ship traffic over planetary landing areas. They are currently generalizing ship behaviors to enable the designers to easily set up traffic on multiple cities, capital ships, and so on.

Animation

Last month, Animation provided the remaining animation sets for previous characters already found in the Persistent Universe (PU), including Hurston, Battaglia, and Pacheco. They also finished off a new batch of animations for the ship dealer. Work continues on animations for future yet-to-be-announced characters too, which includes getting approval for the initial poses and animations before going forward with the final clean-up.

American Sign Language (ASL) emotes are being added to the game and are currently being improved with the addition of facial animations.

Finally, Animation is currently syncing with Cinematics for a few interesting segments that backers will get to enjoy soon…

Art – Tech

Tech Art invested significant effort into optimizing rig assets so that they work better with the facial runtime rig logic and the ‘look at’ and ‘mocap’ re-direction components. Since eye contact is one of the fundamental means of human communication, any error or tiny deviation can cause the ‘uncanny valley’ effect and immediately break immersion.

“If the eyes of an actor converge just slightly too much, they appear cross-eyed. However, if they don’t converge enough, they appear to look through you, as if distracted. If the eyelids occlude the character’s iris just a little too much, which, depending on the distance, could amount to just 2-3 pixels vertically, they look sleepy or bored. Conversely, if they expose too much of the cornea, they appear more alert, surprised, or outright creepy.”

So, the alignment of the virtual skeleton’s eye joints with respect to the eyeball and eyelid geometry is of utmost importance. Likewise, the ‘look-at’ system needs to control all relevant rig parameters and corrective blendshapes (not just the rotation of the eyeballs themselves) to create truly-believable runtime re-directions of the mocap animations.

Alongside facial work, the team completed several weapons-related tasks, such as fixing offsets during reload animations and locomotion issues for the pistol set. They also completed R&D related to playing animations in sync with character usables within cinematic scenes and helped Design to unify the character tags in Mannequin.

Art – Environment

Predictably, the Environment Team is racing towards the completion of ArcCorp and Area 18 – they’re currently working with and implementing the custom advertising provided by the UI department. The planet itself is in the final art stage and now includes skyscrapers rising above the no-fly zone to provide the player with landing opportunities and interesting buildings to fly around.

Concurrently, the ‘Hi-Tech’ common elements are steadily progressing, with the transit, habitation, and security areas all moving to the art pass stage. Players will see these common elements (alongside garages and hangars) when they’re added to microTech’s landing zone, New Babbage.

The new transit connection between Lorville’s Teasa spaceport and the Central Business District (CBD) is almost ready for travellers. This route will allow players to move directly between the two locations and bypass L19, cutting travel time for high-end shoppers.

Work on organics is ongoing, as are improvements to planet tech, with the artists hard at work creating a library of exotic-looking flora to fill the biomes of New Babbage with. Players can see it for themselves towards the end of the year.

The community can also look forward to upcoming information on the early work the team has done on procedural caves.

Audio

Both the Audio Code Team and the sound designers finished their work on the new camera-shake and ship-vibration systems. Now, when an engine kicks in, the ship shakes and hums. This also extends to the player, with events like a ship powering up causing minor camera shake.

The sound designers also added new sound samples to a range of ships as part of the rollout of the New Flight Model. By adding ‘one-shot’ samples to each of the various thrusters, they brought out more complexity in the sounds heard during flight.

The Audio Team spent the majority of the month creating the sounds of Area 18. Due to the melting pot of ideas and themes present in the new area, the sound designers tested new methods to bring out the unique atmosphere. Additionally, they created the sound profiles and samples for the Gemini S71 assault rifle and Kastak Arms CODA pistol, both of which will appear in the PU and SQ42.

Currently, the Audio Code Team is working towards an updated tool that better allows the sound designers to implement created assets in-engine whilst simultaneously testing how they sound.

Backend Services

Backend Services continued to lay the foundation for the new diffusion network to help scalability for the backend structure of the game. Emphasis is on ensuring the Dedicated Game Servers (DGS) correctly connect to the new diffusion services, particularly the variable, leaderboard, and account services.

February marked the near-end of work on the new Item Cache Service (a massive portion of the backend has now turned micro-service), and work began on the end-point between DGS and this service, too. As work is completed on the new diffusion services, testing will ensure a smooth transition to the new network.

Support was also added for subsumption services to read directly into the DataCore P4k system for increased efficiency and unification.

With the approaching publish of Alpha 3.5, Backend Services began work on logistics, syncing closely with DevOps to ensure that new services are up and running correctly while maintaining legacy services where necessary.

Community

The team celebrated Valentine’s Day with community-made cards and limited-time ship offers, including Anvil’s F7C-M Heartseeker – a special version of the Super Hornet shooting straight for the heart. During the Be my Valentine greeting card contest, most Citizens got creative with their favorite image editing software, though some went old-school with scissors and crayons to create fantastic crafts to share their love across the galaxy.

Also this month, Argo Astronautics released their latest addition to the ‘verse, the SRV. The ‘Standard Recovery Vehicle’ is built for tugging ships, ground vehicles, and massive cargo containers through the stars using its integrated tractor tech. If you’re looking for more information about this rough and rugged ship, head to the Q&A that answers questions voted-on by the community. As a bonus, Shipmaster General John Crewe stopped by Reverse the Verse LIVE for some in-depth tug-talk.

In the February issue of Jump Point (our subscriber-exclusive magazine), Ben Lesnick took a detailed dive into the ARGO SRV’s design process and went on a worker’s tour of Hurston. The Narrative Team also introduced us to the Human holiday Stella Fortuna and shed light on the history of the revered Rust Society.

A major update to the Star Citizen roadmap gave a look at what’s coming to the Persistent Universe in 2019 and what can be expected in upcoming releases.

Released in January, but worthy of another mention, is the official Star Citizen Fankit, which was put together to help all of you share your enthusiasm and engagement. Star Citizen lives by the support it receives from the community, so take a look at this treasure trove of assets and get creating!

The team is also excited to announce that our physical merchandise will soon be receiving a well-deserved face-lift. Having received a lot of feedback over the years, it’s clear that Citizens are passionate about merch and to make the store experience the best it can be, your input was needed. Thanks to everyone who contributed feedback to our thread on Spectrum!

Content – Characters

The Character Team revisited the hair development pipeline in February. With the help of the Graphics Team, they developed new tools and shader tech to improve the realism of hair while maintaining quality and performance. More work went into mission-giver Pacheco, including textures and rigging, with her hairstyle being used to trial the new hair pipeline. Work continues on the assets required for DNA implementation and the female player character, while refinement of the Xi’an concept is making great progress.

Design

Throughout February, Design focused on implementing Area 18’s shops, NPCs, and usables. Last month marked the end of implementation, with March being used for polish to ensure a believable and immersive experience upon release. The team also gained a new member to help with mission implementation and improvement, who is currently setting their sights on the Emergency Communication Network (ECN) mission set.

Regarding the economy, the US Design Team worked with their UK counterparts on the objective criteria and value of objects in-game, laying down the track for acquiring item properties and their values. A system was built to help create an abstract representation, which is both robust and modular enough to allow easy adjustment in the future when the details are finalized.

DevOps

DevOps had a busy month working on the build system and pipeline that supports feature stream development. After several long nights, they rolled out the upgrades and have been happy with the results so far – internal systems are running smoothly without errors and each evolution improves efficiency and storage consumption.

They’re now attempting to further compress existing data which, when multiplied by hundreds of thousands of individual files, will make a real impact on the devs’ daily development efforts.

Engineering

February saw the Engine Team spend time on general Alpha 3.5 support, such as profiling, optimization, and bug fixing. They also improved the instance system used in compute skinning and refactored it on the CPU and shader for better maintainability, created a budget-based output-buffer system for skinning results (so they only have to skin once per frame), made more tangent reconstruction optimizations, and worked on wrap-deformation using the color stream.

Basic HDR display support was added to the editor, as was a new hue-preserving display mapping curve suitable for HDR display output. The team provided material layer support for planet tech v4 and continued to improve character hair, which included initial hair mask, support for edge masking, and pixel depth offset. Game physics is progressing with Projectile Manager 2.0, as well as optimizations to wrapped grids and state updates. Support was added for ocean Fast Fourier Transform (FFT) wave generation to physics buoyancy calculations, as well as exposed optimized terrain meshes.

A major system initialization clean-up was completed as part of an initiative to share core engine functionality with PU services, work began on the lockless job manager (a complete overhaul for faster response in high-load scenarios), and a new load time profiler was created. The team are currently wrapping up the ‘ImGUI’ integration and introducing a temporary allocator for more efficiency when containers are used on stack.

They made the switch to the Clang 6 compiler to build Linux targets (including compilation cleanup of the entire code base) and plan to switch to the latest stable release (Clang 8.x) in the near future.

Finally, they finished a ‘create compile time’ analysis tool (utilizing new Visual C++ front and backend profiler flags) to gather, condense, and visualize reasons for slow compile and link times. As a result, various improvements have already been submitted and further action-items defined.

Features – Gameplay

A large portion of Gameplay Feature’s month was dedicated to implementing the new DNA feature into the character customizer. In addition, the team was responsible for creating and setting up the user interface (UI) and accommodating the female playable character, both of which are scheduled for Alpha 3.5.

Another major focus was on video streaming for comms calls, which consisted of a refactor of the comms component to utilize the voice service call mechanism. Research was made into the VP9 streaming format and video streaming improvements were completed that will be rolled out in the upcoming release.

Lastly, support was given to the US-based Vehicle Features Team, with updates to the turret sensitivity HUD, gimbal assist UI, and the shopping service entity registration.

Features – Vehicles

Gimbal Assist and its related HUD improvements were finalized and polished, allowing for better balancing of this new weapon control scheme. Turrets were also improved, as the team added a HUD and keybinds for input sensitivity, implemented adjustable speeds for gimbal target movement based on proximity to center aim, and fixed bugs with snapping and erratic movement.

A lot of work went into scanning improvements, which included adjusting the area for navpoint scanning, enabling use of the navpoint hierarchy, and adding a Boolean to opt into the scanning data. This endeavor also covered adjustments to make scanning more involving by setting up AI turrets to generate signatures and be scannable and adding specific icons for scanned/unscanned targets. Ping and blob were implemented to display on the radar too, including focus angle and ping fire.

To round out the month, they continued to make item port tech optimizations, developed tech for utilizing geometry component tags in the paint system, and fixed a handful of crash bugs.

Graphics

Last month, the Graphics Team’s work on the PU was spread between several smaller tasks. There were many shader requests from the artists, such as adding new features to the hard surface shader and ISO support for decals in the forward rendering pipeline.

The team also continued with the CPU optimizations from last month. This included a 3x performance saving on the cost of building per-instance data buffers for the GPU and better support for the depth pre-pass to help occlude hidden parts of the frame with less CPU overhead.

To help the artists optimize their content, the team worked on an improved render-debugging tool that reports how many draw instructions (draw-call) a particular object requires along with a breakdown of why each instruction was needed. Once complete, this will allow the artists to dig into their material and mesh setups to save valuable CPU time.

Level Design

The Level Design Team soldiered on with ArcCorp’s Area 18, bringing the designer whitebox up to greybox. They began planning the modular space stations that will be built this year too, including looking at the libraries, rooms, and content that goes into them. The procedural tool is also now at a stage where they can slowly start ramping up the modular station production.

Live Design

The Live Team refactored existing missions to make them scalable, so that more content can be made available elsewhere in the planetary system (not just around Crusader). Significant progress was made on a new drug-stealing mission for Twitch Pacheco, as well as a BlackJack Security counter-mission that tasks less morally-corrupt players with destroying the stash.

Another focus was on implementing a variety of encounters with security forces and bounty hunters when the player holds a high crime stat.

As well as practical work, time was taken to define the next tier of many aspects of the law system, such as punishment, paying fines, bounty hunting, and so on.

Lighting

Last month, the Lighting Team focused on developing the look of Area 18. Lighting Area 18 is a mixture of clean-up work from the previous versions to match new standards and lighting the new exterior layout to a series of targets set by the Art Director. The team is working closely with the Environment Art and VFX teams to ensure that new advertising assets and visual effects ‘pop’ from the environment and provide interesting and varied visuals.

Narrative

Working closely with the Environment Art and Mission Design teams, February saw the Narrative Team further fleshing out lore relating to ArcCorp and its moons. From new mission giver contract text to the catchy slogans gracing Area 18’s numerous billboards, a lot of additional lore was created to bring these locations to life.

Additionally, expanded wildline sets for security pilots, bounty hunters, and combat assist pilots were scripted and recorded. The AI and Mission teams will use these sets to begin prototyping and testing out new gameplay for inclusion in future builds.

Also, the Narrative Team made progress on generating the specific text needed for on-screen mission objectives. Currently, this is placeholder text from the designers who worked on levels, but moving forward, the hope is to begin using the proper in-lore objectives.

Player Relations

The Player Relations Team was busy preparing for Alpha 3.5 (including getting ready to test the New Flight Model) as well as boxing off the work created over the holiday period.

“As always, we’d like to point all players to our growing Knowledge Base, which now has 120+ articles and saw almost 450,000 visitors this month! We will continue to grow this by adding more ‘How To’ articles, patch notes, and live service notifications there as well as on Spectrum.”

Props

February saw headway into Area 18’s props: the core street furniture is now in and the team has moved onto the dressing pass, adding in new assets to give life to the streets, alleyways, and landing zone.

As the month closed out, the team jumped into release mode to get a head start squashing bugs and generally tightening up the upcoming release.

QA

Things ramped up on the publishing side in February as the team prepared Alpha 3.5 for the Evocati and PTU. Testing continues on the New Flight Model and other systems as they come online, such as the new weapons, ships, and locations. QA leadership continues to train the newer testers and improve the overall testing process.

The AI Feature Team kept the Frankfurt-based QA testers busy with new features, such as the improved avoidance system and new break-away maneuvers. Testing mainly consists of making sure they’re working as intended, as well as noting visible improvements to what was already in place (in the case of the avoidance system). Combat AI received perception updates which were tested by QA to address issues where the FPS AI would not recognize the player being present in their vicinity.

On the backend, changes to the subsumption visualizer are being tested to ensure no new issues have been introduced in preparation for their full integration into the editor. Testing for ArcCorp and Area 18 is currently underway too.

The Universe Team discovered that mining entities were not appearing in the client due to discrepancies in how they were spawned in the server. This was tracked down and fixed, though testing will continue to make sure it’s working as intended.

Ships

The Vehicle Content Team wrapped up the MISC Reliant Mako, Tana, and Sen variants for Alpha 3.5. They’re now in testing with QA who are addressing bugs before the vehicles go live. The designers and tech artists have been busy with the Origin 300i, which will reach QA for testing in the near future.

Back in the UK, the team continued production on the 890 Jump, bringing more rooms into the final art stage from greybox (including the hangar area). The Carrack is heading towards a greybox-complete state and select areas are being polished for review.

Development continues on the Banu Defender which is utilizing a new style of production that caters to its organic art style. ZBrush is being used to sculpt the interior before transferring the high-density model to 3ds Max, where it is then rebuilt (low-poly) for the game engine. A large portion of the exterior greybox is complete and looking fantastic.

Last but by no means least, the interior updates to the Vanguard wrapped up with essentially the entire area from the cockpit seat backwards being completely redone. This is more than was initially anticipated, but the team feels that it’s worth it. Now that the interior rework has been finalized and the framework for the variants agreed upon, the Ship Team can start on the exterior changes to accommodate them and continue with the variant-specific items.

System Design

The System Design Team is working on improving and upgrading the no-fly zones used across ArcCorp. Since the existing system now needs to support an entire planet, it has proven quite a challenge.

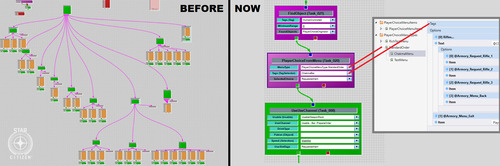

For social AI, the team’s working on unifying vendor behaviors and making sure they’re built in a modular fashion. For example, the team can easily graft new actions onto the base behavior of a shopkeeper to allow them to pick up objects, give them to the player, and interact with things on the counter without having to build new behaviors from scratch.
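The idea of grafting actions onto a base behavior can be sketched with simple composition. Everything here (the `Behavior` class, `graft`, the action names) is hypothetical and only illustrates the modular pattern; it is not the studio's Subsumption tooling.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

Action = Callable[[str], str]


@dataclass
class Behavior:
    """A named set of actions (hypothetical structure for illustration)."""
    name: str
    actions: Dict[str, Action] = field(default_factory=dict)

    def graft(self, action_name: str, action: Action) -> "Behavior":
        # Return a new behavior that keeps the base actions and adds one more,
        # leaving the base behavior untouched.
        merged = dict(self.actions)
        merged[action_name] = action
        return Behavior(self.name, merged)

    def perform(self, action_name: str, target: str) -> str:
        return self.actions[action_name](target)


base_vendor = Behavior("vendor", {"greet": lambda who: f"greets {who}"})
shopkeeper = base_vendor.graft("pick_up", lambda item: f"picks up {item}")
print(shopkeeper.perform("pick_up", "helmet"))
```

Because `graft` copies the action table, each vendor variant can extend the shared base without rebuilding it or affecting the others.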

As with social AI, the team focused on restructuring FPS AI behaviors to make them more modular, with the goal to make it easier to implement specific chunks of logic. For mining, they added new mineable rocks on ArcCorp’s moons. Wala in particular will have a new type of rock that fits better with the crystalline formations available on the moon.

Finally for System Design, AI traffic over Area 18 is currently being developed. The team’s starting small, with a few ships landing and taking off around the spaceport, but they’re also investigating ways to expand it while being mindful of performance.

Turbulent

RSI Platform: On February 14th, Turbulent supported the announcement of a new flyable variant of the Super Hornet, the F7C-M Heartseeker. They also made major updates to the CMS backend which required all hands on deck.

Services: This month’s game service work was focused around developing support for transporting video streams over the comms channels. This will allow the streaming of a user’s face/in-game texture to another player outside of the bind culling bubble, enabling in-game video calls over wider distances. This method also enables the transmission of in-game video streams to web clients.

Turbulent spent considerable time standardizing services to enable them to run within a new local development environment. This will allow the entire Star Citizen universe’s services to run locally on dev systems to develop and iterate with the entire stack.

The Turbulent Services Team also began work on an administration interface for game designers and game operators to display real-time information about the state of the universe. This application can display information about groups, lobbies, and voice channels along with details of online players, quantum routes, and probability volumes.

UI

As in January, UI supported the Environment Team with in-fiction advertising and branding for Area 18, including animation and hologram textures. They also made headway on the 3D area map using the concepts shown last month as visual targets. Finally, they began working out how to bring the rental functionality from the Arena Commander frontend to in-game consoles in Area 18.

VFX

The VFX Team updated the existing particle lighting system to a more modern system. The previous version was based on tessellation, which increased the rendering cost and had limitations on shadow resolution. The new one is a global change that will remove the need for tessellation and improve shadow receiving for crisper, smoother shadows. ArcCorp’s Lyria and Wala will be the first moons to use this new particle lighting system when it’s ready for deployment. It will help the particles integrate into the moons more realistically and address issues when the particles have long shadows going through them, such as during sunrise and sunset.

They also continued to iterate on thruster damage effects and began rolling them out to all ships.

Several new weapon effects were worked on, including a new ballistic hand cannon and ballistic assault rifle. They also carried out extensive visual exploration for the new Tachyon energy weapon class.

Finally, significant time was invested in improving the VFX editor’s UI layout and functionality. Although not as glamorous as planet dressing and effects, improving the quality-of-life for artists is important and helps them to work faster too.

Weapons

The Weapon Art Team completed the Gemini S71, Kastak Arms Coda, Banu Singe Tachyon cannons, Gallenson Tactical ballistic cannon reworks, and five variants of the Aegis Vanguard nose guns.

Conclusion

WE’LL SEE YOU NEXT MONTH…



5 Game Design Tips to Help You.

Game design is hard. It takes a lot of time and planning and is, essentially, the core of a game. I mean, within game design you have visuals, graphics, level design, soundtrack, the objectives. There’s really a lot to it.

1 - Making a Game Feel Alive

To make a game feel alive is kinda easy, but people seem to keep forgetting about it. There are a lot of ways to make a scene feel alive, and I’ll cover some of them.

Make Nature Feel Natural

That should be an obvious one, but if you didn’t think of that, here it goes. You could make trees bend in the wind, grass shake when the player steps on it, birds fly away when the player gets close to them, and much more. There are really no limits to creativity.

Air Particles

Look around. Look at a window, where there’s light coming in. There’s a chance that you’ll notice some dust or something like that floating around. Your game should have that too. It doesn’t need to be crazy, it’s just a subtle effect.
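Here's a minimal sketch of how drifting dust could be simulated, assuming a 2D screen and made-up names (`Mote`, `spawn_motes`, `update_motes`). It only handles movement; drawing is up to whatever engine you use.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Mote:
    x: float
    y: float
    vx: float  # horizontal drift, units per second
    vy: float  # vertical drift, units per second


def spawn_motes(count: int, width: float, height: float,
                max_speed: float = 4.0, seed: Optional[int] = None) -> List[Mote]:
    """Scatter slow-moving dust motes across the screen (assumed values)."""
    rng = random.Random(seed)
    return [
        Mote(rng.uniform(0, width), rng.uniform(0, height),
             rng.uniform(-max_speed, max_speed),
             rng.uniform(-max_speed, max_speed))
        for _ in range(count)
    ]


def update_motes(motes: List[Mote], dt: float, width: float, height: float) -> None:
    """Drift each mote and wrap it around the screen edges."""
    for m in motes:
        m.x = (m.x + m.vx * dt) % width
        m.y = (m.y + m.vy * dt) % height


dust = spawn_motes(20, width=320, height=180, seed=1)
update_motes(dust, dt=1 / 60, width=320, height=180)
```

Keeping `max_speed` low and the mote count small is what keeps the effect subtle.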

Interaction

Don’t you love to blow those old crates to pieces? And to cut that grass and get a bit of XP? Or to get a hidden item by interacting with a tree? Or even to walk by and see that grass waving at your touch? So, if those things are in other games, why aren’t they in yours?

An Example in a Video

Blackthornprod explains everything I’ve covered here really well. I recommend watching his video.

youtube

2 - Mechanics and Challenges

A level should be fun to play. It doesn’t matter if your game is based on cubes. Visuals don’t make great games, at least not all alone, even if they help to sell a game. Always remember that.

Now, what makes a game great? Well, a lot of things can help, that’s for sure. Two of them are Mechanics and Challenges. If a game has great mechanics but poorly designed challenges that make it too easy or too boring to play through, the game will fail to entertain and, most importantly, to sell.

But that doesn’t mean that both of them need to be perfect. With poor mechanics and great challenges, challenges that make great use of those mechanics, a player can and will be entertained.

Creativity and patience are the masters here. If you don’t feel creative enough to create a complex and fun mechanic, that’s no problem. You just need the creativity and patience to figure out how to make a simple mechanic fun to use, in interesting and unique ways.

An Example:

Let’s take a look at Super Mario Odyssey. It’s a great game, no doubt about that. But, let’s take a look at the core mechanic of the game; the mechanic that is the center, the root the game’s tree grew around: the hat throw. Yes, you beat the game throwing hats. Mario’s hat. Doesn’t that sound silly? But what if I told you that you can control enemies when you throw your hat at them and that you can use their abilities? Now it sounds a lot more interesting, right?

3 - Polishing

Polishing is a damn important thing. The more you polish, the better your game will get. The better the game, the more players will get entertained and hooked on it. Simple math, ain’t it? But, how does one polish a game? What is polishing after all?

Well, polishing is nothing more than tracking and solving problems. It means finding something that can be improved and improving it as far as you can.

Example:

As an example, I’ll use my game, Land Of Enchantments. I made a lot of trees for it, but that was when I first started the project. I didn’t have a clue what the visual style of my game would be, so I kept experimenting. Recently I realized how ugly they were and I made an upgrade to them:

I found something that could be improved and I did it. If I were to examine every bit and piece of Land Of Enchantments, I’m really sure that, with time, the game would look like an AAA title. And that’s something that anyone can do.

Now, of course, experience counts, but if you never try you’ll never improve your skills, right? How long do you think I spent polishing this post you’re reading?

4 - Designing a Great Level

Well, there’s no secret formula to it. All I can say for sure is that you need to experiment and get people to play your game and test it out.