#nvidia gpu

Text

Nvidia Partners with Indonesia to Launch $200M AI Center in Global AI Expansion Effort

Nvidia's gaze turns to Indonesia, marking a significant leap in the global AI expansion saga. In a world where AI is no longer just buzz but a revolution, Nvidia's initiative to plant a $200M AI powerhouse in the heart of Indonesia is a game-changer. But what's this all about, and why should we keep our eyes peeled for this monumental project? Let's dive in and get the lowdown.

The Collaboration that Spells Future: Nvidia, a titan in AI and semiconductor innovation, has joined forces with Indonesia's government and the telecom titan, Indosat Ooredoo Hutchison, laying the foundation for what promises to be an AI mecca. This isn't just any partnership; it's a strategic move aiming at a grand slam in the AI arena.

Surakarta: The Chosen Ground: Picture this: Surakarta city, already buzzing with talent and tech, now set to be the epicenter of AI excellence. With a hefty $200 million investment, this AI center isn't just about hardware. It's where technology meets human genius, promising a fusion of telecommunication infrastructure and a human resource haven, all set against the backdrop of Central Java's vibrant community.

Why Surakarta, You Ask? Surakarta, affectionately known as Solo, isn't just chosen by chance. It's a city ready to leap into the future, boasting an arsenal of human resources and cutting-edge 5G infrastructure. According to Mayor Gibran Rakabuming Raka, Solo's readiness is not just about tech; it's about a community poised to embrace and lead in the AI revolution.

A Memorandum of Understanding with a Vision: Back in January 2022, Nvidia and the Indonesian government signed a memorandum of understanding. It is more than an administrative step: it commits the partners to training over 20,000 university students and lecturers in AI, shaping Indonesia's next generation of AI specialists.

Riding the Wave of AI Frenzy: Since the unveiling of OpenAI's ChatGPT, the world hasn't just watched; it has jumped on the AI bandwagon, with the AI market growing from $134.89 billion to $241.80 billion in a year, an increase of roughly 79%. Nvidia's move isn't just timely; it's a strategic play in a global AI contest.

Beyond Borders: Nvidia's Southeast Asian Symphony: Indonesia's AI center is but a piece in Nvidia's grand Southeast Asian puzzle. From Singapore's collaboration for a new data center to initiatives with the Singapore Institute of Technology, Nvidia is weaving a network of AI excellence across the region, setting the stage for a tech renaissance.

A Global Race for AI Dominance: Nvidia's strides in Indonesia reflect a broader narrative. Giants like Google and Microsoft are not just spectators but active players, investing billions in AI ecosystems worldwide. This global sprint for AI supremacy is reshaping economies, technologies, and societies.

Tethering AI to Crypto: The AI craze isn't just confined to traditional tech realms. In the cryptosphere, firms like Tether are expanding their AI horizons, scouting for elite AI talent to pioneer new frontiers.

In Conclusion: Nvidia's foray into Indonesia with a $200M AI center is more than an investment; it's a testament to AI's transformative power and Indonesia's rising stature in the global tech arena. As we watch this partnership unfold, it's clear that the future of AI is not just being written; it's being coded, one innovation at a time.

Muhammad Hussnain

Facebook | Instagram | Twitter | Linkedin | Youtube

0 notes

Text

it is actually SUCH bullshit that i have to have an nvidia account and log into it to be able to update my fucking gpu driver. fuck you unnecessary bloatware fuck you obligatory accounts i miss when i could just download the updates from the nvidia website and that's it

0 notes

Text

I've been tinkering with my PC lately. I finally increased my CPU fans to keep my rad cooler, and it's perfect for any game now. Crisp n cool, woop woop!

#intel#cpu#nvidia gpu#gpu#fans#aio#cooler#rad#i7#icue#winnipeg#canadian#canada#relaxing#coffee#mine#chill#coffee time#therapy#ice#ddr4#ddr5#ddr5memory#msi

0 notes

Text

I, @Lusin333, have a Dell NVIDIA GeForce GTX 280 GPU (0X103G).

I will put this GPU in my crypto mining rig so I can mine more Bitcoin or some other cryptocurrency.

Thanks to Dell for giving this graphics card to me FOR FREE.

#Dell#Dell computer#Dell Computers#nvidia#nvidia geforce#nvidia gaming#nvidia gpu#nvidia graphics#nvidia graphics card#ultimate gamer#ultimate gangster#tech gang#tech gangster#Lusin333#nvidia rtx#nvidia geforce rtx#gpu#gpus#graphics card#graphics cards#gamer#gaming#tech#techstuff#black and white#linustechtips#tech meme#tech memes#gaming tech#gaming technology

0 notes

Text

No one wanted to be my valentine so you guys can have this I guess….😥

1 note

Text

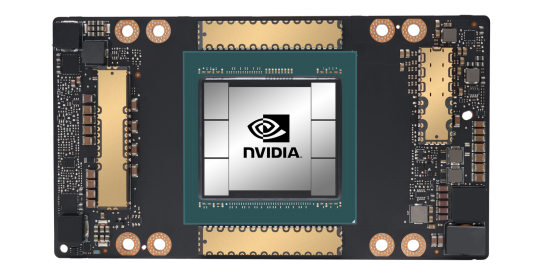

AI Arms Race: Meta's Bold Move with Nvidia GPUs

🚀 Meta's making moves in the AI arms race with Nvidia's H100 GPUs! Are you ready for the tech revolution? Dive in and explore the future with us at thunkdeep.com! #AIRevolution #MetaNvidia #TechFuture

Mark Zuckerberg, the big boss of Meta, just dropped some bombshell news that’s got the tech world wylin’. He’s out here saying Meta’s gearing up with about 350,000 Nvidia H100 GPUs by the end of 2024 (roughly 600,000 H100 equivalents once other GPUs are counted) as it trains its new AI model, Llama 3. Hold up, let that sink in. We’re talking about a tech giant throwing down some serious cash on GPUs.…

0 notes

Text

White Elegance, Raw Power: Explore The IT Gear's White Theme PC with Intel i7 14700K and Gigabyte RTX 4060 Aero

Dive into a realm of sophistication and performance with The IT Gear's Pre-built White Theme PC. Featuring the mighty Intel i7 14700K and Gigabyte RTX 4060 Aero, this curated collection blends aesthetics and power seamlessly. Unleash the potential of your setup with a touch of elegance – discover the White Theme PC at The IT Gear.

#Pre-built White Theme PC#White Theme PC#Pre-built PC#Intel i7 14700K#Gigabyte RTX 4060 Aero#RTX 4060#Intel#Nvidia#Nvidia GPU#The IT Gear#Youtube

0 notes

Text

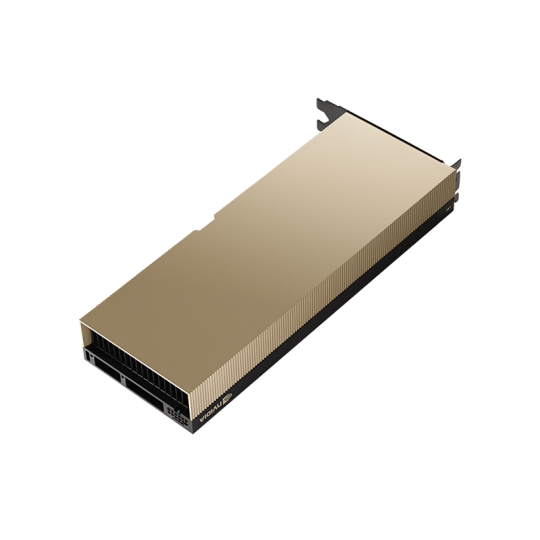

A Deep Dive into NVIDIA’s GPU: Transitioning from A100 to L40S and Preparing for GH200

Introducing NVIDIA L40S: A New Era in GPU Technology

When planning upgrades for your data center, it’s essential to understand the full range of available GPU technologies, particularly as they evolve to meet the demands of heavy-duty workloads. This article compares two notable NVIDIA GPUs: the L40S and the A100. Each is designed for specific requirements in AI, graphics, and high-performance computing. We will look at their features, ideal applications, and technical details to help determine which GPU best fits your organizational goals. Note that the NVIDIA A100 is being discontinued in January 2024, with the L40S emerging as a capable alternative. This change comes as NVIDIA prepares to launch the GH200 Grace Hopper Superchip later this year.

Diverse Applications of the NVIDIA L40S GPU

Generative AI Tasks

The L40S GPU excels in the realm of generative AI, offering the requisite computational strength essential for creating new services, deriving fresh insights, and crafting unique content.

LLM Training and Inference

In the ever-growing field of natural language processing, the L40S stands out by providing ample capabilities for both the training and implementation of extensive language models.

3D Graphics and Rendering

The GPU is proficient in handling detailed creative processes, including 3D design and rendering. This makes it an excellent option for animation studios, architectural visualizations, and product design applications.

Enhanced Video Processing

Equipped with advanced media acceleration functionalities, the L40S is particularly effective for video processing, addressing the complex requirements of content creation and streaming platforms.

Overview of NVIDIA A100

The NVIDIA A100 GPU stands as a targeted solution in the realms of AI, data analytics, and high-performance computing (HPC) within data centers. It is renowned for its ability to deliver effective and scalable performance, particularly in specialized tasks. The A100 is not designed as a universal solution but is instead optimized for areas requiring intensive deep learning, sophisticated data analysis, and robust computational strength. Its architecture and features are ideally suited for handling large-scale AI models and HPC tasks, providing a considerable enhancement in performance for these particular applications.

Performance Face-Off: L40S vs. A100

In performance terms, the L40S delivers up to 1,466 TFLOPS of FP8 Tensor Core performance (with sparsity), making it a prime choice for AI and graphics-intensive workloads. The A100, by contrast, offers 19.5 TFLOPS of FP64 Tensor Core performance and 156 TFLOPS of TF32 Tensor Core performance, positioning it as a powerful tool for AI training and HPC tasks. These figures are quoted at different numeric precisions, so they are not directly comparable.

Expertise in Integration by AMAX

AMAX specializes in incorporating these advanced NVIDIA GPUs into bespoke IT solutions. Our approach ensures that whether the focus is on AI, HPC, or graphics-heavy workloads, the performance is optimized. Our expertise also includes advanced cooling technologies, enhancing the longevity and efficiency of these GPUs.

Matching the Right GPU to Your Organizational Needs

Selecting between the NVIDIA L40S and A100 depends on specific workload requirements. The L40S is an excellent choice for entities venturing into generative AI and advanced graphics, while the A100, although being phased out in January 2024, remains a strong option for AI and HPC applications. As NVIDIA transitions to the L40S and prepares for the release of the GH200, understanding the nuances of each GPU will be crucial for leveraging their capabilities effectively.

In conclusion, NVIDIA’s transition from the A100 to the L40S represents a significant shift in GPU technology, catering to the evolving needs of modern data centers. With the upcoming GH200, the landscape of GPU technology is set to witness further advancements. Understanding these changes and aligning them with your specific requirements will be key to harnessing the full potential of NVIDIA’s GPU offerings.

M.Hussnain

Visit us on social media: Facebook | Twitter | LinkedIn | Instagram | YouTube | TikTok

#nvidia#nvidia rtx 4080#nvidia gpu#nvidia's gpu#L40S#L40#Nvidia L40#Nvidia A100#gh200#viperatech#vipera

0 notes

Text

Exeton: NVIDIA A16 Enterprise 64GB 250W — Revolutionizing Ray Tracing Power and Performance

The landscape of artificial intelligence (AI), high-performance computing (HPC), and graphics is swiftly evolving, necessitating more potent and efficient hardware solutions. NVIDIA® Accelerators for HPE lead this technological revolution, delivering unprecedented capabilities to address some of the most demanding scientific, industrial, and business challenges. Among these cutting-edge solutions is the NVIDIA A16 Enterprise 64GB 250W GPU, a powerhouse designed to redefine performance and efficiency standards across various computing environments.

The World’s Most Powerful Ray Tracing GPU

The NVIDIA A16 transcends being merely a GPU; it serves as a gateway to the future of computing. Engineered to effortlessly handle demanding AI training and inference, HPC, and graphics tasks, this GPU is an integral component of Hewlett Packard Enterprise servers tailored for the era of elastic computing. These servers provide unmatched acceleration at every scale, empowering users to visualize complex content, extract insights from massive datasets, and reshape the future of cities and storytelling.

Performance Features of the NVIDIA A16

The NVIDIA A16 64GB Gen4 PCIe Passive GPU presents an array of features that distinguish it in the realm of virtual desktop infrastructure (VDI) and beyond:

1- Designed For Accelerated VDI

Optimized for user density, this GPU, in conjunction with NVIDIA vPC software, enables graphics-rich virtual PCs accessible from anywhere, delivering a seamless user experience.

2- Affordable Virtual Workstations

With a substantial frame buffer per user, the NVIDIA A16 facilitates entry-level virtual workstations, ideal for running workloads like computer-aided design (CAD), powered by NVIDIA RTX vWS software.

3- Flexibility for Diverse User Types

The unique quad-GPU board design allows for mixed user profile sizes and types on a single board, catering to both virtual PCs and workstations.

4- Superior User Experience

Compared to CPU-only VDI, the NVIDIA A16 significantly boosts frame rates and reduces end-user latency, resulting in more responsive applications and a user experience akin to a native PC or workstation.

5- Double The User Density

Tailored for graphics-rich VDI, the NVIDIA A16 supports up to 64 concurrent users per board in a dual-slot form factor, effectively doubling user density.

6- High-Resolution Display Support

Supporting multiple high-resolution monitors, the GPU enables maximum productivity and photorealistic quality in a VDI environment.

7- Enhanced Encoder Throughput

With over double the encoder throughput compared to the previous generation M10, the NVIDIA A16 delivers high-performance transcoding and the multi-user performance required for multi-stream video and multimedia.

8- Highest Quality Video

Supporting the latest codecs, including H.265 encode/decode, VP9, and AV1 decode, the NVIDIA A16 ensures the highest-quality video experiences.
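The user-density figure in item 5 can be sanity-checked with simple arithmetic. The sketch below is illustrative only: it assumes the 64 GB of board memory is split evenly across the four GPUs mentioned in item 3, and that every user on the board gets the same fixed frame-buffer profile. The function name `users_per_board` and the profile sizes in the loop are hypothetical, not taken from NVIDIA documentation.

```python
def users_per_board(profile_gb, gpus_per_board=4, board_memory_gb=64):
    """Estimate concurrent users for one board (illustrative assumptions).

    Assumes memory is divided evenly across the GPUs on the board and that
    each user is assigned a fixed frame buffer of `profile_gb` gigabytes.
    """
    per_gpu_gb = board_memory_gb // gpus_per_board
    if profile_gb <= 0 or profile_gb > per_gpu_gb:
        raise ValueError("profile must be between 1 GB and the per-GPU memory")
    # Users are packed per GPU, so a profile cannot span two GPUs.
    return gpus_per_board * (per_gpu_gb // profile_gb)


# Hypothetical profile sizes, chosen only to show the trade-off.
for profile_gb in (1, 2, 4, 8, 16):
    print(f"{profile_gb:>2} GB per user -> {users_per_board(profile_gb)} users per board")
```

Under these assumptions, the 64-user figure corresponds to a 1 GB frame buffer per user; larger profiles for virtual workstations reduce the number of users a single board can host.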

NVIDIA Ampere Architecture

The GPU features NVIDIA Ampere architecture-based CUDA cores, second-generation RT Cores, and third-generation Tensor Cores. This architecture provides the flexibility to host virtual workstations powered by NVIDIA RTX vWS software or leverage unused VDI resources for compute workloads with NVIDIA AI Enterprise software.

The NVIDIA A16 Enterprise 64GB 250W GPU underscores NVIDIA’s commitment to advancing technology’s frontiers. Its capabilities make it an ideal solution for organizations aiming to leverage the power of AI, HPC, and advanced graphics to drive innovation and overcome complex challenges. With this GPU, NVIDIA continues to redefine the possibilities in computing, paving the way for a future where virtual experiences are indistinguishable from reality.

Muhammad Hussnain

0 notes

Text

Uhhhhhhh laptop?

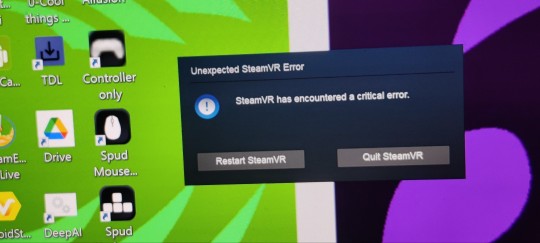

I don't even use share, I use only the fps feature. I did get VR working again at least.

#vr#i have vr#gaming#laptop#nvidia#nvidia failure#steam vr#steam#nvidia gpu#mixed reality#samsung headset#Odyssey plus#steamVr#RTX 3080

0 notes

Text

10 Best Places to sell GPU for cash for the Most Returns

I would like to share the following article and hope you will enjoy it.

"As GPUs tend to upgrade on a regular basis, it is necessary to know what to do with your old and unused GPUs. If you plan to replace the old ones with new GPUs, you can sell the GPU for cash. By doing so, you can get rid of old stuff. At the same time, it can give you a good number of returns. You can use the money towards upgrading your system. There are many places where you can sell GPUs for cash..." For more details, please read 10 Best Places to sell GPU for cash for the Most Returns.

This article is from BuySellRam.com, an ITAD company with a BBB A+ rating, where you can buy and sell new or used computer components and other electronics online. They pay top dollar for new or used surplus including, but not limited to, computer memory, CPUs, GPUs, SSDs, networking equipment, test equipment, lab equipment, video/photo equipment, and gaming equipment.

For your convenience, here are some of their main service pages:

How to sell RAM memory.

How to sell used GPU graphics cards.

How to sell SSD safely online.

#sell gpu#sell cpu#sell ssd#sell memory#information technology#computer network#test equipment#lab equipment#used server#GPU#nvidia gpu

1 note

Text

I, @Lusin333, have a Gigabyte NVIDIA GeForce GTX 460 (GVN460OC1GI).

I will put this GPU in my crypto mining rig so I can mine more Bitcoin or some other crypto.

Thanks to Gigabyte for giving this GPU to me FOR FREE.

#gigabyte#gigabyte gaming#gigabyte graphics card#gigabyte gpu#nvidia#nvidia geforce#nvidiagaming#nvidia gpu#nvidia graphics#nvidiagraphicscard#gtx 770#ultimate gamer#ultimate gangster#tech gang#tech gangster#Lusin333#nvidiartx#nvidia geforce rtx#gpu#gpus#graphics card#graphics cards#gamer#gaming#tech#techstuff#black and white#linustechtips#tech meme#tech memes

0 notes

Text

Had some cash to burn so I decided to upgrade my GPU from a 2080 Super to an RTX 4090 and my CPU from an i7-9700K to an i9-13900K. Playing Helldivers 2 at max settings with 100+ FPS is incredible.

21 notes
