#image generation


Learn the fruits with the help of DALL-E 3


1K notes


Envision Our Energizing, Vibrant Renewable Future Now

#earth day#earth month#renewable energy#renewable power#ai art#ai#image generation#design#climate art#renewable electricity#power sources#vibrant skies#solar power#solar#wind#wind energy#green future#solarpunk#renewables#midjourney#imagination#image building

248 notes


“Oh, I’d hate image generation/‘AI art’ (fucking hate that term, it’s so goddamn nebulous; ‘the cloud’ levels of useless) even if there weren’t theft+labor issues involved, because there’s no human touch, no heart, no soul.” Bruh.

1) There can be, and there has been, in some image generation artists’ works. It’s not the same as drawing, or photography, or other mediums. That doesn’t make it not art, just different. You don’t care to look because you saw assholes being annoying and decided to stick your head in the sand about it. Plus it’s currently trendy in investor spaces among people who suck ass, and therefore a lot of the people with money actively pushing it are annoying hacks. There have always been annoying hacks in art. These people have always existed, and you’ve always existed, and I’m sick of both of you. Come on now.

2) The important matters right now ARE the theft and labor issues; focus on the real, tangible problems and not stupid-ass “real art” debates. Anything can be art. Duchamp’s Fountain has been kicking your ass for over 100 fucking years. You would see anti-art and Dadaism and claw out your own throat. I’m going to eat bricks.

#ai discourse#image generation#fishy rambles#<- deciding to make posts on tumblr rambling bc I hate Twitter and it’s easier to block tags here.

29 notes


So OpenAI finally did it. They created a model that can generate (relatively) realistic video... this is great, the whole film industry can be destroyed even faster...

#ai#ai discourse#anti ai#chatGPT#openai#openAI#anti ai art#ai art#image generation#ai gen#image generator

12 notes


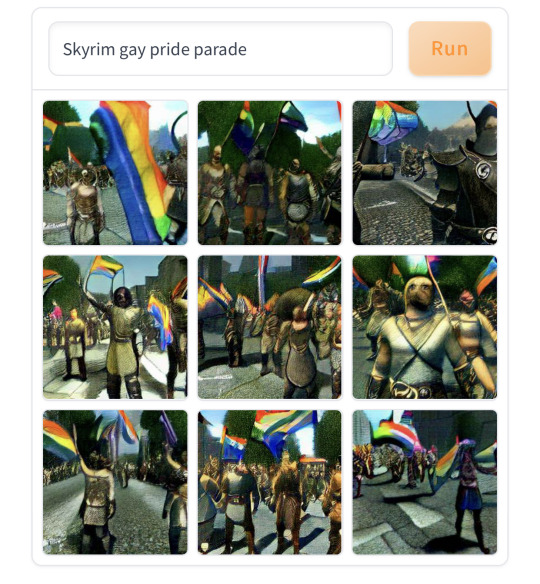

Solitude turn up

#skyrim#the elder scrolls#pride#gay pride#pride parade#Skyrim meme#tes#tes memes#tesblr#image generation

336 notes


Are you wondering if your art has been stolen and illegally used in A.I. image generation models without properly purchasing the legal right to license and use your images in their datasets from you?

Well, there's a website for that.

Here's an article from Ars Technica about it:

"In response to controversy over image synthesis models learning from artists' images scraped from the Internet without consent—and potentially replicating their artistic styles—a group of artists has released a new website that allows anyone to see if their artwork has been used to train AI.

The website "Have I Been Trained?" taps into the LAION-5B training data used to train Stable Diffusion and Google's Imagen AI models, among others. To build LAION-5B, bots directed by a group of AI researchers crawled billions of websites, including large repositories of artwork at DeviantArt, ArtStation, Pinterest, Getty Images, and more. Along the way, LAION collected millions of images from artists and copyright holders without consultation, which irritated some artists...." (cont. in article)

#A.I. Art#midjourney#ai art generation#ai image#aiartcommunity#ai generated#stable diffusion#image generation#artists on tumblr#art#copyright#ai art fail#digital art#traditional art#drawing#artwork#haveibeentrained#ars technica#cool websites#dalleart#illustration#digital painting

60 notes


In the field of radiology, generative models, notably DALL-E 2, have tremendous promise for image production and modification. Scientists have shown that DALL-E 2 has learned relevant x-ray picture representations and is able to produce new images from text descriptions, expand images beyond their initial borders, and delete elements. Despite limitations, the authors feel that the use of generative models in radiology research is conceivable so long as the models are further refined and adapted to the specific areas of interest.


#bioinformatics#radiology#medicalimaging#text to image#image generation#ai#deep learning#medical informatics

30 notes


Explore Adobe Express with Firefly AI Features

Read the article here.


6 notes


I will judge your AI by how well it handles the Upgraded Roger Daltrey Test — generating a swimming pool filled with baked beans.

In order: Firefly, DALL•E 3, Bard, DeepAI

2 notes


Creating Images from Text with Artificial Intelligence

#ai#tech#technews#technology#teknologi#teknoloji#yapay zeka#teknofest#yapayzekâ#blog#image#ai image#image generator#image generation#yapay zeka araçları#resim

2 notes


Text-to-image generators work by being trained on large datasets that include millions or billions of images. Some generators, like those offered by Adobe or Getty, are only trained with images the generator’s maker owns or has a licence to use.

But other generators have been trained by indiscriminately scraping online images, many of which may be under copyright. This has led to a slew of copyright infringement cases where artists have accused big tech companies of stealing and profiting from their work.

This is also where the idea of “poison” comes in. Researchers who want to empower individual artists have recently created a tool named “Nightshade” to fight back against unauthorised image scraping.

The tool works by subtly altering an image’s pixels in a way that wreaks havoc on computer vision but leaves the image looking unaltered to human eyes.

If an organisation then scrapes one of these images to train a future AI model, its data pool becomes “poisoned”. This can result in the algorithm mistakenly learning to classify an image as something a human would visually know to be untrue. As a result, the generator can start returning unpredictable and unintended results.
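To make the “poisoned data pool” idea a bit more concrete, here is a deliberately tiny toy in Python. It is not how Nightshade actually works. Each image is shrunk to a hypothetical two-number feature vector (standing in for what a computer vision model extracts from the pixels), the “model” simply averages the features of every image sharing a caption, and the poisoned images are assumed to already have their features shifted toward “cat”, which is roughly what the pixel changes described above aim for. All names and numbers are made up for illustration.

```python
# Toy illustration of training-data poisoning. NOT Nightshade's algorithm.
# Each "image" is reduced to a hypothetical 2-number feature vector.

def centroid(points):
    """Average feature vector of a list of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def learn_concepts(dataset):
    """'Train' by averaging the features of every image sharing a caption."""
    by_label = {}
    for label, features in dataset:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(feats) for label, feats in by_label.items()}

def distance(a, b):
    """Straight-line distance between two feature vectors."""
    return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5

def prompt_result(dataset, real_dog=(10.0, 10.0)):
    """What the toy generator returns for the prompt "dog", judged by whether
    it sits closer to the learned cat concept or to a genuine dog."""
    concepts = learn_concepts(dataset)
    dog = concepts["dog"]
    if distance(dog, concepts["cat"]) < distance(dog, real_dog):
        return "cat"
    return "dog"

# Clean scraped data: cats cluster near (0, 0), dogs near (10, 10).
clean = [("cat", (0.0, 0.0))] * 10 + [("dog", (10.0, 10.0))] * 10

# Poisoned images: captioned "dog" and (by assumption) still looking like dogs
# to a person, but altered so their features land near the cat cluster.
poisoned = [("dog", (1.0, 1.0))] * 30

print("clean:", learn_concepts(clean)["dog"], "->", prompt_result(clean))
# clean: (10.0, 10.0) -> dog
print("poisoned:", learn_concepts(clean + poisoned)["dog"], "->", prompt_result(clean + poisoned))
# poisoned: (3.25, 3.25) -> cat
```

Once enough poisoned images are mixed in, the learned “dog” concept drifts to a point closer to the cat cluster than to real dogs, so a generator built on it would start handing back something cat-like when asked for a dog.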

6 notes


AI and fanworks - a dissection

In an attempt to address some of the fears and uncertainty going around in the fan communities at the moment, I wanted to have a look at how AI interacts with art and fandom, and more specifically how it relates to theft in these fields. When AI comes up there has been a lot of knee-jerk defensiveness and hurt from artists and writers in the fan community, and honestly, that’s fair enough. This is a field that has advanced rapidly and entered the scene with all the force of an explosion. I don’t think anyone was expecting it to be hyped up this much or to be splashed everywhere all at once so suddenly, becoming the topic of debate in nearly every discipline and field, be it law and academia, digital art, or even fanfic writing.

It’s everywhere, it’s all over the place, and to be honest, it’s just a bit of a mess.

Currently we’ve had a strong outpouring of reaction, lashing out at everything to do with AI and its involvement in creative works. The thing is, though, this whole issue of AI in art - especially its use on and in relation to fanworks - is complicated and layered. It isn’t composed so much of one single offense being committed, but rather of multiple stacked issues all tangled together, each of which is simultaneously eliciting reactions that all end up jumbled together.

What I want to do a bit here is unpick the different layers of how AI is causing offense and why people are reacting the way they are, and in doing so, hopefully give us the tools to advance the conversation a little and explain more clearly why we don’t want AI used in certain ways.

There are, in essence, three layers of potential ‘theft’ in this AI cake. These are:

The training data that goes into the AI.

The issue regarding ‘styles’ and the question of theft in regards to that.

Use of AI for the completion of another person’s story.

This last one is the one doing the rounds right now in the fanfic community, and it has caused a lot of strife and upset after a screenshot circulated of a TikTok in which someone said they were using ChatGPT to finish abandoned and/or incomplete fanfictions.

It’s a concept that has caused a lot of anger, a lot of hurt, and a lot of grief for authors who are facing the prospect of this being done to their work, and honestly that’s fair enough. As a fic writer myself, I also find the concept highly uncomfortable and have strong feelings about it. Maybe this person was trolling, maybe they were not, but the fact remains that this is increasingly becoming a subject of debate.

So, let's go through this mess step by step, see how exactly ‘AI theft’ is being done, what different levels this ‘theft’ is operating at, and what, if anything, can be done about it. And I’m not claiming to have a magic solution, but just that in understanding a problem, you have a better toolkit to explain to others why it is a problem and how to ask for the specific things that might help improve the situation.

Training datasets - yeah, there’s some problems here

One thing we need to talk about going into this section is how AI actually functions - AI here being specifically image and word generators.

Text generators such as ChatGPT and Sudowrite are essentially word calculators. When someone feeds in an input, a prompt saying ‘write me this email’ or ‘write me a script for so-and-so story’, they behave in essentially the same manner as the autocomplete on your phone. Part of a sentence gets written, and based on the hundreds of thousands of pieces of writing they examined in their training data, they write whatever the next most expected thing in the sentence would be.
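As a rough illustration of the autocomplete comparison, here is a toy next-word predictor in Python. Treat it as a simplified sketch under loud assumptions: real generators like ChatGPT use large neural networks over sub-word tokens and much longer context, not a simple table of which word followed which. The function names and the three-sentence training corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(texts):
    """Count, for every word, which words followed it in the training text."""
    following = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def autocomplete(following, prompt, length=4):
    """Extend the prompt by repeatedly appending the most common next word."""
    words = prompt.lower().split()
    for _ in range(length):
        options = following.get(words[-1])
        if not options:
            break  # this word was never followed by anything in training; stop
        # most_common(1) picks the most frequent follower; ties go to the
        # follower that was seen first in the training text
        words.append(options.most_common(1)[0][0])
    return " ".join(words)

# A tiny hypothetical "training set".
corpus = [
    "thank you for your email",
    "thank you for your help",
    "thank you for the story",
]
model = train_bigrams(corpus)
print(autocomplete(model, "thank you", length=3))  # thank you for your email
```

The output isn’t copied from any single training sentence by design; it’s just the most expected continuation, word by word, which is the point the paragraph above is making.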

For art generators, it comes down more to pattern recognition. A picture on a screen is just a pattern of pixels, and by feeding them huge amounts of training images you can teach them what the pattern for, say, ‘an apple’ looks like. When prompted, they can then use that learned pattern to spit out the image of an apple, one that doesn’t look specifically like any single image that went in. In essence it is a new ‘original’ apple, one that never existed exactly like that before.

All of these systems require huge amounts of training data to work. The more data they have, the better they can recognise patterns and spit out output that looks pretty or makes sense, or seems to properly match the prompt that was put in.

In both these situations, writing or art, you can put in a prompt and get out an output that was created on demand and is different from even the pieces of work it might have been trained on, something that is ‘original’. This is, I think, where a lot of the back and forth regarding use of generated writing and art as a whole comes from and why there’s a lot of debate about whether it counts as theft or not.

And honestly, whether generated content counts as ‘art’ or not, whether it counts as original, and whether the person who prompted it ‘owns it’ or the company who created the generator does isn’t a debate I want to get into here. Hello Future Me did a very good breakdown of this issue and the legalities of AI regarding copyright on YouTube, and I would recommend watching that if you want to learn more. Basically, it’s a murky pond with a lot of nuance and it’s not the debate we’re looking at here, so we’re just going to set it aside and focus on the other part of this instead - the training data.

Because yeah, currently the vast majority of these generative ‘AI’ models have been trained on materials taken without the creators’ consent. They need huge amounts of training data to work, and a great deal of it has been sourced in an unethical way. We’ve seen this with OpenAI scraping AO3 for writing to use as training material for their software - and being caught out by the whole knot thing. We know that Stable Diffusion was trained on the works of thousands of digital artists without their permission, which has now resulted in a class action lawsuit by artists against it.

And that fucking sucks.

It sucks on multiple levels. If someone creates a piece of art, copyright law and shit requires that someone wanting to make use of it ask permission. And that’s fair. Copyright law does have its issues, but it does provide some protection to artists and their work. Using someone else's work without permission is not okay, especially when you’re using it for your own profit. There are a couple of different aspects we need to unpick here, so let’s unpick them.

Yes, a generated work might not be a specific copy of any work that was taken and put into it, but honestly that doesn’t even matter - the lack of consent on the part of the people whose work is being used in this situation is unethical. Web scraping has always been a thing, but there’s a difference between people gathering data for research purposes and a corporation gathering the work of independent small artists and writers without their consent for use in generating their own profits. Because yes, these systems are currently free to use, but you know that monetisation is an any-day-now kind of thing, right? Eventually these generative tools will be paywalled and used as a means of earning profit for the companies that created them, and that work will be done off the back of all the artists/writers whose work was put in.

Already, on the level of being a regular writer or artist, this is pretty shitty (particularly when you consider that generated works are being eyed up by corporations as a way to avoid having to pay proper artists and writers for their work). As a fanworks author, the thought is even shittier. Part of what is lovely about the fan community is the fact that it’s all done for free. Fanworks exist in a specific legal framework that prevents monetisation, yes, and for the most part that’s something that’s celebrated. The joy of creating art or writing a story - of creating and sharing it with a community, just for the sake of doing it and not because of profit, is an integral part of what makes being a creator of fanworks so amazing. So to have something that you’ve created for free and shared for the love of it - specifically with the intent of never having it monetised - fed into a machine that will, in the end, be used to turn corporate profits is souring. People are entirely right to not feel pleased at that concept.

AI techbros would argue that there isn’t a way to create large generative models like this with big enough datasets unless they collect them the way they do, but is that really true?

Dance Diffusion is a music generation program that creates music from purely public domain, volunteered, and licenced material. It uses only works where the musicians have either consented to be part of the dataset, or have made their work available broadly for public use. And yes, the reason that they’ve taken this route is probably because the music industry is cutthroat with copyright and they’d be sued out of existence otherwise, but at the very least it proves that taking an ethical approach to data sourcing is entirely possible.

Already some generative image software is working to do the same. Adobe Firefly is an image generator that runs entirely on Adobe's own stock photos, licenced photos, and images that are public domain. This already seems like a more ethical direction, one which is essentially no different than a company using public domain images and modifying them for commercial purposes without using AI. Yes, it has a more constrained dataset and therefore might be less developed, but honestly if you can’t create a quality product without doing it ethically, you just shouldn’t make that product.

This is the element of consent missing from many of the big generative tools right now. The only reason this isn’t the approach being used in selecting what goes into training data is that techbros are testing the waters of what they can get away with, because yeah, taking this step takes more effort and is more expensive, even if it is demonstrably more ethical, and god forbid anything stand in the way of Silicon Valley profit margins.

So yes, there is unethical shit going on in regards to how people’s art and writing is being put into training sets without their consent, for the final result of generating corporate profits, and as a fanwork author it’s particularly galling when such a model might be making use of work you specifically created in good faith with a desire for it not to be commercialized. You have a right to be upset about this and to have a desire for your work not to be used.

Work that is used in training AI should have to be volunteered, obtained with a licence and the artist’s consent, or be works of public domain. It has to be an opt-in system, end of story.

If you take away this issue of stolen training works, the ethical dilemma of generated works is already a lot more palatable. Yeah, it might not be the same as making a work from scratch yourself and some might argue it’s not ‘real art’, but at that point it just becomes another tool people can use without this bad-taste-in-the-mouth feeling that you’re using software exploiting stolen works from non-consenting artists and writers. (The undervaluing of artists and writers works is something I acknowledge, but that’s a different debate and we’re not going to get into it here – though it will be touched upon briefly in the next section.)

The thing is, though, this issue isn’t one we can readily fix as individuals. This is something that’s going to come down to government regulation and legislation. If you feel strongly about it, those are the areas where you need to put pressure - learn about what regulation is being done, make known your support for regulations that do promote more ethical practice, and if you’re someone who is generating artistic works, then make your consumer vote count and use software that more ethically sources its training data.

And yeah, maybe this sort of sucks as a conclusion for this issue, but the cat’s out of the bag now and generated works are likely here to stay. We’re in the wild west of them right now, but things can be done and it doesn’t need to be the complete dystopia it currently feels like it might be becoming.

The ‘theft’ part of ‘art theft’

Here we move on from the issues inherent with the generative models themselves - or more specifically their training sets - and to the ways they’re being used. This issue is split into two parts, the first of which is a more prominent issue in visual art and the second of which is an issue more specific to fanfic writing.

Up until now you can sort of argue that use of a generative model is a victimless crime. The output you get may have been trained using someone else’s work, but it itself isn’t a strict copy of anything that exists. It’s created on demand and it’s unique. And yeah, you might say, maybe it sucks a bit that the training data stuff is a bit unethical but the harm there is already done and using it to generate a piece of art isn’t hurting anyone.

And to a point that’s true. If the ethical sourcing problem for data is solved, then there isn’t necessarily a lot of harm in using that software to generate something – it might not be the same process as creating art yourself, but it’s a tool that people can no doubt find some helpful uses for.

Using AI to copy someone’s style, or to finish one of their pieces of fiction, however, is something that hurts someone. It’s not a bad system causing the problem, but bad actors using that system to cause harm. In this, it is distinct from the previous theft issue we’ve discussed. Even in a world where all the big AI programs had ethically sourced training data, this would still be an issue.

‘Style’ theft – a problem for artists

One of the big things that’s been happening with image generation AIs is people training them to mimic certain styles, so that the user can then create new works in that style. There’s a lot of pretty furious debate back and forth about whether using a style is art theft and whether it’s infringing copyright, with the weeping techbros often hurrying to cry ‘you can’t own a style!’

And to a certain point, yeah, they have a point. If you were to train an AI on impressionist artworks and tell it ‘paint me a tree in the style of Monet’ I don’t think anyone would necessarily have a problem with it. It’s a style, yes, but no one person owns it, and most of the people who developed that style are long dead – more works being created in that style and even sold commercially isn’t going to undercut a specific artist’s market. You could up and decide to learn to paint yourself and start churning out Monet-esque works by hand and it wouldn’t be copyright infringement or art theft or any of the like.

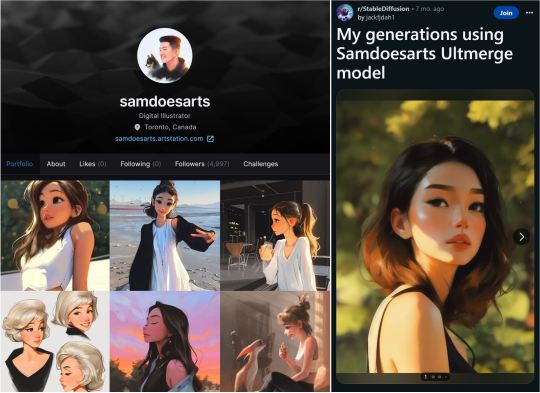

The situation is sort of different when you’re dealing with a living artist today. Already there have been cases of artists with distinct and beloved styles having their portfolios fed into AI without their consent, so that the prompter can then create works in their style.

(Original work by samdoesart on the left, and generated work trained on samdoesart’s style on the right, posted on reddit. A model was specifically created trained on this artist in order to create art that looks like it was made by them.)

And this is harmful in two separate ways. We’ve covered above why it’s unethical and shitty to feed someone else’s work into a training set without their consent, and for a work to be generated in a specific artist’s style, a large amount of their work needs to be put in for the AI to learn its patterns and mimic it. The artist hasn’t consented to their inclusion, and now their hard work and the style they’ve created have been absorbed and made available for corporate use. Remember – most of these programs retain full ownership over all works they produce, regardless of the user that ‘created’ them. All generated works are owned by the corporation.

Unlike general AI generation, where you’re creating some generic piece of art or writing and it doesn’t specifically harm anyone, this is a crime that directly has a victim. A specific artist has had their work used without consent, and often with the specific goal of creating work in that artist’s style to avoid having to commission or pay the artist for otherwise producing a work in their style. By creating this workaround where they don’t have to pay the artist for their work, they’re directly contributing to the harm of the artist’s financial prospects and the devaluing of their work.

When we talk about generic AI generation as a whole, the type where thousands of different distinct inputs go in and the thing that comes out doesn’t look specifically like any single one of them, you can make a case that it’s not copying any specific person’s work. This isn’t the case here. This is the use of AI for the purpose of creating art in a specific person’s style. As Hello Future Me puts it, “it is deriving a product from their data and their art without any attribution, payment, or credit.” And I don’t know about you, but that sounds a lot like theft to me. Yes, maybe a specific existing work was not explicitly copied, but you’re still making use of their style without their consent in a way that undermines their work and the market for their work, and that is a pretty shitty thing to do.

This whole section has talked about visual art so far, but you can see how it could also be applied to a written work. Telling an AI ‘write something in the style of Shakespeare’ is harmless, just as the Monet example was harmless, but you can readily see how this could also be applied to say, a poet who has a particular style of writing, with their portfolio of works fed in to create more poems in their style without their consent.

The key difference here, I think, is that the works of Monet or Shakespeare are part of the public domain. No one owns them and everyone is free to use them. Generating derivative works off their creations harms no one, because these creators are not alive and still producing - and selling - their work today. That cannot be said of living artists whose work is being exploited without compensation and whose livelihoods are being threatened by generated works.

Feeding an artist or writer’s work into an AI so that you can use their style, while not strictly ‘copying’ any single work of theirs, is still a harmful thing to do and should not be done. Even if you don’t intend to sell it and are just doing it for your private fun – even if you never even post the results online – you’ve still put their portfolio into the training set, making it a part of the resources a corporation can now use to turn a profit without repaying the artist or writer for their contribution.

The Fic Problem – or, completing someone else’s work

Now we move on to the specific issue that first prompted me to write this up. There’s been noise going around in the fic writing community about people using AI to write fanfic and/or to finish other people’s incomplete works.

In general, using AI to write fanfic is no different than using AI to come up with any other kind of creative writing. It has the same underlying ethical issues inherent in the system – which is, currently, that all work is being generated based on training data that was obtained without consent. Leaving aside whether in general generating creative writing is a good thing to do (what’s the point in writing, after all, if you don’t enjoy the process of actually writing?) let’s talk instead about the issue of people finishing other people’s fics.

The fanfiction community – and the fanart community as well I would wager – has always had something of a problem with entitlement. And look, I sort of get it. When you find an amazing fic that isn’t finished, it can hurt to reach the end and wonder if you’ll ever get to read any more of it. But that’s just the way it is. The fanworks community is one that is built overwhelmingly upon people creating labours of love just for the joy of it and sharing them free of charge. It’s hobby work, it’s hours squeezed in around jobs and families and kids, it’s someone scraping time out of their busy day to create something and share it just for the sake of it. People move onto different projects for all manner of reasons – maybe they don’t enjoy the fandom anymore, maybe that story now has baggage, maybe their tastes have just changed and they want to write different stuff. Maybe they stopped writing fiction altogether. Maybe they died.

Some stories will never be finished, and honestly, no author owes you the end of one. They have every right to write whatever they want and work on what projects they like, even if that means leaving some unfinished.

Coming in to complain about it, or to demand new updates – or, in this case, to finish the work yourself without their consent – is a shitty thing to do. And yeah, some authors do put their abandoned works up for adoption and are happy for people to finish them, but in most cases authors don’t want this done. That’s a personal choice and varies from author to author. Someone seeing an abandoned fic and choosing, with their own two hands, to write up an ending for it without asking the author is already a bit of a sketchy thing to do, even if it’s just done privately and never sees the light of day, and doing it using AI is even worse.

Because yeah, to do it, you have to feed that person’s fic into the AI. You, as a person, are putting a piece of work made with love and shared freely with you as a gift into a corporate dataset, where it will be used to generate corporate profits off the back of that person’s work. And more, if you want it to write properly in their style and mimic them well, then hey, you probably have to put in even more of their work so that the AI can copy them well, which means even more of their works taken without their consent and put into the AI.

And that fucking sucks. It’s unethical, it’s a shitty thing to do, and if it’s unacceptable for a corporation to be taking someone’s work without their consent for use in AI training, then it’s unacceptable for you as an individual to be handing someone else’s work to them of your own volition.

As a fanfiction author, I cannot imagine anything more disheartening. I have a lot of unfinished works, yes, some of which are even completely abandoned, but even when I haven’t touched them in years I don’t stop caring about those fics. I have everything that will happen in them already planned out – all the arcs, the twists, the resolutions and the ends – and someone else finishing it without my consent robs me of the chance to do it myself. You don’t know if an author still cares about a fic, whether they’re trying to work up the motivation to put out a new chapter even years later. You don’t know why they stopped writing it, or what that story might mean to them.

And I think this is what a lot of it comes down to. People will assume that because a fic is abandoned the author doesn’t care about it anymore, or that because it’s fanfiction they don’t ‘own it’. Nothing could be further from the truth. Authors do still care, and yes, they do own their own work. A transformative work might be one based on another franchise and one that makes use of another person’s copyright, but everything you put in yourself is still yours. Any new material, new characters, new concepts, new settings – the prose you use, the way you write, your turns of phrase. Those legally belong to the fanfic author even though they’re writing fanfic.

And yeah, some people might argue that putting someone's fanfic through an AI is a transformative act in itself. People write fic of fic, after all, so is creating something with AI based on fic really any different? Yes and no. A transformative work is one that builds on a copyrighted work in a different manner or for a different purpose from the original, adding "new expression, meaning, or message". It is this transformative act that makes it fair use, rather than just theft.

Could an AI do this, creating something that is ‘transformative’? Maybe. It’s not for me to say, and AI generators are getting better every day. In the use of AI in continuing a fic, however, I would argue that its use is not transformative but instead derivative. A derivative work is based on a work that has already been copyrighted. The new work arises, or derives, from the previous work. It’s things like a sequel or an adaptation, and you could clearly argue that the continuation of a story, a ‘Part 2’, counts under this banner of derivative rather than transformative.

And okay, relying on copyright law while already in the nebulous space of fanfic feels like a bit of a weird thing, but it still counts here. Anything new that a person puts into a fic belongs to them, and if you take it without their consent then yes, that is indeed stealing. Putting it through an AI or not doesn’t change that.

And if the legal arguments here don’t sway you, then how about this - it’s a dick thing to do. Even if you don’t mean for it to hurt anyone, you are hurting the author. If you enjoy their work at all - and you must, if you’re so attached to it that you're desperate to see more of it - then respect the author. Respect the work they’ve done, the gift they’ve shared with you. Read it, enjoy it, and then move on.

Fandom is built on a shared foundation of love and respect, a community where people create things and share them with others in good faith just for the love of it. This relationship is a two-way street. Authors and artists share their work with you and put in hours making it, but if the fan community stops respecting that then things might end up changing. An author burned by seeing their work fed into AI might stop writing, or stop sharing their works publicly. Already people are locking down their AO3 accounts so that only registered users can read and are creating workskins to prevent text copy and pasting out of fear that their work will be the one that someone chooses to ‘finish for them’. Dread over non-consenting use of AI is already having a direct impact in making fic more inaccessible.

Is that the direction that we as a community want to head in? Because what’s currently happening is a real shame. I would prefer to share my works with non-users, to have them read by anyone who cares to read them, but I’ve had to change that, just in the hope that that small extra step might provide a slight sliver of protection that will keep my work from being put into an AI.

And what comes after that? Currently, sites like AO3 allow readers to download copies of fics so that they can store them themselves. If authors are concerned about their work being taken and used in AI, will they begin petitioning for this feature to be removed, so as to better protect their work from this sort of use? Tools such as Glaze are already being developed to help artists protect their work from use in training datasets, but what recourse do writers have, save to make their work more and more inaccessible and private? No doubt some authors are already contemplating whether it’s better to only share their works privately with friends or in Discord servers, in the hope that this might better protect their works from being stolen and used.

It’s worth noting as well that any way in which an author restricts fic on AO3 to prevent AI use, such as using a workskin to prevent copying and pasting, will also impact people’s ability to do things like ficbinding and translations. If access is restricted, then it’s restricted for everyone, good and bad.

And some of you might say: well, what does it matter if your work does end up in an AI? It’s a drop in the ocean, they won’t be copying your exact work, so what does it even matter? And all I can say in response to that is that it’s about the principle of it. If someone is making use of another person’s work, then that person deserves to be fairly credited and compensated. If I create work for free, in rebellion against a world that is racing towards the commercialisation of all and everything, then I don’t want my work being exploited by some fucking corporation to earn profits. That’s not why I made it, that’s not why I shared it, and they can go fuck themselves.

Unethical corporations are one thing, but the other component of this problem is people within the community acting in bad faith. We might not be able, as individuals, to regulate mass AI data gathering, but we can choose how we behave in community spaces, and what things we choose to find acceptable in our communities.

What I’m trying to say is, if you love fanfiction, if you love fanart, if you love the works people create and want to see more, then please treat their creators with respect. We put a lot of work into creating the art you enjoy, we put blood and sweat and tears into it, and we want to keep making more. We want to share it with you. We can’t do that if you make us scared to do it, out of fear of our works being put into an AI without our consent.

It's about respect, it’s about common decency. We as a community need to decide whether we think it’s acceptable for people to put someone’s work into an AI without their consent – whether it’s acceptable to finish someone else’s work using an AI without their consent. Maybe AO3 will make some sort of ruling about it in the future, or maybe they won’t. Even if they do, it likely won’t make that big of a difference. Bad actors will act badly no matter the rules; all we can do as a community is make it clear how we feel about that and discourage these sorts of behaviours.

Generative AI tools exist and, like it or not, are here to stay. They have a lot of potential and could become really useful and interesting tools in both writing and the visual arts, but as it stands there are serious issues with how they are used – both on a system-wide level, with the unethical gathering of data, and on a user level, with people using them in bad faith.

AI is what it is, but it doesn’t change the underlying choice here – you as a member of the community have the choice whether to behave like a dick. Don’t be a dick.

#artificial intelligence#chatgpt#sudowrite#openAI#stablediffusion#fanfiction#fanart#fantasy#creative writing#fic#ao3#ff.net#image generation#art theft#te talks

5 notes


Text

Dreamt about programming an image generator last night. Not an AI text-to-image thing, but a tool that could merge multiple filters and effects in user-defined ways to create something new.

It’s entirely possible for me to do this IRL; the only real question is whether or not anyone would find it useful or interesting.

6 notes


Text

Unleashing Creativity: The Revolutionary Role of Midjourney AI in Concept Art

In the dynamic world of creative design, technology has become a driving force behind the transformation of artistic processes. This is especially evident in the field of concept art, where artificial intelligence (AI) is pushing the boundaries of imagination and producing captivating visual representations. Leading the charge in this exciting development is Midjourney AI, a platform that empowers artists and designers to take their concept art to new heights.

The Evolution of Concept Art

Concept art has always been a crucial component in the creative industry, providing a visual blueprint for a variety of projects including video games, films, product designs, and architecture. It's the initial spark that ignites the imagination and assists artists and designers in bringing their ideas to fruition. Traditional concept art techniques have limitations, often requiring significant time and resources. However, with Midjourney AI, this process has been revolutionized. It offers a groundbreaking alternative that unleashes limitless creativity. Gone are the days of laborious and limited creative processes.

Midjourney AI: A Creative Partner

Thanks to the advancement of technology, specifically AI, and platforms like Midjourney AI, artists and designers now have a powerful and innovative ally by their side. Midjourney AI is revolutionizing the world of concept art and design. With a fusion of AI and human imagination, this platform empowers artists to bring their ideas to life with speed and precision. The possibilities with Midjourney AI are truly remarkable. Whether it's character designs, landscapes, environments, or abstract concepts, the platform effortlessly generates captivating visuals that align with an artist's vision. All it takes is a few input parameters or descriptions, and Midjourney AI takes care of the rest. The results are not only stunning, but they also capture the essence of an artist's creativity.

Efficiency and creativity are at the core of Midjourney AI

By utilizing this tool, artists can shave hours off their concept art creation process. Gone are the days of endless revisions and tedious iterations. With Midjourney AI, ideas flow freely and rapidly, giving artists more time to perfect their creations. Beyond just saving time, Midjourney AI serves as a boundless source of inspiration. Its versatile capabilities allow artists to explore new styles and directions, opening up endless possibilities for their projects. It's like having a constant, reliable partner by your side, always ready to push the boundaries and spark fresh ideas.

Embracing Collaboration and Creativity

Midjourney AI also offers the flexibility for collaboration. Artists and designers can use the generated concepts as a starting point and then apply their personal touch to create unique, customized artwork. This collaborative approach combines the efficiency of AI with the artistic finesse of humans, resulting in the best of both worlds.

In the evolving world of concept art, the inclusion of AI, particularly through platforms like Midjourney AI, marks the beginning of a revolutionary era. Gone are the days of choosing between human skill and technology; now, it is a collaborative alliance, with both parties working together seamlessly to produce exceptional results.

Midjourney AI revolutionizes creativity by streamlining the concept art process and igniting fresh ideas. Not only does it save time, but it also elevates the calibre and diversity of concept art. As we witness the ever-changing landscape of creativity, Midjourney AI serves as a prime example of how technology can fuel inspiration and innovation.

Whether you're an aspiring artist, an experienced designer, or a visionary with a unique gift, Midjourney AI is your ultimate co-creator, empowering your artistic vision and propelling your concept art to new heights.


#graphic design#ai artwork#artificial intelligence#artwork#ai art#ai art gallery#ai art generator#midjourney#digital marketing#image generation#portrait#character design#illustration#digital illustration#illustrative art

2 notes


Text

'INTERLUDIO XLVII'. AI 🤖 hmm...

Just played with one of the AI image generators out of curiosity, attempting an ethnological/historical reconstruction of an ancient Amazon warrior.

My 'AI experience' - at this stage it's next to impossible to make these generators understand historical context and produce authentic, historically accurate armour, props or costumes.

It looks like the AI draws on readily available, easily searchable pop-culture 'fantasy' reference images [like phony Wonder Woman "amazons" or other spoofy interpretations], disregarding the description criteria I used, such as "classical antiquity", "historical accuracy", "nomadic culture", "pelta shield", "phrygian cap", etc.

Nevertheless, though somewhat skeptical, I find this experiment curious [and hope that AI image-generation technology is still at an early stage of development, with a whole lot of cultural and civilizational matters yet to learn and more training required]. What do you think?

I'm keen to hear your opinion.

#interludio#ai#ai images#image generation#historical reconstruction#ai experiments#photorealistic#nomadic culture#ancient history#culture#mythology#images#amazons#αμαζόνες#amazones#amazzoni#amazonen#oiorpata#warrior woman#female warrior#women in history#ancient#civilization#historical context#ai technology#ai art#digital#reenactment#experiment#michaelsvetbird

4 notes
