#large language models

Text

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein


Text

I suppose the thin silver lining to the discoverability of online resources going to shit because of SEO exploitation is that all the folks who responded to reasonable questions with snarky "let me Google that for you" links which now lead to nothing but AI-generated gibberish look like real assholes now.


Text

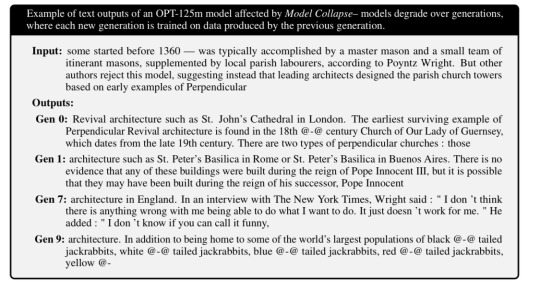

Training large language models on the outputs of previous large language models leads to degraded results. As all the nuance and rough edges get smoothed away, the result is less diversity, more bias, and …jackrabbits?
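The loss of diversity can be illustrated with a toy that is far simpler than any real language model. This is a minimal sketch under stated assumptions: the "model" is only a word-frequency table, the starting corpus is made up, and the function names are hypothetical. Each generation is sampled from a table fitted to the previous generation, so a word that drops out once can never return.

```python
import random
from collections import Counter


def next_generation(corpus, rng):
    """Fit a toy unigram 'model' to corpus, then sample a same-sized corpus from it."""
    counts = Counter(corpus)
    tokens = list(counts)
    weights = [counts[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=len(corpus))


def distinct_per_generation(corpus, generations, seed=0):
    """Return the number of distinct words at generation 0, 1, ..., generations."""
    rng = random.Random(seed)
    history = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        history.append(len(set(corpus)))
    return history


# Hypothetical starting corpus: two common words and thirty rare ones.
human_corpus = ["the"] * 50 + ["rabbit"] * 20 + [f"rare_{i}" for i in range(30)]
history = distinct_per_generation(human_corpus, generations=20)
print(history)
```

The count of distinct words can only stay level or fall from one generation to the next, and in practice the rare words tend to vanish first. Real training pipelines are much more complicated, so treat this as an intuition pump rather than a model of them.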

#neural networks#large language models#llm#internet training data#jackrabbits#is this the singularity#I have already made the joke about low botground data#ai eats itself#when grifters fill the internet with ai generated seo sludge


Link

Popular large language models (LLMs) like OpenAI’s ChatGPT and Google’s Bard are energy intensive, requiring massive server farms to provide enough data to train the powerful programs. Cooling those same data centers also makes the AI chatbots incredibly thirsty. New research suggests training for GPT-3 alone consumed 185,000 gallons (700,000 liters) of water. An average user’s conversational exchange with ChatGPT basically amounts to dumping a large bottle of fresh water out on the ground, according to the new study. Given the chatbot’s unprecedented popularity, researchers fear all those spilled bottles could take a troubling toll on water supplies, especially amid historic droughts and looming environmental uncertainty in the US.

[...]

Water consumption issues aren’t limited to OpenAI or AI models. In 2019, Google requested more than 2.3 billion gallons of water for data centers in just three states. The company currently has 14 data centers spread out across North America which it uses to power Google Search, its suite of workplace products, and more recently, its LaMDA and Bard large language models. LaMDA alone, according to the recent research paper, could require millions of liters of water to train, larger than GPT-3 because several of Google’s thirsty data centers are housed in hot states like Texas; researchers issued a caveat with this estimation, though, calling it an “approximate reference point.”

Aside from water, new LLMs similarly require a staggering amount of electricity. A Stanford AI report released last week looked at differences in energy consumption among four prominent AI models, estimating OpenAI’s GPT-3 released 502 metric tons of carbon during its training. Overall, the energy needed to train GPT-3 could power an average American’s home for hundreds of years.


Text

Auto-Generated Junk Web Sites

I don't know if you heard the complaints about Google getting worse since 2018, or about Amazon getting worse. Some people think Google got worse at search. I think Google got worse because the web got worse. Amazon got worse because the supply side on Amazon got worse, but ultimately Amazon is to blame for incentivising the sale of more and cheaper products on its platform.

In any case, if you search something on Google, you get a lot of junk, and if you search for a specific product on Amazon, you get a lot of junk, even though the process that led to the junk is very different.

I don't subscribe to the "Dead Internet Theory", the idea that most online content is social media and that most social media is bots. I think Google search has gotten worse because a lot of content from as recently as 2018 got deleted, and a lot of web 1.0 and the blogosphere got deleted, comment sections got deleted, and content in the style of web 1.0 and the blogosphere is no longer produced. Furthermore, many links are now broken because they don't directly link to web pages, but to social media accounts and tweets that used to aggregate links.

I don't think going back to web 1.0 will help discoverability, and it probably won't be as profitable or even monetiseable to maintain a useful web 1.0 page compared to an entertaining but ephemeral YouTube channel. Going back to Web 1.0 means more long-term after-hours labour of love site maintenance, and less social media posting as a career.

Anyway, Google has gotten noticeably worse since GPT-3 and ChatGPT were made available to the general public, and many people blame content farms with language models and image synthesis for this. I am not sure. If Google had started to show users meaningless AI-generated content from large content farms, that would mean Google has finally lost the SEO war, and that Google is worse at AI/language models than fly-by-night operations whose whole business model is skimming clicks off Google.

I just don't think that's true. I think the reality is worse.

Real web sites run by real people are getting overrun by AI-generated junk, and human editors can't stop it. Real people whose job it is to generate content are increasingly turning in AI junk at their jobs.

Furthermore, even people who are setting up a web site for a local business or an online presence for their personal brand/CV are using auto-generated text.

I have seen at least two different TV commercials by web hosting and web design companies that promoted this. Are you starting your own business? Do you run a small business? A business needs a web site. With our AI-powered tools, you don't have to worry about the content of your web site. We generate it for you.

There are companies out there today, selling something that's probably a re-labelled ChatGPT or LLaMA plus Stable Diffusion to somebody who is just setting up a bicycle repair shop. All the pictures and written copy on the web presence for that repair shop will be automatically generated.

We would be living in a much better world if there was a small number of large content farms and bot operators poisoning our search results. Instead, we are living in a world where many real people are individually doing their part.


Text

Three reasons not to use AI to write spells

Large language models generate text based on probability. This means that whatever spell you try to generate would be extremely similar, if not identical, to the first results that would come up if you searched DuckDuckGo.

The creators of large language models are unlikely to care enough about cultural appropriation and environmental harm to ensure that all of the spell components are ethical on these matters.

Generative AI uses an absurd amount of resources to operate and contributes to pollution and water shortages. This might not be the case forever, but it's how things are right now. And there is simply no reason to have an LLM write a spell for you when you could just search DuckDuckGo.

#generative ai#ai#lmm#large language models#lmms#witchcraft#witchblr#spells#spellcrafting#witchcraft community


Text

Yet Another Thing Black women and BIPOC women in general have been warning you about since forever that you (general You; societal You; mostly WytFolk You) have ignored or dismissed, only for it to come back and bite you in the butt.

I'd hoped people would have learned their lesson with Trump and the Alt-Right (remember, Black women in particular warned y'all that attacks on us by brigading trolls was the test run for something bigger), but I guess not.

Any time you wanna get upset about how AI is ruining things for artists or writers or workers at this job or that, remember that BIPOC Women Warned You and then go listen extra hard to the BIPOC women in your orbit and tell other people to listen to BIPOC women and also give BIPOC women money.

I'm not gonna sugarcoat it.

Give them money via PayPal or Ko-fi or Venmo or Patreon or whatever. Hire them. Suggest them for that creative project/gig you can't take on--or you could take it on but how about you toss the ball to someone who isn't always asked?

Oh, and stop asking BIPOC women to save us all. Because, as you see, we tried that already. We gave you the roadmap on how to do it yourselves. Now? We're tired.

Of the trolls, the alt-right, the colonizers, the tech bros, the billionaires, the other scum... and also you. You who claim to be progressive, claim to be an ally, spend your time talking about what sucks without doing one dang thing to boost the signal, make a change in your community (online or offline), or take even the shortest turn standing on the front lines and challenging all that human garbage that keeps collecting in the corners of every space with more than 10 inhabitants.

We Told You. Octavia Butler Told You. Audre Lorde Told You. Sydette Harry Told You. Mikki Kendall Told You. Timnit Gebru Told You.

When are you gonna listen?

#tw: alt-right#tw: alt right#AI#generative AI#LLM#large language models#GamerGate#online harassment#Black women#BIPOC#women of color


Text

youtube

I'm doing something I don't usually do, posting a link to YouTube, to Stephen Fry reading a letter about Large Language Models, popularly if incorrectly known as AI. In this case the discussion is about ChatGPT but the letter he reads, written by Nick Cave, applies to the others as well.

The essence of art, music, writing and other creative endeavors, from embroidery to photography to games to other endeavors large and small, is the time and care and love that some human being or beings have put into it. Without that you can create a commodity, yes, but you can't create meaning, the kind of meaning that nurtures us each time the results of creativity, modern or of any time, pass among us. That meaning which we share among all of us is the food of the human spirit and we need it just as we need the food we give to our bodies.


Text

My New Article at WIRED


So, you may have heard about the whole Zoom “AI” Terms of Service clause public relations debacle, going on this past week, in which Zoom decided that it wasn’t going to let users opt out of them feeding our faces and conversations into their LLMs. In 10.1, Zoom defines “Customer Content” as whatever data users provide or generate (“Customer Input”) and whatever else Zoom generates from our uses of Zoom. Then 10.4 says what they’ll use “Customer Content” for, including “…machine learning, artificial intelligence.”

And then on cue they dropped an “oh god oh fuck oh shit we fucked up” blog where they pinky promised not to do the thing they left actually-legally-binding ToS language saying they could do.

Like, Section 10.4 of the ToS now contains the line “Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent,” but again it still seems a) that the “customer” in question is the Enterprise not the User, and b) that “consent” means “clicking yes and using Zoom.” So it’s Still Not Good.

Well anyway, I wrote about all of this for WIRED, including what Zoom might need to do to gain back customer and user trust, and what other tech creators and corporations need to understand about where people are, right now.

And frankly the fact that I have a byline in WIRED is kind of blowing my mind, in and of itself, but anyway…

Also, today, Zoom backtracked Hard. And while I appreciate that, it really feels like Zoom decided to take their ball and go home rather than offer meaningful consent and user control options. That’s… not exactly better, and doesn’t tell me what if anything they’ve learned from the experience. If you want to see what I think they should’ve done, then, well… Check the article.

Until Next Time.


Read the rest of My New Article at WIRED at A Future Worth Thinking About

#ai#artificial intelligence#ethics#generative pre-trained transformer#gpt#large language models#philosophy of technology#public policy#science technology and society#technological ethics#technology#zoom#privacy


Link

CancerGPT, an advanced machine learning model introduced by scientists from the University of Texas and the University of Massachusetts, USA, harnesses the power of large pre-trained language models (LLMs) to predict the outcomes of drug combination therapy on rare human tissues found in cancer patients. The significance of this innovative approach becomes even more apparent in medical research fields where data organization and sample sizes are limited. CancerGPT could pave the way for significant advancements in understanding and treating rare cancers, offering new hope for patients and researchers alike.

Drug combination therapy is a promising strategy for treating cancer. However, predicting the synergy between drug pairs poses considerable challenges due to the vast number of possible combinations and complex biological interactions. This difficulty is particularly pronounced in rare tissues, where data is scarce. However, LLMs are here to address this formidable challenge head-on. Can these powerful language models unlock the secrets of drug pair synergy in rare tissues? Let’s find out!



Photo

https://arxiv.org/pdf/2304.13712v2.pdf


Text

Supervised AI isn't

It wasn't just Ottawa: Microsoft Travel published a whole bushel of absurd articles, including the notorious Ottawa guide recommending that tourists dine at the Ottawa Food Bank ("go on an empty stomach"):

https://twitter.com/parismarx/status/1692233111260582161

After Paris Marx pointed out the Ottawa article, Business Insider's Nathan McAlone found several more howlers:

https://www.businessinsider.com/microsoft-removes-embarrassing-offensive-ai-assisted-travel-articles-2023-8

There was the article recommending that visitors to Montreal try "a hamburger," which went on to explain that a hamburger was a "sandwich comprised of a ground beef patty, a sliced bun of some kind, and toppings such as lettuce, tomato, cheese, etc" and that some of the best hamburgers in Montreal could be had at McDonald's.

For Anchorage, Microsoft recommended trying the local delicacy known as "seafood," which it defined as "basically any form of sea life regarded as food by humans, prominently including fish and shellfish," going on to say, "seafood is a versatile ingredient, so it makes sense that we eat it worldwide."

In Tokyo, visitors seeking "photo-worthy spots" were advised to "eat Wagyu beef."

There were more.

Microsoft insisted that this wasn't an issue of "unsupervised AI," but rather "human error." On its face, this presents a head-scratcher: is Microsoft saying that a human being erroneously decided to recommend dining at Ottawa's food bank?

But a close parsing of the mealy-mouthed disclaimer reveals the truth. The unnamed Microsoft spokesdroid only appears to be claiming that this wasn't written by an AI, but they're actually just saying that the AI that wrote it wasn't "unsupervised." It was a supervised AI, overseen by a human. Who made an error. Thus: the problem was human error.

This deliberate misdirection actually reveals a deep truth about AI: that the story of AI being managed by a "human in the loop" is a fantasy, because humans are neurologically incapable of maintaining vigilance in watching for rare occurrences.

Our brains wire together neurons that we recruit when we practice a task. When we don't practice a task, the parts of our brain that we optimized for it get reused. Our brains are finite and so don't have the luxury of reserving precious cells for things we don't do.

That's why the TSA sucks so hard at its job – why they are the world's most skilled water-bottle-detecting X-ray readers, but consistently fail to spot the bombs and guns that red teams successfully smuggle past their checkpoints:

https://www.nbcnews.com/news/us-news/investigation-breaches-us-airports-allowed-weapons-through-n367851

TSA agents (not "officers," please – they're bureaucrats, not cops) spend all day spotting water bottles that we forget in our carry-ons, but almost no one tries to smuggle a weapon through a checkpoint – 99.999999% of the guns and knives they do seize are the result of flier forgetfulness, not a planned hijacking.

In other words, they train all day to spot water bottles, and the only training they get in spotting knives, guns and bombs is in exercises, or the odd time someone forgets about the hand-cannon they shlep around in their day-pack. Of course they're excellent at spotting water bottles and shit at spotting weapons.

This is an inescapable, biological aspect of human cognition: we can't maintain vigilance for rare outcomes. This has long been understood in automation circles, where it is called "automation blindness" or "automation inattention":

https://pubmed.ncbi.nlm.nih.gov/29939767/

Here's the thing: if nearly all of the time the machine does the right thing, the human "supervisor" who oversees it becomes incapable of spotting its error. The job of "review every machine decision and press the green button if it's correct" inevitably becomes "just press the green button," assuming that the machine is usually right.

This is a huge problem. It's why people just click "OK" when they get a bad certificate error in their browsers. 99.99% of the time, the error was caused by someone forgetting to replace an expired certificate, but the problem is, the other 0.01% of the time, it's because criminals are waiting for you to click "OK" so they can steal all your money:

https://finance.yahoo.com/news/ema-report-finds-nearly-80-130300983.html
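The arithmetic behind that habit is worth spelling out. This is a minimal sketch, assuming the illustrative 0.01% figure above and assuming each warning is independent of the others (a simplification); the function name is hypothetical. It computes the chance that at least one of the warnings you clicked through was the dangerous kind.

```python
def chance_of_at_least_one(p_malicious, warnings_clicked):
    """Probability that at least one of the clicked-through warnings was malicious.

    Assumes every warning is independently malicious with probability p_malicious.
    """
    return 1 - (1 - p_malicious) ** warnings_clicked


P_MALICIOUS = 0.0001  # the 0.01% figure from the text, used here for illustration only

for warnings_clicked in (1, 100, 10_000):
    risk = chance_of_at_least_one(P_MALICIOUS, warnings_clicked)
    print(f"{warnings_clicked:>6} warnings clicked: {risk:.2%} chance of at least one bad one")
```

Any single click is almost always harmless, which is exactly what trains the reflex. The risk only becomes visible across a large number of clicks, whether those come from one person over years or from many people on a single day.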

Automation blindness can't be automated away. From interpreting radiographic scans:

https://healthitanalytics.com/news/ai-could-safely-automate-some-x-ray-interpretation

to autonomous vehicles:

https://newsroom.unsw.edu.au/news/science-tech/automated-vehicles-may-encourage-new-breed-distracted-drivers

The "human in the loop" is a figleaf. The whole point of automation is to create a system that operates at superhuman scale – you don't buy an LLM to write one Microsoft Travel article, you get it to write a million of them, to flood the zone, top the search engines, and dominate the space.

As I wrote earlier: "There's no market for a machine-learning autopilot, or content moderation algorithm, or loan officer, if all it does is cough up a recommendation for a human to evaluate. Either that system will work so poorly that it gets thrown away, or it works so well that the inattentive human just button-mashes 'OK' every time a dialog box appears":

https://pluralistic.net/2022/10/21/let-me-summarize/#i-read-the-abstract

Microsoft – like every corporation – is insatiably horny for firing workers. It has spent the past three years cutting its writing staff to the bone, with the express intention of having AI fill its pages, with humans relegated to skimming the output of the plausible sentence-generators and clicking "OK":

https://www.businessinsider.com/microsoft-news-cuts-dozens-of-staffers-in-shift-to-ai-2020-5

We know about the howlers and the clunkers that Microsoft published, but what about all the other travel articles that don't contain any (obvious) mistakes? These were very likely written by a stochastic parrot, and they comprised training data for a human intelligence, the poor schmucks who are supposed to remain vigilant for the "hallucinations" (that is, the habitual, confidently told lies that are the hallmark of AI) in the torrent of "content" that scrolled past their screens:

https://dl.acm.org/doi/10.1145/3442188.3445922

Like the TSA agents who are fed a steady stream of training data to hone their water-bottle-detection skills, Microsoft's humans in the loop are being asked to pluck atoms of difference out of a raging river of otherwise characterless slurry. They are expected to remain vigilant for something that almost never happens – all while they are racing the clock, charged with preventing a slurry backlog at all costs.

Automation blindness is inescapable – and it's the inconvenient truth that AI boosters conspicuously fail to mention when they are discussing how they will justify the trillion-dollar valuations they ascribe to super-advanced autocomplete systems. Instead, they wave around "humans in the loop," using low-waged workers as props in a Big Store con, just a way to (temporarily) cool the marks.

And what of the people who lose their (vital) jobs to (terminally unsuitable) AI in the course of this long-running, high-stakes infomercial?

Well, there's always the food bank.

"Go on an empty stomach."

Going to Burning Man? Catch me on Tuesday at 2:40pm on the Center Camp Stage for a talk about enshittification and how to reverse it; on Wednesday at noon, I'm hosting Dr Patrick Ball at Liminal Labs (6:15/F) for a talk on using statistics to prove high-level culpability in the recruitment of child soldiers.

On September 6 at 7pm, I'll be hosting Naomi Klein at the LA Public Library for the launch of Doppelganger.

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

West Midlands Police (modified)

https://www.flickr.com/photos/westmidlandspolice/8705128684/

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#automation blindness#humans in the loop#stochastic parrots#habitual confident liars#ai#artificial intelligence#llms#large language models#microsoft


Text

I'm trying to debug a fairly subtle syntax error in a customer inventory report, and out of sheer morbid curiosity I decided to see what my SQL syntax checker's shiny new "Fix Syntax With AI" feature had to say about it.

After "thinking" about it for nearly a full minute, it produced the following:

SELECT
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION
SELECT
    COUNT(id)
FROM
    customers
WHERE
    customers.deleted = 0
    AND customers.id = NULL
UNION

I suspect my day job isn't in peril any time soon.
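
For anyone wondering why the generated query would be useless even if it parsed: in standard SQL, comparing anything to NULL with "=" never evaluates to true, so each of those repeated clauses would match no rows. Here is a minimal sketch of that behaviour using Python's built-in sqlite3 module. The table layout and sample rows are made up for illustration (my real schema is not shown here), and it uses COUNT(*) rather than COUNT(id) so that the row with a NULL id is visible in the second result.

```python
import sqlite3


def count_matches(where_clause: str) -> int:
    """Count non-deleted rows in a throwaway customers table matching where_clause."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema and data, invented purely for this demonstration.
    conn.execute("CREATE TABLE customers (id INTEGER, deleted INTEGER)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [(1, 0), (2, 0), (None, 0), (3, 1)],
    )
    query = f"SELECT COUNT(*) FROM customers WHERE deleted = 0 AND {where_clause}"
    (count,) = conn.execute(query).fetchone()
    conn.close()
    return count


# "= NULL" yields NULL (not true) for every row, so nothing matches.
equals_null = count_matches("id = NULL")
# "IS NULL" is the comparison that actually finds the row with a missing id.
is_null = count_matches("id IS NULL")
print(equals_null, is_null)
```

So even with the doubled SELECT and the dangling UNION removed, the "fixed" query would just report zero over and over.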

Text

This is hilarious to me and not terrifying only because every example I've seen of this Gemini shit solidifies for me that it's intentional internal sabotage.

Link

MW: I don’t think there’s any evidence that large machine learning models—that rely on huge amounts of surveillance data and the concentrated computational infrastructure that only a handful of corporations control—have the spark of consciousness.

We can still unplug the servers, the data centers can flood as the climate encroaches, we can run out of the water to cool the data centers, the surveillance pipelines can melt as the climate becomes more erratic and less hospitable.

I think we need to dig into what is happening here, which is that, when faced with a system that presents itself as a listening, eager interlocutor that’s hearing us and responding to us, we seem to fall into a kind of trance in relation to these systems, and almost counterfactually engage in some kind of wish fulfillment: thinking that they’re human, and there’s someone there listening to us. It’s like when you’re a kid, and you’re telling ghost stories, something with a lot of emotional weight, and suddenly everybody is terrified and reacting to it. And it becomes hard to disbelieve.

FC: What you said just now—the idea that we fall into a kind of trance—what I’m hearing you say is that’s distracting us from actual threats like climate change or harms to marginalized people.

MW: Yeah, I think it’s distracting us from what’s real on the ground and much harder to solve than war-game hypotheticals about a thing that is largely kind of made up. And particularly, it’s distracting us from the fact that these are technologies controlled by a handful of corporations who will ultimately make the decisions about what technologies are made, what they do, and who they serve. And if we follow these corporations’ interests, we have a pretty good sense of who will use it, how it will be used, and where we can resist to prevent the actual harms that are occurring today and likely to occur.

Text

AI: A Misnomer

As you know, Game AI is a misnomer, a misleading name. Games usually don't need to be intelligent, they just need to be fun. There is NPC behaviour (be they friendly, neutral, or antagonistic), computer opponent strategy for multi-player games ranging from chess to Tekken or StarCraft, and unit pathfinding. Some games use novel and interesting algorithms for computer opponents (Frozen Synapse uses some sort of evolutionary algorithm) or for unit pathfinding (Planetary Annihilation uses flow fields for mass unit pathfinding), but most of the time it's variants or mixtures of simple hard-coded behaviours, minimax with alpha-beta pruning, state machines, HTN, GOAP, and A*.
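
To make "simple hard-coded behaviours" concrete, here is a minimal sketch of the kind of state machine that sits behind a lot of NPC behaviour, written in Python. The states, event names, and function names are invented for illustration and are not taken from any particular game or engine.

```python
from enum import Enum, auto


class State(Enum):
    PATROL = auto()
    CHASE = auto()
    FLEE = auto()


# Hard-coded transition table: (current state, event) -> next state.
# Any pair not listed here leaves the NPC in its current state.
TRANSITIONS = {
    (State.PATROL, "player_seen"): State.CHASE,
    (State.CHASE, "player_lost"): State.PATROL,
    (State.CHASE, "low_health"): State.FLEE,
    (State.FLEE, "healed"): State.PATROL,
}


def step(state: State, event: str) -> State:
    """Return the next state for one event, staying put on unknown pairs."""
    return TRANSITIONS.get((state, event), state)


def run(events, state: State = State.PATROL) -> State:
    """Feed a sequence of events through the machine and return the final state."""
    for event in events:
        state = step(state, event)
    return state


final_state = run(["player_seen", "low_health"])
print(final_state.name)
```

Nothing in this table learns or reasons. It is a lookup, and as long as the guard chases you at the right moment, that is all the game needs.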

Increasingly, AI outside of games has become a misleading term, too. It used to be that people called more things AI, then machine learning was called machine learning, robotics was called robotics, expert systems were called expert systems, then later ontologies and knowledge engineering were called the semantic web, and so on, with the remaining approaches and the original old-fashioned AI still being called AI.

AI used to be cool, then it was uncool, and the useful bits of AI were used for recommendation systems, spam filters, speech recognition, search engines, and translation. Calling it "AI" was hand-waving, a way to obscure what your system does and how it works.

With the advent of ChatGPT, we have arrived in the worst of both worlds. Calling things "AI" is cool again, but now some people use "AI" to refer specifically to large language models or text-to-image generators based on language models. Some people still use "AI" to mean autonomous robots. Some people use "AI" to mean simple artificial neural networks, Bayesian filtering, and recommendation systems. Infuriatingly, the word "algorithm" has increasingly entered the vernacular to refer to Bayesian filters and recommendation systems, for situations where a computer science textbook would still use "heuristic". Computer science textbooks still use "AI" to mean things like chess playing, maze solving, and fuzzy logic.

Let's look at a recent example! Scott Alexander wrote a blog post (https://www.astralcodexten.com/p/god-help-us-lets-try-to-understand) about current research (https://transformer-circuits.pub/2023/monosemantic-features/index.html) on distributed representations and sparsity, and the topology of the representations learned by a neural network. Scott Alexander is a psychiatrist with no formal training in machine learning or even programming. He uses the term "AI" to refer to neural networks throughout the blog post. He doesn't say "distributed representations", or "sparse representations". The original publication did use technical terms like "sparse representation". These should be familiar to people who followed the debates about local representations versus distributed representations back in the 80s (or people like me who read those papers in university). But in that blog post, it's not called a neural network, it's called an "AI". Now there could be two reasons for this: either Scott Alexander doesn't know any better (or, more charitably, he does but doesn't know how to use the more precise terminology correctly), or he intentionally wants to dumb down the research for people who intuitively understand what a latent feature space is but have never heard of "machine learning" or "artificial neural networks".

Another example can come in the form of a thought experiment: You write an app that helps people tidy up their rooms, and find things in that room after putting them away, mostly because you needed that app for yourself. You show the app to a non-technical friend, because you want to know how intuitive it is to use. You ask him if he thinks the app is useful, and if he thinks people would pay money for this thing on the app store, but before he answers, he asks a question of his own: Does your app have any AI in it?

What does he mean?

Is "AI" just the new "blockchain"?
