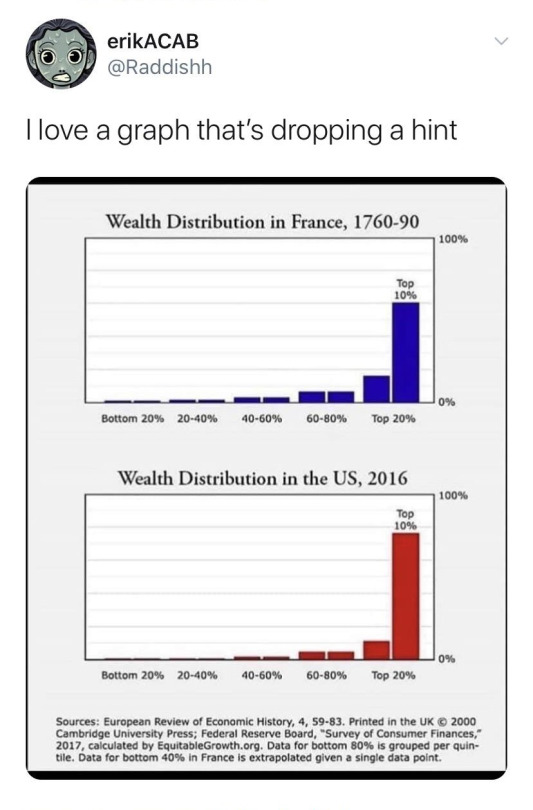

#economics

#Trump#gaza#biden#free palestine#democrats#republicans#politics#kamala harris#joe biden#nyt#economics

162 notes

Appendix I: Swarms of Butterflies, Dragon-Flies, etc.

M.C. Piepers has published in Natuurkundig Tijdschrift voor Nederlandsch Indië, 1891, Deel L. p. 198 (analyzed in Naturwissenschaftliche Rundschau, 1891, vol. vi. p. 573), interesting researches into the mass-flights of butterflies which occur in Dutch East India, seemingly under the influence of great draughts occasioned by the west monsoon. Such mass-flights usually take place in the first months after the beginning of the monsoon, and it is usually individuals of both sexes of Catopsilia (Callidryas) crocale, Cr., which join in it, but occasionally the swarms consist of individuals belonging to three different species of the genus Euphœa. Copulation seems also to be the purpose of such flights. That these flights are not the result of concerted action but rather a consequence of imitation, or of a desire of following all others, is, of course, quite possible.

Bates saw, on the Amazon, the yellow and the orange Callidryas “assembling in densely packed masses, sometimes two or three yards in circumference, their wings all held in an upright position, so that the beach looked as though variegated with beds of crocuses.” Their migrating columns, crossing the river from north to south, “were uninterrupted, from an early hour in the morning till sunset” (Naturalist on the Amazon, p. 131).

Dragon-flies, in their long migrations across the Pampas, come together in countless numbers, and their immense swarms contain individuals belonging to different species (Hudson, Naturalist on the La Plata, pp. 130 seq.). The grasshoppers (Zoniopoda tarsata) are also eminently gregarious (Hudson, l.c. p. 125).

#organization#revolution#mutual aid#anarchism#daily posts#communism#anti capitalist#anti capitalism#late stage capitalism#anarchy#anarchists#libraries#leftism#social issues#economy#economics#climate change#anarchy works#environmentalism#environment#solarpunk#anti colonialism#a factor of evolution#petr kropotkin

15 notes

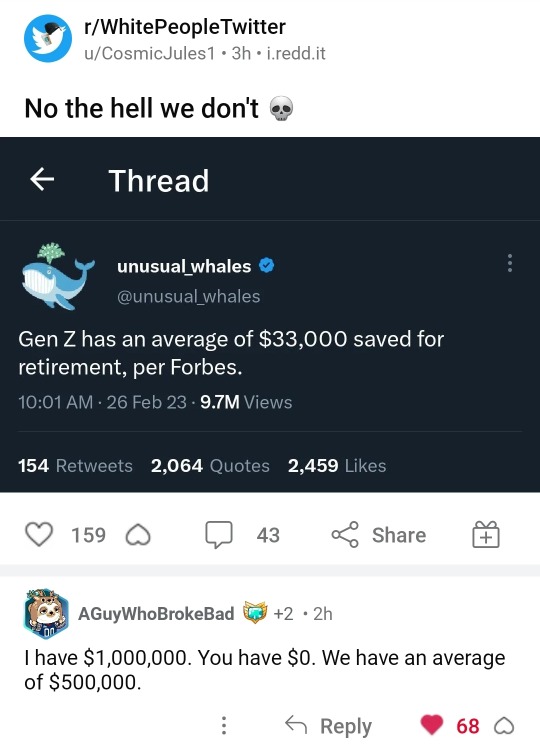

#lgbt#lgbtq#lgbtqia#economics#economy#capitalism#politics#twitter#tweets#tweet#meme#memes#funny#lol#humor

72K notes

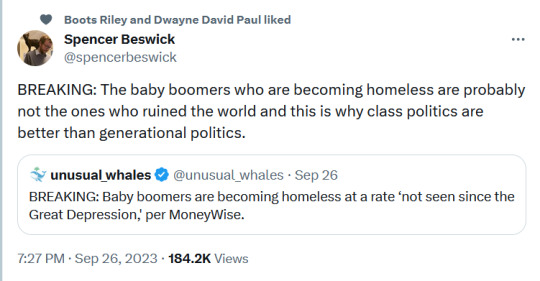

#twitter#tweet#tweets#boomers#economics#economy#homeless#class politics#generational politics#class#inequality#wealth inequality

44K notes

19K notes

I feel like a good shorthand for a lot of economics arguments is "if you want people to work minimum wage jobs in your city, you need to allow minimum wage apartments for them to live in."

"These jobs are just for teenagers on the weekends." Okay, so you'll use minimum wage services only on the weekends and after school. No McDonald's or Starbucks on your lunch break.

"They can get a roommate." For a one bedroom? A roommate for a one bedroom? Or a studio? Do you have a roommate to get a middle-wage apartment for your middle-wage job? No? Why should they?

"They can live farther from city center and just commute." Are there ways for them to commute that don't equate to that rent? Living in an outer borough might work in NYC, where public transport is a flat rate, but a city in Texas requires a car. Does the money saved in rent equal the money spent on the car loan, the insurance, the gas? Remember, if you want people to take the bus or a bike, the bus needs to be reliable and the bike lanes survivable.

If you want minimum wage workers to be around for you to rely on, then those minimum wage workers need a place to stay.

You either raise the minimum wage, or you drop the rent. There's only so long you can keep rents high and wages low before your workforce leaves for cheaper pastures.

"Nobody wants to work anymore" doesn't hold water if the reason nobody applies is because the commute is impossible at the wage you provide.

94K notes

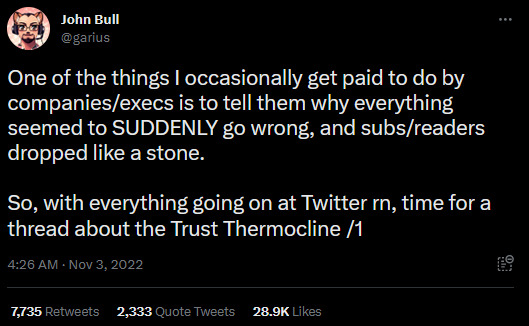

Really good Twitter thread originally about Elon Musk and Twitter, but also applies to Netflix and a lot of other corporations.

Full thread. Text transcription under cut.

John Bull @garius

One of the things I occasionally get paid to do by companies/execs is to tell them why everything seemed to SUDDENLY go wrong, and subs/readers dropped like a stone. So, with everything going on at Twitter rn, time for a thread about the Trust Thermocline /1

So: what's a thermocline?

Well, large bodies of water are made of layers of differing temperatures. Like a layer cake. The top bit is where all the waves happen and has a gradually decreasing temperature. Then SUDDENLY there's a point where it gets super-cold.

That suddenly is important. There's reasons for it (Science!) but it's just a good metaphor. Indeed you may also be interested in the "Thermocline of Truth" which is a project management term for how things on a RAG board all suddenly go from amber to red.

But I digress.

The Trust Thermocline is something that, over (many) years of digital, I have seen both digital and regular content publishers hit time and time again. Despite warnings (at least when I've worked there). And it has a similar effect. You have lots of users then suddenly... nope.

And this does affect print publications as much as trendy digital media companies. They'll be flying along making loads of money, with lots of users/readers, rolling out new products that get bought. Or events. Or Sub-brands.

And then SUDDENLY those people just abandon them.

Often it's not even to "new" competitor products, but stuff they thought were already not a threat. Nor is there lots of obvious dissatisfaction reported from sales and marketing (other than general grumbling). Nor is it a general drift away, it's just a sudden big slide.

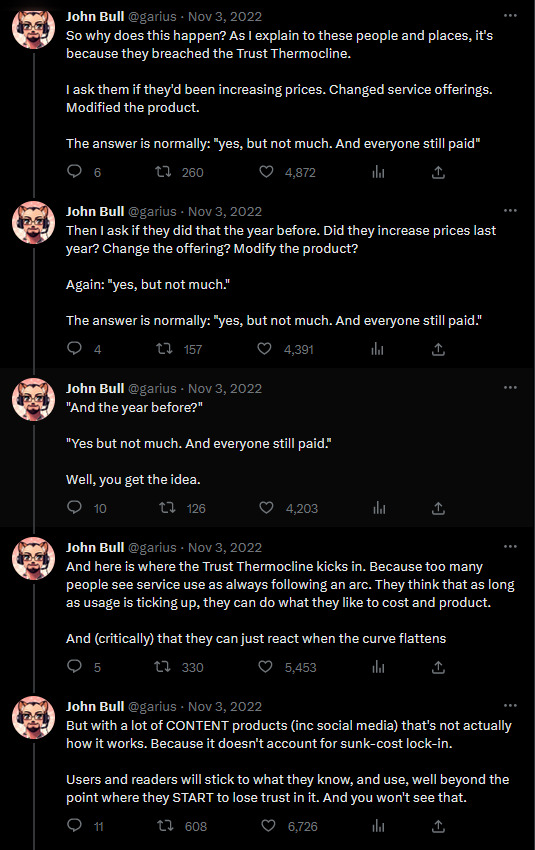

So why does this happen? As I explain to these people and places, it's because they breached the Trust Thermocline.

I ask them if they'd been increasing prices. Changed service offerings. Modified the product.

The answer is normally: "yes, but not much. And everyone still paid"

Then I ask if they did that the year before. Did they increase prices last year? Change the offering? Modify the product?

Again: "yes, but not much. And everyone still paid."

"And the year before?"

"Yes but not much. And everyone still paid."

Well, you get the idea.

And here is where the Trust Thermocline kicks in. Because too many people see service use as always following an arc. They think that as long as usage is ticking up, they can do what they like to cost and product.

And (critically) that they can just react when the curve flattens.

But with a lot of CONTENT products (inc social media) that's not actually how it works. Because it doesn't account for sunk-cost lock-in.

Users and readers will stick to what they know, and use, well beyond the point where they START to lose trust in it. And you won't see that.

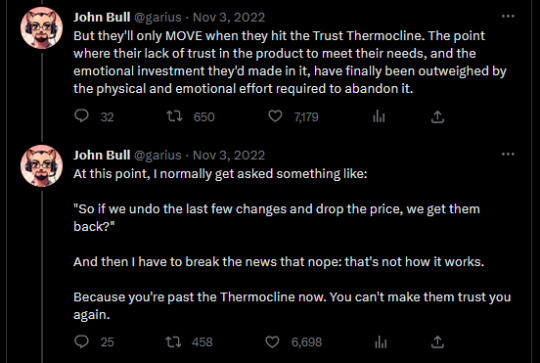

But they'll only MOVE when they hit the Trust Thermocline. The point where their lack of trust in the product to meet their needs, and the emotional investment they'd made in it, have finally been outweighed by the physical and emotional effort required to abandon it.

At this point, I normally get asked something like:

"So if we undo the last few changes and drop the price, we get them back?"

And then I have to break the news that nope: that's not how it works.

Because you're past the Thermocline now. You can't make them trust you again.

56K notes

#gay#queer#lgbt#lgbtqia#dank memes#dark humor#economics#economy#politics#us politics#tweet#twitter#funny#lol#haha#humor#meme#memes

7K notes

Every single craft has been paying “The Passion Tax” for generations. This term (coined by author and organizational psychologist Adam Grant) — and backed by scientific research — simply states that the more someone is passionate about their work, the more acceptable it is to take advantage of them. In short, loving what we do makes us easy to exploit.

Guest Column: If Writers Lose the Standoff With Studios, It Hurts All Filmmakers

#quotes#writing#economics#capitalism#the passion tax#adam grant#writers#wga#wga strong#writers strike

26K notes

The French really don’t fuck around.

#France#emmanuel macron#capitalism#socialism#america#usa#democrats#republicans#gop#twitter#economics#fox news#pensions#macron

205K notes

I love when newspapers are like "why are people so pessimistic about the economy when stocks are up and inflation is slowing?" Maybe because stocks mean next to nothing to the average person? Maybe because inflation slowing down still leaves it astronomically high when wages haven't kept pace? Maybe because rents and housing in general have increased far beyond normal inflation and people are only left with the choice to pay up or be homeless? Maybe because most people's lives are still a complete hellscape in the real world regardless of the theoretical numbers on your little spreadsheet????

10K notes

What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Superbowl Ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, Perl and Python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Superbowl Ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning TensorFlow and PyTorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, it could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals systems that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models that are being built and operated at a loss in the hope of eventually becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in PyTorch and TensorFlow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified)

https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

4K notes

Okay, I completely understand that getting time off work can be a Sisyphean ordeal these days, but every time I run into the whole "only rich people go on vacation" discourse I'm thinking surely I'm not the only one whose childhood experience of "going on vacation" was piling everybody into the car and driving for six hours to pay twenty dollars a day for the privilege of setting up some leaky tents on a fifty-foot-by-fifty-foot patch of dirt next to a mosquito-infested pond in a "private campground" whose only standout features were a. an outdoor miniature golf course that hadn't been maintained in twenty years, and b. a truly breathtaking fire ant population.

3K notes
