AI is here – and everywhere: 3 AI researchers look to the challenges ahead in 2024

by Anjana Susarla, Professor of Information Systems at Michigan State University, Casey Fiesler, Associate Professor of Information Science at the University of Colorado Boulder, and Kentaro Toyama, Professor of Community Information at the University of Michigan

2023 was an inflection point in the evolution of artificial intelligence and its role in society. The year saw the emergence of generative AI, which moved the technology from the shadows to center stage in the public imagination. It also saw boardroom drama in an AI startup dominate the news cycle for several days. And it saw the Biden administration issue an executive order and the European Union pass a law aimed at regulating AI, moves perhaps best described as attempting to bridle a horse that’s already galloping along.

We’ve assembled a panel of AI scholars to look ahead to 2024 and describe the issues AI developers, regulators and everyday people are likely to face, and to give their hopes and recommendations.

Casey Fiesler, Associate Professor of Information Science, University of Colorado Boulder

2023 was the year of AI hype. Regardless of whether the narrative was that AI was going to save the world or destroy it, it often felt as if visions of what AI might be someday overwhelmed the current reality. And though I think that anticipating future harms is a critical component of overcoming ethical debt in tech, getting too swept up in the hype risks creating a vision of AI that seems more like magic than a technology that can still be shaped by explicit choices. But taking control requires a better understanding of that technology.

One of the major AI debates of 2023 was around the role of ChatGPT and similar chatbots in education. This time last year, most relevant headlines focused on how students might use it to cheat and how educators were scrambling to keep them from doing so – in ways that often do more harm than good.

However, as the year went on, there was a recognition that a failure to teach students about AI might put them at a disadvantage, and many schools rescinded their bans. I don’t think we should be revamping education to put AI at the center of everything, but if students don’t learn about how AI works, they won’t understand its limitations – and therefore when it is useful and appropriate to use and when it’s not. This isn’t just true for students. The more people understand how AI works, the more empowered they are to use it and to critique it.

So my prediction, or perhaps my hope, for 2024 is that there will be a huge push to learn. In 1966, Joseph Weizenbaum, the creator of the ELIZA chatbot, wrote that machines are “often sufficient to dazzle even the most experienced observer,” but that once their “inner workings are explained in language sufficiently plain to induce understanding, its magic crumbles away.” The challenge with generative artificial intelligence is that, in contrast to ELIZA’s very basic pattern matching and substitution methodology, it is much more difficult to find language “sufficiently plain” to make the AI magic crumble away.

I think it’s possible to make this happen. I hope that universities that are rushing to hire more technical AI experts put just as much effort into hiring AI ethicists. I hope that media outlets help cut through the hype. I hope that everyone reflects on their own uses of this technology and its consequences. And I hope that tech companies listen to informed critiques in considering what choices continue to shape the future.

Kentaro Toyama, Professor of Community Information, University of Michigan

In 1970, Marvin Minsky, the AI pioneer and neural network skeptic, told Life magazine, “In from three to eight years we will have a machine with the general intelligence of an average human being.” With the singularity – the moment artificial intelligence matches and begins to exceed human intelligence – still not here, it’s safe to say that Minsky was off by at least a factor of 10. It’s perilous to make predictions about AI.

Still, making predictions for a year out doesn’t seem quite as risky. What can be expected of AI in 2024? First, the race is on! Progress in AI had been steady since the days of Minsky’s prime, but the public release of ChatGPT in 2022 kicked off an all-out competition for profit, glory and global supremacy. Expect more powerful AI, in addition to a flood of new AI applications.

The big technical question is how soon and how thoroughly AI engineers can address the current Achilles’ heel of deep learning – what might be called generalized hard reasoning, things like deductive logic. Will quick tweaks to existing neural-net algorithms be sufficient, or will it require a fundamentally different approach, as neuroscientist Gary Marcus suggests? Armies of AI scientists are working on this problem, so I expect some headway in 2024.

Meanwhile, new AI applications are likely to result in new problems, too. You might soon start hearing about AI chatbots and assistants talking to each other, having entire conversations on your behalf but behind your back. Some of it will go haywire – comically, tragically or both. Deepfakes – AI-generated images and videos that are difficult to detect – are likely to run rampant despite nascent regulation, causing more sleazy harm to individuals and democracies everywhere. And there are likely to be new classes of AI calamities that wouldn’t have been possible even five years ago.

Speaking of problems, the very people sounding the loudest alarms about AI – like Elon Musk and Sam Altman – can’t seem to stop themselves from building ever more powerful AI. I expect them to keep doing more of the same. They’re like arsonists calling in the blaze they stoked themselves, begging the authorities to restrain them. And along those lines, what I most hope for 2024 – though it seems slow in coming – is stronger AI regulation, at national and international levels.

Anjana Susarla, Professor of Information Systems, Michigan State University

In the year since the unveiling of ChatGPT, the development of generative AI models is continuing at a dizzying pace. In contrast to ChatGPT a year back, which took in textual prompts as inputs and produced textual output, the new class of generative AI models are trained to be multi-modal, meaning the data used to train them comes not only from textual sources such as Wikipedia and Reddit, but also from videos on YouTube, songs on Spotify, and other audio and visual information. With the new generation of multi-modal large language models (LLMs) powering these applications, you can use text inputs to generate not only images and text but also audio and video.

Companies are racing to develop LLMs that can be deployed on a variety of hardware and in a variety of applications, including running an LLM on your smartphone. The emergence of these lightweight LLMs and open source LLMs could usher in a world of autonomous AI agents – a world that society is not necessarily prepared for.

These advanced AI capabilities offer immense transformative power in applications ranging from business to precision medicine. My chief concern is that such advanced capabilities will pose new challenges for distinguishing between human-generated content and AI-generated content, as well as pose new types of algorithmic harms.

The deluge of synthetic content produced by generative AI could unleash a world where malicious people and institutions can manufacture synthetic identities and orchestrate large-scale misinformation. A flood of AI-generated content primed to exploit algorithmic filters and recommendation engines could soon overpower critical functions such as information verification, information literacy and serendipity provided by search engines, social media platforms and digital services.

The Federal Trade Commission has warned about fraud, deception, infringements on privacy and other unfair practices enabled by the ease of AI-assisted content creation. While digital platforms such as YouTube have instituted policy guidelines for disclosure of AI-generated content, there’s a need for greater scrutiny of algorithmic harms from agencies like the FTC and lawmakers working on privacy protections such as the American Data Privacy & Protection Act.

A new bipartisan bill introduced in Congress aims to codify algorithmic literacy as a key part of digital literacy. With AI increasingly intertwined with everything people do, it is clear that the time has come to focus not on algorithms as pieces of technology but on the contexts the algorithms operate in: people, processes and society.


Pluto: Netflix’s anime masterpiece explores how robots ‘feel’ when humans exploit them

by Thi Gammon, Research Associate in Culture, Media and Creative Industries Education at King's College London

There have been many TV shows and films inspired by the dual fear and excitement surrounding advances in artificial intelligence (AI). But not many exhibit such masterful craft and profound humanity as the new Netflix anime miniseries, Pluto.

Pluto is adapted from a manga series of the same title (2003-2009), created by Naoki Urasawa and Takashi Nagasaki. The manga version – considered a comic masterpiece for its beautiful art and sophisticated storyline – incorporated fundamental elements from Osamu Tezuka’s celebrated manga series Astro Boy (1952-1968), including the beloved android adolescent who was the titular character.

Pluto is set in a futuristic world in which humans and robots coexist, albeit within a hierarchy in favour of humans. Robots excel in various jobs ranging from nannies and butlers to architects and detectives, but they are treated as second-class citizens.

Although robots gradually gain their own rights codified into law, they are still exploited by humans, who downplay their worth and emotional intelligence. As much as humans depend on AI, they also feel threatened by it.

An AI murder mystery

Pluto, which has both Japanese and English audio versions, follows German robot detective Gesicht (Shinshū Fuji/Jason Vande Brake) as he traces the mysterious killings of robots and humans. The world’s seven most advanced robots (including Gesicht himself) and robot-friendly humans (including his creator) are the targets of this assassination scheme.

What’s most perplexing is that the murders appear untraceable. This suggests that the killer might be a very advanced robot, challenging the belief that robots can’t ever kill humans due to their programmed constraints.

This enigmatic case echoes the cautionary message found in Mary Shelley’s Frankenstein – beware of human beings’ ambitious dreams and creations. While the story begins as a murder mystery, it evolves into a thoughtful drama about the conflicted relationships between humans and androids.

While Pluto draws on many familiar sci-fi concepts, it distinguishes itself through its meticulous character development and the depth of its micro-stories. Every character is complex, and the audience is able to get to know them and become invested in their fates. The anime’s unhurried pace also allows viewers ample time to contemplate its philosophical questions about the evolution of consciousness and the powerful impacts of emotions.

Despite all its brilliance, however, the series is not without flaws. It has a dated representation of gender roles, with no female characters – whether human or robot – playing an important part. None of them break free from the stereotypical role of nurturing, stay-behind support for their exceptionally capable and powerful male partners.

Animation of the year

Pluto maintains a melancholic tone throughout – but despite this overarching dark ambience, it is at times romantic and moving. It exalts love, friendship and compassion without falling into sentimentality, evoking an emotional resonance reminiscent of Blade Runner (1982).

The series emphasises that life, or the process of living, imparts character and humanity, transcending biological organs and blood. Androids may initially be devoid of complex emotions, but they develop sentience through everyday experiences and interactions with fellow robots and humans.

Robots can even learn to appreciate music, as manifested by the charismatic North No.2 (Koichi Yamadera/Patrick Seitz), who was designed for intense combat but grows weary of warfare. The narrative underscores the simultaneous beauty and danger of emotions – particularly the destructive force of wrath.

Despite its great technological advancements and comforts, this futuristic world is still torn by war. It poses the question: “Will war ever end?” – reminding us of the conflicts and tragedies happening in the real world. The anime suggests that an end to war is unlikely as long as hatred persists.

For me, with its beautiful art and riveting narrative, Pluto stands out as one of the best Netflix productions of all time. It’s certainly the best animated work of the year.


From the Moon’s south pole to an ice-covered ocean world, several exciting space missions are slated for launch in 2024

by Ali M. Bramson, Assistant Professor of Earth, Atmospheric, and Planetary Sciences at Purdue University

The year 2023 proved to be an important one for space missions, with NASA’s OSIRIS-REx mission returning a sample from an asteroid and India’s Chandrayaan-3 mission exploring the lunar south pole, and 2024 is shaping up to be another exciting year for space exploration.

Several new missions under NASA’s Artemis plan and Commercial Lunar Payload Services initiative will target the Moon.

The latter half of the year will feature several exciting launches, with the launch of the Martian Moons eXploration mission in September, Europa Clipper and Hera in October and Artemis II and VIPER to the Moon in November – if everything goes as planned.

I’m a planetary scientist, and here are six of the space missions I’m most excited to follow in 2024.

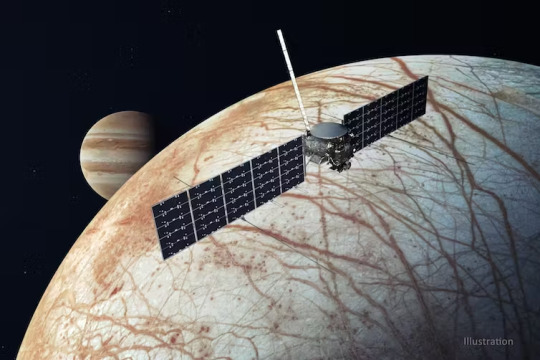

1. Europa Clipper

NASA will launch Europa Clipper, which will explore one of Jupiter’s largest moons, Europa. Europa is slightly smaller than Earth’s Moon, with a surface made of ice. Beneath its icy shell, Europa likely harbors a saltwater ocean, which scientists expect contains over twice as much water as all the oceans here on Earth combined.

With Europa Clipper, scientists want to investigate whether Europa’s ocean could be a suitable habitat for extraterrestrial life.

The mission plans to do this by flying past Europa nearly 50 times to study the moon’s icy shell, its surface’s geology and its subsurface ocean. The mission will also look for active geysers spewing out from Europa.

This mission will change the game for scientists hoping to understand ocean worlds like Europa.

The launch window – the period when the mission could launch and achieve its planned route – opens Oct. 10, 2024, and lasts 21 days. The spacecraft will launch on a SpaceX Falcon Heavy rocket and arrive at the Jupiter system in 2030.

2. Artemis II launch

The Artemis program, named after Apollo’s twin sister in Greek mythology, is NASA’s plan to go back to the Moon. It will send humans to the Moon for the first time since 1972, including the first woman and the first person of color. Artemis also includes plans for a longer-term, sustained presence in space that will prepare NASA for eventually sending people even farther – to Mars.

Artemis II is the first crewed step in this plan, with four astronauts planned to be on board during the 10-day mission.

The mission builds upon Artemis I, which sent an uncrewed capsule into orbit around the Moon in late 2022.

Artemis II will put the astronauts into orbit around the Moon before returning them home. It is currently planned for launch as early as November 2024. But there is a chance it will get pushed back to 2025, depending on whether all the necessary gear, such as spacesuits and oxygen equipment, is ready.

3. VIPER to search for water on the Moon

VIPER, which stands for Volatiles Investigating Polar Exploration Rover, is a robot the size of a golf cart that NASA will use to explore the Moon’s south pole in late 2024.

The mission was originally scheduled for launch in 2023, but NASA pushed it back to complete more tests on the lander system, which Astrobotic, a private company, developed as part of the Commercial Lunar Payload Services program.

This robotic mission is designed to search for volatiles, which are molecules, like water and carbon dioxide, that easily vaporize at lunar temperatures. These materials could provide resources for future human exploration on the Moon.

The VIPER robot will rely on batteries, heat pipes and radiators throughout its 100-day mission, as it navigates everything from the extreme heat of lunar daylight – when temperatures can reach 224 degrees Fahrenheit (107 degrees Celsius) – to the Moon’s frigid shadowed regions that can reach a mind-boggling -400 F (-240 C).

VIPER’s launch and delivery to the lunar surface is scheduled for November 2024.

4. Lunar Trailblazer and PRIME-1 missions

NASA has recently invested in a class of small, low-cost planetary missions called SIMPLEx, which stands for Small, Innovative Missions for PLanetary Exploration. These missions save costs by tagging along on other launches as what is called a rideshare, or secondary payload.

One example is the Lunar Trailblazer. Like VIPER, Lunar Trailblazer will look for water on the Moon.

But while VIPER will land on the Moon’s surface, studying a specific area near the south pole in detail, Lunar Trailblazer will orbit the Moon, measuring the temperature of the surface and mapping out the locations of water molecules across the globe.

Currently, Lunar Trailblazer is on track to be ready by early 2024.

However, because it is a secondary payload, Lunar Trailblazer’s launch timing depends on the primary payload’s launch readiness. The PRIME-1 mission, scheduled for a mid-2024 launch, is Lunar Trailblazer’s ride.

PRIME-1 will drill into the Moon – it’s a test run for the kind of drill that VIPER will use. But its launch date will likely depend on whether earlier launches go on time.

An earlier Commercial Lunar Payload Services mission with the same landing partner was pushed back to February 2024 at the earliest, and further delays could push back PRIME-1 and Lunar Trailblazer.

5. JAXA’s Martian Moons eXploration mission

While Earth’s Moon has many visitors – big and small, robotic and crewed – planned for 2024, Mars’ moons Phobos and Deimos will soon be getting a visitor as well. The Japan Aerospace Exploration Agency, or JAXA, has a robotic mission in development called the Martian Moons eXploration, or MMX, planned for launch around September 2024.

The mission’s main science objective is to determine the origin of Mars’ moons. Scientists aren’t sure whether Phobos and Deimos are former asteroids that Mars captured into orbit with its gravity or if they formed out of debris that was already in orbit around Mars.

The spacecraft will spend three years around Mars conducting science operations to observe Phobos and Deimos. MMX will also land on Phobos’ surface and collect a sample before returning to Earth.

6. ESA’s Hera mission

Hera is a mission by the European Space Agency to return to the Didymos-Dimorphos asteroid system that NASA’s DART mission visited in 2022.

But DART didn’t just visit these asteroids; it collided with one of them to test a planetary defense technique called “kinetic impact.” DART hit Dimorphos with such force that it actually changed its orbit.

The kinetic impact technique smashes something into an object in order to alter its path. This could prove useful if humanity ever finds a potentially hazardous object on a collision course with Earth and needs to redirect it.
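
At its simplest, the kinetic impact idea is momentum transfer. The short Python sketch below assumes the textbook relation Δv = β·m·v / M, where m and v are the impactor’s mass and speed, M is the target’s mass and β is a momentum-enhancement factor for the extra push from debris blasted off the surface (β = 1 means no extra push). The function name, β and the sample numbers are hypothetical illustrations, not values from DART or from this article.

```python
def kinetic_impact_delta_v(impactor_mass_kg: float,
                           impactor_speed_m_s: float,
                           target_mass_kg: float,
                           beta: float = 1.0) -> float:
    """Return the target's velocity change (m/s) after a head-on kinetic impact.

    The target gains beta * m * v of momentum. beta > 1 models extra momentum
    from ejected debris (an assumption of this sketch). The impactor's own mass
    is left out of the denominator because the target is assumed to be far heavier.
    """
    if target_mass_kg <= 0:
        raise ValueError("target mass must be positive")
    momentum_transferred = beta * impactor_mass_kg * impactor_speed_m_s
    return momentum_transferred / target_mass_kg


# Hypothetical round numbers: a 500 kg spacecraft at 6 km/s hitting a 5-billion-kg asteroid.
dv = kinetic_impact_delta_v(500.0, 6_000.0, 5.0e9)
print(f"{dv * 1000:.2f} mm/s")  # 0.60 mm/s
```

The resulting nudge is well under a millimetre per second, which is why the impactor has to be fast and the effect is measured as a change in the target’s orbit rather than as a visible jolt.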

Hera will launch in October 2024, making its way in late 2026 to Didymos and Dimorphos, where it will study physical properties of the asteroids.


Tatahouine: ‘Star Wars meteorite’ sheds light on the early Solar System

by Ben Rider-Stokes, Post Doctoral Researcher in Achondrite Meteorites at The Open University

Locals watched in awe as a fireball exploded and hundreds of meteorite fragments rained down on the city of Tatahouine, Tunisia, on June 27, 1931. Fittingly, the city later became a major filming location of the Star Wars movie series. The desert climate and traditional villages became a huge inspiration to the director, George Lucas, who proceeded to name the fictional home planet of Luke Skywalker and Darth Vader “Tatooine”.

The mysterious 1931 meteorite, a rare type of achondrite (a meteorite that has experienced melting) known as a diogenite, is obviously not a fragment of Skywalker’s home planet, but it was similarly named after the city of Tatahouine. Now, a recent study has gleaned important insights into the origin of the meteorite – and the early Solar System.

Lucas filmed scenes for several Star Wars films in Tatahouine, including Star Wars: Episode IV – A New Hope (1977), Star Wars: Episode I – The Phantom Menace (1999) and Star Wars: Episode II – Attack of the Clones (2002). Various famous scenes were filmed there, including scenes of “Mos Espa” and “Mos Eisley Cantina”.

Mark Hamill, the actor who played Luke Skywalker, reminisced about filming in Tunisia and discussed it with Empire Magazine: “If you could get into your own mind, shut out the crew and look at the horizon, you really felt like you were transported to another world”.

Composition and origin

Diogenites, named after the Greek philosopher Diogenes, are igneous meteorites (rocks that have solidified from lava or magma). They formed at depth within an asteroid and cooled slowly, resulting in the formation of relatively large crystals.

Tatahouine is no exception, containing crystals as big as 5 mm, with black veins cutting across the sample throughout. The black veins are called shock-induced impact melt veins and are the result of high temperatures and pressures caused by a projectile smashing into the surface of the meteorite’s parent body.

The presence of these veins and the structure of the grains of pyroxene (minerals containing calcium, magnesium, iron, and aluminum) suggest the sample has experienced pressures of up to 25 gigapascals (GPa). To put that into perspective, the pressure at the bottom of the Mariana Trench, the deepest part of our ocean, is only about 0.1 GPa, roughly 250 times lower. So it is safe to say this sample has experienced a pretty hefty impact.

By measuring the spectra of meteorites (the light reflecting off their surfaces, broken down by wavelength) and comparing them with those of asteroids and planets in our Solar System, researchers have suggested that diogenites, including Tatahouine, originate from the second-largest asteroid in our asteroid belt, known as 4 Vesta.

This asteroid holds interesting and exciting information about the early Solar System. Many of the meteorites from 4 Vesta are ancient, around 4 billion years old. They therefore offer a window onto events in the early Solar System that we are unable to evaluate here on Earth.

Violent past

The recent study investigated 18 diogenites, including Tatahouine, all from 4 Vesta. The authors used “radiometric argon-argon age dating” to determine the ages of the meteorites. This technique compares two isotopes of argon (isotopes are versions of an element whose nuclei have more or fewer particles called neutrons). One of them, argon-40, builds up in a sample at a known rate as radioactive potassium-40 decays, so comparing the ratio between the two isotopes lets scientists estimate the age of the sample.
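
The age calculation itself fits in a few lines. The Python sketch below assumes the standard argon-argon age equation t = ln(1 + J·R) / λ, where R is the measured ratio of argon-40 (from potassium decay) to argon-39 (made from potassium-39 when the sample is irradiated in a reactor), J is a calibration factor found by irradiating a standard of known age alongside the sample, and λ is the decay constant of potassium-40. The function names, the J value and the choice of decay constant are assumptions for illustration, not numbers from the study.

```python
import math

# Total decay constant of potassium-40, per year. This is the long-used
# Steiger and Jager (1977) value; newer calibrations differ slightly (assumption).
LAMBDA_K40_PER_YEAR = 5.543e-10


def ar_ar_age_years(ar40_ar39_ratio: float, j_factor: float,
                    decay_const: float = LAMBDA_K40_PER_YEAR) -> float:
    """Estimate an age in years from the argon-argon age equation.

    t = ln(1 + J * R) / lambda, where R is radiogenic argon-40 over argon-39
    and J is the irradiation factor calibrated against a standard.
    """
    if ar40_ar39_ratio < 0 or j_factor <= 0:
        raise ValueError("ratio must be non-negative and J must be positive")
    return math.log1p(j_factor * ar40_ar39_ratio) / decay_const


def ratio_for_age(age_years: float, j_factor: float,
                  decay_const: float = LAMBDA_K40_PER_YEAR) -> float:
    """Inverse of the age equation: the ratio a sample of a given age would show."""
    return math.expm1(decay_const * age_years) / j_factor


# Hypothetical J value; round-trip a 3.4-billion-year age through both equations.
j = 0.01
r = ratio_for_age(3.4e9, j)
print(round(ar_ar_age_years(r, j) / 1e9, 2))  # 3.4
```

Because argon-40 keeps accumulating, an older sample shows a larger ratio; an impact that heats the rock enough to drive the argon out resets the clock, which is how such dates can record collisions.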

The team also evaluated deformation caused by collisions, called impact events, using a type of electron microscope technique called electron backscatter diffraction.

By combining the age dating techniques and the microscope technique, the authors managed to map the timing of impact events on 4 Vesta and the early Solar System. The study suggests that 4 Vesta experienced ongoing impact events until 3.4 billion years ago, when a catastrophic one occurred.

This catastrophic event, possibly a collision with another asteroid, produced multiple smaller rubble-pile asteroids known as “vestoids”. Unravelling large-scale impact events such as this reveals the hostile nature of the early Solar System.

These smaller bodies experienced further collisions that caused material to hurtle to Earth over the last 50 to 60 million years – including the fireball in Tunisia.

Ultimately, this work demonstrates the importance of investigating meteorites – impacts have played a major role in the evolution of asteroids in our Solar System.


Sci-fi books are rare in school even though they help kids better understand science

by Emily Midkiff, Assistant Professor of Teaching, Leadership, and Professional Practice at the University of North Dakota

Science fiction can lead people to be more cautious about the potential consequences of innovations. It can help people think critically about the ethics of science. Researchers have also found that sci-fi serves as a positive influence on how people view science. Science fiction scholar Istvan Csicsery-Ronay calls this “science-fictional habits of mind.”

Scientists and engineers have reported that their childhood encounters with science fiction framed their thinking about the sciences. Thinking critically about science and technology is an important part of education in STEM – or science, technology, engineering and mathematics.

Complicated content?

Despite the potential benefits of an early introduction to science fiction, my own research on science fiction for readers under age 12 has revealed that librarians and teachers in elementary schools treat science fiction as a genre that works best for certain cases, like reluctant readers or kids who like what they called “weird,” “freaky” or “funky” books.

Of the 59 elementary teachers and librarians whom I surveyed, almost a quarter of them identified themselves as science fiction fans, and nearly all of them expressed that science fiction is just as valuable as any other genre. Nevertheless, most of them indicated that while they recommend science fiction books to individual readers, they do not choose science fiction for activities or group readings.

The teachers and librarians explained that they saw two related problems with science fiction for their youngest readers: low availability and complicated content.

Why sci-fi books are scarce in schools

Several respondents said that there simply are not as many science fiction books available for elementary school students. To investigate further, I counted the number of science fiction books available in 10 randomly selected elementary school libraries from across the United States. Only 3% of the books in each library were science fiction. The rest of the collections broke down as 49% nonfiction, 25% fantasy, 19% realistic fiction and 5% historical fiction. While historical fiction also seems to be in low supply, science fiction stands out as the smallest group.

When I spoke to a small publisher and several authors, they confirmed that science fiction for young readers is not considered a profitable genre, and so those books are rarely acquired. Due to the perception that many young readers do not like science fiction, it is not written, published and distributed as often.

With fewer books to choose from, the teachers and librarians said that they have difficulty finding options that are not too long and complicated for group readings. One explained: “I have to appeal to broad ability levels in chapter book read-aloud selections. These books typically have to be shorter, with more simple plots.” Another respondent explained that they believe “the kind of suppositions sci-fi is based on to be difficult for younger children to grasp. We do read some sci-fi in our middle grade book club.”

A question of maturity

Waiting for students to get older before introducing them to science fiction is a fairly common approach. Susan Fichtelberg – a longtime librarian – wrote a guide to teen fantasy and science fiction. In it, she recommends age 12 as the prime time to start. Other children’s literature experts have questioned whether children under 12 have sufficient knowledge to comprehend science fiction.

Reading researchers agree that comprehending complex texts is easier when the reader has more background knowledge. Yet, when I read some science fiction picture books with elementary school students, none of the children struggled to understand the stories. The most active child in my study often used his knowledge of “Star Wars” to interpret the books. While background knowledge can mean children’s knowledge of science, it also includes exposure to a genre. The more a reader is exposed to science fiction stories, the better they understand how to read them.

A matter of choice

Science fiction does not need to include detailed science or outlandish premises to offer valuable ideas. Simple picture books like “Farm Fresh Cats” by Scott Santoro rely on familiar ideas like farms and cats to help readers reconsider what is familiar and what is alien. “The Barnabus Project” by the Fan Brothers is both a simple escape adventure story and a story about the ethics of genetic experimentation on animals.

The good news is that elementary school students are choosing science fiction regardless of what adults might think they can or cannot understand. I found that the science fiction books in those 10 elementary school libraries were checked out at a higher rate per book than all of the other genres. Science fiction had 1-2 more checkouts per book, on average, than the other genres.

Using the lending data from these libraries, I built a statistical model that predicted that a science fiction book in these libraries is 58% more likely to be checked out than a fantasy book. The model predicted that a science fiction book is over twice as likely to be checked out as a book in any of the other genres. In other words, since the children did not have nearly as many science fiction books to choose from, their readership was heavily concentrated on a few titles.

Children may discover science fiction on their own, but adults can do more to normalize the genre and provide opportunities for whole classes to become familiar with it. Encouraging children to explore science fiction may not guarantee science careers, but children deserve to learn from science fiction to help them navigate their increasingly high-tech world.


Westworld at 50: Michael Crichton’s AI dystopia was ahead of its time

by Keith McDonald, Senior Lecturer Film Studies and Media at York St John University

Westworld turned 50 on November 21. Director Michael Crichton’s cautionary tale showed that high-concept feature films could act as a vehicle for social commentary. Westworld blended cinematic genres, taking into account the audience’s existing knowledge of well-worn narrative conventions and playfully subverting them as the fantasy turns to nightmare.

The film centres on a theme park where visitors, in this case the protagonists Peter (Richard Benjamin) and John (James Brolin), can enter a simulated fantasy world – Pompeii, Medieval Europe, or the Old West. Once there, they can live out their wildest fantasies. They can even have sex with the synthetic playthings that populate the worlds.

This sinister idea went on to be explored further in later films such as The Stepford Wives (1975 and 2004), A.I. Artificial Intelligence (2001) and Ex Machina (2014).

Of course, it all goes terribly wrong when a computer virus overruns the park and the androids become unbound from the safety protocols that have been encoded into them. The resultant horror climaxes with Peter being stalked through the park by the menacing gunslinger (Yul Brynner).

The film isn’t perfect. Westworld was Michael Crichton’s feature directorial debut, and it shows – as does the tight shooting schedule and frugal budget imposed by MGM. The studio was notorious at the time for mishandling projects and their directors.

Compared to some of the other notable films released that year, such as William Friedkin’s masterful The Exorcist, Nicolas Roeg’s terrifying Don’t Look Now and Clint Eastwood’s assured High Plains Drifter, Westworld has a B-movie aesthetic.

This is, though, elevated by a towering performance from Brynner, and the high-concept approach that later came to dominate the Hollywood system. The film also provided fruitful inspiration for an ambitious HBO adaptation in 2016.

Genre blending and bending

Westworld successfully blended science fiction with other genres. In this sense, it was a pioneering film that made the most of its limitations, thanks to Crichton’s hugely influential imagination and the postmodern masterstroke of Brynner’s casting and performance.

Today’s cinema is saturated with meta-textual references – moments when a film makes a critical commentary on itself or another movie. This was typified by Michael Keaton’s recent reprise of his role as Batman in The Flash. But when Westworld was released, such creative choices were novel and fresh.

Brynner’s android gunslinger wears a costume almost identical to the one he donned for his role in The Magnificent Seven (1960). The choice adds dramatically to the thematic concerns of the film – the saturation of consumer culture and the postmodern bent towards repetition, simulation and cliche.

The simulated scenes in the theme park itself are built around cliched movie moments. The three settings which high-paying customers can enter represent film genres: the Medieval simulation, the “swords and sandals” recreation of the Roman Empire and the titular western.

When Westworld was released, each of these genres (the Medieval history film, the Roman epic and the western) was already past its heyday, both in terms of popularity and reliability at the box office. Using them furthered the film’s comment on contemporary Hollywood – that it had run out of original ideas and was simply cashing in on nostalgia.

Westworld and AI

Though other films, like Stanley Kubrick’s 2001: A Space Odyssey (1968), were exploring the concept of nascent AI around this time, Westworld arguably did so in the most accessible style. And its legacy in doing so is clear in subsequent films, such as The Terminator (1984), The Matrix (1999) and, more recently, M3GAN (2023).

Crichton later revisited the notion of a theme park turning perilous because of the Promethean human instinct in his 1990 novel Jurassic Park. Steven Spielberg’s adaptation remains one of the high points in American action-adventure cinema.

The fascinating scenarios Michael Crichton explored in his work successfully embodied the societal anxieties and technophobia of the 1970s. And in Westworld, he demonstrated a flair for capturing such fears in visual set pieces. Nowhere is this more evident than in the iconic, uncanny image of Yul Brynner’s deadly, sentient killer cowboy. Fifty years on, it remains one of the most memorable images in science fiction cinematic history.

"Those Who Have Not" by Col Price

The curious joy of being wrong – intellectual humility means being open to new information and willing to change your mind

by Daryl Van Tongeren, Associate Professor of Psychology at Hope College

Mark Twain apocryphally said, “I’m in favor of progress; it’s change I don’t like.” This quote pithily underscores the human tendency to desire growth while also harboring strong resistance to the hard work that comes with it. I can certainly relate to this sentiment.

I was raised in a conservative evangelical home. Like many who grew up in a similar environment, I learned a set of religious beliefs that framed how I understood myself and the world around me. I was taught that God is loving and powerful, and God’s faithful followers are protected. I was taught that the world is fair and that God is good. The world seemed simple and predictable – and most of all, safe.

These beliefs were shattered when my brother died unexpectedly; I was 27 years old. His death at 34, leaving behind three young children, shocked our family and community. In addition to reeling with grief, some of my deepest assumptions were challenged. Was God not good or not powerful? Why didn’t God save my brother, who was a kind and loving father and husband? And how unfair, uncaring and random is the universe?

This deep loss started a period where I questioned all of my beliefs in light of the evidence of my own experiences. Over a considerable amount of time, and thanks to an exemplary therapist, I was able to revise my worldview in a way that felt authentic. I changed my mind about a lot of things. The process sure wasn’t pleasant. It took more sleepless nights than I care to recall, but I was able to revise some of my core beliefs.

I didn’t realize it then, but this experience falls under what social science researchers call intellectual humility. And honestly, it is probably a large part of why, as a psychology professor, I am so interested in studying it. Intellectual humility has been gaining more attention, and it seems critically important for our cultural moment, when it’s more common to defend your position than change your mind.

What it means to be intellectually humble

Intellectual humility is a particular kind of humility that has to do with beliefs, ideas or worldviews. This is not only about religious beliefs; it can show up in political views, various social attitudes, areas of knowledge or expertise or any other strong convictions. It has both internal- and external-facing dimensions.

Within yourself, intellectual humility involves awareness and ownership of the limitations and biases in what you know and how you know it. It requires a willingness to revise your views in light of strong evidence.

Interpersonally, it means keeping your ego in check so you can present your ideas in a modest and respectful manner. It calls for presenting your beliefs in ways that are not defensive and admitting when you’re wrong. It involves showing that you care more about learning and preserving relationships than about being “right” or demonstrating intellectual superiority.

Another way of thinking about humility, intellectual or otherwise, is being the right size in any given situation: not too big (which is arrogance), but also not too small (which is self-deprecation).

Having confidence in your area of expertise is different from thinking you know it all about everything.

Morsa Images/DigitalVision via Getty Images

I know a fair amount about psychology, but not much about opera. When I’m in professional settings, I can embrace the expertise that I’ve earned over the years. But when visiting the opera house with more cultured friends, I should listen and ask more questions, rather than confidently assert my highly uninformed opinion.

Four main aspects of intellectual humility include being:

Open-minded, avoiding dogmatism and being willing to revise your beliefs.

Curious, seeking new ideas, ways to expand and grow, and changing your mind to align with strong evidence.

Realistic, owning and admitting your flaws and limitations, seeing the world as it is rather than as you wish it to be.

Teachable, responding nondefensively and changing your behavior to align with new knowledge.

Intellectual humility is often hard work, especially when the stakes are high.

Intellectual humility starts with admitting that you, like everyone else, have cognitive biases and flaws that limit how much you know. From there, it might look like taking genuine interest in learning about your relative’s beliefs during a conversation at a family get-together, rather than waiting for them to finish so you can prove them wrong by sharing your – superior – opinion.

It could look like considering the merits of an alternative viewpoint on a hot-button political issue and why respectable, intelligent people might disagree with you. When you approach these challenging discussions with curiosity and humility, they become opportunities to learn and grow.

Why intellectual humility is an asset

Though I’ve been studying humility for years, I’ve not yet mastered it personally. It’s hard to swim against cultural norms that reward being right and punish mistakes. It takes constant work to develop, but psychological science has documented numerous benefits.

First, there are social, cultural and technological advances to consider. Any significant breakthrough in medicine, technology or culture has come from someone admitting they didn’t know something – and then passionately pursuing knowledge with curiosity and humility. Progress requires admitting what you don’t know and seeking to learn something new.

Intellectual humility can make conversations less adversarial.

Compassionate Eye Foundation/Gary Burchell/DigitalVision via Getty Images

Relationships improve when people are intellectually humble. Research has found that intellectual humility is associated with greater tolerance toward people with whom you disagree.

For example, intellectually humble people are more accepting of people who hold differing religious and political views. A central part of it is an openness to new ideas, so folks are less defensive toward potentially challenging perspectives. They’re more likely to forgive, which can help repair and maintain relationships.

Finally, humility helps facilitate personal growth. Being intellectually humble allows you to have a more accurate view of yourself.

When you can admit and take ownership of your limitations, you can seek help in areas where you have room to grow, and you’re more responsive to information. When you limit yourself to only doing things the way you’ve always done them, you miss out on countless opportunities for growth, expansion and novelty – things that strike you with awe, fill you with wonder and make life worth living.

Humility can unlock authenticity and personal development.

Humility doesn’t mean being a pushover

Despite these benefits, sometimes humility gets a bad rap. People can have misconceptions about intellectual humility, so it’s important to dispel some myths.

Intellectual humility isn’t lacking conviction; you can believe something strongly until your mind is changed and you believe something else. It also isn’t being wishy-washy. You should have a high bar for what evidence you require to change your mind. It also doesn’t mean being self-deprecating or always agreeing with others. Remember, it’s being the right size, not too small.

Researchers are working hard to validate reliable ways to cultivate intellectual humility. I’m part of a team that is overseeing a set of projects designed to test different interventions to develop intellectual humility.

Some scholars are examining different ways to engage in discussions, and some are exploring the role of enhancing listening. Others are testing educational programs, and still others are looking at whether different kinds of feedback and exposure to diverse social networks might boost intellectual humility.

Prior work in this area suggests that humility can be cultivated, so we’re excited to see what emerges as the most promising avenues from this new endeavor.

There was one other thing that religion taught me that was slightly askew. I was told that too much learning could be ruinous; after all, you wouldn’t want to learn so much that you might lose your faith.

But in my experience, what I learned through loss may have salvaged a version of my faith that I can genuinely endorse and that feels authentic to my experiences. The sooner we can open our minds and stop resisting change, the sooner we’ll find the freedom offered by humility.

"Space Warfare" by Dreams in Frames

"We're Going To Mars" by Direwolf张艺骞

AI could improve your life by removing bottlenecks between what you want and what you get

by Bruce Schneier, Adjunct Lecturer in Public Policy, Harvard Kennedy School

Artificial intelligence is poised to upend much of society, removing human limitations inherent in many systems. Among these limitations are information and logistical bottlenecks in decision-making.

Traditionally, people have been forced to reduce complex choices to a small handful of options that don’t do justice to their true desires. Artificial intelligence has the potential to remove that limitation. And it has the potential to drastically change how democracy functions.

AI researcher Tantum Collins and I, a public-interest technology scholar, call this AI overcoming “lossy bottlenecks.” Lossy is a term from information theory that refers to imperfect communications channels – that is, channels that lose information.

Multiple-choice practicality

Imagine sitting down to dinner and being able to have a long conversation with a chef about your meal. You could end up with a bespoke dinner based on your desires, the chef’s abilities and the available ingredients. This is possible if you are cooking at home or hosted by accommodating friends.

But it is infeasible at your average restaurant: The limitations of the kitchen, the way supplies have to be ordered and the realities of restaurant cooking make this kind of rich interaction between diner and chef impossible. You get a menu of a few dozen standardized options, with the possibility of some modifications around the edges.

That’s a lossy bottleneck. Your wants and desires are rich and multifaceted. The array of culinary outcomes is equally rich and multifaceted. But there’s no scalable way to connect the two. People are forced to use multiple-choice systems like menus to simplify decision-making, and they lose so much information in the process.

People are so used to these bottlenecks that we don’t even notice them. And when we do, we tend to assume they are the inevitable cost of scale and efficiency. And they are. Or, at least, they were.

The possibilities

Artificial intelligence has the potential to overcome this limitation. By storing rich representations of people’s preferences and histories on the demand side, along with equally rich representations of capabilities, costs and creative possibilities on the supply side, AI systems enable complex customization at scale and low cost. Imagine walking into a restaurant and knowing that the kitchen has already started work on a meal optimized for your tastes, or being presented with a personalized list of choices.

There have been some early attempts at this. People have used ChatGPT to design meals based on dietary restrictions and what they have in the fridge. It’s still early days for these technologies, but once they get working, the possibilities are nearly endless. Lossy bottlenecks are everywhere.

Take labor markets. Employers look to grades, diplomas and certifications to gauge candidates’ suitability for roles. These are a very coarse representation of a job candidate’s abilities. An AI system with access to, for example, a student’s coursework, exams and teacher feedback as well as detailed information about possible jobs could provide much richer assessments of which employment matches do and don’t make sense.

Or apparel. People with money for tailors and time for fittings can get clothes made from scratch, but most of us are limited to mass-produced options. AI could hugely reduce the costs of customization by learning your style, taking measurements based on photos, generating designs that match your taste and using available materials. It would then convert your selections into a series of production instructions and place an order with an AI-enabled robotic production line.

Or software. Today’s computer programs typically use one-size-fits-all interfaces, with only minor room for modification, but individuals have widely varying needs and working styles. AI systems that observe each user’s interaction styles and know what that person wants out of a given piece of software could take this personalization far deeper, completely redesigning interfaces to suit individual needs.

Removing democracy’s bottleneck

These examples are all transformative, but the lossy bottleneck that has the largest effect on society is in politics. It’s the same problem as the restaurant. As a complicated citizen, your policy positions are probably nuanced, trading off between different options and their effects. You care about some issues more than others and some implementations more than others.

If you had the knowledge and time, you could engage in the deliberative process and help create better laws than exist today. But you don’t. And, anyway, society can’t hold policy debates involving hundreds of millions of people. So you go to the ballot box and choose between two – or if you are lucky, four or five – individual representatives or political parties.

Imagine a system where AI removes this lossy bottleneck. Instead of trying to cram your preferences to fit into the available options, imagine conveying your political preferences in detail to an AI system that would directly advocate for specific policies on your behalf. This could revolutionize democracy.

Ballots are bottlenecks that funnel a voter’s diverse views into a few options. AI representations of individual voters’ desires overcome this bottleneck, promising enacted policies that better align with voters’ wishes.

Tantum Collins, CC BY-ND

One way is by enhancing voter representation. By capturing the nuances of each individual’s political preferences in a way that traditional voting systems can’t, this system could lead to policies that better reflect the desires of the electorate. For example, you could have an AI device in your pocket – your future phone, for instance – that knows your views and wishes and continually votes in your name on an otherwise overwhelming number of issues large and small.

Combined with AI systems that personalize political education, it could encourage more people to participate in the democratic process and increase political engagement. And it could eliminate the problems stemming from elected representatives who reflect only the views of the majority that elected them – and sometimes not even them.

On the other hand, the privacy concerns resulting from allowing an AI such intimate access to personal data are considerable. And it’s important to avoid the pitfall of just allowing the AIs to figure out what to do: Human deliberation is crucial to a functioning democracy.

Also, there is no clear transition path from the representative democracies of today to these AI-enhanced direct democracies of tomorrow. And, of course, this is still science fiction.

First steps

These technologies are likely to be used first in other, less politically charged, domains. Recommendation systems for digital media have steadily reduced their reliance on traditional intermediaries. Radio stations are like menu items: Regardless of how nuanced your taste in music is, you have to pick from a handful of options. Early digital platforms were only a little better: “This person likes jazz, so we’ll suggest more jazz.”

Today’s streaming platforms use listener histories and a broad set of features describing each track to provide each user with personalized music recommendations. Similar systems suggest academic papers with far greater granularity than a subscription to a given journal, and movies based on more nuanced analysis than simply deferring to genres.
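To make the contrast with “this person likes jazz” concrete, here is a toy sketch of feature-based recommendation. It assumes each track is described by a small, hypothetical feature vector (tempo, acousticness, energy) and that a listener’s taste can be summarized as the average of the tracks they have played; candidates are then ranked by cosine similarity to that average. The track names, feature values and the functions `profile` and `recommend` are invented for illustration, and real streaming services use far richer signals and models than this.

```python
import math

# Hypothetical catalog: each track is described by three made-up features,
# (tempo, acousticness, energy), each scaled to the range 0-1.
TRACKS = {
    "track_a": (0.30, 0.90, 0.20),
    "track_b": (0.80, 0.10, 0.90),
    "track_c": (0.35, 0.85, 0.30),
    "track_d": (0.60, 0.50, 0.55),
}


def cosine(u, v):
    """Cosine similarity between two feature vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def profile(history):
    """Summarize a listener's taste as the average feature vector of tracks played."""
    if not history:
        raise ValueError("history must contain at least one track")
    vectors = [TRACKS[t] for t in history]
    return tuple(sum(dim) / len(vectors) for dim in zip(*vectors))


def recommend(history, k=2):
    """Rank tracks the listener has not played by similarity to their profile."""
    user = profile(history)
    candidates = [t for t in TRACKS if t not in history]
    return sorted(candidates, key=lambda t: cosine(user, TRACKS[t]), reverse=True)[:k]


print(recommend(["track_a"]))
```

Running it prints `['track_c', 'track_d']`: a listener who has played only `track_a` is steered first to the track whose features are closest, without any reference to a genre label.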

A world without artificial bottlenecks comes with risks – loss of jobs in the bottlenecks, for example – but it also has the potential to free people from the straitjackets that have long constrained large-scale human decision-making. In some cases – restaurants, for example – the impact on most people might be minor. But in others, like politics and hiring, the effects could be profound.

Doctor Who at 60: the show has always tapped into political issues – but never more so than in the 1970s

by Jamie Medhurst, Professor of Film and Media at Aberystwyth University

Doctor Who hit television screens at a key period in British television history. It launched at 5.15pm on Saturday, November 23, 1963, and was somewhat overshadowed by the assassination of US president John F. Kennedy the previous day.

Set firmly within the BBC’s public service broadcasting ethos of informing, educating and entertaining, Doctor Who quickly became a mainstay of Saturday-evening viewing. By 1965, it was drawing in around 10 million viewers.

Throughout its history, Doctor Who has tapped into political, social and moral issues of the day – sometimes explicitly, other times more subtly. During the 1970s, when the Doctor was played by Jon Pertwee and Tom Baker, there were a number of examples of this.

Doctor Who in the 1970s

The 1970s were a period of political and social division: relations between the government and the unions in the first part of the decade were strained, as exemplified by the miners’ strikes of 1972 and 1974. The political consensus that had dominated since 1945 was under pressure, with talk of a break-up of the UK in the form of Welsh and Scottish assemblies.

In his cultural history of Doctor Who, Inside the Tardis, television historian James Chapman argued that the show’s 1970s stories painted “an uncomfortably sinister projection of the sort of society that Britain might become”.

It was never clear if Doctor Who storylines during this time were set in the present or at some point in the future. The fact that one of the lead characters, Brigadier Lethbridge-Stewart of the United Nations Intelligence Task Force (UNIT), calls the prime minister “Madam” in a telephone conversation in one episode suggests the latter.

As for some of the more politically engaged stories, The Green Death (1973), or “the one with the giant maggots” as it is known by fans, certainly pulled no punches. Described by Chapman as an “eco disaster narrative”, it pitted corporate greed and capitalism against environmental activists (portrayed here as Welsh hippies) and their concerns for the planet.

In the episode, Global Chemicals, run by a faceless machine, is tipping waste from its petrochemical plant into a disused mine in the south Wales valleys (cue awful Welsh stereotypes). The green sludge not only kills people, but creates mutant maggots which also attack. As fears grew and the green movement gained momentum in the early 1970s, this story would have resonated with large parts of the audience.

When the Doctor visits the planet Peladon in The Curse of Peladon (1972), the planet is attempting to join the Galactic Federation. There are those on the planet who argue for joining, while opponents are just as vociferous, arguing that joining the Federation would destroy the old ways of the planet.

Sound familiar? This was the time when Britain was negotiating to join the European Economic Community, which it did in 1973. Interestingly, the serial was broadcast during the 1972 miners’ strike (leading many viewers to miss later episodes due to power cuts).

The follow-up story, The Monster of Peladon (1974), is set against a backdrop of industrial strife and conflict involving miners.

Tom Baker’s Doctor

In what many consider to be one of the best classic serials, Genesis of the Daleks (1975), Tom Baker’s Doctor continued the tradition of raising complex political, social and moral issues.

Sent back in time by the Time Lords to change the course of history, the Doctor at one point has an opportunity to destroy the mutations which form the “body” of the Dalek (inside their metal casing) and destroy the Dalek race forever. Holding two wires close to each other, about to create an explosion in the incubation room, he asks himself and his companions: “Have I that right?”

Having the ability to see the future, he says that future planets will become allies in fighting the evil of the Daleks. Had he the right to change the course of history? Given the symbolism used in the story (salutes, black outfits, references to a “pure” race) this was a clear reference to the rise of the Nazis.

The political allegories didn’t end in the 1970s. One of the most blatant can be seen in the 1988 serial The Happiness Patrol. The main antagonist, Helen A (played by Sheila Hancock), a ruthless and tyrannical leader, is said to be modelled on Conservative prime minister Margaret Thatcher. The fact that Hancock appears to be impersonating Thatcher lends a certain degree of credence to this belief.

Anybody who argues that the revival of Doctor Who in 2005 brought a more political edge to the storylines need only look back over the past 60 years. Now that the BBC has uploaded more than 800 episodes to iPlayer, anyone can do so, and it will become clear to all.

Doctor Who – especially during its Golden Age in the 1970s – has always been political.

Winter brings more than just ugly sweaters – here’s how the season can affect your mind and behavior

by Michael Varnum, Associate Professor of Psychology at Arizona State University and Ian Hohm, Graduate Student of Psychology at the University of British Columbia

What comes to mind when you think about winter? Snowflakes? Mittens? Reindeer? In much of the Northern Hemisphere, winter means colder temperatures, shorter days and year-end holidays.

Along with these changes, a growing body of research in psychology and related fields suggests that winter also brings some profound changes in how people think, feel and behave.

While it’s one thing to identify seasonal tendencies in the population, it’s much trickier to try to untangle why they exist. Some of winter’s effects have been tied to cultural norms and practices, while others likely reflect our bodies’ innate biological responses to changing meteorological and ecological conditions. The natural and cultural changes that come with winter often occur simultaneously, making it challenging to tease apart the causes underlying these seasonal swings.

With our colleagues Alexandra Wormley and Mark Schaller, we recently conducted an extensive survey of these findings.

Wintertime blues and a long winter’s nap

Do you find yourself feeling down in the winter months? You’re not alone. The American Psychiatric Association estimates that as the days grow shorter, about 5% of Americans will experience a form of depression known as seasonal affective disorder, or SAD.

People experiencing SAD tend to have feelings of hopelessness, decreased motivation to take part in activities they generally enjoy, and lethargy. Even those who don’t meet the clinical threshold for this disorder may see increases in anxiety and depressive symptoms; in fact, some estimates suggest more than 40% of Americans experience these symptoms to some degree in the winter months.

Scientists link SAD and more general increases in depression in the winter to decreased exposure to sunlight, which leads to lower levels of the neurotransmitter serotonin. Consistent with the idea that sunlight plays a key role, SAD tends to be more common in more northern regions of the world, like Scandinavia and Alaska, where the days are shortest and the winters longest.

Humans, special as we may be, are not unique in showing some of these seasonally linked changes. For instance, our primate relative the rhesus macaque shows seasonal declines in mood.

It can feel hard to get out of bed on dark mornings.

Some scientists have noted that SAD shows many parallels to hibernation – the long snooze during which brown bears, ground squirrels and many other species turn down their metabolism and skip out on the worst of winter. Seasonal affective disorder may have its roots in adaptations that conserve energy at a time of year when food was typically scarce and when lower temperatures posed greater energetic demands on the body.

Winter is well known as a time of year when many people put on a few extra pounds. Research suggests that diets are at their worst, and waistlines at their largest, during the winter. In fact, a recent review of studies on this topic found that average weight gains around the holiday season are around 1 to 3 pounds (0.5 to 1.3 kilograms), though those who are overweight or obese tend to gain more.

There’s likely more going on with year-end weight gain than just overindulgence in abundant holiday treats. In our ancestral past, in many places, winter meant that food became more scarce. Wintertime reductions in exercise and increases in how much and what people eat may have been an evolutionary adaptation to this scarcity. If the ancestors who had these reactions to colder, winter environments were at an advantage, evolutionary processes would make sure the adaptations were passed on to their descendants, coded into our genes.

Sex, generosity and focus

Beyond these winter-related shifts in mood and waistlines, the season brings with it a number of other changes in how people think and interact with others.

One less discussed seasonal effect is that people seem to get friskier in the winter months. Researchers know this from analyses of condom sales, sexually transmitted disease rates and internet searches for pornography and prostitution, all of which show biannual cycles, peaking in the late summer and then in the winter months. Data on birth rates also shows that in the United States and other countries in the Northern Hemisphere, babies are more likely to be conceived in the winter months than at other times of the year.

There’s more to a holiday bump in romance than just opportunity.

Although this phenomenon is widely observed, the reason for its existence is unclear. Researchers have suggested many explanations, including health advantages for infants born in late summer, when food may historically have been more plentiful, changes in sex hormones altering libido, desires for intimacy motivated by the holiday season, and simply increased opportunities to engage in sex. However, changes in sexual opportunities are likely not the whole story, given that winter brings not just increased sexual behaviors, but greater desire and interest in sex as well.

Winter boosts more than sex drive. Studies find that during this time of year, people may have an easier time paying attention at school or work. Neuroscientists in Belgium found that performance on tasks measuring sustained attention was best during the wintertime. Research suggests that seasonal changes in levels of serotonin and dopamine driven by less exposure to daylight may help explain shifts in cognitive function during winter. Again, there are parallels with other animals – for instance, African striped mice navigate mazes better during winter.

And there may also be a kernel of truth to the idea of a generous Christmas spirit. In countries where the holiday is widely celebrated, rates of charitable giving tend to show a sizable increase around this time of year. And people become more generous tippers, leaving about 4% more for waitstaff during the holiday season. This tendency is likely not due to snowy surroundings or darker days, but instead a response to the altruistic values associated with winter holidays that encourage behaviors like generosity.

People change with the seasons

Like many other animals, we too are seasonal creatures. In the winter, people eat more, move less and mate more. You may feel a bit more glum, while also being kinder to others and having an easier time paying attention. As psychologists and other scientists research these kinds of seasonal effects, it may turn out that the ones we know about so far are only the tip of the iceberg.


‘Godzilla Minus One’: Finding paradise of shared co-operation through environmental disaster

by Chris Corker, PhD Student in the Humanities at York University, Canada

Godzilla Minus One, directed by Takashi Yamazaki, brings viewers back to post-war Japan and to the wholly belligerent monster of the original 1954 Godzilla — a beast bereft of the friendly connotations accrued in the later Toho Studios Japanese installments.

This original Godzilla represented what its director, Ishiro Honda, described as the “invisible fear” of the nuclear contamination of our planet.

Historian William Tsutsui asserts in his book dedicated to the series that the film allows us to neutralize our fears of potential annihilation. Cathartic or not, this apocalyptic trend remains a staple of science-fiction movies and series to this day.

Minus One returns to that fear, once perhaps invisible but now undeniable, of the disasters incurred by damage to our environment.

At the same time, the film asks how individuals and communities can tackle disaster while embracing an ethos of mutual aid that sidesteps nostalgia for nationalist policies that lead to even more harm.


Disaster response

In the two most recent instalments in the Godzilla franchise, 2016’s Shin Godzilla and 2023’s Godzilla Minus One, the monster can be read as a personification of a diminished belief in governmental abilities to prevent or respond adequately to disaster.

Shin Godzilla, directed by Hideaki Anno, satirized the limp governmental response to the 2011 Tohoku Earthquake and Tsunami. As the threat of the monster escalates to catastrophic levels, the politicians in the film are more concerned with optics and with which boardroom they should meet in.


In contrast, 2023’s Minus One channels anger at a nationalistic government that guided Japan into imperial campaigns in Asia and, finally, to a total defeat with a devastating human cost.

When Godzilla arrives to further compound post-war misery, harried survivors don’t rely on the same government that has led them astray.

Putting aside ideological differences

Instead, as some reviews of the film have noted, they turn to a community with the power to act outside of official bodies, making use of technological skills earned through wartime experience.

While the state lends them a few ships, citizens are otherwise left to their own devices, relying on old and decommissioned machinery. They rise to face the monster not by developing a new weapon of destruction but by using what is already at hand.

As engineer Noda notes, their lives have been undervalued. This realization leads them to turn away from the government and the nationalist policies that led to the war, and to rely instead on one another.

To do this they must put aside ideological differences to achieve the common goal of stopping Godzilla. This is best illustrated by the co-operation of Koichi Shikishima, a kamikaze pilot who questions the value of his death amid imminent defeat yet is dogged by the shame of his desertion, and an engineer, Sosaku Tachibana, who initially deems Shikishima a coward.

Revisiting values, alliances

These plotlines reflect contemporary interest in the local and political communities we should be forging in light of serious environmental threats.

Writer Rebecca Solnit laments self-serving governmental responses to disaster. But her book A Paradise Built in Hell focuses on the positives that can come from disaster at the communal level.

She concludes that in enhancing social cohesion and bringing out the humanitarian in each of us, disaster “reveals what else the world could be like.” In short, a paradise of shared purpose and co-operation.

The key, however, is distinguishing between a benign social cohesion, like the aforementioned networks of mutual care, and a malignant one, as seen in destructive forms of nationalism and war.

In Godzilla Minus One, Shikishima and Tachibana band together to save lives. Their wider group insists on a victory without the sacrifice of human life, an ethos made possible by adopting a communal view in which humans are not statistics.

Dream together or die alone

At a time when the Japanese government has grown increasingly nationalist and has been criticized for undermining freedom of the press, the film suggests that a nostalgic dream of returning to a time of stronger social ties and a sense of unified purpose is easily manipulated by nationalist governments.

This has been seen in a host of recent examples including Donald Trump, Brexit and, as mentioned above, the policies of Shinzo Abe’s Liberal Democratic Party.

On the global scale, rising populism, diplomatic spats and outright conflict are pulling much of the world away from its neighbours. This is happening just when we must unite to counteract the effects of climate change and face an exponential increase in disasters.

Godzilla Minus One shows how we must draw on a fondness, even a nostalgia, for times of togetherness without letting it mix with the nationalist sentiment that encourages isolationism and aggression towards others.

To let that nostalgia slide into nationalism really would be to go from zero to minus one. From there, there is little guarantee we can recover.


2001: A Space Odyssey still leaves an indelible mark on our culture 55 years on

by Nathan Abrams, Professor of Film Studies at Bangor University

2001: A Space Odyssey is a landmark film in the history of cinema. It is a work of extraordinary imagination that has transcended film history to become something of a cultural marker. Since 1968, it has penetrated the psyche not only of other filmmakers but of society in general.

It is not an exaggeration to say that 2001 single-handedly reinvented the science fiction genre. Its visuals, music and themes left an indelible mark on subsequent science fiction that is still evident today.

When Stanley Kubrick began work on 2001 in the mid-1960s, he was told by studio executive Lew Wasserman: “Kid, you don’t spend over a million dollars on science fiction movies. You just don’t do that.”

By that point, the golden age of science fiction film had run its course. During its heyday, there was considerable variety of content within the overarching genre, including serious attempts to foretell space travel. Destination Moon, directed by Irving Pichel and produced by George Pal in 1950, and Byron Haskin’s Conquest of Space (1955) both imagined space travel, and Haskin’s film also featured a space station, an idea Kubrick would elaborate on in 2001.


Most 1950s science fiction films, though, were cheap B-movie fare and looked it. They involved alien invasions with an ideological and allegorical subtext: they were cinematic imaginings of the danger of communism, which in the overheated political atmosphere of the time was seen as an imminent threat to the American way of life.

The aliens in most science fiction films were out simply to destroy or take over humanity; they were expressions, to use the title of a Susan Sontag essay, of “the imagination of disaster”. There were some exceptions, including Byron Haskin’s film version of The War of the Worlds and Robert Wise’s The Day the Earth Stood Still.

In 1968, then, as the lights went down, very few people knew what was about to transpire, and they certainly were not prepared for what did. The film opened in near darkness as the strains of Thus Spake Zarathustra by Richard Strauss were heard. The cinema was dazzled into light, as if Kubrick had remade Genesis.

The subsequent 160 or so minutes (the length of Kubrick’s original cut, before he edited 19 minutes out of it) took the viewer on what was marketed as “the ultimate trip”. Kubrick had excised almost every element of explanation, leaving an elusive, ambiguous and thoroughly unclear film. His decisions resulted in long silent scenes, offered without elucidation. This contributed both to the film’s almost immediate critical failure and to its ultimate success. It was practically a silent movie.

2001 was an experiment in film form and content. It exploded conventional narrative form, restructuring the three-act drama. The narrative was linear but radically so, spanning aeons and ending in a timeless realm, all without a conventional movie score. Instead, Kubrick used 19th-century and modernist music by composers such as Strauss, György Ligeti and Aram Khachaturian.

Vietnam

The movie was made during a tumultuous period of American history, which it seemingly ignored. The war in Vietnam was already a highly divisive issue and was spiralling into a crisis. The Tet offensive, which began on January 31 1968, had claimed tens of thousands of lives. As US involvement in Vietnam escalated, unrest and violence at home intensified.

Increasingly, young Americans expected their artists to address the chaos that roared around them. But in exploring the origins of humanity’s propensity for violence and its future destiny, 2001 dealt with big questions, ones that were burning at the time of its release. They fuelled what Variety magazine called the “coffee cup debate” over “what the film means”, which is still ongoing today.

The design of the film has influenced many other films. Silent Running by Douglas Trumbull (who worked on 2001’s special effects) owes the most obvious debt, but Star Wars would also be unthinkable without it. Popular culture is full of imagery from the film. The music Kubrick used, especially Johann Strauss II’s The Blue Danube, is now considered “space music”.

Images from the movie have appeared in iPhone adverts, in The Simpsons and even the trailer for the new Barbie movie.

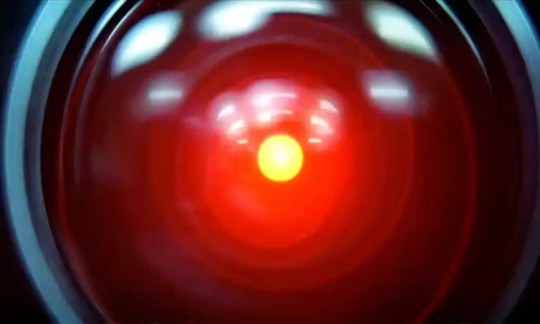

The warnings about the dangers of technology embodied in the film’s murderous supercomputer HAL 9000 can be felt in the “tech noir” films of the 1970s and 1980s, such as Westworld, Alien, Blade Runner and The Terminator.

HAL’s single red eye can be seen in the children’s series, Q Pootle 5, and Pixar’s animated feature, Wall-E. HAL has become shorthand for the untrammelled march of artificial intelligence (AI).

In the age of ChatGPT and other AI, the metaphor of Kubrick’s computer is frequently evoked. But why HAL, when there are so many other images to draw on, such as Frankenstein, Prometheus, terminators and other murderous cyborgs? Because there is something uncanny and human about HAL, who was deliberately designed to be more empathic and human than the people in the film.

In making 2001, Stanley Kubrick created a cultural phenomenon that continues to speak to us eloquently today.


Doctor Who at 60: what qualities make the best companion? A psychologist explains

by Sarita Robinson, Associate Dean of School for Psychology and Humanities at the University of Central Lancashire

Over the past 60 years, we have witnessed the Doctor’s adventures in time and space with a multitude of companions by his side. From his granddaughter Susan and her teachers, Ian and Barbara, to Ryan, Graham and Yaz, the Doctor has had many travelling companions.

But what makes a person leave their everyday life and leap at the chance to join Team Tardis with a brilliant, yet at times unpredictable, Time Lord? What does it take to not only survive but to thrive as the Doctor’s companion? A degree of physical fitness is certainly needed for running up and down corridors, but the Doctor’s companions also need to be open to new experiences, keep going in the face of adversity and be resilient.

One thing that all successful companions share is a flexible, or growth, mindset. People with a flexible mindset are more likely to believe that they can deal with new situations and can gain the knowledge and skills needed to succeed.

One example of a companion with a flexible mindset is Leela (Louise Jameson), travelling companion of the fourth Doctor (Tom Baker). Leela belonged to the Sevateem, a tribe of regressed humans descended from a survey team that crash-landed on the planet Mordee, where they founded a colony. A great warrior, Leela demanded that the Doctor take her with him in the Tardis.

Before her travels with the Doctor, Leela had had no experience of technology or societies outside her own. But during her time with the Doctor she was always quick to adapt to new situations and saw all the new experiences she was exposed to as an opportunity for learning.