#Clausius

Text

me: i don't crave academic validation

also me: gets overly excited when my chem professor tells me my "graphs look nice"

#me when excel wasn't a bitch and graphed my silly little clausius clapeyron correctly the first time#lol why do any of you follow me when this is the nonsense i post#personal#stem major shenanigans

8 notes

Text

a sleepless night

I stayed up ALL fucking night tonight finishing way-past-due homework that I've had extensions for. I've used the Clausius-Clapeyron equation, calculated potential evapotranspiration, analyzed twenty years of annual, monthly, and daily precipitation recorded at three weather stations in NC, and written some fun stuff about hydraulic conductivity. I also did my meteorology studying ahead of time, and I finished my reflection about the Atchafalaya River. I still need to finish my lab due today, which is about understanding pressure changes in aquifers and the relationship between those differences and gaining/losing streams, AND my Bell Tower essay is due tomorrow. I'm still working on a problem set that I might end up not being able to submit, but holy fucking HELL it feels so nice to actually complete things. Maybe I'm not a 4.0 student like I was at community college, but here I am absolutely weathering this storm.

#im so tired yall#me#meteorology#hydrology#geoscience#geology#women in stem#university#geological science#clausius clapeyron#evapotranspiration#hydraulic conductivity#aquifers#nc#academia#i got this#at least i'll keep trying#Atchafalaya

4 notes

Text

THE NATURE OF HEAT AND TEMPERATURE: PART 1 | HOW WERE THE LAWS OF THERMODYNAMICS DEVELOPED?

What are heat and temperature? Are they substances? Energy? Or are they concepts we assume in order to explain certain phenomena? Thermodynamics, one of the important subdisciplines of physics, studies matter by placing these two concepts at its center. So how did the science of thermodynamics, developing over time, explain heat and temperature? How did the four laws known as the Laws of Thermodynamics come about? Today, heat and temperature…

#antoine lavoisier caloric#benjamin thompson experiment#entropy and thermodynamics#what is heat#the nature of heat and temperature#heat and temperature#heat and temperature lesson#theories of heat and temperature#james joule thermodynamics#joseph fourier heat conduction formula#julius robert von mayer body heat#caloric theory#caloric#kinetic theory#ralph h. fowler thermodynamics#rudolf clausius thermodynamics#what is temperature#what is thermodynamics#laws of thermodynamics#walther nernst thermodynamics

0 notes

Text

Explained | What is relative humidity and why does it matter on a hot day?

Relative humidity is a simple idea as weather phenomena go, but it has important, far-reaching consequences for how we should take care of ourselves on a hot or humid day.

Humidity is the amount of moisture in the air around us, and there are three ways to measure it. The most common of them is absolute humidity: the mass of water vapour in a given volume of the air and water vapour mixture,…
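The tags on this post mention the Magnus parameters and saturated vapour pressure. As a minimal sketch, relative humidity can be estimated from air temperature and dew point as the ratio of actual to saturation vapour pressure. The coefficients 6.1094 hPa, 17.625 and 243.04 °C are one commonly used Magnus fit, assumed here rather than taken from the article.

```python
import math

# One commonly used set of Magnus coefficients (an assumption, not from the article).
A_HPA = 6.1094   # hPa
B = 17.625       # dimensionless
C_DEG = 243.04   # degrees Celsius


def saturation_vapour_pressure(temp_c: float) -> float:
    """Approximate saturation vapour pressure over liquid water, in hPa (Magnus form)."""
    return A_HPA * math.exp(B * temp_c / (C_DEG + temp_c))


def relative_humidity(temp_c: float, dew_point_c: float) -> float:
    """Relative humidity in percent: actual vapour pressure / saturation vapour pressure."""
    # At the dew point the air is saturated, so the actual vapour pressure of the air
    # equals the saturation vapour pressure evaluated at the dew point.
    actual = saturation_vapour_pressure(dew_point_c)
    return 100.0 * actual / saturation_vapour_pressure(temp_c)


if __name__ == "__main__":
    print(f"30 °C air, 20 °C dew point: {relative_humidity(30.0, 20.0):.1f} %")
```

Because saturation vapour pressure rises roughly exponentially with temperature (the Clausius-Clapeyron behaviour that the Magnus formula approximates), the same amount of moisture corresponds to a much lower relative humidity on a hotter day.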

#Can my phone sense humidity?#Can you test humidity with an I phone?#Clausius-Clapeyron equation#dry bulb temperature#evaporative cooling#heat index#heatwave#heatwaves#How do you measure relative humidity?#How to measure relative humidity?#India heatwave#Is there a relative humidity app?#Magnus parameters#psychrometric chart#relation between relative humidity and a hot day#relative humidity#relative humidity app#relative humidity formula#relative humidity meaning#relative humidity unit#relative humidity vs absolute humidity#saturated vapour pressure#science news#the hindu explains relative humidity#the hindu premium#types of humidity#wet bulb temperature#What does relative humidity imply physically?#what is relative humidity#What is the best app to check humidity levels?

0 notes

Text

'BKFC 39' results: Reginald Barnett Jr. vs Frank Alvarez, Britain Hart vs Jenny Clausius in Norfolk, Virginia

promotion: Bare Knuckle Fighting Championship

title: “BKFC 39”

venue: Scope Arena, Norfolk, Virginia, United States

date: March 24, 2023

FIGHTERS

FIGHT RESULTS

PRELIMINARY CARD

MAIN CARD

POST-FIGHT CONFERENCE

0 notes

Text

BKFC 39 Fight Week is Here!

#BKFC #BKFC39

The excitement is palpable as BKFC gears up for its latest event, BKFC 39, which will take place at Scope Arena in Norfolk, Virginia. The event promises to be a huge occasion for fighters and fans alike, with two world championships on the line and several other exciting matchups scheduled throughout the night.

The Main Event for BKFC 39 will feature Reggie Barnett Jr., defending…

#Bare Knuckle Fighting Championship#BKFC#BKFC 39#BKFC39#Britain Hart#Daniel Alvarez#Jenny Clausius#Reggie Barnett#Savage

0 notes

Text

Siracusa ~ Johann G. Seume

Henry Tresham (1751 – 1814), Ruins of the Temple of Zeus with a View of Ortygia, Siracusa

LETTER XXIII

Siracusa

I am merry today, merry, merry,

I pay no heed to the wise and the well-mannered,

I roll about on the ground and shout and shriek,

and the king, oh! the king must let me be.

So sings Asmus[1] on the first of May at Wandsbeck: so can I sing four weeks ahead of time, on the first…

#Agatocle#Alberto Romagnoli#Frederic Leighton#Henry Tresham#Johann Gottfried Seume#Matthias Clausius Asmus#Saverio Landolina#Siracusa#Tito Otacilio Crasso

0 notes

Text

Forget-you-not

There’s nothing like a train ride towards your trauma

Back to the place you don’t wanna be

To the people you let go

To the eye-rolls and the noise

To the shame you get for just trying to breathe

There’s nothing like searching for a moment of peace

‘Cause all these fake plants and motivational signs

They cover up the pain I feel inside

All these empty fields side-by-side

They remind me of the days…

0 notes

Photo

Yellow stink bug, Antiteuchus macraspis, Pentatomidae

Found in South America

Photo 1 by birdernaturalist, 2-3 by gafischer, 4 by jeancmf, and 5 by clausius

331 notes

Text

Entropy

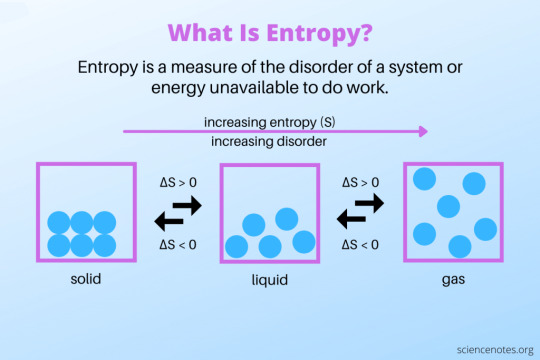

Though the concept of entropy (or aspects of it, at least) had been discussed for some time, the word itself wasn't coined until 1865, by the German physicist Rudolf Clausius. Clausius defined entropy as a function of the state, or configuration, of a system, which remains part of the definition to this day. Entropy, simply put, is a measure of the disorder of a system. Each state of a system has a definite entropy: the more disordered the state, the higher the entropy. Entropy is an extensive property, meaning it depends on how much matter is in the system (volume is an extensive property; thermal conductivity is not). However, entropy cannot be measured directly and is usually calculated in terms of a change in entropy. Mathematically, entropy is typically represented by a capital S and has units of energy over temperature (in SI units, J/K).
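Since entropy is usually handled as a change, here is a small Python sketch that computes ΔS = m c ln(T₂/T₁) for heating water from 20 °C to 80 °C. It assumes a constant specific heat for liquid water of about 4184 J/(kg·K), which is an approximation. It also shows the extensive property at work, since doubling the mass doubles ΔS.

```python
import math

SPECIFIC_HEAT_WATER = 4184.0  # J/(kg*K), approximate value for liquid water (assumed constant)


def entropy_change_heating(mass_kg: float, c: float, t1_k: float, t2_k: float) -> float:
    """Entropy change (J/K) for heating at constant specific heat c from t1 to t2 (kelvin).

    Integrates dS = dQ/T with dQ = m*c*dT, giving m*c*ln(T2/T1).
    """
    return mass_kg * c * math.log(t2_k / t1_k)


if __name__ == "__main__":
    ds_1kg = entropy_change_heating(1.0, SPECIFIC_HEAT_WATER, 293.15, 353.15)
    ds_2kg = entropy_change_heating(2.0, SPECIFIC_HEAT_WATER, 293.15, 353.15)
    # Doubling the mass doubles the entropy change (entropy is extensive).
    print(f"1 kg: {ds_1kg:.1f} J/K, 2 kg: {ds_2kg:.1f} J/K")
```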

Sources/Further Reading: (Image source - Science Notes) (Wikipedia) (RSC)

49 notes

Text

entropy and life//entropy and death

This is a discussion that spun out of a post on the web novel The Flower That Bloomed Nowhere. However, it's mostly a chance to lay out the entropy thing. So most of it is not Flower related at all...

the thermodynamics lesson

Entropy is one of those subjects that tends to be described quite vaguely. The rigorous definition, on the other hand, is packed full of jargon like 'macrostates', which I found pretty hard to wrap my head around as a university student back in the day. So let's begin this post with an attempt to lay it out a bit more intuitively.

In the early days of thermodynamics, as 19th-century scientists like Clausius attempted to get to grips with 'how do you build a better steam engine', entropy was a rather mysterious quantity that emerged from their networks of differential equations. It was defined in relation to the measurable quantities of temperature and heat. If you add heat to a system at a given temperature, its entropy goes up. In an idealised reversible process with no heat exchange, like compressing an insulated piston infinitely slowly, the entropy stays constant.
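In symbols, the classical (Clausius) definition relates an infinitesimal entropy change to heat added reversibly at absolute temperature T. For heat Q added at constant temperature this reduces to Q/T, and for a reversible adiabatic process (no heat exchanged) the entropy change is zero:

```latex
dS = \frac{\delta Q_{\mathrm{rev}}}{T}, \qquad
\Delta S = \int_{1}^{2} \frac{\delta Q_{\mathrm{rev}}}{T}, \qquad
\Delta S = \frac{Q}{T} \ (\text{constant } T), \qquad
\Delta S = 0 \ (\text{reversible adiabatic})
```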

Strangely, for an isolated system this convenient quantity always seemed to go up or stay the same, never ever down. This was so strictly true that it was declared to be a 'law of thermodynamics'. Why the hell should that be true? Turns out they'd accidentally stumbled on one of the most fundamental principles of the universe.

So. What actually is it? When we talk about entropy, we are talking about a system that can be described in two related ways: a 'nitty-gritty details' one that's exhaustively precise, and a 'broad strokes' one that brushes over most of those details. (The jargon calls the first one a 'microstate' and the second one a 'macrostate'.)

For example, say the thing you're trying to describe is a gas. The 'nitty gritty details' description would describe the position and velocity of every single molecule zipping around in that gas. The 'broad strokes' description would sum it all up with a few quantities such as temperature, volume and pressure, which describe how much energy and momentum the molecules have on average, and the places they might be.

In general, there are many different ways you could arrange the molecules and their kinetic energy that match up with that broad-strokes description.

In statistical mechanics, entropy describes the relationship between the two. It measures the number of possible 'nitty gritty details' descriptions that match up with the 'broad strokes' description (strictly speaking, it is proportional to the logarithm of that number).

In short, entropy could be thought of as a measure of what is not known or indeed knowable. It is sort of like a measure of 'disorder', but it's a very specific sense of 'disorder'.

For another example, let's say that you are running along with two folders. Each folder contains 100 pages, and one of them is important to you. You know for sure it's in the left folder. But then you suffer a comical anime collision that leads to your papers going all over the floor! You pick them up and stuff them randomly back in the folders.

In the first state, the macrostate is 'the important page is in the left folder'. There are 100 positions it could be. After your accident, you don't know which folder has that page. The macrostate is 'It could be in either folder'. So there are now 200 positions it could be. This means your papers are now in a higher entropy state than they were before.
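The folder example can be put in numbers using the statistical formula S = k log W (natural logarithm), which the post introduces a little further down. This minimal sketch counts only the possible positions of the one important page, as the example does, and reports the entropy change in units of Boltzmann's constant k.

```python
import math


def entropy_change_in_k(microstates_before: int, microstates_after: int) -> float:
    """Change in Boltzmann entropy, in units of k: ln(W_after) - ln(W_before)."""
    return math.log(microstates_after) - math.log(microstates_before)


if __name__ == "__main__":
    # Folder example: 100 possible positions before the collision, 200 after.
    delta = entropy_change_in_k(100, 200)
    print(f"Delta S = {delta:.3f} k")  # equals ln 2
```

Doubling the number of compatible microstates always adds k ln 2, however many there were to begin with.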

In general, if you start a system out in a given 'broad strokes' state, it will randomly explore all the different 'nitty gritty details' states available in its phase space (this is called the ergodic hypothesis). The more 'nitty gritty details' states are associated with a given 'broad strokes' state, the more likely the system is to end up in that state. In practice, once realistic numbers of particles are involved, the probabilities are so extreme that we can say the system will almost certainly end up in a 'broad strokes' state with equal or higher entropy. This is called the Second Law of Thermodynamics: it says the entropy of an isolated system will always stay the same or increase.
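The claim that a system 'almost certainly' drifts towards the macrostate with the most microstates can be seen in a toy model. The sketch below is the Ehrenfest urn model, a standard textbook toy swapped in here for illustration rather than taken from the post: N particles, two boxes, and at each step one randomly chosen particle hops to the other box. Starting with every particle on the left, the count drifts towards N/2, where W = C(N, n) and hence ln W peak.

```python
import math
import random


def two_box_entropy_in_k(n_total: int, n_left: int) -> float:
    """ln W for the macrostate 'n_left particles in the left box', with W = C(n_total, n_left)."""
    return math.log(math.comb(n_total, n_left))


def simulate(n_total: int = 1000, steps: int = 20000, seed: int = 1) -> list[int]:
    """Ehrenfest urn model: each step, one randomly chosen particle switches box.

    Starts with every particle in the left box (a single microstate, so ln W = 0).
    Returns the number of particles in the left box after each step.
    """
    rng = random.Random(seed)
    in_left = [True] * n_total
    n_left = n_total
    history = [n_left]
    for _ in range(steps):
        i = rng.randrange(n_total)
        in_left[i] = not in_left[i]
        n_left += 1 if in_left[i] else -1  # moved into the left box, or out of it
        history.append(n_left)
    return history


if __name__ == "__main__":
    history = simulate()
    for step in (0, 100, 1000, 20000):
        n = history[step]
        print(step, n, round(two_box_entropy_in_k(1000, n), 1))
```

Nothing in the rule pushes particles rightwards; the drift happens simply because far more microstates have roughly half the particles on each side. Individual steps can still lower ln W, which is why the Second Law is statistical.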

This is the modern, statistical view of entropy developed by Ludwig Boltzmann in the 1870s and really nailed down at the start of the 20th century, summed up by the famous formula S = k log W, where k is Boltzmann's constant, W is the number of 'nitty gritty details' states, and log is the natural logarithm. This was such a big deal that it was engraved on his tombstone.
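To connect S = k log W back to Clausius's heat-based entropy, here is a hedged Python sketch of a standard textbook case not discussed in the post: an ideal gas (non-interacting particles) expanding into twice its volume at the same temperature. Each particle then has twice as many places to be, so W multiplies by 2^N, and for one mole the entropy rises by N_A k ln 2. That is the same R ln 2 (about 5.76 J/K) that the classical formula ΔS = nR ln(V₂/V₁) gives.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact in SI since 2019)
N_A = 6.02214076e23  # Avogadro constant, 1/mol (exact in SI since 2019)


def boltzmann_delta_s(n_particles: float, volume_ratio: float) -> float:
    """Delta S = k ln(W2/W1) when each particle's available positions scale by volume_ratio.

    For independent particles W scales as volume_ratio ** n_particles,
    so ln(W2/W1) = n_particles * ln(volume_ratio).
    """
    return K_B * n_particles * math.log(volume_ratio)


if __name__ == "__main__":
    ds = boltzmann_delta_s(N_A, 2.0)  # one mole, volume doubled
    r = K_B * N_A                     # molar gas constant R, J/(mol K)
    print(f"k ln W route: {ds:.3f} J/K; R ln 2: {r * math.log(2.0):.3f} J/K")
```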

Since the Second Law of Thermodynamics is statistical in nature, it applies anywhere its assumptions hold, regardless of how the underlying physics works. This makes it astonishingly powerful. Before long, the idea of entropy in thermodynamics inspired other, related ideas. Claude Shannon, for example, used the word entropy for a measure of the average information conveyed per symbol by a message from a given source.
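Shannon's version can be sketched in a few lines. This Python example is a minimal sketch that treats a message's own symbol frequencies as the probabilities (an assumption for illustration) and computes H = -Σ p log₂ p in bits per symbol. A message that repeats one symbol carries 0 bits per symbol, while four equally frequent symbols give 2 bits.

```python
import math
from collections import Counter


def shannon_entropy_bits(message: str) -> float:
    """Average information per symbol, in bits, using the symbol frequencies in the message.

    H = -sum(p * log2(p)) over the distinct symbols.
    """
    if not message:
        return 0.0
    counts = Counter(message)
    total = len(message)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


if __name__ == "__main__":
    for msg in ("aaaa", "aabb", "abcd"):
        print(msg, shannon_entropy_bits(msg))
```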

the life of energy and entropy

So, everything is made of energy, and that energy is in a state with a certain amount of thermodynamic entropy. As we just discussed, every chemical process must leave the total entropy the same or higher. If the entropy of one thing goes down, the entropy of something else must increase by an equal or greater amount.

(A little caveat: traditional thermodynamics was mainly concerned with systems in equilibrium. Life is almost by definition not in thermodynamic equilibrium, which makes things generally a lot more complicated. Luckily I'm going to talk about things at such a high level of abstraction that it won't matter.)

There are generally speaking two ways to increase entropy. You can add more energy to the system, and you can take the existing energy and distribute it more evenly.

For example, a fridge in a warm room is in a low entropy state. Left to its own devices, energy from outside would make its way into the fridge, lowering the temperature of the outside slightly and increasing the temperature of the inside. This would increase the entropy: there are more ways for the energy to be distributed when the inside of the fridge is warmer.
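The fridge example can be made quantitative with the classical ΔS = Q/T. This Python sketch uses illustrative numbers that are assumptions (a 22 °C room, a 5 °C fridge interior, 1 kJ of heat leaking in) and treats both as reservoirs whose temperatures barely change: the room loses Q/T_room of entropy and the fridge gains the larger Q/T_fridge, so the total goes up.

```python
def entropy_change_heat_flow(q_joules: float, t_hot_k: float, t_cold_k: float) -> float:
    """Total entropy change (J/K) when heat q flows from a hot body to a cold body.

    Treats both bodies as large enough that their temperatures barely change:
    the hot side loses q/T_hot, the cold side gains q/T_cold.
    """
    return q_joules / t_cold_k - q_joules / t_hot_k


if __name__ == "__main__":
    # Illustrative numbers (assumed): a 22 °C room, a 5 °C fridge interior, 1 kJ of leaked heat.
    ds = entropy_change_heat_flow(1000.0, 295.15, 278.15)
    print(f"Total entropy change: {ds:.3f} J/K")  # positive whenever T_hot > T_cold
```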

To cool the fridge we want to move some energy back to the outside. But that would lower entropy, which is a no-no! To get around this, the heat pump on a fridge must always add a bit of extra energy to the outside of the fridge. In this way it's possible to link the cooling of the inside of the fridge to the increase in entropy outside, and the whole process becomes thermodynamically viable.
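The 'bit of extra energy' has a lower bound that follows from the same bookkeeping. This is a sketch of the ideal (Carnot) limit, assuming perfectly reversible operation and illustrative temperatures (a 5 °C interior and a 22 °C room, both assumed); real fridges need more work than this.

```python
def minimum_work(q_cold: float, t_cold_k: float, t_hot_k: float) -> float:
    """Smallest work (J) needed to pump q_cold joules out of a cold space into a hotter room.

    Entropy bookkeeping: the room must gain at least as much entropy as the fridge
    loses, (q_cold + W) / T_hot >= q_cold / T_cold, so W >= q_cold * (T_hot - T_cold) / T_cold.
    """
    return q_cold * (t_hot_k - t_cold_k) / t_cold_k


if __name__ == "__main__":
    w = minimum_work(1000.0, 278.15, 295.15)
    print(f"Minimum work to remove 1 kJ: {w:.1f} J")
```

For these numbers the ideal limit is roughly 61 J of work per kilojoule removed; the smaller the temperature gap, the cheaper the pumping.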

Likewise, for a coherent pattern such as life to exist, it must slot itself into the constant transition from low to high entropy in a way that can dump the excess entropy it adds somewhere else.

Fortunately, we live on a planet that is orbiting a bright star, and also radiating heat into space. The sun provides energy in a relatively low-entropy state: highly directional, in a certain limited range of frequencies. The electromagnetic radiation leaving our planet is in a higher-entropy state. The earth as a whole is pretty near energy balance, radiating away roughly as much energy as it absorbs (although it's presently warming, as you might have heard).
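A rough number for the sunlight-in, infrared-out picture: for blackbody radiation, each joule carries entropy 4/(3T). This sketch assumes both streams are blackbody radiation at their emission temperatures (about 5772 K for the Sun's surface and about 255 K as Earth's effective emission temperature). That ignores the dilution of sunlight by the time it reaches Earth, so treat the ratio as an order-of-magnitude illustration.

```python
def radiation_entropy_per_joule(temperature_k: float) -> float:
    """Entropy (J/K) carried per joule of blackbody radiation at temperature T.

    For blackbody radiation the entropy density is s = 4u / (3T), so each joule carries 4 / (3T).
    """
    return 4.0 / (3.0 * temperature_k)


if __name__ == "__main__":
    T_SUN = 5772.0   # K, approximate effective temperature of the Sun's surface (assumed)
    T_EARTH = 255.0  # K, approximate effective emission temperature of the Earth (assumed)
    ratio = radiation_entropy_per_joule(T_EARTH) / radiation_entropy_per_joule(T_SUN)
    print(f"Outgoing radiation carries ~{ratio:.0f}x more entropy per joule than incoming")
```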

Using a multistep process and suitable enzymes, photosynthesis can convert a portion of the incoming sunlight energy into sugars, which are in a tasty low-entropy state. This is a highly unfavourable process in general, and it requires some shenanigans to get away with it. But basically, the compensating increase in entropy is achieved by heating up the surroundings, which radiate away lower-temperature infrared radiation.

the reason we don't live forever

Nearly all other lifeforms depend on these helpfully packaged low-entropy molecules. We take in molecules from outside by breathing in and eating and drinking, put them through a bunch of chemical reactions (respiration and so forth), and emit molecules at a higher entropy (breathing out, pissing, shitting, etc.). Since we constantly have to throw away molecules to get rid of the excess entropy produced by the processes of living, we constantly have to eat more food. This is what I was alluding to in the Dungeon Meshi post from the other day.

That's the short-timescale battle against entropy. On longer timescales, we can more vaguely say that life depends on the ability to preserve a low-entropy, non-equilibrium state. On the simplest level, a human body is in a very low entropy state compared to a cloud of carbon dioxide and water, but generally speaking we do not spontaneously combust, because there is a high enough energy barrier in the way. On a more abstract level, our cells continue to function in specialised roles, the complex networks of reaction pathways continue to tick over, and the whole machine somehow keeps working.

However, the longer you try to maintain a pattern, the more low-probability problems start to become statistical inevitabilities.

For example, cells contain a whole mess of chemical reactions that can gradually accumulate errors, waste products, etc., which can corrupt their functioning. To compensate for this, multicellular organisms are constantly rebuilding themselves. On the one hand, their cells divide to create new cells; on the other, stressed cells undergo apoptosis, i.e. die. However, sometimes cells become corrupted in a way that causes them to fail to die when instructed. Our body has an entire complicated apparatus designed to detect those cells and destroy them before they start replicating uncontrollably. Our various defensive mechanisms detect and destroy the vast majority of potentially cancerous cells... but over a long enough period, the odds are not in our favour. Every cell has a tiny potential to become cancerous.
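The 'statistical inevitabilities' point is simple compounding. In this sketch the per-year failure probability is purely hypothetical, and failures are assumed independent from year to year (real biology is messier). A risk that is negligible over a human lifetime becomes near-certain over very long spans.

```python
def probability_at_least_once(p_per_year: float, years: int) -> float:
    """Chance that an event with independent yearly probability p happens at least once."""
    return 1.0 - (1.0 - p_per_year) ** years


if __name__ == "__main__":
    P = 1e-4  # hypothetical yearly probability of one particular failure
    for years in (80, 1_000, 100_000):
        print(years, f"{probability_at_least_once(P, years):.4f}")
```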

At this point we're really not in the realm of rigorous thermodynamic entropy calculations. However, we can think of 'dead body' as, generally speaking, a higher-entropy set of states than 'living creature'. There are many more ways for the atoms that make us up to be arranged as a dead person, a cloud of gas, etc. than as a living person. Worse still would be finding ourselves in a metastable state, where only a small boost over the energy barrier is needed to trigger a runaway reaction that drops us into a lower-energy, higher-entropy state.

In a sense, a viral infection could be thought of as a collapse of a metastable pattern. The replication machinery in our cells could produce human cells but it can equally produce viruses, and it turns out stamping out viruses is (in this loose sense) a higher entropy pattern; the main thing that stops us from turning into a pile of viruses is the absence of a virus to kick the process off.

So sooner or later, we inevitably(?) hit a level of disruption which causes a cascading failure in all these interlinked biological systems. The pattern collapses.

This is what we call 'death'.

an analogy

If you're familiar with cellular automata like Conway's Game of Life, you'll know it's possible to construct incredibly elaborate persistent patterns. You can even build the game of life in the game of life. But these systems can be quite brittle: if you scribble a little on the board, the coherent pattern will break and it will collapse back into a random mess of oscillators. 'Random mess of oscillators' is a high-entropy state for the Game of Life: there are many many different board states that correspond to it. 'Board that plays the Game of Life' is a low-entropy state: there are a scant few states that fit.
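For readers who haven't played with it, the Game of Life's rules fit in a few lines. This is a minimal Python sketch (one common set-based implementation on an unbounded grid, not tied to any particular program the post has in mind): a live cell survives with two or three live neighbours, and a dead cell comes alive with exactly three.

```python
from collections import Counter

Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """Advance Conway's Game of Life by one generation on an unbounded grid."""
    # Count how many live neighbours every candidate cell has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


if __name__ == "__main__":
    # A glider (y increases downwards): it reappears one cell diagonally every 4 steps.
    glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
    board = glider
    for _ in range(4):
        board = step(board)
    print(board == {(x + 1, y + 1) for x, y in glider})
```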

The ergodic hypothesis does not apply to the Game of Life. Without manual intervention, the 'game of life in game of life' board would keep simulating a giant version of the game of life indefinitely. However...

For physical computer systems, a vaguely similar process of accumulating problems can occur. For example, a program with a memory leak will gradually request more and more memory from the operating system, leaving more and more memory in an inaccessible state. Other programs may end up running slowly, starved of resources.
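A memory leak of the kind described can be as simple as a store that only ever grows. This Python sketch is hypothetical (the handler and its module-level list are invented for illustration) and uses the standard library's tracemalloc module to show traced memory climbing with each request.

```python
import tracemalloc

_history: list[bytes] = []  # hypothetical module-level store that is never cleared


def handle_request(payload: bytes) -> int:
    """Hypothetical request handler that 'leaks' by keeping every payload reachable."""
    _history.append(payload)
    return len(payload)


def leaked_bytes_after(n_requests: int, size: int = 1024) -> int:
    """Traced memory growth (bytes) after handling n_requests payloads of the given size."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    for _ in range(n_requests):
        handle_request(bytes(size))
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


if __name__ == "__main__":
    print(f"Memory still held after 1000 requests: {leaked_bytes_after(1000)} bytes")
```

Nothing here ever fails outright; the program just holds on to more and more memory, which is what makes leaks easy to miss until something else starts running slowly.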

In general, there are a great many ways a computer can go wrong, and few that represent it going right.

One of the ways our body avoids collapsing like this is by dedicating resources to cells whose job is to monitor the other cells and intervene if they show heuristic signs of screwing up. This is the evolutionary arms race between immune system and virus. The same can be true on computers, which also support 'viruses' in the form of programs that are able to hijack a computer and replicate themselves onto other computers - and one of our solutions is similar, writing programs which detect and terminate programs which have the appearance of viruses.

When a computer is running slowly, the first thing to do is to reboot it. This will reload all the programs from the unchanging version on disc.

The animal body's version of a reboot is to dump all the accumulated decay onto a corpse and produce a whole new organism from a single pair of cells. This is one function of reproduction, a chance to wipe the slate clean. (One thing I remain curious about is how the body keeps the gamete cells in good shape.)

but what if we did live forever?

I am not particularly up to date on senescence research, but in general the theories do appear to go along broad lines of 'accumulating damage', with disagreement over what represents the most fundamental cause.

Here's how Su discusses the problem of living indefinitely in The Flower That Bloomed Nowhere, chapter 2:

The trouble, however, is that the longer you try to preserve a system well into a length of time it is utterly not designed (well, evolved, in this case) for, the more strange and complicated problems appear. Take cancer, humanity’s oldest companion. For a young person with a body that's still running according to program, it's an easy problem to solve. Stick a scepter in their business, cast the Life-Slaying Arcana with the 'cancerous' addendum script – which identifies and eliminates around the 10,000 most common types of defective cell – and that's all it takes. No problem! A monkey could do it.

But the body isn’t a thing unto itself, an inherently stable entity that just gets worn down or sometimes infected with nasty things. And cancer cells aren’t just malevolent little sprites that hop out of the netherworld. They’re one of innumerable quasi-autonomous components that are themselves important to the survival of the body, but just happen to be doing their job slightly wrong. So even the act of killing them causes disruption. Maybe not major disruption, but disruption all the same. Which will cause little stressors on other components, which in turn might cause them to become cancerous, maybe in a more 'interesting' way that’s a little harder to detect. And if you stop that...

Or hell, forget even cancer. Cells mutate all the time just by nature, the anima script becoming warped slightly in the process of division. Most of the time, it's harmless; so long as you stay up to date with your telomere extensions, most dysfunctional cells don't present serious problems and can be easily killed off by your immune system. But live long enough, and by sheer mathematics, you'll get a mutation that isn't. And if you live a really long time, you'll get a lot of them, and unless you can detect them perfectly, they'll build up, with, again, interesting results.

At a deep enough level, the problem wasn't biology. It was physics. Entropy.

A few quirks of the setting emerge here. Rather than DNA we have 'the anima script'. It remains to be seen whether this is just another name for DNA or reflects some fundamental alt-biology that runs on magic or some shit. Other details reflect real biology: 'telomeres' are regions at the ends of the DNA strands in chromosomes. They serve as a kind of ablative shield, protecting the ends of the DNA during replication. The loss of telomeres has been touted as a major factor in the aging process.

A few chapters later we encounter a man who does not think of himself as really being the same person as he was a hundred years ago. Which, mood - I don't think I'm really the same person I was ten years ago. Or five. Or hell, even one.

The problem with really long-term scifi life extension ends up being a kind of signal-vs-noise problem. Humans change, a lot, as our lives advance. Hell, life is a process of constant change. We accumulate experiences and memories, learn new things, build new connections, change our opinions. Mostly this is desirable. Even if you had a perfect down-to-the-nucleon recording of the state of a person at a given point in time, overwriting a person with that state many years later would amount to killing them and replacing them with their old self. So the problem becomes distinguishing the good, wanted changes ('character development', even if contrary to what you wanted in the past) from the bad unwanted changes (cancer or whatever).

But then it gets squirrelly. Memories are physical too. If you experienced a deeply traumatic event, and learned a set of unwanted behaviours and associations that will shit up your quality of life, maybe you'd want to erase that trauma and forget or rewrite that memory. But if you're gonna do that... do you start rewriting all your memories? Does space become limited at some point? Can you back up your memories? What do you choose to preserve, and what do you choose to delete?

Living forever means forgetting infinitely many things, and Ship-of-Theseusing yourself into infinitely many people... perhaps infinitely many times each. Instead of death being sudden and taking place at a particular moment in time, it's a gradual transition into something that becomes unrecognisable from the point of view of your present self. I don't think there's any coherent self-narrative that can hold up in the face of infinity.

That's still probably better than dying, I guess! But it is perhaps unsettling, in the same way that it's unsettling to realise that, whether or not Everett quantum mechanics is true, if there is a finite amount of information in the observable universe, an infinite universe must contain infinitely many exact copies of that observable universe, and infinitely many near variations, and basically you end up with many-worlds through the back door. Unless the universe is finite or something.

Anyway, living forever probably isn't on the cards for us. Honestly I think we'll be lucky if complex global societies make it through the next century. 'Making it' in the really long term is going to require an unprecedented megaproject of effort to effect a complete renewable transition and reorganise society to a steady state economy which, just like life, takes in only low-entropy energy and puts out high-entropy energy in the form of photons, with all the other materials - minerals etc. - circulating in a closed loop. That probably won't happen but idk, never say never.

Looking forward to how this book plays with all this stuff.

#fiction#sff#web serials#entropy#physics#biology#thermodynamics#the flower that bloomed nowhere#ok that's all i have to say about the first twelve chapters lmaooo

28 notes

Text

yupppp i just treated the surroundings as a thermal reservoir despite their small heat capacity relative to the system. i pretty much don't give a fuck. if the three stooges carnot, clausius, and kelvin come a-knocking tell them i'm not home
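The joke has real arithmetic behind it. Treating the surroundings as a reservoir means using ΔS = Q/T₀. If they actually have a finite heat capacity C (assumed constant here), they warm up as they absorb Q, and the exact result is C ln(1 + Q/(C T₀)), which is always a bit smaller. A quick Python comparison, with all numbers illustrative:

```python
import math


def reservoir_entropy(q: float, t0: float) -> float:
    """Entropy gained (J/K) by an ideal thermal reservoir at fixed temperature t0."""
    return q / t0


def finite_body_entropy(q: float, t0: float, heat_capacity: float) -> float:
    """Entropy gained (J/K) by a body of constant heat capacity C that absorbs q starting at t0.

    Its final temperature is t0 + q / C; integrating C dT / T gives C ln(1 + q / (C t0)).
    log1p keeps this accurate when q / (C t0) is tiny, i.e. when C is large.
    """
    return heat_capacity * math.log1p(q / (heat_capacity * t0))


if __name__ == "__main__":
    Q, T0 = 1000.0, 300.0  # illustrative: 1 kJ dumped into surroundings at 300 K
    for c in (10.0, 1e3, 1e6):
        print(c, round(finite_body_entropy(Q, T0, c), 4), round(reservoir_entropy(Q, T0), 4))
```

With a large heat capacity the two agree, which is exactly when the reservoir shortcut is legitimate.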

17 notes

Text

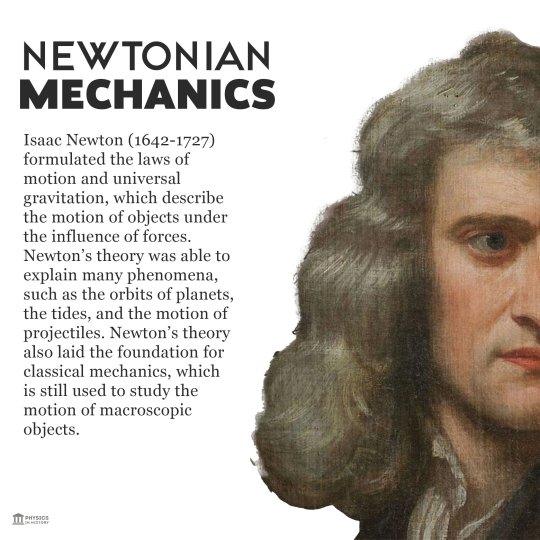

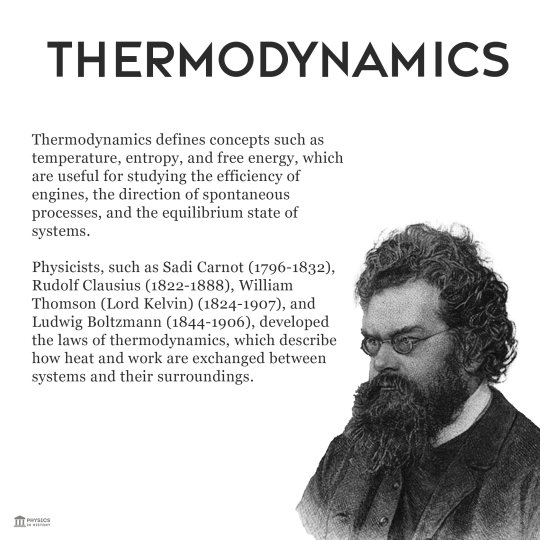

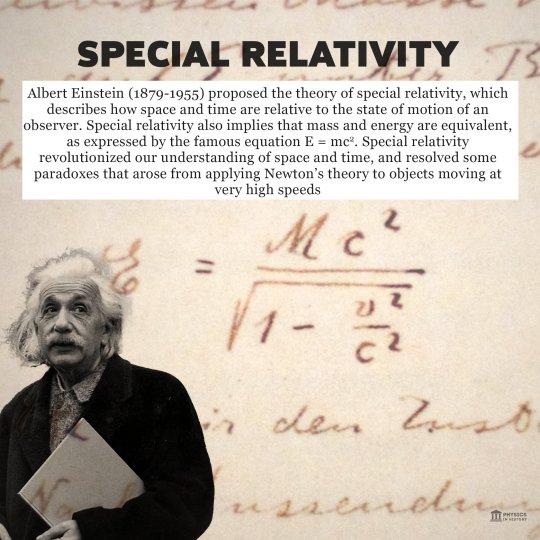

the greatest theories in physics: a visual thread by @PhysInHistory on twitter (with alt text) (part 1) (part 2 will be in reblogs)

image one: black unserifed text on white background, saying "the greatest theories in physics", with "greatest" in manuscript handwriting style, and "physics" in bold. below this text, there is very small writing saying "a visual thread", also black, but the text has serifs. in the background there are equations and calculations, also in black font, but much thinner and smaller.

image two: on the right side of the image, there is a coloured photo of half of isaac newton's face and a bit of his shoulders. on the left side, there is large black unserifed text on white background, with large handwriting at the top saying "newtonian mechanics". "mechanics" is in bold. below, it says (in smaller black serifed writing) "isaac newton (1642 to 1727) formulated the laws of motion and universal gravitation, which describe the motion of objects under the influence of forces. newton's theory was able to explain many phenomena, such as the orbits of planets, the tides, and the motion of projectiles. newton's theory also laid the foundation for classical mechanics, which is still used to study the motion of macroscopic objects."

image three: at the top of the photo, there is large black capitalised thin unserifed writing saying "electromagnetism", and from the bottom of the text to around the middle of the image, there are images and equations, including a circuit diagram. in the bottom left, between the bottom of the image and the hypothetical horizontal middle line, there is a black and white image of james clerk maxwell. around the bottom right, there is black serifed text saying "james clerk maxwell (1831 to 1879) unified the theories of electricity and magnetism, which describe how electric charges and current produce electric and magnetic fields, and how these fields interact with each other. maxwell's theory also predicts the existence of electromagnetic waves, such as [visible] light, radio waves, and X-rays, which travel at a constant speed in a vacuum"

image four: at the top of the image, there is large bold capitalised black unserifed writing, saying "thermodynamics". at the mid to bottom right, there is a black and white image of ludwig boltzmann. under the large unserifed text, and next to ludwig boltzmann, there is small black serifed text, saying "thermodynamics defines concepts, such as temperature, entropy, and free energy, which are useful for studying the efficiency of engines, the direction of spontaneous processes, and the equilibrium state of systems. physicists such as sadi carnot (1796 to 1832), rudolph clausius (1822 to 1888), william thompson (lord kelvin) (1824 to 1907), and ludwig boltzmann (1844 to 1906), developed the laws of thermodynamics, which describe how heat and work are exchanged between systems and their surroundings"

image five: the background of the image is cream coloured, with the equation "E equals m c squared, all over root one minus v squared over c squared" and some other writing. in the bottom left corner, there is a black and white image of albert einstein. at the top of the image, there is bold large unserifed black writing saying "special relativity", which is directly against the cream background. below the writing, there is a white box, containing small black serifed text, which says "albert einstein (1879 to 1955) proposed the theory of special relativity, which describes how space and time are relative to the state of motion of an observer. special relativity also implies that mass and energy are equivalent, as expressed by the famous equation E = m c squared. special relativity revolutionized our understanding of space and time, and resolved some paradoxes that arose from applying Newton's theory to objects moving at very high speeds"

image six: this image has a background of grey-brown paper with small squares on it, as one would see in a maths exercise book. the paper has differential equations on it. like the previous image, there is a black and white image of albert einstein in the bottom left corner. at the top, there is a dark brown box, containing large white bold unserifed letters saying "general relativity". the writing below is directly against the paper but above the differential equations. it is white. in large bold unserifed text, it says "albert einstein". below that, it says (in smaller serifed text) "also proposed the theory of general relativity, which describes how gravity is not a force, but a consequence of the curvature of space and time caused by mass and energy. general relativity predicts phenomena, such as gravitational lensing, gravitational redshift, gravitational waves, and black holes. general relativity also provides the framework for studying the origin and evolution of the universe"

(end of alt text, please check reblogs for more images and alt text)

#the greatest theories in physics#physics#physics theory#physician#maths#mathematics#mathematician#stem#newtonian mechanics#electromagnetism#thermodynamics#special relativity#general relativity

19 notes

Text

VARIANCE VARIANCE QUANTUM HALL WORTHY

DECISION TREE, PULL FORWARD FOR IMMEDIATE SEGMENT CAPITALIZATION WITH RESEARCH AND MARKET LANDSCAPE.

CRITERIA AND SELECTION OF VARIABLE WITH PLUS OR MINUS REQUESTS PER SECTOR AND AREA SELECTION.

SELECTION HIERARCHY OF DECISION MAKING LABELED CATEGORY MANAGEMENT FROM OPERATIONAL EFFICIENCY WITH

MOST SELECTED LAUNCH PROJECT

UPDATE SALES MODULES AND RELATIONSHIP BUILDING SALES FOR RESIDUAL INCOME WITH MODULAR BASE

Hey, I've got a scenario for you. Are you encountering this in your field segment? MARKETING CAMPAIGN WITH NEEDS OF "HAVE YOU HEARD?"

THERE ARE GETTING TO YES AND YES + SMILE

DEDUCTIVE SALES PITCHES TO IDENTIFY CORE NEEDS WITH TRANSLATION FOR SALES PROCESS FOR FINISHED PRODUCT

SINCE I CAN'T INTERVIEW NOW, I WOULD EXPLAIN THE THOUGHT FIELD PROCESS AND PROCEDURES WITH PROTOCOL + BLOCKCHAIN WITH ENGINE WORKSTREAMS BASED ON INTAKE NUMBER COUNTS.

UPDATE UNIVERSITY EDUCATION TECHNOLOGY FOR IMPERIUM MILITARY COLLEGE WITH BASE LAYER + LEADERSHIP and Personal Growth Roadmaps to be (QUALIFIED FOR WORKSTREAM)

IMPERIUM UTILITARIAN PERSONA PRIME AI FOR UNDER THE COVERS COMMUNICATION DURING COG BOTH VERSIONS

WITH

SHANE ANDERSON PART OF IMPERIUM AVENGERS TEAM PLEASE EXPLORE MORE MINING LOCX

KPI WITH COMPANY TO JOJIK IMPERIUM CORE PROTECTION PROGRAM FOR ALL OF ALL MODULAR PROTOCOL DEPLOYMENT ROADMAPS

MEME SCAN

BEFORE AND AFTER TRAINING AND EXPERIENCE WITH EVENTS

The Van der Waals equation includes intermolecular interaction by adding to the observed pressure P in the equation of state a term of the form $a/V_m^2$, where $V_m$ is the molar volume and a is a constant whose value depends on the gas.
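The effect of that correction term can be illustrated numerically. The sketch below uses the standard full form of the Van der Waals equation, $(P + a/V_m^2)(V_m - b) = RT$, which also contains an excluded-volume constant b that the excerpt above does not mention. The CO2 constants (a ≈ 0.3640 Pa·m⁶/mol², b ≈ 4.267×10⁻⁵ m³/mol) are commonly tabulated approximate values, used here only as an illustrative assumption, and the function names are hypothetical.

```python
# Minimal sketch: ideal-gas vs. Van der Waals pressure at one molar volume.
# Assumption: the CO2 constants below are approximate textbook values (SI units).

R = 8.314462618  # molar gas constant, J/(mol*K)

# Hypothetical example constants for CO2 (assumed, approximate)
A_CO2 = 0.3640    # Pa*m^6/mol^2, strength of intermolecular attraction
B_CO2 = 4.267e-5  # m^3/mol, excluded volume per mole


def ideal_pressure(t: float, vm: float) -> float:
    """Ideal-gas pressure P = RT / V_m."""
    return R * t / vm


def vdw_pressure(t: float, vm: float, a: float, b: float) -> float:
    """Van der Waals equation solved for P: P = RT/(V_m - b) - a/V_m^2."""
    if vm <= b:
        raise ValueError("molar volume must exceed the excluded volume b")
    return R * t / (vm - b) - a / vm**2


if __name__ == "__main__":
    T = 300.0    # K
    VM = 1.0e-3  # m^3/mol, i.e. 1 mol in 1 L
    p_ideal = ideal_pressure(T, VM)
    p_vdw = vdw_pressure(T, VM, A_CO2, B_CO2)
    print(f"ideal:         {p_ideal / 1e5:.2f} bar")
    print(f"Van der Waals: {p_vdw / 1e5:.2f} bar")
```

With a = b = 0 the function reduces to the ideal-gas law. At the conditions shown, the attraction term a/V_m² outweighs the excluded-volume correction, so the Van der Waals pressure comes out lower than the ideal one.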

Van der Waals' early interests were primarily in the field of thermodynamics, where a first influence was Rudolf Clausius's published work on heat in 1857; other significant influences were the writings by James Clerk Maxwell, Ludwig Boltzmann, and Willard Gibbs.[2] After initial pursuit of teaching credentials, Van der Waals' undergraduate coursework in mathematics and physics at the University of Leiden in the Netherlands led (with significant hurdles) to his acceptance for doctoral studies at Leiden under Pieter Rijke. While his dissertation helps to explain the experimental observation in 1869 by Irish professor of chemistry Thomas Andrews (Queen's University Belfast) of the existence of a critical point in fluids,[3][non-primary source needed] science historian Martin J. Klein states that it is not clear whether Van der Waals was aware of Andrews' results when he began his doctorate work.[4]

The Van der Waals equation is mathematically simple, but it nevertheless predicts the experimentally observed transition between vapor and liquid, and predicts critical behaviour.[12]: 289 It also adequately predicts and explains the Joule–Thomson effect (temperature change during adiabatic expansion), which the ideal gas law cannot capture.
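The critical behaviour follows from the equation itself: requiring the first and second volume derivatives of P to vanish at the critical point gives the standard results $T_C = 8a/(27Rb)$, $P_C = a/(27b^2)$ and $V_{m,C} = 3b$. The sketch below evaluates these, together with the low-pressure Joule–Thomson inversion temperature $T_{inv} = 2a/(Rb)$ that the model predicts (a textbook approximation, not stated in the excerpt). The CO2 constants (a ≈ 0.3640 Pa·m⁶/mol², b ≈ 4.267×10⁻⁵ m³/mol) are assumed approximate values, and the names `VdwCritical` and `vdw_critical` are hypothetical.

```python
# Minimal sketch: critical constants and Joule-Thomson inversion temperature
# predicted by the Van der Waals equation.
# Assumption: CO2 constants are approximate textbook values (SI units).
from dataclasses import dataclass

R = 8.314462618  # molar gas constant, J/(mol*K)


@dataclass
class VdwCritical:
    t_c: float    # critical temperature, K
    p_c: float    # critical pressure, Pa
    vm_c: float   # critical molar volume, m^3/mol
    z_c: float    # critical compressibility factor P_c V_c / (R T_c)
    t_inv: float  # low-pressure Joule-Thomson inversion temperature, K


def vdw_critical(a: float, b: float) -> VdwCritical:
    """Closed-form results from dP/dV = d^2P/dV^2 = 0 on the vdW isotherm."""
    t_c = 8 * a / (27 * R * b)
    p_c = a / (27 * b**2)
    vm_c = 3 * b
    z_c = p_c * vm_c / (R * t_c)  # always 3/8 in this model
    t_inv = 2 * a / (R * b)       # valid only in the low-pressure limit
    return VdwCritical(t_c, p_c, vm_c, z_c, t_inv)


if __name__ == "__main__":
    crit = vdw_critical(a=0.3640, b=4.267e-5)  # assumed CO2 values
    print(f"T_C   = {crit.t_c:.1f} K")
    print(f"P_C   = {crit.p_c / 1e5:.1f} bar")
    print(f"Z_C   = {crit.z_c:.3f}")
    print(f"T_inv = {crit.t_inv:.0f} K")
```

The model fixes $Z_C$ at 3/8 regardless of a and b, whereas measured values for real gases are typically lower (roughly 0.23 to 0.31), which is one concrete example of the model's quantitative limits.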

Above the critical temperature, $T_C$, the Van der Waals equation is an improvement over the ideal gas law, and for lower temperatures, i.e., $T < T_C$, the equation is also qualitatively reasonable for the liquid and low-pressure gaseous states; however, with respect to the first-order phase transition, i.e., the range of (p, V, T) where a liquid phase and a gas phase would be in equilibrium, the equation appears to fail to predict observed experimental behaviour, in the sense that p is typically observed to be constant as a function of V for a given temperature in the two-phase region. This apparent discrepancy is resolved in the context of vapour–liquid equilibrium: at a particular temperature, there exist two points on the Van der Waals isotherm that have the same chemical potential, and thus a system in thermodynamic equilibrium will appear to traverse a straight line on the p–V diagram as the ratio of vapour to liquid changes. However, in such a system, there are really only two points present (the liquid and the vapour) rather than a series of states connected by a line, so connecting the locus of points is incorrect: it is not an equation of multiple states, but an equation of (a single) state. It is indeed possible to compress a gas beyond the point at which it would typically condense, given the right conditions, and it is also possible to expand a liquid beyond the point at which it would usually boil. Such states are called "metastable" states. Such behaviour is qualitatively (though perhaps not quantitatively) predicted by the Van der Waals equation of state.[13]
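The loop behind this discussion can be checked directly. In reduced variables ($P_r = P/P_C$, $V_r = V_m/V_{m,C}$, $T_r = T/T_C$) the Van der Waals equation becomes $P_r = 8T_r/(3V_r - 1) - 3/V_r^2$, the same for every gas. A stretch where pressure rises with volume ($dP_r/dV_r > 0$) is mechanically unstable and appears only below the critical temperature; the metastable states mentioned above lie between that stretch and the two equal-chemical-potential points. The sketch below, with hypothetical function names and an assumed scan range, only scans an isotherm for the unstable stretch; it does not perform the full Maxwell equal-area construction.

```python
# Minimal sketch: detect the unstable (dP/dV > 0) part of a reduced
# Van der Waals isotherm. The reduced equation is only meaningful for V_r > 1/3.


def reduced_pressure(t_r: float, v_r: float) -> float:
    """Reduced vdW equation: P_r = 8 T_r / (3 V_r - 1) - 3 / V_r^2."""
    return 8 * t_r / (3 * v_r - 1) - 3 / v_r**2


def reduced_slope(t_r: float, v_r: float) -> float:
    """Analytic derivative dP_r/dV_r of the reduced isotherm."""
    return -24 * t_r / (3 * v_r - 1) ** 2 + 6 / v_r**3


def unstable_volumes(t_r: float, v_min: float = 0.4, v_max: float = 5.0,
                     steps: int = 2000) -> list[float]:
    """Return grid volumes where the isotherm slopes upward (dP_r/dV_r > 0)."""
    dv = (v_max - v_min) / steps
    grid = (v_min + i * dv for i in range(steps + 1))
    return [v for v in grid if reduced_slope(t_r, v) > 0]


if __name__ == "__main__":
    for t_r in (0.9, 1.0, 1.1):
        region = unstable_volumes(t_r)
        if region:
            print(f"T_r = {t_r}: unstable for V_r ~ {region[0]:.2f}..{region[-1]:.2f}")
        else:
            print(f"T_r = {t_r}: no unstable region (monotonic isotherm)")
```

At $T_r = 1$ the slope touches zero only at $V_r = 1$, the critical point; above it every isotherm decreases monotonically, matching the statement that the two-phase problem arises only for $T < T_C$.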

However, the values of physical quantities as predicted with the Van der Waals equation of state "are in very poor agreement with experiment", so the model's utility is limited to qualitative rather than quantitative purposes.[12]: 289 Empirically based corrections can easily be inserted into the Van der Waals model (see Maxwell's correction, below), but in so doing, the modified expression is no longer as simple an analytical model; in this regard, other models, such as those based on the principle of corresponding states, achieve a better fit with roughly the same work.[citation needed] Even with its acknowledged shortcomings, the pervasive use of the Van der Waals equation in standard university physical chemistry textbooks makes clear its importance as a pedagogic tool to aid understanding fundamental physical chemistry ideas involved in developing theories of vapour–liquid behavior and equations of state.[14][15][16] In addition, other (more accurate) equations of state such as the Redlich–Kwong and Peng–Robinson equations of state are essentially modifications of the Van der Waals equation of state.
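To make the "essentially modifications" remark concrete, the standard textbook forms are written side by side below. Each keeps a repulsive term of the form RT/(V_m − b) and changes only the attractive term. Note that a and b are fitted separately for each model, so the same symbols do not carry the same values across rows, and the Peng–Robinson factor α(T) is a temperature-dependent correlation whose fitted form is not reproduced here.

```latex
\begin{aligned}
\text{Van der Waals:} \quad & P = \frac{RT}{V_m - b} - \frac{a}{V_m^2} \\
\text{Redlich--Kwong:} \quad & P = \frac{RT}{V_m - b} - \frac{a}{\sqrt{T}\, V_m (V_m + b)} \\
\text{Peng--Robinson:} \quad & P = \frac{RT}{V_m - b} - \frac{a\,\alpha(T)}{V_m^2 + 2 b V_m - b^2}
\end{aligned}
```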

19 notes

Text

Jenny Clausius takes on Britain Hart at BKFC 39, and she seeks revenge

#BKFC #BKFC39 #JennyClausius #BritainHart

On March 24th, 2023, the highly anticipated Bare Knuckle Fighting Championship (BKFC) 39 event will take place in Norfolk, Virginia. One of the most exciting fights on the card is the rematch between Jenny Clausius and Britain Hart. Clausius, who is seeking revenge, lost to Hart via TKO at BKFC 19 in 2021.

For Jenny Clausius, this fight is not just about avenging her loss. It’s also about…

1 note
