#opportunity cost neural networks


#opportunity#cost#neuralnetworks#neural network#neurals#networks#neural networks#opportunity cost#opportunity cost neural networks



real actual nonhostile question with a preamble: i think a lot of artists consider NN-generated images as an existential threat to their ability to use art as a tool to survive under capitalism, and it's frequently kind of disheartening to think about what this is going to do to artists who rely on commissions / freelance storyboarding / etc. i don't really care whether or not nn-generated images are "true art" because like, that's not really important or worth pursuing as a philosophical question, but i also don't understand how (under capitalism) the rise of it is anything except a bleak portent for the future of artists

thanks for asking! i feel like it's good to address the idea of the existential threat. the fears and feelings artists have about being replaced are real, but personally i'm cynical about how much of a threat people make it out to be. i also wanna say my piece in defense of discussions about art and meaning.

the threat of automation, and the implementation of technologies that make certain jobs obsolete, is not new at all in labor history or in art labor history. industrial printing, stock photography, art assets, cgi, digital art programs, etc. are all technologies that cut down on art jobs in areas where, at one point, you couldn't cut corners or labor. so why do neural networks feel like more of a threat? one reason is that they do what the metaphorical "make an image" button does, the one that has been invoked countless times in arguments about digital art programs. if the fake button that was made up to win an argument about the validity of digital art actually exists, then what will become of digital art? so people panic.

but i think that we need to be realistic about what neural net image generation does. no matter how insanely huge the data pool it pulls from is, the medium is, in the simplest terms, limited to arrangements of pixels that are statistically likely to appear together given certain keywords, and we only recognize the output as symbols because of pattern recognition. a neural net doesn't know about gestalt, visual appeal, continuity, form, composition, etc. there are whole areas of the art industry that ai art serves especially badly, like sequential art, scientific illustration, drafting, graphic design, etc. and regardless, neural nets are tools. they need human oversight to work and to deal with the products generated. and because of the medium's limitations and inherent jankiness, it's less work to hire a human professional to just do the full job than to try and wrangle a neural net.

as for the areas of the art industry that are at risk of losing job opportunities to ai, like freelance illustration and concept art: they are seen as replaceable by an industry that already overworks, underpays, and treats them as disposable. with or without ai, artists work in precarized conditions without the protections of organized labor, even more so in the case of freelancers. the fault is not with ai itself, but with how it's wielded as a tool by capital to threaten workers. the current entertainment industry strikes are in part about this, and if the new wga contract says anything, it's that a favorable outcome is possible. pressure capital to let go of the tools, and question everyone who proposes increased copyright enforcement as the solution. intellectual property serves capital, not the working artist.

however, automation and ai implementation are not unique to the art industry. service workers, manufacturing workers and many others are also at risk of losing their jobs to further automation because of capital's interest in maximizing profits at the cost of human lives, but you don't see as much online outrage because those jobs are seen as unskilled and uncreative. the artist holds a prestige position in society: if creativity is what makes us human, the artist symbolizes this belief, so if automation comes for the artist, people feel like all is lost. but art is an industry like any other, and artists are not of more intrinsic value than any manual laborer. the prestige position of the artist also leads artists to act against their class interest by cooperating with corporations and promoting ip law (which is a bad thing; take the shitshow of the music industry for example), and artists feel owed upward social mobility for the perceived merits of creativity and artistic genius.

as an artist and a marxist, i say we need to exercise thinking about art, meaning and the role of the artist. the average prompt writer churning out big titty thomas kinkade paintings and posting on twitter about how human-made art will become obsolete doesn't know how to think about art. art isn't about making pretty pictures; it's about communication. the average fanartist underselling their work doesn't know that either. discussions on art and meaning may look circular and frustrating if you come in bad faith, but they're what exercise critical thinking and nuance.


Unleashing Gen AI: A Revolution in the Audio-Visual Landscape

Artificial Intelligence (AI) has consistently pushed the boundaries of what is possible in various industries, but now we stand at the brink of a transformative leap: Generative AI, or Gen AI. Gen AI promises to reshape the audio-visual space in profound ways, and its impact extends to a plethora of industries. In this blog post, we will delve into the essence of Gen AI and explore how it can bring about a sea change in numerous sectors.

Decoding Generative AI (Gen AI)

Generative AI is the frontier of AI where machines create content that is remarkably human-like. Harnessing neural networks such as Recurrent Neural Networks (RNNs), Generative Adversarial Networks (GANs) and, more recently, transformers and diffusion models, Gen AI can generate content that is not just contextually accurate but also creatively ingenious.

The Mechanics of Gen AI

Gen AI operates by dissecting and imitating patterns, styles, and structures from colossal datasets. These learned insights then fuel the creation of content, whether it be music, videos, images, or even deepfake simulations. The realm of audio-visual content is undergoing a monumental transformation courtesy of Gen AI.
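As a deliberately tiny illustration of "learn patterns from data, then generate new content from them", here is a character-level Markov chain in Python. It is a far simpler technique than the RNNs or GANs named above and is not how production Gen AI systems work; the corpus and parameters are made up for illustration.

```python
import random
from collections import defaultdict


def train_markov(text: str, order: int = 3) -> dict:
    """Learn patterns: record which character follows each `order`-length context."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        context = text[i:i + order]
        model[context].append(text[i + order])
    return dict(model)


def generate(model: dict, seed: str, length: int, rng: random.Random) -> str:
    """Imitate patterns: extend `seed` by sampling what followed the same context in training.

    `seed` must be exactly as long as the `order` the model was trained with.
    """
    order = len(seed)
    out = seed
    for _ in range(length):
        followers = model.get(out[-order:])
        if not followers:  # context never seen in training, so there is nothing to imitate
            break
        out += rng.choice(followers)
    return out


if __name__ == "__main__":
    corpus = "the cat sat on the mat. the cat ate the rat. "
    model = train_markov(corpus, order=3)
    print(generate(model, "the", 40, random.Random(0)))
```

Because this model can only recombine contexts it has already seen, its output closely echoes the training text; large neural models generalize much better, but the train-then-sample structure is the same.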

Revolutionizing the Audio-Visual Realm

The influence of Generative AI in the audio-visual sphere is profound, impacting several dimensions of content creation and consumption:

1. Musical Masterpieces:

Gen AI algorithms have unlocked the potential to compose music that rivals the creations of human composers. They can effortlessly dabble in diverse musical genres, offering a treasure trove of opportunities for musicians, film score composers, and the gaming industry. Automated music composition opens the doors to boundless creative possibilities.

2. Cinematic Magic:

In the world of film production, Gen AI can conjure up realistic animations, special effects, and entirely synthetic characters. It simplifies video editing, making it more efficient and cost-effective. Content creators, filmmakers, and advertisers are poised to benefit significantly from these capabilities.

3. Artistic Expression:

Gen AI is the artist's secret tool, generating lifelike images and artworks. It can transform rudimentary sketches into professional-grade illustrations and graphics. Industries like fashion, advertising, and graphic design are harnessing this power to streamline their creative processes.

4. Immersive Reality:

Gen AI plays a pivotal role in crafting immersive experiences in virtual and augmented reality. It crafts realistic 3D models, environments, and textures, elevating the quality of VR and AR applications. This technological marvel has applications in gaming, architecture, education, and beyond.

Industries Set to Reap the Rewards

The versatile applications of Generative AI are a boon to numerous sectors:

1. Entertainment Industry:

Entertainment stands as a vanguard in adopting Gen AI. Film production, music composition, video game development, and theme park attractions are embracing Gen AI to elevate their offerings.

2. Marketing and Advertising:

Gen AI streamlines content creation for marketing campaigns. It generates ad copies, designs visual materials, and crafts personalized content, thereby saving time and delivering more engaging and relevant messages.

3. Healthcare and Medical Imaging:

In the realm of healthcare, Gen AI enhances medical imaging, aids in early disease detection, and generates 3D models for surgical planning and training.

4. Education:

Gen AI facilitates the creation of interactive learning materials, custom tutoring content, and immersive language learning experiences with its natural-sounding speech synthesis.

5. Design and Architecture:

Architects and designers benefit from Gen AI by generating detailed blueprints, 3D models, and interior design concepts based on precise user specifications.

The Future of Gen AI

The journey of Generative AI is far from over, and the future holds promise for even more groundbreaking innovations. However, it is imperative to navigate the ethical and societal implications thoughtfully. Concerns related to misuse, privacy, and authenticity should be addressed, and the responsible development and application of Gen AI must be prioritized.

In conclusion, Generative AI is on the cusp of redefining the audio-visual space, promising an abundance of creative and pragmatic solutions across diverse industries. Embracing and responsibly harnessing the power of Gen AI is the key to ushering these industries into a new era of ingenuity and innovation.

#artificial intelligence#technology#generative ai#audiovisual#future#startup#healthcare#education#architecture#ai#design#entertainment


ChatGPT in the agricultural sector: advantages and opportunities

Artificial intelligence technologies are increasingly being used in different sectors of the economy, including agriculture. One of the promising tools is ChatGPT, a generative artificial intelligence model that can be used to automate a number of processes in the agricultural sector.

In this article, we will look at how ChatGPT can be used in the agricultural sector with its advantages and possibilities.

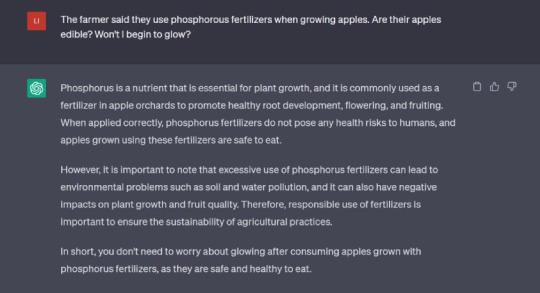

So far, ChatGPT cannot generate images, so we made this image using Kandinsky 2.1. According to the neural network, the farmer of the future manages their farm using artificial intelligence.

ChatGPT technology overview

ChatGPT is a chatbot that can take part in a dialogue, find errors in programming code, write poetry and scripts, and even argue. We will not go into technical details, but if you are interested, you can read about them here or here. Our goal is to consider the capabilities of this technology in the agricultural sector.

ChatGPT was trained on a selection of text from the web and then refined using Reinforcement Learning from Human Feedback (RLHF). The neural network went through multiple rounds of retraining to make its answers more accurate.

The main goal of developing ChatGPT was to make artificial intelligence as easy to use, correct and ‘human’ as possible. The system provides ample opportunities to automate various processes, reduce errors and improve work efficiency.

ChatGPT has many features and skills:

• Generating phrases, sentences or texts to create content for websites or advertisements.

• Answering questions based on the information the neural network was trained on.

• Solving problems, for example, by formulating a specific problem and suggesting possible solutions.

• Generating various types of content, including advertisements, social media posts, news articles and other texts.

• Completing sentences and phrases automatically in applications when the user enters a text into a search box or writes an email.

• Creating various kinds of chatbots that can help in customer service: answering questions, learning about customer preferences or making recommendations.

• Extracting information from texts, as well as identifying the most important information in the text.

These are just some of the features of ChatGPT and similar applications. Developers can use this technology to develop innovative applications that not only save time and resources, but also provide a deeper understanding of user needs and preferences.

How AI is being used in agriculture today

Artificial intelligence is becoming more popular and coming into use in the agricultural sector. Today, artificial intelligence technologies offer an opportunity to solve many problems that arise in the agricultural sector, from increasing yields to reducing production costs.

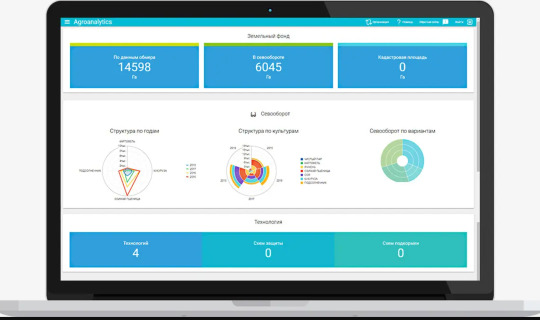

SmartAgro

SmartAGRO Agroanalytics interface

One of the companies using artificial intelligence to improve production is SmartAGRO, a Russian IT company specializing in the development and implementation of intelligent systems that solve complex problems in industrial agriculture.

Their core product, Agroanalytics-IoT, automates up to 90% of the industry’s business processes, which significantly reduces crop losses.

The company uses artificial intelligence algorithms to predict the harvest throughout the growing season, so that processes can be adjusted if something goes wrong.

SR Data

SR Data solution that allows ordering satellite images (photographs of agricultural land)

Another developer of intelligent solutions for the agricultural sector is SR Data, a private Russian tech company that provides high and ultra-high resolution satellite images and analyzes them using mathematical modeling and artificial intelligence systems. In addition to optical images, the company uses radar images to minimize the negative impact of weather on the quality of information extracted from the imagery. This is particularly useful in the agricultural sector, as it allows specialists to receive high-quality data exactly when it is needed. However, vegetation indexes cannot be calculated directly from radar images, so the company uses AI algorithms to predict them.
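To see why radar alone is not enough, consider the most widely used vegetation index, NDVI, which is computed from optical reflectance in the red and near-infrared (NIR) bands: NDVI = (NIR - Red) / (NIR + Red). Radar sensors do not measure those bands, so an index like this cannot be read directly off a radar image. The Python sketch below assumes reflectance values already calibrated to the 0 to 1 range; the article does not say which indexes SR Data actually predicts.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index from near-infrared and red reflectance.

    Values near +1 indicate dense green vegetation; values near 0 or below
    indicate bare soil, water or clouds.
    """
    total = nir + red
    if total == 0:
        raise ValueError("NIR + red reflectance must be non-zero")
    return (nir - red) / total


if __name__ == "__main__":
    # Hypothetical reflectance values for one pixel of a healthy crop.
    print(round(ndvi(nir=0.50, red=0.08), 3))
```

Healthy plants reflect strongly in NIR and absorb red light, which is why the difference between the two bands is a useful proxy for plant vigor.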

Autonomous Greenhouse Challenge

Vertical farming

A good example of the use of artificial intelligence in the agricultural sector is the automated greenhouse. Using up-to-date machine learning and data analysis systems, AI can help optimize plant management processes to boost production and reduce costs. The solution described here was developed in 2022 by a team from Russian Agricultural Bank and Moscow Institute of Physics and Technology as part of the Autonomous Greenhouse Challenge.

By analyzing data from sensors and monitors in the greenhouse, including light, humidity, temperature and other parameters, AI algorithms determined the optimal conditions for plant growth. The system then automatically controlled and adjusted the environmental parameters. This kind of greenhouse management system helps specialists optimize plant growth conditions and get higher yields.
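The article does not describe the team's control algorithm. As a hedged illustration of the "measure, compare with the optimum, adjust" loop, here is a simple proportional controller for a single parameter (temperature) in Python. The setpoint, gain and the toy heat-balance model are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Setpoint:
    target: float  # desired value, e.g. air temperature in degrees C (hypothetical)
    gain: float    # how strongly the actuator reacts to the error (hypothetical tuning)


def control_step(reading: float, sp: Setpoint) -> float:
    """Proportional control: heater power grows with the gap below the target, clamped to 0..1."""
    error = sp.target - reading
    return max(0.0, min(1.0, sp.gain * error))


def simulate(start: float, sp: Setpoint, steps: int,
             heat_rate: float = 2.0, loss: float = 0.1, ambient: float = 15.0) -> list:
    """Toy greenhouse: each step the heater adds heat and the room loses heat to the outside."""
    temps = [start]
    t = start
    for _ in range(steps):
        heater = control_step(t, sp)  # sensor reading -> actuator command
        t = t + heat_rate * heater - loss * (t - ambient)
        temps.append(t)
    return temps


if __name__ == "__main__":
    history = simulate(start=15.0, sp=Setpoint(target=24.0, gain=0.5), steps=30)
    print([round(x, 2) for x in history])
```

With these made-up parameters the temperature climbs from 15 °C and settles near 23.2 °C rather than exactly 24 °C. That steady-state offset is a known property of pure proportional control; practical systems usually add integral action or a learned model of the greenhouse.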

These examples show the benefits of using AI in the agricultural sector. However, ChatGPT is not a typical AI algorithm for this field, and its capabilities here are less obvious. Let us try to identify them.

We asked ChatGPT about its role in agriculture, and this is what we got:

• Data reporting automation and documentation generation. ChatGPT can automatically produce reports on crops and harvests and generate accounting reports and other documents, significantly reducing the time and resources required for these tasks.

• Improved communication between farmers and consumers. ChatGPT helps create chatbots that improve communication between farmers and consumers. Customers can ask questions about growing methods and product quality, and farmers can get feedback on their products and services, which helps improve product quality and transform the approach to production.

• Weather forecasting and vegetation management. ChatGPT algorithms can be used to obtain weather reports and manage vegetation. This requires collecting data on weather, soil moisture, pests, and other parameters, and then using these data to develop models that help predict the best time to plant or harvest, as well as the most effective pest control methods.

• Pattern and organic material recognition. ChatGPT algorithms can be used for pattern and organic material recognition, which helps determine which labels are needed for each package and which shipping method is best. Specialists can also use ChatGPT to detect irrigation system leaks and other technical problems.
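Neither the article nor ChatGPT's answer specifies which models would predict planting or harvest timing. One classic, simple input to such models is growing degree days (GDD), accumulated from daily maximum and minimum temperatures. The Python sketch below uses the basic averaging method; the base temperature, threshold and forecast values are placeholders that in practice depend on the crop and variety.

```python
from typing import List, Optional, Tuple


def growing_degree_days(t_max: float, t_min: float, t_base: float) -> float:
    """Daily GDD: how far the day's mean temperature exceeds the crop's base temperature (never negative)."""
    return max(0.0, (t_max + t_min) / 2 - t_base)


def day_threshold_reached(daily_temps: List[Tuple[float, float]], t_base: float,
                          threshold: float) -> Optional[int]:
    """Return the 1-based day on which accumulated GDD first reaches `threshold`, or None if it never does."""
    total = 0.0
    for day, (t_max, t_min) in enumerate(daily_temps, start=1):
        total += growing_degree_days(t_max, t_min, t_base)
        if total >= threshold:
            return day
    return None


if __name__ == "__main__":
    # Hypothetical forecast: (max, min) temperatures in degrees C for the coming days.
    forecast = [(18.0, 6.0), (21.0, 9.0), (24.0, 12.0), (25.0, 13.0), (22.0, 10.0)]
    print(day_threshold_reached(forecast, t_base=10.0, threshold=20.0))
```

A language model could explain or summarize a result like this, but the calculation itself relies on weather data collected as described above.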

Our opinion on using ChatGPT in agriculture

The previous section covers various use cases for ChatGPT in agriculture, which it has generated itself. However, some of them require fine-tuning the neural network or using it as an addition to the existing instruments.

The application we see as the most realistic is improving communication between farmers and consumers. Chatbots would let buyers ask questions about growing methods and product quality, and let farmers get feedback on their products and services, helping them improve product quality and rethink their approach to production.
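Chat models such as ChatGPT are typically driven by a list of role-tagged messages (`system`, `user`, `assistant`), which is the format OpenAI's Chat Completions API accepts. The Python sketch below only builds that list for a hypothetical farm-shop chatbot; the prompt wording is illustrative, and actually sending the messages to a model is left out.

```python
import json

# Hypothetical system prompt for a farm shop assistant; wording is illustrative only.
SYSTEM_PROMPT = (
    "You are an assistant for a small family farm. Answer customers' questions "
    "about how our produce is grown and how to choose it. If you do not know an "
    "answer, say so and offer to pass the question to the farmer."
)


def build_messages(history: list, question: str) -> list:
    """Assemble a chat transcript: one system message, prior (user, assistant) turns, then the new question."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for user_text, assistant_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    print(json.dumps(build_messages([], "How do I choose ripe apples?"), indent=2))
```

Keeping the full history in the list is what lets the bot answer follow-up questions in context; the system message is where a farm would put its own facts and the rule to hand unknown questions to a person.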

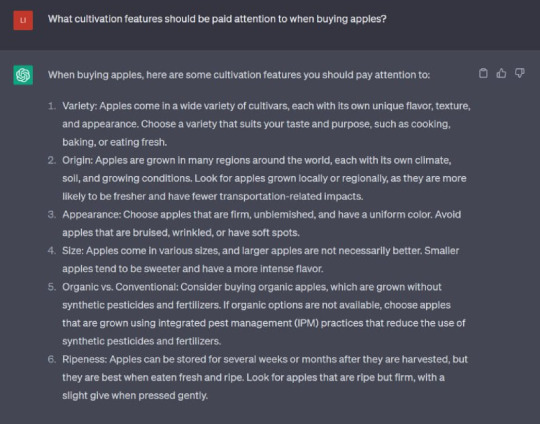

For example, we asked ChatGPT how to choose apples:

We also asked it about the safety of phosphate fertilizers:

ChatGPT successfully answered our questions and helped us choose and buy apples from the farm, which demonstrated its usefulness.

What others think about ChatGPT applicability in the agricultural sector

To find more ideas about the possibilities of using ChatGPT in the agricultural sector, we conducted a survey and got the following results:

• Most people are suspicious about ChatGPT technology because of the possible false information it provides, its lack of responsibility and the absence of direct interaction with ‘real-world’ processes.

• Some people see benefits in using ChatGPT in the agricultural sector. After some adjustment, the neural network could be used to create and fill out documentation. It could also act as an AI assistant that combines its own knowledge with data from the Internet, as ChatGPT Plus does with its plugins.

• There are those who have not yet figured out how the technology works and believe that the agricultural sector does not need new technologies, but needs more labor force instead.

The survey showed that the role of ChatGPT in the agricultural sector is ambiguous: most people are skeptical about the technology, but some see its benefits. Its use in agriculture requires further, in-depth study.

Real case of using ChatGPT to grow potatoes

However, while some are skeptical of new technologies, others are enthusiastic about testing them. One of the recent tests is growing potatoes using ChatGPT.

You can read about this experiment in the project’s Telegram channel. ChatGPT responds to the user’s requests about growing seed potatoes in an aeroponic system and calls itself a ‘professional agronomist and AI botanist’.

The neural network will provide information about nutrient mixes, watering cycles, lighting conditions, pH and EC target values, and take into account the effect of pH adjusters on the nutrient solution. If needed, it will also ask for additional information about a particular potato variety, local climate, and available resources.
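The post does not show what the bot actually outputs. As a hedged sketch of how pH and EC (electrical conductivity) target values might be used once someone has chosen them, here is a small Python check that compares readings with target ranges and lists corrective actions. The range values in the example are placeholders, not agronomic recommendations.

```python
from dataclasses import dataclass


@dataclass
class TargetRange:
    low: float
    high: float


def check_solution(ph: float, ec: float, ph_target: TargetRange, ec_target: TargetRange) -> list:
    """Compare nutrient-solution readings with target ranges and list the corrective actions needed."""
    actions = []
    ph_adjusted = False
    if ph < ph_target.low:
        actions.append("raise pH")
        ph_adjusted = True
    elif ph > ph_target.high:
        actions.append("lower pH")
        ph_adjusted = True
    if ec < ec_target.low:
        actions.append("increase nutrient concentration")
    elif ec > ec_target.high:
        actions.append("dilute with fresh water")
    if ph_adjusted:
        # pH adjusters add ions to the solution, so EC can shift after dosing.
        actions.append("re-measure EC after adjusting pH")
    return actions


if __name__ == "__main__":
    # Placeholder target ranges for illustration only.
    print(check_solution(7.0, 2.5, TargetRange(5.5, 6.5), TargetRange(1.0, 2.0)))
```

The last rule reflects the point made above: adjusting pH is not independent of the rest of the nutrient solution, so one correction can call for another measurement.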

ChatGPT also recognizes fertilizer requirements and requests additional information about available fertilizers.

The author of the Telegram channel shows test-tube potato plants, saying that the hands in the picture will work together with AI.

The prospects of AI technology in the agricultural sector

Artificial intelligence in the agricultural sector can be a useful tool to improve product quality and increase production efficiency. AI can be used to process and analyze data, manage various processes, develop chatbots and other tools to help process automation.

ChatGPT is one of the AI systems that can be used in the agricultural sector to process and analyze text data and develop chatbots.

However, keep in mind that ChatGPT may not give reliable answers, which can impair decision making. When using a neural network, specialists need to be careful and check the information using other sources.

In addition, ChatGPT cannot be trained on a chosen database, which limits its use in some business areas. This problem can be partially solved by using open-source models, but they most likely will not be able to offer the required quality.

In general, ChatGPT can improve efficiency and productivity in the agricultural sector, but its use requires caution and compliance with legal regulations.


Some Known Factual Statements About "Machine Learning in Finance: Opportunities and Challenges Ahead"

Machine learning is a rapidly growing field that has become an integral part of modern technology. From voice assistants like Siri and Alexa to fraud detection systems in banks, machine learning is used in a wide variety of applications. If you're interested in getting started with machine learning, this beginner's guide will give you the basics.

Understand What Machine Learning Is

Machine learning is a type of artificial intelligence that enables computers to learn from data without being explicitly programmed. It involves creating algorithms that can identify patterns in data, make predictions based on those patterns, and improve their accuracy over time.

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

Supervised Learning: This type of machine learning involves providing the computer with labeled data (data that has already been categorized or classified). The algorithm then uses this data to learn how to classify new data.

Unsupervised Learning: In this type of machine learning, the computer is given unlabeled data and must find patterns or similarities on its own, without any guidance.

Reinforcement Learning: This type of machine learning involves the computer taking actions in an environment to maximize its rewards while minimizing its penalties. The algorithm learns through trial and error until it arrives at optimal behavior.
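To make the difference between the first two types concrete, here is a minimal pure-Python sketch: a nearest-centroid classifier learns from labeled points (supervised), while k-means groups the same points without labels (unsupervised). Both are simple textbook techniques chosen for illustration; the k-means initialization (first k points) is a naive assumption that real libraries improve on.

```python
import math


def nearest_centroid_fit(points, labels):
    """Supervised: average the labeled examples of each class into one centroid."""
    sums = {}
    for (x, y), label in zip(points, labels):
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def nearest_centroid_predict(centroids, point):
    """Assign a new point to the class whose centroid is closest."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


def kmeans(points, k, iterations=10):
    """Unsupervised: group unlabeled points into k clusters (initialized from the first k points)."""
    centers = list(points[:k])
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster; keep it in place if the cluster is empty.
        centers = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
    return centers


if __name__ == "__main__":
    pts = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
    model = nearest_centroid_fit(pts, ["a", "a", "b", "b"])
    print(nearest_centroid_predict(model, (1.0, 1.0)), kmeans(pts, k=2))
```

The classifier needs the labels "a" and "b" to learn anything, while k-means discovers the same two groups from the coordinates alone but cannot name them.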

Choose Your Programming Language

Python is one of the most popular programming languages for machine learning due to its simplicity and large community support. Other languages frequently used for machine learning include R, Java, C++, and MATLAB.

Set Up Necessary Tools

Once you've chosen your programming language, you'll need to set up some tools, such as Jupyter Notebook or Spyder for Python users. These tools provide an environment where you can easily write code and evaluate your models.

Understand Data Preparation

Data preparation is an important step in any machine learning project. It includes cleaning the data (for example, removing missing values), transforming the data (converting categorical variables into numerical ones), scaling the features (ensuring all features are on the same scale), and splitting the data into training and testing sets.
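The four preparation steps can be sketched in plain Python as follows, assuming data arrives as a list of dicts with hypothetical column names. One deliberate deviation from the order listed above: the split happens before scaling and encoding, and the scaler and encoder are fitted on the training rows only, so that no information from the test set leaks into training. This is a common best practice rather than something the guide specifies.

```python
import random


def prepare(rows, numeric, categorical, test_fraction=0.25, seed=0):
    """Clean, split, scale and one-hot encode a list of dict rows; returns (train, test) feature vectors."""
    # Cleaning: drop rows with a missing value in any column we use.
    clean = [r for r in rows if all(r.get(c) is not None for c in numeric + categorical)]

    # Splitting: shuffle a copy, then hold out a fraction for testing.
    rng = random.Random(seed)
    clean = clean[:]
    rng.shuffle(clean)
    n_test = int(len(clean) * test_fraction)
    test_rows, train_rows = clean[:n_test], clean[n_test:]

    # Fit min-max bounds and category lists on the training rows only.
    bounds = {c: (min(r[c] for r in train_rows), max(r[c] for r in train_rows)) for c in numeric}
    categories = {c: sorted({r[c] for r in train_rows}) for c in categorical}

    def encode(r):
        vec = []
        for c in numeric:  # scaling: map each numeric column to [0, 1] using training bounds
            lo, hi = bounds[c]
            vec.append((r[c] - lo) / (hi - lo) if hi > lo else 0.0)
        for c in categorical:  # transforming: one-hot encode categories seen in training
            vec.extend(1.0 if r[c] == value else 0.0 for value in categories[c])
        return vec

    return [encode(r) for r in train_rows], [encode(r) for r in test_rows]


if __name__ == "__main__":
    data = [
        {"size": 1.0, "color": "red"},
        {"size": 3.0, "color": "blue"},
        {"size": None, "color": "red"},  # dropped during cleaning
        {"size": 2.0, "color": "red"},
        {"size": 5.0, "color": "blue"},
    ]
    print(prepare(data, numeric=["size"], categorical=["color"]))
```

Libraries such as scikit-learn provide the same steps as ready-made components, but the logic is no more than this.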

Select a Model

There are many machine learning models to choose from, including linear regression, decision trees, neural networks, and support vector machines. The choice of model depends on the type of problem you're trying to solve and the type of data you have.

Train Your Model

After selecting your model, it's time to train it on your data. This involves feeding the algorithm your labeled or unlabeled data and adjusting its parameters until it accurately classifies or predicts new data.
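Here is what "adjusting its parameters" looks like for the simplest case, a one-variable linear regression trained by batch gradient descent in plain Python. The data and settings used in the usage note are made up for illustration.

```python
def train_linear(xs, ys, learning_rate=0.01, epochs=1000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = (2 / n) * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = (2 / n) * sum((w * x + b - y) for x, y in zip(xs, ys))
        # Step each parameter a little in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    print(train_linear([0, 1, 2, 3, 4], [1, 3, 5, 7, 9], learning_rate=0.05, epochs=2000))
```

The points (0, 1) through (4, 9) lie on y = 2x + 1, and with learning_rate=0.05 and epochs=2000 the sketch returns values very close to w = 2 and b = 1. Neural networks are trained the same way, just with many more parameters.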

Evaluate Your Model

Once your model is trained, you'll need to evaluate its performance using metrics such as accuracy, precision, recall, and F1-score, among others. Comparing these metrics on the training data and on the test data will help you determine whether your model is overfitting (performing well on training data but poorly on new data) or underfitting (performing poorly on both training and new data).
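The four metrics named above can be computed for a binary classifier with a few counts: true positives (tp), false positives (fp) and false negatives (fn). A minimal plain-Python sketch, with made-up labels in the test:

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)                      # share of all predictions that are right
    precision = tp / (tp + fp) if tp + fp else 0.0       # of predicted positives, how many are real
    recall = tp / (tp + fn) if tp + fn else 0.0          # of real positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    print(classification_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```

Running it once on training predictions and once on test predictions gives the comparison described above: a large gap between the two is a typical sign of overfitting.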

Tune Your Model

If your model is underperforming or overfitting, you may need to tune its hyperparameters. Hyperparameters are settings that affect the behavior of the algorithm, such as the learning rate or the number of hidden layers in a neural network.
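A simple way to tune hyperparameters is grid search: train once per combination and keep the one that scores best on a separate validation set. The plain-Python sketch below tunes the learning rate and number of epochs of a small gradient-descent linear regression; the candidate values and data are hypothetical.

```python
import math


def train_linear(xs, ys, learning_rate, epochs):
    """Batch gradient descent for y ~ w*x + b (mean squared error loss)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= learning_rate * (2 / n) * sum(e * x for e, x in zip(errors, xs))
        b -= learning_rate * (2 / n) * sum(errors)
    return w, b


def mse(w, b, xs, ys):
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    return sum(e * e for e in errors) / len(errors)


def grid_search(train, val, learning_rates, epoch_options):
    """Train once per hyperparameter combination; keep the one with the lowest validation MSE."""
    best = None  # (validation_mse, learning_rate, epochs)
    for lr in learning_rates:
        for epochs in epoch_options:
            w, b = train_linear(*train, learning_rate=lr, epochs=epochs)
            score = mse(w, b, *val)
            if not math.isfinite(score):  # learning rate too large: training diverged
                continue
            if best is None or score < best[0]:
                best = (score, lr, epochs)
    return best


if __name__ == "__main__":
    train = ([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
    val = ([4.0, 5.0], [9.0, 11.0])
    print(grid_search(train, val, [0.001, 0.05, 1.0], [100, 2000]))
```

A learning rate that is too small barely moves the parameters, one that is too large makes training blow up, and the grid search finds a workable value in between. The validation set used for tuning should be kept separate from the final test set.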

Deploy Your Model

Finally, when you're happy with your model's performance, it's time to deploy it in a real-world setting. This could involve integrating it into an existing application or building a new app around it.
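One minimal deployment pattern is to export the trained parameters in a portable format and load them inside the application that serves predictions. The sketch below assumes the simple linear model (w, b) from the training step and uses JSON; the `"model": "linear"` tag is a hypothetical convention, and real deployments usually rely on a framework's own save format and a proper serving layer.

```python
import json


def export_model(w: float, b: float) -> str:
    """Serialize learned parameters; the string could be saved to a file or object store."""
    return json.dumps({"model": "linear", "w": w, "b": b})


def load_predictor(serialized: str):
    """Rebuild a predict(x) function inside the application that serves predictions."""
    params = json.loads(serialized)
    if params.get("model") != "linear":
        raise ValueError("unsupported model type")
    w, b = params["w"], params["b"]
    return lambda x: w * x + b


if __name__ == "__main__":
    predict = load_predictor(export_model(2.0, 1.0))
    print(predict(3.0))
```

Separating "export" from "load" means training and serving can run on different machines, with only the small JSON document passed between them.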

Conclusion:

Machine learning can seem overwhelming at first glance, but following these steps can make it more manageable for beginners. By understanding what machine learning is, choosing the right programming language and tools for your project, preparing your data properly, selecting an appropriate algorithm, tuning hyperparameters when necessary, and deploying models in real-world environments, you can get started quickly. With practice and patience, anyone can build skills in this exciting field!


Jay Dawani is Co-founder & CEO of Lemurian Labs – Interview Series

New Post has been published on https://thedigitalinsider.com/jay-dawani-is-co-founder-ceo-of-lemurian-labs-interview-series/


Jay Dawani is Co-founder & CEO of Lemurian Labs. Lemurian Labs is on a mission to deliver affordable, accessible, and efficient AI computers, driven by the belief that AI should not be a luxury but a tool accessible to everyone. The founding team at Lemurian Labs combines expertise in AI, compilers, numerical algorithms, and computer architecture, united by a single purpose: to reimagine accelerated computing.

Can you walk us through your background and what got you into AI to begin with?

Absolutely. I’d been programming since I was 12, building my own games and such, but I actually got into AI when I was 15 because of a friend of my father’s who was into computers. He fed my curiosity and gave me books to read such as Von Neumann’s ‘The Computer and the Brain’, Minsky’s ‘Perceptrons’, and Russell and Norvig’s ‘Artificial Intelligence: A Modern Approach’. These books influenced my thinking a lot, and it felt almost obvious then that AI was going to be transformative and I just had to be a part of this field.

When it came time for university, I really wanted to study AI, but I didn’t find any universities offering that, so I decided to major in applied mathematics instead. A little while after I got to university, I heard about AlexNet’s results on ImageNet, which was really exciting. I had a now-or-never moment and went full bore into reading every paper and book on neural networks I could get my hands on, and sought out all the leaders in the field to learn from them, because how often do you get to be there at the birth of a new industry and learn from its pioneers?

Very quickly I realized I don’t enjoy research, but I do enjoy solving problems and building AI enabled products. That led me to working on autonomous cars and robots, AI for material discovery, generative models for multi-physics simulations, AI based simulators for training professional racecar drivers and helping with car setups, space robots, algorithmic trading, and much more.

Now, having done all that, I’m trying to rein in the cost of AI training and deployment, because that will be the greatest hurdle we face on our path to a world where every person and company can access and benefit from AI in the most economical way possible.

Many companies working in accelerated computing have founders that have built careers in semiconductors and infrastructure. How do you think your past experience in AI and mathematics impacts your ability to understand the market and compete effectively?

I actually think not coming from the industry gives me an outsider’s advantage. I have often found that not knowing industry norms or conventional wisdom gives one the freedom to explore more freely and go deeper than most others would, because you’re unencumbered by biases.

I have the freedom to ask ‘dumber’ questions and test assumptions in a way that most others wouldn’t because a lot of things are accepted truths. In the past two years I’ve had several conversations with folks within the industry where they are very dogmatic about something but they can’t tell me the provenance of the idea, which I find very puzzling. I like to understand why certain choices were made, and what assumptions or conditions were there at that time and if they still hold.

Coming from an AI background, I tend to take a software view: I look at where the workloads are today and all the possible ways they may change over time, and I model the entire ML pipeline for training and inference to understand the bottlenecks, which tells me where the opportunities to deliver value are. And because I come from a mathematical background, I like to model things to get as close to the truth as I can and have that guide me. For example, we have built models that calculate system performance and total cost of ownership, which let us measure the benefit we can bring to customers with software and/or hardware and better understand our constraints and the different knobs available to us, along with dozens of other models for various things. We are very data-driven, and we use the insights from these models to guide our efforts and tradeoffs.
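Dawani does not describe how Lemurian's models work. Purely as a hedged illustration of the kind of arithmetic a total-cost-of-ownership (TCO) model involves, here is a simplified Python sketch that turns hardware price, power draw and throughput into a cost per million units of work (for example, tokens). Every field name, and the assumption that the system draws its full power for its whole lifetime, is hypothetical; real TCO models also account for cooling, networking, facilities and staff.

```python
from dataclasses import dataclass


@dataclass
class SystemSpec:
    purchase_price: float  # up-front hardware cost, e.g. in USD (hypothetical)
    lifetime_years: float  # period over which the hardware is amortized
    power_kw: float        # average power draw, assumed constant for the whole lifetime
    throughput: float      # units of work per second at full utilization (hypothetical unit)


def cost_per_million_units(spec: SystemSpec, electricity_per_kwh: float, utilization: float) -> float:
    """Amortized hardware plus energy cost for one million units of work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    hours = spec.lifetime_years * 365 * 24
    energy_cost = spec.power_kw * hours * electricity_per_kwh
    total_cost = spec.purchase_price + energy_cost
    total_units = spec.throughput * utilization * hours * 3600
    return total_cost / total_units * 1_000_000


if __name__ == "__main__":
    spec = SystemSpec(purchase_price=30_000.0, lifetime_years=4.0, power_kw=1.0, throughput=2_000.0)
    print(cost_per_million_units(spec, electricity_per_kwh=0.10, utilization=0.6))
```

Even this toy version shows the "knobs" Dawani mentions: doubling throughput through better software halves the cost per unit just as surely as cheaper hardware would.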

It seems like progress in AI has primarily come from scaling, which requires exponentially more compute and energy. It seems like we’re in an arms race with every company trying to build the biggest model, and there appears to be no end in sight. Do you think there is a way out of this?

There are always ways. Scaling has proven extremely useful, and I don’t think we’ve seen the end yet. We will very soon see models being trained with a cost of at least a billion dollars. If you want to be a leader in generative AI and create bleeding edge foundation models you’ll need to be spending at least a few billion a year on compute. Now, there are natural limits to scaling, such as being able to construct a large enough dataset for a model of that size, getting access to people with the right know-how, and getting access to enough compute.

Continued scaling of model size is inevitable, but for obvious reasons we also can’t turn the entire earth’s surface into a planet-sized supercomputer to train and serve LLMs. To get this under control, we have several knobs we can play with: better datasets, new model architectures, new training methods, better compilers, algorithmic improvements and exploitations, better computer architectures, and so on. If we do all that, there are roughly three orders of magnitude of improvement to be found. That’s the best way out.

You are a believer in first principles thinking, how does this mold your mindset for how you are running Lemurian Labs?

We definitely employ a lot of first principles thinking at Lemurian. I have always found conventional wisdom misleading because that knowledge was formed at a certain point in time when certain assumptions held, but things always change and you need to retest assumptions often, especially when living in such a fast-paced world.

I often find myself asking questions like “this seems like a really good idea, but why might this not work”, or “what needs to be true in order for this to work”, or “what do we know to be absolute truths, what are the assumptions we’re making, and why?”, or “why do we believe this particular approach is the best way to solve this problem”. The goal is to invalidate and kill off ideas as quickly and cheaply as possible. We want to maximize the number of things we’re trying out at any given point in time. It’s about being obsessed with the problem that needs to be solved, and not being overly opinionated about what technology is best. Too many folks focus too heavily on the technology: they end up misunderstanding customers’ problems and missing the transitions happening in the industry that could invalidate their approach, leaving them unable to adapt to the new state of the world.

But first principles thinking isn’t all that useful by itself. We tend to pair it with backcasting, which means imagining an ideal or desired future outcome and working backwards to identify the steps or actions needed to realize it. This ensures we converge on a meaningful solution that is not only innovative but also grounded in reality. It doesn’t make sense to spend time coming up with the perfect solution only to realize it’s not feasible to build because of real-world constraints such as resources, time, or regulation, or to build a seemingly perfect solution only to find out later that you’ve made it too hard for customers to adopt.

Every now and then we find ourselves in a situation where we need to make a decision but have no data, and in this scenario we employ minimum testable hypotheses which give us a signal as to whether or not something makes sense to pursue with the least amount of energy expenditure.

All of this combined gives us agility and rapid iteration cycles to de-risk items quickly. It has helped us adjust strategies with high confidence and make a lot of progress on very hard problems in a very short amount of time.

Initially, you were focused on edge AI, what caused you to refocus and pivot to cloud computing?

We started with edge AI because at that time I was very focused on trying to solve a very particular problem that I had faced in trying to usher in a world of general purpose autonomous robotics. Autonomous robotics holds the promise of being the biggest platform shift in our collective history, and it seemed like we had everything needed to build a foundation model for robotics but we were missing the ideal inference chip with the right balance of throughput, latency, energy efficiency, and programmability to run said foundation model on.

I wasn’t thinking about the datacenter at this time because there were more than enough companies focusing there and I expected they would figure it out. We designed a really powerful architecture for this application space and were getting ready to tape it out, and then it became abundantly clear that the world had changed and the problem truly was in the datacenter. The rate at which LLMs were scaling and consuming compute far outstrips the pace of progress in computing, and when you factor in adoption it starts to paint a worrying picture.

It felt like this is where we should be focusing our efforts, to bring down the energy cost of AI in datacenters as much as possible without imposing restrictions on where and how AI should evolve. And so, we got to work on solving this problem.

Can you share the genesis story of co-founding Lemurian Labs?

The story starts in early 2018. I was working on training a foundation model for general purpose autonomy along with a model for generative multiphysics simulation to train the agent in and fine-tune it for different applications, and some other things to help scale into multi-agent environments. But very quickly I exhausted the amount of compute I had, and I estimated needing more than 20,000 V100 GPUs. I tried to raise enough to get access to the compute but the market wasn’t ready for that kind of scale just yet. It did however get me thinking about the deployment side of things and I sat down to calculate how much performance I would need for serving this model in the target environments and I realized there was no chip in existence that could get me there.

A couple of years later, in 2020, I met up with Vassil, my eventual cofounder, to catch up. I shared the challenges I went through in building a foundation model for autonomy, and he suggested building an inference chip that could run it. He also shared that he had been thinking a lot about number formats, and that better representations would help not only in making neural networks retain accuracy at lower bit-widths but also in creating more powerful architectures.
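
The link between number formats and accuracy at lower bit-widths can be illustrated with a generic example. The sketch below uses plain symmetric integer quantization, a common baseline; it is not Lemurian’s number format, which the interview does not describe, and the weight values are made up.

```python
def quantize_symmetric(values: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1
    largest = max(abs(v) for v in values)
    scale = largest / qmax if largest > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes."""
    return [x * scale for x in q]


def max_error(values: list[float], bits: int) -> float:
    """Worst-case absolute round-trip error at a given bit-width."""
    q, scale = quantize_symmetric(values, bits)
    return max(abs(v - r) for v, r in zip(values, dequantize(q, scale)))


weights = [0.02, -0.5, 0.31, 1.2, -0.9]  # hypothetical layer weights
for bits in (8, 4):
    print(bits, "bits -> max error", round(max_error(weights, bits), 4))
```

Dropping from 8 to 4 bits makes the quantization step roughly 18 times coarser (127 levels per side versus 7), which is why smarter representations matter most at low bit-widths.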

It was an intriguing idea but way out of my wheelhouse. It wouldn’t leave me, though, which drove me to spend months and months learning the intricacies of computer architecture, instruction sets, runtimes, compilers, and programming models. Eventually, building a semiconductor company started to make sense, and I had formed a thesis around what the problem was and how to go about solving it. Then, towards the end of the year, we started Lemurian.

You’ve spoken previously about the need to tackle software first when building hardware, could you elaborate on your views of why the hardware problem is first and foremost a software problem?

What a lot of people don’t realize is that the software side of semiconductors is much harder than the hardware itself. Building a useful computer architecture for customers to use and benefit from is a full stack problem, and if you don’t have that understanding and preparedness going in, you’ll end up with a beautiful-looking architecture that is very performant and efficient but totally unusable by developers, and usability is what actually matters.

There are other benefits to taking a software first approach as well, of course, such as faster time to market. This is crucial in today’s fast moving world where being too bullish on an architecture or feature could mean you miss the market entirely.

Not taking a software first view generally results in not having de-risked the important things required for product adoption, not being able to respond to changes in the market (for example, when workloads evolve in unexpected ways), and having underutilized hardware. None of these are good things. That’s a big reason why we care so much about being software centric, and why our view is that you can’t be a semiconductor company without really being a software company.

Can you discuss your immediate software stack goals?

When we were designing our architecture and thinking about the forward-looking roadmap and where the opportunities were to bring more performance and energy efficiency, it started becoming very clear that we were going to see a lot more heterogeneity, which was going to create a lot of issues for software. And we don’t just need to be able to productively program heterogeneous architectures; we have to deal with them at datacenter scale, which is a challenge the likes of which we haven’t encountered before.

This concerned us because the last time we went through a major transition was when the industry moved from single-core to multi-core architectures, and at that time it took 10 years to get software working and people using it. We can’t afford to wait 10 years to figure out software for heterogeneity at scale; it has to be sorted out now. And so, we got to work on understanding the problem and what it would take to build this software stack.

We are currently engaging with many of the leading semiconductor companies and hyperscalers/cloud service providers, and will be releasing our software stack in the next 12 months. It is a unified programming model with a compiler and runtime capable of targeting any kind of architecture and orchestrating work across clusters composed of different kinds of hardware, and it can scale from a single node to a thousand-node cluster for the highest possible performance.

Thank you for the great interview. Readers who wish to learn more should visit Lemurian Labs.

#000#accelerated computing#ai#ai training#Algorithms#amp#applications#approach#architecture#autonomous cars#background#billion#bleeding#book#Books#Brain#Building#Careers#Cars#CEO#challenge#change#Cloud#cloud computing#cloud service#cluster#clusters#Collective#Companies#computer


Exploring the Boundless Potential of DEVIKA AI: Revolutionizing Artificial Intelligence

In the ever-evolving landscape of artificial intelligence (AI), one name stands out prominently: DEVIKA AI. This cutting-edge AI platform has garnered significant attention for its revolutionary capabilities and transformative impact across various industries. Harnessing the power of advanced algorithms, machine learning, and natural language processing, DEVIKA AI is reshaping the way businesses operate, revolutionizing customer experiences, and driving innovation to unprecedented heights.

DEVIKA AI: Unveiling the Technology

At the heart of DEVIKA AI lies a sophisticated array of technologies designed to emulate human cognitive abilities and surpass conventional AI systems. With its neural networks and deep learning algorithms, DEVIKA AI can analyze vast amounts of data, recognize patterns, and make intelligent decisions with remarkable accuracy and efficiency.

The platform's natural language processing (NLP) capabilities enable it to comprehend and generate human-like text, facilitating seamless communication and interaction between humans and machines. Additionally, DEVIKA AI's machine learning models continuously improve and adapt to new information, enhancing performance and predictive capabilities over time.
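
The post does not say how DEVIKA AI’s models adapt over time. One generic way a model can “continuously improve” is online learning, where parameters are nudged after each new example. The sketch below applies stochastic gradient descent to a one-variable linear model on a synthetic stream; it illustrates the technique, not DEVIKA AI’s method.

```python
def online_update(w: float, b: float, x: float, y: float, lr: float = 0.05):
    """One stochastic-gradient step on half squared error for y = w*x + b."""
    error = (w * x + b) - y
    return w - lr * error * x, b - lr * error


# Synthetic stream generated from y = 2x + 1 (an assumption for the demo).
stream = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)] * 500

w, b = 0.0, 0.0
for x, y in stream:
    w, b = online_update(w, b, x, y)  # update as each example arrives
print(f"w={w:.3f}, b={b:.3f}")
```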

Applications Across Diverse Industries

DEVIKA AI's versatility and adaptability make it invaluable across a wide range of industries, from healthcare and finance to retail and manufacturing. Let's delve into some of the notable applications of DEVIKA AI in various sectors:

Healthcare: In the healthcare sector, DEVIKA AI is revolutionizing patient care, diagnosis, and treatment. Through advanced medical imaging analysis, predictive analytics, and personalized medicine, DEVIKA AI helps healthcare professionals make informed decisions, improve outcomes, and optimize resource allocation.

Finance: DEVIKA AI is transforming the financial services industry by automating tasks, detecting fraudulent activities, and enhancing customer experiences. From algorithmic trading and risk management to chatbot-based customer support, DEVIKA AI enables financial institutions to operate more efficiently and effectively in an increasingly digital world.
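
As a simple, hypothetical illustration of fraud detection (not DEVIKA AI’s actual approach), one can flag a transaction whose amount sits far outside a customer’s usual spending, measured in standard deviations (a z-score). The threshold of 3 and the sample amounts are assumptions.

```python
from statistics import mean, stdev


def is_suspicious(history: list[float], amount: float, threshold: float = 3.0) -> bool:
    """Flag `amount` if its z-score against past amounts exceeds `threshold`."""
    mu, sigma = mean(history), stdev(history)  # stdev needs at least 2 data points
    if sigma == 0:
        return amount != mu
    return abs(amount - mu) / sigma > threshold


past = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0]  # hypothetical history
print(is_suspicious(past, 24.0))   # typical purchase
print(is_suspicious(past, 950.0))  # far outside the usual range
```

Production systems usually combine many such signals in learned models; a single z-score is only a starting point.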

Retail: In the retail sector, DEVIKA AI is reshaping the customer journey, from personalized recommendations and virtual shopping assistants to inventory management and supply chain optimization. By analyzing consumer behavior and market trends in real-time, DEVIKA AI empowers retailers to deliver tailored experiences and drive sales growth.

Manufacturing: DEVIKA AI is driving innovation and efficiency in the manufacturing sector through predictive maintenance, quality control, and autonomous robotics. By optimizing production processes and minimizing downtime, DEVIKA AI helps manufacturers improve productivity, reduce costs, and enhance competitiveness in global markets.

Education: DEVIKA AI is revolutionizing education by personalizing learning experiences, automating administrative tasks, and providing intelligent tutoring systems. Through adaptive learning algorithms and virtual classrooms, DEVIKA AI empowers educators to cater to individual student needs and enhance learning outcomes.

The Ethical and Social Implications of DEVIKA AI

While DEVIKA AI offers unprecedented opportunities for progress and innovation, it also raises important ethical and social considerations that cannot be ignored. As AI systems become increasingly autonomous and pervasive, concerns about data privacy, algorithmic bias, and job displacement have come to the forefront.

Ensuring transparency, accountability, and fairness in AI decision-making is crucial to mitigating these risks and building trust among users and stakeholders. Furthermore, proactive measures must be taken to address the potential impact of AI on employment, education, and societal dynamics.

Conclusion

In conclusion, DEVIKA AI represents a paradigm shift in the field of artificial intelligence, offering unparalleled capabilities and transformative potential across diverse industries. From healthcare and finance to retail and manufacturing, DEVIKA AI is revolutionizing how businesses operate, innovate, and engage with customers.

However, as we embrace the limitless possibilities of DEVIKA AI, we must also remain vigilant about its ethical and social implications. By fostering collaboration, dialogue, and responsible AI governance, we can harness the full potential of DEVIKA AI while safeguarding the interests and values of society as a whole.

With its groundbreaking technology and visionary approach, DEVIKA AI continues to push the boundaries of what's possible in the realm of artificial intelligence, inspiring innovation and shaping the future of humanity.


New Study Reveals 75% Decrease in Youth Unemployment Rates - Concrete Data Uncovered!

RoamNook Blog Post

New Discoveries in Data Analysis and Their Impact on Digital Growth

Welcome to the RoamNook blog, where we bring you the latest and most groundbreaking discoveries in data analysis. In this article, we dive deep into hard facts, numbers, and concrete data to uncover new information that will have a direct impact on digital growth. So sit back, relax, and get ready to expand your knowledge!

The Power of Data: Unveiling Key Facts and Figures

Data has become the backbone of the digital world, driving innovation, decision-making, and growth. In today's interconnected world, businesses rely on data to gain an edge over their competitors. Here are some key facts and figures that highlight the significance of data:

Over 2.5 quintillion bytes of data are created every single day.

90% of the world's data has been generated in the last two years alone.

By 2025, it is estimated that 463 exabytes of data will be created globally each day.

Data-driven companies are 23 times more likely to acquire customers and 6 times more likely to retain them.

The big data market is projected to reach $103 billion by 2027.

These numbers demonstrate the exponential growth and importance of data in today's digital landscape. It is crucial for businesses to harness the power of data to stay competitive and drive digital growth.
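
The first and third figures above use different units, so a quick conversion helps compare them. This assumes the short-scale “quintillion” (10^18) and decimal SI exabytes (10^18 bytes); it only checks the arithmetic, not the sources behind the figures.

```python
EXABYTE = 10**18  # bytes, decimal SI definition

daily_now = 25 * 10**17      # "2.5 quintillion bytes" per day
daily_2025 = 463 * EXABYTE   # "463 exabytes" per day (projection)

print("today:", daily_now / EXABYTE, "EB per day")
print("2025 projection is about", round(daily_2025 / daily_now), "times larger")
```

In other words, 2.5 quintillion bytes is 2.5 exabytes, and the 2025 projection is roughly 185 times that daily volume.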

New Discoveries in Data Analysis

Advancements in technology and data analysis techniques continue to unveil new insights and possibilities. Here are some recent discoveries that have revolutionized the field of data analysis:

Quantum Machine Learning: Quantum computers have the potential to significantly enhance machine learning algorithms, enabling faster and more accurate predictions.

Graph Analytics: Analyzing complex networks and relationships through graph analytics has opened up new opportunities in various fields, including social networks, logistics, and healthcare.

Natural Language Processing: Advancements in natural language processing have paved the way for chatbots, virtual assistants, and sentiment analysis tools that can interpret and respond to human language.

Deep Learning: Deep learning models, which are neural networks with many layers, have revolutionized image and speech recognition, making it possible to train machines to perform complex tasks with high accuracy.

Blockchain Data Analysis: The rise of blockchain technology, which records transactions on transparent, tamper-resistant ledgers, has created a new avenue for data analysis across various industries.
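
As a small illustration of the graph analytics item, breadth-first search finds the fewest hops between nodes, which underlies questions like “degrees of separation” in a social network or the number of transfers in a logistics network. The graph below is a made-up example.

```python
from collections import deque


def hops_from(graph: dict[str, list[str]], source: str) -> dict[str, int]:
    """Breadth-first search: fewest hops from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


social = {
    "ana": ["ben", "cy"],
    "ben": ["ana", "dee"],
    "cy": ["ana"],
    "dee": ["ben", "eve"],
    "eve": ["dee"],
    "zed": [],  # not connected to anyone above
}
print(hops_from(social, "ana"))
```

Nodes missing from the result (here `zed`) are unreachable from the source.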

These discoveries are just the tip of the iceberg, and the potential for data analysis is vast. As technology continues to evolve, we can expect to unlock even more insights and applications for data-driven decision-making.

Real-World Applications and the Importance of Digital Growth

So, why does all this matter? The real-world applications and importance of digital growth are undeniable. Here are some tangible benefits of embracing data analysis and fueling digital growth:

Improved Decision-Making: Data-driven insights allow businesses to make informed decisions based on real evidence, minimizing risks and maximizing opportunities.

Enhanced Customer Experience: By understanding customer behavior and preferences through data analysis, businesses can tailor their products and services to meet the needs of their target audience.

Increased Efficiency and Productivity: Automation and optimization of processes through data analysis can lead to significant cost savings and improved efficiency in operations.

Competitive Advantage: Businesses that leverage data analysis gain a competitive edge by staying ahead of trends, identifying emerging markets, and predicting customer demands.

Personalization: Data analysis allows companies to deliver personalized experiences, recommendations, and offers to individual customers, leading to higher customer satisfaction and loyalty.

By embracing data-driven decision-making and fueling digital growth, businesses can unlock a multitude of benefits and position themselves for long-term success in a rapidly evolving digital landscape.

Introducing RoamNook: Your Partner in Digital Transformation

As an innovative technology company, RoamNook specializes in IT consultation, custom software development, and digital marketing. Our main goal is to fuel digital growth by leveraging cutting-edge technologies and data analysis techniques.

At RoamNook, we understand the power of data in driving business success. Our team of experts combines technical expertise with industry knowledge to deliver customized solutions that address your unique needs. Whether you're looking to optimize your operations, improve your customer experience, or gain a competitive edge, RoamNook has the expertise to guide you through your digital transformation journey.

To learn more about how RoamNook can help your business achieve digital growth, visit our website at https://www.roamnook.com.

Copyright © 2024 RoamNook. All rights reserved.

Source: https://medium.datadriveninvestor.com/react-native-challenges-and-benefits-in-2021-ccb6f22a742c


Anti-piracy Protection Market to Witness Massive Growth By 2029

Advance Market Analytics has added a research publication on the Worldwide Anti-piracy Protection Market that breaks down major business segments and highlights wider geographies for a deep-dive analysis of market data. The study balances qualitative and quantitative information on the Worldwide Anti-piracy Protection market. It provides historical market size data (Volume** & Value) from 2018 to 2022, with estimates and forecasts through 2028*. Some of the key and emerging players covered and profiled are Vitrium Systems Inc. (Canada), McAfee, LLC (United States), Kudelski SA (NAGRA) (Switzerland), Group-IB (Singapore), Viaccess-Orca (France), Digimarc Corporation (United States), Corsearch, Inc. (United States), Red Points (Spain), MUSO TNT (United Kingdom), Irdeto (Netherlands).

Get free access to Sample Report in PDF Version along with Graphs and Figures @ https://www.advancemarketanalytics.com/sample-report/168197-global-anti-piracy-protection-market

Anti-piracy protection solutions and services are needed to protect against online piracy of digital content, which directly affects the software, gaming, film, music, TV, e-learning video, and e-book industries that rely on paid subscriptions or download fees. This piracy can cause major revenue losses for digital content providers and owners. According to a study, 98% of data transferred over P2P networks is copyrighted, which can further fuel demand for anti-piracy protection solutions.

Keep yourself up to date with the latest market trends and the changing dynamics caused by the COVID impact and the global economic slowdown. Maintain a competitive edge by sizing up the available business opportunities in the various segments and emerging territories of the Anti-piracy Protection Market.

Influencing Market Trend

Peer-To-Peer (P2P) Stream Discovery Such As Sopcast and Ace Stream

Market Drivers

Rise in the Regular Download of Pirated Content from the Internet

Rapid Digitization, High Internet Penetration, and Rising Enterprises Efforts to Safeguard the Integrity of the Data

Opportunities:

Technological Advancements Such As Deep Learning/Neural Network-Based Machine-Learning System

Rising Government Regulations Related To Data Privacy and High Spending On Safeguarding the IT Infrastructure

Demand from International Markets, especially the

Challenges:

High Cost Associated To Genuine Products and Availability of Required Content from Anonymous Internet Users

Have Any Questions Regarding Global Anti-piracy Protection Market Report, Ask Our Experts@ https://www.advancemarketanalytics.com/enquiry-before-buy/168197-global-anti-piracy-protection-market

Analysis by Type (Software (CAS, DRM), Services (Personal Agent Provision)), Application (TV On-Demand & Cinema, Press & Publishing, Live Events & Streaming, Software, Apps & Videogames, E-Learning & Info Products), Enterprise Size (Large Enterprises, Small and Medium Enterprises), Subscription Type (Monthly Subscription, Annual Subscription)

Competitive landscape highlighting important parameters that players are gaining along with the Market Development/evolution

• % Market Share, Segment Revenue, SWOT Analysis for each profiled company [Vitrium Systems Inc. (Canada), McAfee, LLC (United States), Kudelski SA (NAGRA) (Switzerland), Group-IB (Singapore), Viaccess-Orca (France), Digimarc Corporation (United States), Corsearch, Inc. (United States), Red Points (Spain), MUSO TNT (United Kingdom), Irdeto (Netherlands)]

• Business overview and Product/Service classification

• Product/Service Matrix [Players by Product/Service comparative analysis]

• Recent Developments (Technology advancement, Product Launch or Expansion plan, Manufacturing and R&D, etc.)

• Consumption, Capacity & Production by Players

The regional analysis of the Global Anti-piracy Protection Market covers key regions such as Asia Pacific, North America, Europe, Latin America, and the Rest of the World. North America is the leading region worldwide, whereas Asia Pacific, owing to a rising number of research activities in countries such as China, India, and Japan, is expected to exhibit a higher growth rate during the forecast period 2023-2028.

Table of Contents

Chapter One: Industry Overview

Chapter Two: Major Segmentation (Classification, Application, etc.) Analysis

Chapter Three: Production Market Analysis

Chapter Four: Sales Market Analysis

Chapter Five: Consumption Market Analysis

Chapter Six: Production, Sales and Consumption Market Comparison Analysis

Chapter Seven: Major Manufacturers Production and Sales Market Comparison Analysis

Chapter Eight: Competition Analysis by Players

Chapter Nine: Marketing Channel Analysis

Chapter Ten: New Project Investment Feasibility Analysis

Chapter Eleven: Manufacturing Cost Analysis

Chapter Twelve: Industrial Chain, Sourcing Strategy and Downstream Buyers

Read Executive Summary and Detailed Index of full Research Study @ https://www.advancemarketanalytics.com/reports/168197-global-anti-piracy-protection-market

Highlights of the Report

• The future prospects of the global Anti-piracy Protection market during the forecast period 2023-2028 are given in the report.

• The major developmental strategies integrated by the leading players to sustain a competitive market position in the market are included in the report.

• The emerging technologies that are driving the growth of the market are highlighted in the report.

• The market value of the segments that are leading the market and the sub-segments are mentioned in the report.

• The report studies the leading manufacturers and other players entering the global Anti-piracy Protection market.

Thanks for reading this article; you can also get individual chapter-wise sections or region-wise report versions, such as North America, Middle East, Africa, Europe, LATAM, or Southeast Asia.

Contact Us:

Craig Francis (PR & Marketing Manager)

AMA Research & Media LLP

Unit No. 429, Parsonage Road, Edison, NJ 08837, USA

Phone: +1 201 565 3262, +44 161 818 8166


#Global Anti-piracy Protection Market#Anti-piracy Protection Market Demand#Anti-piracy Protection Market Trends#Anti-piracy Protection Market Analysis#Anti-piracy Protection Market Growth#Anti-piracy Protection Market Share#Anti-piracy Protection Market Forecast#Anti-piracy Protection Market Challenges


POST - 5

AI in Visual Effects: Advancing Realism and Efficiency in Film Production

Visual effects (VFX) play a vital role in modern filmmaking, allowing filmmakers to create stunning and immersive worlds that captivate audiences. Recent advancements in artificial intelligence (AI) are revolutionizing the field of VFX, enabling filmmakers to achieve new levels of realism, efficiency, and creativity in their productions.

One significant recent development in AI-driven VFX comes from Wavelength Studios, which has developed a groundbreaking system that uses machine learning algorithms to automate and streamline various aspects of the VFX production pipeline. From asset generation and compositing to rendering and post-production, this AI-driven system accelerates the production process, reduces costs, and enables filmmakers to bring their creative visions to life more efficiently than ever before.

Moreover, AI-powered VFX solutions offer unparalleled flexibility and scalability in film production. By automating repetitive and time-consuming tasks, such as rotoscoping, motion tracking, and CGI rendering, filmmakers can focus more on the creative aspects of storytelling and visual design. This not only enhances the quality of the final product but also allows for greater experimentation and iteration during the production process.
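
To give a sense of what automated motion tracking involves, here is a minimal block-matching tracker: it searches near a feature’s previous position for the spot whose pixels best match a reference patch, scored by the sum of squared differences (SSD). It is a textbook technique shown on a tiny synthetic grayscale frame, not the system described above; production trackers add sub-pixel refinement, smarter search strategies, and learned features.

```python
def ssd(frame, patch, top, left):
    """Sum of squared differences between `patch` and the frame region at (top, left)."""
    return sum(
        (frame[top + r][left + c] - patch[r][c]) ** 2
        for r in range(len(patch))
        for c in range(len(patch[0]))
    )


def track(frame, patch, prev_top, prev_left, radius=2):
    """Search +/- `radius` pixels around the previous position and
    return the (top, left) offset with the lowest SSD."""
    h, w = len(frame), len(frame[0])
    ph, pw = len(patch), len(patch[0])
    best = None
    for top in range(max(0, prev_top - radius), min(h - ph, prev_top + radius) + 1):
        for left in range(max(0, prev_left - radius), min(w - pw, prev_left + radius) + 1):
            score = ssd(frame, patch, top, left)
            if best is None or score < best[0]:
                best = (score, top, left)
    return best[1], best[2]


# Synthetic 6x6 frame: a bright 2x2 feature has moved to row 3, column 4.
frame = [[0] * 6 for _ in range(6)]
for r in (3, 4):
    for c in (4, 5):
        frame[r][c] = 9
patch = [[9, 9], [9, 9]]  # appearance of the feature in the previous frame
print(track(frame, patch, prev_top=2, prev_left=3))
```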

Furthermore, AI-driven VFX solutions are pushing the boundaries of realism in filmmaking. By analyzing vast amounts of visual data, including real-world physics simulations and reference imagery, AI algorithms can generate lifelike effects and animations that seamlessly blend with live-action footage. This not only enhances immersion but also opens up new creative possibilities for filmmakers to explore.

As AI technology continues to evolve, we can expect even greater innovations in VFX production, pushing the boundaries of what is possible in visual storytelling. With AI as a powerful tool in their arsenal, filmmakers have the opportunity to create truly immersive and unforgettable cinematic experiences that captivate audiences and redefine the filmmaking landscape.



The Rise of Artificial Intelligence: A Look into the Future of Technology

The Impact of AI on Society and Industry

Artificial Intelligence (AI) has become one of the most significant technological advancements of our time. With its ability to mimic human intelligence and perform tasks that were once exclusive to humans, AI has revolutionized various industries and transformed the way we live and work. In this article, we will explore the rise of AI and its implications for society and industry.

From self-driving cars to virtual assistants, AI has permeated almost every aspect of our lives. Its potential to enhance efficiency, improve decision-making, and solve complex problems has captured the attention of businesses, researchers, and policymakers alike. However, as AI continues to evolve, questions arise about its impact on jobs, privacy, and ethics.

Let's delve deeper into this fascinating subject.


The Evolution of AI

AI has come a long way since its inception. Initially, AI was limited to rule-based systems that followed predefined instructions. However, with the advent of machine learning algorithms and deep neural networks, AI has become more sophisticated and capable of learning from vast amounts of data.

Machine learning allows AI systems to recognize patterns, make predictions, and adapt to new situations. This has led to breakthroughs in areas such as natural language processing, computer vision, and robotics. AI is now capable of understanding human speech, analyzing images, and even assisting with complex tasks like surgical procedures.

AI in Industry

The impact of AI on various industries cannot be overstated. In healthcare, AI is being used to diagnose diseases, develop personalized treatment plans, and improve patient outcomes. AI-powered robots are assisting surgeons in performing intricate procedures with greater precision and efficiency.

In the finance sector, AI algorithms are used for fraud detection, credit scoring, and investment analysis. AI-powered chatbots are revolutionizing customer service by providing instant support and personalized recommendations.

The manufacturing industry is also benefiting from AI. Autonomous robots are being used for tasks such as assembly, quality control, and inventory management. AI systems are optimizing supply chains, reducing costs, and improving overall operational efficiency.

The Impact on Jobs and the Workforce

While AI presents numerous opportunities, it also raises concerns about job displacement. As AI systems become more capable, there is a fear that they will replace human workers in various industries. However, experts argue that AI will not eliminate jobs but rather transform them.

AI has the potential to automate repetitive and mundane tasks, allowing humans to focus on more creative and complex work. It can augment human capabilities and improve productivity. However, to ensure a smooth transition, reskilling and upskilling programs need to be implemented to equip the workforce with the necessary skills to thrive in an AI-driven world.

Ethical Considerations and Privacy Concerns

As AI becomes more integrated into our lives, ethical considerations and privacy concerns come to the forefront. AI systems are only as unbiased as the data they are trained on. If the training data is biased, it can lead to discriminatory outcomes.

Ensuring fairness and transparency in AI algorithms is crucial to prevent unintended consequences.
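
One common way to check a model for one kind of bias is demographic parity: compare the rate of positive predictions across groups. The sketch below computes the largest gap between groups; the predictions and group labels are hypothetical, and parity is only one of several competing fairness definitions.

```python
def positive_rate(predictions: list[int], groups: list[str], group: str) -> float:
    """Share of members of `group` who received a positive (1) prediction."""
    picked = [p for p, g in zip(predictions, groups) if g == group]
    return sum(picked) / len(picked)


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 = parity)."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


preds = [1, 1, 1, 0, 1, 0, 0, 0]  # hypothetical model decisions
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(preds, groups))
```

Here group "a" receives positive predictions 75% of the time and group "b" 25% of the time, a gap that would warrant a closer look at the training data.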

Privacy is another major concern. AI systems collect vast amounts of data, raising questions about data security and individual privacy. Striking the right balance between harnessing the power of AI and protecting personal information is a challenge that needs to be addressed.

The Future of AI

The future of AI holds immense possibilities. As AI continues to evolve, it is expected to have an even greater impact on society and industry. AI-powered virtual assistants will become more intelligent and personalized, revolutionizing the way we interact with technology.

AI will play a crucial role in addressing global challenges such as climate change, healthcare disparities, and food security. It has the potential to optimize energy consumption, develop personalized healthcare solutions, and improve agricultural practices.

Artificial Intelligence has emerged as a transformative force that is reshaping the world as we know it. Its impact on society and industry is undeniable, and its potential for future advancements is boundless. As we navigate the ever-changing landscape of AI, it is essential to embrace its benefits while addressing the ethical, social, and economic implications.

By doing so, we can harness the power of AI to create a better and more inclusive future for all.


Generative AI: A Powerful New Technology

Table of Contents

What Type of Content Can Generative AI Text Models Create—and Where Does It Come From?

How big a role will generative AI play in the future of business?

Education Applications

Mathematical functions, known as loss functions, are used to measure how well the reconstructed data matches the original data. Researchers can also use these models to discover new trends and patterns that might not otherwise be apparent. These algorithms can summarize content, outline multiple solution paths, brainstorm ideas, and create detailed documentation from research notes.
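
For example, mean squared error is one widely used reconstruction loss (it appears, for instance, in autoencoder training): it averages the squared differences between original and reconstructed values, so lower is better. The sample vectors below are made up.

```python
def reconstruction_mse(original: list[float], reconstructed: list[float]) -> float:
    """Mean squared error between original data and its reconstruction."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)


original = [1.0, 2.0, 3.0]
print(reconstruction_mse(original, [1.0, 2.0, 3.0]))  # perfect reconstruction
print(reconstruction_mse(original, [1.0, 2.5, 2.0]))  # imperfect reconstruction
```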


Like other modern AI systems, generative AI works by using machine learning models: very large models that are pretrained on vast amounts of data.

This capability makes writing code possible not only for professional developers but also for non-technical people.


As the technology evolves, the applications and use cases of generative AI are likely to keep growing.

Our experts work with you to tailor generative AI tools to your organisation's specific challenges and opportunities, ensuring they deliver real results.

For example, the model behind ChatGPT can be fine-tuned on, or given access to, a company's FAQ page or knowledge base so that it can recognize and respond to common customer questions. When a customer sends a message with a question, ChatGPT can analyze the message and provide a response that answers the question or directs the customer to additional resources.

Generative AI models can also simulate various production scenarios, predict demand, and help optimize inventory levels. They can use historical customer data to predict demand, enabling more accurate production schedules and optimal inventory levels.
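In the customer-support example, the system first needs to decide which FAQ entry a customer's message matches, or recognize that no entry matches. The Python sketch below shows only that matching step. It is not how ChatGPT works internally. It substitutes a simple bag-of-words cosine similarity, and the FAQ entries, the `answer` function, and the 0.3 threshold are all hypothetical. A real deployment might pass the matched entry to a language model to phrase the reply.

```python
import math
import re
from collections import Counter

# Hypothetical FAQ entries; a real system would load these from the knowledge base.
FAQ = {
    "How do I reset my password?": "Use the 'Forgot password' link on the sign-in page.",
    "What are your opening hours?": "We are open 9:00 to 17:00, Monday to Friday.",
    "How can I track my order?": "Open 'My orders' and select the order to see its status.",
}
FALLBACK = "I couldn't find an answer. Please see our help centre or contact support."

def tokens(text):
    """Lower-cased word counts (a bag of words)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def answer(message, threshold=0.3):
    """Return the answer to the most similar FAQ question, or point to other resources."""
    query = tokens(message)
    best_question = max(FAQ, key=lambda q: cosine(query, tokens(q)))
    if cosine(query, tokens(best_question)) < threshold:
        return FALLBACK
    return FAQ[best_question]

print(answer("I forgot my password, how do I reset it?"))
print(answer("Do you sell gift cards?"))
```

The first message matches the password entry. The second shares almost no words with any question, so it falls below the threshold and the customer is directed elsewhere.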

What Type of Content Can Generative AI Text Models Create—and Where Does It Come From?

Generative AI's impact on productivity could add trillions of dollars in value to the global economy. Our latest research estimates that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases we analyzed; by comparison, the United Kingdom's entire GDP in 2021 was $3.1 trillion. This estimate would roughly double if we include the impact of embedding generative AI into software that is currently used for other tasks beyond those use cases. Our updates examined use cases of generative AI, specifically how generative AI techniques (primarily transformer-based neural networks) can be used to solve problems not well addressed by previous technologies. Some of this impact will overlap with the cost reductions in the use case analysis, which we assume are the result of improved labor productivity.
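To make the UK comparison concrete, the quoted range runs from about 84% to about 142% of the UK's 2021 GDP. The short Python calculation below reproduces that arithmetic from the figures quoted in the text and nothing more. It does not model the estimate itself, and the function name is illustrative.

```python
# Figures quoted in the text, in trillions of US dollars.
LOW_ESTIMATE = 2.6    # annual value across the 63 use cases (low end)
HIGH_ESTIMATE = 4.4   # annual value across the 63 use cases (high end)
UK_GDP_2021 = 3.1

def share_of_uk_gdp(value):
    """Express a dollar amount as a fraction of the UK's 2021 GDP."""
    return value / UK_GDP_2021

for label, value in [("low", LOW_ESTIMATE), ("high", HIGH_ESTIMATE)]:
    print(f"{label} estimate: {share_of_uk_gdp(value):.0%} of UK 2021 GDP")
# low estimate: 84% of UK 2021 GDP
# high estimate: 142% of UK 2021 GDP
```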

Security agencies have made moves to ensure AI systems are built with safety and security in mind. In November 2023, 16 agencies including the U.K.'s National Cyber Security Centre and the U.S. Cybersecurity and Infrastructure Security Agency released the Guidelines for Secure AI System Development, which promote security as a fundamental aspect of AI development and deployment. On Feb. 13, 2024, the European Council approved the AI Act, a first-of-its-kind piece of legislation designed to regulate the use of AI in Europe. The legislation takes a risk-based approach to regulating AI, banning some AI systems outright.

The popularity of generative AI has exploded in recent years, largely thanks to the arrival of OpenAI's ChatGPT and DALL-E models, which put accessible AI tools into the hands of consumers.

How big a role will generative AI play in the future of business?

A generative AI bot trained on proprietary knowledge such as policies, research, and customer interaction could provide always-on, deep technical support. Today, frontline spending is dedicated mostly to validating offers and interacting with clients, but giving frontline workers access to data as well could improve the customer experience. The technology could also monitor industries and clients and send alerts on semantic queries from public sources. The model combines search and content creation so wealth managers can find and tailor information for any client at any moment.

Generative AI covers a range of machine learning and deep learning techniques, such as Generative Adversarial Networks (GANs) and transformer models. ChatGPT, for example, is based on the GPT (Generative Pre-trained Transformer) architecture, a type of transformer model designed for natural language processing (NLP) tasks such as text generation, translation and question-answering. DALL-E is another popular generative AI system; its original version adapted the GPT architecture to generate images from written prompts. A large language model (LLM) is a powerful machine learning model that can process and identify complex relationships in natural language, generate text and hold conversations with users.

Since the release of new generative artificial intelligence (AI) tools, including ChatGPT, we have all been navigating our way through both the landscape of AI in education and its implications for teaching. As we adapt to these quickly evolving tools and observe how students are using them, many of us are still formulating our own values around what this means for our classes. The overall impact of generative AI on the workforce and society at large is prompting serious discussion.

Traditional AI and advanced analytics solutions have helped retail and consumer-goods companies manage vast pools of data across large numbers of SKUs, expansive supply chain and warehousing networks, and complex product categories such as consumables. In addition, these industries are heavily customer facing, which offers opportunities for generative AI to complement existing AI solutions. For example, generative AI's ability to personalize offerings could optimize marketing and sales activities already handled by existing AI solutions. Similarly, generative AI tools excel at data management and could support existing AI-driven pricing tools.


At a high level, attention refers to the mathematical description of how things (e.g., words) relate to, complement and modify each other. The breakthrough technique could also discover relationships, or hidden orders, between other things buried in the data that humans might have been unaware of because they were too complicated to express or discern. Researchers have been creating AI and other tools for programmatically generating content since the early days of AI. The earliest approaches, known as rule-based systems and later as "expert systems," used explicitly crafted rules for generating responses or data sets.
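The text describes attention only in general terms. In transformer models it is usually computed as scaled dot-product attention: each query is compared with every key, the scores are turned into weights with a softmax, and the output is a weighted average of the value vectors. The pure-Python sketch below assumes that formulation. It leaves out the learned projection matrices and multiple attention heads that real models use, and the toy vectors are made up.

```python
import math

def softmax(xs):
    """Turn scores into positive weights that sum to 1 (max subtracted for stability)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one output row per query."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query relates to each key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three toy 2-dimensional "word" vectors used as queries, keys and values (self-attention).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):
    print([round(v, 3) for v in row])
```

Each output row blends the other vectors in proportion to how similar they are to the query, which is one way to read "how things relate to and modify each other".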

Generative AI has taken hold rapidly in marketing and sales functions, in which text-based communications and personalization at scale are driving forces. All of us are at the beginning of a journey to understand generative AI's power, reach, and capabilities. This research is the latest in our efforts to assess the impact of this new era of AI, and the speed at which generative AI technology is developing isn't making that task any easier. The research suggests that generative AI is poised to transform roles and boost performance across functions such as sales and marketing, customer operations, and software development. In the process, it could unlock trillions of dollars in value across sectors from banking to life sciences.

In drug discovery, generative AI can speed up the process of identifying new potential drug candidates. In clinical research, it has the potential to extract information from complex data to create synthetic data and digital twins that represent individuals, which is one way to protect privacy. Other applications include identifying safety signals or finding new uses for existing treatments.


Bridging the Educational Divide with AI: A Vision for the Future

Amid a revolution in technology and education, we have the opportunity to make learning accessible to all, irrespective of social standing. As the Chief Technology Officer of our startup, I am eager to share our vision for using Artificial Intelligence (AI) to address educational inequality. Our goal is rooted in the belief that education should be a basic human right, not a privilege; we are therefore committed to using AI to bring people who are marginalized or living in poverty into the educational landscape.

Our approach integrates three essential AI concepts: natural language processing (NLP), machine learning, and deep learning neural networks, to democratize education.

Firstly, at the core of our solution is machine learning. Traditional educational models often follow a one-size-fits-all approach, leaving behind those students who do not fit into the conventional norms. To counter this, our platform utilizes machine learning algorithms to create personalized learning pathways. The system adapts the curriculum based on patterns in a student's engagement with the material, ensuring a tailored educational experience for each learner.
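The post does not describe the algorithm behind its learning pathways. As a rough illustration of the idea, the Python sketch below keeps a mastery estimate for each topic, nudges it up or down after each answer (an exponential moving average), and recommends the weakest topic next. Everything here is hypothetical: the topic names, the 0.3 update rate, and the function names. Production systems typically use richer student models than this.

```python
# Hypothetical per-topic mastery estimates between 0 (not learned) and 1 (mastered).
def update_mastery(mastery, topic, correct, rate=0.3):
    """Move the topic's estimate toward 1 after a correct answer, toward 0 otherwise."""
    target = 1.0 if correct else 0.0
    mastery[topic] += rate * (target - mastery[topic])

def next_topic(mastery, curriculum):
    """Recommend the topic with the lowest estimate (earliest in the curriculum on ties)."""
    return min(curriculum, key=lambda t: mastery[t])

curriculum = ["fractions", "decimals", "percentages"]
mastery = {t: 0.5 for t in curriculum}
update_mastery(mastery, "fractions", correct=True)   # 0.5 -> about 0.65
update_mastery(mastery, "decimals", correct=False)   # 0.5 -> about 0.35
print(next_topic(mastery, curriculum))  # decimals
```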

Moreover, language often poses a significant barrier to accessing quality education. Our platform employs NLP to translate and generate educational content in multiple languages, including indigenous and less commonly spoken ones. This initiative not only facilitates access to education but also helps in preserving cultural heritage by providing learning materials in native languages. Additionally, NLP enables the creation of interactive and engaging learning experiences, making education accessible and enjoyable for everyone.

Lastly, the challenge of scaling quality education is addressed by using deep learning neural networks. These networks analyze vast amounts of educational data to identify the most effective teaching methodologies and content. This process ensures that our platform continually improves the quality of education it provides, keeping it in line with the latest educational research. Furthermore, deep learning neural networks enable the development of virtual tutors, capable of offering instant feedback and assistance, thereby replicating the advantages of one-on-one tutoring.

Our mission extends beyond innovation: we aim to ensure every child receives the education they deserve, regardless of background. By combining AI with strategies such as government partnerships and mobile technology, we tackle technological hurdles and uphold education as a basic right. Our low-cost, scalable methods aim to democratize AI-driven education, breaking down economic barriers and fostering inclusivity for a transformative impact worldwide.


Artificial Intelligence Chip Market: Shaping Tomorrow's Intelligent Systems

According to the study by Next Move Strategy Consulting, the global Artificial Intelligence Chip Market is predicted to reach USD 304.09 billion by 2030, growing at a CAGR of 29.0%. This growth projection underscores the pivotal role that AI chips are set to play in shaping the landscape of intelligent systems in the near future.
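A compound annual growth rate (CAGR) means the value grows by the same percentage every year, so end = start × (1 + rate)^years. The excerpt does not state the base year of the forecast. The Python sketch below assumes a 7-year horizon (2023 to 2030) purely to show how the formula works in reverse. Under that assumption the implied starting market is roughly USD 51 billion, and a different horizon would give a different figure. The function names are illustrative.

```python
def project(start_value, cagr, years):
    """Value after compounding at a constant annual growth rate."""
    return start_value * (1 + cagr) ** years

def implied_start(end_value, cagr, years):
    """Starting value that grows to end_value after `years` at `cagr`."""
    return end_value / (1 + cagr) ** years

END_2030 = 304.09   # USD billion, from the study
CAGR = 0.29         # 29.0% per year, from the study
YEARS = 7           # ASSUMPTION: 2023 to 2030; the excerpt does not give the base year

start = implied_start(END_2030, CAGR, YEARS)
print(f"Implied starting market size: USD {start:.1f} billion")
print(f"Check: USD {project(start, CAGR, YEARS):.2f} billion in 2030")
```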

Introduction

Artificial Intelligence (AI) has emerged as one of the most transformative technologies of the 21st century, revolutionizing industries and reshaping the way we live, work, and interact. At the heart of this technological revolution lie AI chips, specialized microprocessors designed to perform AI-related tasks with remarkable speed and efficiency. These chips, also known as AI accelerators or neural processing units (NPUs), are driving the development of intelligent systems capable of autonomous decision-making, natural language processing, image recognition, and more.

Request a sample here: https://www.nextmsc.com/artificial-intelligence-chip-market/request-sample

The Rise of AI Chips

The development of AI chips can be traced back to the early days of AI research, but it was the advent of deep learning algorithms and neural networks that catalyzed their rapid evolution and adoption. Deep learning, a subset of machine learning loosely inspired by the networks of neurons in the human brain, requires vast amounts of computational power to train and deploy models effectively. Traditional central processing units (CPUs), built for general-purpose and largely sequential workloads, were ill-suited to the highly parallel computations inherent in deep learning. Graphics processing units (GPUs) handled that parallelism far better and became the workhorse of early deep learning, but they were designed for graphics rather than for AI workloads specifically.

Recognizing this limitation, researchers and engineers began exploring specialized hardware architectures optimized for AI workloads. The result was the emergence of AI chips, purpose-built microprocessors designed to accelerate AI tasks such as matrix multiplications, convolutions, and activations. These chips leverage parallel processing, reduced precision arithmetic, and other techniques to deliver orders of magnitude improvements in performance and energy efficiency compared to traditional processors.
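Reduced-precision arithmetic is one of the techniques mentioned above. A common form is int8 quantization: values are scaled into small integers, multiplied and summed in integer arithmetic, and the result is scaled back. The pure-Python sketch below shows symmetric per-tensor int8 quantization for a single dot product, which is the building block of the matrix multiplications the text mentions. It is an illustration under those assumptions rather than how any particular chip works. Real accelerators do this in hardware with wide integer accumulators, and the vectors here are made up.

```python
def quantize(values, scale):
    """Map floats to int8 range: divide by scale, round, clip to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def int8_dot(a, b):
    """Approximate dot product using symmetric per-tensor int8 quantization."""
    # One scale per vector so its largest magnitude maps to 127 (1.0 if all zeros).
    scale_a = max(abs(v) for v in a) / 127 or 1.0
    scale_b = max(abs(v) for v in b) / 127 or 1.0
    qa, qb = quantize(a, scale_a), quantize(b, scale_b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * scale_a * scale_b           # rescale back to a float

a = [0.12, -0.5, 0.33, 0.9]
b = [0.7, 0.25, -0.4, 0.05]
exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(a, b)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The int8 result lands close to the floating-point one while every multiplication uses small integers. That trade of a little accuracy for cheaper arithmetic is why reduced precision improves speed and energy efficiency.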

Key Drivers of Market Growth