#cloud service

Text

My page piece for Love Letters: A Cloud Service 💌 zine!

You can buy it here or in person at Rutgers Comic Con on the 21st of October!

25 notes

Photo

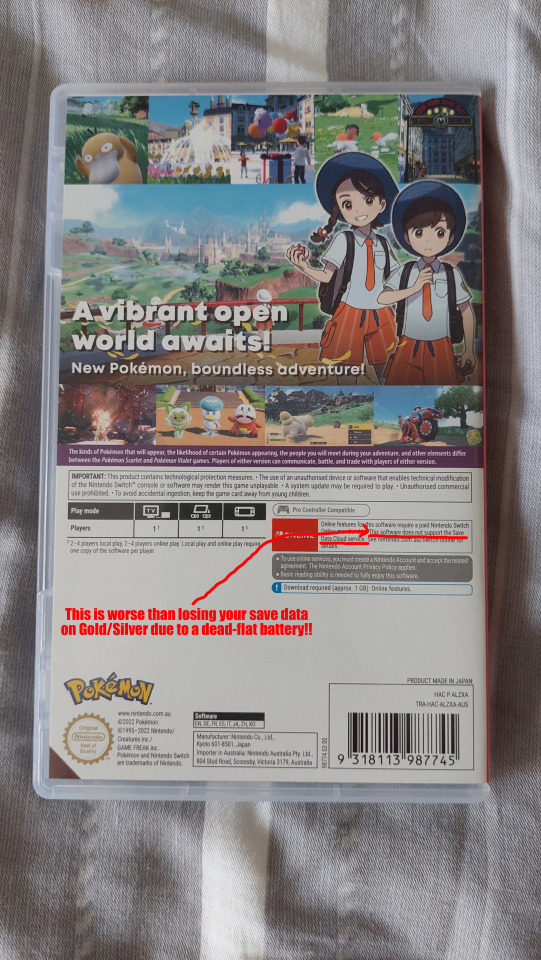

Please take heed that neither Nintendo nor the developers are responsible for any sudden loss of data tied to the system memory (and other factors beyond the consumer’s control) due to fire, flood, theft, storm, Davy Jones’ Locker, etc. Once all your prized Pokemon (especially those transferred from Gen 3!) are gone, they’re GONE FOR GOOD!

So always keep them in Pokemon Home when you don’t need them.

#pokemon#scarlet#violet#gen 9#switch#nintendo#save date#cloud service#lacking#rant#flaw#caution#warning#advice#important

5 notes

Text

Create Service User in AEM As Cloud Service

Learn how to create and use service users (system users) in an AEM as a Cloud Service environment. In this tutorial we will use the repository initialization (repoinit) feature; the best practices described below are valid for AEM 6.5.4+ and AEM as a Cloud Service.

The purpose for writing this tutorial is to show the best practices that we should follow when creating a service user in AEM…

0 notes

Text

Jay Dawani is Co-founder & CEO of Lemurian Labs – Interview Series

New Post has been published on https://thedigitalinsider.com/jay-dawani-is-co-founder-ceo-of-lemurian-labs-interview-series/

Jay Dawani is Co-founder & CEO of Lemurian Labs. Lemurian Labs is on a mission to deliver affordable, accessible, and efficient AI computers, driven by the belief that AI should not be a luxury but a tool accessible to everyone. The founding team at Lemurian Labs combines expertise in AI, compilers, numerical algorithms, and computer architecture, united by a single purpose: to reimagine accelerated computing.

Can you walk us through your background and what got you into AI to begin with?

Absolutely. I’d been programming since I was 12 and building my own games and such, but I actually got into AI when I was 15 because of a friend of my father’s who was into computers. He fed my curiosity and gave me books to read such as Von Neumann’s ‘The Computer and The Brain’, Minsky’s ‘Perceptrons’, and Russell and Norvig’s ‘AI: A Modern Approach’. These books influenced my thinking a lot, and it felt almost obvious then that AI was going to be transformative and I just had to be a part of this field.

When it came time for university I really wanted to study AI but I didn’t find any universities offering that, so I decided to major in applied mathematics instead and a little while after I got to university I heard about AlexNet’s results on ImageNet, which was really exciting. At that time I had this now or never moment happen in my head and went full bore into reading every paper and book I could get my hands on related to neural networks and sought out all the leaders in the field to learn from them, because how often do you get to be there at the birth of a new industry and learn from its pioneers.

Very quickly I realized I don’t enjoy research, but I do enjoy solving problems and building AI enabled products. That led me to working on autonomous cars and robots, AI for material discovery, generative models for multi-physics simulations, AI based simulators for training professional racecar drivers and helping with car setups, space robots, algorithmic trading, and much more.

Now, having done all that, I’m trying to rein in the cost of AI training and deployments because that will be the greatest hurdle we face on our path to enabling a world where every person and company can have access to and benefit from AI in the most economical way possible.

Many companies working in accelerated computing have founders that have built careers in semiconductors and infrastructure. How do you think your past experience in AI and mathematics impacts your ability to understand the market and compete effectively?

I actually think not coming from the industry gives me the benefit of having the outsider advantage. I have found it to be the case quite often that not having knowledge of industry norms or conventional wisdoms gives one the freedom to explore more freely and go deeper than most others would because you’re unencumbered by biases.

I have the freedom to ask ‘dumber’ questions and test assumptions in a way that most others wouldn’t because a lot of things are accepted truths. In the past two years I’ve had several conversations with folks within the industry where they are very dogmatic about something but they can’t tell me the provenance of the idea, which I find very puzzling. I like to understand why certain choices were made, and what assumptions or conditions were there at that time and if they still hold.

Coming from an AI background, I tend to take a software view: I look at where the workloads are today and all the possible ways they may change over time, and I model the entire ML pipeline for training and inference to understand the bottlenecks, which tells me where the opportunities to deliver value are. And because I come from a mathematical background I like to model things to get as close to truth as I can, and have that guide me. For example, we have built models to calculate system performance for total cost of ownership, so that we can measure the benefit we can bring to customers with software and/or hardware and better understand our constraints and the different knobs available to us, along with dozens of other models for various things. We are very data driven, and we use the insights from these models to guide our efforts and tradeoffs.

It seems like progress in AI has primarily come from scaling, which requires exponentially more compute and energy. It seems like we’re in an arms race with every company trying to build the biggest model, and there appears to be no end in sight. Do you think there is a way out of this?

There are always ways. Scaling has proven extremely useful, and I don’t think we’ve seen the end yet. We will very soon see models being trained with a cost of at least a billion dollars. If you want to be a leader in generative AI and create bleeding edge foundation models you’ll need to be spending at least a few billion a year on compute. Now, there are natural limits to scaling, such as being able to construct a large enough dataset for a model of that size, getting access to people with the right know-how, and getting access to enough compute.

Continued scaling of model size is inevitable, but we also can’t turn the entire earth’s surface into a planet-sized supercomputer to train and serve LLMs, for obvious reasons. To get this under control we have several knobs we can play with: better datasets, new model architectures, new training methods, better compilers, algorithmic improvements and exploitations, better computer architectures, and so on. If we do all that, there are roughly three orders of magnitude of improvement to be found. That’s the best way out.

You are a believer in first principles thinking, how does this mold your mindset for how you are running Lemurian Labs?

We definitely employ a lot of first principles thinking at Lemurian. I have always found conventional wisdom misleading because that knowledge was formed at a certain point in time when certain assumptions held, but things always change and you need to retest assumptions often, especially when living in such a fast paced world.

I often find myself asking questions like “this seems like a really good idea, but why might this not work”, or “what needs to be true in order for this to work”, or “what do we know that are absolute truths and what are the assumptions we’re making and why?”, or “why do we believe this particular approach is the best way to solve this problem”. The goal is to invalidate and kill off ideas as quickly and cheaply as possible. We want to try and maximize the number of things we’re trying out at any given point in time. It’s about being obsessed with the problem that needs to be solved, and not being overly opinionated about what technology is best. Too many folks tend to overly focus on the technology and they end up misunderstanding customers’ problems and miss the transitions happening in the industry which could invalidate their approach resulting in their inability to adapt to the new state of the world.

But first principles thinking isn’t all that useful by itself. We tend to pair it with backcasting, which basically means imagining an ideal or desired future outcome and working backwards to identify the different steps or actions needed to realize it. This ensures we converge on a meaningful solution that is not only innovative but also grounded in reality. It doesn’t make sense to spend time coming up with the perfect solution only to realize it’s not feasible to build because of a variety of real world constraints such as resources, time, regulation, or building a seemingly perfect solution but later on finding out you’ve made it too hard for customers to adopt.

Every now and then we find ourselves in a situation where we need to make a decision but have no data, and in this scenario we employ minimum testable hypotheses which give us a signal as to whether or not something makes sense to pursue with the least amount of energy expenditure.

All this combined is to give us agility, rapid iteration cycles to de-risk items quickly, and has helped us adjust strategies with high confidence, and make a lot of progress on very hard problems in a very short amount of time.

Initially, you were focused on edge AI, what caused you to refocus and pivot to cloud computing?

We started with edge AI because at that time I was very focused on trying to solve a very particular problem that I had faced in trying to usher in a world of general purpose autonomous robotics. Autonomous robotics holds the promise of being the biggest platform shift in our collective history, and it seemed like we had everything needed to build a foundation model for robotics but we were missing the ideal inference chip with the right balance of throughput, latency, energy efficiency, and programmability to run said foundation model on.

I wasn’t thinking about the datacenter at this time because there were more than enough companies focusing there and I expected they would figure it out. We designed a really powerful architecture for this application space and were getting ready to tape it out, and then it became abundantly clear that the world had changed and the problem truly was in the datacenter. The rate at which LLMs were scaling and consuming compute far outstrips the pace of progress in computing, and when you factor in adoption it starts to paint a worrying picture.

It felt like this is where we should be focusing our efforts, to bring down the energy cost of AI in datacenters as much as possible without imposing restrictions on where and how AI should evolve. And so, we got to work on solving this problem.

Can you share the genesis story of Co-Founding Lemurian Labs?

The story starts in early 2018. I was working on training a foundation model for general purpose autonomy along with a model for generative multiphysics simulation to train the agent in and fine-tune it for different applications, and some other things to help scale into multi-agent environments. But very quickly I exhausted the amount of compute I had, and I estimated needing more than 20,000 V100 GPUs. I tried to raise enough to get access to the compute but the market wasn’t ready for that kind of scale just yet. It did however get me thinking about the deployment side of things and I sat down to calculate how much performance I would need for serving this model in the target environments and I realized there was no chip in existence that could get me there.

A couple of years later, in 2020, I met up with Vassil – my eventual cofounder – to catch up, and I shared the challenges I went through in building a foundation model for autonomy. He suggested building an inference chip that could run the foundation model, and he shared that he had been thinking a lot about number formats and how better representations would help not only in making neural networks retain accuracy at lower bit-widths but also in creating more powerful architectures.

It was an intriguing idea but was way out of my wheelhouse. But it wouldn’t leave me, which drove me to spending months and months learning the intricacies of computer architecture, instruction sets, runtimes, compilers, and programming models. Eventually, building a semiconductor company started to make sense and I had formed a thesis around what the problem was and how to go about it. And then, towards the end of the year, we started Lemurian.

You’ve spoken previously about the need to tackle software first when building hardware, could you elaborate on your views of why the hardware problem is first and foremost a software problem?

What a lot of people don’t realize is that the software side of semiconductors is much harder than the hardware itself. Building a useful computer architecture for customers to use and get benefit from is a full stack problem, and if you don’t have that understanding and preparedness going in, you’ll end up with a beautiful looking architecture that is very performant and efficient, but totally unusable by developers, which is what is actually important.

There are other benefits to taking a software first approach as well, of course, such as faster time to market. This is crucial in today’s fast moving world where being too bullish on an architecture or feature could mean you miss the market entirely.

Not taking a software first view generally results in not having derisked the important things required for product adoption in the market, not being able to respond to changes in the market for example when workloads evolve in an unexpected way, and having underutilized hardware. All not great things. That’s a big reason why we care a lot about being software centric and why our view is that you can’t be a semiconductor company without really being a software company.

Can you discuss your immediate software stack goals?

When we were designing our architecture and thinking about the forward looking roadmap and where the opportunities were to bring more performance and energy efficiency, it started becoming very clear that we were going to see a lot more heterogeneity which was going to create a lot of issues on software. And we don’t just need to be able to productively program heterogeneous architectures, we have to deal with them at datacenter scale, which is a challenge the likes of which we haven’t encountered before.

This got us concerned because the last time we had to go through a major transition was when the industry moved from single-core to multi-core architectures, and at that time it took 10 years to get software working and people using it. We can’t afford to wait 10 years to figure out software for heterogeneity at scale, it has to be sorted out now. And so, we got to work on understanding the problem and what needs to exist in order for this software stack to exist.

We are currently engaging with a lot of the leading semiconductor companies and hyperscalers/cloud service providers and will be releasing our software stack in the next 12 months. It is a unified programming model with a compiler and runtime capable of targeting any kind of architecture, and orchestrating work across clusters composed of different kinds of hardware, and is capable of scaling from a single node to a thousand node cluster for the highest possible performance.

Thank you for the great interview, readers who wish to learn more should visit Lemurian Labs.

#000#accelerated computing#ai#ai training#Algorithms#amp#applications#approach#architecture#autonomous cars#background#billion#bleeding#book#Books#Brain#Building#Careers#Cars#CEO#challenge#change#Cloud#cloud computing#cloud service#cluster#clusters#Collective#Companies#computer

0 notes

Text

In this article, we will take a look at how to deploy an Angular application to an Amazon S3 bucket and access it via Amazon CloudFront.

#angular web#deploy angular app#Angular Web Deployment#angular version#Aws Cloud#Cloud Service#angular tutorial#angular 17#habilelabs#ethicsfirst

0 notes

Text

Slow internet? Then visit us: candid8 provides high-speed internet service in Texas.

0 notes

Text

Unveiling the Top Cloud Service Providers in Mumbai: Harnessing the Power of the Cloud

Introduction:

In recent years, Mumbai has emerged as a hub for technological advancements and innovation, with businesses of all sizes recognizing the importance of migrating to the cloud. Cloud computing offers scalability, flexibility, and cost-effectiveness, making it a game-changer for organizations seeking to enhance their digital infrastructure. In this blog, we'll explore the top cloud service providers in Mumbai, paving the way for businesses to make informed decisions and leverage the full potential of the cloud.

Amazon Web Services (AWS): Mumbai's Cloud Pioneer

Amazon Web Services (AWS), a subsidiary of Amazon.com, stands tall as a pioneer in the cloud computing landscape. With its Mumbai data center, AWS provides a wide array of cloud services, including computing power, storage, and databases. Mumbai-based businesses can benefit from AWS's robust infrastructure, ensuring high availability and low-latency access to cloud resources.

Microsoft Azure: Empowering Mumbai's Digital Transformation

Microsoft Azure has established itself as a leading cloud service provider in Mumbai, offering a comprehensive suite of services, from virtual computing to analytics and artificial intelligence. Azure's Mumbai data centers enable businesses to build, deploy, and manage applications seamlessly, facilitating their digital transformation journey.

Google Cloud Platform (GCP): Mumbai's Gateway to Innovation

Google Cloud Platform (GCP) has gained traction among Mumbai-based enterprises, providing a secure and scalable cloud environment. With a focus on data analytics, machine learning, and container orchestration, GCP empowers businesses to innovate and stay ahead in the dynamic digital landscape.

IBM Cloud: Bridging Mumbai's Enterprise Solutions

IBM Cloud caters to the diverse needs of Mumbai's enterprises with a broad range of cloud services, including infrastructure as a service (IaaS) and platform as a service (PaaS). With a commitment to security and compliance, IBM Cloud serves as a strategic partner for businesses looking to enhance their IT capabilities.

Oracle Cloud: Elevating Mumbai's Database Management

Oracle Cloud is renowned for its database management solutions, offering a robust platform for Mumbai-based businesses to store, process, and analyze data efficiently. With a focus on high-performance computing, Oracle Cloud is a go-to choice for enterprises seeking advanced database services.

Alibaba Cloud: Mumbai's Gateway to Global Reach

Alibaba Cloud, the cloud computing arm of Alibaba Group, extends its global presence to Mumbai, providing businesses with a reliable and scalable cloud infrastructure. As an international player, Alibaba Cloud enables Mumbai-based enterprises to expand their reach and tap into global markets seamlessly.

Conclusion:

In conclusion, the burgeoning tech landscape in Mumbai demands a robust and flexible IT infrastructure, and cloud computing has become the cornerstone of digital transformation. The top cloud service providers in Mumbai, including AWS, Microsoft Azure, Google Cloud Platform, IBM Cloud, Oracle Cloud, and Alibaba Cloud, offer a diverse range of services to cater to the unique needs of businesses in the region.

As Mumbai continues to evolve as a technological hub, organizations must carefully evaluate their requirements and choose a cloud service provider that aligns with their goals and objectives. Whether it's scalability, security, or innovation, the cloud service providers mentioned above are well-equipped to propel Mumbai-based businesses into a future where the possibilities are limitless. Embracing the cloud is not just a technological upgrade; it's a strategic move that positions businesses at the forefront of the digital revolution.

0 notes

Text

Business News/Microsoft—31 Jan. 2024

The latest earnings report from Microsoft has exceeded expectations, with positive results across the board. In particular, the cloud service Azure, which features ChatGPT, has performed well for two consecutive quarters. Bloomberg analyst Anurag Rana predicts that Microsoft’s future prospects are bright due to the continued growth of cloud consumption and demand. Rana also notes that the…

1 note

Text

Discover the ideal ERP solution tailored for your business needs, streamlining operations and enhancing efficiency for sustained growth and success.

#erp solution#business growth#cloud service#it infrastructure management#business technology#tech news

0 notes

Text

The Future of IT: Exploring Cloud Services

Cloud computing revolutionizes data storage and processing, offering scalable solutions for businesses. Cloud services provide flexibility, security, and accessibility, streamlining operations and driving innovation in the digital age.

0 notes

Text

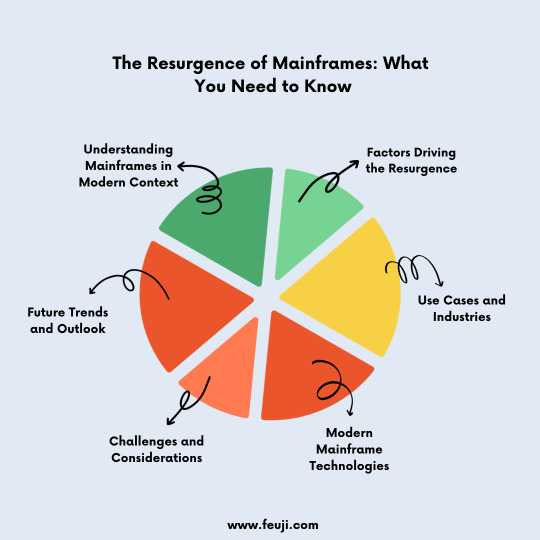

Mainframes are making a comeback, powering modern enterprises with unmatched reliability and performance.

0 notes

Text

7R Strategies For Seamless Cloud Application Migration To AWS

By employing the 7R strategies—Readiness Assessment, Rehosting, Replatforming, Repurchasing, Refactoring, Retiring, and Retaining—we optimize your AWS cloud migration process, enabling you to unlock the full potential of cloud technology.

0 notes

Text

How SASE Plays an Important Role in Addressing New Cyber Threats - Technology Org

New Post has been published on https://thedigitalinsider.com/how-sase-plays-an-important-role-in-addressing-new-cyber-threats-technology-org/

Cyber threats continue to evolve and become more difficult to address. The advent of more advanced artificial intelligence is making attacks worse in quantity and sophistication. Organizations have to contend with the reality of incessant attacks that only get worse over time, especially as they embrace more complex IT infrastructure that involves multiple cloud assets and SaaS solutions.

Cyber threats, cybersecurity – illustrative artistic photo. Image credit: Tima Miroshnichenko via Pexels, free license

With IT resources now located on the cloud and away from the protection of on-premises security solutions, it is important to adopt security tools that extend into resources wherever they may be. This is where the Secure Access Service Edge (SASE) solution comes into play. This security concept was introduced in 2019 by consulting firm Gartner, and has since become one of the most dependable security solutions for modern enterprises. It brings network security together with wide-area networking (WAN) functions to provide protection against a wide range of new threats. It offers a unified way to address various cyber attacks without compromising connectivity.

SASE’s approach in cyber defense

SASE is designed to address the weaknesses in the traditional hub-and-spoke network architecture, wherein traffic is usually routed through centralized data centers and security appliances. This arrangement is associated with a number of challenges, particularly the emergence of latency problems, reduced security efficacy, and increased infrastructure complexity.

With SASE, various network security functions are integrated with WAN capabilities to ensure secure access to apps and resources irrespective of their location. Secure Access Service Edge provides a comprehensive and adaptive cybersecurity approach that effectively addresses possible cyber attacks.

SASE’s defense model centers on the following key goals: the reduction of the complexity of using multiple network security tools, flexibility and scalability in protecting systems, and zero trust. These goals help attain key advantages that define SASE’s role in addressing new cyber threats.

Simplifying the complexity

One of the biggest challenges in the way organizations address security threats is the use of multiple cybersecurity products. They can result in cybersecurity tool bloat, which makes security operations inefficient. It is difficult to manage several tools and keep up with all the security incident alerts and related notifications. They pose a complexity problem that can be addressed with the help of SASE.

SASE provides a unified interface for the management of multiple security tools. It makes it easy to manage Firewall-as-a-Service, secure web gateways, data loss prevention systems, cloud access security brokers, and various other tools. Additionally, it enables centralized security policy orchestration and enforcement wherein administrators define granular access controls and security policies based on various factors for consistent enforcement across the entire network architecture.

It also leverages threat intelligence feeds from various sources, consolidating all relevant threat information to optimize their impact on threat detection. SASE also conducts advanced analytics in real-time to extract insights from threat intelligence beyond the threat identities. Through machine learning and behavioral analysis technologies, it is possible to anticipate threats or detect obscure vulnerabilities that would otherwise be overlooked given the multitude of security solutions used in an organization at the same time.

It is also worth noting that SASE emphasizes identity-driven security. It focuses on the identity of users and devices instead of prioritizing traditional network perimeters to determine the areas to be protected. It maximizes the use of identity and access management (IAM) tools to enforce granular controls and detect threats more accurately with the help of contextual information. This identity-focused defense does not only improve threat prevention; it also simplifies the complexities that come with using multiple threat detection solutions.

Ensuring scalability and flexibility

Another major challenge for cybersecurity at present is the need to adjust to the changing demands for cyber protection. Cybersecurity has to be dynamic and scalable. It must be capable of agilely keeping up with the changes in an organization’s IT infrastructure and expanding potential attack surfaces. It also needs to be flexible to address security across different platforms.

SASE provides the scalability and flexibility modern organizations need to ensure adequate cyber defense with its cloud-native protection. It integrates various security functions as a cloud-native service that can be used to secure systems in different locations. This cloud service can provide security across a variety of platforms, making it unnecessary for organizations to install security solutions locally and undertake maintenance routines per device.

Additionally, SASE delivers edge-centric security. This means that it brings security enforcement in close proximity to the users and devices, near the edge of the network. Instead of routing all traffic through centralized data centers, security controls are implemented at the edge. This results in significant improvements in latency and performance, ensuring faster responses to threats or attacks. This also implies the possibility of continuous threat monitoring and response. SASE platforms can include advanced capabilities to detect and remediate threats by continuously tracking network traffic and user behavior.

Moreover, SASE helps achieve optimized WAN connectivity while securing connections. It integrates software-defined wide area networking (SD-WAN) to dynamically choose the most efficient network paths based on the network conditions and what the apps require, ensuring the quality of connections between users and apps. This ensures consistent access to resources even in remote branch locations, which is important as the IT infrastructure of organizations keeps broadening with the adoption of new technologies and expansion of operations.
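To make the path-selection idea concrete, here is a minimal Python sketch of how an SD-WAN controller might pick a link per application. It is an illustration only, not how any particular SASE product works: the names `PathMetrics`, `AppRequirement`, and `choose_path`, the link names, and all metric values are assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class PathMetrics:
    """Hypothetical live measurements for one WAN link."""
    name: str
    latency_ms: float
    loss_pct: float
    available_mbps: float


@dataclass(frozen=True)
class AppRequirement:
    """Hypothetical per-application limits."""
    max_latency_ms: float
    max_loss_pct: float
    min_mbps: float


def choose_path(paths: Sequence[PathMetrics], req: AppRequirement) -> Optional[PathMetrics]:
    """Return the lowest-latency path that satisfies the app's limits, or None."""
    eligible = [
        p for p in paths
        if p.latency_ms <= req.max_latency_ms
        and p.loss_pct <= req.max_loss_pct
        and p.available_mbps >= req.min_mbps
    ]
    if not eligible:
        return None
    # Prefer low latency; break ties on packet loss.
    return min(eligible, key=lambda p: (p.latency_ms, p.loss_pct))


# Illustrative (made-up) link measurements and application profiles.
paths = [
    PathMetrics("mpls", latency_ms=35.0, loss_pct=0.1, available_mbps=50.0),
    PathMetrics("broadband", latency_ms=20.0, loss_pct=1.5, available_mbps=200.0),
    PathMetrics("lte", latency_ms=60.0, loss_pct=0.5, available_mbps=30.0),
]
voice = AppRequirement(max_latency_ms=50.0, max_loss_pct=0.5, min_mbps=1.0)
backup = AppRequirement(max_latency_ms=200.0, max_loss_pct=2.0, min_mbps=100.0)
```

With these sample numbers, a loss-sensitive voice call and a bandwidth-hungry backup job end up on different links, which is the behavior the paragraph above describes. A real controller would re-evaluate continuously as measurements change.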

Implementing zero-trust security

SASE is also associated with zero-trust security, a crucial cybersecurity principle designed to combat the evolving sophistication of modern cyber threats. Security posture management systems that include SASE implement security scanning mechanisms that take away any presumption of regularity. No access or resource request is considered safe regardless of who makes the request. Everything is examined for possible anomalies or indications of malicious action.

Also, the zero-trust principle comes with the enforcement of the principle of least privilege, wherein the access privileges granted are always kept at a minimum. Users are only given the exact level of access or privileges they need to complete specific tasks to make sure that privileges are not abused or exploited especially in insider attacks.
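The two ideas in this section, no presumed trust and least privilege, can be sketched as a default-deny access check. This is a simplified illustration in Python: the `AccessRequest` type, the `GRANTS` table, and the user and resource names are all hypothetical, and a real system would evaluate far richer context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request context evaluated on every access attempt."""
    user: str
    device_trusted: bool
    resource: str
    action: str


# Hypothetical grants: each user holds only the (resource, action) pairs
# needed for their tasks. Anything not listed is denied.
GRANTS = {
    "alice": {("payroll-db", "read")},
    "bob": {("wiki", "read"), ("wiki", "write")},
}


def is_allowed(req: AccessRequest, grants=GRANTS) -> bool:
    """Default-deny check: every request is verified, whoever makes it."""
    if not req.device_trusted:
        # An unverified device is refused even for a user with valid grants.
        return False
    # Unknown users have an empty grant set, so they are denied too.
    return (req.resource, req.action) in grants.get(req.user, set())
```

Note that there is no "trusted network" shortcut anywhere in the check: a known user on an unverified device is still refused, and a user with read access gets nothing beyond it.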

Security without performance compromises

Secure Access Service Edge provides protection that competently addresses existing and emerging threats. Its cloud-native nature makes it suitable for the IT infrastructure of most modern organizations. The integration of multiple security tools under a unified interface simplifies the management of multiple security solutions while maximizing their impact on an organization’s overall security posture. Also, its edge-centric security approach makes it highly scalable and flexible, and the implementation of zero-trust security keeps SASE in tune with current cybersecurity best practices. Importantly, SASE delivers all of these without having a noticeable impact on network performance.

#access management#alerts#Analysis#Analytics#approach#apps#architecture#arrangement#artificial#Artificial Intelligence#assets#Behavior#behavioral analysis#challenge#Cloud#cloud service#Cloud-Native#complexity#comprehensive#connectivity#consulting#continuous#cyber#cyber attacks#Cyber Threats#cybersecurity#data#Data Centers#data loss#defense

0 notes

Text

What Is Cloud Cost Optimization? 12 Best Practices To Cut Your Cloud Bill

Cloud Cost Optimization - Cloud optimization reduces spend by rightsizing to eliminate capacity waste and by purchasing instances at a discount.
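As a rough illustration of rightsizing, the Python sketch below estimates how many vCPUs an instance needs to keep peak CPU under a target utilization, and what that might save. The `Instance` type, the 70% target, the 730 hours per month, and the assumption that price scales linearly with vCPU count are all simplifications chosen for this example, not any provider's actual pricing.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """Hypothetical instance record built from monitoring data."""
    name: str
    vcpus: int
    hourly_cost: float
    peak_cpu_pct: float


def rightsize(inst: Instance, target_peak_pct: float = 70.0, hours_per_month: int = 730):
    """Return (recommended_vcpus, estimated_monthly_savings).

    Assumes cost scales linearly with vCPU count, which is a simplification.
    """
    # vCPUs needed so the observed peak load sits at or below the target.
    needed = math.ceil(inst.vcpus * inst.peak_cpu_pct / target_peak_pct)
    # Never recommend growing the instance here, and never go below 1 vCPU.
    recommended = min(inst.vcpus, max(1, needed))
    savings = inst.hourly_cost * (1 - recommended / inst.vcpus) * hours_per_month
    return recommended, savings


# Made-up examples: one oversized instance and one that is already well sized.
oversized = Instance("batch-worker", vcpus=8, hourly_cost=0.40, peak_cpu_pct=20.0)
well_sized = Instance("api-server", vcpus=4, hourly_cost=0.20, peak_cpu_pct=65.0)
```

Calling `rightsize(oversized)` suggests a smaller size with a positive saving, while `rightsize(well_sized)` leaves the instance unchanged. In practice memory, network, and available instance families also constrain the choice.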

Choosing the perfect cloud service provider can help in optimizing cloud costs.

Get in touch with the cloud experts now and reap the advantages of cloud cost optimizations! https://haripatel.me/cloud-cost-optimization/

0 notes

Text

What are the benefits of using a cloud service provider for businesses?

STL, or Sterlite Technologies Limited, is a global technology company that provides end-to-end data network solutions. Their products and services are used by telecom operators, cloud companies, and data center owners to build and operate high-performance networks.

1 note
